1. Configuration

1.1 core-site.xml configuration

Location: $HADOOP_HOME/etc/hadoop/core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>

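PS: If you get a message saying you lack write permission, run chmod -R 775 /usr/local/hadoop to add read/write permissions (adjust the path to wherever Hadoop is installed).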

1.1.1 Configuration parameters

For details, see "core-site.xml parameter reference".
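To script this step instead of editing the file by hand, the site file can be generated from name=value pairs. This is a minimal sketch, assuming bash, that the whole target file may be overwritten, and that values contain no XML special characters; `write_site_xml` is a hypothetical helper, not part of Hadoop.

```shell
#!/usr/bin/env bash
# write_site_xml FILE NAME=VALUE...
# Hypothetical helper (not part of Hadoop): overwrites FILE with a
# <configuration> element containing one <property> per NAME=VALUE pair.
# Assumption: values contain no &, < or >, because nothing is escaped.
write_site_xml() {
  local file=$1 pair
  shift
  {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    for pair in "$@"; do
      # Split on the first '=' only, so a value may itself contain '='.
      printf '    <property>\n        <name>%s</name>\n        <value>%s</value>\n    </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    echo '</configuration>'
  } > "$file"
}

# Usage, matching the setting above:
# write_site_xml "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS=hdfs://localhost:9000
```

Because the file is rewritten wholesale, any properties already in it must be passed again as extra pairs.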

1.2 hdfs-site.xml configuration

Location: $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>

1.2.1 Configuration parameters

For details, see "hdfs-site.xml parameter reference".

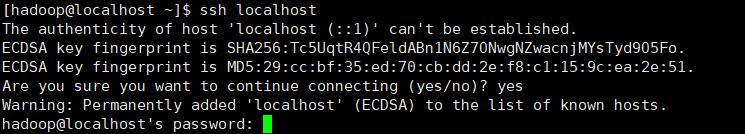
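After editing, it helps to read a value back. The authoritative check is `bin/hdfs getconf -confKey dfs.replication`, which reports the value Hadoop actually resolves. For a quick look at the file alone, here is a small sketch, assuming the simple one-tag-per-line layout used in this post; `get_conf_value` is a hypothetical helper and not a general XML parser.

```shell
#!/usr/bin/env bash
# get_conf_value FILE NAME
# Hypothetical helper: prints the <value> of property NAME from a *-site.xml.
# Assumption: <name> and <value> sit on their own lines (or share one line);
# comments, CDATA and other XML features are not handled.
get_conf_value() {
  awk -v want="$2" '
    # Remember whether the most recent <name> is the wanted key.
    /<name>/ {
      n = $0
      sub(/.*<name>[ \t]*/, "", n)
      sub(/[ \t]*<\/name>.*/, "", n)
      hit = (n == want)
    }
    # Print the first <value> that follows the matching <name>, then stop.
    hit && /<value>/ {
      v = $0
      sub(/.*<value>[ \t]*/, "", v)
      sub(/[ \t]*<\/value>.*/, "", v)
      print v
      exit
    }
  ' "$1"
}

# Usage:
# get_conf_value "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.replication
```

A key that is absent from the file produces no output, even though Hadoop would still fall back to its built-in default.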

1.3 Set up passwordless SSH

First try ssh localhost. If it asks for a password, generate an SSH key pair as follows:

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ chmod 0600 ~/.ssh/authorized_keys

2. Run

2.1 Format the file system

$ bin/hdfs namenode -format

Output:

[hadoop@localhost hadoop]$ bin/hdfs namenode -format

18/05/27 17:43:30 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.9.1

STARTUP_MSG: classpath =

......

18/05/27 17:43:33 INFO common.Storage: Storage directory /tmp/hadoop-hadoop/dfs/name has been successfully formatted.

18/05/27 17:43:33 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

18/05/27 17:43:33 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 323 bytes saved in 0 seconds .

18/05/27 17:43:33 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/05/27 17:43:33 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

************************************************************/
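The log shows that the NameNode metadata was written under /tmp/hadoop-hadoop/dfs/name, i.e. below /tmp. Since the OS may clean /tmp, it is worth checking whether the storage directory is still formatted before starting HDFS, and not re-formatting one that is. A sketch, assuming a formatted directory contains a current/VERSION file; `is_formatted` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# is_formatted DIR
# Hypothetical helper: succeeds if DIR looks like a formatted NameNode
# storage directory. Assumption: formatting leaves current/VERSION behind.
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Usage (path taken from the format log above):
# if is_formatted /tmp/hadoop-hadoop/dfs/name; then
#   echo "already formatted; formatting again would discard the HDFS metadata"
# else
#   bin/hdfs namenode -format
# fi
```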

2.2 Start the NameNode and DataNode daemons

$ sbin/start-dfs.sh

The output shows that JAVA_HOME could not be found:

[hadoop@localhost hadoop]$ sbin/start-dfs.sh

Starting namenodes on [localhost]

localhost: Error: JAVA_HOME is not set and could not be found.

localhost: Error: JAVA_HOME is not set and could not be found.

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:KkgCrCih0ZSBx/61V6D30J7m6wAl6HNuD3K0Q/gQobw.

ECDSA key fingerprint is MD5:8c:07:a9:6d:85:66:e4:ca:c8:89:d5:3e:ae:b7:d5:70.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

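0.0.0.0: Error: JAVA_HOME is not set and could not be found.

To fix this, set the Java environment variable in hadoop-env.sh. Because start-dfs.sh launches the daemons over SSH, a JAVA_HOME exported only in your interactive shell is typically not visible to them. Edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh: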

export JAVA_HOME=/usr/local/apps/java

Then run sbin/start-dfs.sh again:

[hadoop@localhost hadoop]$ sbin/start-dfs.sh

Starting namenodes on [localhost]

localhost: starting namenode, logging to /usr/local/apps/hadoop/logs/hadoop-hadoop-namenode-localhost.localdomain.out

localhost: starting datanode, logging to /usr/local/apps/hadoop/logs/hadoop-hadoop-datanode-localhost.localdomain.out

Starting secondary namenodes [0.0.0.0]

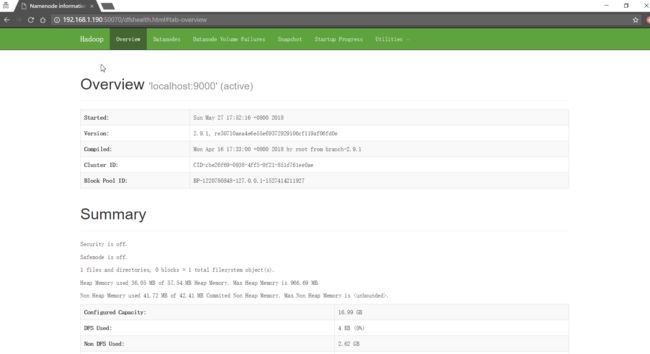

0.0.0.0: starting secondarynamenode, logging to /usr/local/apps/hadoop/logs/hadoop-hadoop-secondarynamenode-localhost.localdomain.out

Then visit http://host:50070, the NameNode web UI (50070 is its default port in Hadoop 2.x).
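Besides the web page, the JDK's `jps` tool lists running Java processes by main class name, which gives a quick shell-side check that the three HDFS daemons from the start-dfs.sh output (NameNode, DataNode, SecondaryNameNode) are up. A sketch, assuming `jps` is on the PATH; `check_daemons` is a hypothetical helper that reads jps-style lines (pid, then class name) from stdin.

```shell
#!/usr/bin/env bash
# check_daemons NAME...
# Hypothetical helper: reads `jps` output on stdin and reports each expected
# daemon as running or missing; returns non-zero if any is missing.
check_daemons() {
  local out name rc=0
  out=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>"; compare the whole second field so that
    # NameNode does not accidentally match SecondaryNameNode.
    if printf '%s\n' "$out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "running: $name"
    else
      echo "missing: $name"
      rc=1
    fi
  done
  return "$rc"
}

# Usage after sbin/start-dfs.sh:
# jps | check_daemons NameNode DataNode SecondaryNameNode
```

If a daemon is reported missing, its log under $HADOOP_HOME/logs (the paths printed by start-dfs.sh) is the place to look.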

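Since I am running CentOS 7 in a virtual machine, where the firewall is enabled by default, the firewall has to be turned off before the page can be reached: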

// Stop it now (temporary)

# systemctl stop firewalld

// Prevent it from starting at boot

# systemctl disable firewalld

With the firewall turned off, visiting the page gives:

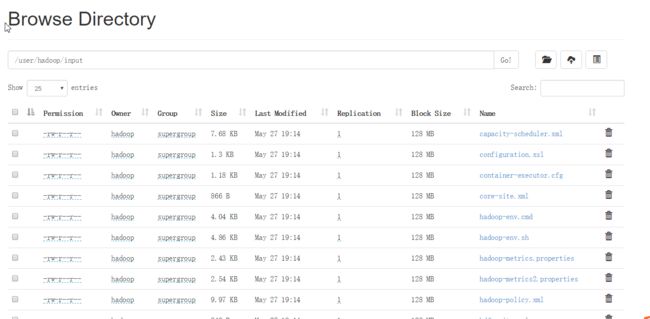
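Turning firewalld off entirely is the quickest route on a throwaway VM. A narrower alternative is to open only the ports this post uses (9000 from fs.defaultFS, 50070 for the NameNode UI, 8088 for the YARN UI) with firewall-cmd. A sketch, assuming it runs as root with firewalld active and the default zone in use; `open_ports` and the `RUN` dry-run variable are hypothetical.

```shell
#!/usr/bin/env bash
# open_ports PORT...
# Hypothetical helper: permanently opens each TCP port in firewalld's default
# zone, then reloads so the permanent rules take effect immediately.
# Set RUN=echo to print the commands instead of executing them (dry run).
open_ports() {
  local port
  for port in "$@"; do
    # ${RUN:-} is left unquoted on purpose: when RUN is empty it vanishes.
    ${RUN:-} firewall-cmd --permanent --add-port="${port}/tcp" || return 1
  done
  ${RUN:-} firewall-cmd --reload
}

# Usage (as root):
# open_ports 9000 50070 8088
```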

2.3 Test run

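# Create the HDFS home directory (relative paths such as input and output below resolve to /user/<username>, here /user/hadoop)

$ bin/hdfs dfs -mkdir /user

$ bin/hdfs dfs -mkdir /user/hadoop

# Copy files

$ bin/hdfs dfs -put etc/hadoop input

# Run the example

$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.1.jar grep input output 'dfs[a-z.]+'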
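The job refuses to start when its output directory already exists in HDFS, so running the example a second time fails until output is removed. A re-runnable sketch, assuming it is run from $HADOOP_HOME like the commands above; `rerun_grep_example` and the `HDFS`/`HADOOP` override variables are hypothetical.

```shell
#!/usr/bin/env bash
# rerun_grep_example
# Hypothetical wrapper: removes a leftover HDFS "output" directory, then runs
# the grep example again. HDFS and HADOOP may be overridden (e.g. in tests).
rerun_grep_example() {
  local hdfs=${HDFS:-bin/hdfs} hadoop=${HADOOP:-bin/hadoop}
  local jar=share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.1.jar
  # "dfs -test -d" exits 0 when the path exists and is a directory.
  if "$hdfs" dfs -test -d output; then
    "$hdfs" dfs -rm -r output || return 1
  fi
  "$hadoop" jar "$jar" grep input output 'dfs[a-z.]+'
}
```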

# View the example's output

$ bin/hdfs dfs -cat output/*

Alternatively, copy the results to the local file system and view them there:

# Copy the output directory from HDFS to a local output directory

$ bin/hdfs dfs -get output output

$ cat output/*

3. Stop

# Run the stop script to stop the NameNode and DataNode daemons

$ sbin/stop-dfs.sh

4. YARN on a single node

4.1 Configuration

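- Configuration file: $HADOOP_HOME/etc/hadoop/mapred-site.xml

- Configuration parameters:

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

- Configuration file: $HADOOP_HOME/etc/hadoop/yarn-site.xml

- Configuration parameters:

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>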

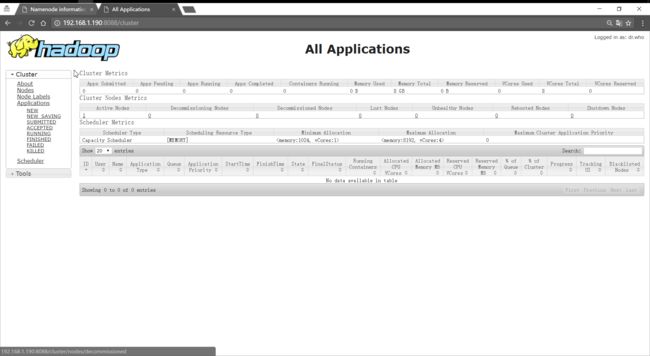
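In Hadoop 2.x releases, etc/hadoop usually ships only mapred-site.xml.template, so mapred-site.xml may have to be created from it before the mapreduce.framework.name property can be added. A sketch, assuming that layout; `ensure_from_template` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# ensure_from_template FILE
# Hypothetical helper: if FILE is missing but FILE.template exists, copy the
# template into place. An existing FILE is never overwritten.
ensure_from_template() {
  local file=$1
  if [ -e "$file" ]; then
    return 0
  fi
  if [ -f "$file.template" ]; then
    cp "$file.template" "$file"
  else
    echo "neither $file nor $file.template exists" >&2
    return 1
  fi
}

# Usage:
# ensure_from_template "$HADOOP_HOME/etc/hadoop/mapred-site.xml"
```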

4.2 Start and stop

- Start

$HADOOP_HOME/sbin/start-yarn.sh

- Stop

$HADOOP_HOME/sbin/stop-yarn.sh

- Visit http://host:8088, the ResourceManager web UI
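The daemons need a few seconds after start-yarn.sh returns before they accept connections, so opening the UI immediately can fail. A sketch that polls a TCP port until it accepts a connection, assuming bash (it relies on bash's /dev/tcp redirection); `wait_for_port` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT TIMEOUT_SECONDS
# Hypothetical helper: tries a TCP connection to HOST:PORT once per second;
# returns 0 on the first success, 1 after TIMEOUT_SECONDS failed attempts.
# Note: a single attempt has no connect timeout of its own, so a port whose
# packets are silently dropped can make one attempt block for a while.
wait_for_port() {
  local host=$1 port=$2 timeout=$3 i
  for ((i = 0; i < timeout; i++)); do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts the connection;
    # the subshell closes it again when it exits.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage after start-yarn.sh (8088 is the YARN ResourceManager's default web UI port):
# wait_for_port localhost 8088 30 && echo "ResourceManager UI is up"
```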