Human + Machine

Contents

1. Intro
2. AI: An Overview
3. Human + Machine Diagram
4. MELDS Framework
5. Responsible AI
   - Trust, transparency & responsibility
   - Fair & ethical design standards
   - Regulation & GDPR compliance
   - Human checking, testing & auditing
6. Fusion Skills
7. New Roles Created
8. Conclusion
9. Further Reading

1. Intro

Human + Machine is written by Paul Daugherty (Accenture Chief Technology Officer) and James Wilson (Accenture Managing Director of IT and Business Research).

Rejecting the common binary narrative of human vs machine “fighting for the other’s jobs”, Daugherty and Wilson argue for a future symbiosis between human and machine, in what they call “the third wave of business transformation”. Wave 1 involved standardised processes (think Henry Ford). Wave 2 saw automation (IT from the 1970s to the 1990s). Wave 3 will involve “adaptive processes… driven by real-time data rather than by a prior sequence of steps”.

This “third wave” will allow for “machines … doing what they do best: performing repetitive tasks, analysing huge data sets, and handling routine cases. And humans … doing what they do best: resolving ambiguous information, exercising judgment in difficult cases, and dealing with dissatisfied customers.” The authors go on to discuss the “missing middle” (hybrid human and machine activities), where “humans complement machines” and “AI gives humans superpowers”. They also make a business case for AI: “AI isn’t your typical capital investment; its value actually increases over time and it, in turn, improves the value of people as well.” Perhaps unexpectedly, they argue that “the problem right now isn’t so much that robots are replacing jobs; it’s that workers aren’t prepared with the right skills necessary for jobs that are evolving fast due to new technologies, such as AI.” The real challenge ahead is therefore the constant re-skilling and learning of the workforce.

2. AI: An Overview

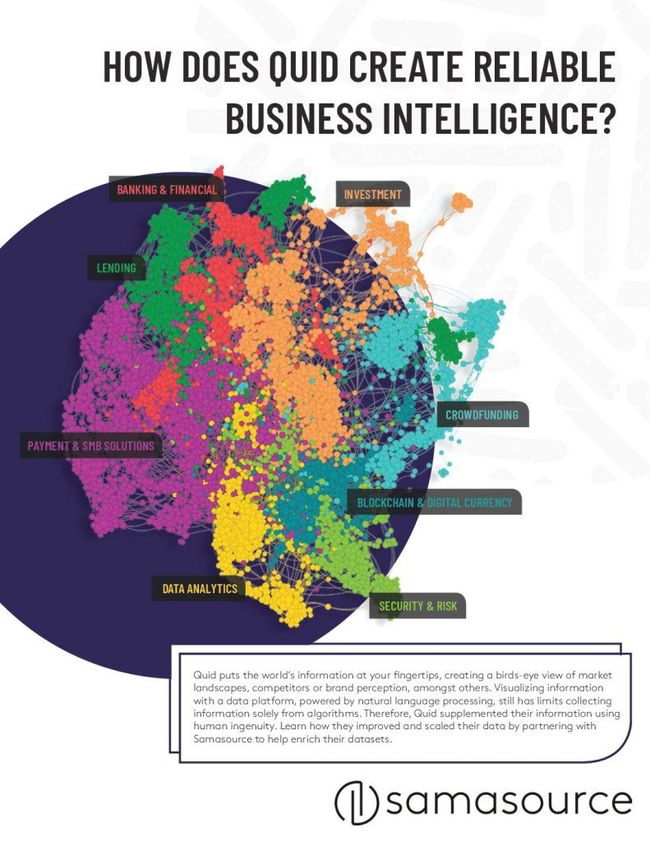

First envisioned in the 1950s, Artificial Intelligence (AI) and Machine Learning (ML) allow computers to see patterns in data and produce outputs without every step of the process being hard-coded. To distinguish the two, AI is the broader concept of machines carrying out ‘intelligent’ tasks, and ML is an application of AI where machines can be provided with data (e.g. an individual’s health data), learn for themselves, and produce an output (e.g. a recommended course of treatment for an individual).

After several periods in which funding dried up, AI has been gaining popularity since around the 2000s and is ever more visible in business and everyday life. You wake up to an alarm set by Alexa, which tells you your schedule for the day. You open your computer, likely made by smart robots in a factory, and log in using its facial recognition system. You procrastinate by scrolling a curated Facebook feed designed to hold your attention, or by browsing Netflix, which recommends TV shows and movies to suit your viewing tastes. It’s also likely that your work software uses many kinds of AI and ML.

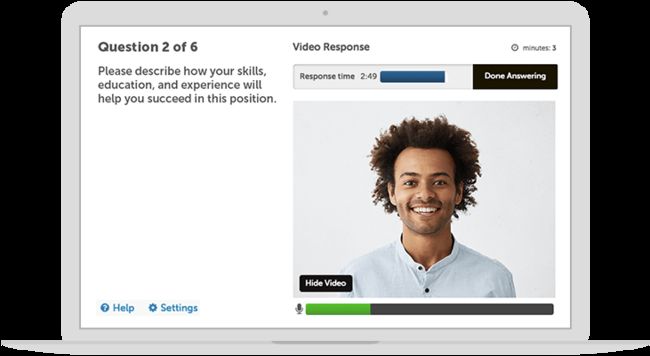

These algorithms rely on training and test data. For instance, a CV-checking model could be trained on past hiring data to pass applicants who demonstrate the qualities in the job description through to the next stage, and to reject those who do not. The model is then fed test data (new CVs it hasn’t seen before) so that someone can check whether its predicted outcomes are desirable. Once the model has been tweaked, it is rolled out for use on actual applicants’ CVs. The issue then is making sure the training data is not biased. Take the 2014 case study of Amazon’s trial recruitment algorithm: it was trained on data in which most of the people hired were men, so it penalised female applicants, and it was later scrapped.
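The train-then-test workflow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the applicant data is invented, the "model" is a single score threshold rather than a real CV-screening system, and the helper names (`split_train_test`, `fit_threshold`, `accuracy`) are hypothetical.

```python
import random

def split_train_test(rows, test_fraction=0.25, seed=0):
    """Shuffle rows reproducibly and hold back a fraction as unseen test data."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def fit_threshold(train):
    """Pick the score cut-off that classifies the most training rows correctly."""
    candidates = sorted({score for score, _ in train})

    def correct(threshold):
        return sum((score >= threshold) == hired for score, hired in train)

    return max(candidates, key=correct)

def accuracy(threshold, rows):
    """Fraction of rows where 'score >= threshold' matches the hiring label."""
    return sum((score >= threshold) == hired for score, hired in rows) / len(rows)

# Hypothetical data: (skills-match score from 0 to 10, was the applicant hired?)
past_applicants = [(1, False), (2, False), (3, False), (4, False),
                   (5, True), (6, True), (7, True), (8, True),
                   (2, False), (9, True), (3, False), (7, True)]

train, test = split_train_test(past_applicants)
threshold = fit_threshold(train)
print("learned threshold:", threshold)
print("accuracy on unseen test rows:", accuracy(threshold, test))
```

The point of holding back `test` is that the model never sees those rows while it is being fitted, so its accuracy on them is a fairer estimate of how it would behave on real applicants. Note that a high test accuracy says nothing about bias: if the historical labels are skewed, the model will reproduce the skew accurately.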

Audi Robotic Telepresence (ART): an expert technician remotely controls this robot, which works alongside an on-site technician to make repairs

There are different branches of ML too:

Supervised learning: uses labelled training data. The algorithm learns the rules connecting an input to a given output and uses those rules to make predictions, e.g. using records of which past job applicants were hired (a yes/no label) to decide whether to hire a new applicant

Unsupervised learning: uses unlabelled training data. The algorithm must find structures and patterns in the inputs on its own, e.g. clustering customers by demographic information and spending habits. There are no labels because the customer segments are not yet known

Reinforcement learning: trains an algorithm towards a specific goal. Each move the algorithm makes is either rewarded or punished, and this feedback lets it build the most efficient path to the goal, e.g. a robot arm picking up a component on a production line, or an AI trying to win the game of Go

Neural network: a model made of connected nodes (neurons) whose connections are strengthened or weakened during training, loosely inspired by the human brain, e.g. a neural network that learns to make stock market predictions from past data

Deep learning: neural networks with many layers, including architectures such as recurrent neural networks (RNN) and feedforward neural networks (FNN)

Natural Language Processing (NLP): computers processing human language, e.g. speech recognition, translation, sentiment analysis

Computer vision: teaching computers to identify, categorise, and understand the content of images and video, e.g. facial recognition systems

Audio and signal processing, e.g. text-to-speech, audio transcription, voice control, closed-captioning

Intelligent agents, e.g. collaborative robots (cobots) and voice agents like Alexa, Cortana, and Siri

Recommender systems: making suggestions based on subtle patterns over time, e.g. targeted advertising, recommendations on Amazon or Netflix

Augmented reality (AR) and virtual reality (VR), e.g. flight simulation training
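To make the unsupervised case in the list above more concrete, here is a minimal sketch of clustering customers by spending with a tiny one-dimensional k-means. The spending figures are invented, the function name `kmeans_1d` is hypothetical, and the starting centres are chosen by a simple assumption (evenly spaced points in the sorted data); a real project would more likely use a library implementation.

```python
def kmeans_1d(values, k=2, iterations=20):
    """Tiny 1-D k-means (k >= 2): returns the cluster centres and a label per value."""
    ordered = sorted(values)
    # Assumption: start the centres at evenly spaced points in the sorted data.
    centres = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centres[c]))
            groups[nearest].append(v)
        # Move each centre to the mean of its group; keep it if the group is empty.
        centres = [sum(g) / len(g) if g else centres[i] for i, g in enumerate(groups)]
    labels = [min(range(k), key=lambda c: abs(v - centres[c])) for v in values]
    return centres, labels

# Hypothetical monthly spend per customer; note that there are no labels.
monthly_spend = [20, 25, 22, 30, 200, 210, 190, 205]
centres, labels = kmeans_1d(monthly_spend, k=2)
print(centres)
print(labels)
```

Nobody told the algorithm that there are "low spenders" and "high spenders"; it finds the two segments from the numbers alone, which is the difference from the supervised case, where each row would carry a known answer.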

3. Human + Machine Diagram

4. MELDS Framework

A key focus of the book is the MELDS framework, whose five principles are required to become an AI-fuelled business:

Mindset: reimagining work around the missing middle

Experimentation: seeking opportunities to test and refine AI systems

Leadership: commitment to the responsible use of AI

Data: building a data supply chain to fuel intelligent systems

Skills: developing the skills necessary for reimagining processes in the missing middle

5. Responsible AI

The Leadership part of MELDS calls for Responsible AI, an important consideration as AI gains prominence in today’s society, for instance in cases where:

An algorithm is making a decision and must decide which factors to optimise, e.g. setting a student’s A-level grade with the goal of reducing grade inflation vs the goal of individual fairness, or deciding whether to grant someone a loan with fairness vs profit in mind

There are already systemic issues in society, e.g. when an algorithm is trained on biased hiring data which favours certain demographics (white, male, heterosexual), or when an algorithm that informs criminal justice decisions disproportionately penalises black defendants

The stakes are high, e.g. self-driving cars, where people’s lives are at stake, or political advertising on social media

When designing responsible AI, we should address a number of legal, ethical, and moral issues, ensuring that the following are in place:

Trust, transparency & responsibility: consideration of societal impact, and clearly stated goals and processes for the algorithms

Fair & ethical design standards

Regulation & GDPR compliance: defined standards for the provenance, use, and security of data sets, as well as data privacy

Human checking, testing & auditing of systems before and after deployment

Trust, transparency & responsibility

Any AI or tech system requires public trust, whether that is trust that a self-driving car will keep us safe, or trust that a test-and-trace app is worth downloading, using, and following instructions from. The problem is that tech projects don’t always go to plan and, as with any process, mistakes can be made: as we know, an A-level algorithm can give you the wrong grade. To secure public trust, it is important to be transparent about how an algorithm works and to allow appeals against, and changes to, decisions made by computers.

With AI also comes the question of where responsibility lies. Even if self-driving cars reduce the number of road fatalities overall, if a self-driving car collides with a pedestrian, who is legally responsible: the algorithm’s designers or the person behind the wheel? As for public attitudes, Gill Pratt, chief executive of the Toyota Research Institute, told lawmakers on Capitol Hill in 2017 that people are more inclined to forgive mistakes made by humans than those made by machines. This preference for trusting humans over machines (even when machines statistically perform better) has been termed “algorithm aversion”; it occurs, for instance, when people would rather rely on the decisions of a human doctor than trust AI.

Regarding responsibility, the term “moral crumple zones” has also been coined: “[T]he human in a highly complex and automated system may become simply a component — accidentally or intentionally — that bears the brunt of the moral and legal responsibilities when the overall system malfunctions.” This could be as serious as facing charges of vehicular manslaughter, down to an Uber driver getting bad feedback from a customer because their app malfunctioned and directed them to the wrong place — the human becomes a “liability sponge”. “While the crumple zone in a car is meant to protect the human driver, the moral crumple zone protects the integrity of the technological system, itself.”

Tesla: in 2016, Tesla announced that every new vehicle would have the hardware to drive autonomously, but that at first it would test drivers against software simulations running in the background. Tesla drivers are teaching the fleet of cars how to drive

Fair & ethical design standards

Firms should do all they can to eliminate bias from their AI systems, whether it lies in the training data, the algorithm itself, or the resulting decisions. Examples include HR systems that screen CVs or video interviews, which could carry a white-male bias, and software used to predict defendants’ future criminal behaviour, which could be biased against black defendants.

However, the fact that AI has the potential to be biased doesn’t mean it is not worth using. Humans have biases too, whether they are interviewing for a job or sitting on a jury in court. When created responsibly and tested thoroughly, AI has the potential to reduce this human bias while also saving cost and time. A healthcare AI can allow doctors to treat more patients, and a CV-checking AI can scan through many more CVs in a fraction of the time.

To address data biases, Google has launched the PAIR (People + AI Research) initiative, which provides open source tools for investigating bias. Some of the ideas discussed in its Guidebook include:

Being clear about the goal of the algorithm. For instance, when picking a threshold for granting a loan or insurance, consider goals such as being group-unaware (even where there are group differences, e.g. women paying less for life insurance than men because they tend to live longer), achieving demographic parity, or providing equal opportunity

Providing the right level of explanation to users so that they build clear mental models of the system’s capabilities and limits. Sometimes an explainable model is preferable to a more complex model with better accuracy

Showing a confidence level for the model’s output, which could be a numeric value or a list of potential outputs. Because AI outputs are based on statistics and probability, users shouldn’t trust the system completely and should know when to exercise their own judgment
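The three threshold goals named above can be compared on a toy loan example. This sketch uses the common working definitions, which are an assumption here rather than something the article spells out: group-unaware means one threshold for everyone, demographic parity means equal approval rates across groups, and equal opportunity means equal approval rates among the people who would actually repay. The data, the thresholds, and the function names are all invented for illustration.

```python
def approval_rate(applicants, threshold):
    """Share of all applicants in a group approved at this score threshold."""
    return sum(score >= threshold for score, _ in applicants) / len(applicants)

def true_positive_rate(applicants, threshold):
    """Share of applicants who would repay that are approved at this threshold."""
    repayer_scores = [score for score, repays in applicants if repays]
    return sum(score >= threshold for score in repayer_scores) / len(repayer_scores)

# Hypothetical (credit score, would repay the loan) pairs for two groups.
group_a = [(40, False), (45, False), (55, True), (60, True), (70, True), (80, True)]
group_b = [(30, False), (35, False), (38, False), (50, True), (52, True), (65, True)]

# Hand-picked thresholds per group, one pair per fairness goal (illustrative only).
policies = {
    "group-unaware":      {"a": 55, "b": 55},
    "demographic parity": {"a": 55, "b": 38},
    "equal opportunity":  {"a": 55, "b": 50},
}

for name, t in policies.items():
    print(name,
          "| approval A/B:", round(approval_rate(group_a, t["a"]), 2),
          round(approval_rate(group_b, t["b"]), 2),
          "| TPR A/B:", round(true_positive_rate(group_a, t["a"]), 2),
          round(true_positive_rate(group_b, t["b"]), 2))
```

In this made-up data the goals pull in different directions: a single shared threshold approves far fewer people in group B, matching approval rates means approving a group B applicant who would not repay, and matching true positive rates leaves the overall approval rates unequal. That tension is why the goal needs to be stated explicitly before a threshold is chosen.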

AI models are also often described as “black box systems”: we don’t fully know what is going on behind the scenes, or which criteria the computer finds important when making a decision. Take the example of an image recognition system that learns to “cheat” by looking for a copyright tag in the corner of all the correct images, rather than at the content of the images themselves. This creates the need for Explainable AI (XAI), where systems are thoroughly tested to see how changes in input affect the output decision or classification, or for “human-in-the-loop” AI, where humans review AI decisions. IBM has also developed an open source AI Fairness toolkit for this purpose.
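One simple way to test how changes in input affect the output is to nudge one input at a time and record how much the prediction moves. The sketch below assumes a model that can be called as a plain function on a dictionary of numeric features; `black_box` is a hypothetical stand-in that deliberately "cheats" on a copyright-tag feature, and `sensitivity` is an illustrative helper, not part of any named toolkit.

```python
def sensitivity(model, example, delta=1.0):
    """Change in the model's output when each input feature is nudged by delta."""
    baseline = model(example)
    effects = {}
    for name in example:
        perturbed = dict(example)      # copy, so the original example is untouched
        perturbed[name] += delta
        effects[name] = model(perturbed) - baseline
    return effects

# Hypothetical stand-in for an opaque image classifier: callers only see its score.
def black_box(features):
    return 1.0 * features["content_score"] + 8.0 * features["has_copyright_tag"]

image = {"content_score": 3.0, "has_copyright_tag": 0.0}
effects = sensitivity(black_box, image)
print(effects)
```

A tester who sees the score jump much more for the copyright tag than for the image content has found evidence of the kind of shortcut described above, without needing to open the box. Real models are rarely this linear, so this probe is a starting point for investigation rather than a full explanation.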

HireVue: video interviews for recruitment

Regulation & GDPR compliance

Should it be down to government regulation to keep tech companies in check? If so, can regulation even keep up with such a fast-moving and complex field? What happens when there are conflicts of interest, as in the FBI-Apple encryption dispute, where Apple refused to unlock a terrorist’s phone? And what about cases where social media platforms have been used for election meddling?

Although Institutional Review Boards (IRBs) are required for research affiliated with universities, they are largely absent from the commercial world. Companies often take it upon themselves to develop their own rules and research ethics committees. Companies need to think about the wider impact of their technology, and there is a place for ethical design standards, such as those proposed by the IEEE, built on the general principles of human rights, well-being, accountability, transparency, and awareness of misuse.

Human checking, testing and auditing

Testing is vital for AI systems that make decisions or interact with humans. For many companies, brand image and perception are tied to those of their AI agents: interactions with Amazon’s Alexa will shape your perception of Amazon as a company, meaning the company’s reputation is at stake. One example of this human-AI relationship turning sour is Microsoft’s chatbot Tay, which in 2016 learned from Twitter interactions and consequently tweeted vulgar, racist, and sexist language. In an ideal world, Microsoft would have anticipated these issues and implemented “guardrails”, like keyword/content filters or a sentiment monitor, to prevent this from happening.
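A keyword filter of the kind mentioned above can be very small. The following is a minimal sketch, not how Tay or any production chatbot was actually built: the blocklist contents, the fallback message, and the function name `guarded_reply` are all placeholders chosen for illustration.

```python
import re

# Hypothetical blocklist; a real deployment would need a maintained, reviewed list.
BLOCKED_TERMS = {"badword", "slur"}
FALLBACK = "Sorry, I cannot respond to that."

def guarded_reply(candidate_reply, blocked_terms=BLOCKED_TERMS):
    """Return the reply unchanged unless it contains a blocked term as a whole word."""
    words = set(re.findall(r"[a-z']+", candidate_reply.lower()))
    if words & blocked_terms:
        return FALLBACK
    return candidate_reply

print(guarded_reply("Hello there, how can I help?"))
print(guarded_reply("You are a BADWORD!"))
```

Whole-word matching keeps the filter from blocking innocent words that merely contain a blocked string, but it is easy to evade with misspellings, which is one reason such filters are usually combined with other checks, such as the sentiment monitoring mentioned above, and with human review.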

Other suggested checking methods include letting humans second-guess AI: trusting that workers have good judgment and often understand context better than machines. For example, an AI system could inform hospital bed allocation while humans have the final say.
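The idea of an AI that informs a decision while a person keeps the final say can be expressed as a small routing rule. This is a sketch under assumptions: the `Recommendation` type, the `final_allocation` function, the bed names, and the 0.8 review threshold are all hypothetical, and a real hospital system would involve far more than this.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    bed: str
    confidence: float  # the model's own estimate, between 0 and 1

def final_allocation(rec, human_choice=None, review_below=0.8):
    """Return (bed, decided_by). A human choice always overrides the model."""
    if human_choice is not None:
        return human_choice, "human"
    if rec.confidence < review_below:
        # Low confidence: do not act automatically, ask a person to decide.
        return None, "needs human review"
    return rec.bed, "model (unchallenged)"

print(final_allocation(Recommendation("Ward A, bed 3", 0.95)))
print(final_allocation(Recommendation("Ward A, bed 3", 0.95), human_choice="Ward B, bed 1"))
print(final_allocation(Recommendation("Ward C, bed 7", 0.50)))
```

Two design choices are worth noting: the human override is checked first, so no level of model confidence can outrank staff judgment, and low-confidence suggestions are held back rather than applied, which ties in with the earlier point about showing users a confidence level.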

Amazon Go: a store where cameras monitor the items you pick, and sensors talk to your phone and automatically charge your account

6. Fusion Skills

The “missing middle” embodies eight “fusion skills” which combine human and machine capabilities.

1. Re-humanising time: Reimagining business processes to amplify the time available for distinctly human tasks like interpersonal interactions, creativity, and decision-making, e.g. chatbots like Microsoft’s Cortana, IBM’s Watson, or IPsoft’s Amelia, which handle simple requests

2. Responsible normalising: The act of responsibly shaping the purpose and perception of human-machine interaction as it relates to individuals, businesses, and society, e.g. the A-level algorithm

3. Judgment integration: The judgment-based ability to decide a course of action when a machine is uncertain about what to do. This informs employees where to set up guardrails, investigate anomalies, or avoid putting a model into customer settings, e.g. driverless cars, or restricting the language and tone a chatbot or agent can use

4. Intelligent interrogation: Knowing how best to ask an AI agent questions to get the insights you need, e.g. price optimisation in a store

5. Bot-based empowerment: Working well with AI to extend your capabilities, e.g. financial crime experts receiving help from AI for fraud detection, or using network analysis for anti-money-laundering (AML)

6. Holistic melding: Developing mental models of AI agents that improve collaborative outcomes, e.g. robotic surgery

7. Reciprocal apprenticing: Two-way teaching and learning, from people to AI and from AI to people, e.g. training an AI system to recognise faults on a production line, then using the AI to train new employees

8. Relentless reimagining: The rigorous discipline of creating new processes and business models from scratch, rather than simply automating old processes, e.g. AI for predictive maintenance in cars, or to aid product development

Amazon robots, following Amazon’s 2012 acquisition of Kiva Systems

7. New Roles Created

The “missing middle” features six roles. In the first three, humans train ML models, explain algorithm outputs, and sustain the machines in a responsible manner. In the latter three, machines amplify human insight with advanced data analysis, interact with us at scale through novel interfaces, and embody human-like traits (for instance AI chatbots or agents like Alexa, Cortana, or Siri).

These missing-middle roles could create new kinds of jobs in the future, for example:

Trainer: providing the training data, e.g. language translators for NLP, or empathy and human behaviour training

Explainer: explaining complex black-box systems to non-technical people

Data supply-chain officer

Data hygienist: responsible for data cleansing, quality-checking, and pre-processing

Algorithm forensics analyst: investigating cases where AI outputs differ from what was intended

Context designer: balancing the tech with the business context

AI safety engineer: anticipating unintended consequences and mitigating them

Ethics compliance manager

Automation ethicist

Machine relations manager: like HR managers, “except they will oversee AI systems instead of human workers. They will be responsible for regularly conducting performance reviews of a company’s AI systems. They will promote those systems that perform well, replicating variants and deploying them to other parts of the organisation. Those systems with poor performance will be demoted and possibly decommissioned.”

8. Conclusion

AI can have far-reaching impacts when it works in collaboration with humans, who apply the eight “fusion skills” in the “missing middle”, and it will create a number of new jobs. Businesses of the future should apply the MELDS (Mindset, Experimentation, Leadership, Data, Skills) framework, and will need to address big questions: How do we ensure public trust in AI, and that companies design responsible AI? What design standards should we use to mitigate bias, and what do we do when AI outputs go wrong? How do we deal with the mass skilling and re-skilling of the workforce of the future? Governments, businesses, and wider society will shape the direction of AI and the Human + Machine relationship. What kind of future do we want to build?

9. Further Reading

See reports by Accenture, McKinsey, BCG, IBM (who also have an open source AI Fairness toolkit), Cognizant, the World Economic Forum Centre for the Fourth Industrial Revolution, the AI Now Institute, Partnership on AI, and AI for Good. Also look into the startup accelerator Y Combinator, which funds many innovative tech startups. Its former president, Sam Altman, has taken over as CEO of OpenAI, an AI research lab that aims to maximise AI’s benefits to humanity and limit its risks, and that is considered a competitor to Google’s DeepMind.

Translated from: https://medium.com/swlh/human-machine-3b9c535bc6c8
