- java项目打包_Java项目打包方式分析

weixin_39727402

java项目打包

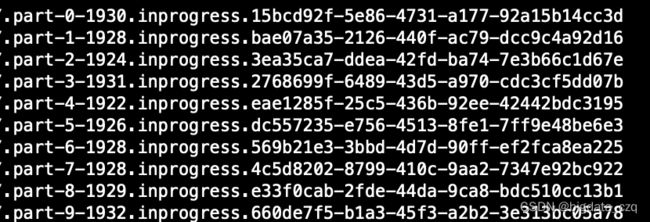

概述在项目实践过程中,有个需求需要做一个引擎能执行指定jar包的指定main方法。起初我们以一个简单的spring-boot项目进行测试,使用spring-boot-maven-plugin进行打包,使用java-cpdemo.jar.执行,结果报错找不到对应的类。我分析了spring-boot-maven-plugin打包的结构,又回头复习了java原生jar命令打包的结果,以及其他Maven打

- java spi 好处_Java SPI 实战

Gaven Wang

javaspi好处

SPI全称为(ServiceProviderInterface),是JDK内置的一种服务提供发现机制,可以轻松实现面向服务的注册与发现,完成服务提供与使用的解耦,并且可以实现动态加载SPI能做什么利用SPI机制,sdk的开发者可以为使用者提供扩展点,使用者无需修改源码,有点类似Spring@ConditionalOnMissingBean的意思动手实现一个SPI例如我们要正在开发一个sdk其中有一

- DHCP协议---动态主机配置协议

W111115_

计算机网络---HCIAlinux网络运维网络协议服务器

什么是DHCPDHCP(DynamicHostConfigurationProtocol,动态主机配置协议),前身是BOOTP协议,是一个局域网的网络协议,使用UDP协议工作,统一使用两个IANA分配的端口:67(服务器端),68(客户端)。DHCP通常被用于局域网环境,主要作用是集中的管理、分配IP地址,使client动态的获得IP地址、Gateway地址、DNS服务器地址等信息,并能够提升地址

- Java中的批处理优化:使用Spring Batch处理大规模数据的实践

微赚淘客系统开发者@聚娃科技

javaspringbatch

Java中的批处理优化:使用SpringBatch处理大规模数据的实践大家好,我是微赚淘客返利系统3.0的小编,是个冬天不穿秋裤,天冷也要风度的程序猿!在处理大规模数据的场景中,批处理是一个非常常见且必要的操作。Java中的SpringBatch是一个强大的框架,能够帮助我们高效地执行复杂的批处理任务。本文将带大家了解如何使用SpringBatch处理大规模数据,并通过代码示例展示如何实现高效的批

- Spring Batch :高效处理海量数据的利器

一叶飘零_sweeeet

Springbootspringboot

SpringBatch是Spring框架中一个功能强大的批处理框架,旨在帮助开发人员轻松处理大量数据的批量操作,比如数据的导入、导出、转换以及定期的数据清理等任务。它提供了一套完善且灵活的机制,使得原本复杂繁琐的数据批处理工作变得条理清晰、易于管理和扩展。接下来,我们将全方位深入探究SpringBatch,从其核心概念、架构组成,到具体的使用示例以及在不同场景下的应用优势等,带你充分领略它的魅力所

- RK3566系统移植 | 基于rk-linux-sdk移植uboot(2017.09)

Mculover666

linux

文章目录一、测试已有的配置二、移植到fireflyROC-RK3566开发板1.新建单板2.新建设备树3.编译4.测试一、测试已有的配置查看rksdk中提供的uboot中对于rk3566的配置:rk3566.config内容如下:CONFIG_BASE_DEFCONFIG="rk3568_defconfig"CONFIG_LOADER_INI="RK3566MINIALL.ini"因为rk3566

- Spring AI快速入门

学java的cc

spring大数据java

一、引入依赖org.springframework.aispring-ai-starter-model-openaiorg.springframework.aispring-ai-bom${spring-ai.version}pomimport二、配置模型spring:ai:openai:base-url:https://dashscope.aliyuncs.com/compatible-mode

- Spring Cloud 微服务架构部署模式

Java技术栈实战

架构springcloud微服务ai

SpringCloud微服务架构部署模式:从单体到云原生的进化路径关键词:SpringCloud、微服务架构、部署模式、容器化、Kubernetes、服务网格、DevOps摘要:本文系统解析SpringCloud微服务架构的核心部署模式,涵盖传统物理机部署、容器化部署、Kubernetes集群编排、服务网格集成等技术栈。通过技术原理剖析、实战案例演示和最佳实践总结,揭示不同部署模式的适用场景、技术

- Spring Boot项目初始化加载自定义配置文件内容到静态属性字段

@Corgi

Java面试题springboot后端java

文章目录创建配置文件cXXX.properties配置类XXXConfig.java添加第三方JAR包创建配置文件cXXX.properties在resource目录下新建配置文件cXXX.properties,内容如下:#商户号mch_id=xxxxx#商户密码pwd=xxxx#接口请求地址req_url=https://xxx#异步回调通知地址(请替换为实际地址)notify_url=htt

- 三阶落地:腾讯云Serverless+Spring Cloud的微服务实战架构

大熊计算机

#腾讯云架构腾讯云serverless

云原生演进的关键挑战(1)传统微服务架构痛点资源利用率低(非峰值期资源闲置率>60%)运维复杂度高(需管理数百个容器实例)突发流量处理能力弱(扩容延迟导致P99延迟飙升)(2)Serverless的破局价值腾讯云SCF(ServerlessCloudFunction)提供:毫秒级计费粒度(成本下降40%~70%)百毫秒级弹性伸缩(支持每秒万级并发扩容)零基础设施运维同步调用异步事件用户请求API网

- RAG 调优指南:Spring AI Alibaba 模块化 RAG 原理与使用

ApacheDubbo

spring人工智能架构SpringAIRAG

>夏冬,SpringAIAlibabaContributorRAG简介什么是RAG(检索增强生成)RAG(RetrievalAugmentedGeneration,检索增强生成)是一种结合信息检索和文本生成的技术范式。核心设计理念RAG技术就像给AI装上了「实时百科大脑」,通过先查资料后回答的机制,让AI摆脱传统模型的"知识遗忘"困境。️四大核心步骤1.文档切割→建立智能档案库核心任务:将海量文档

- 大数据面试必备:Kafka性能优化 Producer与Consumer配置指南

Kafka面试题-在Kafka中,如何通过配置优化Producer和Consumer的性能?回答重点在Kafka中,通过优化Producer和Consumer的配置,可以显著提高性能。以下是一些关键配置项和策略:1、Producer端优化:batch.size:批处理大小。增大batch.size可以使Producer每次发送更多的消息,但要注意不能无限制增大,否则会导致内存占用过多。linger

- Spring AI Alibaba 支持国产大模型的Spring ai框架

程序员老陈头

面试学习路线阿里巴巴spring人工智能java

总计30万奖金,SpringAIAlibaba应用框架挑战赛开赛点此了解SpringAI:java做ai应用的最好选择过去,Java在AI应用开发方面缺乏一个高效且易于集成的框架,这限制了开发者快速构建和部署智能应用程序的能力。SpringAI正是为解决这一问题而生,它提供了一套统一的接口,使得AI功能能够以一种标准化的方式被集成到现有的Java项目中。此外,SpringAI与原有的Spring生

- 企业级AI开发利器:Spring AI框架深度解析与实战_spring ai实战

AI大模型-海文

人工智能springpython算法开发语言java机器学习

企业级AI开发利器:SpringAI框架深度解析与实战一、前言:Java生态的AI新纪元在人工智能技术爆发式发展的今天,Java开发者面临着一个新的挑战:如何将大语言模型(LLMs)和生成式AI(GenAI)无缝融入企业级应用。传统的Java生态缺乏统一的AI集成方案,开发者往往需要为不同AI供应商(如OpenAI、阿里云、HuggingFace)编写大量重复的接口适配代码,这不仅增加了开发成本,

- Linux操作系统,故障排查

月堂

linux运维服务器

案例1:GRUB引导故障故障现象:系统启动卡在"GRUB>"提示符,无法进入系统原因分析:GRUB配置文件损坏(/boot/grub/grub.cfg)引导文件被误删或磁盘损坏解决步骤:在GRUB命令行依次执行:insmodxfssetroot=(hd0,msdos1)linux/vmlinuz-root=/dev/sda1initrd/initramfs-.imgboot进入系统后执行:grub

- Linux命令行操作基础

EnigmaCoder

Linuxlinux运维服务器

目录前言目录结构✍️语法格式操作技巧Tab补全光标操作基础命令登录和电源管理命令⚙️login⚙️last⚙️exit⚙️shutdown⚙️halt⚙️reboot文件命令⚙️浏览目录类命令pwdcdls⚙️浏览文件类命令catmorelessheadtail⚙️目录操作类命令mkdirrmdir⚙️文件操作类命令mvrmtouchfindgziptar⚙️cp前言大家好!我是EnigmaCod

- 图扑软件智慧云展厅,开启数字化展馆新模式

智慧园区

可视化5g人工智能大数据安全云计算

随着疫情的影响以及新兴技术的不断发展,展会的发展形式也逐渐从线下转向线上。通过“云”上启动、云端互动、双线共频的形式开展。通过应用大数据、人工智能、沉浸式交互等多重技术手段,构建数据共享、信息互通、精准匹配的高精度“云展厅”,突破时空壁垒限制。图扑软件运用HT强大的渲染功能,数字孪生“云展位”,1:1复现实际展厅内部独特的结构造型和建筑特色。也可以第一人称视角漫游,模拟用户在展厅内的参观场景,在保

- 高并发系统架构设计

茫茫人海一粒沙

系统架构java

在互联网系统中,“高并发”从来不是稀罕事:双十一秒杀、12306抢票、新人注册峰值、热点直播点赞……,如果你的系统没有良好的架构设计,很容易出现:接口超时、数据错乱、系统宕机。本文从六个核心维度出发,系统性讲解如何构建一套“抗得住流量洪峰”的企业级高并发架构。一、系统拆分——降低系统耦合度,提高弹性伸缩能力核心思想将单体系统按业务域/模块/职责划分为多个服务;采用微服务架构(如SpringClou

- flowable 修改历史变量

小云小白

springbootflowable修改历史变量springboot

简洁场景:对已结束流程的变量进行改动方法包含2个类1)核心方法,flowablecommand类:HistoricVariablesUpdateCmd2)执行command类:BpmProcessCommandService然后springboot执行方法即可:bpmProcessCommandService.executeUpdateHistoricVariables(processInstan

- vue大数据量列表渲染性能优化:虚拟滚动原理

Java小卷

Vue3开源组件实战vue3自定义Tree虚拟滚动

前面咱完成了自定义JuanTree组件各种功能的实现。在数据量很大的情况下,我们讲了两种实现方式来提高渲染性能:前端分页和节点数据懒加载。前端分页小节:Vue3扁平化Tree组件的前端分页实现节点数据懒加载小节:ElementTreePlus版功能演示:数据懒加载关于扁平化结构Tree和嵌套结构Tree组件的渲染嵌套结构的Tree组件是一种递归渲染,性能上比起列表结构的v-for渲染比较一般。对于

- Springboot --- 整合spring-data-jpa和spring-data-elasticsearch

百世经纶『一页書』

SpringbootJavaspringboot

Springboot---整合spring-data-jpa和spring-data-elasticsearch1.依赖2.配置文件3.代码部分3.1Entity3.2Repository3.3Config3.4Service3.5启动类3.6Test3.7项目结构SpringBoot:整合Ldap.SpringBoot:整合SpringDataJPA.SpringBoot:整合Elasticse

- redis的scan使用详解,结合spring使用详解

黑皮爱学习

redis自学笔记redisspring数据库

Redis的SCAN命令是一种非阻塞的迭代器,用于逐步遍历数据库中的键,特别适合处理大数据库。下面详细介绍其使用方法及在Spring框架中的集成方式。SCAN命令基础SCAN命令的基本语法:SCANcursor[MATCHpattern][COUNTcount]cursor:迭代游标,初始为0,每次迭代返回新的游标值。MATCHpattern:可选,用于过滤键的模式(如user:*)。COUNTc

- 实例化Bean对象的三种方式

越来越无动于衷

javasql开发语言

默认是无参数的构造方法(默认方式,基本上使用)静态工厂实例化特点:工厂方法属于静态方法,可直接通过类名调用,无需先创建工厂类的对象。优势:调用起来更为简便,性能方面也稍占优势。Spring配置:class属性指向静态工厂类,factory-method属性指向静态方法。示例代码:publicclassStaticFactory{publicstaticUserServicecreateUs(){r

- Spring AI入门教学:从零搭建智能应用(2025最新实践)

程序员子固

spring人工智能javaai

目录引言:为什么选择SpringAI?一、环境搭建(附避坑指南)1.开发环境要求2.依赖配置二、实战:智能客服接入(代码级详解)1.配置模型参数2.实现流式对话接口三、高级功能:多模态AI开发1.图像描述生成2.智能文档处理四、开发者工具箱1.调试技巧2.性能优化五、学习路径建议引言:为什么选择SpringAI?随着生成式AI技术的爆发式发展(如OpenAI的GPT-4.5新动态24),Java开

- springboot 集成mqtt 以bytes收发消息

springboot集成mqtt,且订阅和推送消息全部以bytesmaven加入依赖org.springframework.integrationspring-integration-streamorg.springframework.integrationspring-integration-mqttapplication.yml添加配置参数spring:#mqttmqtt:url:tcp://

- 基于SpringBoot实现MQTT消息收发

萧雲漢

SpringBootspringbootspringjava中间件iot

基于SpringBoot实现MQTT消息收发实验环境SpringBoot2.2.2.RELEASE:项目框架EMQXcommunitylatest:MQTT服务端Docker18.0.~:部署容器POM引入依赖包#pom.xmlorg.springframework.bootspring-boot-starter-integration2.2.2.RELEASEorg.springframewor

- springboot mqtt收发消息

java知路

springbootjavaspring

在SpringBoot中,可以使用MQTT协议来收发消息。以下是一个简单的示例:1.添加依赖在`pom.xml`文件中添加以下依赖:```xmlorg.springframework.integrationspring-integration-mqtt5.5.3```2.配置MQTT连接工厂在`application.properties`文件中添加以下配置:```propertiesspring

- SpringBoot Admin 详解

m0_74824170

springboot后端java

SpringBootAdmin详解一、Actuator详解1.Actuator原生端点1.1监控检查端点:health1.2应用信息端点:info1.3http调用记录端点:httptrace1.4堆栈信息端点:heapdump1.5线程信息端点:threaddump1.6获取全量Bean的端点:beans1.7条件自动配置端点:conditions1.8配置属性端点:configprops1.9

- Maven 多模块项目调试与问题排查总结

博主简介:CSDN博客专家,历代文学网(PC端可以访问:https://literature.sinhy.com/#/?__c=1000,移动端可微信小程序搜索“历代文学”)总架构师,15年工作经验,精通Java编程,高并发设计,Springboot和微服务,熟悉Linux,ESXI虚拟化以及云原生Docker和K8s,热衷于探索科技的边界,并将理论知识转化为实际应用。保持对新技术的好奇心,乐于分

- Spring启动流程简要分析

synsdeng

springspringjavaweb框架

本文基于SpringBoot1.4.x所写,最新源代码可到https://github.com/spring-projects/spring-boot上去下载。SpringBoot伴随Spring4.x发布,可以说是JavaWeb开发近几年来最有影响力的项目之一,极大的提高了开发效率。据我说知,很多公司新起的项目当中都用起了SpringBoot框架。SpringBoot项目启动非常简单,调用Spr

- 面向对象面向过程

3213213333332132

java

面向对象:把要完成的一件事,通过对象间的协作实现。

面向过程:把要完成的一件事,通过循序依次调用各个模块实现。

我把大象装进冰箱这件事为例,用面向对象和面向过程实现,都是用java代码完成。

1、面向对象

package bigDemo.ObjectOriented;

/**

* 大象类

*

* @Description

* @author FuJian

- Java Hotspot: Remove the Permanent Generation

bookjovi

HotSpot

openjdk上关于hotspot将移除永久带的描述非常详细,http://openjdk.java.net/jeps/122

JEP 122: Remove the Permanent Generation

Author Jon Masamitsu

Organization Oracle

Created 2010/8/15

Updated 2011/

- 正则表达式向前查找向后查找,环绕或零宽断言

dcj3sjt126com

正则表达式

向前查找和向后查找

1. 向前查找:根据要匹配的字符序列后面存在一个特定的字符序列(肯定式向前查找)或不存在一个特定的序列(否定式向前查找)来决定是否匹配。.NET将向前查找称之为零宽度向前查找断言。

对于向前查找,出现在指定项之后的字符序列不会被正则表达式引擎返回。

2. 向后查找:一个要匹配的字符序列前面有或者没有指定的

- BaseDao

171815164

seda

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

public class BaseDao {

public Conn

- Ant标签详解--Java命令

g21121

Java命令

这一篇主要介绍与java相关标签的使用 终于开始重头戏了,Java部分是我们关注的重点也是项目中用处最多的部分。

1

- [简单]代码片段_电梯数字排列

53873039oycg

代码

今天看电梯数字排列是9 18 26这样呈倒N排列的,写了个类似的打印例子,如下:

import java.util.Arrays;

public class 电梯数字排列_S3_Test {

public static void main(S

- Hessian原理

云端月影

hessian原理

Hessian 原理分析

一. 远程通讯协议的基本原理

网络通信需要做的就是将流从一台计算机传输到另外一台计算机,基于传输协议和网络 IO 来实现,其中传输协议比较出名的有 http 、 tcp 、 udp 等等, http 、 tcp 、 udp 都是在基于 Socket 概念上为某类应用场景而扩展出的传输协

- 区分Activity的四种加载模式----以及Intent的setFlags

aijuans

android

在多Activity开发中,有可能是自己应用之间的Activity跳转,或者夹带其他应用的可复用Activity。可能会希望跳转到原来某个Activity实例,而不是产生大量重复的Activity。

这需要为Activity配置特定的加载模式,而不是使用默认的加载模式。 加载模式分类及在哪里配置

Activity有四种加载模式:

standard

singleTop

- hibernate几个核心API及其查询分析

antonyup_2006

html.netHibernatexml配置管理

(一) org.hibernate.cfg.Configuration类

读取配置文件并创建唯一的SessionFactory对象.(一般,程序初始化hibernate时创建.)

Configuration co

- PL/SQL的流程控制

百合不是茶

oraclePL/SQL编程循环控制

PL/SQL也是一门高级语言,所以流程控制是必须要有的,oracle数据库的pl/sql比sqlserver数据库要难,很多pl/sql中有的sqlserver里面没有

流程控制;

分支语句 if 条件 then 结果 else 结果 end if ;

条件语句 case when 条件 then 结果;

循环语句 loop

- 强大的Mockito测试框架

bijian1013

mockito单元测试

一.自动生成Mock类 在需要Mock的属性上标记@Mock注解,然后@RunWith中配置Mockito的TestRunner或者在setUp()方法中显示调用MockitoAnnotations.initMocks(this);生成Mock类即可。二.自动注入Mock类到被测试类 &nbs

- 精通Oracle10编程SQL(11)开发子程序

bijian1013

oracle数据库plsql

/*

*开发子程序

*/

--子程序目是指被命名的PL/SQL块,这种块可以带有参数,可以在不同应用程序中多次调用

--PL/SQL有两种类型的子程序:过程和函数

--开发过程

--建立过程:不带任何参数

CREATE OR REPLACE PROCEDURE out_time

IS

BEGIN

DBMS_OUTPUT.put_line(systimestamp);

E

- 【EhCache一】EhCache版Hello World

bit1129

Hello world

本篇是EhCache系列的第一篇,总体介绍使用EhCache缓存进行CRUD的API的基本使用,更细节的内容包括EhCache源代码和设计、实现原理在接下来的文章中进行介绍

环境准备

1.新建Maven项目

2.添加EhCache的Maven依赖

<dependency>

<groupId>ne

- 学习EJB3基础知识笔记

白糖_

beanHibernatejbosswebserviceejb

最近项目进入系统测试阶段,全赖袁大虾领导有力,保持一周零bug记录,这也让自己腾出不少时间补充知识。花了两天时间把“传智播客EJB3.0”看完了,EJB基本的知识也有些了解,在这记录下EJB的部分知识,以供自己以后复习使用。

EJB是sun的服务器端组件模型,最大的用处是部署分布式应用程序。EJB (Enterprise JavaBean)是J2EE的一部分,定义了一个用于开发基

- angular.bootstrap

boyitech

AngularJSAngularJS APIangular中文api

angular.bootstrap

描述:

手动初始化angular。

这个函数会自动检测创建的module有没有被加载多次,如果有则会在浏览器的控制台打出警告日志,并且不会再次加载。这样可以避免在程序运行过程中许多奇怪的问题发生。

使用方法: angular .

- java-谷歌面试题-给定一个固定长度的数组,将递增整数序列写入这个数组。当写到数组尾部时,返回数组开始重新写,并覆盖先前写过的数

bylijinnan

java

public class SearchInShiftedArray {

/**

* 题目:给定一个固定长度的数组,将递增整数序列写入这个数组。当写到数组尾部时,返回数组开始重新写,并覆盖先前写过的数。

* 请在这个特殊数组中找出给定的整数。

* 解答:

* 其实就是“旋转数组”。旋转数组的最小元素见http://bylijinnan.iteye.com/bl

- 天使还是魔鬼?都是我们制造

ducklsl

生活教育情感

----------------------------剧透请原谅,有兴趣的朋友可以自己看看电影,互相讨论哦!!!

从厦门回来的动车上,无意中瞟到了书中推荐的几部关于儿童的电影。当然,这几部电影可能会另大家失望,并不是类似小鬼当家的电影,而是关于“坏小孩”的电影!

自己挑了两部先看了看,但是发现看完之后,心里久久不能平

- [机器智能与生物]研究生物智能的问题

comsci

生物

我想,人的神经网络和苍蝇的神经网络,并没有本质的区别...就是大规模拓扑系统和中小规模拓扑分析的区别....

但是,如果去研究活体人类的神经网络和脑系统,可能会受到一些法律和道德方面的限制,而且研究结果也不一定可靠,那么希望从事生物神经网络研究的朋友,不如把

- 获取Android Device的信息

dai_lm

android

String phoneInfo = "PRODUCT: " + android.os.Build.PRODUCT;

phoneInfo += ", CPU_ABI: " + android.os.Build.CPU_ABI;

phoneInfo += ", TAGS: " + android.os.Build.TAGS;

ph

- 最佳字符串匹配算法(Damerau-Levenshtein距离算法)的Java实现

datamachine

java算法字符串匹配

原文:http://www.javacodegeeks.com/2013/11/java-implementation-of-optimal-string-alignment.html------------------------------------------------------------------------------------------------------------

- 小学5年级英语单词背诵第一课

dcj3sjt126com

englishword

long 长的

show 给...看,出示

mouth 口,嘴

write 写

use 用,使用

take 拿,带来

hand 手

clever 聪明的

often 经常

wash 洗

slow 慢的

house 房子

water 水

clean 清洁的

supper 晚餐

out 在外

face 脸,

- macvim的使用实战

dcj3sjt126com

macvim

macvim用的是mac里面的vim, 只不过是一个GUI的APP, 相当于一个壳

1. 下载macvim

https://code.google.com/p/macvim/

2. 了解macvim

:h vim的使用帮助信息

:h macvim

- java二分法查找

蕃薯耀

java二分法查找二分法java二分法

java二分法查找

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

蕃薯耀 2015年6月23日 11:40:03 星期二

http:/

- Spring Cache注解+Memcached

hanqunfeng

springmemcached

Spring3.1 Cache注解

依赖jar包:

<!-- simple-spring-memcached -->

<dependency>

<groupId>com.google.code.simple-spring-memcached</groupId>

<artifactId>simple-s

- apache commons io包快速入门

jackyrong

apache commons

原文参考

http://www.javacodegeeks.com/2014/10/apache-commons-io-tutorial.html

Apache Commons IO 包绝对是好东西,地址在http://commons.apache.org/proper/commons-io/,下面用例子分别介绍:

1) 工具类

2

- 如何学习编程

lampcy

java编程C++c

首先,我想说一下学习思想.学编程其实跟网络游戏有着类似的效果.开始的时候,你会对那些代码,函数等产生很大的兴趣,尤其是刚接触编程的人,刚学习第一种语言的人.可是,当你一步步深入的时候,你会发现你没有了以前那种斗志.就好象你在玩韩国泡菜网游似的,玩到一定程度,每天就是练级练级,完全是一个想冲到高级别的意志力在支持着你.而学编程就更难了,学了两个月后,总是觉得你好象全都学会了,却又什么都做不了,又没有

- 架构师之spring-----spring3.0新特性的bean加载控制@DependsOn和@Lazy

nannan408

Spring3

1.前言。

如题。

2.描述。

@DependsOn用于强制初始化其他Bean。可以修饰Bean类或方法,使用该Annotation时可以指定一个字符串数组作为参数,每个数组元素对应于一个强制初始化的Bean。

@DependsOn({"steelAxe","abc"})

@Comp

- Spring4+quartz2的配置和代码方式调度

Everyday都不同

代码配置spring4quartz2.x定时任务

前言:这些天简直被quartz虐哭。。因为quartz 2.x版本相比quartz1.x版本的API改动太多,所以,只好自己去查阅底层API……

quartz定时任务必须搞清楚几个概念:

JobDetail——处理类

Trigger——触发器,指定触发时间,必须要有JobDetail属性,即触发对象

Scheduler——调度器,组织处理类和触发器,配置方式一般只需指定触发

- Hibernate入门

tntxia

Hibernate

前言

使用面向对象的语言和关系型的数据库,开发起来很繁琐,费时。由于现在流行的数据库都不面向对象。Hibernate 是一个Java的ORM(Object/Relational Mapping)解决方案。

Hibernte不仅关心把Java对象对应到数据库的表中,而且提供了请求和检索的方法。简化了手工进行JDBC操作的流程。

如

- Math类

xiaoxing598

Math

一、Java中的数字(Math)类是final类,不可继承。

1、常数 PI:double圆周率 E:double自然对数

2、截取(注意方法的返回类型) double ceil(double d) 返回不小于d的最小整数 double floor(double d) 返回不大于d的整最大数 int round(float f) 返回四舍五入后的整数 long round