Flink Type Conversion and Handling the UTC+8 (Beijing Time) Time Zone Issue

Data types supported by Realtime Compute

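| Data type | Description | Value range |
|---|---|---|
| VARCHAR | Variable-length string | The maximum size of a VARCHAR is 4 MB. |
| BOOLEAN | Logical value | TRUE, FALSE, or UNKNOWN |
| TINYINT | Tiny integer, 1-byte integer | -128 to 127 |
| SMALLINT | Small integer, 2-byte integer | -32768 to 32767 |
| INT | Integer, 4-byte integer | -2147483648 to 2147483647 |
| BIGINT | Long integer, 8-byte integer | -9223372036854775808 to 9223372036854775807 |
| FLOAT | 4-byte floating point | 6 significant decimal digits |
| DECIMAL | Decimal type | Example: `123.45` |
| DOUBLE | Floating point, 8-byte floating point | 15 significant decimal digits |
| DATE | Date type | Example: `DATE '1969-07-20'` |
| TIME | Time type | Example: `TIME '20:17:40'` |
| TIMESTAMP | Timestamp, shows date and time | Example: `TIMESTAMP '1969-07-20 20:17:40'` |
| VARBINARY | Binary data | |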
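As a quick sanity check on the integer ranges and floating-point precision in the table, the sketch below prints them from plain Java. It assumes Flink's default Java conversion classes for these SQL types (`Byte`, `Short`, `Integer`, `Long`, `Float`, `Double`); the class name `SqlTypeRanges` is illustrative only.

```java
public class SqlTypeRanges {
    // Formats a min/max pair the same way as the "Value range" column
    static String range(long min, long max) {
        return min + " to " + max;
    }

    public static void main(String[] args) {
        // TINYINT/SMALLINT/INT/BIGINT correspond to Java byte/short/int/long
        System.out.println("TINYINT  (1 byte) : " + range(Byte.MIN_VALUE, Byte.MAX_VALUE));
        System.out.println("SMALLINT (2 bytes): " + range(Short.MIN_VALUE, Short.MAX_VALUE));
        System.out.println("INT      (4 bytes): " + range(Integer.MIN_VALUE, Integer.MAX_VALUE));
        System.out.println("BIGINT   (8 bytes): " + range(Long.MIN_VALUE, Long.MAX_VALUE));

        // FLOAT keeps only about 6-7 significant digits, DOUBLE about 15-16,
        // so the same literal is rounded much earlier when stored as a float
        float f = 1.23456789012345f;
        double d = 1.23456789012345;
        System.out.println("FLOAT : " + f);
        System.out.println("DOUBLE: " + d);
    }
}
```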

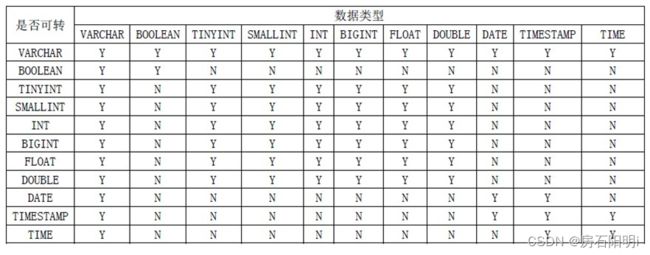

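Data type conversion:

Type conversion example

Test data:

| var1 (VARCHAR) | big1 (BIGINT) |
|---|---|
| 1000 | 323 |

Test statements:

`CAST(var1 AS BIGINT) AS AA`

`CAST(big1 AS VARCHAR) AS BB`

Test results:

| AA (BIGINT) | BB (VARCHAR) |
|---|---|
| 1000 | 323 |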
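The two casts behave roughly like the following Java conversions on the test data. This is only an analogy built on `Long.parseLong` and `Long.toString`; the class and method names are hypothetical. Flink's handling of malformed input such as `CAST('abc' AS BIGINT)` depends on the version and is not modeled here, whereas this sketch simply throws `NumberFormatException`.

```java
public class CastExample {
    // Analogue of CAST(var1 AS BIGINT): parse a decimal string into a long
    static long castToBigint(String var1) {
        return Long.parseLong(var1);
    }

    // Analogue of CAST(big1 AS VARCHAR): render a long as its decimal string
    static String castToVarchar(long big1) {
        return Long.toString(big1);
    }

    public static void main(String[] args) {
        long aa = castToBigint("1000"); // var1 = '1000'
        String bb = castToVarchar(323L); // big1 = 323
        System.out.println("AA(BIGINT)=" + aa + "  BB(VARCHAR)=" + bb);
    }
}
```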

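Oracle time zone issue:

During testing, after the time columns were converted to BIGINT, the values were 8 hours later than they should have been, which made the results incorrect.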
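A likely explanation, stated here as an assumption rather than confirmed connector behavior: the Oracle DATE/TIMESTAMP columns hold Beijing wall-clock time with no zone attached, and that wall-clock value gets turned into epoch milliseconds as if it were UTC. The sketch below reproduces the effect with `java.time`; the class name and sample timestamp are hypothetical.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;

public class EightHourShift {
    // Epoch millis obtained by (wrongly) treating a Beijing wall-clock time as UTC
    static long asIfUtc(LocalDateTime wallClock) {
        return wallClock.toInstant(ZoneOffset.UTC).toEpochMilli();
    }

    // Epoch millis obtained by interpreting the same wall-clock time in Asia/Shanghai (UTC+8)
    static long inShanghai(LocalDateTime wallClock) {
        return wallClock.atZone(ZoneId.of("Asia/Shanghai")).toInstant().toEpochMilli();
    }

    public static void main(String[] args) {
        LocalDateTime loginTime = LocalDateTime.of(2021, 6, 1, 10, 0, 0); // hypothetical LOGIN_TIME
        long wrong = asIfUtc(loginTime);
        long right = inShanghai(loginTime);
        System.out.println("treated as UTC : " + wrong);
        System.out.println("Asia/Shanghai  : " + right);
        System.out.println("difference (ms): " + (wrong - right)); // 8 h = 28800000 ms
    }
}
```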

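Solutions:

1. Subtract (or add) eight hours directly: `CAST(CURRENT_TIMESTAMP AS BIGINT) * 1000 - 8 * 60 * 60 * 1000`. The `* 1000` converts the cast result from seconds to milliseconds, and `8 * 60 * 60 * 1000` is eight hours (28,800,000 ms).

2. Use LOCALTIMESTAMP to get the current local system time: `CAST(DATE_FORMAT(LOCALTIMESTAMP, 'yyMM') AS VARCHAR)`.

In the Flink version used here, both CURRENT_DATE and CURRENT_TIMESTAMP are affected by the time zone issue, while LOCALTIMESTAMP can be used safely.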
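The sketch below checks solution 1 against a zone-aware conversion and mimics solution 2's `'yyMM'` formatting using `java.time`. It assumes the source times are in `Asia/Shanghai`; the class, method names, and sample value are hypothetical.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

public class EightHourFix {
    // Same value as 8 * 60 * 60 * 1000 in the SQL: 28,800,000 ms
    static final long EIGHT_HOURS_MS = 8L * 60 * 60 * 1000;

    // Solution 1: subtract a fixed 8 h from epoch millis that were computed as if UTC
    static long fixByOffset(long millisAsIfUtc) {
        return millisAsIfUtc - EIGHT_HOURS_MS;
    }

    // Solution 2 analogue: format local wall-clock time, like DATE_FORMAT(LOCALTIMESTAMP, 'yyMM')
    static String yyMM(LocalDateTime localTime) {
        return localTime.format(DateTimeFormatter.ofPattern("yyMM"));
    }

    public static void main(String[] args) {
        LocalDateTime wallClock = LocalDateTime.of(2021, 6, 1, 10, 0); // hypothetical value
        long asIfUtc = wallClock.toInstant(ZoneOffset.UTC).toEpochMilli();
        long correct = wallClock.atZone(ZoneId.of("Asia/Shanghai")).toInstant().toEpochMilli();
        System.out.println("fixed offset matches zone conversion: " + (fixByOffset(asIfUtc) == correct));
        System.out.println("yyMM of current local time: " + yyMM(LocalDateTime.now(ZoneId.of("Asia/Shanghai"))));
    }
}
```

A fixed 8-hour offset works here only because China Standard Time has no daylight saving time (none since 1991); for zones with DST, a zone-aware conversion such as `atZone(...)` is the safer choice.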

Full example (Oracle to Elasticsearch):

```java
package FlinkTableApi;

import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.environment.CheckpointConfig;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.EnvironmentSettings;
import org.apache.flink.table.api.SqlDialect;
import org.apache.flink.table.api.TableResult;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class CP_LOGIN_INFO {

    private static final Logger log = LoggerFactory.getLogger(CP_LOGIN_INFO.class); // logger

    public static void main(String[] args) throws Exception {
        // Build the environment settings (Blink planner, streaming mode)
        EnvironmentSettings fsSettings = EnvironmentSettings.newInstance()
                .useBlinkPlanner()
                .inStreamingMode()
                .build();

        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1); // stream parallelism

        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env, fsSettings); // create the stream table environment
        tableEnv.getConfig().setSqlDialect(SqlDialect.DEFAULT);

        log.info("Starting Flink job {}", "CP_LOGIN_INFO"); // write a log line

        // Checkpoint configuration
        env.enableCheckpointing(180000); // enable checkpointing, one checkpoint every 180000 ms
        env.getCheckpointConfig().setMinPauseBetweenCheckpoints(50000); // at least 50000 ms between two checkpoints
        env.getCheckpointConfig().setCheckpointTimeout(600000); // a checkpoint must finish within this time, otherwise it is discarded
        env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE); // exactly-once mode; EXACTLY_ONCE and AT_LEAST_ONCE are supported
        env.getCheckpointConfig().setMaxConcurrentCheckpoints(1); // number of concurrent checkpoints
        env.getCheckpointConfig().setCheckpointStorage("hdfs:///flink-checkpoints/oracle/CP_LOGIN_INFO"); // store checkpoints in this HDFS directory
        env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION); // keep externalized checkpoints after the job is cancelled
        env.getCheckpointConfig().enableUnalignedCheckpoints(); // enable experimental unaligned checkpoints

        String sourceDDL = "CREATE TABLE Oracle_Source (\n" +
                " ID DECIMAL(12,0), \n" +
                " USER_CODE STRING, \n" +
                " LOGIN_TIME STRING, \n" +      // must be STRING; with DATE or TIMESTAMP the CAST to BIGINT does not work
                " OVER_TIME STRING, \n" +       // must be STRING (same reason)
                " TOKEN STRING, \n" +
                " INSERT_TIME_HIS STRING, \n" + // must be STRING (same reason)
                " UPDATE_TIME_HIS STRING, \n" + // must be STRING (same reason)
                " VERSION STRING, \n" +
                " PRIMARY KEY (ID) NOT ENFORCED \n" +
                " ) WITH (\n" +
                " 'connector' = 'oracle-cdc',\n" +
                " 'hostname' = 'Oracle_IP_address',\n" +
                " 'port' = '1521',\n" +
                " 'username' = 'usernamexxx',\n" +
                " 'password' = 'pwdxxx',\n" +
                " 'database-name' = 'ORCL',\n" +
                " 'schema-name' = 'namexxx',\n" + // the schema name must be uppercase
                " 'table-name' = 'CP_LOGIN_INFO',\n" +
                " 'debezium.log.mining.continuous.mine'='true',\n" + // works on Oracle 11g; Oracle 19c reports an error
                " 'debezium.log.mining.strategy'='online_catalog',\n" + // only reads the Oracle logs and does not produce new archived log files
                " 'debezium.log.mining.sleep.time.increment.ms'='5000',\n" + // a sleep interval slows the memory growth of the Oracle connection
                " 'debezium.log.mining.batch.size.max'='50000000000000',\n" + // if this is too small, mining cannot keep up with the SCN and the job fails
                " 'debezium.log.mining.batch.size.min'='10000',\n" +
                " 'debezium.log.mining.session.max.ms'='1200000',\n" + // session duration; if your redo logs rarely switch, setting how often Oracle switches logs avoids ORA-04036
                " 'debezium.database.tablename.case.insensitive'='false',\n" + // disable case-insensitive table-name matching
                " 'scan.startup.mode' = 'initial' \n" + // initial mode: full snapshot first, then incremental changes are read automatically
                " )";

        // Output table: the time columns are converted to BIGINT (via CAST)
        String sinkDDL = "CREATE TABLE SinkTable (\n" +
                " ID DECIMAL(12,0), \n" +
                " USER_CODE STRING, \n" +
                " LOGIN_TIME BIGINT, \n" +      // BIGINT per business requirements; ES creates the field and its type automatically on write
                " OVER_TIME BIGINT, \n" +       // BIGINT (same as above)
                " TOKEN STRING, \n" +
                " INSERT_TIME_HIS BIGINT, \n" + // BIGINT (same as above)
                " UPDATE_TIME_HIS BIGINT, \n" + // BIGINT (same as above)
                " VERSION STRING, \n" +
                " PRIMARY KEY (ID) NOT ENFORCED \n" +
                ") WITH (\n" +
                " 'connector' = 'elasticsearch-7',\n" +
                " 'hosts' = 'http://ES_IP_address:9200',\n" +
                " 'format' = 'json',\n" + // must be set
                " 'index' = 'cp_login_info_testes',\n" + // Elasticsearch index names must be lowercase
                " 'username' = 'userxxx',\n" +
                " 'password' = 'pwdxxx',\n" +
                " 'failure-handler' = 'ignore',\n" + // failed requests are dropped silently
                " 'sink.flush-on-checkpoint' = 'true',\n" +
                " 'sink.bulk-flush.max-actions' = '20000',\n" +
                " 'sink.bulk-flush.max-size' = '2mb',\n" +
                " 'sink.bulk-flush.interval' = '1000ms',\n" +
                " 'sink.bulk-flush.backoff.strategy' = 'CONSTANT',\n" +
                " 'sink.bulk-flush.backoff.max-retries' = '3',\n" +
                " 'connection.max-retry-timeout' = '3153600000000',\n" + // ES connection timeout; if it is too short the connection is dropped
                " 'sink.bulk-flush.backoff.delay' = '100ms'\n" +
                ")";

        // Convert each time column to BIGINT and subtract 8 hours:
        // (CAST(column AS type) - time zone offset) AS new or sink column name
        String transformSQL =
                " INSERT INTO SinkTable SELECT ID,\n" +
                "USER_CODE,\n" +
                "(CAST(LOGIN_TIME AS BIGINT) - 8 * 60 * 60 * 1000 ) as LOGIN_TIME,\n" +
                "(CAST(OVER_TIME AS BIGINT) - 8 * 60 * 60 * 1000 ) as OVER_TIME,\n" +
                "TOKEN,\n" +
                "(CAST(INSERT_TIME_HIS AS BIGINT) - 8 * 60 * 60 * 1000 ) as INSERT_TIME_HIS,\n" +
                "(CAST(UPDATE_TIME_HIS AS BIGINT) - 8 * 60 * 60 * 1000 ) as UPDATE_TIME_HIS,\n" +
                "VERSION FROM Oracle_Source ";

        tableEnv.executeSql(sourceDDL); // run the source table DDL
        tableEnv.executeSql(sinkDDL);   // run the sink table DDL

        // Run the INSERT; executeSql submits the job by itself
        TableResult tableResult = tableEnv.executeSql(transformSQL);
        // Block until the job terminates. env.execute() is not called: the pipeline is defined
        // only through SQL, so the DataStream environment has no operators and would fail.
        tableResult.await();
    }
}
```
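The four time columns in `transformSQL` repeat the same expression. If more columns need the same correction, a small helper could generate the SELECT list instead. This is a hypothetical sketch (`TransformSqlBuilder`, `shiftedColumn`, and `buildInsert` are not part of Flink); it emits `(CAST(col AS BIGINT) - 28800000) AS col`, where 28800000 is the value of `8 * 60 * 60 * 1000`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class TransformSqlBuilder {
    // UTC+8 offset in milliseconds, the value subtracted in the original transformSQL
    static final long UTC8_OFFSET_MS = 8L * 60 * 60 * 1000;

    // Produces "(CAST(col AS BIGINT) - 28800000) AS col"
    static String shiftedColumn(String column) {
        return "(CAST(" + column + " AS BIGINT) - " + UTC8_OFFSET_MS + ") AS " + column;
    }

    // Builds INSERT ... SELECT, copying plain columns and shifting the listed time columns
    static String buildInsert(String sink, String source, List<String> columns, List<String> timeColumns) {
        String select = columns.stream()
                .map(c -> timeColumns.contains(c) ? shiftedColumn(c) : c)
                .collect(Collectors.joining(",\n  "));
        return "INSERT INTO " + sink + "\nSELECT\n  " + select + "\nFROM " + source;
    }

    public static void main(String[] args) {
        List<String> columns = Arrays.asList("ID", "USER_CODE", "LOGIN_TIME", "OVER_TIME", "TOKEN",
                "INSERT_TIME_HIS", "UPDATE_TIME_HIS", "VERSION");
        List<String> timeColumns = Arrays.asList("LOGIN_TIME", "OVER_TIME", "INSERT_TIME_HIS", "UPDATE_TIME_HIS");
        System.out.println(buildInsert("SinkTable", "Oracle_Source", columns, timeColumns));
    }
}
```

The resulting string could then be passed to `tableEnv.executeSql(...)` in place of the hand-written `transformSQL`.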