How the ivshmem-plain Device Works

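Table of Contents

- Preface
- Basic Principles
  - Shared Memory
  - Protocol Specification
- Implementation
  - Device Model
  - Data Structures
  - Device Initialization
- Testing and Verification
  - Approach
  - Procedure
    - Libvirt Configuration
    - QEMU Configuration
    - Test Steps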

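Preface

- The ivshmem-plain device is a special device provided by QEMU. It lets a region of guest memory be shared with other processes on the host, so applications can use it for efficient data transfer between a VM and host processes. Typically a process inside the VM acts as the producer and writes data into the shared memory, while a host process acts as the consumer and reads it. This pattern is common in virtualized antivirus products. An antivirus driver inside the VM collects behavioral data about the VM and places it in shared memory. The antivirus back end on the host then analyzes the exposed data to decide whether the VM is behaving abnormally or is even infected. This article analyzes the ivshmem-plain device, which is the foundation such antivirus software is built on.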

Basic Principles

Shared Memory

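- Linux supports shared memory between processes and exposes it through a file-based programming interface. Typically one process opens a shared memory file and writes to it as the producer. Another process opens the same file read-only and reads from it as the consumer. A simple pair of test programs shows how shared memory is used; see the reference code shared memory.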

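- There are two test programs:
  - The producer (sender) creates the shared memory file /dev/shm/ivshmem-demo and writes three integers into it: 0, 1 and 2.
  - The consumer (receiver) opens /dev/shm/ivshmem-demo read-only and reads the contents back.

  The test programs produce the following output: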

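- When the producer maps the shared memory file, the process address space reserves more memory than the data actually written (three integers): a full 4 KiB. This is because mmap works in whole pages and rounds every mapping up to the page size. The smallest unit of shared memory is therefore one page.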

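- QEMU's ivshmem-plain device relies on exactly this Linux shared memory mechanism to share memory between the VM and host processes. The guest sees the shared region as the device's BAR2. To a host process, that same region is just a shared memory file that QEMU has created and mapped, so both sides can access it.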
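The author's shared memory sample is only referenced, not reproduced. The sketch below shows the same sender/receiver pattern in C under a few assumptions:

- The file is opened directly by path (for example /dev/shm/ivshmem-demo) with open(2) and mmap(2), rather than through shm_open(3).
- demo_send, demo_receive and demo_mapped_size are hypothetical names.

demo_mapped_size illustrates the page rounding described above.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

#define DEMO_COUNT 3 /* the sender writes 0, 1, 2 (hypothetical demo layout) */

/* Producer (sender): create the file, size it, map it shared and write
 * the integers 0..DEMO_COUNT-1. Returns 0 on success, -1 on error. */
int demo_send(const char *path)
{
    size_t len = DEMO_COUNT * sizeof(int);
    int fd = open(path, O_RDWR | O_CREAT | O_TRUNC, 0600);
    if (fd < 0)
        return -1;
    if (ftruncate(fd, (off_t)len) < 0) {
        close(fd);
        return -1;
    }
    int *p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd); /* the mapping stays valid after the fd is closed */
    if (p == MAP_FAILED)
        return -1;
    for (int i = 0; i < DEMO_COUNT; i++)
        p[i] = i;
    munmap(p, len);
    return 0;
}

/* Consumer (receiver): open the same file read-only, map it and copy
 * the integers out. Returns 0 on success, -1 on error. */
int demo_receive(const char *path, int out[DEMO_COUNT])
{
    size_t len = DEMO_COUNT * sizeof(int);
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    int *p = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    close(fd);
    if (p == MAP_FAILED)
        return -1;
    for (int i = 0; i < DEMO_COUNT; i++)
        out[i] = p[i];
    munmap(p, len);
    return 0;
}

/* Mappings are made in whole pages, so a request of len bytes occupies
 * len rounded up to the page size in the address space. */
size_t demo_mapped_size(size_t len)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    return (len + page - 1) / page * page;
}
```

For example, one process calls demo_send("/dev/shm/ivshmem-demo"), and another then calls demo_receive on the same path and gets 0, 1, 2. On a system with 4 KiB pages, demo_mapped_size(3 * sizeof(int)) returns 4096, which matches the 4K mapping seen for the producer.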

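Protocol Specification

- The ivshmem specification defines how a shared memory device is implemented. It is built on QEMU's PCI device model:
  - The front end (the guest) treats one of the PCI device's BARs as ordinary memory.
  - The back end (QEMU) backs the MemoryRegion (MR) associated with that BAR with a shared memory file.

  Together, these expose the BAR to other processes.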

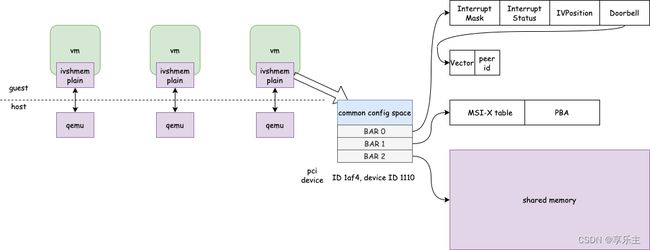

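- The ivshmem-plain device mainly uses the shared memory part of the specification. That part is simple and takes up little of the spec. Most of the ivshmem specification explains how the PCI BAR registers deliver interrupt notifications between the devices sharing the memory, which is the ivshmem-doorbell device. The ivshmem-doorbell interrupt mechanism is covered separately and is not discussed in this article.

  Both ivshmem devices, ivshmem-plain and ivshmem-doorbell, share the same architecture, as shown in the figure. Functionally, ivshmem-plain relies only on BAR2; its BAR0 is registered too, but the interrupt registers go unused. The ivshmem-doorbell device additionally uses BAR0 and BAR1.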

Implementation

Device Model

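- The original design implemented a single device named ivshmem. It supported both plain shared memory and shared memory with doorbell interrupts, but the implementation was tightly coupled and hard to follow. It was therefore split by function into two devices, ivshmem-plain and ivshmem-doorbell. The original ivshmem device was kept as a legacy device and marked deprecated, though its code was retained.

- In current QEMU, "the ivshmem device" mainly refers to ivshmem-plain and ivshmem-doorbell. The model is as follows: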

Data Structures

- The core data structure of the ivshmem devices is IVShmemState:

```c
/* BAR0 registers defined by the ivshmem specification */
/* registers for the Inter-VM shared memory device */
enum ivshmem_registers {
    INTRMASK = 0,
    INTRSTATUS = 4,
    IVPOSITION = 8,
    DOORBELL = 12,
};

typedef struct IVShmemState {
    /*< private >*/
    PCIDevice parent_obj;
    /*< public >*/

    /* user-configurable feature bits */
    uint32_t features;

    /* exactly one of these two may be set */
    /* ivshmem-plain: shared memory comes from the memory backend given by memdev */
    HostMemoryBackend *hostmem; /* without interrupts (ivshmem-plain) */
    /* ivshmem-doorbell: shared memory is mapped from the fd of the shared
     * memory file passed in by the server */
    CharBackend server_chr;     /* with interrupts (ivshmem-doorbell) */

    /* registers */
    uint32_t intrmask;   /* Interrupt Mask */
    uint32_t intrstatus; /* Interrupt Status */
    /*
     * Unused by ivshmem-plain, where it stays 0.
     * ivshmem-doorbell uses it as the device's own ID within the sharing
     * domain; other devices name this ID as the destination when they send
     * an interrupt. vm_id ranges over [0, 65535]. ivshmem-server assigns
     * IDs to clients in connection order starting from 0, so the master
     * client must be the first ivshmem-doorbell device to connect and gets
     * vm_id 0; clients that connect later act as peers. Only the master
     * supports migration. With master=auto, a device whose vm_id is 0
     * (which includes every ivshmem-plain device) is treated as master.
     */
    int vm_id;           /* IVPosition */

    /* BARs */
    MemoryRegion ivshmem_mmio;  /* BAR 0 (registers): MMIO MR backing BAR0 */
    MemoryRegion *ivshmem_bar2; /* BAR 2 (shared memory): for ivshmem-plain,
                                 * the MR of the memdev backend */
    /* For ivshmem-doorbell, the server opens the shared memory file and
     * passes its descriptor over SCM_RIGHTS; the client maps it into
     * server_bar2, and ivshmem_bar2 then points at server_bar2. */
    MemoryRegion server_bar2;   /* used with server_chr */

    /* interrupt support */
    Peer *peers;                /* the other devices in the sharing domain */
    int nb_peers;               /* space in @peers[], i.e. number of peers */
    uint32_t vectors;
    MSIVector *msi_vectors;
    uint64_t msg_buf;           /* buffer for receiving server messages */
    int msg_buffered_bytes;     /* #bytes in @msg_buf */

    /* migration stuff */
    OnOffAuto master;           /* only master=on supports migration; set via the "master" property */
    Error *migration_blocker;   /* installed when master is off */

    /* legacy cruft */
    char *role;
    char *shmobj;
    char *sizearg;
    size_t legacy_size;
    uint32_t not_legacy_32bit;
} IVShmemState;
```

Device Initialization

- All ivshmem-plain devices belong to the ivshmem-plain class. Its class initialization function is:

```c
static void ivshmem_plain_class_init(ObjectClass *klass, void *data)
{
    DeviceClass *dc = DEVICE_CLASS(klass);
    PCIDeviceClass *k = PCI_DEVICE_CLASS(klass);

    k->realize = ivshmem_plain_realize;   /* called when a device instance is realized */
    dc->props = ivshmem_plain_properties; /* device properties */
    dc->vmsd = &ivshmem_plain_vmsd;       /* IVShmemState fields migrated with the device state */
}
```

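- ivshmem_plain_properties describes the device's own properties. The parent type of ivshmem-plain is ivshmem-common, whose own parent is the PCI device type. As a result, ivshmem-plain also inherits all PCI device properties: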

```c
static Property ivshmem_plain_properties[] = {
    /* "master" selects the device role; the default (off) is the peer role */
    DEFINE_PROP_ON_OFF_AUTO("master", IVShmemState, master, ON_OFF_AUTO_OFF),
    /* The shared memory device is built on Linux shared memory. memdev links
     * to a host memory backend object (normally memory-backend-file), which
     * in turn refers to the actual shared memory file.
     */
    DEFINE_PROP_LINK("memdev", IVShmemState, hostmem, TYPE_MEMORY_BACKEND,
                     HostMemoryBackend *),
    DEFINE_PROP_END_OF_LIST(),
};
```

- ivshmem_plain_vmsd lists the IVShmemState fields that are migrated, so that the device loaded on the destination has the same values:

```c
static const VMStateDescription ivshmem_plain_vmsd = {
    .name = TYPE_IVSHMEM_PLAIN,
    .version_id = 0,
    .minimum_version_id = 0,
    .pre_load = ivshmem_pre_load,
    .post_load = ivshmem_post_load,
    .fields = (VMStateField[]) {
        VMSTATE_PCI_DEVICE(parent_obj, IVShmemState), /* PCI device state */
        VMSTATE_UINT32(intrstatus, IVShmemState), /* same interrupt status on the destination */
        VMSTATE_UINT32(intrmask, IVShmemState),   /* same enabled interrupts on the destination */
        VMSTATE_END_OF_LIST()
    },
};
```

- ivshmem_plain_realize implements the core logic of the ivshmem-plain device:

```c
static void ivshmem_plain_realize(PCIDevice *dev, Error **errp)
{
    IVShmemState *s = IVSHMEM_COMMON(dev);

    /* the device must have been given a memdev memory backend */
    if (!s->hostmem) {
        error_setg(errp, "You must specify a 'memdev'");
        return;
    } else if (host_memory_backend_is_mapped(s->hostmem)) {
        /* the backend is already marked as in use by another device */
        char *path = object_get_canonical_path_component(OBJECT(s->hostmem));
        error_setg(errp, "can't use already busy memdev: %s", path);
        g_free(path);
        return;
    }

    /* the rest is shared with the common ivshmem realize path */
    ivshmem_common_realize(dev, errp);
}
```
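The busy check above pairs with the host_memory_backend_set_mapped(s->hostmem, true) call in ivshmem_common_realize. Together they mean a memory backend can back at most one ivshmem device. The standalone sketch below models only that handshake. struct fake_backend and fake_plain_realize are hypothetical stand-ins, not QEMU APIs.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for HostMemoryBackend: only the "mapped" flag that
 * host_memory_backend_is_mapped()/host_memory_backend_set_mapped() use. */
struct fake_backend {
    bool mapped;
};

enum realize_result { REALIZE_OK, REALIZE_NO_MEMDEV, REALIZE_BUSY };

/* Mirrors the checks in ivshmem_plain_realize() followed by the
 * set_mapped(true) step of ivshmem_common_realize(). */
enum realize_result fake_plain_realize(struct fake_backend *hostmem)
{
    if (hostmem == NULL)
        return REALIZE_NO_MEMDEV; /* "You must specify a 'memdev'" */
    if (hostmem->mapped)
        return REALIZE_BUSY;      /* "can't use already busy memdev" */
    hostmem->mapped = true;       /* claim the backend for this device */
    return REALIZE_OK;
}
```

Sharing memory between two VMs therefore does not mean sharing one memdev object. Each QEMU process defines its own memory-backend-file with the same mem-path and share=yes, and the kernel shares the underlying pages.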

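- ivshmem_common_realize initializes the device. For ivshmem-plain this mainly means emulating BAR0 and BAR2; BAR1 is only used by ivshmem-doorbell. The two BARs are handled as follows:
  - BAR0 is backed by an MMIO MR, and the function registers its MMIO callbacks.
  - BAR2 is backed by a RAM MR, and the function obtains the device's MR and registers it as a BAR.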

```c
static void ivshmem_common_realize(PCIDevice *dev, Error **errp)
{
    IVShmemState *s = IVSHMEM_COMMON(dev);
    Error *local_err = NULL;
    uint8_t *pci_conf;
    uint8_t attr = PCI_BASE_ADDRESS_SPACE_MEMORY |
        PCI_BASE_ADDRESS_MEM_PREFETCH;

    /* set the Command register in the device's PCI config space */
    pci_conf = dev->config;
    /* Enable I/O space and memory space decoding:
     * - I/O space: the device responds to port I/O (in/out instructions)
     *   on its I/O BARs
     * - memory space: the device responds to memory accesses (MMIO) to its
     *   memory BARs, which can then be read and written like ordinary memory
     */
    pci_conf[PCI_COMMAND] = PCI_COMMAND_IO | PCI_COMMAND_MEMORY;

    /* Initialize the MMIO MR and register the callbacks that handle guest
     * reads and writes of the BAR0 registers */
    memory_region_init_io(&s->ivshmem_mmio, OBJECT(s), &ivshmem_mmio_ops, s,
                          "ivshmem-mmio", IVSHMEM_REG_BAR_SIZE);

    /* region for registers: register the MR as BAR0 */
    pci_register_bar(dev, 0, PCI_BASE_ADDRESS_SPACE_MEMORY,
                     &s->ivshmem_mmio);

    /* For ivshmem-plain, memdev is mandatory, so hostmem is never NULL here */
    if (s->hostmem != NULL) {
        IVSHMEM_DPRINTF("using hostmem\n");

        /* use the backend's MR as the shared memory MR */
        s->ivshmem_bar2 = host_memory_backend_get_memory(s->hostmem);

        /* mark the memdev object as in use so no other ivshmem-plain
         * device can take it */
        host_memory_backend_set_mapped(s->hostmem, true);
    }

    /* master can be on/off/auto; with auto, QEMU decides:
     * - ivshmem-plain: vm_id is 0, so the device becomes master
     * - ivshmem-doorbell: the first device to connect to the server gets
     *   ID 0 and becomes master
     */
    if (s->master == ON_OFF_AUTO_AUTO) {
        s->master = s->vm_id == 0 ? ON_OFF_AUTO_ON : ON_OFF_AUTO_OFF;
    }

    /* a non-master device installs a migration blocker, so a VM using it
     * cannot be migrated */
    if (!ivshmem_is_master(s)) {
        error_setg(&s->migration_blocker,
                   "Migration is disabled when using feature 'peer mode' in device 'ivshmem'");
        migrate_add_blocker(s->migration_blocker, &local_err);
        if (local_err) {
            error_propagate(errp, local_err);
            error_free(s->migration_blocker);
            return;
        }
    }

    /* BAR2 is ordinary RAM. Ordinary RAM is usually set up with
     * memory_region_init_ram(), but here the MR was already initialized by
     * the memdev object, so all that remains is to mark the MR's RAMBlock
     * as migratable and set its idstr. Marking the RAMBlock migratable lets
     * ram_save_iterate() decide, while walking ram_list.blocks, whether
     * this RAMBlock should be migrated.
     */
    vmstate_register_ram(s->ivshmem_bar2, DEVICE(s));

    /* register the MR as BAR2 of the device */
    pci_register_bar(PCI_DEVICE(s), 2, attr, s->ivshmem_bar2);
}
```
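The role decision in ivshmem_common_realize reduces to two small rules. The sketch below restates them outside QEMU so they can be checked in isolation. The OnOffAuto enum is a local copy with illustrative values, and resolve_master and needs_migration_blocker are hypothetical helper names.

```c
#include <stdbool.h>

/* Local copy of QEMU's OnOffAuto, for illustration only. */
typedef enum { ON_OFF_AUTO_AUTO, ON_OFF_AUTO_ON, ON_OFF_AUTO_OFF } OnOffAuto;

/* Same rule as ivshmem_common_realize(): "auto" becomes "on" only for vm_id 0;
 * explicit on/off settings are kept as given. */
OnOffAuto resolve_master(OnOffAuto master, int vm_id)
{
    if (master == ON_OFF_AUTO_AUTO)
        return vm_id == 0 ? ON_OFF_AUTO_ON : ON_OFF_AUTO_OFF;
    return master;
}

/* A migration blocker is installed whenever the resolved role is not master. */
bool needs_migration_blocker(OnOffAuto master, int vm_id)
{
    return resolve_master(master, vm_id) != ON_OFF_AUTO_ON;
}
```

ivshmem_plain_properties defaults master to ON_OFF_AUTO_OFF. An ivshmem-plain device therefore blocks migration unless master=on or master=auto is given. The info qtree output in the test section shows master = "off" for the test device.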

- When the guest accesses BAR0 via MMIO, QEMU invokes the corresponding I/O callbacks, which are registered in ivshmem_mmio_ops:

```c
static const MemoryRegionOps ivshmem_mmio_ops = {
    .read = ivshmem_io_read,   /* called when the guest reads BAR0 */
    .write = ivshmem_io_write, /* called when the guest writes BAR0 */
    .endianness = DEVICE_NATIVE_ENDIAN,
    .impl = {
        .min_access_size = 4,
        .max_access_size = 4,
    },
};
```

```c
/* Return the IVShmemState field that matches the register the guest reads,
 * i.e. intrmask, intrstatus or vm_id (reading INTRSTATUS also clears it)
 */
static uint64_t ivshmem_io_read(void *opaque, hwaddr addr,
                                unsigned size)
{
    IVShmemState *s = opaque;
    uint32_t ret;

    switch (addr)
    {
        case INTRMASK:
            ret = ivshmem_IntrMask_read(s);
            break;

        case INTRSTATUS:
            ret = ivshmem_IntrStatus_read(s);
            break;

        case IVPOSITION:
            ret = s->vm_id;
            break;

        default:
            IVSHMEM_DPRINTF("why are we reading " TARGET_FMT_plx "\n", addr);
            ret = 0;
    }

    return ret;
}
```
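From the guest's side, these registers are plain 32-bit words at fixed offsets in BAR0. Below is a minimal sketch of reading IVPosition. It assumes BAR0 has already been mapped, for example by a driver's ioremap or a user-space mmap. The IVSHMEM_* constants and the function names are hypothetical and mirror enum ivshmem_registers.

```c
#include <stdint.h>

/* BAR0 register offsets, same values as enum ivshmem_registers */
enum {
    IVSHMEM_INTRMASK   = 0,
    IVSHMEM_INTRSTATUS = 4,
    IVSHMEM_IVPOSITION = 8,
    IVSHMEM_DOORBELL   = 12,
};

/* Read one 32-bit register from a mapped BAR0. The device implements
 * 4-byte accesses (.impl in ivshmem_mmio_ops), so read whole registers;
 * volatile keeps the compiler from caching or merging device accesses. */
static inline uint32_t bar0_read32(const volatile void *bar0, uint32_t off)
{
    const volatile uint8_t *base = bar0;
    return *(const volatile uint32_t *)(base + off);
}

/* IVPosition holds the device's vm_id; an ivshmem-plain device reports 0. */
uint32_t ivshmem_get_position(const volatile void *bar0)
{
    return bar0_read32(bar0, IVSHMEM_IVPOSITION);
}
```

In a Linux kernel driver, the usual equivalent of bar0_read32 would be ioread32() on the ioremapped BAR0.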

```c
/* Handle a guest write according to its address:
 * - writes to INTRMASK or INTRSTATUS target this VM: update the register
 *   and update the interrupt state accordingly
 * - writes to the DOORBELL register interrupt another VM sharing the
 *   memory, which is notified through an eventfd
 */
static void ivshmem_io_write(void *opaque, hwaddr addr,
                             uint64_t val, unsigned size)
{
    IVShmemState *s = opaque;

    uint16_t dest = val >> 16;
    uint16_t vector = val & 0xff;

    addr &= 0xfc;

    IVSHMEM_DPRINTF("writing to addr " TARGET_FMT_plx "\n", addr);
    switch (addr)
    {
        case INTRMASK:
            ivshmem_IntrMask_write(s, val);
            break;

        case INTRSTATUS:
            ivshmem_IntrStatus_write(s, val);
            break;

        case DOORBELL:
            /* check that dest VM ID is reasonable */
            if (dest >= s->nb_peers) {
                IVSHMEM_DPRINTF("Invalid destination VM ID (%d)\n", dest);
                break;
            }

            /* check doorbell range */
            if (vector < s->peers[dest].nb_eventfds) {
                IVSHMEM_DPRINTF("Notifying VM %d on vector %d\n", dest, vector);
                /* Send interrupt vector `vector` to the device whose vm_id
                 * is dest; ivshmem-doorbell registers the
                 * ivshmem_vector_notify handler to process events on the
                 * eventfd
                 */
                event_notifier_set(&s->peers[dest].eventfds[vector]);
            } else {
                /* the destination never registered this vector: report it */
                IVSHMEM_DPRINTF("Invalid destination vector %d on VM %d\n",
                                vector, dest);
            }
            break;
        default:
            IVSHMEM_DPRINTF("Unhandled write " TARGET_FMT_plx "\n", addr);
    }
}
```
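The DOORBELL write packs two fields into one 32-bit value, and ivshmem_io_write splits it with val >> 16 and val & 0xff. The sketch below shows both directions; doorbell_value and doorbell_decode are hypothetical names. This only matters for ivshmem-doorbell. An ivshmem-plain device has no peers (nb_peers is 0), so every DOORBELL write fails the dest >= s->nb_peers check.

```c
#include <stdint.h>

/* Guest side: build the value written to DOORBELL (offset 12):
 * destination peer ID in bits 31..16, vector number in bits 7..0. */
uint32_t doorbell_value(uint16_t dest, uint8_t vector)
{
    return ((uint32_t)dest << 16) | vector;
}

/* Device side: the same split that ivshmem_io_write() performs;
 * bits 15..8 are ignored. */
void doorbell_decode(uint64_t val, uint16_t *dest, uint16_t *vector)
{
    *dest = (uint16_t)(val >> 16);
    *vector = (uint16_t)(val & 0xff);
}
```

For example, ringing vector 5 on peer 3 means writing doorbell_value(3, 5), which is 0x00030005, to BAR0 offset 12.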

Testing and Verification

Approach

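- The following simple test demo verifies the complete workflow of the ivshmem-plain device. The demo code has two parts:
  - guest: runs inside the VM and is mainly driver code. It detects the ivshmem-plain PCI device and maps the device's BAR2 into memory via MMIO. It then exposes the mapped memory to user space through the proc filesystem, so test content can be written to BAR2 from the command line. See the ivshmem-plain guest driver.
  - host: runs on the host. It reads the shared memory data exposed by QEMU's ivshmem-plain device to verify what the guest driver wrote. See the ivshmem-plain host program.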

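- As shown in the figure, the test involves two modules:
  - The guest driver is the ivshmem drv module. Once it detects the ivshmem-plain PCI device, it provides a procfs interface for reading and writing the shared memory from the command line.
  - The host program is the test agent module. It reads the shared memory file exposed by QEMU and prints its contents.

  Memory sharing is confirmed by checking that what the test agent reads matches what was written to /proc/ivshmem_demo/bar2 inside the guest.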
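The demo uses a kernel driver with a procfs interface. An alternative for quick experiments, and not the approach the demo takes, uses the file Linux exposes for each PCI BAR: /sys/bus/pci/devices/<domain:bus:dev.fn>/resourceN. Root can mmap this file directly. For this device, BAR2 would be /sys/bus/pci/devices/0000:00:0b.0/resource2. A minimal sketch follows, with map_whole_file as a hypothetical helper:

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Map an entire file read-write and shared. For a sysfs resourceN file,
 * st_size equals the BAR size, so this maps exactly the BAR.
 * Returns the mapping (and its length in *len) or NULL on error. */
void *map_whole_file(const char *path, size_t *len)
{
    int fd = open(path, O_RDWR);
    if (fd < 0)
        return NULL;
    struct stat st;
    if (fstat(fd, &st) < 0 || st.st_size <= 0) {
        close(fd);
        return NULL;
    }
    void *p = mmap(NULL, (size_t)st.st_size, PROT_READ | PROT_WRITE,
                   MAP_SHARED, fd, 0);
    close(fd); /* the mapping outlives the descriptor */
    if (p == MAP_FAILED)
        return NULL;
    *len = (size_t)st.st_size;
    return p;
}
```

Applied to resource2, the returned pointer would cover the 4 MiB shared region. Writes through it should then appear in /dev/shm/my_shmem0 on the host.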

Procedure

Libvirt Configuration

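We configured 4 MiB of shared memory. If the role attribute is not set in the XML, the device is configured in the peer role by default:

```xml
<shmem name='my_shmem0'>
  <model type='ivshmem-plain'/>
  <size unit='M'>4</size>
</shmem>
```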

QEMU Configuration

- Command line:

```
-object memory-backend-file,id=shmmem-shmem0,mem-path=/dev/shm/my_shmem0,size=4194304,share=yes
-device ivshmem-plain,id=shmem0,memdev=shmmem-shmem0,bus=pci.0,addr=0xb
```

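- Process address space

The layout of the QEMU process's virtual address space (cat /proc/<pid>/maps) shows a 4 MiB (0x400000) shared (rw-s) mapping of /dev/shm/my_shmem0:

```
7fc5b99af000-7fc5ba1af000 rw-p 00000000 00:00 0
7fc5ba1af000-7fc5ba5af000 rw-s 00000000 00:16 433243576 /dev/shm/my_shmem0
7fc5ba5af000-7fc5ba5b0000 ---p 00000000 00:00 0
```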
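The size and sharing mode of a mapping can be read straight off a maps line. Below is a small parsing sketch; maps_line_size is a hypothetical helper.

```c
#include <inttypes.h>
#include <stdio.h>
#include <string.h>

/* Parse "start-end perms ..." from one /proc/<pid>/maps line.
 * Returns the mapping length in bytes, or 0 if the line cannot be parsed.
 * *shared is set to 1 when the fourth permission character is 's'
 * (a MAP_SHARED mapping) and to 0 otherwise (e.g. 'p', private). */
uint64_t maps_line_size(const char *line, int *shared)
{
    uint64_t start, end;
    char perms[5] = "";

    if (sscanf(line, "%" SCNx64 "-%" SCNx64 " %4s", &start, &end, perms) != 3
        || end < start || strlen(perms) != 4)
        return 0;
    *shared = perms[3] == 's';
    return end - start;
}
```

Applied to the lines above, it returns 0x400000 (4 MiB, shared) for the /dev/shm/my_shmem0 mapping. For the anonymous mapping before it, it returns 0x800000 (private).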

- QEMU device tree

Output of the HMP command info qtree:

```
dev: ivshmem-plain, id "shmem0"
  master = "off"
  memdev = "/objects/shmmem-shmem0"
  addr = 0b.0
  romfile = ""
  rombar = 1 (0x1)
  multifunction = false
  command_serr_enable = true
  x-pcie-lnksta-dllla = true
  x-pcie-extcap-init = true
  class RAM controller, addr 00:0b.0, pci id 1af4:1110 (sub 1af4:1100)
  bar 0: mem at 0xfea5a000 [0xfea5a0ff]
  bar 2: mem at 0xfe000000 [0xfe3fffff]
```

- QEMU memory tree

Output of the HMP command info mtree -f:

```
00000000fd000000-00000000fd3fffff (prio 0, i/o): cirrus-bitblt-mmio
00000000fe000000-00000000fe3fffff (prio 1, ram): /objects/shmmem-shmem0 /* BAR 2 */
00000000fe600000-00000000fe600fff (prio 0, i/o): virtio-pci-common
00000000fea59800-00000000fea59807 (prio 0, i/o): msix-pba
00000000fea5a000-00000000fea5a0ff (prio 1, i/o): ivshmem-mmio /* BAR 0 */
00000000fec00000-00000000fec00fff (prio 0, i/o): kvm-ioapic
```

- guest

PCI information inside the VM:

```
[root@ivshmem ~]# lspci -v -s 00:0b.0
00:0b.0 RAM memory: Virtio: Inter-VM shared memory (rev 01)
        Subsystem: Virtio: QEMU Virtual Machine
        Physical Slot: 11
        Flags: fast devsel
        Memory at fea5a000 (32-bit, non-prefetchable) [size=256]
        Memory at fe000000 (64-bit, prefetchable) [size=4M]
        Kernel driver in use: ivshmem_demo_driver
```
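The [size=256] and [size=4M] values reported by lspci come from the standard PCI BAR sizing procedure that the guest kernel runs during enumeration. The sketch below decodes a BAR readback. The readback values in the note after it are derived from the sizes shown here, not captured from the guest, and mem_bar_size is a hypothetical name.

```c
#include <stdint.h>

/* Standard PCI memory BAR sizing: software writes 0xFFFFFFFF to the BAR,
 * reads it back, and the address bits that stay 0 give the size.
 * Bits 3..0 of a memory BAR are flags (bit 0 = I/O space, bits 2..1 = type,
 * bit 3 = prefetchable) and are masked off. For a 64-bit BAR, hi is the
 * readback of the next BAR register, which holds the upper 32 bits. */
uint64_t mem_bar_size(uint32_t lo, uint32_t hi, int is64)
{
    /* a 32-bit BAR has no upper half: treat the upper bits as all ones */
    uint64_t upper = is64 ? (uint64_t)hi : 0xffffffffu;
    uint64_t mask = (upper << 32) | (lo & ~0xfu);
    return ~mask + 1;
}
```

BAR0 is 32-bit, non-prefetchable and 256 bytes, so its readback would be 0xFFFFFF00, which gives 256. BAR2 is 64-bit, prefetchable and 4 MiB. Its low readback would be 0xFFC0000C with 0xFFFFFFFF in the upper BAR, which gives 0x400000.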

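Test Steps

- In the guest, load the driver module ivshmem-drv.ko by running insmod ivshmem-drv.ko. The kernel driver recognizes the ivshmem-plain PCI device by its vendor_id and device_id (1af4:1110). It then probes the size of the space the device requests, as defined by the PCI specification, and maps it. The log is as follows:

  From the log, BAR2 is 0x400000 (4 MiB) in size and starts at 0xfe000000. It has been ioremapped to address 0x45400000 for use as ordinary memory.

- Check the generated proc interface; it has no content yet:

- Write the following content to BAR2 and read it back to confirm:

- On the host, run the test program, which reads the shared memory file /dev/shm/my_shmem0 exposed by QEMU, to confirm the content: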
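The host-side test agent is only referenced. Below is a minimal sketch of what such an agent could do. It assumes the guest writes a NUL-terminated string at offset 0 of BAR2 through /proc/ivshmem_demo/bar2; read_shared_string is a hypothetical name.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Map the backing file read-only and copy out the NUL-terminated string at
 * offset 0, truncated to cap-1 bytes. Returns the copied length, or -1. */
long read_shared_string(const char *path, char *out, size_t cap)
{
    if (cap == 0)
        return -1;
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    struct stat st;
    if (fstat(fd, &st) < 0 || st.st_size <= 0) {
        close(fd);
        return -1;
    }
    size_t len = (size_t)st.st_size;
    const char *p = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    close(fd);
    if (p == MAP_FAILED)
        return -1;
    /* never read past the mapping or write past the caller's buffer */
    size_t n = strnlen(p, len < cap - 1 ? len : cap - 1);
    memcpy(out, p, n);
    out[n] = '\0';
    munmap((void *)p, len);
    return (long)n;
}
```

On the host, read_shared_string("/dev/shm/my_shmem0", buf, sizeof buf) should then return the string that was written in the guest.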