Flink issue log

Production pitfalls are the real pitfalls | A rundown of classic Flink production issues

https://mp.weixin.qq.com/s?__biz=MzU3MzgwNTU2Mg==&mid=2247500470&idx=1&sn=a9ddfabd545a52e463dbc728fdaa872d&chksm=fd3e8423ca490d3578b802859924a5de96038e5f114788443f10763b90cea4955ff352982e5a&scene=21#wechat_redirect

Local execution environment

Configuration configuration = new Configuration();

configuration.setString(RestOptions.BIND_PORT, "8081");

StreamExecutionEnvironment environment = StreamExecutionEnvironment.createLocalEnvironmentWithWebUI(configuration);

EnvironmentSettings environmentSettings = EnvironmentSettings.newInstance().useBlinkPlanner().build();

StreamTableEnvironment tableEnvironment = StreamTableEnvironment.create(environment, environmentSettings);

// Source idle-timeout setting

tableEnvironment.getConfig().set("table.exec.source.idle-timeout", "10s");

1) No ExecutorFactory found to execute the application

Since Flink 1.11 the flink-clients dependency has to be added explicitly:

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>1.13.5</version>

</dependency>

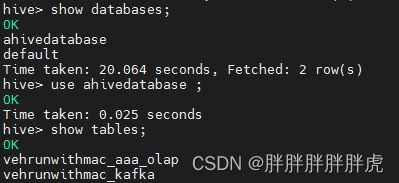

2) Flink on k8s with Hive: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient

The image ships JDK 11; replace it with JDK 8.

https://blog.csdn.net/wuxintdrh/article/details/119538780

[root@hadoop01 lib]# ll

total 282732

...

-rw-r--r-- 1 root root 167761 Feb 25 10:17 antlr-runtime-3.5.2.jar

-rw-r--r--. 1 root root 3773790 Jun 10 2021 flink-connector-hive_2.11-1.11.2.jar

-rw-r--r--. 1 root root 35803900 Jun 9 2021 hive-exec-2.1.1-cdh6.3.1.jar

-rw-r--r--. 1 root root 313702 Jun 9 2021 libfb303-0.9.3.jar

#-rw-r--r--. 1 root root 1007502 Aug 13 2020 mysql-connector-java-5.1.47.jar

...

3) Invalid value org.apache.flink.kafka.shaded.org.apache.kafka.common.serialization.ByteArraySerializer for configuration key.serializer: Class org.apache.flink.kafka.shaded.org.apache.kafka.common.serialization.ByteArraySerializer could not be found

### Add only this jar

flink-sql-connector-kafka_2.11-1.13.5.jar

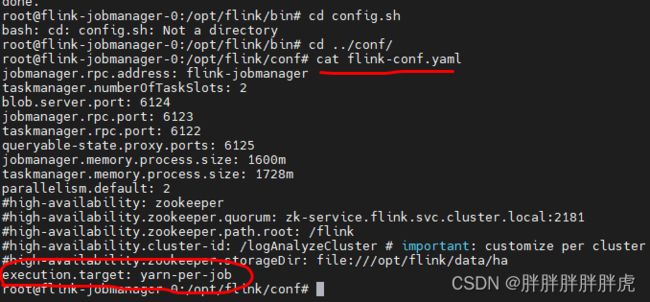

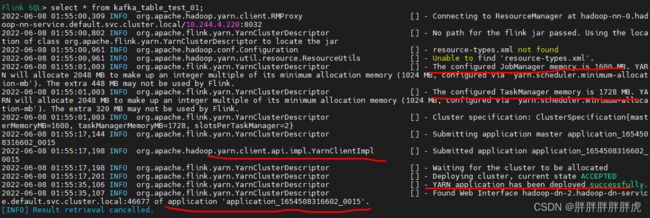

4) sql-client yarn per-job

5) Hudi

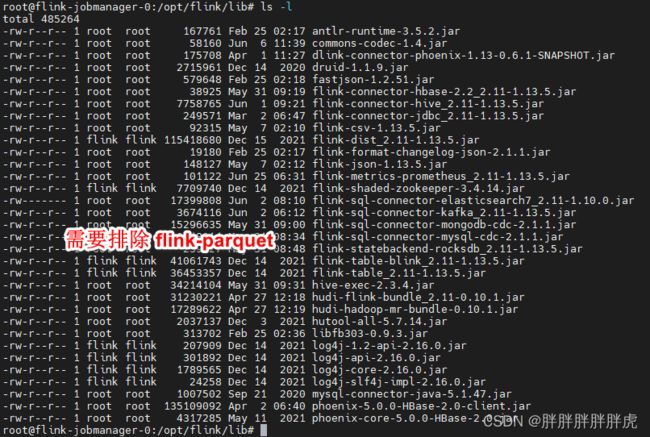

java.lang.NoSuchMethodError: org.apache.flink.formats.parquet.vector.reader.BytesColumnReader.<init>(Lorg/apache/hudi/org/apache/parquet/column/ColumnDescriptor;Lorg/apache/hudi/org/apache/parquet/column/page/PageReader;)V

flink on k8s

Configuration options: see org.apache.flink.kubernetes.configuration.KubernetesConfigOptions

https://blog.csdn.net/sqf_csdn/article/details/125037675?spm=1001.2101.3001.6650.9&utm_medium=distribute.pc_relevant.none-task-blog-2%7Edefault%7EBlogCommendFromBaidu%7Edefault-9-125037675-blog-123861113.pc_relevant_multi_platform_whitelistv2&depth_1-utm_source=distribute.pc_relevant.none-task-blog-2%7Edefault%7EBlogCommendFromBaidu%7Edefault-9-125037675-blog-123861113.pc_relevant_multi_platform_whitelistv2&utm_relevant_index=16

./bin/flink run-application \

--target kubernetes-application \

-Dkubernetes.cluster-id=my-test-flink-k8s \

-Dkubernetes.container.image=flinkk8s:1.15.0 \

-Dkubernetes.rest-service.exposed.type=NodePort \

-Dkubernetes.jobmanager.cpu=0.2 \

-Dkubernetes.taskmanager.cpu=0.1 \

-Dtaskmanager.numberOfTaskSlots=1 \

-Djobmanager.memory.process.size=1024m \

-Dtaskmanager.memory.process.size=1024m \

-Dkubernetes.namespace=flinkjob \

local:///opt/flink/examples/streaming/TopSpeedWindowing.jar

# --allowNonRestoredState and --fromSavepoint start the job from the given savepoint; omit both when not needed.

# kubernetes.container.image is the address of the job image; the trailing local:// path is the jar to run inside the image.

# The comments are kept above the command because a comment after a trailing backslash breaks the line continuation.

./bin/flink run-application -p 1 -t kubernetes-application \

--detached \

--allowNonRestoredState \

--fromSavepoint oss://flinkjob-state-prod/savepoint-f1e2d7-3108369d7445 \

-Dkubernetes.namespace=flinkjob \

-Dkubernetes.jobmanager.service-account=flink \

-Dkubernetes.cluster-id=flink-jobs \

-Dkubernetes.container.image.pull-policy=Always \

-Dkubernetes.container.image=*****/flink-jobs:git.c2f4bba9ffbc26a077ae73d8508fcce0e05752ed \

-Djobmanager.memory.process.size=1024m \

-Dtaskmanager.memory.process.size=1024m \

-Dkubernetes.jobmanager.cpu=0.2 \

-Dkubernetes.taskmanager.cpu=0.1 \

-Dtaskmanager.numberOfTaskSlots=1 \

-Dkubernetes.rest-service.exposed.type=NodePort \

local:///flink/flink-jobs/flink-jobs.jar

# List the job IDs on the current cluster

./bin/flink list --target kubernetes-application -Dkubernetes.cluster-id=flink-jobs -Dkubernetes.namespace=flinkjob

# Stop the job and take a savepoint of its state

./bin/flink stop --savepointPath --target kubernetes-application -Dkubernetes.cluster-id=flink-production -Dkubernetes.namespace=flinkjob <job-id>

# Stop the cluster

echo 'stop' | ./bin/kubernetes-session.sh -Dkubernetes.cluster-id=flink-production -Dkubernetes.namespace=flinkjob -Dexecution.attached=true

# List running job on the cluster

$ ./bin/flink list --target kubernetes-application -Dkubernetes.cluster-id=my-first-application-cluster

# Cancel running job

$ ./bin/flink cancel --target kubernetes-application -Dkubernetes.cluster-id=my-first-application-cluster <jobId>

flink api k8s application

https://blog.csdn.net/huashetianzu/article/details/107988731

import java.util.Collections;

import java.util.concurrent.Executors;

import org.apache.flink.client.deployment.ClusterDeploymentException;

import org.apache.flink.client.deployment.ClusterSpecification;

import org.apache.flink.client.deployment.application.ApplicationConfiguration;

import org.apache.flink.client.program.ClusterClient;

import org.apache.flink.client.program.ClusterClientProvider;

import org.apache.flink.configuration.CheckpointingOptions;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.configuration.DeploymentOptions;

import org.apache.flink.configuration.GlobalConfiguration;

import org.apache.flink.configuration.JobManagerOptions;

import org.apache.flink.configuration.MemorySize;

import org.apache.flink.configuration.PipelineOptions;

import org.apache.flink.configuration.TaskManagerOptions;

import org.apache.flink.kubernetes.KubernetesClusterDescriptor;

import org.apache.flink.kubernetes.configuration.KubernetesConfigOptions;

import org.apache.flink.kubernetes.configuration.KubernetesDeploymentTarget;

import org.apache.flink.kubernetes.kubeclient.Fabric8FlinkKubeClient;

import org.apache.flink.kubernetes.kubeclient.FlinkKubeClient;

import io.fabric8.kubernetes.client.DefaultKubernetesClient;

import io.fabric8.kubernetes.client.KubernetesClient;

public class KubernetesApplicationDeployment {

public static void main(String[] args) {

String flinkDistJar = "hdfs://data/flink/libs/flink-kubernetes_2.12-1.11.0.jar";

Configuration flinkConfiguration = GlobalConfiguration.loadConfiguration();

flinkConfiguration.set(DeploymentOptions.TARGET, KubernetesDeploymentTarget.APPLICATION.getName());

flinkConfiguration.set(CheckpointingOptions.INCREMENTAL_CHECKPOINTS, true);

flinkConfiguration.set(PipelineOptions.JARS, Collections.singletonList(flinkDistJar));

flinkConfiguration.set(KubernetesConfigOptions.CLUSTER_ID, "k8s-app1");

flinkConfiguration.set(KubernetesConfigOptions.CONTAINER_IMAGE, "img_url");

flinkConfiguration.set(KubernetesConfigOptions.CONTAINER_IMAGE_PULL_POLICY,

KubernetesConfigOptions.ImagePullPolicy.Always);

flinkConfiguration.set(KubernetesConfigOptions.JOB_MANAGER_CPU, 2.5);

flinkConfiguration.set(KubernetesConfigOptions.TASK_MANAGER_CPU, 1.5);

// Set only the total process memory: also pinning jobmanager.memory.flink.size to the same value leaves no room for JVM metaspace and overhead.

flinkConfiguration.set(JobManagerOptions.TOTAL_PROCESS_MEMORY, MemorySize.parse("4096M"));

flinkConfiguration.set(TaskManagerOptions.NUM_TASK_SLOTS, 1);

KubernetesClient kubernetesClient = new DefaultKubernetesClient();

FlinkKubeClient flinkKubeClient = new Fabric8FlinkKubeClient(flinkConfiguration,

kubernetesClient, () -> Executors.newFixedThreadPool(2));

KubernetesClusterDescriptor kubernetesClusterDescriptor = new

KubernetesClusterDescriptor(flinkConfiguration, flinkKubeClient);

ClusterSpecification clusterSpecification = new ClusterSpecification

.ClusterSpecificationBuilder().createClusterSpecification();

ApplicationConfiguration appConfig = new ApplicationConfiguration(args, null);

ClusterClientProvider<String> clusterClientProvider = null;

try {

clusterClientProvider = kubernetesClusterDescriptor.deployApplicationCluster(

clusterSpecification, appConfig);

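} catch (ClusterDeploymentException e) {

e.printStackTrace();

// Deployment failed: release the descriptor and stop here, otherwise

// clusterClientProvider stays null and the call below throws a NullPointerException.

kubernetesClusterDescriptor.close();

return;

}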

kubernetesClusterDescriptor.close();

ClusterClient<String> clusterClient = clusterClientProvider.getClusterClient();

String clusterId = clusterClient.getClusterId();

System.out.println(clusterId);

}

}

Could not forward element to next operator

flink-shaded-hadoop-3-uber download

https://blog.csdn.net/a284365/article/details/121116390

flink k8s application resource issue

-bash-4.2# kubectl describe pod flink-k8s-application-taskmanager-1-1

Name: flink-k8s-application-taskmanager-1-1

Namespace: default

Priority: 0

Node: <none>

Labels: app=flink-k8s-application

component=taskmanager

type=flink-native-kubernetes

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: Deployment/flink-k8s-application

Containers:

flink-main-container:

Image: docker-registry-node:5000/flink:k8s-application

Port: 6122/TCP

Host Port: 0/TCP

Command:

/docker-entrypoint.sh

Args:

bash

-c

kubernetes-taskmanager.sh -Djobmanager.memory.jvm-overhead.min='201326592b' -Dpipeline.classpaths='' -Dtaskmanager.resource-id='flink-k8s-application-taskmanager-1-1' -Djobmanager.memory.off-heap.size='134217728b' -Dexecution.target='embedded' -Dweb.tmpdir='/tmp/flink-web-db70960a-475f-4c8b-bf3d-ed84272b1dfe' -Dpipeline.jars='file:/opt/dlink-app-1.13-0.6.5-SNAPSHOT-jar-with-dependencies.jar' -Djobmanager.memory.jvm-metaspace.size='268435456b' -Djobmanager.memory.heap.size='469762048b' -Djobmanager.memory.jvm-overhead.max='201326592b' -D taskmanager.memory.network.min=166429984b -D taskmanager.cpu.cores=4.0 -D taskmanager.memory.task.off-heap.size=0b -D taskmanager.memory.jvm-metaspace.size=268435456b -D external-resources=none -D taskmanager.memory.jvm-overhead.min=214748368b -D taskmanager.memory.framework.off-heap.size=134217728b -D taskmanager.memory.network.max=166429984b -D taskmanager.memory.framework.heap.size=134217728b -D taskmanager.memory.managed.size=665719939b -D taskmanager.memory.task.heap.size=563714445b -D taskmanager.numberOfTaskSlots=4 -D taskmanager.memory.jvm-overhead.max=214748368b

Limits:

cpu: 4

memory: 2Gi

Requests:

cpu: 4

memory: 2Gi

Environment:

FLINK_TM_JVM_MEM_OPTS: -Xmx697932173 -Xms697932173 -XX:MaxDirectMemorySize=300647712 -XX:MaxMetaspaceSize=268435456

HADOOP_CONF_DIR: /opt/hadoop/conf

Mounts:

/opt/flink/conf from flink-config-volume (rw)

/opt/hadoop/conf from hadoop-config-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-65gpv (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

hadoop-config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: hadoop-config-flink-k8s-application

Optional: false

flink-config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: flink-config-flink-k8s-application

Optional: false

kube-api-access-65gpv:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Guaranteed

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 4m43s default-scheduler 0/15 nodes are available: 12 Insufficient cpu, 3 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate.
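The FailedScheduling event above reports "Insufficient cpu": the pod requests 4 CPUs, which matches taskmanager.numberOfTaskSlots=4. According to the description of kubernetes.taskmanager.cpu in KubernetesConfigOptions, the TaskManager CPU defaults to the number of slots when it is not set explicitly, so passing a smaller value (for example -Dkubernetes.taskmanager.cpu=1) is one way to make the pod schedulable. A minimal plain-Java sketch of that reasoning follows. It is not Flink code: the class name, the method names and the free-CPU figures are hypothetical and were not measured on the cluster.

```java
public class TaskManagerCpuRequest {

    /**
     * Effective CPU request of a TaskManager pod: the explicit
     * kubernetes.taskmanager.cpu value if it is set (> 0), otherwise the slot count.
     */
    static double effectiveCpu(double kubernetesTaskManagerCpu, int numberOfTaskSlots) {
        return kubernetesTaskManagerCpu > 0 ? kubernetesTaskManagerCpu : numberOfTaskSlots;
    }

    /** A pod can be scheduled only if at least one node has enough free CPU. */
    static boolean fitsOnAnyNode(double cpuRequest, double[] freeCpuPerNode) {
        for (double free : freeCpuPerNode) {
            if (free >= cpuRequest) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Hypothetical free CPU per worker node, for illustration only.
        double[] freeCpu = {2.0, 3.5};

        double defaultRequest = effectiveCpu(-1.0, 4);  // option unset, 4 slots
        double explicitRequest = effectiveCpu(1.0, 4);  // option set to 1

        System.out.println("default request fits: " + fitsOnAnyNode(defaultRequest, freeCpu));
        System.out.println("explicit request fits: " + fitsOnAnyNode(explicitRequest, freeCpu));
    }
}
```

With these made-up numbers the default request of 4 CPUs fits on no node, while the explicit request of 1 CPU does.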

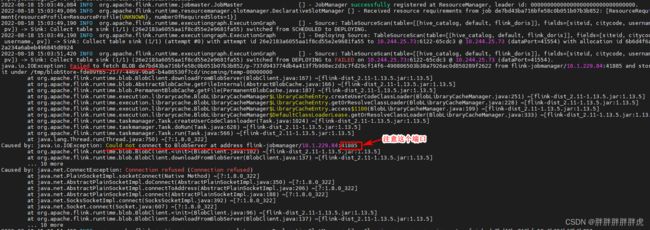

Could not connect to BlobServer at address

/**

* This class implements the BLOB server. The BLOB server is responsible for listening for incoming

* requests and spawning threads to handle these requests. Furthermore, it takes care of creating

* the directory structure to store the BLOBs or temporarily cache them.

*/

Kafka global ordering

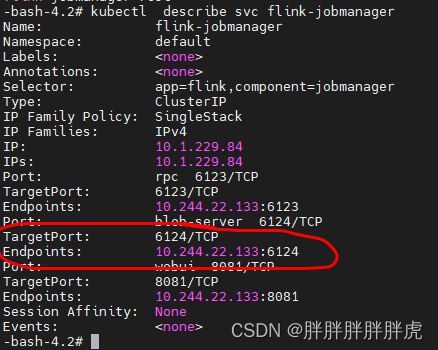

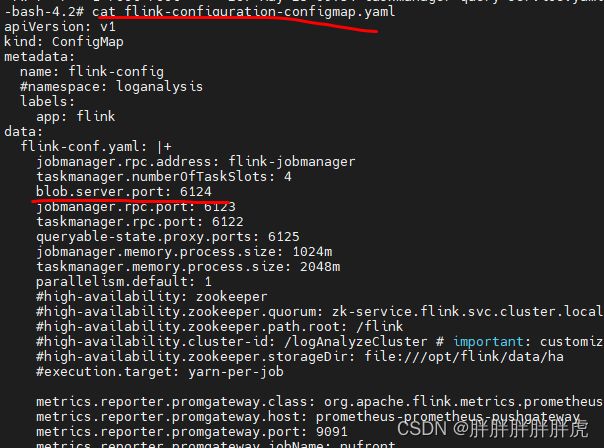

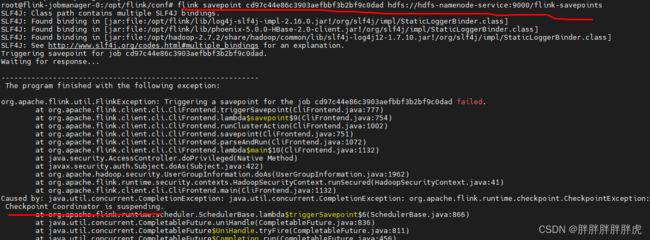

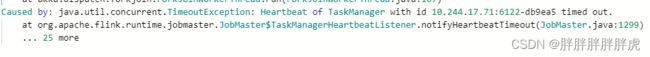

Savepoint issue: Checkpoint Coordinator is suspending…

Heartbeat of TaskManager with id 10.244.17.71:6122-db9ea5 timed out

reference.conf exception when running flink application

https://stackoverflow.com/questions/48087389/reference-conf-exception-when-running-flink-application

<dependency>

<groupId>com.typesafe.akka</groupId>

<artifactId>akka-actor_2.11</artifactId>

<version>${akka.version}</version>

</dependency>

<dependency>

<groupId>com.typesafe.akka</groupId>

<artifactId>akka-protobuf_2.11</artifactId>

<version>${akka.version}</version>

</dependency>

<dependency>

<groupId>com.typesafe.akka</groupId>

<artifactId>akka-stream_2.11</artifactId>

<version>${akka.version}</version>

</dependency>

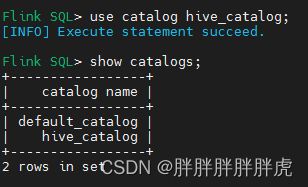

Configured default database default doesn’t exist in catalog my_catalog.

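The problem: the catalog is configured in the Flink SQL client's sql-client-defaults.yaml, and running it with sql-client.sh embedded succeeds, but submitting the job through the Flink web UI fails with this error even though the Hive database default does exist. (Can two catalogs not use the same database?)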

FlinkKafkaConsumerBase extends RichParallelSourceFunction and implements CheckpointedFunction, overriding open from the former and initializeState from the latter. Which runs first, open or initializeState?
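On the ordering question: in Flink's task start-up the operator's state is initialized before the operator is opened, so initializeState runs before open. That is why open in FlinkKafkaConsumerBase can already see the offsets restored in initializeState. The following is a minimal plain-Java model of that ordering, not Flink code. The interfaces Checkpointed and Rich, the class ToySource and the offset value are hypothetical stand-ins used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

public class LifecycleOrderDemo {

    /** Hypothetical stand-in for CheckpointedFunction. */
    interface Checkpointed {
        void initializeState();
    }

    /** Hypothetical stand-in for the open() hook of a rich function. */
    interface Rich {
        void open();
    }

    /** Toy source that records the order in which its callbacks run. */
    static class ToySource implements Checkpointed, Rich {
        final List<String> calls = new ArrayList<>();
        Long restoredOffset; // filled by initializeState, read by open

        @Override
        public void initializeState() {
            calls.add("initializeState");
            restoredOffset = 42L; // pretend an offset was restored from a checkpoint
        }

        @Override
        public void open() {
            calls.add("open");
            // open() relies on the state having been initialized first
            if (restoredOffset == null) {
                throw new IllegalStateException("state not initialized before open");
            }
        }
    }

    /** Models the task start-up sequence: restore state first, then open. */
    static List<String> startTask(ToySource source) {
        source.initializeState();
        source.open();
        return source.calls;
    }

    public static void main(String[] args) {
        System.out.println(startTask(new ToySource())); // prints [initializeState, open]
    }
}
```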

Flink Hadoop environment configuration

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.conf.Configuration

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 13 more

ts@tsserver:~/bigdata/flink-1.13.5/bin$ export HADOOP_CLASSPATH=/home/ts/bigdata/hadoop-2.7.4/etc/hadoop:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/common/lib/*:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/common/*:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/hdfs:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/hdfs/lib/*:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/hdfs/*:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/yarn/lib/*:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/yarn/*:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/mapreduce/lib/*:/home/ts/bigdata/hadoop-2.7.4/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar
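The ClassNotFoundException above means the Hadoop classes are not visible to the JVM, and exporting HADOOP_CLASSPATH as shown is what makes them available. A small, hedged diagnostic sketch: the hypothetical helper ClasspathCheck below (not part of Flink or Hadoop) reports whether a given class can be loaded from the classpath it is started with.

```java
public class ClasspathCheck {

    /** Returns true if the named class can be loaded by this class's class loader. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults to the class named in the stack trace above.
        String name = args.length > 0 ? args[0] : "org.apache.hadoop.conf.Configuration";
        System.out.println(name + " on classpath: " + isOnClasspath(name));
    }
}
```

One possible way to use it, assuming the compiled class sits in the current directory, is `java -cp "$HADOOP_CLASSPATH:." ClasspathCheck`, run once before and once after the export.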

Embedded metastore is not allowed. Make sure you have set a valid value for hive.metastore.uris

<property>

<name>hive.metastore.uris</name>

<value>thrift://localhost:9083</value>

</property>

hive --service metastore

Querying a Hive JSON table from Flink SQL fails

Flink SQL> select * from testhive ;

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.table.catalog.exceptions.CatalogException: Failed to get table schema from deserializer

cp hive-hcatalog-core-2.3.2.jar /home/ts/bigdata/flink-1.13.5/lib/

Flink SQL> select * from testhive ;

2023-08-05 07:04:01,095 INFO org.apache.hadoop.mapred.FileInputFormat [] - Total input paths to process : 1

+----+--------------------------------+-------------+

| op | name | age |

+----+--------------------------------+-------------+

| +I | 张三 | 17 |

| +I | 李四 | 18 |

| +I | 王五 | 16 |

+----+--------------------------------+-------------+

Received a total of 3 rows

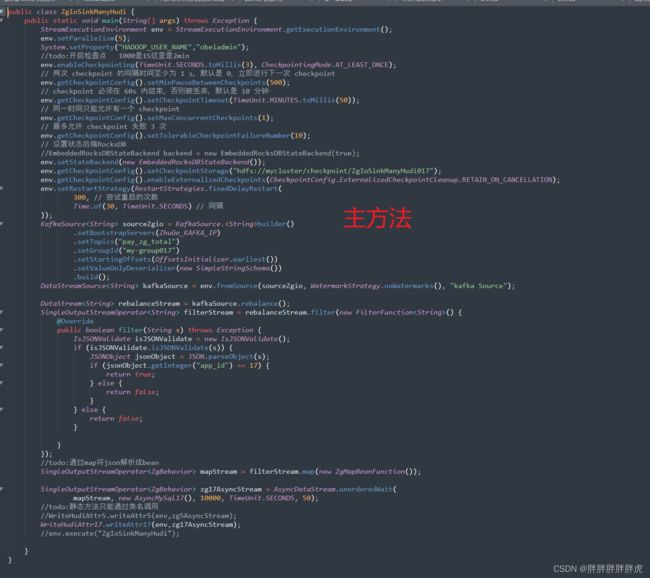

Async/IO Caused by: java.util.concurrent.TimeoutException: Async function call has timed out.

2023-09-15 14:49:14,580 WARN org.apache.flink.runtime.taskmanager.Task [] - Filter -> Map -> async wait operator -> sourceTable[1] -> Calc[2] -> ConstraintEnforcer[3] -> row_data_to_hoodie_record (2/5)#61 (10c30caf3eba58f4e981db644b8231e7_20ba6b65f97481d5570070de90e4e791_1_61) switched from RUNNING to FAILED with failure cause:

java.lang.Exception: Could not complete the stream element: Record @ -1 : ZgBehavior(app_id=5, ZG_ID=37747, SESSION_ID=1694757999648, UUID=null, EVENT_ID=null, BEGIN_DATE=1694758125436, DEVICE_ID=6168259, USER_ID=1574, USER_CODE=UO9305, USER_NAME=???, COMPANY_NAME=??????????????????, MOBILE_NUMBER=13705551174, ORGANIZATION_NAME=??????????????????, EVENT_NAME=null, PLATFORM=3, USERAGENT=null, WEBSITE=soe.obei.com.cn, CURRENT_URL=https://soe.obei.com.cn/obei-web-ec/settle/center/invoice/settleBillEditNew.html?type=add&purCode=UOS829, REFERRER_URL=https://soe.obei.com.cn/obei-web-ec/settle/center/invoice/settleBillIndex.html?appId=soe&sysName=PSCS&access_token=, CHANNEL=, APP_VERSION=, IP=117.65.208.35, COUNTRY=??, AREA=, CITY=, OS=, OV=, BS=, BV=, UTM_SOURCE=, UTM_MEDIUM=, UTM_CAMPAIGN=, UTM_CONTENT=, UTM_TERM=, DURATION=, ATTR1=, ATTR2=, ATTR3=, ATTR4=, ATTR5=6168259_1694757999648, CUS1=, CUS2=, CUS3=, CUS4=, CUS5=, CUS6=, CUS7=, CUS8=, CUS9=, CUS10=, CUS11=, CUS12=, CUS13=, CUS14=, CUS15=, JSON_PR={"$ct":1694758125436,"$tz":28800000,"$cuid":"UO9305","$sid":1694757999648,"$url":"https://soe.obei.com.cn/obei-web-ec/settle/center/invoice/settleBillEditNew.html?type=add&purCode=UOS829","$ref":"https://soe.obei.com.cn/obei-web-ec/settle/center/invoice/settleBillIndex.html?appId=soe&sysName=PSCS&access_token=","_???":"13705551174","_????":"???","_????":"??????????????????","_????":"??????????????????","$zg_did":6168259,"$zg_sid":1694757999648,"$zg_uid":1574,"$zg_zgid":37747,"$zg_upid#_???":30,"$zg_uptp#_???":"string","$zg_upid#_????":17,"$zg_uptp#_????":"string","$zg_upid#_????":6,"$zg_uptp#_????":"string","$zg_upid#_????":8,"$zg_uptp#_????":"string"}).

at org.apache.flink.streaming.api.operators.async.AsyncWaitOperator$ResultHandler.completeExceptionally(AsyncWaitOperator.java:633) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.streaming.api.functions.async.AsyncFunction.timeout(AsyncFunction.java:97) ~[flink-dist-1.17.0.jar:1.17.0]

... org.apache.flink.runtime.taskmanager.Task.runWithSystemExitMonitoring(Task.java:952) [flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.taskmanager.Task.restoreAndInvoke(Task.java:931) [flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:745) [flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.taskmanager.Task.run(Task.java:562) [flink-dist-1.17.0.jar:1.17.0]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_301]

Caused by: java.util.concurrent.TimeoutException: Async function call has timed out.

... 32 more

https://blog.csdn.net/u012447842/article/details/121694932
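The stack trace shows the failure coming from AsyncFunction.timeout, whose default behaviour is to complete the result exceptionally with a TimeoutException, which in turn fails the task. Two common remedies are to raise the timeout passed to AsyncDataStream, or to override timeout(input, resultFuture) in the async function so that a timed-out record yields a fallback result instead of an exception. The sketch below shows the second idea with plain java.util.concurrent classes only. It is not Flink code, and the class name, the method names and the "enriched"/"fallback" values are hypothetical.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class AsyncTimeoutFallbackDemo {

    /**
     * When the timeout elapses, completes the future with a fallback value
     * instead of failing it with a TimeoutException.
     */
    static <T> CompletableFuture<T> withFallback(
            CompletableFuture<T> call, long timeoutMs, T fallback, ScheduledExecutorService timer) {
        // complete() has no effect if the call has already finished
        timer.schedule(() -> { call.complete(fallback); }, timeoutMs, TimeUnit.MILLISECONDS);
        return call;
    }

    /** Simulates one lookup; a slow call never completes on its own in this sketch. */
    static String lookup(boolean slow) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        try {
            CompletableFuture<String> call = new CompletableFuture<>();
            if (!slow) {
                call.complete("enriched");
            }
            return withFallback(call, 100, "fallback", timer).get(2, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            timer.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println(lookup(false)); // fast call: prints enriched
        System.out.println(lookup(true));  // slow call: prints fallback
    }
}
```

Whether a fallback record is acceptable depends on the job: for enrichment lookups it is often preferable to a restart loop, but it silently drops the enrichment for the affected records.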