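TBase Environment Deployment and Usage, Part 1

Introduction to TBase

TBase is a relational database cluster platform open-sourced by Tencent that provides write reliability and data synchronization across multiple master nodes. You can deploy TBase on one or more hosts, with the data stored across multiple physical machines. A table can be stored in one of two ways, distributed (sharded) or replicated. When a query is sent to TBase, it automatically issues the query to the datanodes and assembles the final result.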

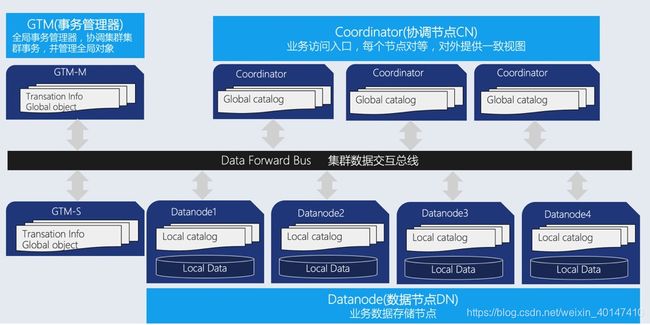

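TBase uses a distributed cluster architecture (see the figure above). It is a shared-nothing design: nodes are independent of one another and each processes its own data. Results may be aggregated upward or passed between nodes, and the processing units communicate over network protocols. This gives better parallelism and scalability, and it also means that a TBase cluster can be deployed on ordinary x86 servers.

Here is a brief look at TBase's three main components:

- Coordinator: coordinator node (CN for short)

The entry point for application access, responsible for data distribution and query planning. Multiple CNs are peers, and each one provides the same database view. Functionally, a CN stores only the system's global metadata, not the actual business data.

- Datanode: data node (DN for short)

Each DN stores a shard of the business data. Functionally, a DN executes the requests dispatched by the coordinator nodes.

- GTM: Global Transaction Manager

Manages the cluster's transaction information as well as its global objects, such as sequences.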
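To make the division of labor between CN and DN concrete, here is a toy sketch of how a coordinator could pick a datanode for a row in a sharded table versus a replicated one. The modulo rule and the function names are assumptions made for illustration only; TBase's real placement logic is not reproduced here.

```shell
#!/usr/bin/env bash
# Toy model only: the modulo rule below is an assumption for illustration,
# not TBase's actual placement algorithm.
DATANODES=(dn001 dn002)

# Sharded table: each row lives on exactly one datanode, chosen from its key.
shard_target() {
  local key=$1
  local n=${#DATANODES[@]}
  echo "${DATANODES[key % n]}"
}

# Replicated table: every datanode keeps a full copy of the row.
replicated_targets() {
  echo "${DATANODES[*]}"
}

for id in 1 2 3 4; do
  echo "sharded row id=$id -> $(shard_target "$id")"
done
echo "replicated row -> $(replicated_targets)"
```

The point of the sketch is only the contrast: a sharded row has one home, a replicated row has as many homes as there are datanodes.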

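Next, let's see how to go from the source code to a working TBase cluster environment.

Building and installing TBase from source

While installing from source, I found that the TBase build depends on the readline-devel, uuid, uuid-devel, and postgresql packages. Check that they are installed on your system first; otherwise the build fails with dependency errors.

Install command on CentOS (shown for readline-devel; the other packages are installed the same way): yum install -y readline-devel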
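The dependency check can be scripted before starting the build. The sketch below uses the package names listed above; the query command (rpm -q, as found on CentOS) is an assumption and can be overridden through the hypothetical PKG_QUERY variable.

```shell
#!/usr/bin/env bash
# Package names come from the text above; the query command is an assumption
# (rpm -q on CentOS) and can be overridden through PKG_QUERY.
PKG_QUERY=${PKG_QUERY:-rpm -q}

# Print every package from the argument list that the query command rejects.
missing_packages() {
  local pkg
  for pkg in "$@"; do
    $PKG_QUERY "$pkg" >/dev/null 2>&1 || echo "$pkg"
  done
}

missing=$(missing_packages readline-devel uuid uuid-devel postgresql)
if [ -n "$missing" ]; then
  echo "missing packages:" $missing
  echo "try: yum install -y" $missing
else
  echo "all build dependencies are installed"
fi
```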

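- Create the tbase user

Note: the user must be created on every machine that will be part of the TBase cluster.

mkdir /data

useradd -d /data/tbase tbase

- Get the source code

git clone https://github.com/Tencent/TBase

- Add environment variables

Edit /etc/profile (vim /etc/profile), add the following lines, then run source /etc/profile to apply them. SOURCECODE_PATH must point at the source tree; a plain git clone creates a directory named TBase, so adjust the path if yours differs.

export SOURCECODE_PATH=/data/tbase/TBase-master

export INSTALL_PATH=/data/tbase/install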

- Build from source

cd ${SOURCECODE_PATH}

rm -rf ${INSTALL_PATH}/tbase_bin_v2.0

chmod +x configure*

./configure --prefix=${INSTALL_PATH}/tbase_bin_v2.0 --enable-user-switch --with-openssl --with-ossp-uuid CFLAGS=-g

make clean

make -sj

make install

chmod +x contrib/pgxc_ctl/make_signature

cd contrib

make -sj

make install
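After both make install steps finish, a quick check that the expected programs landed under the prefix can save a failed deploy later. This is a minimal sketch: the list of binaries is an assumption based on a typical TBase build (pgxc_ctl comes from the contrib step), so adjust it to match your tree.

```shell
#!/usr/bin/env bash
# The list of expected binaries is an assumption based on a typical
# TBase build; adjust it to match your tree.
EXPECTED_BINS=(postgres psql pg_ctl initdb gtm gtm_ctl pgxc_ctl)

# Print each expected binary that is missing or not executable under <prefix>/bin.
missing_binaries() {
  local prefix=$1 bin
  for bin in "${EXPECTED_BINS[@]}"; do
    [ -x "$prefix/bin/$bin" ] || echo "$bin"
  done
}

# Prints nothing when every expected binary is present.
missing_binaries "${INSTALL_PATH:-/data/tbase/install}/tbase_bin_v2.0"
```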

集群安装

- 集群规划

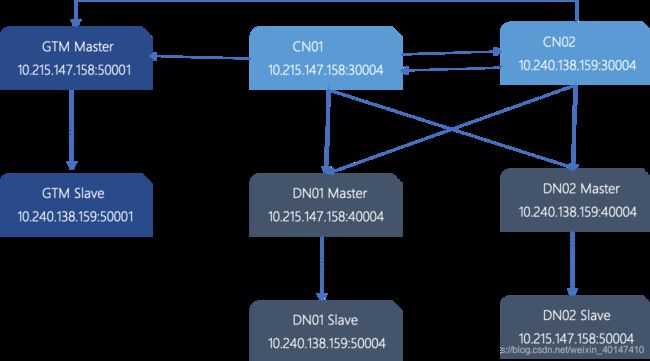

下面以两台服务器上搭建1 GTM主,1 GTM备,2 CN主(CN主之间对等,因此无需备CN),2 DN主,2 DN备的集群,该集群为具备容灾能力的最小配置

机器1:10.215.147.158

机器2:10.240.138.159

集群规划如下:

| Node | IP | Data directory |
|---|---|---|
| GTM master | 10.215.147.158 | /data/tbase/data/gtm |
| GTM slave | 10.240.138.159 | /data/tbase/data/gtm |
| CN1 | 10.215.147.158 | /data/tbase/data/coord |
| CN2 | 10.240.138.159 | /data/tbase/data/coord |
| DN1 master | 10.215.147.158 | /data/tbase/data/dn001 |
| DN1 slave | 10.240.138.159 | /data/tbase/data/dn001 |
| DN2 master | 10.240.138.159 | /data/tbase/data/dn002 |
| DN2 slave | 10.215.147.158 | /data/tbase/data/dn002 |

- Set up mutual SSH trust between the machines. Reference: https://blog.csdn.net/chenghuikai/article/details/52807074
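For readers who do not want to follow the link, the usual key-based approach can be sketched as below. This is an assumption about what the referenced article covers, not a copy of it. The script defaults to a dry run that only prints the commands; set DRY_RUN=0 and run it as the tbase user on each machine to execute them.

```shell
#!/usr/bin/env bash
# Hosts and user come from the cluster plan above. DRY_RUN is a hypothetical
# switch for this sketch: 1 prints the commands, 0 executes them.
HOSTS=(10.215.147.158 10.240.138.159)
SSH_USER=tbase
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

setup_trust() {
  local host
  # Create a key pair without a passphrase unless one already exists.
  [ -f "$HOME/.ssh/id_rsa" ] || run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
  # Install the public key on every machine, including the local one.
  for host in "${HOSTS[@]}"; do
    run ssh-copy-id "$SSH_USER@$host"
  done
}

setup_trust
```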

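- Environment variable configuration

This is required on every machine in the cluster.

[tbase@TENCENT64 ~]$ vim ~/.bashrc

export TBASE_HOME=/data/tbase/install/tbase_bin_v2.0

export PATH=$TBASE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$TBASE_HOME/lib:${LD_LIBRARY_PATH}

With the above in place, the basic environment is ready and we can move on to cluster initialization. For convenience, TBase provides a dedicated configuration and operations tool, pgxc_ctl, that helps users build and manage a cluster quickly. First, the IPs, ports, and directories of the nodes described above must be written into the configuration file pgxc_ctl.conf.

- Initialize the pgxc_ctl.conf file

[tbase@TENCENT64 ~]$ mkdir /data/tbase/pgxc_ctl

[tbase@TENCENT64 ~]$ cd /data/tbase/pgxc_ctl

[tbase@TENCENT64 ~/pgxc_ctl]$ vim pgxc_ctl.conf

Below is the pgxc_ctl.conf file written from the plan above (IPs, ports, data directories, binary directory, and so on). In practice, simply adapt it to your own environment.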

#!/bin/bash

pgxcInstallDir=/data/tbase/install/tbase_bin_v2.0

pgxcOwner=tbase

defaultDatabase=postgres

pgxcUser=$pgxcOwner

tmpDir=/tmp

localTmpDir=$tmpDir

configBackup=n

configBackupHost=pgxc-linker

configBackupDir=$HOME/pgxc

configBackupFile=pgxc_ctl.bak

#---- GTM ----------

gtmName=gtm

gtmMasterServer=10.215.147.158

gtmMasterPort=50001

gtmMasterDir=/data/tbase/data/gtm

gtmExtraConfig=none

gtmMasterSpecificExtraConfig=none

gtmSlave=y

gtmSlaveServer=10.240.138.159

gtmSlavePort=50001

gtmSlaveDir=/data/tbase/data/gtm

gtmSlaveSpecificExtraConfig=none

#---- Coordinators -------

coordMasterDir=/data/tbase/data/coord

coordArchLogDir=/data/tbase/data/coord_archlog

coordNames=(cn001 cn002 )

coordPorts=(30004 30004 )

poolerPorts=(31110 31110 )

coordPgHbaEntries=(0.0.0.0/0)

coordMasterServers=(10.215.147.158 10.240.138.159)

coordMasterDirs=($coordMasterDir $coordMasterDir)

coordMaxWALsernder=2

coordMaxWALSenders=($coordMaxWALsernder $coordMaxWALsernder )

coordSlave=n

coordSlaveSync=n

coordArchLogDirs=($coordArchLogDir $coordArchLogDir)

#---- Datanodes ---------------------

dn1MstrDir=/data/tbase/data/dn001

dn2MstrDir=/data/tbase/data/dn002

dn1SlvDir=/data/tbase/data/dn001

dn2SlvDir=/data/tbase/data/dn002

dn1ALDir=/data/tbase/data/datanode_archlog

dn2ALDir=/data/tbase/data/datanode_archlog

primaryDatanode=dn001

datanodeNames=(dn001 dn002)

datanodePorts=(40004 40004)

datanodePoolerPorts=(41110 41110)

datanodePgHbaEntries=(0.0.0.0/0)

datanodeMasterServers=(10.215.147.158 10.240.138.159)

datanodeMasterDirs=($dn1MstrDir $dn2MstrDir)

dnWALSndr=4

datanodeMaxWALSenders=($dnWALSndr $dnWALSndr)

datanodeSlave=y

datanodeSlaveServers=(10.240.138.159 10.215.147.158)

datanodeSlavePorts=(50004 54004)

datanodeSlavePoolerPorts=(51110 51110)

datanodeSlaveSync=n

datanodeSlaveDirs=($dn1SlvDir $dn2SlvDir)

datanodeArchLogDirs=($dn1ALDir/dn001 $dn2ALDir/dn002)

datanodeSpecificExtraPgHba=(none none)

datanodeAdditionalSlaves=n

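walArchive=n

Note: the official documentation contains many more settings, but when I used the official configuration file as-is, startup kept failing, typically with the datanodes unable to start. The configuration above is the one that, after my own trial and error, starts the datanodes without errors.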
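Many startup failures with a hand-edited pgxc_ctl.conf come down to per-node arrays that do not all have the same number of entries. Because the file is plain bash, it can be sourced and checked before running pgxc_ctl. The function below is a sketch written for this article, not something pgxc_ctl ships, and it only covers the arrays used in the configuration above.

```shell
#!/usr/bin/env bash
# Sketch of a consistency check; pgxc_ctl itself does not ship this function.
# pgxc_ctl.conf is plain bash, so it is sourced in a subshell and inspected.
check_conf() {
  local conf=$1
  (
    source "$conf" || exit 2
    rc=0
    # Compare the length of each per-node array with the number of node names.
    compare() {
      local label=$1 expected=$2 name ref
      shift 2
      for name in "$@"; do
        ref="${name}[@]"
        local values=("${!ref}")
        if [ "${#values[@]}" -ne "$expected" ]; then
          echo "$label: $name has ${#values[@]} entries, expected $expected"
          rc=1
        fi
      done
    }
    compare coordinator "${#coordNames[@]}" \
      coordPorts poolerPorts coordMasterServers coordMasterDirs
    compare datanode "${#datanodeNames[@]}" \
      datanodePorts datanodePoolerPorts datanodeMasterServers datanodeMasterDirs
    if [ "${datanodeSlave:-n}" = y ]; then
      compare "datanode slave" "${#datanodeNames[@]}" \
        datanodeSlaveServers datanodeSlavePorts datanodeSlaveDirs
    fi
    exit "$rc"
  )
}
```

A possible call is check_conf /data/tbase/pgxc_ctl/pgxc_ctl.conf && echo "array lengths are consistent". The function returns non-zero and prints one line per mismatched array otherwise.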

- Distribute the binaries

Once the configuration file is ready on one node, the binaries must be deployed to the machines of all nodes in advance. This can be done with the pgxc_ctl tool by running the deploy all command.

[tbase@TENCENT64 ~/pgxc_ctl]$ pgxc_ctl

/usr/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC deploy all

Deploying Postgres-XL components to all the target servers.

Prepare tarball to deploy ...

Deploying to the server 10.215.147.158.

Deploying to the server 10.240.138.159.

Deployment done.

Log in to every node and check that the binaries were distributed correctly:

[tbase@TENCENT64 ~/install]$ ls /data/tbase/install/tbase_bin_v2.0

bin include lib share

- Run the init all command to initialize the cluster

[tbase@TENCENT64 ~]$ pgxc_ctl

/usr/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC init all

Initialize GTM master

....

....

Initialize datanode slave dn001

Initialize datanode slave dn002

mkdir: cannot create directory '/data1/tbase': Permission denied

chmod: cannot access '/data1/tbase/data/dn001': No such file or directory

pg_ctl: directory "/data1/tbase/data/dn001" does not exist

pg_basebackup: could not create directory "/data1/tbase": Permission denied

- Handling installation errors

When init fails, the terminal usually prints the error, and reading the cause and correcting the configuration is enough. You can also look at the logs under /data/tbase/pgxc_ctl/pgxc_log to track down mistakes in the configuration file.
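Since every pgxc_ctl run writes its own log file, it helps to jump straight to the newest one. The helper below is a small sketch: the default directory follows the paths used in this article, and the search patterns are an assumption about what typical failures look like (they match the errors shown above).

```shell
#!/usr/bin/env bash
# Show likely error lines from the newest pgxc_ctl log.
# The default directory follows the paths used in this article; the grep
# patterns are an assumption about what failures look like.
latest_log_errors() {
  local dir=${1:-$HOME/pgxc_ctl/pgxc_log} latest
  latest=$(ls -t "$dir"/*_pgxc_ctl.log 2>/dev/null | head -n 1) || true
  if [ -z "$latest" ]; then
    echo "no pgxc_ctl logs found in $dir" >&2
    return 1
  fi
  echo "== $latest"
  grep -inE 'error|denied|cannot|could not|does not exist' "$latest" \
    || echo "(no matching lines)"
}
```

Calling latest_log_errors with no argument scans ~/pgxc_ctl/pgxc_log; pass another directory as the first argument if your logs live elsewhere.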

[tbase@TENCENT64 ~]$ ll ~/pgxc_ctl/pgxc_log/

total 184

-rw-rw-r-- 1 tbase tbase 81123 Nov 13 17:22 14105_pgxc_ctl.log

-rw-rw-r-- 1 tbase tbase 2861 Nov 13 17:58 15762_pgxc_ctl.log

-rw-rw-r-- 1 tbase tbase 14823 Nov 14 07:59 16671_pgxc_ctl.log

-rw-rw-r-- 1 tbase tbase 2721 Nov 13 16:52 18891_pgxc_ctl.log

-rw-rw-r-- 1 tbase tbase 1409 Nov 13 16:20 22603_pgxc_ctl.log

-rw-rw-r-- 1 tbase tbase 60043 Nov 13 16:33 28932_pgxc_ctl.log

-rw-rw-r-- 1 tbase tbase 15671 Nov 14 07:57 6849_pgxc_ctl.log

To retry, run pgxc_ctl and use the clean all command to delete the files that were already initialized, fix pgxc_ctl.conf, and then run init all again to restart the initialization.

[tbase@TENCENT64 ~]$ pgxc_ctl

/usr/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC clean all

[tbase@TENCENT64 ~]$ pgxc_ctl

/usr/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC init all

Initialize GTM master

EXECUTE DIRECT ON (dn002) 'ALTER NODE dn002 WITH (TYPE=''datanode'', HOST=''10.240.138.159'', PORT=40004, PREFERRED)';

EXECUTE DIRECT

EXECUTE DIRECT ON (dn002) 'SELECT pgxc_pool_reload()';

pgxc_pool_reload

------------------

t

(1 row)

Done.

- Check the cluster status

When you see the output above, the cluster is up. You can also check the cluster status with pgxc_ctl's monitor all command.

[tbase@TENCENT64 ~/pgxc_ctl]$ pgxc_ctl

/usr/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC monitor all

Running: gtm master

Not running: gtm slave

Running: coordinator master cn001

Running: coordinator master cn002

Running: datanode master dn001

Running: datanode slave dn001

Running: datanode master dn002

Not running: datanode slave dn002

In general, unless the cluster is configured for strong synchronous replication, a failed GTM slave or DN slave does not affect access.
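The distinction between a failed slave and a failed master can be checked mechanically from the monitor all output. The function below is a sketch written for this article: it reads that output on standard input, treats any line naming a slave as a warning, and treats everything else that is not running as critical.

```shell
#!/usr/bin/env bash
# Reads `monitor all` output on stdin. Slaves that are down are reported as
# warnings; any other node that is down is critical and makes the function
# return non-zero. The WARN/CRIT labels are this sketch's own convention.
classify_monitor() {
  local line node status=0
  while IFS= read -r line; do
    case $line in
      "Not running: "*)
        node=${line#"Not running: "}
        case $node in
          *slave*) echo "WARN  $node is down (standby only)" ;;
          *)       echo "CRIT  $node is down"; status=1 ;;
        esac
        ;;
    esac
  done
  return $status
}
```

Assuming your pgxc_ctl accepts a command on its command line (Postgres-XL's pgxc_ctl does, but verify this for your build), the check could be run as pgxc_ctl monitor all | classify_monitor. Otherwise, save the interactive output to a file and feed that file in.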

- Access the cluster

Accessing a TBase cluster is essentially the same as accessing a standalone PostgreSQL instance, and the cluster can be reached through any CN. For example, connecting to a CN and selecting from the pgxc_node table shows the cluster topology (with the current configuration, slaves are not listed in pgxc_node). A concrete example using psql from the Linux command line:

[tbase@TENCENT64 ~/pgxc_ctl]$ psql -h 10.215.147.158 -p 30004 -d postgres -U tbase

psql (PostgreSQL 10.0 TBase V2)

Type "help" for help.

postgres=# \d

Did not find any relations.

postgres=# select * from pgxc_node;

| node_name | node_type | node_port | node_host | nodeis_primary | nodeis_preferred | node_id | node_cluster_name |
|---|---|---|---|---|---|---|---|
| gtm | G | 50001 | 10.215.147.158 | t | f | 428125959 | tbase_cluster |
| cn001 | C | 30004 | 10.215.147.158 | f | f | -264077367 | tbase_cluster |
| cn002 | C | 30004 | 10.240.138.159 | f | f | -674870440 | tbase_cluster |
| dn001 | D | 40004 | 10.215.147.158 | t | t | 2142761564 | tbase_cluster |
| dn002 | D | 40004 | 10.240.138.159 | f | f | -17499968 | tbase_cluster |

(5 rows)

- Create the default group and the shard map before using the database

TBase uses datanode groups to make node management more flexible, and it requires a default group before the database can be used, so one must be created first. Normally all datanodes are added to the default group. In addition, to make data distribution more flexible, TBase adds an intermediate logical layer that maintains the mapping from data records to physical nodes. This layer is called sharding, so the shard map must also be created in advance. The commands are as follows:

postgres=# create default node group default_group with (dn001,dn002);

CREATE NODE GROUP

postgres=# create sharding group to group default_group;

CREATE SHARDING GROUP

- Create a database, users, and tables; insert, delete, query, and update

At this point, the cluster can be used just like a standalone database.

postgres=# create database test;

CREATE DATABASE

postgres=# create user test with password 'test';

CREATE ROLE

postgres=# alter database test owner to test;

ALTER DATABASE

postgres=# \c test test

You are now connected to database "test" as user "test".

test=> create table foo(id bigint, str text) distribute by shard(id);

CREATE TABLE

test=> insert into foo values(1, 'tencent'), (2, 'shenzhen');

COPY 2

test=> select * from foo;

| id | str |
|---|---|
| 1 | tencent |
| 2 | shenzhen |

(2 rows)

- Note: when accessing the cluster, I ran into a case where the datanode on the dn002 machine could not be reached:

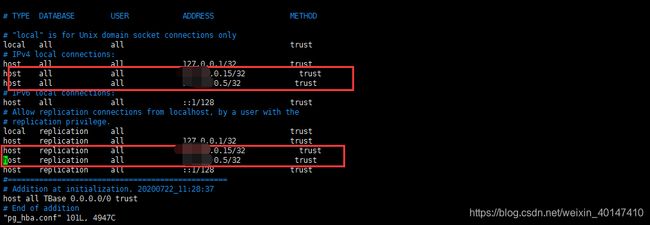

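- The cause turned out to be that pg_hba.conf on the CN nodes did not trust the node IPs. After adding trust entries for them, stopping the cluster with PGXC stop all -m fast and then running PGXC start all again fixed the problem.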
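The exact entries are not reproduced above, so the following is only an assumption about what such trust entries could look like, using the standard pg_hba.conf line format (type, database, user, address, method). The helper prints one line per node address. Trust authentication skips password checks, so keep the addresses as narrow as possible.

```shell
#!/usr/bin/env bash
# Print one pg_hba.conf "trust" line per node address.
# Which entries the cluster actually needs is an assumption of this sketch.
hba_trust_lines() {
  local ip
  for ip in "$@"; do
    printf 'host    all    all    %s/32    trust\n' "$ip"
  done
}

hba_trust_lines 10.215.147.158 10.240.138.159
```

The printed lines would be appended to pg_hba.conf in the data directory of each CN (/data/tbase/data/coord in this article's layout) before restarting the cluster.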

- Stop the cluster

Use pgxc_ctl's stop all command to stop the cluster. It can be followed by -m fast or -m immediate to control how each node is shut down.

PGXC stop all -m fast

Stopping all the coordinator masters.

Stopping coordinator master cn001.

Stopping coordinator master cn002.

Done.

Stopping all the datanode slaves.

Stopping datanode slave dn001.

Stopping datanode slave dn002.

pg_ctl: PID file "/data/tbase/data/dn002/postmaster.pid" does not exist

Is server running?

Stopping all the datanode masters.

Stopping datanode master dn001.

Stopping datanode master dn002.

Done.

Stop GTM slave

waiting for server to shut down..... done

server stopped

Stop GTM master

waiting for server to shut down.... done

server stopped

PGXC monitor all

Not running: gtm master

Not running: gtm slave

Not running: coordinator master cn001

Not running: coordinator master cn002

Not running: datanode master dn001

Not running: datanode slave dn001

Not running: datanode master dn002

Not running: datanode slave dn002

- Start the cluster

Use pgxc_ctl's start all command to start the cluster.

[tbase@TENCENT64 ~]$ pgxc_ctl

/usr/bin/bash

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Installing pgxc_ctl_bash script as /data/tbase/pgxc_ctl/pgxc_ctl_bash.

Reading configuration using /data/tbase/pgxc_ctl/pgxc_ctl_bash --home /data/tbase/pgxc_ctl --configuration /data/tbase/pgxc_ctl/pgxc_ctl.conf

Finished reading configuration.

******** PGXC_CTL START ***************

Current directory: /data/tbase/pgxc_ctl

PGXC start all