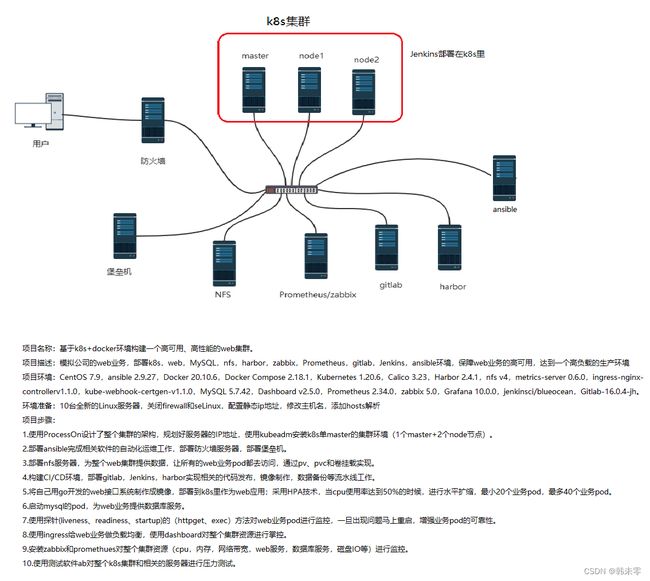

Build a highly available, high-performance web cluster on a k8s + docker environment.

目录

项目架构图

项目描述

项目环境

环境准备

IP地址规划

关闭selinux和firewall

配置静态ip地址

修改主机名

升级系统(可做可不做)

添加hosts解析

项目步骤

一.使用ProcessOn设计了整个集群的架构,规划好服务器的IP地址,使用kubeadm安装k8s单master的集群环境(1个master+2个node节点)。

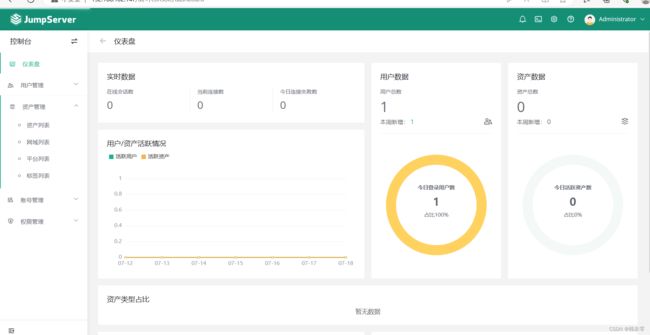

二.部署ansible完成相关软件的自动化运维工作,部署防火墙服务器,部署堡垒机。

部署堡垒机

部署firewall服务器

三.部署nfs服务器,为整个web集群提供数据,让所有的web业务pod都去访问,通过pv、pvc和卷挂载实现。

四.构建CI/CD环境,部署gitlab,Jenkins,harbor实现相关的代码发布,镜像制作,数据备份等流水线工作。

1.部署gitlab

2.部署Jenkins

3.部署harbor

五.将自己用go开发的web接口系统制作成镜像,部署到k8s里作为web应用;采用HPA技术,当cpu使用率达到50%的时候,进行水平扩缩,最小20个业务pod,最多40个业务pod。

六.启动mysql的pod,为web业务提供数据库服务。

尝试:k8s部署有状态的MySQL

七.使用探针(liveness、readiness、startup)的(httpget、exec)方法对web业务pod进行监控,一旦出现问题马上重启,增强业务pod的可靠性。

八.使用ingress给web业务做负载均衡,使用dashboard对整个集群资源进行掌控。

使用dashboard对整个集群资源进行掌控

九.安装zabbix和promethues对整个集群资源(cpu,内存,网络带宽,web服务,数据库服务,磁盘IO等)进行监控。

十.使用测试软件ab对整个k8s集群和相关的服务器进行压力测试。

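Project architecture diagram

Project description

Simulate a company's web business by deploying k8s, web, MySQL, NFS, Harbor, Zabbix, Prometheus, GitLab, Jenkins and Ansible environments, keeping the web service highly available in a production-like environment that can sustain high load.

Project environment

CentOS 7.9, Ansible 2.9.27, Docker 20.10.6, Docker Compose 2.18.1, Kubernetes 1.20.6, Calico 3.23, Harbor 2.4.1, NFS v4, metrics-server 0.6.0, ingress-nginx-controller v1.1.0, kube-webhook-certgen v1.1.0, MySQL 5.7.42, Dashboard v2.5.0, Prometheus 2.34.0, Zabbix 5.0, Grafana 10.0.0, jenkinsci/blueocean, GitLab 16.0.4-jh.

Environment preparation

10 brand-new Linux servers: disable firewalld and SELinux, configure static IP addresses, set the hostnames, and add hosts entries.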

IP address plan

| server | IP address |
|---|---|
| k8smaster | 192.168.2.104 |
| k8snode1 | 192.168.2.111 |
| k8snode2 | 192.168.2.112 |
| ansible | 192.168.2.119 |
| nfs | 192.168.2.121 |
| gitlab | 192.168.2.124 |
| harbor | 192.168.2.106 |
| zabbix | 192.168.2.117 |
| firewalld | 192.168.2.141 |
| Bastionhost | 192.168.2.140 |
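Every k8s machine needs the same hosts entries, which are added by hand under "Add hosts entries". Re-running a plain `echo ... >> /etc/hosts` creates duplicates. The following is a minimal bash sketch of an idempotent append. It assumes GNU grep, and `add_host_entry` is a hypothetical helper, not part of any tool used in this project:

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to a hosts-style FILE unless NAME is already listed,
# so running it twice does not create duplicate entries.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # -F: treat NAME as a fixed string; -w: whole word only,
  # so "k8snode1" does not match an existing "k8snode10"
  if grep -qwF -- "$name" "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

To use it, run `add_host_entry /etc/hosts 192.168.2.104 k8smaster` as root on each k8s machine. Then do the same for k8snode1 (192.168.2.111) and k8snode2 (192.168.2.112).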

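Disable SELinux and firewalld

# Stop firewalld and keep it from starting at boot

service firewalld stop && systemctl disable firewalld

# Disable SELinux temporarily (until the next reboot)

setenforce 0

# Disable SELinux permanently (takes effect after a reboot)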

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@k8smaster ~]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@k8smaster ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8smaster ~]# reboot

[root@k8smaster ~]# getenforce

Disabled

Configure static IP addresses

cd /etc/sysconfig/network-scripts/

vim ifcfg-ens33

# on k8smaster

TYPE="Ethernet"

BOOTPROTO="static"

DEVICE="ens33"

NAME="ens33"

ONBOOT="yes"

IPADDR="192.168.2.104"

PREFIX=24

GATEWAY="192.168.2.1"

DNS1=114.114.114.114

# on k8snode1

TYPE="Ethernet"

BOOTPROTO="static"

DEVICE="ens33"

NAME="ens33"

ONBOOT="yes"

IPADDR="192.168.2.111"

PREFIX=24

GATEWAY="192.168.2.1"

DNS1=114.114.114.114

# on k8snode2

TYPE="Ethernet"

BOOTPROTO="static"

DEVICE="ens33"

NAME="ens33"

ONBOOT="yes"

IPADDR="192.168.2.112"

PREFIX=24

GATEWAY="192.168.2.1"

DNS1=114.114.114.114

Set the hostnames

hostnamectl set-hostname k8smaster # on k8smaster

hostnamectl set-hostname k8snode1 # on k8snode1

hostnamectl set-hostname k8snode2 # on k8snode2

# Start a new login shell so the prompt shows the new hostname

su - root

[root@k8smaster ~]#

[root@k8snode1 ~]#

[root@k8snode2 ~]#

Upgrade the system (optional)

yum update -y

Add hosts entries

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.104 k8smaster

192.168.2.111 k8snode1

192.168.2.112 k8snode2

Project steps

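I. Design the cluster architecture with ProcessOn, plan the server IP addresses, and use kubeadm to install a single-master k8s cluster (1 master + 2 worker nodes).

# 1. Set up passwordless SSH between the nodes

ssh-keygen # press Enter at every prompt

ssh-copy-id k8smaster

ssh-copy-id k8snode1

ssh-copy-id k8snode2

# 2. Disable swap (kubeadm checks for it during initialization)

# Temporarily: swapoff -a

# Permanently: comment out the swap mount by putting # at the start of the swap line in /etc/fstab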

[root@k8smaster ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Mar 23 15:22:20 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=00236222-82bd-4c15-9c97-e55643144ff3 /boot xfs defaults 0 0

/dev/mapper/centos-home /home xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0
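The manual fstab edit above can also be scripted. The sketch below comments out every uncommented line whose filesystem type (third field) is `swap`. It assumes GNU sed for `-E -i`, and `comment_out_swap` is a hypothetical name:

```shell
#!/usr/bin/env bash
# comment_out_swap FILE  (hypothetical helper; needs GNU sed for -E -i)
# Prefixes "#" to every uncommented fstab line whose third field (the
# filesystem type) is "swap"; lines that are already comments are skipped.
comment_out_swap() {
  local file=$1
  sed -E -i '/^[[:space:]]*#/!{ /^[^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/#/; }' "$file"
}
```

To use it, run `swapoff -a && comment_out_swap /etc/fstab` as root on every k8s node. Swap then stays off now and after a reboot.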

# 3. Load the required kernel modules

modprobe br_netfilter

echo "modprobe br_netfilter" >> /etc/profile

cat > /etc/sysctl.d/k8s.conf < kubeadm.yaml

[root@k8smaster ~]# vim kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.2.104 # IP of the control-plane node

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

name: k8smaster # hostname of the control-plane node

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers # change this to the Alibaba Cloud mirror

kind: ClusterConfiguration

kubernetesVersion: v1.20.6

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

podSubnet: 10.244.0.0/16 # pod network CIDR; this line must be added

scheduler: {}

# Append the following lines

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd
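`podSubnet` (10.244.0.0/16) and `serviceSubnet` (10.96.0.0/12) must not overlap each other or the nodes' own 192.168.2.0/24 network. Below is a bash sketch of that check using integer arithmetic on IPv4 addresses. The helper names `ip_to_int` and `cidr_overlap` are hypothetical, and only dotted-quad IPv4 CIDRs without leading zeros are handled:

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D -> the address as a 32-bit integer (hypothetical helper)
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_overlap CIDR_A CIDR_B -> prints "overlap" or "ok" (hypothetical helper)
cidr_overlap() {
  local a_ip=${1%/*} a_len=${1#*/} b_ip=${2%/*} b_len=${2#*/}
  # Two blocks overlap exactly when they agree on the shorter prefix
  local len=$(( a_len < b_len ? a_len : b_len ))
  # Netmask for that prefix; bash integers are 64-bit, so trim to 32 bits
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local a b
  a=$(ip_to_int "$a_ip")
  b=$(ip_to_int "$b_ip")
  if (( (a & mask) == (b & mask) )); then
    echo overlap
  else
    echo ok
  fi
}

cidr_overlap 10.244.0.0/16 10.96.0.0/12    # podSubnet vs serviceSubnet -> ok
cidr_overlap 10.244.0.0/16 192.168.2.0/24  # podSubnet vs node network -> ok
cidr_overlap 10.96.0.0/12 192.168.2.0/24   # serviceSubnet vs node network -> ok
```

Comparing the two addresses under the shorter prefix's mask is enough. If the blocks agree on that prefix, one lies inside the other; if they don't, the blocks are disjoint.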

# 12. Initialize k8s from kubeadm.yaml

[root@k8smaster ~]# kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubeadm join 192.168.2.104:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:83421a7d1baa62269508259b33e6563e45fbeb9139a9c214cbe9fc107f07cb4c

# 13. Scale out the k8s cluster: join the worker nodes

[root@k8snode1 ~]# kubeadm join 192.168.2.104:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:83421a7d1baa62269508259b33e6563e45fbeb9139a9c214cbe9fc107f07cb4c

[root@k8snode2 ~]# kubeadm join 192.168.2.104:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:83421a7d1baa62269508259b33e6563e45fbeb9139a9c214cbe9fc107f07cb4c

# 14. Check the node status on k8smaster

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster NotReady control-plane,master 2m49s v1.20.6

k8snode1 NotReady 19s v1.20.6

k8snode2 NotReady 14s v1.20.6

# 15. An empty ROLES column for k8snode1 and k8snode2 means they are worker nodes.

To make their ROLES column show worker, label them:

[root@k8smaster ~]# kubectl label node k8snode1 node-role.kubernetes.io/worker=worker

node/k8snode1 labeled

[root@k8smaster ~]# kubectl label node k8snode2 node-role.kubernetes.io/worker=worker

node/k8snode2 labeled

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster NotReady control-plane,master 2m43s v1.20.6

k8snode1 NotReady worker 2m15s v1.20.6

k8snode2 NotReady worker 2m11s v1.20.6

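# Note: every node is NotReady because no network plugin has been installed yet

# 16. Install the Kubernetes network add-on: Calico

# Download calico.yaml (or upload it) to k8smaster, then install the Calico network plugin from the YAML file.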

wget https://docs.projectcalico.org/v3.23/manifests/calico.yaml --no-check-certificate

[root@k8smaster ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

# Check the cluster status again

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster Ready control-plane,master 5m57s v1.20.6

k8snode1 Ready worker 3m27s v1.20.6

k8snode2 Ready worker 3m22s v1.20.6
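A script can check the STATUS column instead of reading it by eye. Below is a minimal awk-based sketch; `not_ready_nodes` is a hypothetical name. It prints every node whose STATUS is not exactly `Ready` and returns non-zero if there is any. Note that it would also flag nodes shown as `Ready,SchedulingDisabled`:

```shell
#!/usr/bin/env bash
# not_ready_nodes  (hypothetical helper)
# Reads "kubectl get nodes" output on stdin, skips the header line, prints
# the NAME of each node whose STATUS is not "Ready", and exits 1 if any.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

To use it, run `kubectl get nodes | not_ready_nodes`. To simply wait for the nodes, kubectl also provides `kubectl wait --for=condition=Ready nodes --all --timeout=300s`.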

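# STATUS is Ready on every node, so the k8s cluster is running normally

II. Deploy Ansible to automate operations for the related software, deploy a firewall server, and deploy a bastion host.

# 1. Set up passwordless SSH: generate a key pair on the ansible host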

[root@ansible ~]# ssh-keygen -t ecdsa

Generating public/private ecdsa key pair.

Enter file in which to save the key (/root/.ssh/id_ecdsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_ecdsa.

Your public key has been saved in /root/.ssh/id_ecdsa.pub.

The key fingerprint is:

SHA256:FNgCSDVk6i3foP88MfekA2UzwNn6x3kyi7V+mLdoxYE root@ansible

The key's randomart image is:

+---[ECDSA 256]---+

| ..+*o =. |

| .o .* o. |

| . +. . |

| . . ..= E . |

| o o +S+ o . |

| + o+ o O + |

| . . .= B X |

| . .. + B.o |

| ..o. +oo.. |

+----[SHA256]-----+

[root@ansible ~]# cd /root/.ssh

[root@ansible .ssh]# ls

id_ecdsa id_ecdsa.pub

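# 2. Copy the public key to the root user's home directory on every server

# sshd must be running on every server, with port 22 open and root login allowed

# Copy the public key to k8smaster

[root@ansible .ssh]# ssh-copy-id -i id_ecdsa.pub root@192.168.2.104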

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_ecdsa.pub"

The authenticity of host '192.168.2.104 (192.168.2.104)' can't be established.

ECDSA key fingerprint is SHA256:l7LRfACELrI6mU2XvYaCz+sDBWiGkYnAecPgnxJxdvE.

ECDSA key fingerprint is MD5:b6:f7:e1:c5:23:24:5c:16:1f:66:42:ba:80:a6:3c:fd.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.2.104's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.2.104'"

and check to make sure that only the key(s) you wanted were added.

# Copy the public key to the k8s worker nodes

[root@ansible .ssh]# ssh-copy-id -i id_ecdsa.pub root@192.168.2.111

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_ecdsa.pub"

The authenticity of host '192.168.2.111 (192.168.2.111)' can't be established.

ECDSA key fingerprint is SHA256:l7LRfACELrI6mU2XvYaCz+sDBWiGkYnAecPgnxJxdvE.

ECDSA key fingerprint is MD5:b6:f7:e1:c5:23:24:5c:16:1f:66:42:ba:80:a6:3c:fd.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.2.111's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.2.111'"

and check to make sure that only the key(s) you wanted were added.

[root@ansible .ssh]# ssh-copy-id -i id_ecdsa.pub root@192.168.2.112

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_ecdsa.pub"

The authenticity of host '192.168.2.112 (192.168.2.112)' can't be established.

ECDSA key fingerprint is SHA256:l7LRfACELrI6mU2XvYaCz+sDBWiGkYnAecPgnxJxdvE.

ECDSA key fingerprint is MD5:b6:f7:e1:c5:23:24:5c:16:1f:66:42:ba:80:a6:3c:fd.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.2.112's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.2.112'"

and check to make sure that only the key(s) you wanted were added.

# Verify that key-based (passwordless) login works

[root@ansible .ssh]# ssh root@192.168.2.121

Last login: Tue Jun 20 10:33:33 2023 from 192.168.2.240

[root@nfs ~]# exit

logout

Connection to 192.168.2.121 closed.

[root@ansible .ssh]# ssh root@192.168.2.112

Last login: Tue Jun 20 10:34:18 2023 from 192.168.2.240

[root@k8snode2 ~]# exit

logout

Connection to 192.168.2.112 closed.

[root@ansible .ssh]#

# 3. Install Ansible on the control node

# Ansible 2.9 can run on any control node that has Python 2.7 or Python 3.5+ installed (Windows cannot be the control node).

[root@ansible .ssh]# yum install epel-release -y

[root@ansible .ssh]# yum install ansible -y

[root@ansible ~]# ansible --version

ansible 2.9.27

config file = /etc/ansible/ansible.cfg

configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python2.7/site-packages/ansible

executable location = /usr/bin/ansible

python version = 2.7.5 (default, Oct 14 2020, 14:45:30) [GCC 4.8.5 20150623 (Red Hat 4.8.5-44)]

# 4. Write the inventory

[root@ansible .ssh]# cd /etc/ansible

[root@ansible ansible]# ls

ansible.cfg hosts roles

[root@ansible ansible]# vim hosts

## 192.168.1.110

[k8smaster]

192.168.2.104

[k8snode]

192.168.2.111

192.168.2.112

[nfs]

192.168.2.121

[gitlab]

192.168.2.124

[harbor]

192.168.2.106

[zabbix]

192.168.2.117
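`ansible k8snode --list-hosts` is the authoritative way to see which addresses a group such as `[k8snode]` resolves to. Scripts that only need the flat list can use the simplified awk sketch below. It assumes a plain INI inventory like the one above, without `:children` groups, host ranges or inline variables; `inventory_group` is a hypothetical name:

```shell
#!/usr/bin/env bash
# inventory_group FILE GROUP  (hypothetical helper)
# Prints the hosts listed under [GROUP] in a simple INI-style inventory.
inventory_group() {
  local file=$1 group=$2
  awk -v g="[$group]" '
    /^[[:space:]]*(#|$)/ { next }          # skip comments and blank lines
    /^\[/ { in_group = ($1 == g); next }   # section header: are we in GROUP?
    in_group { print $1 }                  # first field is the host
  ' "$file"
}
```

For example, `inventory_group /etc/ansible/hosts k8snode` should print 192.168.2.111 and 192.168.2.112 for the inventory above.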

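# Test

[root@ansible ansible]# ansible all -m shell -a "ip add"

Deploy the bastion host

Install JumpServer in two quick steps:

1. Prepare a 64-bit Linux host with at least 2 CPU cores and 4 GB of RAM and with Internet access;

2. As root, run the following command to install JumpServer in one step.

curl -sSL https://resource.fit2cloud.com/jumpserver/jumpserver/releases/latest/download/quick_start.sh | bash

Deploy the firewall server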

# Shut down the VM and add a second NIC (ens37)

# Write a script that implements SNAT and DNAT

[root@firewalld ~]# cat snat_dnat.sh

#!/bin/bash

# open route

echo 1 >/proc/sys/net/ipv4/ip_forward

# stop firewall

systemctl stop firewalld

systemctl disable firewalld

# clear iptables rule

iptables -F

iptables -t nat -F

# enable snat

iptables -t nat -A POSTROUTING -s 192.168.2.0/24 -o ens33 -j MASQUERADE

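# Masquerade (rewrite) the source address of all traffic from the internal 192.168.2.0/24 network to the public IP of ens33. The advantage of MASQUERADE is that you don't need to know ens33's address: whatever IP the interface currently has is the one used.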

# enable dnat

iptables -t nat -A PREROUTING -d 192.168.0.169 -i ens33 -p tcp --dport 2233 -j DNAT --to-destination 192.168.2.104:22

# open web 80

iptables -t nat -A PREROUTING -d 192.168.0.169 -i ens33 -p tcp --dport 80 -j DNAT --to-destination 192.168.2.104:80
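When more ports need forwarding, printing the rules before applying them makes mistakes easier to spot. The dry-run sketch below generates the same form of DNAT rule as snat_dnat.sh from `public_port:inside_ip:inside_port` mappings. `dnat_rules`, `PUBLIC_IP` and `IFACE` are hypothetical names; the variables default to the 192.168.0.169 / ens33 values used above:

```shell
#!/usr/bin/env bash
# dnat_rules MAPPING...  (hypothetical helper, dry run only)
# Each MAPPING is "public_port:inside_ip:inside_port". Prints one iptables
# DNAT command per mapping instead of executing it.
dnat_rules() {
  local public_ip=${PUBLIC_IP:-192.168.0.169} iface=${IFACE:-ens33}
  local map pport dest
  for map in "$@"; do
    pport=${map%%:*}   # text before the first colon
    dest=${map#*:}     # the remaining "inside_ip:inside_port"
    echo "iptables -t nat -A PREROUTING -d $public_ip -i $iface -p tcp --dport $pport -j DNAT --to-destination $dest"
  done
}

# Reproduces the two rules in snat_dnat.sh
dnat_rules 2233:192.168.2.104:22 80:192.168.2.104:80
```

After reviewing the output, apply it as root with `dnat_rules 2233:192.168.2.104:22 80:192.168.2.104:80 | bash`.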

# Run on the web server

[root@k8smaster ~]# cat open_app.sh

#!/bin/bash

# open ssh

iptables -t filter -A INPUT -p tcp --dport 22 -j ACCEPT

# open dns

iptables -t filter -A INPUT -p udp --dport 53 -s 192.168.2.0/24 -j ACCEPT

# open dhcp

iptables -t filter -A INPUT -p udp --dport 67 -j ACCEPT

# open http/https

iptables -t filter -A INPUT -p tcp --dport 80 -j ACCEPT

iptables -t filter -A INPUT -p tcp --dport 443 -j ACCEPT

# open mysql

iptables -t filter -A INPUT -p tcp --dport 3306 -j ACCEPT

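# allow loopback traffic and replies to connections this host opened; without these two rules the DROP policy below also blocks DNS replies, yum downloads, etc. (on a k8s node, also open the Kubernetes ports such as 6443 and 10250)

iptables -t filter -A INPUT -i lo -j ACCEPT

iptables -t filter -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT

# default policy DROP

iptables -t filter -P INPUT DROP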

# drop icmp request

iptables -t filter -A INPUT -p icmp --icmp-type 8 -j DROP

III. Deploy an NFS server that provides data to the whole web cluster; every web pod accesses it through a PV, a PVC and a volume mount.

# 1. Set up the NFS server

[root@nfs ~]# yum install nfs-utils -y

# Install nfs-utils on every node of the k8s cluster too, because the nodes need NFS client support to mount NFS-backed volumes

[root@k8smaster ~]# yum install nfs-utils -y

[root@k8smaster ~]# service nfs restart

Redirecting to /bin/systemctl restart nfs.service

[root@k8smaster ~]# ps aux |grep nfs

root 87368 0.0 0.0 0 0 ? S< 16:49 0:00 [nfsd4_callbacks]

root 87374 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 87375 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 87376 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 87377 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 87378 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 87379 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 87380 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 87381 0.0 0.0 0 0 ? S 16:49 0:00 [nfsd]

root 96648 0.0 0.0 112824 988 pts/0 S+ 17:02 0:00 grep --color=auto nfs

# 2. Configure the shared directory

[root@nfs ~]# vim /etc/exports

[root@nfs ~]# cat /etc/exports

/web 192.168.2.0/24(rw,no_root_squash,sync)

# 3. Create the shared directory and index.html

[root@nfs ~]# mkdir /web

[root@nfs ~]# cd /web

[root@nfs web]# echo "welcome to changsha" >index.html

[root@nfs web]# ls

index.html

[root@nfs web]# ll -d /web

drwxr-xr-x. 2 root root 24 Jun 18 16:46 /web

# 4. Re-export the shared directories

[root@nfs ~]# exportfs -r # re-export all shared directories

[root@nfs ~]# exportfs -v # list the exported directories

/web 192.168.2.0/24(sync,wdelay,hide,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)

# 5. Restart NFS and enable it at boot

[root@nfs web]# systemctl restart nfs && systemctl enable nfs

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

# 6. On any node of the k8s cluster, test whether the NFS share can be mounted

[root@k8snode1 ~]# mkdir /node1_nfs

[root@k8snode1 ~]# mount 192.168.2.121:/web /node1_nfs

You have new mail in /var/spool/mail/root

[root@k8snode1 ~]# df -Th|grep nfs

192.168.2.121:/web nfs4 17G 1.5G 16G 9% /node1_nfs

# 7. Unmount

[root@k8snode1 ~]# umount /node1_nfs

# 8. Create a PV backed by the NFS share

[root@k8smaster pv]# vim nfs-pv.yml

[root@k8smaster pv]# cat nfs-pv.yml

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv-web

labels:

type: pv-web

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteMany

storageClassName: nfs # class name; the PVC must request the same one

nfs:

path: "/web" # directory exported by the NFS server

server: 192.168.2.121 # IP address of the NFS server

readOnly: false # mount read-write

[root@k8smaster pv]# kubectl apply -f nfs-pv.yml

persistentvolume/pv-web created

[root@k8smaster pv]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv-web 10Gi RWX Retain Available nfs 5s

# 9. Create a PVC that uses the PV

[root@k8smaster pv]# vim nfs-pvc.yml

[root@k8smaster pv]# cat nfs-pvc.yml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: pvc-web

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 1Gi

storageClassName: nfs # bind to a PV of the nfs class


[root@k8smaster pv]# kubectl apply -f nfs-pvc.yml

persistentvolumeclaim/pvc-web created

[root@k8smaster pv]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-web Bound pv-web 10Gi RWX nfs 6s
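pvc-web asked for 1Gi but reports 10Gi because a claim binds a whole PV. The bash sketch below restates, in simplified form, three conditions the PV controller checks before binding:

- the `storageClassName` values are equal;
- the PV offers the requested access mode;
- the PV's capacity is at least the request.

Sizes are whole Gi numbers, and label selectors, `volumeMode` and unit parsing are deliberately left out. `pv_matches_pvc` is a hypothetical name:

```shell
#!/usr/bin/env bash
# pv_matches_pvc PV_CLASS PV_GI PV_MODES PVC_CLASS PVC_GI PVC_MODE
# (hypothetical helper) PV_MODES is a comma-separated list such as
# "ReadWriteOnce,ReadWriteMany". Returns 0 if the PVC could bind the PV.
pv_matches_pvc() {
  local pv_class=$1 pv_gi=$2 pv_modes=$3 pvc_class=$4 pvc_gi=$5 pvc_mode=$6
  # 1. storage classes must be identical
  [[ $pv_class == "$pvc_class" ]] || return 1
  # 2. the requested access mode must be one the PV offers
  [[ ",$pv_modes," == *",$pvc_mode,"* ]] || return 1
  # 3. the PV must be at least as large as the request
  (( pv_gi >= pvc_gi ))
}

# pv-web (nfs, 10Gi, RWX) vs pvc-web (nfs, 1Gi, RWX)
pv_matches_pvc nfs 10 ReadWriteMany nfs 1 ReadWriteMany && echo "pvc-web can bind pv-web"
```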

# 10. Create pods that use the PVC

[root@k8smaster pv]# vim nginx-deployment.yaml

[root@k8smaster pv]# cat nginx-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

labels:

app: nginx

spec:

replicas: 3

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

volumes:

- name: sc-pv-storage-nfs

persistentVolumeClaim:

claimName: pvc-web

containers:

- name: sc-pv-container-nfs

image: nginx

imagePullPolicy: IfNotPresent

ports:

- containerPort: 80

name: "http-server"

volumeMounts:

- mountPath: "/usr/share/nginx/html"

name: sc-pv-storage-nfs

[root@k8smaster pv]# kubectl apply -f nginx-deployment.yaml

deployment.apps/nginx-deployment created

[root@k8smaster pv]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-76855d4d79-2q4vh 1/1 Running 0 42s 10.244.185.194 k8snode2

nginx-deployment-76855d4d79-mvgq7 1/1 Running 0 42s 10.244.185.195 k8snode2

nginx-deployment-76855d4d79-zm8v4 1/1 Running 0 42s 10.244.249.3 k8snode1

# 11. Test access

[root@k8smaster pv]# curl 10.244.185.194

welcome to changsha

[root@k8smaster pv]# curl 10.244.185.195

welcome to changsha

[root@k8smaster pv]# curl 10.244.249.3

welcome to changsha

[root@k8snode1 ~]# curl 10.244.185.194

welcome to changsha

[root@k8snode1 ~]# curl 10.244.185.195

welcome to changsha

[root@k8snode1 ~]# curl 10.244.249.3

welcome to changsha

[root@k8snode2 ~]# curl 10.244.185.194

welcome to changsha

[root@k8snode2 ~]# curl 10.244.185.195

welcome to changsha

[root@k8snode2 ~]# curl 10.244.249.3

welcome to changsha

# 12. Modify the content

[root@nfs web]# echo "hello,world" >> index.html

[root@nfs web]# cat index.html

welcome to changsha

hello,world

# 13. Access it again

[root@k8snode1 ~]# curl 10.244.249.3

welcome to changsha

hello,world

IV. Build a CI/CD environment with GitLab, Jenkins and Harbor to run pipeline work such as code release, image builds and data backup.

1. Deploy GitLab

# Deploy GitLab

https://gitlab.cn/install/

[root@localhost ~]# hostnamectl set-hostname gitlab

[root@localhost ~]# su - root


Last login: Sun Jun 18 18:28:08 CST 2023 from 192.168.2.240 on pts/0

[root@gitlab ~]# cd /etc/sysconfig/network-scripts/

[root@gitlab network-scripts]# vim ifcfg-ens33

[root@gitlab network-scripts]# service network restart

Restarting network (via systemctl): [ OK ]

[root@gitlab network-scripts]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@gitlab network-scripts]# service firewalld stop && systemctl disable firewalld

Redirecting to /bin/systemctl stop firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@gitlab network-scripts]# reboot

[root@gitlab ~]# getenforce

Disabled

# 1. Install and configure the required dependencies

yum install -y curl policycoreutils-python openssh-server perl

# 2. Configure the JiHu GitLab package repository mirror

[root@gitlab ~]# curl -fsSL https://packages.gitlab.cn/repository/raw/scripts/setup.sh | /bin/bash

==> Detected OS centos

==> Add yum repo file to /etc/yum.repos.d/gitlab-jh.repo

[gitlab-jh]

name=JiHu GitLab

baseurl=https://packages.gitlab.cn/repository/el/$releasever/

gpgcheck=0

gpgkey=https://packages.gitlab.cn/repository/raw/gpg/public.gpg.key

priority=1

enabled=1

==> Generate yum cache for gitlab-jh

==> Successfully added gitlab-jh repo. To install JiHu GitLab, run "sudo yum/dnf install gitlab-jh".

[root@gitlab ~]# yum install gitlab-jh -y

Thank you for installing JiHu GitLab!

GitLab was unable to detect a valid hostname for your instance.

Please configure a URL for your JiHu GitLab instance by setting `external_url`

configuration in /etc/gitlab/gitlab.rb file.

Then, you can start your JiHu GitLab instance by running the following command:

sudo gitlab-ctl reconfigure

For a comprehensive list of configuration options please see the Omnibus GitLab readme

https://jihulab.com/gitlab-cn/omnibus-gitlab/-/blob/main-jh/README.md

Help us improve the installation experience, let us know how we did with a 1 minute survey:

https://wj.qq.com/s2/10068464/dc66

[root@gitlab ~]# vim /etc/gitlab/gitlab.rb

external_url 'http://myweb.first.com'

[root@gitlab ~]# gitlab-ctl reconfigure

Notes:

Default admin account has been configured with following details:

Username: root

Password: You didn't opt-in to print initial root password to STDOUT.

Password stored to /etc/gitlab/initial_root_password. This file will be cleaned up in first reconfigure run after 24 hours.

NOTE: Because these credentials might be present in your log files in plain text, it is highly recommended to reset the password following https://docs.gitlab.com/ee/security/reset_user_password.html#reset-your-root-password.

gitlab Reconfigured!

# View the initial root password

[root@gitlab ~]# cat /etc/gitlab/initial_root_password

# WARNING: This value is valid only in the following conditions

# 1. If provided manually (either via `GITLAB_ROOT_PASSWORD` environment variable or via `gitlab_rails['initial_root_password']` setting in `gitlab.rb`, it was provided before database was seeded for the first time (usually, the first reconfigure run).

# 2. Password hasn't been changed manually, either via UI or via command line.

#

# If the password shown here doesn't work, you must reset the admin password following https://docs.gitlab.com/ee/security/reset_user_password.html#reset-your-root-password.

Password: Al5rgYomhXDz5kNfDl3y8qunrSX334aZZxX5vONJ05s=

# NOTE: This file will be automatically deleted in the first reconfigure run after 24 hours.
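A script that handles the first login, for example to change the password right away, needs only the value after `Password:`. Below is a one-line sed sketch; `gitlab_initial_password` is a hypothetical name. Keep in mind the file only exists until the first reconfigure run after 24 hours:

```shell
#!/usr/bin/env bash
# gitlab_initial_password FILE  (hypothetical helper)
# Prints the value of the "Password: ..." line from GitLab's
# initial_root_password file; comment lines starting with "#" are ignored.
gitlab_initial_password() {
  sed -n 's/^Password: //p' "$1"
}
```

On the GitLab host, run it as root: `gitlab_initial_password /etc/gitlab/initial_root_password`.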

# After logging in you can switch the UI language to Chinese

# (under the user's Profile > Preferences)

# Change the password

[root@gitlab ~]# gitlab-rake gitlab:env:info

System information

System:

Proxy: no

Current User: git

Using RVM: no

Ruby Version: 3.0.6p216

Gem Version: 3.4.13

Bundler Version:2.4.13

Rake Version: 13.0.6

Redis Version: 6.2.11

Sidekiq Version:6.5.7

Go Version: unknown

GitLab information

Version: 16.0.4-jh

Revision: c2ed99db36f

Directory: /opt/gitlab/embedded/service/gitlab-rails

DB Adapter: PostgreSQL

DB Version: 13.11

URL: http://myweb.first.com

HTTP Clone URL: http://myweb.first.com/some-group/some-project.git

SSH Clone URL: git@myweb.first.com:some-group/some-project.git

Elasticsearch: no

Geo: no

Using LDAP: no

Using Omniauth: yes

Omniauth Providers:

GitLab Shell

Version: 14.20.0

Repository storages:

- default: unix:/var/opt/gitlab/gitaly/gitaly.socket

GitLab Shell path: /opt/gitlab/embedded/service/gitlab-shell

2. Deploy Jenkins

# Deploy Jenkins into k8s

# 1. Install git

[root@k8smaster jenkins]# yum install git -y

# 2. Download the YAML manifests

[root@k8smaster jenkins]# git clone https://github.com/scriptcamp/kubernetes-jenkins

Cloning into 'kubernetes-jenkins'...

remote: Enumerating objects: 16, done.

remote: Counting objects: 100% (7/7), done.

remote: Compressing objects: 100% (7/7), done.

remote: Total 16 (delta 1), reused 0 (delta 0), pack-reused 9

Unpacking objects: 100% (16/16), done.

[root@k8smaster jenkins]# ls

kubernetes-jenkins

[root@k8smaster jenkins]# cd kubernetes-jenkins/

[root@k8smaster kubernetes-jenkins]# ls

deployment.yaml namespace.yaml README.md serviceAccount.yaml service.yaml volume.yaml

# 3. Create the namespace

[root@k8smaster kubernetes-jenkins]# cat namespace.yaml

apiVersion: v1

kind: Namespace

metadata:

name: devops-tools

[root@k8smaster kubernetes-jenkins]# kubectl apply -f namespace.yaml

namespace/devops-tools created

[root@k8smaster kubernetes-jenkins]# kubectl get ns

NAME STATUS AGE

default Active 22h

devops-tools Active 19s

ingress-nginx Active 139m

kube-node-lease Active 22h

kube-public Active 22h

kube-system Active 22h

# 4. Create the service account, the cluster role and the binding

[root@k8smaster kubernetes-jenkins]# cat serviceAccount.yaml

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: jenkins-admin

rules:

- apiGroups: [""]

resources: ["*"]

verbs: ["*"]

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: jenkins-admin

namespace: devops-tools

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: jenkins-admin

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: jenkins-admin

subjects:

- kind: ServiceAccount

name: jenkins-admin

namespace: devops-tools

[root@k8smaster kubernetes-jenkins]# kubectl apply -f serviceAccount.yaml

clusterrole.rbac.authorization.k8s.io/jenkins-admin created

serviceaccount/jenkins-admin created

clusterrolebinding.rbac.authorization.k8s.io/jenkins-admin created

# 5. Create a volume to store Jenkins data

[root@k8smaster kubernetes-jenkins]# cat volume.yaml

kind: StorageClass

apiVersion: storage.k8s.io/v1

metadata:

name: local-storage

provisioner: kubernetes.io/no-provisioner

volumeBindingMode: WaitForFirstConsumer

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: jenkins-pv-volume

labels:

type: local

spec:

storageClassName: local-storage

claimRef:

name: jenkins-pv-claim

namespace: devops-tools

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

local:

path: /mnt

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8snode1 # change this to the name of a node in your k8s cluster

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: jenkins-pv-claim

namespace: devops-tools

spec:

storageClassName: local-storage

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 3Gi

[root@k8smaster kubernetes-jenkins]# kubectl apply -f volume.yaml

storageclass.storage.k8s.io/local-storage created

persistentvolume/jenkins-pv-volume created

persistentvolumeclaim/jenkins-pv-claim created

[root@k8smaster kubernetes-jenkins]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

jenkins-pv-volume 10Gi RWO Retain Bound devops-tools/jenkins-pv-claim local-storage 33s

pv-web 10Gi RWX Retain Bound default/pvc-web nfs 21h

[root@k8smaster kubernetes-jenkins]# kubectl describe pv jenkins-pv-volume

Name: jenkins-pv-volume

Labels: type=local

Annotations:

Finalizers: [kubernetes.io/pv-protection]

StorageClass: local-storage

Status: Bound

Claim: devops-tools/jenkins-pv-claim

Reclaim Policy: Retain

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 10Gi

Node Affinity:

Required Terms:

Term 0: kubernetes.io/hostname in [k8snode1]

Message:

Source:

Type: LocalVolume (a persistent volume backed by local storage on a node)

Path: /mnt

Events:

# 6. Deploy Jenkins

[root@k8smaster kubernetes-jenkins]# cat deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: jenkins

namespace: devops-tools

spec:

replicas: 1

selector:

matchLabels:

app: jenkins-server

template:

metadata:

labels:

app: jenkins-server

spec:

securityContext:

fsGroup: 1000

runAsUser: 1000

serviceAccountName: jenkins-admin

containers:

- name: jenkins

image: jenkins/jenkins:lts

imagePullPolicy: IfNotPresent

resources:

limits:

memory: "2Gi"

cpu: "1000m"

requests:

memory: "500Mi"

cpu: "500m"

ports:

- name: httpport

containerPort: 8080

- name: jnlpport

containerPort: 50000

livenessProbe:

httpGet:

path: "/login"

port: 8080

initialDelaySeconds: 90

periodSeconds: 10

timeoutSeconds: 5

failureThreshold: 5

readinessProbe:

httpGet:

path: "/login"

port: 8080

initialDelaySeconds: 60

periodSeconds: 10

timeoutSeconds: 5

failureThreshold: 3

volumeMounts:

- name: jenkins-data

mountPath: /var/jenkins_home

volumes:

- name: jenkins-data

persistentVolumeClaim:

claimName: jenkins-pv-claim

[root@k8smaster kubernetes-jenkins]# kubectl apply -f deployment.yaml

deployment.apps/jenkins created

[root@k8smaster kubernetes-jenkins]# kubectl get deploy -n devops-tools

NAME READY UP-TO-DATE AVAILABLE AGE

jenkins 1/1 1 1 5m36s

[root@k8smaster kubernetes-jenkins]# kubectl get pod -n devops-tools

NAME READY STATUS RESTARTS AGE

jenkins-7fdc8dd5fd-bg66q 1/1 Running 0 19s

# 7. Expose the Jenkins pod with a Service

[root@k8smaster kubernetes-jenkins]# cat service.yaml

apiVersion: v1

kind: Service

metadata:

name: jenkins-service

namespace: devops-tools

annotations:

prometheus.io/scrape: 'true'

prometheus.io/path: /

prometheus.io/port: '8080'

spec:

selector:

app: jenkins-server

type: NodePort

ports:

- port: 8080

targetPort: 8080

nodePort: 32000

[root@k8smaster kubernetes-jenkins]# kubectl apply -f service.yaml

service/jenkins-service created

[root@k8smaster kubernetes-jenkins]# kubectl get svc -n devops-tools

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

jenkins-service NodePort 10.104.76.252 8080:32000/TCP 24s
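`nodePort: 32000` is accepted because it falls inside the API server's default NodePort range, 30000-32767, which is set by `--service-node-port-range`. Below is a small bash sketch to check a port against that range before applying a Service. `valid_node_port` is a hypothetical name, and clusters that changed the flag can pass their own bounds:

```shell
#!/usr/bin/env bash
# valid_node_port PORT [LOW] [HIGH]  (hypothetical helper)
# Succeeds if PORT is a number inside LOW-HIGH (default 30000-32767).
valid_node_port() {
  local port=$1 lo=${2:-30000} hi=${3:-32767}
  [[ $port =~ ^[0-9]+$ ]] && (( port >= lo && port <= hi ))
}

valid_node_port 32000 && echo "32000 is inside the default NodePort range"
```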

# 8. Open Jenkins from a Windows machine at the node's IP address and the NodePort

http://192.168.2.104:32000/login?from=%2F

# 9. Exec into the pod to read the initial admin password

[root@k8smaster kubernetes-jenkins]# kubectl exec -it jenkins-7fdc8dd5fd-bg66q -n devops-tools -- bash

bash-5.1$ cat /var/jenkins_home/secrets/initialAdminPassword

b0232e2dad164f89ad2221e4c46b0d46

# Change the password

[root@k8smaster kubernetes-jenkins]# kubectl get pod -n devops-tools

NAME READY STATUS RESTARTS AGE

jenkins-7fdc8dd5fd-5nn7m 1/1 Running 0 91s

3. Deploy Harbor

# Prerequisite: Docker and Docker Compose are installed

# 1. Configure the Alibaba Cloud Docker CE repository

yum install -y yum-utils

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 2. Install Docker

yum install docker-ce-20.10.6 -y

# Start Docker and enable it at boot

systemctl start docker && systemctl enable docker.service

# 3. Check the Docker and Docker Compose versions

[root@harbor ~]# docker version

Client: Docker Engine - Community

Version: 24.0.2

API version: 1.41 (downgraded from 1.43)

Go version: go1.20.4

Git commit: cb74dfc

Built: Thu May 25 21:55:21 2023

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 20.10.6

API version: 1.41 (minimum version 1.12)

Go version: go1.13.15

Git commit: 8728dd2

Built: Fri Apr 9 22:43:57 2021

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.21

GitCommit: 3dce8eb055cbb6872793272b4f20ed16117344f8

runc:

Version: 1.1.7

GitCommit: v1.1.7-0-g860f061

docker-init:

Version: 0.19.0

GitCommit: de40ad0

[root@harbor ~]# docker compose version

Docker Compose version v2.18.1

# 4. Install the standalone docker-compose binary

[root@harbor ~]# ls

anaconda-ks.cfg docker-compose-linux-x86_64 harbor

[root@harbor ~]# chmod +x docker-compose-linux-x86_64

[root@harbor ~]# mv docker-compose-linux-x86_64 /usr/local/sbin/docker-compose

# 5. Install Harbor: download the offline installer package from the Harbor website or GitHub

[root@harbor harbor]# ls

harbor-offline-installer-v2.4.1.tgz

# 6. Extract the package

[root@harbor harbor]# tar xf harbor-offline-installer-v2.4.1.tgz

[root@harbor harbor]# ls

harbor harbor-offline-installer-v2.4.1.tgz

[root@harbor harbor]# cd harbor

[root@harbor harbor]# ls

common.sh harbor.v2.4.1.tar.gz harbor.yml.tmpl install.sh LICENSE prepare

[root@harbor harbor]# pwd

/root/harbor/harbor

# 7. Create harbor.yml from the template (cp harbor.yml.tmpl harbor.yml) and edit it

[root@harbor harbor]# cat harbor.yml

# Configuration file of Harbor

# The IP address or hostname to access admin UI and registry service.
# DO NOT use localhost or 127.0.0.1, because Harbor needs to be accessed by external clients.
hostname: 192.168.2.106   # set to this host's IP address

# http related config
http:
  # port for http, default is 80. If https enabled, this port will redirect to https port
  port: 5000   # changed to a non-default port

# The whole https section can be commented out (HTTP only)
# https related config
#https:
  # https port for harbor, default is 443
  #port: 443
  # The path of cert and key files for nginx
  #certificate: /your/certificate/path
  #private_key: /your/private/key/path

# # Uncomment following will enable tls communication between all harbor components
# internal_tls:
#   # set enabled to true means internal tls is enabled
#   enabled: true
#   # put your cert and key files on dir
#   dir: /etc/harbor/tls/internal

# Uncomment external_url if you want to enable external proxy
# And when it enabled the hostname will no longer used
# external_url: https://reg.mydomain.com:8433

# The initial password of Harbor admin
# It only works in first time to install harbor
# Remember Change the admin password from UI after launching Harbor.
harbor_admin_password: Harbor12345   # admin login password

# Harbor DB configuration
database:
  # The password for the root user of Harbor DB. Change this before any production use.
  password: root123
  # The maximum number of connections in the idle connection pool. If it <=0, no idle connections are retained.
  max_idle_conns: 100
  # The maximum number of open connections to the database. If it <= 0, then there is no limit on the number of open connections.
  # Note: the default number of connections is 1024 for postgres of harbor.
  max_open_conns: 900

# The default data volume
data_volume: /data

# 8. Run the install script

[root@harbor harbor]# ./install.sh

[Step 0]: checking if docker is installed ...

Note: docker version: 24.0.2

[Step 1]: checking docker-compose is installed ...

✖ Need to install docker-compose(1.18.0+) by yourself first and run this script again.

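# This check only passes once the standalone docker-compose binary (step 4) is installed; install it, then run the script again

[root@harbor harbor]# ./install.sh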

[+] Running 10/10

⠿ Network harbor_harbor Created 0.7s

⠿ Container harbor-log Started 1.6s

⠿ Container registry Started 5.2s

⠿ Container harbor-db Started 4.9s

⠿ Container harbor-portal Started 5.1s

⠿ Container registryctl Started 4.8s

⠿ Container redis Started 3.9s

⠿ Container harbor-core Started 6.5s

⠿ Container harbor-jobservice Started 9.0s

⠿ Container nginx Started 9.1s

✔ ----Harbor has been installed and started successfully.----

# 9. Start Harbor automatically at boot

[root@harbor harbor]# vim /etc/rc.local

[root@harbor harbor]# cat /etc/rc.local

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

/usr/local/sbin/docker-compose -f /root/harbor/harbor/docker-compose.yml up -d

# 10. Make rc.local executable

[root@harbor harbor]# chmod +x /etc/rc.local /etc/rc.d/rc.local

# 11. Log in to the web UI

http://192.168.2.106:5000/

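# Username: admin

# Password: Harbor12345

# Create a new project in the web UI (the examples below push to a project named test)

# Test: tag the nginx image and push it to Harbor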

[root@harbor harbor]# docker image ls | grep nginx

nginx latest 605c77e624dd 17 months ago 141MB

goharbor/nginx-photon v2.4.1 78aad8c8ef41 18 months ago 45.7MB

[root@harbor harbor]# docker tag nginx:latest 192.168.2.106:5000/test/nginx1:v1

[root@harbor harbor]# docker image ls | grep nginx

192.168.2.106:5000/test/nginx1 v1 605c77e624dd 17 months ago 141MB

nginx latest 605c77e624dd 17 months ago 141MB

goharbor/nginx-photon v2.4.1 78aad8c8ef41 18 months ago 45.7MB
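The tag created above tells docker push where to send the image. The part before the first "/" (192.168.2.106:5000) is the Harbor host and port, "test" is the Harbor project, "nginx1" is the repository, and "v1" is the tag. The Go sketch below splits a reference into those parts. It is a simplified illustration, not Docker's own parser: it assumes the first component is always a registry host (Docker only treats it as one when it contains a "." or ":" or equals "localhost"), and it ignores digests. The names imageRef and parseImageRef are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// imageRef holds the parts of a reference such as 192.168.2.106:5000/test/nginx1:v1.
type imageRef struct {
	Registry   string // host[:port] of the registry, e.g. 192.168.2.106:5000
	Repository string // <project>/<repository> inside Harbor, e.g. test/nginx1
	Tag        string // part after the last ':', "latest" when omitted
}

// parseImageRef splits host[:port]/project/repo[:tag].
// Simplified sketch: the first path component is always treated as the
// registry host, and digests (name@sha256:...) are not handled.
func parseImageRef(ref string) (imageRef, error) {
	slash := strings.Index(ref, "/")
	if slash <= 0 || slash == len(ref)-1 {
		return imageRef{}, fmt.Errorf("expected host[:port]/repository[:tag], got %q", ref)
	}
	r := imageRef{Registry: ref[:slash], Tag: "latest"}
	rest := ref[slash+1:]
	// Repository paths cannot contain ':', so a ':' left here starts the tag.
	if colon := strings.LastIndex(rest, ":"); colon >= 0 {
		r.Repository, r.Tag = rest[:colon], rest[colon+1:]
	} else {
		r.Repository = rest
	}
	return r, nil
}

func main() {
	r, err := parseImageRef("192.168.2.106:5000/test/nginx1:v1")
	if err != nil {
		panic(err)
	}
	fmt.Println(r.Registry, r.Repository, r.Tag) // 192.168.2.106:5000 test/nginx1 v1
}
```

The same split explains why pushing fails until the "test" project exists in Harbor: the project is part of the repository path.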

[root@harbor harbor]# docker push 192.168.2.106:5000/test/nginx1:v1

The push refers to repository [192.168.2.106:5000/test/nginx1]

Get https://192.168.2.106:5000/v2/: http: server gave HTTP response to HTTPS client

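# Docker talks to registries over HTTPS by default, but this Harbor only serves HTTP, so add it to insecure-registries

[root@harbor harbor]# vim /etc/docker/daemon.json

{

"insecure-registries":["192.168.2.106:5000"]

}

# Restart Docker so the new setting takes effect

systemctl daemon-reload && systemctl restart docker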

[root@harbor harbor]# docker login 192.168.2.106:5000

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@harbor harbor]# docker push 192.168.2.106:5000/test/nginx1:v1

The push refers to repository [192.168.2.106:5000/test/nginx1]

d874fd2bc83b: Pushed

32ce5f6a5106: Pushed

f1db227348d0: Pushed

b8d6e692a25e: Pushed

e379e8aedd4d: Pushed

2edcec3590a4: Pushed

v1: digest: sha256:ee89b00528ff4f02f2405e4ee221743ebc3f8e8dd0bfd5c4c20a2fa2aaa7ede3 size: 1570

[root@harbor harbor]# cat /etc/docker/daemon.json

{

"insecure-registries":["192.168.2.106:5000"]

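}

V. Package the self-developed Go web API system as an image and deploy it to k8s as a web application; use HPA to scale horizontally when CPU utilization reaches 50%, with a minimum of 20 and a maximum of 40 business pods.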

# Log in to Harbor from every node of the k8s cluster so that images can be pulled from it.

[root@k8snode2 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors":["https://rsbud4vc.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com"],

"insecure-registries":["192.168.2.106:5000"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

# Reload the configuration and restart Docker

systemctl daemon-reload && systemctl restart docker

# Log in to Harbor

[root@k8smaster mysql]# docker login 192.168.2.106:5000

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@k8snode1 ~]# docker login 192.168.2.106:5000

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@k8snode2 ~]# docker login 192.168.2.106:5000

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

# Test: pull the nginx image from Harbor

[root@k8snode1 ~]# docker pull 192.168.2.106:5000/test/nginx1:v1

[root@k8snode1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mysql 5.7.42 2be84dd575ee 5 days ago 569MB

nginx latest 605c77e624dd 17 months ago 141MB

192.168.2.106:5000/test/nginx1 v1 605c77e624dd 17 months ago 141MB

# Build the image

[root@harbor ~]# cd go

[root@harbor go]# ls

scweb Dockerfile

[root@harbor go]# cat Dockerfile

FROM centos:7

WORKDIR /go

COPY . /go

RUN ls /go && pwd

ENTRYPOINT ["/go/scweb"]

[root@harbor go]# docker build -t scweb:1.1 .

[root@harbor go]# docker image ls | grep scweb

scweb 1.1 f845e97e9dfd 4 hours ago 214MB

[root@harbor go]# docker tag scweb:1.1 192.168.2.106:5000/test/web:v2

[root@harbor go]# docker image ls | grep web

192.168.2.106:5000/test/web v2 00900ace4935 4 minutes ago 214MB

scweb 1.1 00900ace4935 4 minutes ago 214MB

[root@harbor go]# docker push 192.168.2.106:5000/test/web:v2

The push refers to repository [192.168.2.106:5000/test/web]

3e252407b5c2: Pushed

193a27e04097: Pushed

b13a87e7576f: Pushed

174f56854903: Pushed

v1: digest: sha256:a723c83407c49e6fcf9aa67a041a4b6241cf9856170c1703014a61dec3726b29 size: 1153

[root@k8snode1 ~]# docker login 192.168.2.106:5000

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@k8snode1 ~]# docker pull 192.168.2.106:5000/test/web:v2

v1: Pulling from test/web

2d473b07cdd5: Pull complete

bc5e56dd1476: Pull complete

694440c745ce: Pull complete

78694d1cffbb: Pull complete

Digest: sha256:a723c83407c49e6fcf9aa67a041a4b6241cf9856170c1703014a61dec3726b29

Status: Downloaded newer image for 192.168.2.106:5000/test/web:v2

192.168.2.106:5000/test/web:v1

[root@k8snode1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.2.106:5000/test/web v2 f845e97e9dfd 4 hours ago 214MB

[root@k8snode2 ~]# docker login 192.168.2.106:5000

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@k8snode2 ~]# docker pull 192.168.2.106:5000/test/web:v2

v1: Pulling from test/web

2d473b07cdd5: Pull complete

bc5e56dd1476: Pull complete

694440c745ce: Pull complete

78694d1cffbb: Pull complete

Digest: sha256:a723c83407c49e6fcf9aa67a041a4b6241cf9856170c1703014a61dec3726b29

Status: Downloaded newer image for 192.168.2.106:5000/test/web:v2

192.168.2.106:5000/test/web:v1

[root@k8snode2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

192.168.2.106:5000/test/web v2 f845e97e9dfd 4 hours ago 214MB

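# Use HPA to scale horizontally when CPU utilization reaches 50%, with a minimum of 1 and a maximum of 10 pods

# A HorizontalPodAutoscaler (HPA) automatically updates a workload resource (such as a Deployment) so that the workload scales to match demand.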

https://kubernetes.io/zh-cn/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/
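The HPA documentation linked above gives the core scaling rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). CPU utilization is measured against the container's CPU request (100m in my-web.yaml below). No scaling happens while the ratio stays within a 10% tolerance. The Go sketch below applies that rule and clamps the result to the --min/--max bounds.

This is a simplification. The real controller also handles missing metrics, pods that are not ready yet, and a default 5-minute scale-down stabilization window, and none of that is modelled here. The 90m usage figure in main is made up, and the function names are hypothetical.

```go
package main

import (
	"fmt"
	"math"
	"strconv"
	"strings"
)

// milliCPU parses a CPU quantity such as "100m" or "2" into millicores.
// Only these two forms are handled (no "1.5" or exponent forms).
func milliCPU(q string) (int64, error) {
	if strings.HasSuffix(q, "m") {
		return strconv.ParseInt(strings.TrimSuffix(q, "m"), 10, 64)
	}
	cores, err := strconv.ParseInt(q, 10, 64)
	if err != nil {
		return 0, err
	}
	return cores * 1000, nil
}

// utilization is CPU usage as a percentage of the pod's CPU request,
// which is what --cpu-percent=50 is compared against.
func utilization(usageMilli, requestMilli int64) float64 {
	return float64(usageMilli*100) / float64(requestMilli)
}

// desiredReplicas applies desired = ceil(current * currentUtil / targetUtil),
// skips scaling inside the default 10% tolerance, and clamps to [minR, maxR].
func desiredReplicas(current int, currentUtil, targetUtil float64, minR, maxR int) int {
	ratio := currentUtil / targetUtil
	desired := current
	if math.Abs(ratio-1.0) > 0.1 {
		desired = int(math.Ceil(float64(current) * ratio))
	}
	if desired < minR {
		desired = minR
	}
	if desired > maxR {
		desired = maxR
	}
	return desired
}

func main() {
	request, err := milliCPU("100m") // requests.cpu of the myweb container
	if err != nil {
		panic(err)
	}
	// Hypothetical load: every pod uses 90m CPU, i.e. 90% of its request.
	util := utilization(90, request)
	fmt.Println(util, desiredReplicas(3, util, 50, 1, 10)) // 90 6
	// At 1% utilization, 3 pods shrink to the minimum of 1.
	fmt.Println(desiredReplicas(3, 1, 50, 1, 10)) // 1
}
```

The second call matches the later kubectl get hpa output, where 1%/50% leaves a single replica. With the heading's targets, the same rule would simply use --min=20 --max=40 as the clamp.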

# 1. Install metrics-server

# Download the components.yaml manifest

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# Replace the image with the Aliyun mirror and add the arguments below:

image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.0
imagePullPolicy: IfNotPresent
args:
# add the following two arguments
- --kubelet-insecure-tls
- --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalDNS,ExternalIP,Hostname

# The modified section of components.yaml

[root@k8smaster ~]# cat components.yaml

spec:
  containers:
  - args:
    - --kubelet-insecure-tls
    - --kubelet-preferred-address-types=InternalIP
    - --cert-dir=/tmp
    - --secure-port=4443
    - --kubelet-preferred-address-types=InternalDNS,InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port
    - --metric-resolution=15s
    image: registry.aliyuncs.com/google_containers/metrics-server:v0.6.0
    imagePullPolicy: IfNotPresent

# Apply the manifest

[root@k8smaster metrics]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# Check the result

[root@k8smaster metrics]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6949477b58-xdk88 1/1 Running 1 22h

calico-node-4knc8 1/1 Running 4 22h

calico-node-8jzrn 1/1 Running 1 22h

calico-node-9d7pt 1/1 Running 2 22h

coredns-7f89b7bc75-52c4x 1/1 Running 2 22h

coredns-7f89b7bc75-82jrx 1/1 Running 1 22h

etcd-k8smaster 1/1 Running 1 22h

kube-apiserver-k8smaster 1/1 Running 1 22h

kube-controller-manager-k8smaster 1/1 Running 1 22h

kube-proxy-8wp9c 1/1 Running 2 22h

kube-proxy-d46jp 1/1 Running 1 22h

kube-proxy-whg4f 1/1 Running 1 22h

kube-scheduler-k8smaster 1/1 Running 1 22h

metrics-server-6c75959ddf-hw7cs 1/1 Running 0 61s

# If the command below shows node CPU and memory usage, metrics-server is installed correctly

[root@k8smaster metrics]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8smaster 322m 16% 1226Mi 71%

k8snode1 215m 10% 874Mi 50%

k8snode2 190m 9% 711Mi 41%

# Make sure metrics-server is installed

# Verify it through its pod and its APIService

[root@k8smaster HPA]# kubectl get pod -n kube-system|grep metrics

metrics-server-6c75959ddf-hw7cs 1/1 Running 4 6h35m

[root@k8smaster HPA]# kubectl get apiservice |grep metrics

v1beta1.metrics.k8s.io kube-system/metrics-server True 6h35m

[root@k8smaster HPA]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8smaster 349m 17% 1160Mi 67%

k8snode1 271m 13% 1074Mi 62%

k8snode2 226m 11% 1224Mi 71%

[root@k8snode1 ~]# docker images|grep metrics

registry.aliyuncs.com/google_containers/metrics-server v0.6.0 5787924fe1d8 14 months ago 68.8MB


# Check on a node

[root@k8snode1 ~]# docker images|grep metrics

registry.aliyuncs.com/google_containers/metrics-server v0.6.0 5787924fe1d8 17 months ago 68.8MB

kubernetesui/metrics-scraper v1.0.7 7801cfc6d5c0 2 years ago 34.4MB

# 2. Start the web app from a YAML file and expose it as a Service

[root@k8smaster hpa]# cat my-web.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myweb
  name: myweb
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 192.168.2.106:5000/test/web:v2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8000
        resources:
          limits:
            cpu: 300m
          requests:
            cpu: 100m
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myweb-svc
  name: myweb-svc
spec:
  selector:
    app: myweb
  type: NodePort
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 8000
    nodePort: 30001

[root@k8smaster HPA]# kubectl apply -f my-web.yaml

deployment.apps/myweb created

service/myweb-svc created

# 3. Create the HPA

[root@k8smaster HPA]# kubectl autoscale deployment myweb --cpu-percent=50 --min=1 --max=10

horizontalpodautoscaler.autoscaling/myweb autoscaled

[root@k8smaster HPA]# kubectl get pod

NAME READY STATUS RESTARTS AGE

myweb-6dc7b4dfcb-9q85g 1/1 Running 0 9s

myweb-6dc7b4dfcb-ddq82 1/1 Running 0 9s

myweb-6dc7b4dfcb-l7sw7 1/1 Running 0 9s

[root@k8smaster HPA]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d2h

myweb-svc NodePort 10.102.83.168 <none> 8000:30001/TCP 15s

[root@k8smaster HPA]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

myweb Deployment/myweb <unknown>/50% 1 10 3 16s

# 4. Access the app

http://192.168.2.112:30001/

[root@k8smaster HPA]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

myweb Deployment/myweb 1%/50% 1 10 1 11m

[root@k8smaster HPA]# kubectl get pod

NAME READY STATUS RESTARTS AGE

myweb-6dc7b4dfcb-ddq82 1/1 Running 0 10m

# 5. Delete the HPA

[root@k8smaster HPA]# kubectl delete hpa myweb

VI. Start a MySQL pod to provide database services for the web application.

[root@k8smaster mysql]# cat mysql-deployment.yaml

# Define the MySQL Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: mysql
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: mysql:5.7.42
        name: mysql
        imagePullPolicy: IfNotPresent
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        ports:
        - containerPort: 3306
---
# Define the MySQL Service
apiVersion: v1
kind: Service
metadata:
  labels:
    app: svc-mysql
  name: svc-mysql
spec:
  selector:
    app: mysql
  type: NodePort
  ports:
  - port: 3306
    protocol: TCP
    targetPort: 3306
    nodePort: 30007

[root@k8smaster mysql]# kubectl apply -f mysql-deployment.yaml

deployment.apps/mysql created

service/svc-mysql created

[root@k8smaster mysql]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 28h

svc-mysql NodePort 10.105.96.217 <none> 3306:30007/TCP 10m

[root@k8smaster mysql]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-5f9bccd855-6kglf 1/1 Running 0 8m59s

[root@k8smaster mysql]# kubectl exec -it mysql-5f9bccd855-6kglf -- bash

bash-4.2# mysql -uroot -p123456

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.42 MySQL Community Server (GPL)

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.01 sec)

mysql> exit

Bye

bash-4.2# exit

exit

[root@k8smaster mysql]#

# Connect the web service to the MySQL database

# Option 1: add the following to the MySQL Service

ports:
- name: mysql
  protocol: TCP
  port: 3306
  targetPort: 3306

# Add the following to the web pod's container spec

env:

- name: MYSQL_HOST

value: svc-mysql   # must be the MySQL Service name, which cluster DNS resolves

- name: MYSQL_PORT

value: "3306"

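# Option 2: install the MySQL driver (go get github.com/go-sql-driver/mysql), then import and initialize it in the Go code.

package main

import (
	"database/sql"
	"fmt"

	_ "github.com/go-sql-driver/mysql" // 1. import the MySQL driver; it registers itself as "mysql"
)

func main() {
	// 2. Open a database handle (replace username, password, hostname, port and dbname)
	db, err := sql.Open("mysql", "username:password@tcp(hostname:port)/dbname")
	if err != nil {
		fmt.Println("Failed to open database:", err)
		return
	}
	defer db.Close() // remember to close the database connection
	// sql.Open only validates its arguments; Ping actually connects to MySQL
	if err := db.Ping(); err != nil {
		fmt.Println("Failed to connect to database:", err)
		return
	}
}

Attempt: deploying a stateful MySQL on k8s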

# 1. Create the ConfigMap

[root@k8smaster mysql]# cat mysql-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  labels:
    app: mysql
data:
  primary.cnf: |
    # Apply this config only on the primary.
    [mysqld]
    log-bin
  replica.cnf: |
    # Apply this config only on replicas.
    [mysqld]
    super-read-only

[root@k8smaster mysql]# kubectl apply -f mysql-configmap.yaml

configmap/mysql created

[root@k8smaster mysql]# kubectl get cm

NAME DATA AGE

kube-root-ca.crt 1 6d22h

mysql 2 5s
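The StatefulSet created in step 3 chooses between these two ConfigMap keys for each pod:

- Its init-mysql container parses the ordinal from the pod hostname (mysql-0, mysql-1, ...).
- It writes server-id = 100 + ordinal.
- It copies primary.cnf for ordinal 0 and replica.cnf otherwise.
- Its clone-mysql container streams data from the previous pod through the headless Service (mysql-<ordinal-1>.mysql).

The Go sketch below reproduces only that decision logic so it can be checked in isolation. planFor and podPlan are hypothetical names, and nothing here talks to MySQL.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// ordinalRe mirrors the bash test [[ $HOSTNAME =~ -([0-9]+)$ ]] in init-mysql.
var ordinalRe = regexp.MustCompile(`-([0-9]+)$`)

// podPlan is what the init containers decide for one StatefulSet pod.
type podPlan struct {
	ServerID  int    // value written to server-id.cnf
	ConfFile  string // ConfigMap key copied into conf.d
	ClonePeer string // DNS name of the peer to clone from ("" for the primary)
}

// planFor reproduces the init-container logic for a hostname such as mysql-2.
func planFor(hostname, service string) (podPlan, error) {
	m := ordinalRe.FindStringSubmatch(hostname)
	if m == nil {
		return podPlan{}, fmt.Errorf("hostname %q has no -<ordinal> suffix", hostname)
	}
	ordinal, err := strconv.Atoi(m[1])
	if err != nil {
		return podPlan{}, err
	}
	p := podPlan{ServerID: 100 + ordinal} // offset avoids the reserved server-id=0
	if ordinal == 0 {
		p.ConfFile = "primary.cnf" // mysql-0 is the primary and is never cloned
		return p, nil
	}
	p.ConfFile = "replica.cnf"
	base := hostname[:len(hostname)-len(m[0])] // "mysql" from "mysql-2"
	// <statefulset>-<ordinal-1>.<headless service>, as used by clone-mysql
	p.ClonePeer = fmt.Sprintf("%s-%d.%s", base, ordinal-1, service)
	return p, nil
}

func main() {
	for _, h := range []string{"mysql-0", "mysql-1", "mysql-2"} {
		p, err := planFor(h, "mysql")
		if err != nil {
			panic(err)
		}
		fmt.Printf("%s: server-id=%d conf=%s clone-from=%q\n", h, p.ServerID, p.ConfFile, p.ClonePeer)
	}
}
```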

# 2. Create the Services

[root@k8smaster mysql]# cat mysql-services.yaml

# Headless Service that gives the StatefulSet members stable DNS entries
apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    app: mysql
    app.kubernetes.io/name: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  clusterIP: None
  selector:
    app: mysql
---
# Client Service for connecting to any MySQL instance for reads.
# For writes, you must connect to the primary instead: mysql-0.mysql
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  labels:
    app: mysql
    app.kubernetes.io/name: mysql
    readonly: "true"
spec:
  ports:
  - name: mysql
    port: 3306
  selector:
    app: mysql

[root@k8smaster mysql]# kubectl apply -f mysql-services.yaml

service/mysql created

service/mysql-read created

[root@k8smaster mysql]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6d22h

mysql ClusterIP None <none> 3306/TCP 7s

mysql-read ClusterIP 10.102.31.144 <none> 3306/TCP 7s

# 3. Create the StatefulSet

[root@k8smaster mysql]# cat mysql-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
      app.kubernetes.io/name: mysql
  serviceName: mysql
  replicas: 3
  template:
    metadata:
      labels:
        app: mysql
        app.kubernetes.io/name: mysql
    spec:
      initContainers:
      - name: init-mysql
        image: mysql:5.7.42
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Generate the MySQL server-id from the pod ordinal.
          [[ $HOSTNAME =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          echo [mysqld] > /mnt/conf.d/server-id.cnf
          # Add an offset to avoid the reserved value server-id=0.
          echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
          # Copy the appropriate conf.d file from the config-map to the emptyDir.
          if [[ $ordinal -eq 0 ]]; then
            cp /mnt/config-map/primary.cnf /mnt/conf.d/
          else
            cp /mnt/config-map/replica.cnf /mnt/conf.d/
          fi
        volumeMounts:
        - name: conf
          mountPath: /mnt/conf.d
        - name: config-map
          mountPath: /mnt/config-map
      - name: clone-mysql
        image: registry.cn-hangzhou.aliyuncs.com/google_samples_thepoy/xtrabackup:1.0
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Skip the clone if data already exists.
          [[ -d /var/lib/mysql/mysql ]] && exit 0
          # Skip the clone on the primary (ordinal index 0).
          [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          [[ $ordinal -eq 0 ]] && exit 0
          # Clone data from the previous peer.
          ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
          # Prepare the backup.
          xtrabackup --prepare --target-dir=/var/lib/mysql
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
      containers:
      - name: mysql
        image: mysql:5.7.42
        imagePullPolicy: IfNotPresent
        env:
        - name: MYSQL_ALLOW_EMPTY_PASSWORD
          value: "1"
        ports:
        - name: mysql
          containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
        resources:
          requests:
            cpu: 500m
            memory: 1Gi
        livenessProbe:
          exec:
            command: ["mysqladmin", "ping"]
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 5
        readinessProbe:
          exec:
            # Check that queries can be executed over TCP (skip-networking is off).
            command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
          initialDelaySeconds: 5
          periodSeconds: 2
          timeoutSeconds: 1
      - name: xtrabackup
        image: registry.cn-hangzhou.aliyuncs.com/google_samples_thepoy/xtrabackup:1.0
        ports:
        - name: xtrabackup
          containerPort: 3307
        command:
        - bash
        - "-c"
        - |
          set -ex
          cd /var/lib/mysql
          # Determine the binlog position of the cloned data, if any.
          if [[ -f xtrabackup_slave_info && "x$( change_master_to.sql.in
            # Ignore xtrabackup_binlog_info in this case (it is useless).
            rm -f xtrabackup_slave_info xtrabackup_binlog_info
          elif [[ -f xtrabackup_binlog_info ]]; then
            # We are cloning directly from the primary. Parse the binlog position.
            [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
            rm -f xtrabackup_binlog_info xtrabackup_slave_info
            echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                  MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
          fi
          # Check whether we need to complete a clone by starting replication.
          if [[ -f change_master_to.sql.in ]]; then
            echo "Waiting for mysqld to be ready (accepting connections)"
            until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
            echo "Initializing replication from clone position"
            mysql -h 127.0.0.1 \
                  -e "$(

VII. Use probes (liveness, readiness, startup) with the httpGet and exec methods to monitor the web pods and restart them as soon as a problem occurs, making the business pods more reliable.

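livenessProbe:       # the kubelet restarts the container when this check fails
  exec:
    command:
    - ls
    - /tmp
  initialDelaySeconds: 5
  periodSeconds: 5
readinessProbe:      # the pod is removed from the Service endpoints while this check fails
  exec:
    command:
    - ls
    - /tmp
  initialDelaySeconds: 5
  periodSeconds: 5
startupProbe:        # liveness and readiness checks only start after this one succeeds
  httpGet:
    path: /
    port: 8000
  failureThreshold: 30
  periodSeconds: 10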

[root@k8smaster probe]# vim my-web.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: myweb
  name: myweb
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 192.168.2.106:5000/test/web:v2
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8000
        resources:
          limits:
            cpu: 300m
          requests:
            cpu: 100m
        livenessProbe:
          exec:
            command:
            - ls
            - /tmp
          initialDelaySeconds: 5
          periodSeconds: 5
        readinessProbe:
          exec:
            command:
            - ls
            - /tmp
          initialDelaySeconds: 5
          periodSeconds: 5
        startupProbe:
          httpGet:
            path: /
            port: 8000
          failureThreshold: 30
          periodSeconds: 10
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: myweb-svc
  name: myweb-svc
spec:
  selector:
    app: myweb
  type: NodePort
  ports:
  - port: 8000
    protocol: TCP
    targetPort: 8000
    nodePort: 30001

[root@k8smaster probe]# kubectl apply -f my-web.yaml

deployment.apps/myweb created

service/myweb-svc created

[root@k8smaster probe]# kubectl get pod

NAME READY STATUS RESTARTS AGE

myweb-6b89fb9c7b-4cdh9 1/1 Running 0 53s

myweb-6b89fb9c7b-dh87w 1/1 Running 0 53s

myweb-6b89fb9c7b-zvc52 1/1 Running 0 53s

[root@k8smaster probe]# kubectl describe pod myweb-6b89fb9c7b-4cdh9

Name: myweb-6b89fb9c7b-4cdh9

Namespace: default

Priority: 0

Node: k8snode2/192.168.2.112

Start Time: Thu, 22 Jun 2023 16:47:20 +0800

Labels: app=myweb

pod-template-hash=6b89fb9c7b

Annotations: cni.projectcalico.org/podIP: 10.244.185.219/32

cni.projectcalico.org/podIPs: 10.244.185.219/32

Status: Running

IP: 10.244.185.219

IPs:

IP: 10.244.185.219

Controlled By: ReplicaSet/myweb-6b89fb9c7b

Containers:

myweb:

Container ID: docker://8c55c0c825483f86e4b3c87413984415b2ccf5cad78ed005eed8bedb4252c130

Image: 192.168.2.106:5000/test/web:v2

Image ID: docker-pullable://192.168.2.106:5000/test/web@sha256:3bef039aa5c13103365a6868c9f052a000de376a45eaffcbad27d6ddb1f6e354

Port: 8000/TCP

Host Port: 0/TCP

State: Running

Started: Thu, 22 Jun 2023 16:47:23 +0800

Ready: True

Restart Count: 0

Limits:

cpu: 300m

Requests:

cpu: 100m

Liveness: exec [ls /tmp] delay=5s timeout=1s period=5s #success=1 #failure=3

Readiness: exec [ls /tmp] delay=5s timeout=1s period=5s #success=1 #failure=3

Startup: http-get http://:8000/ delay=0s timeout=1s period=10s #success=1 #failure=30

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-24tkk (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-24tkk:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-24tkk

Optional: false

QoS Class: Burstable

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 55s default-scheduler Successfully assigned default/myweb-6b89fb9c7b-4cdh9 to k8snode2

Normal Pulled 52s kubelet Container image "192.168.2.106:5000/test/web:v2" already present on machine

Normal Created 52s kubelet Created container myweb

Normal Started 52s kubelet Started container myweb

VIII. Use Ingress to load-balance the web service, and use the Dashboard to manage the resources of the whole cluster.

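# The ingress controller is essentially an nginx instance that does the load balancing.

# An Ingress is the k8s object that manages the nginx configuration (nginx.conf); it passes routing rules to the ingress controller.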

[root@k8smaster ingress]# ls

ingress-controller-deploy.yaml kube-webhook-certgen-v1.1.0.tar.gz sc-nginx-svc-1.yaml

ingress-nginx-controllerv1.1.0.tar.gz sc-ingress.yaml

ingress-controller-deploy.yaml: the YAML file used to deploy the ingress controller

ingress-nginx-controllerv1.1.0.tar.gz: the ingress-nginx-controller image

kube-webhook-certgen-v1.1.0.tar.gz: the kube-webhook-certgen image

sc-ingress.yaml: the configuration file that creates the Ingress

sc-nginx-svc-1.yaml: the YAML that starts the sc-nginx-svc Service and its pods

nginx-deployment-nginx-svc-2.yaml: the YAML that starts the nginx-deployment-nginx-svc-2 Service and its pods

# Step 1: install the ingress controller

# 1. Copy the image archives to every node with scp

[root@k8smaster ingress]# scp ingress-nginx-controllerv1.1.0.tar.gz k8snode1:/root

ingress-nginx-controllerv1.1.0.tar.gz 100% 276MB 101.1MB/s 00:02

[root@k8smaster ingress]# scp ingress-nginx-controllerv1.1.0.tar.gz k8snode2:/root

ingress-nginx-controllerv1.1.0.tar.gz 100% 276MB 98.1MB/s 00:02

[root@k8smaster ingress]# scp kube-webhook-certgen-v1.1.0.tar.gz k8snode1:/root

kube-webhook-certgen-v1.1.0.tar.gz 100% 47MB 93.3MB/s 00:00

[root@k8smaster ingress]# scp kube-webhook-certgen-v1.1.0.tar.gz k8snode2:/root

kube-webhook-certgen-v1.1.0.tar.gz 100% 47MB 39.3MB/s 00:01

# 2. Load the images on every node

[root@k8snode1 ~]# docker load -i ingress-nginx-controllerv1.1.0.tar.gz

[root@k8snode1 ~]# docker load -i kube-webhook-certgen-v1.1.0.tar.gz

[root@k8snode2 ~]# docker load -i ingress-nginx-controllerv1.1.0.tar.gz

[root@k8snode2 ~]# docker load -i kube-webhook-certgen-v1.1.0.tar.gz

[root@k8snode1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 605c77e624dd 17 months ago 141MB

registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller v1.1.0 ae1a7201ec95 19 months ago 285MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen v1.1.1 c41e9fcadf5a 20 months ago 47.7MB

[root@k8snode2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 605c77e624dd 17 months ago 141MB

registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller v1.1.0 ae1a7201ec95 19 months ago 285MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen v1.1.1 c41e9fcadf5a 20 months ago 47.7MB

# 3. Apply the YAML file to create the ingress controller

[root@k8smaster ingress]# kubectl apply -f ingress-controller-deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

configmap/ingress-nginx-controller created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

service/ingress-nginx-controller-admission created

service/ingress-nginx-controller created

deployment.apps/ingress-nginx-controller created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

serviceaccount/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

# 4. Check the ingress controller's namespace

[root@k8smaster ingress]# kubectl get ns

NAME STATUS AGE

default Active 20h

ingress-nginx Active 30s

kube-node-lease Active 20h

kube-public Active 20h

kube-system Active 20h

# 5. Check the ingress controller's Services

[root@k8smaster ingress]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.105.213.95 <none> 80:31457/TCP,443:32569/TCP 64s

ingress-nginx-controller-admission ClusterIP 10.98.225.196 <none> 443/TCP 64s

# 6. Check the ingress controller's pods

[root@k8smaster ingress]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-9sg56 0/1 Completed 0 80s

ingress-nginx-admission-patch-8sctb 0/1 Completed 1 80s

ingress-nginx-controller-6c8ffbbfcf-bmdj9 1/1 Running 0 80s

ingress-nginx-controller-6c8ffbbfcf-j576v 1/1 Running 0 80s

# Step 2: create the pods and a Service that exposes them

[root@k8smaster new]# cat sc-nginx-svc-1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sc-nginx-deploy
  labels:
    app: sc-nginx-feng
spec:
  replicas: 3
  selector:
    matchLabels:
      app: sc-nginx-feng
  template:
    metadata:
      labels:
        app: sc-nginx-feng
    spec:
      containers:
      - name: sc-nginx-feng
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: sc-nginx-svc
  labels:
    app: sc-nginx-svc
spec:
  selector:
    app: sc-nginx-feng
  ports:
  - name: name-of-service-port
    protocol: TCP
    port: 80
    targetPort: 80

[root@k8smaster new]# kubectl apply -f sc-nginx-svc-1.yaml

deployment.apps/sc-nginx-deploy created

service/sc-nginx-svc created

[root@k8smaster ingress]# kubectl get pod

NAME READY STATUS RESTARTS AGE

sc-nginx-deploy-7bb895f9f5-hmf2n 1/1 Running 0 7s

sc-nginx-deploy-7bb895f9f5-mczzg 1/1 Running 0 7s

sc-nginx-deploy-7bb895f9f5-zzndv 1/1 Running 0 7s

[root@k8smaster ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20h

sc-nginx-svc ClusterIP 10.96.76.55 <none> 80/TCP 26s

# Show the Service details and check that Endpoints lists the expected pod IPs and ports

[root@k8smaster ingress]# kubectl describe svc sc-nginx-svc

Name: sc-nginx-svc

Namespace: default

Labels: app=sc-nginx-svc

Annotations: <none>

Selector: app=sc-nginx-feng

Type: ClusterIP

IP Families: <none>

IP: 10.96.76.55

IPs: 10.96.76.55

Port: name-of-service-port 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.185.209:80,10.244.185.210:80,10.244.249.16:80

Session Affinity: None

Events: <none>
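The Endpoints line comes from label matching. The Service's selector `app: sc-nginx-feng` picks every pod whose labels contain that key/value pair. Here those are the three pods created from the Deployment's template. The Service's own label, `app: sc-nginx-svc`, plays no part in the selection.

The Python sketch below is a simplified model of that rule. It ignores namespaces and named ports. The pod names are hypothetical; only the IPs are copied from the output above.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def select_endpoints(selector: dict, pods: list, target_port: int) -> list:
    """Simplified model of how a Service's selector produces its Endpoints:
    a pod matches when it carries every key/value pair in the selector,
    and only ready pods are listed, as podIP:targetPort."""
    matched = [p for p in pods if all(p.labels.get(k) == v for k, v in selector.items())]
    return sorted(f"{p.ip}:{target_port}" for p in matched if p.ready)


# Hypothetical pod names; the IPs are the ones listed under Endpoints above.
pods = [
    Pod("pod-a", "10.244.185.209", {"app": "sc-nginx-feng"}),
    Pod("pod-b", "10.244.185.210", {"app": "sc-nginx-feng"}),
    Pod("pod-c", "10.244.249.16", {"app": "sc-nginx-feng"}),
    Pod("other", "10.244.249.99", {"app": "something-else"}),  # hypothetical unrelated pod
]
print(",".join(select_endpoints({"app": "sc-nginx-feng"}, pods, 80)))
# 10.244.185.209:80,10.244.185.210:80,10.244.249.16:80
```

Selecting on the Service's own label (`app: sc-nginx-svc`) would return no endpoints at all. That is why the selector has to repeat the pod template's label.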

# Access the Service's cluster IP

[root@k8smaster ingress]# curl 10.96.76.55

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

# Step 3: create an Ingress that connects the ingress controller to the Services

# Create a YAML file that defines the Ingress

[root@k8smaster ingress]# cat sc-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sc-ingress
spec:
  ingressClassName: nginx  # associates this Ingress with the nginx ingress controller
  rules:
  - host: www.feng.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: sc-nginx-svc
            port:
              number: 80
  - host: www.zhang.com
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: sc-nginx-svc-2
            port:
              number: 80

[root@k8smaster ingress]# kubectl apply -f sc-ingress.yaml

ingress.networking.k8s.io/sc-ingress created

# Check the Ingress

[root@k8smaster ingress]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

sc-ingress nginx www.feng.com,www.zhang.com 192.168.2.111,192.168.2.112 80 52s

# Step 4: check that nginx.conf inside the ingress controller contains the rules from the Ingress

[root@k8smaster ingress]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-9sg56 0/1 Completed 0 6m53s

ingress-nginx-admission-patch-8sctb 0/1 Completed 1 6m53s

ingress-nginx-controller-6c8ffbbfcf-bmdj9 1/1 Running 0 6m53s

ingress-nginx-controller-6c8ffbbfcf-j576v 1/1 Running 0 6m53s

[root@k8smaster ingress]# kubectl exec -n ingress-nginx -it ingress-nginx-controller-6c8ffbbfcf-bmdj9 -- bash

bash-5.1$ cat nginx.conf |grep feng.com

## start server www.feng.com

server_name www.feng.com ;

## end server www.feng.com

bash-5.1$ cat nginx.conf |grep zhang.com

## start server www.zhang.com

server_name www.zhang.com ;

## end server www.zhang.com

bash-5.1$ cat nginx.conf|grep -C3 upstream_balancer

error_log /var/log/nginx/error.log notice;

upstream upstream_balancer {

server 0.0.0.1:1234; # placeholder
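The grep checks above can be turned into a small script that reports any Ingress host missing from the rendered config. This is a minimal Python sketch. It assumes the text of nginx.conf has already been copied out of the controller pod, for example with `kubectl exec -n ingress-nginx ingress-nginx-controller-6c8ffbbfcf-bmdj9 -- cat nginx.conf`. `missing_hosts` is a hypothetical helper. It only looks at `server_name` lines, not at locations or upstreams.

```python
import re


def missing_hosts(nginx_conf: str, ingress_hosts: list) -> list:
    """Return the Ingress hosts that have no `server_name` entry in nginx.conf."""
    found = set()
    # collect every name listed on a "server_name ... ;" line
    for match in re.finditer(r"^\s*server_name\s+([^;]+);", nginx_conf, re.MULTILINE):
        found.update(match.group(1).split())
    return [host for host in ingress_hosts if host not in found]


# Only the lines shown by grep above; the real file is much longer.
conf = """
## start server www.feng.com
server_name www.feng.com ;
## end server www.feng.com
## start server www.zhang.com
server_name www.zhang.com ;
## end server www.zhang.com
"""
print(missing_hosts(conf, ["www.feng.com", "www.zhang.com"]))  # []
```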

# Get the node ports that the ingress controller's Service publishes on the hosts; requesting a host on those ports verifies whether the ingress controller load-balances traffic

[root@k8smaster ingress]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.105.213.95 <none> 80:31457/TCP,443:32569/TCP 8m12s

ingress-nginx-controller-admission ClusterIP 10.98.225.196 <none> 443/TCP 8m12s
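In the PORT(S) column, `80:31457/TCP` means Service port 80 is also published on port 31457 of every node. The Python sketch below parses that column into a mapping and builds the node URLs for the two worker nodes from the IP plan. The `node_ports` helper is hypothetical.

```python
def node_ports(ports_column: str) -> dict:
    """Parse the PORT(S) column of `kubectl get svc`, e.g. "80:31457/TCP,443:32569/TCP",
    into {service_port: node_port}. Entries without a node port (plain ClusterIP) are skipped."""
    mapping = {}
    for entry in ports_column.split(","):
        ports, _, _protocol = entry.partition("/")
        if ":" in ports:
            service_port, node_port = ports.split(":")
            mapping[int(service_port)] = int(node_port)
    return mapping


ports = node_ports("80:31457/TCP,443:32569/TCP")
for node in ("192.168.2.111", "192.168.2.112"):  # k8snode1 and k8snode2 from the IP plan
    print(f"http://{node}:{ports[80]}/")
```

The controller routes by host name, so a request to these URLs still needs the matching Host header, for example `curl -H "Host: www.feng.com" http://192.168.2.111:31457/`.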

# On another host or a Windows machine, map the domain names to node IPs in the hosts file and access the sites by name

[root@zabbix ~]# vim /etc/hosts

[root@zabbix ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.111 www.feng.com

192.168.2.112 www.zhang.com

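# Because the load-balancing rules are keyed on host names, the sites must be accessed by domain name (in a browser or with curl), not by IP address

# The ingress controller also balances load on the HTTP protocol, i.e. it is a layer-7 load balancer.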
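A simplified model of host-based routing shows why the IP address does not work. The Python sketch below is an illustration under stated assumptions, not ingress-nginx's implementation. It:

- uses the two rules from sc-ingress.yaml;
- picks a rule by the request's Host header;
- applies Kubernetes `Prefix` path matching element by element;
- returns `None` when nothing matches.

The `None` case is when the controller falls back to its default backend, which in ingress-nginx usually answers 404.

```python
def prefix_match(prefix: str, path: str) -> bool:
    """Kubernetes `pathType: Prefix` compares '/'-separated elements,
    so /foo matches /foo and /foo/bar but not /foobar; "/" matches everything."""
    want = [part for part in prefix.split("/") if part]
    got = [part for part in path.split("/") if part]
    return got[: len(want)] == want


# Rules copied from sc-ingress.yaml: host -> [(path prefix, backend service:port)]
RULES = {
    "www.feng.com": [("/", "sc-nginx-svc:80")],
    "www.zhang.com": [("/", "sc-nginx-svc-2:80")],
}


def route(host_header: str, path: str = "/"):
    """Return the backend for a request, or None when no rule matches and the
    request falls through to the controller's default backend."""
    host = host_header.split(":")[0].lower()  # strip an optional :port
    candidates = [(p, svc) for p, svc in RULES.get(host, []) if prefix_match(p, path)]
    if not candidates:
        return None
    return max(candidates, key=lambda c: len(c[0]))[1]  # longest prefix wins


print(route("www.feng.com", "/index.html"))  # sc-nginx-svc:80
print(route("192.168.2.111"))                # None: an IP matches no host rule
```

The hosts-file entries only make the client send the right Host header. `curl -H "Host: www.zhang.com" http://192.168.2.112/` achieves the same without editing /etc/hosts.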

[root@zabbix ~]# curl www.feng.com

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

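# Requesting www.zhang.com fails with 503 Service Temporarily Unavailable: its backend Service sc-nginx-svc-2 has not been created yet (the second Service is started in step 5), so nginx has no upstream to forward to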

[root@zabbix ~]# curl www.zhang.com

503 Service Temporarily Unavailable

503 Service Temporarily Unavailable

nginx
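The 503 follows directly from the Ingress. www.zhang.com points at `sc-nginx-svc-2`, which does not exist yet, so the controller has no endpoints to forward to. The Python sketch below models that check. `hosts_returning_503` is a hypothetical helper. In practice, the endpoint counts it takes would come from `kubectl get endpoints`.

```python
def hosts_returning_503(backends: dict, ready_endpoints: dict) -> list:
    """backends: {host: Service name} from the Ingress rules.
    ready_endpoints: {Service name: number of ready endpoints} for Services that exist.
    A host whose Service is missing, or has no ready endpoints, has no upstream,
    so ingress-nginx answers 503 Service Temporarily Unavailable."""
    return sorted(host for host, svc in backends.items() if ready_endpoints.get(svc, 0) == 0)


backends = {"www.feng.com": "sc-nginx-svc", "www.zhang.com": "sc-nginx-svc-2"}
print(hosts_returning_503(backends, {"sc-nginx-svc": 3}))  # ['www.zhang.com']
```

Once sc-nginx-svc-2 exists and has at least one ready pod behind it, the list becomes empty.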

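# Step 5: start the second Service and its pods, with storage provided by PV + PVC + NFS

# The NFS server must be prepared in advance, and the PV and PVC created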

[root@k8smaster pv]# pwd

/root/pv

[root@k8smaster pv]# ls

nfs-pvc.yml nfs-pv.yml nginx-deployment.yml

[root@k8smaster pv]# cat nfs-pv.yml

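apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-web
  labels:
    type: pv-web
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteMany
  storageClassName: nfs   # storage class name; the PVC must request the same class to bind to this PV
  nfs:
    path: "/web"          # directory exported by the NFS server
    server: 192.168.2.121 # IP address of the NFS server
    readOnly: false       # mount the export read-write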
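For the PVC to use this PV, the two must match. The Python sketch below is a simplified version of the main binding conditions:

- the same `storageClassName`;
- the PV offering every requested access mode;
- enough capacity;
- matching labels when the PVC has a selector.

nfs-pvc.yml is not shown here, so the PVC values in the example are hypothetical. The real controller also checks conditions this sketch skips, such as the PV being in the Available phase.

```python
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse a binary-suffixed quantity such as "10Gi"; only Ki/Mi/Gi/Ti or plain bytes."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def can_bind(pv: dict, pvc: dict) -> bool:
    """Simplified PV/PVC matching: same storage class, the PV offers every
    requested access mode, enough capacity, and (if the PVC has a selector)
    matching PV labels. Real binding also requires the PV to be Available."""
    same_class = pv["storageClassName"] == pvc["storageClassName"]
    modes_ok = set(pvc["accessModes"]) <= set(pv["accessModes"])
    size_ok = to_bytes(pv["capacity"]) >= to_bytes(pvc["request"])
    labels_ok = all(pv["labels"].get(k) == v for k, v in pvc.get("selector", {}).items())
    return same_class and modes_ok and size_ok and labels_ok


# The PV above; the PVC values are hypothetical because nfs-pvc.yml is not shown here.
pv_web = {"storageClassName": "nfs", "accessModes": ["ReadWriteMany"],
          "capacity": "10Gi", "labels": {"type": "pv-web"}}
pvc = {"storageClassName": "nfs", "accessModes": ["ReadWriteMany"], "request": "1Gi"}
print(can_bind(pv_web, pvc))                         # True
print(can_bind(pv_web, {**pvc, "request": "20Gi"}))  # False: larger than 10Gi
```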

[root@k8smaster pv]# kubectl apply -f nfs-pv.yml

[root@k8smaster pv]# kubectl apply -f nfs-pvc.yml

[root@k8smaster pv]# kubectl get pv