2.3.1 Push System Implementation and System Optimization

Push system design

Problems you run into with a custom network protocol

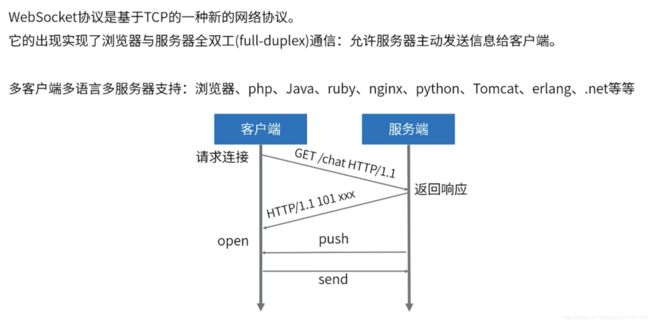

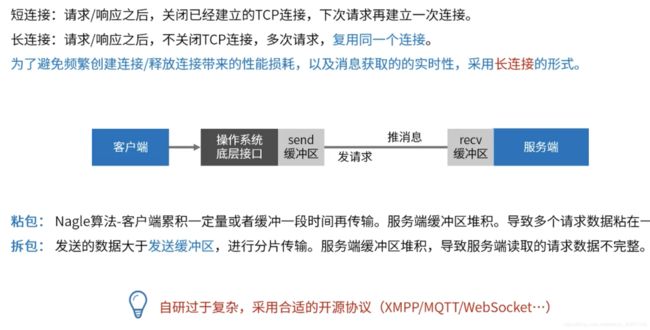

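The push system uses long-lived connections, both to avoid the performance cost of repeatedly creating and releasing connections and to deliver messages in real time.

Be aware, however, that packet sticking and packet splitting will occur, because both the client and the server keep a buffer: TCP carries a byte stream, so one read may return several messages stuck together or only part of a message.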
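One common way to handle sticking and splitting in a custom protocol is to prefix each message with its length and buffer incoming bytes until a whole message is available. The following is a minimal sketch of that idea in plain Java, not code from the course project: the class name `LengthPrefixedDecoder` and the 4-byte big-endian length header are assumptions chosen for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical decoder: each message is a 4-byte big-endian length followed by a UTF-8 payload. */
public class LengthPrefixedDecoder {
    // Bytes received so far that do not yet form a complete message.
    private ByteBuffer pending = ByteBuffer.allocate(0);

    /** Encodes one message as [length][payload]. */
    public static byte[] encode(String message) {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + payload.length).putInt(payload.length).put(payload).array();
    }

    /** Feeds one chunk as read from the socket and returns every message it completes. */
    public List<String> feed(byte[] chunk) {
        // Append the new chunk to whatever was left over from earlier reads.
        ByteBuffer merged = ByteBuffer.allocate(pending.remaining() + chunk.length);
        merged.put(pending).put(chunk);
        merged.flip();

        List<String> messages = new ArrayList<>();
        while (merged.remaining() >= 4) {
            merged.mark();
            int length = merged.getInt();
            if (merged.remaining() < length) {
                merged.reset(); // split message: rewind and wait for more bytes
                break;
            }
            byte[] payload = new byte[length];
            merged.get(payload);
            messages.add(new String(payload, StandardCharsets.UTF_8));
        }
        pending = merged.slice(); // keep the unread tail for the next call
        return messages;
    }
}
```

A single `feed` call may return zero, one, or several messages, which is exactly the behaviour a stream-based transport requires. WebSocket, used in the rest of this section, already defines its own framing, so application code does not need to do this itself.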

Using WebSocket

Simple push system code – Server

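import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.nio.NioServerSocketChannel;

public final class WebSocketServer {

    static int PORT = 9000;

    public static void main(String[] args) throws Exception {
        // One boss thread accepts connections; the worker group handles channel I/O.
        EventLoopGroup bossGroup = new NioEventLoopGroup(1);
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap b = new ServerBootstrap();
            b.group(bossGroup, workerGroup)
                    .channel(NioServerSocketChannel.class)
                    .option(ChannelOption.SO_REUSEADDR, true)
                    .childHandler(new WebSocketServerInitializer())
                    .childOption(ChannelOption.SO_REUSEADDR, true);

            // Bind 100 consecutive ports (9001 to 9100) so that a single client IP
            // can open far more connections than one server port would allow.
            for (int i = 0; i < 100; i++) {
                b.bind(++PORT).addListener(new ChannelFutureListener() {
                    public void operationComplete(ChannelFuture future) throws Exception {
                        if ("true".equals(System.getProperty("netease.debug")))
                            System.out.println("Port bound: " + future.channel().localAddress());
                    }
                });
            }

            // All binds have been submitted; start pushing random messages (for testing).
            TestCenter.startTest();
            // Block until a key is pressed on stdin, then shut down.
            System.in.read();
        } finally {
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }
}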

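import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.socket.SocketChannel;
import io.netty.handler.codec.http.HttpObjectAggregator;
import io.netty.handler.codec.http.HttpServerCodec;

public class WebSocketServerInitializer extends ChannelInitializer<SocketChannel> {
    @Override
    public void initChannel(SocketChannel ch) throws Exception {
        ChannelPipeline pipeline = ch.pipeline();
        // Decode HTTP requests and encode HTTP responses.
        pipeline.addLast(new HttpServerCodec());
        // Aggregate HTTP message parts into one FullHttpRequest (at most 64 KiB).
        pipeline.addLast(new HttpObjectAggregator(65536));
        // Perform the WebSocket handshake and handle WebSocket frames.
        pipeline.addLast(new WebSocketServerHandler());
        // Register the connection once the handshake request has been passed on.
        pipeline.addLast(new NewConnectHandler());
    }
}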

Simple push system code – handler

import static io.netty.handler.codec.http.HttpMethod.GET;
import static io.netty.handler.codec.http.HttpResponseStatus.BAD_REQUEST;
import static io.netty.handler.codec.http.HttpVersion.HTTP_1_1;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import io.netty.handler.codec.http.DefaultFullHttpResponse;
import io.netty.handler.codec.http.FullHttpRequest;
import io.netty.handler.codec.http.FullHttpResponse;
import io.netty.handler.codec.http.HttpHeaderNames;
import io.netty.handler.codec.http.HttpUtil;
import io.netty.handler.codec.http.websocketx.*;
import io.netty.util.AttributeKey;
import io.netty.util.CharsetUtil;
import java.util.concurrent.atomic.LongAdder;

public class WebSocketServerHandler extends SimpleChannelInboundHandler<Object> {

    private static final String WEBSOCKET_PATH = "/websocket";

    private WebSocketServerHandshaker handshaker;

    // Counts every inbound message (HTTP requests and WebSocket frames).
    public static final LongAdder counter = new LongAdder();

    @Override
    public void channelRead0(ChannelHandlerContext ctx, Object msg) {
        counter.add(1);
        if (msg instanceof FullHttpRequest) {
            handleHttpRequest(ctx, (FullHttpRequest) msg);
        } else if (msg instanceof WebSocketFrame) {
            handleWebSocketFrame(ctx, (WebSocketFrame) msg);
        }
    }

    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) {
        // Flush everything written during this read batch.
        ctx.flush();
    }

    private void handleHttpRequest(ChannelHandlerContext ctx, FullHttpRequest req) {
        // Reject bad requests: HTTP decoding failed, the method is not GET, or the
        // Upgrade header (protocol upgrade) is not "websocket" (compared case-insensitively).
        if (!req.decoderResult().isSuccess() || req.method() != GET
                || !"websocket".equalsIgnoreCase(req.headers().get(HttpHeaderNames.UPGRADE))) {
            sendHttpResponse(ctx, req, new DefaultFullHttpResponse(HTTP_1_1, BAD_REQUEST));
            return;
        }

        // Build and send the handshake response (max frame payload: 5 MiB).
        WebSocketServerHandshakerFactory wsFactory = new WebSocketServerHandshakerFactory(
                getWebSocketLocation(req), null, true, 5 * 1024 * 1024);
        handshaker = wsFactory.newHandshaker(req);
        if (handshaker == null) {
            // The requested WebSocket version is not supported.
            WebSocketServerHandshakerFactory.sendUnsupportedVersionResponse(ctx.channel());
        } else {
            handshaker.handshake(ctx.channel(), req);
            // Pass the request on to the next handler; retain() balances the
            // automatic release performed by SimpleChannelInboundHandler.
            ctx.fireChannelRead(req.retain());
        }
    }

    private void handleWebSocketFrame(ChannelHandlerContext ctx, WebSocketFrame frame) {
        // Close frame: remove the connection from the registry and finish the closing handshake.
        if (frame instanceof CloseWebSocketFrame) {
            Object userId = ctx.channel().attr(AttributeKey.valueOf("userId")).get();
            TestCenter.removeConnection(userId);
            handshaker.close(ctx.channel(), (CloseWebSocketFrame) frame.retain());
            return;
        }
        // Ping/pong serves as the heartbeat.
        if (frame instanceof PingWebSocketFrame) {
            System.out.println("ping: " + frame);
            ctx.write(new PongWebSocketFrame(frame.content().retain()));
            return;
        }
        // Text frame: echo it back to the client with a greeting and the current time.
        if (frame instanceof TextWebSocketFrame) {
            ctx.channel().write(new TextWebSocketFrame(((TextWebSocketFrame) frame).text()
                    + ", welcome to the Netty WebSocket service, current time: "
                    + new java.util.Date().toString()));
            return;
        }
        // Binary frames get no special processing; they are echoed back unchanged.
        if (frame instanceof BinaryWebSocketFrame) {
            ctx.write(frame.retain());
        }
    }

    private static void sendHttpResponse(
            ChannelHandlerContext ctx, FullHttpRequest req, FullHttpResponse res) {
        // Generate an error body if the response status code is not OK (200).
        if (res.status().code() != 200) {
            ByteBuf buf = Unpooled.copiedBuffer(res.status().toString(), CharsetUtil.UTF_8);
            res.content().writeBytes(buf);
            buf.release();
            HttpUtil.setContentLength(res, res.content().readableBytes());
        }

        // Send the response and close the connection if necessary.
        ChannelFuture f = ctx.channel().writeAndFlush(res);
        if (!HttpUtil.isKeepAlive(req) || res.status().code() != 200) {
            f.addListener(ChannelFutureListener.CLOSE);
        }
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        cause.printStackTrace();
        ctx.close();
    }

    private static String getWebSocketLocation(FullHttpRequest req) {
        String location = req.headers().get(HttpHeaderNames.HOST) + WEBSOCKET_PATH;
        return "ws://" + location;
    }
}

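import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.SimpleChannelInboundHandler;
import io.netty.handler.codec.http.FullHttpRequest;
import io.netty.handler.codec.http.QueryStringDecoder;
import io.netty.util.AttributeKey;
import java.util.List;
import java.util.Map;

// Runs once a new connection has completed the WebSocket handshake.
public class NewConnectHandler extends SimpleChannelInboundHandler<FullHttpRequest> {
    @Override
    protected void channelRead0(ChannelHandlerContext ctx, FullHttpRequest req) throws Exception {
        // Parse the request, check the token, and obtain the user ID.
        Map<String, List<String>> parameters = new QueryStringDecoder(req.uri()).parameters();
        // Not everyone should be allowed to connect: for example, a push token could be
        // issued after login and verified here.
        // String token = parameters.get("token").get(0);
        // Note: this throws a NullPointerException if the userId parameter is missing.
        String userId = parameters.get("userId").get(0);
        // Store the userId on the channel.
        ctx.channel().attr(AttributeKey.valueOf("userId")).getAndSet(userId);
        // Save the connection so that messages can be pushed to this user later.
        TestCenter.saveConnection(userId, ctx.channel());
    }
}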
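The handlers above call `TestCenter.saveConnection` and `TestCenter.removeConnection`, but the source of `TestCenter` is not shown in these notes. As a rough illustration of what such a registry might look like, here is a minimal sketch built on `ConcurrentHashMap`. The class `ConnectionRegistry`, its method names, and the generic connection type `C` are all hypothetical.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.BiConsumer;

/** Hypothetical stand-in for a userId-to-connection registry; not the course's TestCenter. */
public class ConnectionRegistry<C> {
    private final ConcurrentMap<String, C> connections = new ConcurrentHashMap<>();

    /** Registers a user's connection; returns the previous one (if any) so the caller can close it. */
    public C save(String userId, C connection) {
        return connections.put(userId, connection);
    }

    /** Removes a user's connection; a null userId (no registration happened) is ignored. */
    public C remove(Object userId) {
        return userId == null ? null : connections.remove(userId);
    }

    /** Sends a message through the supplied action if the user is online; returns false otherwise. */
    public boolean push(String userId, String message, BiConsumer<C, String> sender) {
        C connection = connections.get(userId);
        if (connection == null) {
            return false;
        }
        sender.accept(connection, message);
        return true;
    }

    /** Number of connections currently registered. */
    public int size() {
        return connections.size();
    }
}
```

With Netty, `C` would presumably be `io.netty.channel.Channel`, and the sender could be `(ch, msg) -> ch.writeAndFlush(new TextWebSocketFrame(msg))`. The null check in `remove` matters because `ConcurrentHashMap` rejects null keys, and a close frame can arrive on a channel that never stored a `userId`.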

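Setting up the test environment

We deploy the Java package on the server. Because this is our own test environment, the question becomes how to create connections on the order of a million with the resources we already have.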

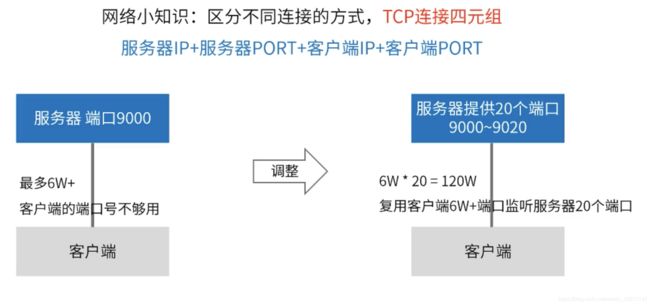

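Every connection the client opens occupies a free local port, and a single machine has at most about 60,000 of them (65535 in theory), so one client machine runs out of ports long before it reaches a million connections.

A TCP connection, however, is identified by the combination of server IP + server port + client IP + client port.

If the server listens on 20 ports, each client port can be used once per server port, which is enough to support connections on the order of a million (20 × 60,000 ≈ 1.2 million). The sample server above binds 100 ports, 9001 to 9100.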
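The arithmetic behind this can be written out as a short calculation. The sketch below is illustrative only: the class `ConnectionCapacity` and the figure of 60,000 usable client ports are assumptions, and the true number depends on the client's configured local port range.

```java
/** Back-of-the-envelope capacity: connections one client IP can open to one server IP. */
public class ConnectionCapacity {

    /** For a fixed pair of IPs, each (client port, server port) pair is a distinct connection. */
    public static long maxConnections(int clientPorts, int serverPorts) {
        return (long) clientPorts * serverPorts;
    }

    /** Smallest number of server ports needed to reach the target from a single client IP. */
    public static int serverPortsNeeded(long targetConnections, int clientPorts) {
        return (int) ((targetConnections + clientPorts - 1) / clientPorts); // ceiling division
    }

    public static void main(String[] args) {
        int clientPorts = 60_000; // assumption: roughly 60K usable local ports per client IP
        System.out.println("1 server port:   " + maxConnections(clientPorts, 1));
        System.out.println("20 server ports: " + maxConnections(clientPorts, 20));
        System.out.println("ports needed for 1M: " + serverPortsNeeded(1_000_000, clientPorts));
    }
}
```

Under these assumptions, 20 server ports give a ceiling of 1,200,000 connections from one client IP, and 17 ports would already pass one million.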

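Optimization 1: one million connections

Optimization 2: higher request/push throughput

Submit business operations to a separate thread pool, so that they do not block the I/O threads.

Adjust the TCP buffer sizes to improve network throughput.

When developing on the Netty framework, tune the business code logic itself.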
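The first point, running business operations on their own threads, can be sketched with the JDK alone. The example below is an illustration rather than the course's implementation: the class `BusinessOffload`, the pool size of 8, and the placeholder `handle` method are assumptions.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hands slow business work to a dedicated pool so the calling (I/O) thread returns at once. */
public class BusinessOffload {
    // Assumption: a fixed pool sized for blocking business work, separate from the I/O threads.
    private final ExecutorService businessPool = Executors.newFixedThreadPool(8);

    /** Called on the I/O thread: schedules the work and returns immediately. */
    public CompletableFuture<String> onMessage(String request) {
        return CompletableFuture.supplyAsync(() -> handle(request), businessPool);
    }

    /** Placeholder for slow business logic such as a database query or a remote call. */
    private String handle(String request) {
        return "processed:" + request;
    }

    /** Stops accepting new work; tasks already submitted still run to completion. */
    public void shutdown() {
        businessPool.shutdown();
    }
}
```

In a Netty pipeline a similar effect can be had by registering a handler with its own executor group via `pipeline.addLast(EventExecutorGroup, ChannelHandler...)`, so the handler's callbacks run off the event loop. Whether that or an explicit pool fits better depends on the workload.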

Detailed configuration for one million Netty connections

0. Start the client and the server

# Test environment: CentOS 7, JDK 8, 4 cores, 6 GB RAM

# Start the server

java -Xmx4096m -Xms4096m -Dnetease.debug=true -cp netty-all-4.1.32.Final.jar:netty-push-1.0.0.jar com.study.netty.push.server.WebSocketServer

# Start the client

java -Xmx4096m -Xms4096m -Dnetease.debug=false -Dnetease.pushserver.host=192.168.100.241 -cp netty-all-4.1.32.Final.jar:netty-push-1.0.0.jar com.study.netty.push.client.WebSocketClient

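1. "Too many open files": apply the following on both the server and the test (client) machines

# Raise the per-process limit on open files by adding these two lines

vi /etc/security/limits.conf

* soft nofile 1000000

* hard nofile 1000000

# System-wide limit (inspect current usage with: cat /proc/sys/fs/file-nr)

# The echo takes effect immediately; the sysctl.conf entry persists across reboots

echo 1200000 > /proc/sys/fs/file-max

vi /etc/sysctl.conf

fs.file-max = 1000000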
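After changing these limits it helps to confirm what the JVM itself sees, since a process started from an old login session keeps the old limit. The sketch below is one possible check, not part of the course code. It assumes a HotSpot-style JVM on a Unix-like system, where the platform MXBean implements `com.sun.management.UnixOperatingSystemMXBean`; the class name `FdLimits` is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;

/** Reports file-descriptor figures as seen by the running JVM. */
public class FdLimits {

    /** Maximum number of file descriptors for this process, or -1 if the JVM cannot report it. */
    public static long maxFileDescriptors() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof com.sun.management.UnixOperatingSystemMXBean) {
            return ((com.sun.management.UnixOperatingSystemMXBean) os).getMaxFileDescriptorCount();
        }
        return -1; // non-Unix platform, or a JVM without this extension
    }

    /** Number of file descriptors currently open, or -1 if the JVM cannot report it. */
    public static long openFileDescriptors() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof com.sun.management.UnixOperatingSystemMXBean) {
            return ((com.sun.management.UnixOperatingSystemMXBean) os).getOpenFileDescriptorCount();
        }
        return -1;
    }

    public static void main(String[] args) {
        System.out.println("max fds:  " + maxFileDescriptors());
        System.out.println("open fds: " + openFileDescriptors());
    }
}
```

If the reported maximum is still far below 1000000 after editing `limits.conf`, the process was probably started from a session that predates the change.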

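2. Summary of client-side problems

# The client machine cannot open that many connections; a likely cause is the number of local ports available

Linux limits the range of ports it assigns at random to outgoing connections. In theory a single machine can use up to 65535 ports, but by default at most 28232 outgoing connections can actually be established.

To check the current range: cat /proc/sys/net/ipv4/ip_local_port_range

echo "net.ipv4.ip_local_port_range= 1024 65535">> /etc/sysctl.conf

sysctl -p

# If your machine is underpowered and odd problems appear

sysctl -w net.ipv4.tcp_tw_recycle=1 # fast recycling of TIME_WAIT connections (unsafe for clients behind NAT; removed in Linux 4.12)

sysctl -w net.ipv4.tcp_tw_reuse=1

sysctl -w net.ipv4.tcp_timestamps=1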
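The figure of 28232 follows from the width of the local port range. As a small worked example, the sketch below parses the two numbers found in `ip_local_port_range` and counts the ports between them, inclusive. The class name `PortRange` is hypothetical, and the range 32768 to 60999 is used as a sample input that yields exactly 28232; the default on a given kernel may differ slightly.

```java
/** Counts the local ports available in an ip_local_port_range setting. */
public class PortRange {

    /** Parses "low high" (separated by spaces or a tab) and returns the inclusive port count. */
    public static int count(String range) {
        String[] parts = range.trim().split("\\s+");
        int low = Integer.parseInt(parts[0]);
        int high = Integer.parseInt(parts[1]);
        return high - low + 1;
    }

    public static void main(String[] args) {
        // Sample input in the format of /proc/sys/net/ipv4/ip_local_port_range.
        System.out.println(count("32768\t60999"));
        // The widened range written to sysctl.conf above.
        System.out.println(count("1024 65535"));
    }
}
```

Widening the range to 1024 through 65535 raises the count to 64512 ports per destination, which is where the estimate of roughly 60,000 connections per client machine and server port comes from.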

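3. Possible problems

# If the test cannot reach the target number of connections, check the Linux system log

tail -f /var/log/messages

# Messages that may appear in the log

# (1) "nf_conntrack: table full, dropping packet": the firewall's connection-tracking table is full; raise nf_conntrack_max

echo "net.nf_conntrack_max = 1000000">> /etc/sysctl.conf

# (2) "TCP: too many orphaned sockets": memory is short and allocation is refused; usually the TCP buffer memory is too small, so raise it

# (if the message persists, net.ipv4.tcp_max_orphans may also need raising)

# Check the current values (tcp_mem is in pages; tcp_rmem and tcp_wmem are in bytes): cat /proc/sys/net/ipv4/tcp_mem

echo "net.ipv4.tcp_mem = 786432 2097152 16777216">> /etc/sysctl.conf

echo "net.ipv4.tcp_rmem = 4096 4096 16777216">> /etc/sysctl.conf

echo "net.ipv4.tcp_wmem = 4096 4096 16777216">> /etc/sysctl.conf

sysctl -p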
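The units here are easy to mix up: `tcp_mem` is counted in memory pages, while `tcp_rmem` and `tcp_wmem` are in bytes per socket. The sketch below works through the numbers used above. It assumes 4 KiB pages (usual on x86_64), and the class name `TcpMemoryEstimate` is hypothetical. The per-connection figure is an upper bound that would apply only if every socket filled both buffers at their default size.

```java
/** Converts the sysctl values above into bytes to make their scale visible. */
public class TcpMemoryEstimate {
    static final long PAGE_SIZE = 4096; // assumption: 4 KiB pages

    /** tcp_mem entries are page counts; this converts one entry to bytes. */
    public static long pagesToBytes(long pages) {
        return pages * PAGE_SIZE;
    }

    /** Upper bound on buffer memory if every connection filled one receive and one send buffer. */
    public static long bufferBytes(long connections, long rmemBytes, long wmemBytes) {
        return connections * (rmemBytes + wmemBytes);
    }

    public static void main(String[] args) {
        long gib = 1024L * 1024 * 1024;
        // tcp_mem = 786432 2097152 16777216 (low, pressure, high), in pages
        System.out.println("tcp_mem low:      " + pagesToBytes(786432) / gib + " GiB");
        System.out.println("tcp_mem pressure: " + pagesToBytes(2097152) / gib + " GiB");
        System.out.println("tcp_mem high:     " + pagesToBytes(16777216) / gib + " GiB");
        // One million connections with 4096-byte default receive and send buffers
        System.out.println("buffer bound:     " + bufferBytes(1_000_000, 4096, 4096) + " bytes");
    }
}
```

The three `tcp_mem` thresholds come to 3 GiB, 8 GiB and 64 GiB, and the default 4096-byte buffers bound one million connections at about 8.2 GB. Mostly idle push connections use far less, because buffer memory is consumed only by data actually queued.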

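4. Routine monitoring

# Count the connections on a given port

netstat -nat|grep -i "9001"|wc -l

# Count connections by TCP state

netstat -n | awk '/^tcp/ {++S[$NF]} END {for(a in S) print a, S[a]}'

# Bandwidth usage of the network interfaces

# tcpdump: https://www.cnblogs.com/maifengqiang/p/3863168.html

# The glances tool

yum install -y glances

glances      # view in the console

glances -s   # run in server mode

If you are using your own virtual machine, remember to turn off the firewall:

systemctl stop firewalld.service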