Kubernetes Binary Deployment

| Node | IP address | Services |
| --- | --- | --- |
| master | 192.168.150.10 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| node1 | 192.168.150.100 | kubelet, kube-proxy, docker, flannel, etcd |
| node2 | 192.168.150.200 | kubelet, kube-proxy, docker, flannel, etcd |

目录

一、ETCD集群部署

1.master节点上操作

(1)创建目录及上传文件

(2)给予权限

(3)配置ca证书

(4)生成证书并解压etcd

(5)创建配置文件,命令文件,证书

(6)拷贝证书文件至相应目录

(7)执行etcd.sh启动脚本(进入卡住状态等待其他节点加入,目的是生成启动脚本)

(8)拷贝证书至其他节点

(9)启动脚本拷贝其他节点

2.node节点上操作

(1)在node1节点修改

(2)启动etcd

(3)在node2节点修改

(4)启动etcd

(5)master节点上检查群集状态

二、Docker引擎部署

1.所有node节点部署docker引擎

(1)关闭防火墙策略

(2)部署docker引擎

三、Flannel网络配置

1.master节点上操作

(1)写入分配的子网段到ETCD中,供flannel使用

(2)查看写入的信息

(3)拷贝到所有node节点

2.node1节点上操作

(1)node1节点解压flannel

(2)创建k8s工作目录

(3)定义flannel脚本

(4)开启flannel网络功能

(5)配置docker连接flannel

(6)重启docker服务

(7)查看flannel网络

3.node2节点上操作

(1)node2节点解压flannel

(2)创建k8s工作目录

(3)定义flannel脚本

(4)开启flannel网络功能

(5)配置docker连接flannel

(6)重启docker服务

(7)查看flannel网络

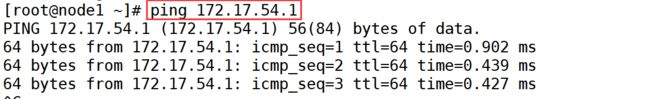

(8)测试ping通对方docker0网卡,证明flannel起到路由作用

(9)测试ping通两个node中的centos:7容器

四、部署master组件

1.master节点上操作

(1)api-server生成证书

(2)生成k8s证书

(3)拷贝证书

(4)解压kubernetes压缩包

(5)复制关键命令文件

(6)随机生成序列号,并定义token.csv

(7)二进制文件,token证书都准备好,开启apiserver

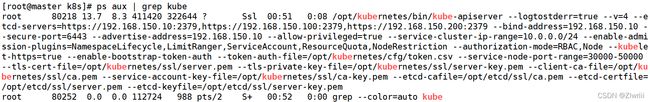

(8)检查进程是否启动成功

(9)查看配置文件

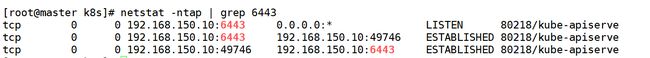

(10)监听的https端口

(11)启动scheduler服务

(12)启动controller-manager

(13)查看master 节点状态

五、Node节点部署

1.master 节点上操作

(1)把 kubelet、kube-proxy拷贝到node1节点上去

(2)把 kubelet、kube-proxy拷贝到node2节点上去

2.node节点上操作

(1)node1节点上操作

(2)node2节点上操作

3.master 节点上操作

(1)拷贝kubeconfig.sh文件进行重命名

(2)获取token信息

(3)配置文件修改为tokenID

(4)设置环境变量(可以写入到/etc/profile中)

(5)生成配置文件

(6)拷贝配置文件到node节点

(7)创建bootstrap角色赋予权限用于连接apiserver请求签名

4.node1节点上操作

(1)启动kubelet服务

(2)检查kubelet服务启动

(3)启动proxy服务

5.node2节点上操作

(1)启动kubelet服务

(2)检查kubelet服务启动

(3)启动proxy服务

6.master节点上操作

(1)检查node1节点的请求

(2)继续查看证书状态

(3)查看群集节点,成功加入node1节点和node2节点

I. etcd Cluster Deployment

1. On the master node

(1) Create the directory and upload the files

[root@master ~]# mkdir k8s

[root@master ~]# cd k8s/

[root@master k8s]# ls

etcd-cert.sh etcd.sh    # the two helper scripts, uploaded beforehand

[root@master k8s]# mkdir etcd-cert

[root@master k8s]# mv etcd-cert.sh etcd-cert

(2) Grant execute permission

[root@master k8s]# cd /usr/local/bin/

[root@master bin]# ls

cfssl cfssl-certinfo cfssljson    # the three cfssl tools, uploaded beforehand

[root@master bin]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

(3) Configure the CA certificates

[root@master bin]# cd /root/k8s/etcd-cert/

[root@master etcd-cert]# vim etcd-cert.sh

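The script writes ca-config.json, ca-csr.json, and server-csr.json through here-documents (cat > ca-config.json, cat > ca-csr.json, cat > server-csr.json); the JSON bodies are not reproduced in this listing.

(4) Generate the certificates and unpack etcd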

[root@master etcd-cert]# sh -x etcd-cert.sh

[root@master etcd-cert]# ls

ca-config.json ca-key.pem etcd-v3.3.10-linux-amd64.tar.gz server.csr server.pem

ca.csr ca.pem flannel-v0.10.0-linux-amd64.tar.gz server-csr.json

ca-csr.json etcd-cert.sh kubernetes-server-linux-amd64.tar.gz server-key.pem
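The listing above (ca.pem, ca-key.pem, server.pem, server-key.pem, plus the three JSON inputs) is what a typical cfssl run leaves behind. As a rough sketch only, and not the actual etcd-cert.sh, a script of that shape might look like the following. The expiry, key size, profile name `www`, name fields, and the `OUT_DIR` variable are all assumptions chosen for illustration; the host IPs are the three machines from the table at the top.

```shell
#!/bin/bash
# Hypothetical sketch, not the original etcd-cert.sh; field values are assumptions.
OUT_DIR=${OUT_DIR:-./etcd-cert-sketch}
mkdir -p "$OUT_DIR"

# Signing policy for the CA (the 10-year expiry is an assumption).
cat > "$OUT_DIR/ca-config.json" <<'EOF'
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF

# Certificate signing request for the CA itself.
cat > "$OUT_DIR/ca-csr.json" <<'EOF'
{
  "CN": "etcd CA",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "Beijing", "ST": "Beijing" } ]
}
EOF

# Signing request for the etcd server certificate; hosts are the three cluster IPs.
cat > "$OUT_DIR/server-csr.json" <<'EOF'
{
  "CN": "etcd",
  "hosts": ["192.168.150.10", "192.168.150.100", "192.168.150.200"],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "Beijing", "ST": "Beijing" } ]
}
EOF

# Call cfssl only when it is installed, so the sketch can be tried anywhere.
if command -v cfssl >/dev/null 2>&1 && command -v cfssljson >/dev/null 2>&1; then
  (
    cd "$OUT_DIR" || exit 1
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
      -profile=www server-csr.json | cfssljson -bare server
  )
else
  echo "cfssl/cfssljson not found; only the JSON inputs were written"
fi
```

Every etcd member has to appear in the `hosts` list, because the same server certificate is later copied to all three machines.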

[root@master etcd-cert]# mv *.tar.gz ../

[root@master etcd-cert]# cd ..

[root@master k8s]# ls

etcd-cert etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

etcd.sh flannel-v0.10.0-linux-amd64.tar.gz

[root@master k8s]# tar zxf etcd-v3.3.10-linux-amd64.tar.gz

[root@master k8s]# ls etcd-v3.3.10-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

(5) Create the config, binary, and certificate directories

[root@master k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

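[root@master k8s]# mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/

(6) Copy the certificates into place

[root@master k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

(7) Run the etcd.sh startup script (it blocks while waiting for the other members to join; the point of running it now is to generate the startup unit)

[root@master k8s]# bash etcd.sh etcd01 192.168.150.10 etcd02=https://192.168.150.100:2380,etcd03=https://192.168.150.200:2380

Open a second terminal and you will see that the etcd process is already running.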

(8) Copy the certificates to the other nodes

[root@master k8s]# scp -r /opt/etcd/ root@192.168.150.100:/opt/

[root@master k8s]# scp -r /opt/etcd/ root@192.168.150.200:/opt/

(9) Copy the startup unit to the other nodes

[root@master k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.150.100:/usr/lib/systemd/system/

root@192.168.150.100's password:

etcd.service 100% 923 719.8KB/s 00:00

[root@master k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.150.200:/usr/lib/systemd/system/

root@192.168.150.200's password:

etcd.service 100% 923 558.6KB/s 00:00
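Steps (8) and (9) repeat the same two copies for each node. A small loop is one way to avoid the repetition. This is only a sketch: it assumes the node IPs from the table at the top, and the `DRY_RUN` switch and function names are hypothetical helpers, not part of the original procedure.

```shell
#!/bin/bash
# Sketch: copy the etcd directory and its unit file to every worker node.
NODES="192.168.150.100 192.168.150.200"
DRY_RUN=${DRY_RUN:-1}   # hypothetical switch: 1 prints the commands, 0 runs them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

distribute_etcd() {
  for ip in $NODES; do
    run scp -r /opt/etcd/ "root@${ip}:/opt/"
    run scp /usr/lib/systemd/system/etcd.service "root@${ip}:/usr/lib/systemd/system/"
  done
}

distribute_etcd
```

With `DRY_RUN=0` the loop still prompts for each node's root password unless SSH keys are in place.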

2. On the worker nodes

(1) Edit the config on node1

[root@node1 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.150.100:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.150.100:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.150.100:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.150.100:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.150.10:2380,etcd02=https://192.168.150.100:2380,etcd03=https://192.168.150.200:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

(2) Start etcd

[root@node1 cfg]# systemctl start etcd.service

[root@node1 cfg]# systemctl status etcd.service

(3) Edit the config on node2

[root@node2 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.150.200:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.150.200:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.150.200:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.150.200:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.150.10:2380,etcd02=https://192.168.150.100:2380,etcd03=https://192.168.150.200:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

(4) Start etcd

[root@node2 cfg]# systemctl start etcd.service

[root@node2 cfg]# systemctl status etcd.service

(5) Check cluster health from the master

[root@master ~]# cd k8s/etcd-cert/

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.150.10:2379,https://192.168.150.100:2379,https://192.168.150.200:2379" cluster-health

member 411b467d9995fab5 is healthy: got healthy result from https://192.168.150.10:2379

member 8bafd67ce9c1821d is healthy: got healthy result from https://192.168.150.200:2379

member bf628b4130b432fb is healthy: got healthy result from https://192.168.150.100:2379

II. Docker Engine Deployment

1. Install Docker Engine on every worker node

(1) Disable the firewall

[root@node ~]# systemctl stop firewalld

[root@node ~]# systemctl disable firewalld

[root@node ~]# setenforce 0

(2) Install Docker Engine

[root@node ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@node ~]# cd /etc/yum.repos.d/

[root@node yum.repos.d]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@node yum.repos.d]# yum install -y docker-ce

[root@node yum.repos.d]# mkdir -p /etc/docker

[root@node yum.repos.d]# cd /etc/docker/

[root@node docker]# vim daemon.json

{

"registry-mirrors": ["https://cvzmdwsp.mirror.aliyuncs.com"]

}
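When the same file has to be created on several nodes, writing it from a here-document can be easier than opening an editor on each one. The sketch below writes the same daemon.json content as above; the function name and the scratch directory are illustrative assumptions, and on a real node the target would be /etc/docker.

```shell
#!/bin/bash
# Sketch: write Docker's daemon.json without opening an editor.
write_daemon_json() {
  local dir=$1
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<'EOF'
{
  "registry-mirrors": ["https://cvzmdwsp.mirror.aliyuncs.com"]
}
EOF
}

# Demonstration against a scratch directory; on a node, pass /etc/docker instead.
SCRATCH_DIR=$(mktemp -d)
write_daemon_json "$SCRATCH_DIR"
cat "$SCRATCH_DIR/daemon.json"
```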

[root@node docker]# vim /etc/sysctl.conf

net.ipv4.ip_forward=1

[root@node docker]# sysctl -p

net.ipv4.ip_forward = 1

[root@node docker]# systemctl daemon-reload

[root@node docker]# systemctl restart docker.service

[root@node docker]# systemctl enable docker.service

[root@node docker]# systemctl status docker.service

III. Flannel Network Configuration

1. On the master node

(1) Write the subnet allocation into etcd for flannel to use

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.150.10:2379,https://192.168.150.100:2379,https://192.168.150.200:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

(2) Read the value back

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.150.10:2379,https://192.168.150.100:2379,https://192.168.150.200:2379" get /coreos.com/network/config

(3) Copy the flannel tarball to every worker node

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.150.100:/root

root@192.168.150.100's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 57.0MB/s 00:00

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.150.200:/root

root@192.168.150.200's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 86.8MB/s 00:00

2. On node1

(1) Unpack flannel on node1

[root@node1 ~]# tar zxf flannel-v0.10.0-linux-amd64.tar.gz

[root@node1 ~]# ls

flanneld mk-docker-opts.sh

(2) Create the Kubernetes working directories

[root@node1 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@node1 ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

(3) Define the flannel script

[root@node1 ~]# vim flannel.sh

#!/bin/bash

# First argument: comma-separated etcd endpoints (defaults to a local etcd)
ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

# Options file read by the flanneld unit
cat <<EOF >/opt/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/opt/etcd/ssl/ca.pem \
-etcd-certfile=/opt/etcd/ssl/server.pem \
-etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF

# systemd unit; \$ keeps FLANNEL_OPTIONS unexpanded until systemd reads it
cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld

(4) Start flannel networking

[root@node1 ~]# bash flannel.sh https://192.168.150.10:2379,https://192.168.150.100:2379,https://192.168.150.200:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

(5) Configure Docker to use flannel

[root@node1 ~]# vim /usr/lib/systemd/system/docker.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

(6) Restart Docker

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl restart docker.service

(7) Inspect the flannel network

[root@node1 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.17.80.1 netmask 255.255.255.0 broadcast 172.17.80.255
        ether 02:42:6e:32:99:35 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
        inet 172.17.80.0 netmask 255.255.255.255 broadcast 0.0.0.0
        inet6 fe80::4ca3:18ff:fef3:799f prefixlen 64 scopeid 0x20<link>
        ether 4e:a3:18:f3:79:9f txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 36 overruns 0 carrier 0 collisions 0

3. On node2

(1) Unpack flannel on node2

[root@node2 ~]# tar zxf flannel-v0.10.0-linux-amd64.tar.gz

[root@node2 ~]# ls

flanneld mk-docker-opts.sh

(2) Create the Kubernetes working directories

[root@node2 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@node2 ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

(3) Define the flannel script

[root@node2 ~]# vim flannel.sh

#!/bin/bash

# First argument: comma-separated etcd endpoints (defaults to a local etcd)
ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

# Options file read by the flanneld unit
cat <<EOF >/opt/kubernetes/cfg/flanneld
FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \
-etcd-cafile=/opt/etcd/ssl/ca.pem \
-etcd-certfile=/opt/etcd/ssl/server.pem \
-etcd-keyfile=/opt/etcd/ssl/server-key.pem"
EOF

# systemd unit; \$ keeps FLANNEL_OPTIONS unexpanded until systemd reads it
cat <<EOF >/usr/lib/systemd/system/flanneld.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/opt/kubernetes/cfg/flanneld
ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable flanneld
systemctl restart flanneld

(4) Start flannel networking

[root@node2 ~]# bash flannel.sh https://192.168.150.10:2379,https://192.168.150.100:2379,https://192.168.150.200:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

(5) Configure Docker to use flannel

[root@node2 ~]# vim /usr/lib/systemd/system/docker.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

(6) Restart Docker

[root@node2 ~]# systemctl daemon-reload

[root@node2 ~]# systemctl restart docker.service

(7) Inspect the flannel network

[root@node2 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.17.54.1 netmask 255.255.255.0 broadcast 172.17.54.255
        ether 02:42:a5:a7:9d:d4 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
        inet 172.17.54.0 netmask 255.255.255.255 broadcast 0.0.0.0
        inet6 fe80::5065:30ff:fe70:d5ef prefixlen 64 scopeid 0x20<link>
        ether 52:65:30:70:d5:ef txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 35 overruns 0 carrier 0 collisions 0

(8) Ping the peer's docker0 interface to confirm that flannel is routing traffic

(9) Ping between the centos:7 containers on the two nodes
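No commands are recorded for steps (8) and (9). Going by the two ifconfig listings, node1's docker0 is 172.17.80.1 and node2's is 172.17.54.1, so the checks might look like the sketch below. The `DRY_RUN` switch and the helper names are hypothetical. Container addresses are assigned at run time, so the container's own address has to be read inside the container rather than assumed.

```shell
#!/bin/bash
# Sketch of the connectivity checks; helper names and DRY_RUN are illustrative.
NODE1_DOCKER0=172.17.80.1   # from node1's ifconfig output
NODE2_DOCKER0=172.17.54.1   # from node2's ifconfig output
DRY_RUN=${DRY_RUN:-1}       # 1 prints the commands, 0 executes them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Step (8): from one node, ping the other node's docker0 address.
ping_peer_bridge() {
  run ping -c 3 "$1"
}

# Step (9): start a centos:7 container; inside it, look up its address
# and ping the container running on the other node.
start_test_container() {
  run docker run -it centos:7 /bin/bash
}

ping_peer_bridge "$NODE2_DOCKER0"   # run on node1
ping_peer_bridge "$NODE1_DOCKER0"   # run on node2
start_test_container                # run on both nodes
```

If the cross-node ping fails while flannel.1 is up, the usual suspects are the firewall rules disabled in section II and `net.ipv4.ip_forward`.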

IV. Deploying the Master Components

1. On the master node

(1) Prepare the API server certificates

[root@master k8s]# ls

master.zip

[root@master k8s]# unzip master.zip

Archive: master.zip

inflating: apiserver.sh

inflating: controller-manager.sh

inflating: scheduler.sh

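The certificate script k8s-cert.sh writes ca-config.json, ca-csr.json, server-csr.json, admin-csr.json, and kube-proxy-csr.json through here-documents; the JSON bodies are not reproduced in this listing.

(2) Generate the Kubernetes certificates

[root@master k8s-cert]# bash k8s-cert.sh

(3) Copy the certificates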

[root@master k8s-cert]# ls *pem

admin-key.pem admin.pem ca-key.pem ca.pem kube-proxy-key.pem kube-proxy.pem server-key.pem server.pem

[root@master k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/

(4) Unpack the Kubernetes tarball

[root@master k8s]# tar zxf kubernetes-server-linux-amd64.tar.gz

(5) Copy the key binaries

[root@master k8s]# cd /root/k8s/kubernetes/server/bin

[root@master bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

(6) Generate a random token and define token.csv

[root@master bin]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

848e190dc41df28f8aac271c1b9b28b4
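As an alternative to pasting the value into the file by hand, the token and token.csv can be produced in one pass. The sketch below reuses the same `head | od | tr` pipeline and the same four CSV fields as this step. The `TOKEN_DIR` variable is an assumption, added so the file can be written somewhere other than /opt/kubernetes/cfg while experimenting.

```shell
#!/bin/bash
# Sketch: generate a bootstrap token and write token.csv in one go.
TOKEN_DIR=${TOKEN_DIR:-$(mktemp -d)}   # assumption: /opt/kubernetes/cfg on the master

# 16 random bytes rendered as 32 hexadecimal characters.
BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')

# Field order: token, user name, user ID, group.
echo "${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\"" \
  > "$TOKEN_DIR/token.csv"

cat "$TOKEN_DIR/token.csv"
```

The same token value has to be used later in the bootstrap kubeconfig, so it is worth keeping it in a variable rather than retyping it.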

[root@master bin]# vim /opt/kubernetes/cfg/token.csv

848e190dc41df28f8aac271c1b9b28b4,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

(7) With the binaries, token, and certificates ready, start the apiserver

[root@master k8s]# bash apiserver.sh 192.168.150.10 https://192.168.150.10:2379,https://192.168.150.100:2379,https://192.168.150.200:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

(8) Check that the process started

[root@master k8s]# ps aux | grep kube

(9) View the configuration file

[root@master k8s]# cat /opt/kubernetes/cfg/kube-apiserver

(10) Check the listening HTTPS port

[root@master k8s]# netstat -ntap | grep 6443

[root@master k8s]# netstat -ntap | grep 8080

(11) Start the scheduler

[root@master k8s]# ./scheduler.sh 127.0.0.1

[root@master k8s]# ps aux | grep kube-scheduler.service

[root@master k8s]# systemctl status kube-scheduler.service

(12) Start the controller-manager

[root@master k8s]# chmod +x controller-manager.sh

[root@master k8s]# ./controller-manager.sh 127.0.0.1

[root@master k8s]# ps aux | grep kube-controller-manager

[root@master k8s]# systemctl status kube-controller-manager

(13) Check the master component status

[root@master k8s]# /opt/kubernetes/bin/kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}
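Reading this table by eye is fine for five rows; in a script, the same check can be reduced to an exit code. The sketch below is one way to do that with awk. The function name is hypothetical and the sample input is copied from the output above; on the master, `kubectl get cs` would be piped in instead.

```shell
#!/bin/bash
# Sketch: succeed only when every component row reports Healthy.
all_components_healthy() {
  # Skip the header row; flag any row whose STATUS column is not "Healthy".
  awk 'NR > 1 && $2 != "Healthy" { bad = 1 } END { exit bad }'
}

# Sample input taken from the listing above.
cat <<'EOF' | all_components_healthy && echo "all components healthy"
NAME STATUS MESSAGE ERROR
scheduler Healthy ok
controller-manager Healthy ok
etcd-0 Healthy {"health":"true"}
etcd-1 Healthy {"health":"true"}
etcd-2 Healthy {"health":"true"}
EOF
```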

V. Worker Node Deployment

1. On the master node

(1) Copy kubelet and kube-proxy to node1

[root@master ~]# cd /root/k8s/kubernetes/server/bin/

[root@master bin]# scp kubelet kube-proxy root@192.168.150.100:/opt/kubernetes/bin/

root@192.168.150.100's password:

kubelet 100% 168MB 104.7MB/s 00:01

kube-proxy 100% 48MB 107.4MB/s 00:00

(2) Copy kubelet and kube-proxy to node2

[root@master bin]# scp kubelet kube-proxy root@192.168.150.200:/opt/kubernetes/bin/

root@192.168.150.200's password:

kubelet 100% 168MB 168.3MB/s 00:01

kube-proxy 100% 48MB 169.4MB/s 00:00

2. On the worker nodes

(1) On node1

[root@node1 ~]# ls

node.zip

[root@node1 ~]# unzip node.zip

Archive: node.zip

inflating: proxy.sh

inflating: kubelet.sh

(2) On node2

[root@node2 ~]# ls

node.zip

[root@node2 ~]# unzip node.zip

Archive: node.zip

inflating: proxy.sh

inflating: kubelet.sh

3. On the master node

(1) Rename the kubeconfig.sh script

[root@master ~]# cd k8s/

[root@master k8s]# mkdir kubeconfig

[root@master k8s]# cd kubeconfig/

[root@master kubeconfig]# ls

kubeconfig.sh    # a script uploaded beforehand

[root@master kubeconfig]# mv kubeconfig.sh kubeconfig

(2) Get the token

[root@master kubeconfig]# cat /opt/kubernetes/cfg/token.csv

848e190dc41df28f8aac271c1b9b28b4,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

(3) Set the token in the script

[root@master kubeconfig]# vim kubeconfig

APISERVER=$1
SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig
export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
--certificate-authority=$SSL_DIR/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig

# Set the client credentials (replace the token with the value obtained in step (2))
kubectl config set-credentials kubelet-bootstrap \
--token=848e190dc41df28f8aac271c1b9b28b4 \
--kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
--cluster=kubernetes \
--user=kubelet-bootstrap \
--kubeconfig=bootstrap.kubeconfig

# Select the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file
kubectl config set-cluster kubernetes \
--certificate-authority=$SSL_DIR/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
--client-certificate=$SSL_DIR/kube-proxy.pem \
--client-key=$SSL_DIR/kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

(4) Set the PATH environment variable (can be made permanent in /etc/profile)

[root@master kubeconfig]# export PATH=$PATH:/opt/kubernetes/bin/

[root@master kubeconfig]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

(5) Generate the kubeconfig files

[root@master kubeconfig]# bash kubeconfig 192.168.150.10 /root/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".

(6) Copy the kubeconfig files to the worker nodes

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.150.100:/opt/kubernetes/cfg/

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.150.200:/opt/kubernetes/cfg/

(7) Create the bootstrap role binding so that kubelets may ask the apiserver to sign their certificates

[root@master kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

4. On node1

(1) Start kubelet

[root@node1 ~]# bash kubelet.sh 192.168.150.100

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

(2) Check that kubelet started

[root@node1 ~]# ps aux | grep kubelet

[root@node1 ~]# systemctl status kubelet

(3) Start kube-proxy

[root@node1 ~]# bash proxy.sh 192.168.150.100

[root@node1 ~]# systemctl status kube-proxy.service

5. On node2

(1) Start kubelet

[root@node2 ~]# bash kubelet.sh 192.168.150.200

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

(2) Check that kubelet started

[root@node2 ~]# ps aux | grep kubelet

[root@node2 ~]# systemctl status kubelet

(3) Start kube-proxy

[root@node2 ~]# bash proxy.sh 192.168.150.200

[root@node2 ~]# systemctl status kube-proxy.service

6. On the master node

(1) Check the node certificate requests

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-LdKoDavc7oXSTfCLckyyPyGYC0Mcl7mRD4BsQwd01M0 13s kubelet-bootstrap Pending

node-csr-jZUYmnIxwHpHymAd6szmUWbIP6MSJfv9Ptz7z_aeK7g 13s kubelet-bootstrap Pending

[root@master kubeconfig]# kubectl certificate approve node-csr-LdKoDavc7oXSTfCLckyyPyGYC0Mcl7mRD4BsQwd01M0

certificatesigningrequest.certificates.k8s.io/node-csr-LdKoDavc7oXSTfCLckyyPyGYC0Mcl7mRD4BsQwd01M0 approved

[root@master kubeconfig]# kubectl certificate approve node-csr-jZUYmnIxwHpHymAd6szmUWbIP6MSJfv9Ptz7z_aeK7g

certificatesigningrequest.certificates.k8s.io/node-csr-jZUYmnIxwHpHymAd6szmUWbIP6MSJfv9Ptz7z_aeK7g approved

(2) Check the certificate status again

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-LdKoDavc7oXSTfCLckyyPyGYC0Mcl7mRD4BsQwd01M0 15m kubelet-bootstrap Approved,Issued

node-csr-jZUYmnIxwHpHymAd6szmUWbIP6MSJfv9Ptz7z_aeK7g 15m kubelet-bootstrap Approved,Issued

(3) List the cluster nodes; node1 and node2 have joined

[root@master kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.150.100 Ready <none> 5m41s v1.12.3

192.168.150.200 Ready <none> 5m17s v1.12.3
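In step (1) each pending request was approved by copying its name by hand. With more than a couple of nodes, the names can be extracted from the `kubectl get csr` table instead. The helper below is a sketch with a hypothetical function name; the demonstration input is the Pending listing from step (1).

```shell
#!/bin/bash
# Sketch: print the names of CSRs whose CONDITION column is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# On the master this could be combined as:
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
# Demonstration with the two requests shown in step (1):
cat <<'EOF' | pending_csrs
NAME AGE REQUESTOR CONDITION
node-csr-LdKoDavc7oXSTfCLckyyPyGYC0Mcl7mRD4BsQwd01M0 13s kubelet-bootstrap Pending
node-csr-jZUYmnIxwHpHymAd6szmUWbIP6MSJfv9Ptz7z_aeK7g 13s kubelet-bootstrap Pending
EOF
```

Approving everything that is pending is only reasonable on a cluster where every requesting node is known and expected, as in this three-machine setup.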