Flink Window Join


Flink's windowed joins come in two kinds: the regular window join (which behaves like an inner join) and the interval join.

Window Join

Definition:

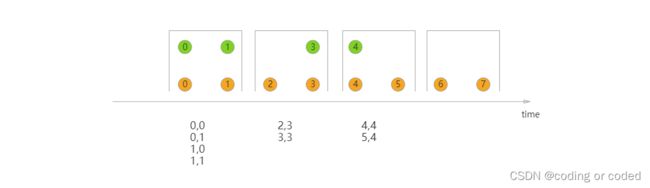

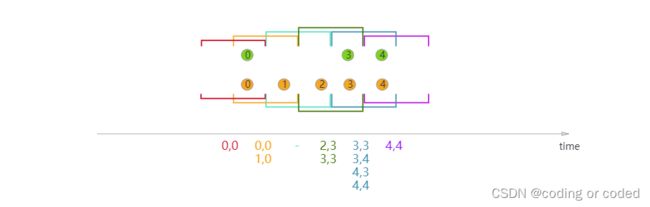

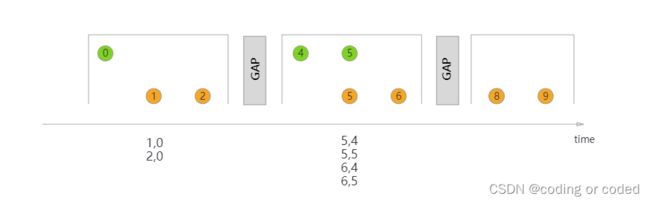

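Flink provides a window join operator: you define a time window, and elements of the two streams that share a common key and fall into the same window are paired with each other. It behaves like an inner join in SQL: every combination of matching elements within a window is emitted, and an element with no partner in its window produces no output.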
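To make the pairing rule concrete, here is a minimal in-memory sketch in plain Java (no Flink dependency) of what a tumbling-window join computes. It assumes windows aligned to timestamp 0 and non-negative timestamps; the names TumblingWindowJoinSketch, Element and Bucket are illustrative only. Flink itself buffers each window's elements in keyed state and fires when the window ends, rather than joining two finished lists.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class TumblingWindowJoinSketch {
    // Illustrative element: join key, event timestamp (ms) and a payload.
    record Element(String key, long ts, String value) {}

    // Identifies one (window, key) group.
    record Bucket(long windowStart, String key) {}

    // Start of the tumbling window containing ts (offset 0, ts >= 0).
    static long windowStart(long ts, long size) {
        return ts - (ts % size);
    }

    // Emits every left/right pair that shares both the key and the window.
    static List<String> join(List<Element> left, List<Element> right, long size) {
        Map<Bucket, List<Element>> rightByBucket = new HashMap<>();
        for (Element r : right) {
            Bucket b = new Bucket(windowStart(r.ts(), size), r.key());
            rightByBucket.computeIfAbsent(b, k -> new ArrayList<>()).add(r);
        }
        List<String> out = new ArrayList<>();
        for (Element l : left) {
            Bucket b = new Bucket(windowStart(l.ts(), size), l.key());
            // No entry means no partner in this window: nothing is emitted (inner-join behaviour).
            for (Element r : rightByBucket.getOrDefault(b, List.of())) {
                out.add(l.value() + "," + r.value());
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Element> orange = List.of(
                new Element("k", 0, "o0"), new Element("k", 1, "o1"), new Element("k", 2, "o2"));
        List<Element> green = List.of(
                new Element("k", 0, "g0"), new Element("k", 3, "g3"), new Element("x", 1, "g1"));
        System.out.println(join(orange, green, 2)); // [o0,g0, o1,g0, o2,g3]
    }
}
```

With a window size of 2, o0 and o1 both land in window [0, 2) and pair with g0, o2 pairs with g3 in window [2, 4), and g1 is never emitted because no orange element has key x.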

API:

```java
leftStream.join(rightStream)
    .where(<KeySelector>)
    .equalTo(<KeySelector>)
    .window(<WindowAssigner>)
    .apply(<JoinFunction>)
```

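- leftStream: the first stream taking part in the join

- rightStream: the other stream taking part in the join

- where: key selector for the first (left) stream

- equalTo: key selector for the second (right) stream

- window: the window assigner; tumbling windows, sliding windows and session windows can all be used, as in the three snippets that follow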

```java
import org.apache.flink.api.common.functions.JoinFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

...

DataStream<Integer> orangeStream = ...
DataStream<Integer> greenStream = ...

// Tumbling event-time windows of 2 ms: each element belongs to exactly one window
orangeStream.join(greenStream)
    .where(<KeySelector>)
    .equalTo(<KeySelector>)
    .window(TumblingEventTimeWindows.of(Time.milliseconds(2)))
    .apply(new JoinFunction<Integer, Integer, String>() {
        @Override
        public String join(Integer first, Integer second) {
            return first + "," + second;
        }
    });
```

```java
import org.apache.flink.api.common.functions.JoinFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.windowing.assigners.SlidingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

...

DataStream<Integer> orangeStream = ...
DataStream<Integer> greenStream = ...

// Sliding event-time windows, 2 ms long, starting every 1 ms.
// Windows overlap, so the same pair is emitted once for every window both elements share.
orangeStream.join(greenStream)
    .where(<KeySelector>)
    .equalTo(<KeySelector>)
    .window(SlidingEventTimeWindows.of(Time.milliseconds(2) /* size */, Time.milliseconds(1) /* slide */))
    .apply(new JoinFunction<Integer, Integer, String>() {
        @Override
        public String join(Integer first, Integer second) {
            return first + "," + second;
        }
    });
```

```java
import org.apache.flink.api.common.functions.JoinFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.windowing.assigners.EventTimeSessionWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

...

DataStream<Integer> orangeStream = ...
DataStream<Integer> greenStream = ...

// Session windows per key that close after a 1 ms gap without elements
orangeStream.join(greenStream)
    .where(<KeySelector>)
    .equalTo(<KeySelector>)
    .window(EventTimeSessionWindows.withGap(Time.milliseconds(1)))
    .apply(new JoinFunction<Integer, Integer, String>() {
        @Override
        public String join(Integer first, Integer second) {
            return first + "," + second;
        }
    });
```

- apply: the window function. A window join only offers .apply(), in which you implement either a JoinFunction or a FlatJoinFunction that produces the join result.

- JoinFunction

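```java
/**
 * IN1: element type of the first stream
 * IN2: element type of the second stream
 * OUT: type of the joined result
 */
public interface JoinFunction<IN1, IN2, OUT> extends Function, Serializable {
    // Called once per matching pair; returns exactly one result
    OUT join(IN1 first, IN2 second) throws Exception;
}
```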

- FlatJoinFunction

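```java
/**
 * IN1: element type of the first stream
 * IN2: element type of the second stream
 * OUT: type of the join results, emitted through a Collector
 */
public interface FlatJoinFunction<IN1, IN2, OUT> extends Function, Serializable {
    // Called once per matching pair; may emit zero, one or several results
    void join(IN1 first, IN2 second, Collector<OUT> out) throws Exception;
}
```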
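The practical difference between the two is the number of results per matching pair: JoinFunction returns exactly one, while FlatJoinFunction can emit none, one or many through its Collector (useful for filtering inside the join). The plain-Java sketch below illustrates that contract with two hypothetical interfaces, SimpleJoin and SimpleFlatJoin, that only mimic the shape of Flink's interfaces and are not part of Flink; a Consumer stands in for the Collector.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

public class JoinVsFlatJoinSketch {
    // Hypothetical stand-ins for JoinFunction and FlatJoinFunction.
    interface SimpleJoin<A, B, O> { O join(A first, B second); }
    interface SimpleFlatJoin<A, B, O> { void join(A first, B second, Consumer<O> out); }

    // Exactly one result per matching pair.
    static final SimpleJoin<Integer, Integer, String> CONCAT = (a, b) -> a + "," + b;

    // Zero or more results per pair: pairs with an odd sum are dropped.
    static final SimpleFlatJoin<Integer, Integer, String> EVEN_SUMS_ONLY = (a, b, out) -> {
        if ((a + b) % 2 == 0) {
            out.accept(a + "+" + b + "=" + (a + b));
        }
    };

    static List<String> joinAll(int[][] pairs) {
        List<String> out = new ArrayList<>();
        for (int[] p : pairs) {
            out.add(CONCAT.join(p[0], p[1]));
        }
        return out;
    }

    static List<String> flatJoinAll(int[][] pairs) {
        List<String> out = new ArrayList<>();
        for (int[] p : pairs) {
            EVEN_SUMS_ONLY.join(p[0], p[1], out::add);
        }
        return out;
    }

    public static void main(String[] args) {
        int[][] pairs = {{1, 1}, {1, 2}, {2, 4}};
        System.out.println(joinAll(pairs));     // [1,1, 1,2, 2,4]
        System.out.println(flatJoinAll(pairs)); // [1+1=2, 2+4=6]
    }
}
```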

Example:

```java
package com.ali.flink.demo.driver;

import com.ali.flink.demo.utils.DataGeneratorImpl002;
import com.ali.flink.demo.utils.FlinkEnv;
import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import org.apache.flink.api.common.functions.JoinFunction;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.datagen.DataGeneratorSource;
import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

/**
 * Window join: two generated click streams joined on userName + clickUrl
 * inside 20-second tumbling processing-time windows.
 */
public class FlinkJoinFunctionDemo01 {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = FlinkEnv.FlinkDataStreamRunEnv();
        env.setParallelism(1);

        // First source: JSON strings from the author's data generator, mapped to Event
        DataGeneratorSource<String> dataGeneratorSource1 = new DataGeneratorSource<>(new DataGeneratorImpl002());
        DataStream<String> sourceStream1 = env.addSource(dataGeneratorSource1).returns(String.class);
        // sourceStream1.print("source");
        SingleOutputStreamOperator<Event> mapStream1 = sourceStream1
                .map(new MapFunction<String, Event>() {
                    @Override
                    public Event map(String s) throws Exception {
                        JSONObject jsonObject = JSON.parseObject(s);
                        String username = jsonObject.getString("username");
                        String eventtime = jsonObject.getString("eventtime");
                        String click_url = jsonObject.getString("click_url");
                        return new Event(username, click_url, eventtime);
                    }
                });
        mapStream1.print("map source_1");

        // Second source: same generator, independent data
        DataGeneratorSource<String> dataGeneratorSource2 = new DataGeneratorSource<>(new DataGeneratorImpl002());
        DataStream<String> sourceStream2 = env.addSource(dataGeneratorSource2).returns(String.class);
        // sourceStream2.print("source");
        SingleOutputStreamOperator<Event> mapStream2 = sourceStream2
                .map(new MapFunction<String, Event>() {
                    @Override
                    public Event map(String s) throws Exception {
                        JSONObject jsonObject = JSON.parseObject(s);
                        String username = jsonObject.getString("username");
                        String eventtime = jsonObject.getString("eventtime");
                        String click_url = jsonObject.getString("click_url");
                        return new Event(username, click_url, eventtime);
                    }
                });
        mapStream2.print("map source_2");

        // Join on the composite key "userName-clickUrl"; every pair with equal keys
        // in the same 20 s window is passed to the JoinFunction
        mapStream1.join(mapStream2)
                .where(new KeySelector<Event, String>() {
                    @Override
                    public String getKey(Event event) throws Exception {
                        return event.userName + '-' + event.clickUrl;
                    }
                })
                .equalTo(new KeySelector<Event, String>() {
                    @Override
                    public String getKey(Event event) throws Exception {
                        return event.userName + '-' + event.clickUrl;
                    }
                })
                .window(TumblingProcessingTimeWindows.of(Time.seconds(20)))
                .apply(new JoinFunction<Event, Event, String>() {
                    @Override
                    public String join(Event event, Event event2) throws Exception {
                        return event.toString() + "====>" + event2.toString();
                    }
                }).print("join");

        env.execute("tumble window test");
    }

    // Not a Flink POJO (no no-arg constructor), so Flink serializes it as a generic (Kryo) type
    public static class Event {
        private String userName;
        private String clickUrl;
        private String eventTime;

        public Event(String userName, String clickUrl, String eventTime) {
            this.userName = userName;
            this.clickUrl = clickUrl;
            this.eventTime = eventTime;
        }

        @Override
        public String toString() {
            return "Event{" +
                    "userName='" + userName + '\'' +
                    ", clickUrl='" + clickUrl + '\'' +
                    ", eventTime='" + eventTime + '\'' +
                    '}';
        }
    }
}
```

Result:

```text
map source_1> Event{userName='bbb', clickUrl='url1', eventTime='2022-07-07 16:14:15'}
map source_2> Event{userName='ccc', clickUrl='url2', eventTime='2022-07-07 16:14:16'}
map source_1> Event{userName='bbb', clickUrl='url2', eventTime='2022-07-07 16:14:23'}
map source_2> Event{userName='ccc', clickUrl='url2', eventTime='2022-07-07 16:14:23'}
map source_1> Event{userName='ccc', clickUrl='url2', eventTime='2022-07-07 16:14:24'}
map source_1> Event{userName='aaa', clickUrl='url2', eventTime='2022-07-07 16:14:30'}
map source_2> Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 16:14:32'}
map source_1> Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 16:14:32'}
join> Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 16:14:32'}====>Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 16:14:32'}
join> Event{userName='ccc', clickUrl='url2', eventTime='2022-07-07 16:14:24'}====>Event{userName='ccc', clickUrl='url2', eventTime='2022-07-07 16:14:23'}
```
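Why is there only one ccc/url2 pair, although source_2 produced two ccc/url2 records? Flink aligns tumbling windows to the epoch, and since 20 s divides a minute and time-zone offsets are whole minutes, the 20 s windows start at :00, :20 and :40 on the local clock. The plain-Java check below computes the window each ccc/url2 record fell into. It assumes the generator's eventtime field is close to the wall-clock time at which the record was processed (the output suggests this, but the source does not state it), because this example uses processing-time windows; the class name is illustrative.

```java
import java.time.LocalTime;
import java.time.format.DateTimeFormatter;

public class TumblingWindowAssignmentCheck {
    private static final DateTimeFormatter HMS = DateTimeFormatter.ofPattern("HH:mm:ss");

    // Start of the tumbling window (size in seconds) containing time t,
    // computed as s - s % size on seconds since midnight.
    static LocalTime windowStart(LocalTime t, int sizeSeconds) {
        int s = t.toSecondOfDay();
        return LocalTime.ofSecondOfDay(s - s % sizeSeconds);
    }

    public static void main(String[] args) {
        // ccc/url2 records: source_2 at 16:14:16 and 16:14:23, source_1 at 16:14:24
        for (String t : new String[] {"16:14:16", "16:14:23", "16:14:24"}) {
            LocalTime start = windowStart(LocalTime.parse(t), 20);
            System.out.println(t + " -> [" + start.format(HMS) + ", " + start.plusSeconds(20).format(HMS) + ")");
        }
    }
}
```

The 16:14:16 record lands in [16:14:00, 16:14:20) while the other two share [16:14:20, 16:14:40), so only the 16:14:24/16:14:23 pair is emitted, matching the second join line.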

Interval Join

Definition:

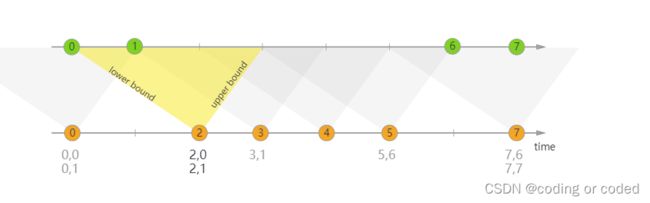

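An interval join joins elements of two streams (call them A and B) that share a common key, where the timestamp of the element from B lies within a time interval relative to the timestamp of the element from A.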

That is, b.timestamp ∈ [a.timestamp + lowerBound, a.timestamp + upperBound], or equivalently a.timestamp + lowerBound <= b.timestamp <= a.timestamp + upperBound. Both bounds are inclusive by default.
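A naive plain-Java sketch of this matching rule, using the bounds from the API snippet (between(-2 ms, +1 ms)). Each result also carries the larger of the two input timestamps, which is the timestamp Flink assigns to interval-join output. The nested loop over two finished lists, the Element record and the millisecond values are assumptions for illustration; Flink instead buffers each side in keyed state and evicts entries once the watermark rules out further matches.

```java
import java.util.ArrayList;
import java.util.List;

public class IntervalJoinSketch {
    // Illustrative keyed element with an event timestamp (ms).
    record Element(String key, long ts) {}

    // b matches a iff a.ts + lowerBound <= b.ts <= a.ts + upperBound (both bounds inclusive).
    static boolean inInterval(long aTs, long bTs, long lowerBound, long upperBound) {
        return aTs + lowerBound <= bTs && bTs <= aTs + upperBound;
    }

    // Naive nested-loop interval join; "l,r@t" where t = max(l, r) is the result timestamp.
    static List<String> join(List<Element> a, List<Element> b, long lower, long upper) {
        List<String> out = new ArrayList<>();
        for (Element x : a) {
            for (Element y : b) {
                if (x.key().equals(y.key()) && inInterval(x.ts(), y.ts(), lower, upper)) {
                    out.add(x.ts() + "," + y.ts() + "@" + Math.max(x.ts(), y.ts()));
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Element> orange = List.of(new Element("k", 0), new Element("k", 5));
        List<Element> green = List.of(
                new Element("k", 1), new Element("k", 2), new Element("k", 3), new Element("k", 6));
        System.out.println(join(orange, green, -2, 1)); // [0,1@1, 5,3@5, 5,6@6]
    }
}
```

The orange element at 0 accepts green elements in [-2, 1], so only 1 matches; the one at 5 accepts [3, 6], so 3 and 6 match.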

API:

```java
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.functions.co.ProcessJoinFunction;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

...

DataStream<Integer> orangeStream = ...
DataStream<Integer> greenStream = ...

orangeStream
    .keyBy(<KeySelector>)
    .intervalJoin(greenStream.keyBy(<KeySelector>))
    // a green element joins if its timestamp is in [orange.ts - 2 ms, orange.ts + 1 ms]
    .between(Time.milliseconds(-2), Time.milliseconds(1))
    .process(new ProcessJoinFunction<Integer, Integer, String>() {
        @Override
        public void processElement(Integer left, Integer right, Context ctx, Collector<String> out) {
            out.collect(left + "," + right);
        }
    });
```

Example:

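An interval join only works in event time: every element needs a timestamp, and watermarks let the operator drop late data and clean up its buffered state. The example therefore assigns a WatermarkStrategy to both streams.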
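Both streams in the example use WatermarkStrategy.forBoundedOutOfOrderness(Duration.ofSeconds(2)), so it helps to see what that strategy computes. The following is a simplified plain-Java re-implementation for illustration (the class name is made up; it is not Flink's class): it tracks the largest timestamp seen and reports a watermark that trails it by the allowed out-of-orderness minus 1 ms, so an event up to 2 s behind the newest one is still on time.

```java
public class BoundedOutOfOrdernessSketch {
    private final long outOfOrdernessMillis;
    private long maxTimestamp;

    BoundedOutOfOrdernessSketch(long outOfOrdernessMillis) {
        this.outOfOrdernessMillis = outOfOrdernessMillis;
        // Chosen so that the watermark starts at Long.MIN_VALUE without underflowing
        this.maxTimestamp = Long.MIN_VALUE + outOfOrdernessMillis + 1;
    }

    // Called per event: remember the largest timestamp seen so far.
    void onEvent(long timestamp) {
        maxTimestamp = Math.max(maxTimestamp, timestamp);
    }

    // Called periodically: the watermark trails the max timestamp by the allowed disorder.
    long currentWatermark() {
        return maxTimestamp - outOfOrdernessMillis - 1;
    }

    public static void main(String[] args) {
        BoundedOutOfOrdernessSketch wm = new BoundedOutOfOrdernessSketch(2_000);
        for (long ts : new long[] {10_000, 12_000, 11_000}) {
            wm.onEvent(ts);
            System.out.println("event " + ts + " -> watermark " + wm.currentWatermark());
        }
    }
}
```

The out-of-order event at 11 000 ms does not move the watermark back (it stays at 9 999 ms), and because 11 000 is above the watermark it is not treated as late.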

```java
package com.ali.flink.demo.driver;

import com.ali.flink.demo.utils.DataGeneratorImpl002;
import com.ali.flink.demo.utils.FlinkEnv;
import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import org.apache.flink.api.common.eventtime.SerializableTimestampAssigner;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.co.ProcessJoinFunction;
import org.apache.flink.streaming.api.functions.source.datagen.DataGeneratorSource;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.time.Duration;
import java.util.Date;

/**
 * Interval join: two generated click streams joined on userName + clickUrl
 * when their event times are at most 5 seconds apart.
 */
public class FlinkIntervalJoinFunctionDemo01 {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = FlinkEnv.FlinkDataStreamRunEnv();
        env.setParallelism(1);

        DataGeneratorSource<String> dataGeneratorSource1 = new DataGeneratorSource<>(new DataGeneratorImpl002());
        DataStream<String> sourceStream1 = env.addSource(dataGeneratorSource1).returns(String.class);
        // sourceStream1.print("source");
        SingleOutputStreamOperator<Event> mapStream1 = sourceStream1
                .map(new MapFunction<String, Event>() {
                    @Override
                    public Event map(String s) throws Exception {
                        JSONObject jsonObject = JSON.parseObject(s);
                        String username = jsonObject.getString("username");
                        String eventtime = jsonObject.getString("eventtime");
                        String click_url = jsonObject.getString("click_url");
                        return new Event(username, click_url, eventtime);
                    }
                })
                // Event time comes from the eventTime field; tolerate up to 2 s of disorder
                .assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ofSeconds(2))
                        .withTimestampAssigner(new SerializableTimestampAssigner<Event>() {
                            @Override
                            public long extractTimestamp(Event event, long recordTimestamp) {
                                SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
                                try {
                                    return simpleDateFormat.parse(event.eventTime).getTime();
                                } catch (ParseException e) {
                                    // Fail fast instead of dereferencing a null Date
                                    throw new IllegalArgumentException("Unparseable eventTime: " + event.eventTime, e);
                                }
                            }
                        }));
        mapStream1.print("map source_1");

        DataGeneratorSource<String> dataGeneratorSource2 = new DataGeneratorSource<>(new DataGeneratorImpl002());
        DataStream<String> sourceStream2 = env.addSource(dataGeneratorSource2).returns(String.class);
        // sourceStream2.print("source");
        SingleOutputStreamOperator<Event> mapStream2 = sourceStream2
                .map(new MapFunction<String, Event>() {
                    @Override
                    public Event map(String s) throws Exception {
                        JSONObject jsonObject = JSON.parseObject(s);
                        String username = jsonObject.getString("username");
                        String eventtime = jsonObject.getString("eventtime");
                        String click_url = jsonObject.getString("click_url");
                        return new Event(username, click_url, eventtime);
                    }
                })
                .assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forBoundedOutOfOrderness(Duration.ofSeconds(2))
                        .withTimestampAssigner(new SerializableTimestampAssigner<Event>() {
                            @Override
                            public long extractTimestamp(Event event, long recordTimestamp) {
                                SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
                                try {
                                    return simpleDateFormat.parse(event.eventTime).getTime();
                                } catch (ParseException e) {
                                    throw new IllegalArgumentException("Unparseable eventTime: " + event.eventTime, e);
                                }
                            }
                        }));
        mapStream2.print("map source_2");

        mapStream1
                .keyBy(new KeySelector<Event, String>() {
                    @Override
                    public String getKey(Event event) throws Exception {
                        return event.userName + '-' + event.clickUrl;
                    }
                })
                .intervalJoin(mapStream2.keyBy(new KeySelector<Event, String>() {
                    @Override
                    public String getKey(Event event) throws Exception {
                        return event.userName + '-' + event.clickUrl;
                    }
                }))
                // A right element joins if right.ts is in [left.ts - 5 s, left.ts + 5 s]
                .between(Time.seconds(-5), Time.seconds(5))
                .process(new ProcessJoinFunction<Event, Event, String>() {
                    @Override
                    public void processElement(Event event, Event event2, Context context, Collector<String> collector) throws Exception {
                        SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
                        String left_time = simpleDateFormat.format(new Date(context.getLeftTimestamp()));
                        String right_time = simpleDateFormat.format(new Date(context.getRightTimestamp()));
                        // Timestamp of the joined record: the larger of the two input timestamps
                        String timestamp = simpleDateFormat.format(new Date(context.getTimestamp()));
                        collector.collect("left_time : " + left_time + "-- right_time : " + right_time + "-- timestamp : " + timestamp + " || " + event.toString() + "====>" + event2.toString());
                    }
                }).print("Interval Join");

        env.execute("interval join test");
    }

    public static class Event {
        private String userName;
        private String clickUrl;
        private String eventTime;

        public Event(String userName, String clickUrl, String eventTime) {
            this.userName = userName;
            this.clickUrl = clickUrl;
            this.eventTime = eventTime;
        }

        @Override
        public String toString() {
            return "Event{" +
                    "userName='" + userName + '\'' +
                    ", clickUrl='" + clickUrl + '\'' +
                    ", eventTime='" + eventTime + '\'' +
                    '}';
        }
    }
}
```

Result:

```text
map source_2> Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 17:11:29'}
map source_1> Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 17:11:32'}
Interval Join> left_time : 2022-07-07 17:11:32-- right_time : 2022-07-07 17:11:29-- timestamp : 2022-07-07 17:11:32 || Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 17:11:32'}====>Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 17:11:29'}
map source_2> Event{userName='aaa', clickUrl='url1', eventTime='2022-07-07 17:11:38'}
map source_1> Event{userName='ccc', clickUrl='url2', eventTime='2022-07-07 17:11:40'}
map source_2> Event{userName='ccc', clickUrl='url1', eventTime='2022-07-07 17:11:43'}
map source_1> Event{userName='ccc', clickUrl='url2', eventTime='2022-07-07 17:11:45'}
map source_2> Event{userName='bbb', clickUrl='url2', eventTime='2022-07-07 17:11:52'}
map source_1> Event{userName='bbb', clickUrl='url2', eventTime='2022-07-07 17:11:53'}
Interval Join> left_time : 2022-07-07 17:11:53-- right_time : 2022-07-07 17:11:52-- timestamp : 2022-07-07 17:11:53 || Event{userName='bbb', clickUrl='url2', eventTime='2022-07-07 17:11:53'}====>Event{userName='bbb', clickUrl='url2', eventTime='2022-07-07 17:11:52'}
```
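The two joined lines can be checked by hand. The illustrative helper below (plain Java) computes right minus left in seconds for the same-key records printed above and tests it against the [-5 s, +5 s] bound; it only looks at the HH:mm:ss part, which is enough because all records are from the same day. It also shows why source_2's ccc/url1 record at 17:11:43 found no partner: it is 11 s after source_1's 17:11:32 record. Note too that the reported timestamp 17:11:32 in the first joined line is the larger of 17:11:32 and 17:11:29.

```java
import java.time.Duration;
import java.time.LocalTime;

public class IntervalJoinResultCheck {
    // True if right lies in [left + lower, left + upper] seconds (both inclusive), as for between(-5 s, 5 s).
    static boolean joins(String left, String right, long lowerSec, long upperSec) {
        long diff = Duration.between(LocalTime.parse(left), LocalTime.parse(right)).getSeconds();
        return lowerSec <= diff && diff <= upperSec;
    }

    public static void main(String[] args) {
        // (source_1 time, source_2 time) for records with the same key
        String[][] pairs = {
            {"17:11:32", "17:11:29"}, // ccc-url1
            {"17:11:32", "17:11:43"}, // ccc-url1
            {"17:11:53", "17:11:52"}, // bbb-url2
        };
        for (String[] p : pairs) {
            System.out.println(p[0] + " / " + p[1] + " -> " + joins(p[0], p[1], -5, 5));
        }
    }
}
```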