UE4: Getting the Color Value at a Point in 3D Space

How it works:

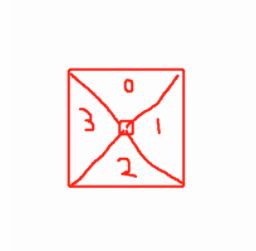

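The full 360° around the sensor is split into four equal 90° sectors. Each sector is rendered by its own SceneCaptureComponent2D with a 90° FOV into a separate RenderTarget2D. The color of a 3D point is then read from the RenderTarget2D at the pixel that the point maps to under the 3D-to-2D projection.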
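Before projecting, the code has to decide which of the four captures sees a given point. A minimal sketch of that choice, assuming the point's offset is already expressed in the lidar root's local frame and that capture `j` is yawed `90 * j` degrees (as in the setup code further down); `PickCaptureIndex` is a hypothetical helper, not part of the original project:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper (not part of the original project).
// LocalX/LocalY: the point's offset from the lidar origin, expressed in the
// lidar root's local frame (Unreal convention: X forward, Y right).
// Capture j is yawed 90 * j degrees with a 90° FOV, so it covers yaw
// angles in [90 * j - 45, 90 * j + 45).
int PickCaptureIndex(double LocalX, double LocalY)
{
    assert(!(LocalX == 0.0 && LocalY == 0.0)); // direction is undefined at the origin
    const double Pi = 3.14159265358979323846;
    // Yaw in degrees, in (-180, 180]; positive yaw turns from +X toward +Y, as with FRotator.
    const double YawDeg = std::atan2(LocalY, LocalX) * 180.0 / Pi;
    // Shift by 45° so each capture's wedge starts at a multiple of 90°, then wrap into [0, 360).
    const double Shifted = std::fmod(YawDeg + 45.0 + 360.0, 360.0);
    return static_cast<int>(Shifted / 90.0) % 4;
}
```

For example, a point straight ahead maps to capture 0 and a point on the +Y side maps to capture 1. This only handles the horizontal split; whether the point also falls inside the capture's vertical FOV is decided by the projection step (the resulting pixel must lie inside the image).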

Applications: point cloud data, color sampling.
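Once a render target's pixels are on the CPU, color sampling reduces to an indexed lookup. A minimal sketch, assuming the pixels have already been read back into a row-major array (for example via `FRenderTarget::ReadPixels` into a `TArray<FColor>`); `Color8` and `SampleNearest` are hypothetical stand-ins, not engine types:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for Unreal's FColor (8 bits per channel).
struct Color8
{
    std::uint8_t R, G, B, A;
};

// Hypothetical helper: fetch the color at a floating-point pixel location from a
// render target read back into a row-major buffer (index = Y * Width + X).
// Returns false if the location lies outside the image.
bool SampleNearest(const std::vector<Color8>& Pixels, int Width, int Height,
                   double PixelX, double PixelY, Color8& OutColor)
{
    assert(Width > 0 && Height > 0);
    assert(Pixels.size() == static_cast<std::size_t>(Width) * Height);
    // Pixel (X, Y) covers [X, X + 1) x [Y, Y + 1), so floor selects the pixel containing the point.
    const int X = static_cast<int>(std::floor(PixelX));
    const int Y = static_cast<int>(std::floor(PixelY));
    if (X < 0 || Y < 0 || X >= Width || Y >= Height)
    {
        return false;
    }
    OutColor = Pixels[static_cast<std::size_t>(Y) * Width + X];
    return true;
}
```

Reading each render target back once and then indexing into the buffer is usually much cheaper than reading single pixels repeatedly, since every readback has to wait for the GPU.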

Mapping a 3D point to a 2D RenderTarget2D pixel:
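The mapping is a standard perspective projection. As a rough, engine-free sketch under simplifying assumptions (the capture is rotated only in yaw, and the same aspect-ratio rule as the engine code decides which axis gets the full FOV), the hypothetical `ProjectToPixel` below reproduces the math of `CaptureComponent2D_Project` in plain C++ so it can be checked outside Unreal:

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double X, Y, Z; };  // Unreal convention: X forward, Y right, Z up
struct Pixel { double X, Y; };

// Hypothetical, simplified re-implementation of CaptureComponent2D_Project.
// Assumes the capture at CamPos is rotated only in yaw (CamYawDeg, degrees).
// Returns false if the point is behind the capture.
bool ProjectToPixel(const Vec3& Point, const Vec3& CamPos, double CamYawDeg,
                    double FovDeg, int Width, int Height, Pixel& Out)
{
    assert(Width > 0 && Height > 0 && FovDeg > 0.0 && FovDeg < 180.0);
    const double Pi = 3.14159265358979323846;
    const double Yaw = CamYawDeg * Pi / 180.0;

    // World -> view space: translate to the camera, then undo its yaw.
    const double Dx = Point.X - CamPos.X;
    const double Dy = Point.Y - CamPos.Y;
    const double Forward = Dx * std::cos(Yaw) + Dy * std::sin(Yaw);  // depth
    const double Right = -Dx * std::sin(Yaw) + Dy * std::cos(Yaw);
    const double Up = Point.Z - CamPos.Z;
    if (Forward <= 0.0)
    {
        return false;  // plays the role of the ScreenPoint.W > 0 check
    }

    // Same multipliers as XAxisMultiplier / YAxisMultiplier in the engine code.
    double MulX = 1.0;
    double MulY = 1.0;
    if (Width > Height)
    {
        MulY = static_cast<double>(Width) / Height;
    }
    else
    {
        MulX = static_cast<double>(Height) / Width;
    }

    // Dividing by depth gives normalized device coordinates in [-1, 1] for visible points.
    const double TanHalfFov = std::tan(FovDeg * Pi / 360.0);
    const double NdcX = Right * MulX / (Forward * TanHalfFov);
    const double NdcY = Up * MulY / (Forward * TanHalfFov);

    // NDC -> pixels; pixel Y grows downward, hence the minus sign.
    Out.X = (0.5 + 0.5 * NdcX) * Width;
    Out.Y = (0.5 - 0.5 * NdcY) * Height;
    return true;
}
```

With a 90° FOV and a 256×256 target, a point straight ahead lands at about (128, 128). Note that neither this sketch nor the engine function checks whether the pixel lies inside [0, Width) × [0, Height); the caller has to do that before sampling.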

Code source (GitHub):

VictoryBPFunctionLibrary.h

https://github.com/UngureanuAdrian/RPGQuantumGate/blob/f1af44ab32cdbde8a90cf7bad170995693898be3/Plugins/VictoryPlugin25/Source/VictoryBPLibrary/Public/VictoryBPFunctionLibrary.h

```cpp
// Fill out your copyright notice in the Description page of Project Settings.

#include "UtilLibrary.h"
#include "Engine/TextureRenderTarget2D.h"
#include "Engine/SceneCapture2D.h"
#include "Components/SceneCaptureComponent2D.h"
#include "Engine.h"
#include "Engine/World.h"
#include "Kismet/KismetSystemLibrary.h"
#include "MyProject.h"
#include "RHI.h" // declares GProjectionSignY; the RHI module must be added to the project's dependencies

// Projects a world-space Location onto the capture's render target.
// Returns false if there is no render target or the point is behind the capture.
bool UUtilLibrary::CaptureComponent2D_Project(class USceneCaptureComponent2D* Target, FVector Location, FVector2D& OutPixelLocation)
{
    if ((Target == nullptr) || (Target->TextureTarget == nullptr))
    {
        return false;
    }

    // View matrix: inverse of the capture component's world transform.
    const FTransform& Transform = Target->GetComponentToWorld();
    FMatrix ViewMatrix = Transform.ToInverseMatrixWithScale();

    // Swap axes (new X = old Y, new Y = old Z, new Z = old X) so that Unreal's
    // X-forward, Z-up frame becomes the X-right, Y-up, Z-depth frame the projection expects.
    ViewMatrix = ViewMatrix * FMatrix(
        FPlane(0, 0, 1, 0),
        FPlane(1, 0, 0, 0),
        FPlane(0, 1, 0, 0),
        FPlane(0, 0, 0, 1));

    // Half of the FOV, in radians.
    const float FOV = Target->FOVAngle * (float)PI / 360.0f;

    FIntPoint CaptureSize(Target->TextureTarget->GetSurfaceWidth(), Target->TextureTarget->GetSurfaceHeight());

    float XAxisMultiplier;
    float YAxisMultiplier;

    if (CaptureSize.X > CaptureSize.Y)
    {
        // if the viewport is wider than it is tall
        XAxisMultiplier = 1.0f;
        YAxisMultiplier = CaptureSize.X / (float)CaptureSize.Y;
    }
    else
    {
        // if the viewport is taller than it is wide
        XAxisMultiplier = CaptureSize.Y / (float)CaptureSize.X;
        YAxisMultiplier = 1.0f;
    }

    // Near and far planes are equal: reversed-Z projection with an infinite far plane.
    FMatrix ProjectionMatrix = FReversedZPerspectiveMatrix(
        FOV,
        FOV,
        XAxisMultiplier,
        YAxisMultiplier,
        GNearClippingPlane,
        GNearClippingPlane
    );

    FMatrix ViewProjectionMatrix = ViewMatrix * ProjectionMatrix;
    FVector4 ScreenPoint = ViewProjectionMatrix.TransformFVector4(FVector4(Location, 1));

    // W is the view-space depth; W <= 0 means the point is behind the capture.
    if (ScreenPoint.W > 0.0f)
    {
        float InvW = 1.0f / ScreenPoint.W;
        float Y = (GProjectionSignY > 0.0f) ? ScreenPoint.Y : 1.0f - ScreenPoint.Y; // GProjectionSignY requires the RHI module

        // Map normalized device coordinates to pixel coordinates.
        FIntRect ViewRect = FIntRect(0, 0, CaptureSize.X, CaptureSize.Y);
        OutPixelLocation = FVector2D(
            ViewRect.Min.X + (0.5f + ScreenPoint.X * 0.5f * InvW) * ViewRect.Width(),
            ViewRect.Min.Y + (0.5f - Y * 0.5f * InvW) * ViewRect.Height()
        );
        return true;
    }

    return false;
}
```

Implementation:

Create four identical RenderTarget2Ds.

Instantiate four SceneCaptureComponent2Ds and pass the four RenderTarget2Ds to them:

bool ULidarBase::InitLidarComponent_YH(int32 LidarLineNum, USceneComponent* Parent, TArray RenderTargets)

{

if (LidarLineNum<=0||Parent == nullptr|| RenderTargets.Num()<4)

{

return false;

}

USceneComponent* LidarRoot = NewObject(GetOwner());

LidarRoot->AttachToComponent(Parent, FAttachmentTransformRules::KeepRelativeTransform);

LidarRoot->RegisterComponent();

ThisLidarRoot = LidarRoot;

for (int32 j = 0; j < 4; j++)

{

USceneCaptureComponent2D* lidarCamera = NewObject(GetOwner());

lidarCamera->AttachToComponent(Parent, FAttachmentTransformRules::KeepRelativeTransform);

lidarCamera->RegisterComponent();

lidarCamera->TextureTarget = RenderTargets[j];

lidarCamera->CaptureSource = ESceneCaptureSource::SCS_FinalColorLDR;

lidarCamera->PrimitiveRenderMode = ESceneCapturePrimitiveRenderMode::PRM_RenderScenePrimitives;

lidarCamera->ProjectionType = ECameraProjectionMode::Perspective;

lidarCamera->FOVAngle = 90.0;

lidarCamera->SetRelativeRotation(FRotator(0.0, 90.0*j, 0.0));

MainCamera2Ds.Add(lidarCamera);

}

float DirRotaNum = 360.0 / LidarLineNum;

for (int32 i = 0; i < LidarLineNum; i++)

{

USceneComponent* LidarParent = NewObject(GetOwner());

LidarParent->AttachToComponent(LidarRoot, FAttachmentTransformRules::KeepRelativeTransform);

LidarParent->RegisterComponent();

LidarParent->SetRelativeRotation(FRotator(0.0, DirRotaNum*i, 0.0));

LidarTmap.Add(i, LidarParent);

}

return LidarRoot->IsRegistered();

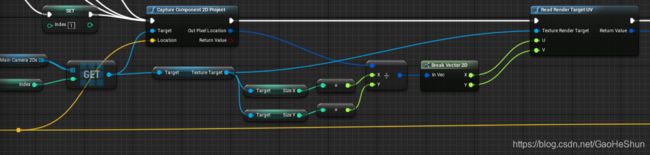

} 蓝图中调用:

Running it:

Result: the point coordinates are obtained with line traces.
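The trace end points for the lidar lines created in `InitLidarComponent_YH` can be sketched in plain C++ as follows. `LidarTraceEnds` and its `Range` parameter are hypothetical, and the sketch assumes `Parent` is not rotated (so local yaw equals world yaw). In Unreal, each start/end pair would typically go to a trace such as `UWorld::LineTraceSingleByChannel`, and the hit's `ImpactPoint` is the 3D point that is then projected into one of the four render targets:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { double X, Y, Z; };  // Unreal convention: X forward, Y right, Z up

// Hypothetical helper: end points of the horizontal traces implied by
// InitLidarComponent_YH, where line i is yawed (360 / LidarLineNum) * i degrees.
// Range is an assumed maximum trace distance in Unreal units (cm).
std::vector<Vec3> LidarTraceEnds(const Vec3& Origin, int LidarLineNum, double Range)
{
    assert(LidarLineNum > 0 && Range > 0.0);
    const double Pi = 3.14159265358979323846;
    const double StepDeg = 360.0 / LidarLineNum;
    std::vector<Vec3> Ends;
    Ends.reserve(static_cast<std::size_t>(LidarLineNum));
    for (int i = 0; i < LidarLineNum; ++i)
    {
        const double Yaw = StepDeg * i * Pi / 180.0;
        // Same direction as FRotator(0, yaw, 0).Vector(): (cos yaw, sin yaw, 0).
        Ends.push_back({Origin.X + Range * std::cos(Yaw),
                        Origin.Y + Range * std::sin(Yaw),
                        Origin.Z});
    }
    return Ends;
}
```

With 4 lines and a range of 1000, the end points lie at roughly (1000, 0), (0, 1000), (-1000, 0) and (0, -1000) relative to the origin.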