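DCGAN improves on the original GAN by replacing the fully connected networks in the generator and the discriminator with deep convolutional networks.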
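The convolutional layers take over the job of fully connected layers here. You can check this by tracing the spatial size of the feature maps with the usual output-size formula for convolution and pooling: out = floor((in + 2·padding − kernel) / stride) + 1.

The pure-Python sketch below traces two paths through the networks defined in the full code:

- A 28×28 MNIST image through the discriminator's three 3×3, stride-2 convolutions and its `AvgPool2d(kernel_size=3)`.
- The generator's 7×7 map through its two ×2 nearest-neighbour upsamplings.

The helper names `conv_out`, `discriminator_sizes` and `generator_sizes` are hypothetical and are not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of Conv2d / AvgPool2d (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def discriminator_sizes(size=28):
    """Trace H (= W) through three 3x3 stride-2 padding-1 convs, then AvgPool2d(3)."""
    sizes = [size]
    for _ in range(3):
        size = conv_out(size, kernel=3, stride=2, padding=1)
        sizes.append(size)
    # AvgPool2d(kernel_size=3) defaults to stride = kernel_size
    size = conv_out(size, kernel=3, stride=3)
    sizes.append(size)
    return sizes


def generator_sizes(size=7):
    """Trace H (= W) through two x2 upsamplings; each 3x3 padding-1 conv keeps the size."""
    sizes = [size]
    for _ in range(2):
        size = size * 2                                       # UpsamplingNearest2d(scale_factor=2)
        size = conv_out(size, kernel=3, stride=1, padding=1)  # size-preserving 3x3 conv
        sizes.append(size)
    return sizes


print(discriminator_sizes())  # [28, 14, 7, 4, 1]
print(generator_sizes())      # [7, 14, 28]
```

The discriminator's last feature map is 128×1×1, so flattening it leaves exactly 128 features for the single `Linear(128, 1)` layer. On the generator side, `Linear(noise_size, 7*7*256)` only has to produce a 7×7 map; the convolutional layers do the rest of the upsampling to 28×28.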

Full code:

```python
import os

import torch
from torch import nn
from torch.utils.data import DataLoader
import torchvision
from torchvision.datasets import MNIST
import matplotlib.pyplot as plt

# Output directories for sample images and checkpoints (created in __main__)
IMAGE_DIR = "images/DCGAN-Mnist"
MODEL_DIR = "models/DCGAN-Mnist"


class Discriminator(nn.Module):
    def __init__(self):
        super().__init__()
        # Strided 3x3 convolutions downsample 28x28 -> 14x14 -> 7x7 -> 4x4.
        # Note: in PyTorch, BatchNorm `momentum` is the weight of the *current batch*
        # in the running-average update (the opposite of the Keras convention); if 0.8
        # was meant in the Keras sense, 0.2 would be the PyTorch equivalent. The running
        # statistics are only used in eval() mode.
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=32, kernel_size=3, stride=2, padding=1)
        self.bn1 = nn.BatchNorm2d(num_features=32, momentum=0.8)
        self.conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=2, padding=1)
        self.bn2 = nn.BatchNorm2d(num_features=64, momentum=0.8)
        self.conv3 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=2, padding=1)
        self.bn3 = nn.BatchNorm2d(num_features=128, momentum=0.8)
        # 3x3 average pooling (stride 3 by default) reduces 4x4 -> 1x1
        self.avg = nn.AvgPool2d(kernel_size=3)
        self.flatten = nn.Flatten()
        self.fc = nn.Linear(128, 1)
        self.lr = nn.LeakyReLU(0.2)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        x = x.view(-1, 1, 28, 28)  # accepts flattened or (N, 1, 28, 28) input
        x = self.lr(self.bn1(self.conv1(x)))
        x = self.lr(self.bn2(self.conv2(x)))
        x = self.lr(self.bn3(self.conv3(x)))
        x = self.avg(x)
        x = self.flatten(x)        # (N, 128, 1, 1) -> (N, 128)
        x = self.fc(x)
        return self.sigmoid(x)     # probability that the input is a real image


class Generator(nn.Module):
    def __init__(self, noise_size=100):
        super().__init__()
        # Project the noise vector to a 256x7x7 feature map
        self.fc = nn.Linear(noise_size, 7 * 7 * 256)
        # Nearest-neighbour upsampling + 3x3 conv: 7x7 -> 14x14 -> 28x28
        self.up1 = nn.UpsamplingNearest2d(scale_factor=2)
        self.conv1 = nn.Conv2d(in_channels=256, out_channels=128, kernel_size=3, padding=1)
        self.bn1 = nn.BatchNorm2d(num_features=128, momentum=0.8)
        self.up2 = nn.UpsamplingNearest2d(scale_factor=2)
        self.conv2 = nn.Conv2d(in_channels=128, out_channels=64, kernel_size=3, padding=1)
        self.bn2 = nn.BatchNorm2d(num_features=64, momentum=0.8)
        self.conv3 = nn.Conv2d(in_channels=64, out_channels=1, kernel_size=3, padding=1)
        self.relu = nn.ReLU()
        self.tanh = nn.Tanh()

    def forward(self, x):
        x = self.relu(self.fc(x))
        x = x.view(-1, 256, 7, 7)
        x = self.relu(self.bn1(self.conv1(self.up1(x))))
        x = self.relu(self.bn2(self.conv2(self.up2(x))))
        x = self.conv3(x)
        return self.tanh(x)        # pixels in [-1, 1], matching the normalized data


def to_img(image):
    # Undo Normalize((0.5,), (0.5,)): map [-1, 1] back to [0, 1]
    image = 0.5 * (image + 1)
    image = torch.clamp(image, 0, 1)
    # (N, 1, 28, 28) -> (N, 28, 28, 1) for matplotlib; a plain view is valid since C == 1
    return image.view(-1, 28, 28, 1)


def save_img(fake_image, epoch):
    # Save a 5x5 grid of generated digits
    r, c = 5, 5
    fig, axs = plt.subplots(r, c)
    cnt = 0
    for i in range(r):
        for j in range(c):
            axs[i, j].imshow(fake_image[cnt, :, :, 0], cmap='gray')
            axs[i, j].axis('off')
            cnt += 1
    fig.savefig(os.path.join(IMAGE_DIR, "epoch_{}.png".format(epoch + 1)))
    plt.close(fig)


def train(epochs):
    for epoch in range(epochs):
        for img, _ in dataloader:
            img = img.to(device)
            num_img = img.size(0)
            real_img = img.view(num_img, -1)
            real_label = torch.ones(num_img, 1, device=device)
            fake_label = torch.zeros(num_img, 1, device=device)

            # ---- Discriminator step: real -> 1, fake -> 0 ----
            real_out = D(real_img)
            d_loss_real = criterion(real_out, real_label)
            z = torch.randn(num_img, noise_size, device=device)
            fake_img = G(z).detach()  # detach: this step must not update G
            fake_out = D(fake_img)
            d_loss_fake = criterion(fake_out, fake_label)
            d_loss = d_loss_real + d_loss_fake
            optimizer_D.zero_grad()
            d_loss.backward()
            optimizer_D.step()

            # ---- Generator step: make D classify fakes as real ----
            z = torch.randn(num_img, noise_size, device=device)
            fake_img = G(z)
            fake_out = D(fake_img)
            g_loss = criterion(fake_out, real_label)
            optimizer_G.zero_grad()
            g_loss.backward()
            optimizer_G.step()

        # Losses of the last batch of the epoch
        print('epoch: {}, d_loss: {:.4f}, g_loss: {:.4f}'.format(epoch, d_loss.item(), g_loss.item()))
        fake_image = to_img(fake_img.detach().cpu())
        save_img(fake_image, epoch)
        torch.save(D.state_dict(), os.path.join(MODEL_DIR, 'discriminator.pth'))
        torch.save(G.state_dict(), os.path.join(MODEL_DIR, 'generator.pth'))


if __name__ == '__main__':
    # savefig / torch.save fail if the target directories do not exist
    os.makedirs(IMAGE_DIR, exist_ok=True)
    os.makedirs(MODEL_DIR, exist_ok=True)

    # ToTensor gives [0, 1]; Normalize(0.5, 0.5) maps it to [-1, 1] to match Tanh
    transformer = torchvision.transforms.Compose([
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Normalize((0.5,), (0.5,)),
    ])
    dataset = MNIST(root='mnist', train=True, transform=transformer, download=True)
    dataloader = DataLoader(dataset=dataset, shuffle=True, batch_size=512)

    epoch = 500
    noise_size = 100
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    D = Discriminator().to(device)
    G = Generator(noise_size).to(device)

    criterion = nn.BCELoss()
    optimizer_G = torch.optim.Adam(G.parameters(), lr=0.0003)
    optimizer_D = torch.optim.Adam(D.parameters(), lr=0.0001)

    train(epoch)
```
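The training loop uses `nn.BCELoss` in two ways:

- The discriminator is trained with label 1 for real images and label 0 for generated ones.
- The generator is trained by labelling its fakes as real. With BCE this means minimizing −log D(G(z)), which is commonly called the non-saturating generator loss.

The pure-Python sketch below reproduces these formulas for scalar outputs. It averages over the batch like `BCELoss`'s default `reduction='mean'`. The names `bce`, `d_loss` and `g_loss` are illustrative. The probabilities are made-up inputs, not values measured in this training run.

```python
import math


def bce(p, y):
    """Binary cross-entropy for one prediction p in (0, 1) and target y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def mean(values):
    return sum(values) / len(values)


def d_loss(real_probs, fake_probs):
    """Discriminator loss as in train(): BCE(real, 1) + BCE(fake, 0), each batch-averaged."""
    return mean([bce(p, 1) for p in real_probs]) + mean([bce(p, 0) for p in fake_probs])


def g_loss(fake_probs):
    """Generator loss as in train(): BCE(D(G(z)), 1), i.e. the mean of -log D(G(z))."""
    return mean([bce(p, 1) for p in fake_probs])


# Hypothetical discriminator outputs for two real and two generated images
real_probs = [0.9, 0.8]
fake_probs = [0.2, 0.1]
print(round(d_loss(real_probs, fake_probs), 4))  # 0.3285
print(round(g_loss(fake_probs), 4))              # 1.956
```

When the discriminator confidently rejects the fakes (D(G(z)) close to 0), −log D(G(z)) is large. The generator therefore still receives a strong gradient early in training. This is the usual reason for training G against `real_label` instead of minimizing log(1 − D(G(z))) directly.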

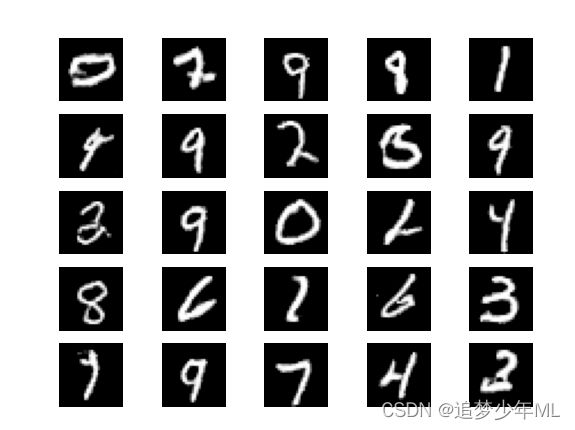

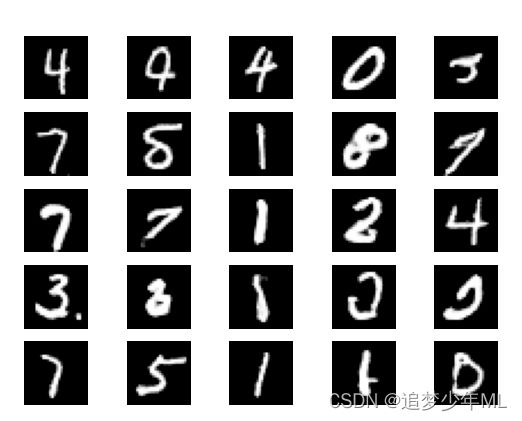

Results after 250 epochs of training