LVS + keepalived High-Availability Cluster

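Introduction to keepalived

keepalived is a high-availability service that grew out of LVS, because an LVS director has no high availability of its own. keepalived is not tied to LVS clusters, however; it can also provide high availability for other proxy servers.

In an LVS high-availability cluster, keepalived runs on a master director and one or more backup directors, for example one master with one backup or one master with two backups.

VRRP: keepalived uses the VRRP protocol to make the LVS service highly available, which removes the director as a single point of failure.

VRRP (Virtual Router Redundancy Protocol) is a protocol developed to improve the reliability of network routers.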

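How VRRP works

· Election: master and backup roles are elected from priorities set in advance. The master has the higher priority and the backups have lower ones; once the service starts, the node with the highest priority automatically takes the master role.

· VRRP multicast (224.0.0.18): the master and backup servers communicate over this multicast address to exchange their running state. The master periodically sends VRRP advertisements that tell the backups its current state.

· Failover: if the master fails or becomes unreachable, VRRP moves request handling to a backup. Through the multicast address, the other servers quickly learn that a switchover has happened, so the new master can handle client requests normally.

· Recovery: once the original master is reachable again, it rejoins over the multicast address. Because its priority is higher than that of the acting master, it preempts the role and goes back to scheduling and accepting requests.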
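The election rule above can be illustrated with a small shell sketch. It is only a toy model, not keepalived's implementation: the function name elect_master and the name:priority argument format are made up for this example, and real VRRP additionally breaks priority ties by comparing IP addresses, which is not modelled here.

```shell
#!/bin/bash
# Toy model of VRRP master election: the node with the highest priority wins.
# elect_master and the "name:priority" format are hypothetical, for illustration only.
elect_master() {
    local best_name="" best_prio=-1 node name prio
    for node in "$@"; do
        name=${node%%:*}    # text before the first colon
        prio=${node##*:}    # text after the last colon
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    printf '%s\n' "$best_name"
}

# While the master (priority 100) is alive it wins: prints lvs01
elect_master lvs01:100 lvs02:90
# If the master drops out, the remaining backup wins: prints lvs02
elect_master lvs02:90
```

Re-running the election with the recovered master back in the list returns lvs01 again, which mirrors the preemption described in the recovery step.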

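How keepalived works

While the master director is running normally, it distributes requests to the backend real servers. The backup directors are redundant and take no part in running the cluster.

Only when the master director fails does a backup director take over its work.

Once the master director is working again, it resumes handling requests and the backup director goes back to being redundant.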
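How quickly a backup takes over depends on the advertisement interval. Under VRRPv2 (RFC 3768), a backup declares the master down after 3 * advert_int seconds plus a small skew of (256 - priority) / 256 seconds. The sketch below only evaluates that formula; the function name master_down_interval is hypothetical, and it assumes VRRPv2 timers rather than VRRPv3 ones.

```shell
#!/bin/bash
# Evaluate the VRRPv2 master-down interval in seconds.
# master_down_interval is a hypothetical helper, not part of keepalived.
master_down_interval() {
    local advert_int=$1 priority=$2
    # LC_ALL=C keeps the decimal point independent of the system locale.
    LC_ALL=C awk -v a="$advert_int" -v p="$priority" \
        'BEGIN { printf "%.3f\n", 3 * a + (256 - p) / 256 }'
}

# Backup with priority 90 and a 1-second interval: prints 3.648
master_down_interval 1 90
# Same backup with a 30-second interval: prints 90.648
master_down_interval 30 90
```

A long advertisement interval therefore translates directly into a long failover delay, which is worth keeping in mind when choosing advert_int.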

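keepalived modules:

Global module (core): responsible for starting, loading, and maintaining keepalived as a whole.

VRRP module: implements the VRRP protocol and master/backup switchover.

check module: performs health checks on the backend real servers; it is configured inside each real_server block.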

Install keepalived: yum -y install keepalived ipvsadm

Settings in /etc/keepalived/keepalived.conf (opened with vim /etc/keepalived/keepalived.conf):

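· Mail server address: smtp_server

· ID of this node; the master and backup IDs must differ: router_id LVS_01

· Disable strict VRRP compliance; if it is left enabled the VIP cannot be reached: #vrrp_strict

· Marks this node as the master: state MASTER

· Physical interface that carries the VIP: interface ens33

· ID of the virtual router: virtual_router_id 10

· Interval between advertisements, in seconds: advert_int 30

· VIP address of the cluster: virtual_ipaddress

· Interval between health checks, in seconds: delay_loop 6

· Load-balancing scheduling algorithm: lb_algo rr

· LVS forwarding mode (must be uppercase): lb_kind NAT

· Connection persistence, in seconds: persistence_timeout 50

· VIP block: virtual_server 192.168.233.100 80

· Backend real server (its scheduling weight is set with weight inside the block): real_server 192.168.233.30 80

· Port to check: connect_port 80

· Connection timeout, in seconds: connect_timeout 3

· Number of retries: nb_get_retry 3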

· Seconds between retries: delay_before_retry 4

Lab: LVS-DR with keepalived

Master: 192.168.10.10

Backup: 192.168.10.40

Web servers:

192.168.10.20----nginx

192.168.10.30----nginx

Client: any host

Master 192.168.10.10

yum -y install keepalived ipvsadm

vim /etc/keepalived/keepalived.conf

.............

smtp_server 127.0.0.1

router_id LVS_01

#vrrp_strict    (comment this line out)

interface ens33

persistence_timeout 0

virtual_ipaddress {

192.168.10.99

}

Change the settings above, delete everything after the vrrp_instance block, and add the following:

virtual_server 192.168.10.99 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 0

protocol TCP

real_server 192.168.10.20 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 4

}

}

real_server 192.168.10.30 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 4

}

}

}

.............
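The vrrp_instance edits on the master are only listed piecemeal above. As a rough sketch, they might sit together as shown below. Values not given in this lab are assumptions: the instance name VI_1, priority 100, advert_int 1, and the authentication block mirror the defaults commonly found in the sample file shipped with the keepalived package, and virtual_router_id 10 is taken from the option list earlier. The snippet writes to a scratch file so it can be reviewed before anything is copied into /etc/keepalived/keepalived.conf.

```shell
#!/bin/bash
# Sketch only: write an example vrrp_instance block to a scratch file for review.
sketch=$(mktemp)
cat > "$sketch" <<'EOF'
vrrp_instance VI_1 {            # instance name: assumption
    state MASTER                # BACKUP on 192.168.10.40
    interface ens33
    virtual_router_id 10        # must be identical on master and backup
    priority 100                # assumption; the backup uses 90
    advert_int 1                # assumption, in seconds
    authentication {            # assumption: values from the packaged sample
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.10.99
    }
}
EOF
echo "sketch written to $sketch"
```

On the backup only state, priority, and the router_id in global_defs differ; virtual_router_id and the VIP have to match on both nodes.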

systemctl restart keepalived.service

ipvsadm-save > /etc/sysconfig/ipvsadm

systemctl restart ipvsadm.service

ipvsadm -ln
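At this point ipvsadm -ln should list the VIP with both real servers behind it. A small helper like the following (the name count_real_servers is made up here) counts the real-server rows in that output. It assumes the usual ipvsadm -ln layout, in which each real server appears on a line starting with "->" followed by an address.

```shell
#!/bin/bash
# Count real-server rows in "ipvsadm -ln" output read from standard input.
# count_real_servers is a hypothetical helper name.
count_real_servers() {
    # Real-server rows look like "  -> 192.168.10.20:80   Route   1  0  0".
    # Requiring a digit after "-> " skips the "-> RemoteAddress:Port" header row.
    grep -cE '^[[:space:]]*-> [0-9]'
}

# Usage on a director (requires root):
#   ipvsadm -ln | count_real_servers
```

For this lab the expected count is 2, and the Forward column of those rows should read Route, which is how ipvsadm labels DR mode.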

vim /etc/sysctl.conf

.....................

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

.....................

sysctl -p    # apply the settings immediately

Backup 192.168.10.40

yum -y install keepalived ipvsadm

vim /etc/keepalived/keepalived.conf

.............

smtp_server 127.0.0.1

router_id LVS_02

priority 90

#vrrp_strict    (comment this line out)

state BACKUP

interface ens33

persistence_timeout 0

virtual_ipaddress {

192.168.10.99

}

Change the settings above, delete everything after the vrrp_instance block, and add the following:

virtual_server 192.168.10.99 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 0

protocol TCP

real_server 192.168.10.20 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 4

}

}

real_server 192.168.10.30 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 4

}

}

}

.............

systemctl restart keepalived.service

ipvsadm-save > /etc/sysconfig/ipvsadm

systemctl restart ipvsadm.service

ipvsadm -ln

vim /etc/sysctl.conf

.....................

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

.....................

sysctl -p    # apply the settings immediately

Web servers

192.168.10.20

192.168.10.30

vim /etc/sysconfig/network-scripts/ifcfg-lo:0

.........................

DEVICE=lo:0

IPADDR=192.168.10.99

NETMASK=255.255.255.255

ONBOOT=yes

.........................

Bring up the lo:0 interface:

ifup ifcfg-lo:0

Bind the VIP:

route add -host 192.168.10.99 dev lo:0

vim /etc/sysctl.conf

...................

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

...................
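These four settings keep the real servers from answering ARP for the VIP held on lo:0. arp_ignore = 1 makes a host reply only when the requested address is configured on the interface that received the request, and arp_announce = 2 makes it choose the best local source address for its own ARP requests instead of the VIP. As a hedged sketch, the helper below (check_arp_settings is a hypothetical name) verifies that a sysctl-style file contains all four lines. It only inspects the file, expects the exact "key = value" spacing used above, and does not query the running kernel.

```shell
#!/bin/bash
# Check that a sysctl-style file contains the four ARP settings used on the real servers.
# check_arp_settings is a hypothetical helper name.
check_arp_settings() {
    local file=$1 key missing=0
    for key in \
        "net.ipv4.conf.lo.arp_ignore = 1" \
        "net.ipv4.conf.lo.arp_announce = 2" \
        "net.ipv4.conf.all.arp_ignore = 1" \
        "net.ipv4.conf.all.arp_announce = 2"
    do
        # -x: whole-line match, -F: fixed string, so "= 1" does not match "= 10".
        if ! grep -qxF "$key" "$file"; then
            echo "missing: $key"
            missing=1
        fi
    done
    return "$missing"
}

# Usage on a real server:
#   check_arp_settings /etc/sysctl.conf && echo "ARP settings present"
```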

sysctl -p

Result

Stop the services on the master:

systemctl stop ipvsadm.service

systemctl stop keepalived.service

The VIP floats to the backup server.

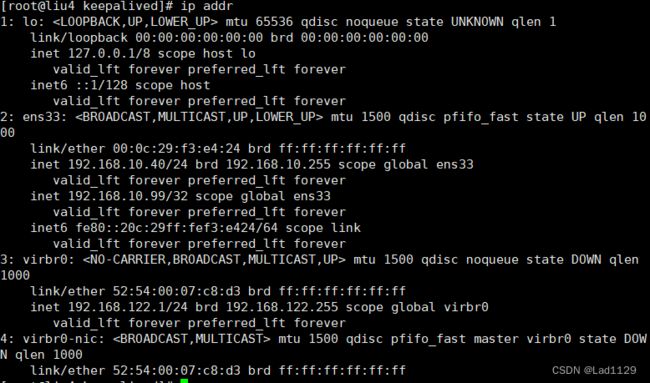

Check with ip addr.
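To confirm the drift without reading the full ip addr output by eye, a helper along these lines can be used. has_vip is a hypothetical name; it reads ip addr output on standard input and succeeds if the given address appears as an inet entry.

```shell
#!/bin/bash
# Succeed if the given address appears as an "inet" entry in "ip addr" output on stdin.
# has_vip is a hypothetical helper name.
has_vip() {
    local vip=$1
    # The trailing slash stops a query for 192.168.10.9 from matching 192.168.10.99.
    grep -qF "inet ${vip}/"
}

# Usage on either director:
#   ip -4 addr show dev ens33 | has_vip 192.168.10.99 && echo "this node holds the VIP"
```

After the master is stopped, the check should succeed on 192.168.10.40 and fail on 192.168.10.10.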

The web page is still served normally.

keepalived High Availability for nginx

Master 192.168.10.10 -- nginx

yum -y install keepalived

vim /etc/keepalived/keepalived.conf

...................

In the global_defs block:

smtp_server 127.0.0.1

router_id LVS_01

#vrrp_strict

Add between global_defs and vrrp_instance:

.......

vrrp_script check_nginx {

script "/opt/check_nginx.sh"

interval 5

}

.......

In the vrrp_instance block:

virtual_ipaddress {

192.168.10.99

}

Add at the end of the vrrp_instance block:

track_script {

check_nginx

}

...................

Delete everything after the vrrp_instance block.

chmod 777 /opt/check_nginx.sh

systemctl restart keepalived.service

Script that passes the nginx status to keepalived:

vim /opt/check_nginx.sh

#!/bin/bash

/usr/bin/curl -I http://localhost &> /dev/null

if [ $? -ne 0 ]

then

systemctl stop keepalived

fi

Backup 192.168.10.20 -- nginx

yum -y install keepalived

vim /etc/keepalived/keepalived.conf

...................

In the global_defs block:

smtp_server 127.0.0.1

router_id LVS_02

#vrrp_strict

Add between global_defs and vrrp_instance:

.......

vrrp_script check_nginx {

script "/opt/check_nginx.sh"

interval 5

}

.......

In the vrrp_instance block:

state BACKUP

priority 90

virtual_ipaddress {

192.168.10.99

}

Add at the end of the vrrp_instance block:

track_script {

check_nginx

}

...................

Delete everything after the vrrp_instance block.

chmod 777 /opt/check_nginx.sh

systemctl restart keepalived.service

Script that passes the nginx status to keepalived:

vim /opt/check_nginx.sh

#!/bin/bash

/usr/bin/curl -I http://localhost &> /dev/null

if [ $? -ne 0 ]

then

systemctl stop keepalived

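fi

Result:

When the master fails, the VIP switches over to the backup. Restoring the master requires the manual steps listed after the failure test.

Simulate a master failure: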

systemctl stop nginx.service

To restore the master:

systemctl restart nginx.service

systemctl restart keepalived.service
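Manual recovery is needed because check_nginx.sh stops keepalived outright. A commonly used alternative, which is not what this lab configures, is to let the script report failure only through its exit status. With a tracked script that has no weight set, keepalived moves the instance to the FAULT state while the check fails and brings it back once the check succeeds again, with no restart of keepalived. A minimal sketch, assuming curl is installed and nginx answers on http://localhost:

```shell
#!/bin/bash
# Variant of /opt/check_nginx.sh: report nginx health via the exit status only.
# The default URL and the 3-second limit are assumptions for this sketch.
check_nginx() {
    local url=${1:-http://localhost}
    # -f: treat HTTP errors (>= 400) as failure; --max-time: never hang the check.
    curl -fsS -o /dev/null --max-time 3 "$url" 2>/dev/null
}

# As a standalone script, the last line would be:
#   check_nginx; exit $?
```

The 3-second limit stays below the interval 5 configured in vrrp_script, so one slow probe cannot overlap the next run.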