Installing a multi-master k8s (Kubernetes) cluster on CentOS 7 under VMware

Previous section: Installing a k8s (Kubernetes) cluster on CentOS 7 under VMware

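1. Open several windows in MobaXterm and type into all of them at once (multi-window input). The IP address and hostname of each machine have already been set.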

2. Edit /etc/hosts with vim on all machines at the same time. Double-check that the IPs and hostnames are correct!

[root@master-26 ~]# vim /etc/hosts #edit in all windows simultaneously

#Append the following mappings at the end of the file

192.168.1.26 master-26

192.168.1.27 node-27

192.168.1.28 master-28

192.168.1.29 node-29
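As an alternative to typing in several windows, the mappings can be appended by a small script that skips lines already present, so running it twice does no harm. A minimal sketch: `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, and the target file is a parameter (defaulting to /etc/hosts) so it can be tried on a scratch copy first:

```shell
# Append "IP HOSTNAME" to a hosts file unless that exact line already exists.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  local line="$ip $name"
  grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Add the four cluster mappings used in this article (adjust to your own IPs).
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  add_host_entry "$file" 192.168.1.26 master-26
  add_host_entry "$file" 192.168.1.27 node-27
  add_host_entry "$file" 192.168.1.28 master-28
  add_host_entry "$file" 192.168.1.29 node-29
}
```

Run `add_cluster_hosts` as root on every machine, or `add_cluster_hosts /tmp/hosts.test` to preview the result.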

3. Disable the firewall (type in all windows simultaneously).

[root@master-26 ~]# systemctl stop firewalld #stop the firewall

[root@master-26 ~]# systemctl disable firewalld #do not start it at boot

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

4. Disable swap (type in all windows simultaneously).

[root@master-26 ~]# swapoff -a #disable swap

[root@master-26 ~]# free -h #check memory usage

total used free shared buff/cache available

Mem: 1.8G 225M 1.4G 9.5M 187M 1.4G

Swap: 0B 0B 0B

[root@master-26 ~]# vim /etc/fstab

#To disable swap permanently, delete or comment out the swap mount line in /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0
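The fstab edit can also be done with sed. A minimal sketch, assuming the usual fstab layout where the third field is the filesystem type: `disable_swap_in_fstab` is a hypothetical helper that comments out every active line whose type is swap, keeps a .bak copy, and leaves already-commented lines untouched:

```shell
# Comment out active swap entries in an fstab-style file (default /etc/fstab).
# Matches lines whose 3rd whitespace-separated field is "swap"; lines that
# already start with "#" are skipped, so repeated runs change nothing.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "$fstab.bak" || return 1   # keep a backup before editing
  sed -E -i 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/# \1/' "$fstab"
}
```

After `disable_swap_in_fstab` (as root), `swapoff -a` still turns swap off for the current boot.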

5. Disable SELinux (type in all windows simultaneously).

[root@master-26 ~]# setenforce 0 #disable SELinux temporarily (switches to permissive mode)

[root@master-26 ~]# getenforce #check the current mode

Permissive
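The permanent change made in vim below can also be scripted. A minimal sketch; `disable_selinux_config` is a hypothetical helper name, and the config path is a parameter so it can be tested on a copy:

```shell
# Set SELINUX=disabled in an SELinux config file (default /etc/selinux/config).
# The pattern ^SELINUX= does not touch the separate SELINUXTYPE= line.
# The new mode applies after a reboot; setenforce 0 covers the current boot.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```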

[root@master-26 ~]# vim /etc/selinux/config

#To disable SELinux permanently, set the SELINUX= line to the following (takes effect after a reboot)

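SELINUX=disabled

6. Time synchronization (CentOS 8 uses chrony instead).

#Time synchronization on CentOS 7

[root@master-26 ~]# yum -y install ntp #install the ntpd service (output omitted)

[root@master-26 ~]# systemctl start ntpd #start ntpd

[root@master-26 ~]# systemctl enable ntpd #start at boot

#On CentOS 8, install chrony for time synchronization (not covered here)

7. Pass bridged IPv4 traffic to the iptables chains. By default, packets crossing a Linux bridge are not run through iptables, so iptables-based rules (such as those kube-proxy creates) never see them and traffic can be lost. Configure this in k8s.conf (the file does not exist by default; create it yourself).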

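[root@master-26 ~]# touch /etc/sysctl.d/k8s.conf #create the file first

[root@master-26 ~]# vim /etc/sysctl.d/k8s.conf #typed input is mirrored to every window; pasted text must be entered in each window separately

#Add the following 4 lines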

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.ipv4.ip_forward=1

vm.swappiness=0
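The same file can be written non-interactively with a here-document. One detail left implicit above: the net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so `sysctl --system` may report them as unknown otherwise. A sketch; the helper names and the /etc/modules-load.d/k8s.conf file name are illustrative assumptions:

```shell
# Write the Kubernetes sysctl settings (default /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
net.ipv4.ip_forward=1
vm.swappiness=0
EOF
}

# Load br_netfilter now and on every boot (needs root); without this module
# the net.bridge.* keys written above do not exist.
load_br_netfilter() {
  modprobe br_netfilter || return 1
  echo br_netfilter > /etc/modules-load.d/k8s.conf
}
```

As root: `load_br_netfilter && write_k8s_sysctl && sysctl --system`.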

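[root@master-26 ~]# sysctl --system #reload kernel parameters

8. Install Docker. For background on the cgroup driver Kubernetes expects, see: Configuring a cgroup driver

#First remove any leftovers from earlier installs. If later steps fail because Docker was not installed correctly, you can also uninstall and reinstall it this way

#Typed input is mirrored to every window; pasted text must be entered in each window separately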

[root@master-26 ~]# yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

[root@master-26 ~]# yum install -y yum-utils #install yum-utils, which provides the yum-config-manager command

[root@master-26 ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

[root@master-26 ~]# yum list docker-ce --showduplicates | sort -r #list the available Docker versions

[root@master-26 ~]# yum -y install docker-ce docker-ce-cli containerd.io

[root@master-26 ~]# systemctl start docker #start

[root@master-26 ~]# systemctl enable docker #start at boot

[root@master-26 ~]# vim /etc/docker/daemon.json

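#Create this new config file with the content below. JSON does not allow comments: do NOT copy any # notes into the file, or restarting Docker will fail.

#registry-mirrors sets the image pull accelerator; exec-opts switches Docker to the systemd cgroup driver, which k8s uses

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}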
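Writing the file with a here-document keeps stray comments out of it. A sketch with a hypothetical helper name; the mirror defaults to the address used in this article, but Aliyun accelerator addresses are issued per account, so substitute your own:

```shell
# Write Docker's daemon.json (path is a parameter) with a registry mirror and
# the systemd cgroup driver. JSON has no comment syntax, so nothing else is added.
write_docker_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  local mirror="${2:-https://b9pmyelo.mirror.aliyuncs.com}"
  mkdir -p "$(dirname "$file")" || return 1
  cat > "$file" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
  # Optional syntax check, only if python3 happens to be installed.
  if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$file" >/dev/null || return 1
  fi
}
```

Then `systemctl restart docker` as above.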

[root@master-26 ~]# systemctl restart docker #restart Docker

[root@master-26 ~]# docker info |tail -5 #check that the registry mirror is configured

127.0.0.0/8

Registry Mirrors:

https://b9pmyelo.mirror.aliyuncs.com/

Live Restore Enabled: false

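[root@master-26 ~]# docker info | grep -i "Cgroup Driver" #check that the cgroup driver has been changed

Cgroup Driver: systemd

9. Install k8s and related tools with kubeadm

[root@master-26 ~]# vim /etc/yum.repos.d/kubernetes.repo #use the Aliyun mirror, otherwise the installation hangs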

[kubernetes]

name = Kubernetes

baseurl = https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled = 1

gpgcheck = 0

repo_gpgcheck = 0

gpgkey = https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
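The repo file can likewise be created without an editor. A sketch with a hypothetical helper name and the same content as above. Note that with gpgcheck = 0 and repo_gpgcheck = 0, yum skips signature checks, so the gpgkey line is not actually used:

```shell
# Write the Aliyun Kubernetes yum repo (default /etc/yum.repos.d/kubernetes.repo).
write_k8s_repo() {
  local repo="${1:-/etc/yum.repos.d/kubernetes.repo}"
  cat > "$repo" <<'EOF'
[kubernetes]
name = Kubernetes
baseurl = https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled = 1
gpgcheck = 0
repo_gpgcheck = 0
gpgkey = https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```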

[root@master-26 ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name = Kubernetes

baseurl = https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled = 1

gpgcheck = 0

repo_gpgcheck = 0

gpgkey = https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

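[root@master-26 ~]# yum list --showduplicates | grep kubeadm #list the available versions

#Install 1.23.12 or an earlier version: 1.24 and later no longer include dockershim, so Docker is not supported out of the box and later steps fail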

[root@master-26 ~]# yum -y install kubelet-1.23.12 kubeadm-1.23.12 kubectl-1.23.12
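To guard against accidentally installing 1.24 or later, a version check can be scripted. A minimal sketch; `uses_dockershim` is a hypothetical helper, and it relies on `sort -V` from GNU coreutils for version ordering:

```shell
# Succeed if a Kubernetes version is older than 1.24, i.e. a release that still
# ships dockershim and can therefore use Docker Engine directly.
uses_dockershim() {
  local v="${1#v}"   # accept "1.23.12" as well as "v1.23.12"
  [ "$v" != "1.24" ] &&
    [ "$(printf '%s\n' "$v" 1.24 | sort -V | head -n 1)" = "$v" ]
}
```

For example: `uses_dockershim "$(kubeadm version -o short)" || echo "kubeadm 1.24+ cannot use Docker directly"`.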

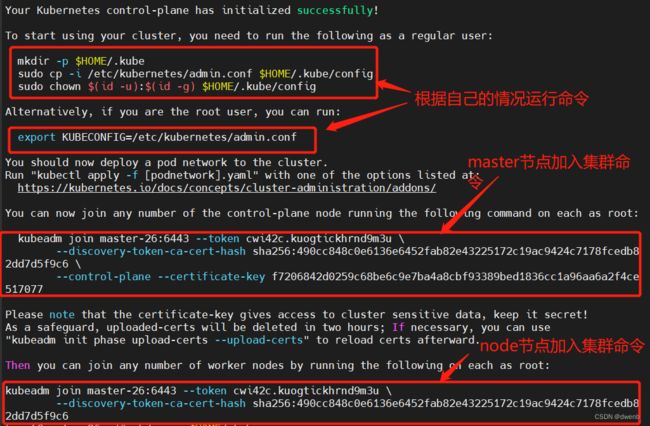

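[root@master-26 ~]# systemctl enable kubelet #start at boot; the service cannot start successfully until kubeadm init has run

10. Exit multi-window input!!! Initialize the master on one machine only. Replace master-26 (192.168.1.26) with your own hostname or IP, and make sure the Kubernetes version matches the one installed above. The command takes a while to finish because it has to pull images.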

[root@master-26 ~]# kubeadm init --control-plane-endpoint=master-26:6443 --upload-certs --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.23.12 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.23.12

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join master-26:6443 --token cwi42c.kuogtickhrnd9m3u \

--discovery-token-ca-cert-hash sha256:490cc848c0e6136e6452fab82e43225172c19ac9424c7178fcedb82dd7d5f9c6 \

--control-plane --certificate-key f7206842d0259c68be6c9e7ba4a8cbf93389bed1836cc1a96aa6a2f4ce517077

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master-26:6443 --token cwi42c.kuogtickhrnd9m3u \

--discovery-token-ca-cert-hash sha256:490cc848c0e6136e6452fab82e43225172c19ac9424c7178fcedb82dd7d5f9c6

[root@master-26 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

[root@master-26 ~]#
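The join commands printed above stop working once the token expires (24 hours by default) or the uploaded certificates are deleted (after two hours, as the output says). A sketch for regenerating the control-plane join command on an existing master; `control_plane_join_cmd` is a hypothetical helper, and it assumes, as in kubeadm 1.23, that `kubeadm init phase upload-certs --upload-certs` prints the new certificate key on its last line:

```shell
# Rebuild the "kubeadm join ... --control-plane" command on an existing master
# (run as root) after the original token or certificate key has expired.
control_plane_join_cmd() {
  local join out key
  # Prints a join command with a fresh token and the CA cert hash.
  join=$(kubeadm token create --print-join-command) || return 1
  # Re-uploads the control-plane certificates and prints a new certificate key
  # (assumed to be the last line of output).
  out=$(kubeadm init phase upload-certs --upload-certs) || return 1
  key=$(printf '%s\n' "$out" | tail -n 1)
  printf '%s --control-plane --certificate-key %s\n' "$join" "$key"
}
```

Run the printed command as root on the new master, the same way as in step 12.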

12. Join the other master to the cluster, using the control-plane join command from the end of the previous step's output.

[root@master-28 ~]# kubeadm join master-26:6443 --token cwi42c.kuogtickhrnd9m3u \

> --discovery-token-ca-cert-hash sha256:490cc848c0e6136e6452fab82e43225172c19ac9424c7178fcedb82dd7d5f9c6 \

> --control-plane --certificate-key f7206842d0259c68be6c9e7ba4a8cbf93389bed1836cc1a96aa6a2f4ce517077

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

......

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@master-28 ~]# mkdir -p $HOME/.kube

[root@master-28 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master-28 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master-28 ~]# kubectl get nodes #the node has now joined the cluster

NAME STATUS ROLES AGE VERSION

master-26 NotReady control-plane,master 5m31s v1.23.12

master-28 NotReady control-plane,master 62s v1.23.12

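13. Join the two worker nodes to the cluster. Running kubectl get nodes on a worker node fails at this point, so check from a master instead; the next step fixes this on the worker nodes.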

[root@node-27 ~]# kubeadm join master-26:6443 --token cwi42c.kuogtickhrnd9m3u \

> --discovery-token-ca-cert-hash sha256:490cc848c0e6136e6452fab82e43225172c19ac9424c7178fcedb82dd7d5f9c6

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node-27 ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@master-26 ~]# kubectl get nodes #note the host: this runs on the master

NAME STATUS ROLES AGE VERSION

master-26 NotReady control-plane,master 18m v1.23.12

master-28 NotReady control-plane,master 14m v1.23.12

node-27 NotReady <none> 42s v1.23.12

[root@node-29 ~]# kubeadm join master-26:6443 --token cwi42c.kuogtickhrnd9m3u --discovery-token-ca-cert-hash sha256:490cc848c0e6136e6452fab82e43225172c19ac9424c7178fcedb82dd7d5f9c6

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node-29 ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@master-26 ~]# kubectl get nodes #note the host: this runs on the master

NAME STATUS ROLES AGE VERSION

master-26 NotReady control-plane,master 19m v1.23.12

master-28 NotReady control-plane,master 15m v1.23.12

node-27 NotReady <none> 110s v1.23.12

node-29 NotReady <none> 14s v1.23.12

14. To run kubectl on a worker node, copy admin.conf from the master to that node

[root@master-26 ~]# scp /etc/kubernetes/admin.conf root@192.168.1.27:/etc/kubernetes/admin.conf

The authenticity of host '192.168.1.27 (192.168.1.27)' can't be established.

ECDSA key fingerprint is SHA256:HPt1rmGZwNTRyOStjI6N4vUmVDGvv0Wbu0ClzQLLq9U.

ECDSA key fingerprint is MD5:b1:41:b6:6e:d0:6b:e9:0d:cc:f3:7e:43:7b:55:f2:56.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.27' (ECDSA) to the list of known hosts.

root@192.168.1.27's password:

admin.conf 100% 5637 7.8MB/s 00:00

[root@master-26 ~]# scp /etc/kubernetes/admin.conf root@192.168.1.29:/etc/kubernetes/admin.conf

The authenticity of host '192.168.1.29 (192.168.1.29)' can't be established.

ECDSA key fingerprint is SHA256:HPt1rmGZwNTRyOStjI6N4vUmVDGvv0Wbu0ClzQLLq9U.

ECDSA key fingerprint is MD5:b1:41:b6:6e:d0:6b:e9:0d:cc:f3:7e:43:7b:55:f2:56.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.29' (ECDSA) to the list of known hosts.

root@192.168.1.29's password:

admin.conf 100% 5637 4.2MB/s 00:00

[root@node-27 ~]# kubectl get nodes #after copying admin.conf, KUBECONFIG must be set before kubectl can use it

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@node-27 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@node-27 ~]# source ~/.bash_profile

[root@node-27 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-26 NotReady control-plane,master 34m v1.23.12

master-28 NotReady control-plane,master 29m v1.23.12

node-27 NotReady <none> 16m v1.23.12

[root@node-29 ~]# kubectl get nodes #after copying admin.conf, KUBECONFIG must be set before kubectl can use it

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@node-29 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@node-29 ~]# source ~/.bash_profile

[root@node-29 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master-26 NotReady control-plane,master 39m v1.23.12

master-28 NotReady control-plane,master 35m v1.23.12

node-27 NotReady <none> 21m v1.23.12

node-29 NotReady <none> 19m v1.23.12
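Appending with `echo ... >>` adds a duplicate line every time the step is re-run. A minimal idempotent variant, with a hypothetical helper name. Keep in mind that admin.conf carries cluster-admin credentials, so every machine it is copied to can fully control the cluster:

```shell
# Add "export KUBECONFIG=..." to a shell profile only if the line is missing.
persist_kubeconfig() {
  local profile="${1:-$HOME/.bash_profile}"
  local conf="${2:-/etc/kubernetes/admin.conf}"
  local line="export KUBECONFIG=$conf"
  grep -qxF "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
}
```

Follow it with `source ~/.bash_profile` as above.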

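15. Deploy the container network (a CNI network plugin). Applying the manifest once from a master is enough, since flannel runs as a DaemonSet on every node. If some nodes are still NotReady afterwards and the steps were correct, wait a while and check again.

#Configure the pod network from a master node

#After the worker nodes joined, kubectl get nodes on the master showed NotReady because no CNI network plugin has been deployed yet. In fact, when the master was initialized in step 10, kubeadm already told us to deploy a pod network.

#Pod networking in k8s is provided by third-party plugins, of which there are dozens; well-known ones include flannel, calico, canal and kube-router. A simple, easy-to-use option is flannel, originally from CoreOS.

#The command below applies the pod network configuration online. Because the site is hosted abroad, it may fail. In that case, look up the IP of raw.githubusercontent.com on http://ip.tool.chinaz.com/, add the mapping to /etc/hosts, and apply the configuration again; it usually succeeds after a few tries.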

[root@master-26 ~]# echo "185.199.111.133 raw.githubusercontent.com" >> /etc/hosts #the address I found; add it on every machine

[root@master-26 ~]# kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml #if it fails, retry a few times or switch to a mobile hotspot

namespace/kube-flannel unchanged

clusterrole.rbac.authorization.k8s.io/flannel unchanged

clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.apps/kube-flannel-ds unchanged
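Since the online apply may need several attempts, a small retry wrapper saves re-typing the command. A minimal sketch; `retry` is a hypothetical helper, not a standard command:

```shell
# Run a command up to ATTEMPTS times, sleeping DELAY seconds between failures.
# Returns 0 on the first success, otherwise the exit status of the last attempt.
retry() {
  local attempts="$1" delay="$2" i=1 status=0
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    status=$?
    echo "attempt $i/$attempts failed (exit $status)" >&2
    i=$((i + 1))
    [ "$i" -le "$attempts" ] && sleep "$delay"
  done
  return "$status"
}
```

For example: `retry 5 10 kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml`.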

[root@master-26 ~]# kubectl get pods -n kube-system #check pod status

NAME READY STATUS RESTARTS AGE

coredns-7f6cbbb7b8-9k7gx 1/1 Running 0 46m

coredns-7f6cbbb7b8-n7vcd 1/1 Running 0 46m

etcd-master-26 1/1 Running 2 46m

kube-apiserver-master-26 1/1 Running 3 46m

kube-controller-manager-master-26 1/1 Running 2 46m

kube-proxy-mpxck 1/1 Running 1 (5m6s ago) 42m

kube-proxy-nrvcq 1/1 Running 0 13m

kube-proxy-tjn44 1/1 Running 0 46m

kube-scheduler-master-26 1/1 Running 2 46m

[root@master-26 ~]# kubectl get nodes #list nodes

NAME STATUS ROLES AGE VERSION

master-26 NotReady control-plane,master 47m v1.22.6

node-27 Ready <none> 43m v1.22.6

node-29 Ready <none> 13m v1.22.6
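To list only the nodes that are not Ready instead of reading the table by eye, the STATUS column can be filtered. A sketch with a hypothetical helper name; it treats any status starting with Ready (for example Ready,SchedulingDisabled) as ready:

```shell
# Print the names of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 !~ /^Ready/ { print $1 }'
}
```

Each printed name can then be inspected with `kubectl describe node`. To simply block until every node is up, `kubectl wait --for=condition=Ready node --all --timeout=300s` does the waiting.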

[root@master-26 ~]# kubectl describe node master-26 #for a NotReady node, check the reason

……

container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

……

#The error above can be fixed as follows

[root@master-26 ~]# mv /etc/containerd/config.toml /tmp/

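[root@master-26 ~]# systemctl restart containerd

16. Verify that the cluster works. Note the externally exposed port number (it is generated automatically) and make sure you use the right one. Once this works, you can change it to whatever port your internal network needs.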

[root@master-26 ~]# kubectl create deployment httpd --image=httpd

deployment.apps/httpd created

[root@master-26 ~]# kubectl expose deployment httpd --port=80 --type=NodePort

service/httpd exposed

[root@master-26 ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/httpd-694d7c7586-wzsrt 1/1 Running 0 10m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/httpd NodePort 10.108.208.52 <none> 80:32758/TCP 9m47s

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 54m
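Because the NodePort (32758 here) is allocated automatically, from the 30000-32767 range by default, it is easy to copy the wrong one. A sketch that reads it from the service instead; `nodeport_url` is a hypothetical helper and assumes the service exposes a single port:

```shell
# Print http://HOST:NODEPORT for a NodePort service, reading the allocated port
# from the service spec rather than copying it from kubectl get svc by hand.
nodeport_url() {
  local svc="$1" host="$2" port
  port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  [ -n "$port" ] || return 1
  echo "http://$host:$port"
}
```

For example, curl "$(nodeport_url httpd 192.168.1.27)" should return the default Apache httpd page from any node's IP.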