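DevOps: A Summary of CI/CD Practice

Background

I started working with CI/CD in 2015, eight years ago now. At that time I was at a large internet company, and we were not yet using Git. Everything started on SVN, and Git was rolled out gradually later. Automated deployment was always in place, but it was built on custom development on top of the open-source Jenkins.

In 2017 I began building an in-house CI/CD platform at my current company, six years ago now. The current version is based mainly on GitLab and Jenkins, with Kubernetes added later. Besides microservice architectures, it also supports heterogeneous platforms. Overall it has become fairly mature, after three major version iterations. This post summarizes how our DevOps setup evolved over these years and the lessons we learned, in the hope that it is useful to others.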

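Version evolution

Version 1: the basics

Main points:

- GitLab is the continuous integration tool. Every commit triggers an automatic build on GitLab Runner. Java microservices are built with Maven, and the artifact is an executable JAR.

- Built artifacts are uploaded automatically to the Nexus artifact repository, and the CD scripts pull them from there.

- Jenkins is the automated deployment tool. A deployment project is created on GitLab with a separate directory for each project. The path of each Groovy deployment script is configured in Jenkins as a pipeline, and deployments are triggered manually.

- The deployment flow connects the company data center with the public cloud: CI/CD runs in the data center and can deploy applications to the cloud.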

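Version 2: Kubernetes integration

Main points:

- The CI/CD scripts of all microservices were upgraded and reworked to deploy to a Kubernetes cluster.

- Microservices build Docker images with a Maven plugin and push them to a Harbor registry.

- Deployment runs `kubectl apply -f ***deployment.yaml` on the Jenkins agent.

- Because this was our first use of Kubernetes, this version of CI/CD mainly served the development and test environments.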

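Version 3: builds and deployments run in pods

Main points:

- Applications and services were not the only things that moved into Kubernetes. The Jenkins agents that run deployment jobs and the GitLab runners that run build jobs moved there too, which made large-scale parallel builds and deployments practical.

- Cluster deployment of heterogeneous systems is supported: programs written mainly in Python, Java, and C++ all run in the Kubernetes cluster.

- This version has been rolled out gradually to production, and all new production services run in the Kubernetes cluster.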

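Comparison of the versions

The first version delivered basic CI/CD and laid a foundation, but its problems were obvious. Scaling out a microservice by adding nodes meant installing the environment on every new node. Because we deployed JARs directly, at least a Java runtime had to be installed. Every scale-out also meant editing the deployment script to add the new nodes, and every deployment had to iterate over the full node list.

Version 2 introduced Kubernetes, so scaling out no longer required installing anything new: changing the service's replica count was enough. However, this version was still used mainly for development and test environments.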
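A minimal dry-run sketch of that difference: it only prints commands and never runs them. The host names, the `deploy` user, `/opt/app`, and the systemd unit `app` are hypothetical placeholders, not our actual version-1 scripts. `kubectl scale` is the standard kubectl command for changing a Deployment's replica count.

```bash
#!/usr/bin/env bash
# Dry-run sketch: print the commands each version needs to add capacity.
# Host names, the deploy user, /opt/app and the systemd unit "app" are hypothetical.

# Version 1: nodes are listed explicitly. Adding a node means editing this list
# (and installing Java on the node), and every deployment walks the whole list.
v1_deploy_commands() {
  local jar="$1"
  shift
  local host
  for host in "$@"; do
    echo "scp ${jar} deploy@${host}:/opt/app/"
    echo "ssh deploy@${host} systemctl restart app"
  done
}

# Version 2: there is no node list. Scaling is a single change to the
# Deployment's replica count, and Kubernetes schedules the extra pods.
v2_scale_command() {
  local deployment="$1" namespace="$2" replicas="$3"
  echo "kubectl scale deployment/${deployment} -n ${namespace} --replicas=${replicas}"
}
```

For example, `v2_scale_command app dev 3` prints `kubectl scale deployment/app -n dev --replicas=3`. The version-1 function prints two commands per node, so its output grows with the node list.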

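The biggest improvement in version 3 was bringing Kubernetes into production, where new services are now all deployed with Kubernetes. Many of our services are written in Python or C++. For these the gain came mostly on the CI side, and it was substantial. Setting up a C++ build environment is notoriously painful. For example, third-party libraries that C++ code depends on have to be installed by hand, and once the environment changes some of them may no longer install. Running builds and deployments on Kubernetes, with the environment defined in the image, largely solved these problems for C++ services.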

Branching model

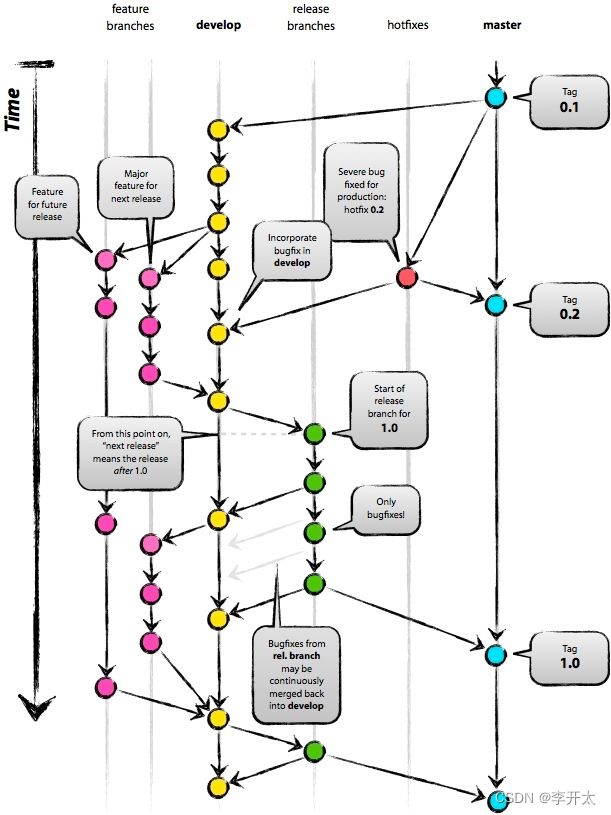

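Our projects use gitflow for branch and version management.

Feature branches (feature)

Before development starts, each piece of work gets a feature branch created from develop. Developers commit and self-test on the feature branch. Once the work is stable and ready for testing, it is merged into develop.

Create a feature branch from develop. You can choose whether to change the version in the pom(s); by default the feature name is added to the version in the pom(s).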

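```bash
# make sure there are no uncommitted changes
mvn gitflow:feature-start
# enter the feature name when prompted
# check that every place where the version number appears was updated correctly
# do the development work
# commit all outstanding changes
```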

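Merge the feature branch into develop and restore the previous version in the pom(s). The plugin deletes the feature branch by default, but the command below passes -DkeepBranch=true, so the branch is kept.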

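```bash
# -DpushRemote=true: push the result to the remote
# -DskipTestProject=true: skip the tests
# -DkeepBranch=true: keep the feature branch
mvn -DpushRemote=true -DskipTestProject=true -DkeepBranch=true gitflow:feature-finish
# choose the feature to finish (several features can be open at the same time)
# check that every place where the version number appears was updated correctly

# optional: trigger CI to publish a new build of the develop branch
git push origin develop:develop
```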

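Testers test against the develop branch, and developers fix bugs on develop as well.

Release (release)

Once testing passes, the testers notify the developers to cut a release. After the release goes live successfully, run finish on the release branch: the release branch is deleted, the version is merged into master, and a version tag is created.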


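Note that the feature and develop branches are not stable: they produce SNAPSHOT versions, whereas a release produces a stable version. The two kinds of versions go to different Nexus repositories with different retention rules. Unstable versions are kept for only 7 days and then expire; otherwise the number of versions would keep growing and the storage cost would become unmanageable.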
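The split between the two repositories comes down to two small rules, sketched here assuming the standard Maven convention that unstable versions end in `-SNAPSHOT`. The repository names `maven-snapshots` and `maven-releases` are hypothetical. In Nexus 3 the 7-day expiry would normally be configured as a cleanup policy on the snapshot repository rather than scripted, so the functions only illustrate the decision.

```bash
#!/usr/bin/env bash
# Sketch of the snapshot/release split. Repository names are hypothetical.

# Maven convention: versions ending in -SNAPSHOT are unstable builds.
target_repository() {
  case "$1" in
    *-SNAPSHOT) echo "maven-snapshots" ;;
    *) echo "maven-releases" ;;
  esac
}

# Succeeds (exit 0) when an artifact uploaded at uploaded_epoch is older than
# the retention window (7 days by default) at time now_epoch, both in seconds.
snapshot_expired() {
  local uploaded_epoch="$1" now_epoch="$2" retention_days="${3:-7}"
  (( now_epoch - uploaded_epoch > retention_days * 86400 ))
}
```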

So the automation scripts below use different build logic for different branches.
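You can reason about the branch-to-job mapping outside GitLab with a small bash function. This is a sketch that mirrors the only/except patterns of the .gitlab-ci.yml below, rewritten as POSIX extended regexes (`\d` becomes `[0-9]`, `\S` becomes `[^[:space:]]`). GitLab evaluates the original patterns itself, so treat the function as an approximation for checking branch names.

```bash
#!/usr/bin/env bash
# Sketch: which set of jobs in .gitlab-ci.yml runs for a given ref name.
# Patterns mirror the only/except lists, translated to POSIX ERE.
RELEASE_REF_RE='^(hotfix|release)/.+$|^support/.*$'
VERSION_TAG_RE='^v[0-9]+\.[0-9]+(\.[0-9]+)?(-[^[:space:]]*)?'

# Prints "release" (build_releases/publish_release), "snapshot"
# (build_snapshots/publish_snapshots) or "none" (master and version tags).
pipeline_flavor() {
  local ref="$1"
  if [[ $ref =~ $RELEASE_REF_RE ]]; then
    echo "release"
  elif [[ $ref == "master" || $ref =~ $VERSION_TAG_RE ]]; then
    echo "none"
  else
    echo "snapshot"
  fi
}
```

For instance, `feature/login` and `develop` come out as `snapshot`, `release/1.2.0` as `release`, and `master` or `v1.2.3` as `none`.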

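Automation scripts

To simplify and standardize the CI/CD scripts, we provide a shared library (share_library) that abstracts commonly used functions. Developers adjust a template slightly and call the shared library's functions, so writing a script takes very little work.

Continuous integration pipeline

Every project has a .gitlab-ci.yml file and a ci.sh file that define simple build logic. The following is a typical CI pipeline definition: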

```yaml
before_script:
  - export CI_OPT_SITE=false
  - export CI_OPT_GITHUB_SITE_PUBLISH=false
  - export CI_OPT_INFRASTRUCTURE=private
  - export CI_OPT_MAVEN_EFFECTIVE_POM=false
  - export CI_OPT_PUBLISH_TO_REPO=true
  - export CI_OPT_TEST_SKIP=true
  - export CI_OPT_INTEGRATION_TEST_SKIP=true

build_snapshots:
  artifacts:
    when: on_success
    expire_in: 1 day
    paths:
      - artifacts.tar.gz
  except:
    - master
    - /^hotfix\/.+$/
    - /^release\/.+$/
    - /^support\/.*$/
    - /^v\d+\.\d+(\.\d+)?(-\S*)?/
  script:
    - bash ci.sh mvn clean package;
    - echo archive $(git ls-files -o | grep -Ev '.+-exec\.jar' | grep -v 'artifacts.tar.gz' | wc -l) files into artifacts.tar.gz;
    - git ls-files -o | grep -Ev '.+-exec\.jar' | grep -v 'artifacts.tar.gz' | tar -czf artifacts.tar.gz -T -;
  stage: build

publish_snapshots:
  artifacts:
    expire_in: 1 day
    paths:
      - artifacts.tar.gz
  dependencies:
    - build_snapshots
  except:
    - master
    - /^hotfix\/.+$/
    - /^release\/.+$/
    - /^support\/.*$/
    - /^v\d+\.\d+(\.\d+)?(-\S*)?/
  # a job-level before_script replaces the global one, so the global exports above do not apply to this job
  before_script:
    - export CI_OPT_CLEAN_SKIP=true
    - export CI_OPT_INTEGRATION_TEST_SKIP=true
    - export CI_OPT_TEST_SKIP=true
    - export CI_OPT_EXTRA_MAVEN_OPTS="-DsendCredentialsOverHttp=true"
  script:
    - if [ -f artifacts.tar.gz ]; then tar -xzf artifacts.tar.gz; fi;
    - bash ci.sh mvn -e deploy;
    - echo archive $(git ls-files -o | grep -Ev '.+-exec\.jar' | grep -v 'artifacts.tar.gz' | wc -l) files into artifacts.tar.gz;
    - git ls-files -o | grep -Ev '.+-exec\.jar' | grep -v 'artifacts.tar.gz' | tar -czf artifacts.tar.gz -T -;
  stage: publish

build_releases:
  artifacts:
    expire_in: 1 day
    paths:
      - artifacts.tar.gz
  only:
    - /^hotfix\/.+$/
    - /^release\/.+$/
    - /^support\/.*$/
  script:
    - bash ci.sh mvn -e clean package;
    # archive the build outputs as build_snapshots does; otherwise artifacts.tar.gz is never created
    - git ls-files -o | grep -Ev '.+-exec\.jar' | grep -v 'artifacts.tar.gz' | tar -czf artifacts.tar.gz -T -;
  stage: build

publish_release:
  dependencies:
    - build_releases
  only:
    - /^release\/.+$/
    - /^hotfix\/.+$/
    - /^support\/.*$/
  # replaces the global before_script, as in publish_snapshots
  before_script:
    - export CI_OPT_CLEAN_SKIP=true
    - export CI_OPT_INTEGRATION_TEST_SKIP=true
    - export CI_OPT_TEST_SKIP=true
    - export CI_OPT_EXTRA_MAVEN_OPTS="-DsendCredentialsOverHttp=true -Dskip-quality=true"
  script:
    - if [ -f artifacts.tar.gz ]; then tar -xzf artifacts.tar.gz; fi;
    - bash ci.sh mvn -e deploy
  stage: publish

stages:
  - build
  - publish
```

The pipeline defines two stages: build produces the artifacts, and publish deploys them to the Nexus repository.
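The archive lines in the jobs above connect the stages. `git ls-files -o` lists untracked files, which includes ignored build outputs such as `target/` because no exclude options are given. The filtered list is packed into `artifacts.tar.gz`, which GitLab hands to the next job through the `artifacts` and `dependencies` settings. Here is the same filter factored into functions; the function names are illustrative, not part of our scripts.

```bash
#!/usr/bin/env bash
# Reads paths on stdin, one per line, and prints the ones to archive:
# executable *-exec.jar files and the archive itself are dropped,
# using the same grep filters as the .gitlab-ci.yml jobs.
filter_artifact_paths() {
  grep -Ev '.+-exec\.jar' | grep -v 'artifacts.tar.gz'
}

# Packs the filtered paths from stdin into the given archive
# (default artifacts.tar.gz); tar -T - reads the file list from stdin.
pack_artifacts() {
  local archive="${1:-artifacts.tar.gz}"
  filter_artifact_paths | tar -czf "$archive" -T -
}
```

In a job, this would be used as `git ls-files -o | pack_artifacts`.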

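.gitlab-ci.yml calls ci.sh, which sets up the build logic. It mainly sets the options from which the Maven build parameters are assembled; the final parameters end up in the environment variable MAVEN_OPTS used by the build. Here is ci.sh: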

```bash
#!/usr/bin/env bash

echo -e "\n>>>>>>>>>> ---------- default options ---------- >>>>>>>>>>"

if [ -z "${CI_INFRA_OPT_GIT_PREFIX}" ]; then CI_INFRA_OPT_GIT_PREFIX="http://gitlab.td.internal"; fi
if [ -z "${CI_OPT_CI_SCRIPT}" ]; then CI_OPT_CI_SCRIPT="${CI_INFRA_OPT_GIT_PREFIX}/infra/maven-build/raw/master/src/main/ci-script/lib_ci.sh"; fi
if [ -z "${CI_OPT_GITHUB_SITE_PUBLISH}" ]; then CI_OPT_GITHUB_SITE_PUBLISH="false"; fi
if [ -z "${CI_OPT_GITHUB_SITE_REPO_OWNER}" ]; then CI_OPT_GITHUB_SITE_REPO_OWNER="spec"; fi
if [ -z "${CI_OPT_GPG_KEYNAME}" ]; then CI_OPT_GPG_KEYNAME="59DBF10E"; fi
if [ -z "${CI_OPT_INFRASTRUCTURE}" ]; then CI_OPT_INFRASTRUCTURE="private"; fi
if [ -z "${CI_OPT_DEPENDENCY_CHECK}" ]; then CI_OPT_DEPENDENCY_CHECK="false"; fi
if [ -z "${CI_OPT_MAVEN_EFFECTIVE_POM}" ]; then CI_OPT_MAVEN_EFFECTIVE_POM="false"; fi
if [ -z "${CI_OPT_ORIGIN_REPO_SLUG}" ]; then
    if [ -n "${CI_PROJECT_PATH}" ]; then CI_OPT_ORIGIN_REPO_SLUG="spec/muji-strategy-produce"; else CI_OPT_ORIGIN_REPO_SLUG="spec/infrastructure"; fi
fi
CI_INFRA_OPT_MAVEN_BUILD_OPTS_REPO="${CI_INFRA_OPT_GIT_PREFIX}/infra/maven-build-opts-${CI_OPT_INFRASTRUCTURE}/raw/master";
if [ -z "${CI_OPT_SITE}" ]; then CI_OPT_SITE="true"; fi
if [ -z "${CI_OPT_SITE_PATH_PREFIX}" ] && [ "${CI_OPT_GITHUB_SITE_PUBLISH}" == "true" ]; then
    # github site repo ci-and-cd/ci-and-cd (CI_OPT_GITHUB_SITE_REPO_NAME)
    CI_OPT_SITE_PATH_PREFIX="spec"
elif [ -z "${CI_OPT_SITE_PATH_PREFIX}" ] && [ "${CI_OPT_GITHUB_SITE_PUBLISH}" == "false" ]; then
    # site in nexus3 raw repository
    CI_OPT_SITE_PATH_PREFIX="spec/muji-strategy-produce"
fi
if [ -z "${CI_OPT_SONAR_ORGANIZATION}" ]; then CI_OPT_SONAR_ORGANIZATION="spec"; fi
if [ -z "${CI_OPT_SONAR}" ]; then CI_OPT_SONAR="true"; fi
#if [ -z "${LOGGING_LEVEL_ROOT}" ]; then export LOGGING_LEVEL_ROOT="INFO"; fi

echo -e "<<<<<<<<<< ---------- default options ---------- <<<<<<<<<<\n"

echo -e "\n>>>>>>>>>> ---------- call remote script ---------- >>>>>>>>>>"
echo "set -e; curl -f -s -L ${CI_OPT_CI_SCRIPT} > /tmp/$(basename $(pwd))-lib_ci.sh; set +e; source /tmp/$(basename $(pwd))-lib_ci.sh"
set -e; curl -f -s -L ${CI_OPT_CI_SCRIPT} > /tmp/$(basename $(pwd))-lib_ci.sh; set +e; source /tmp/$(basename $(pwd))-lib_ci.sh
echo -e "<<<<<<<<<< ---------- call remote script ---------- <<<<<<<<<<\n"
```

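ci.sh contains little more than environment variables and parameters specific to the current microservice project. At the end it sources the shared library lib_ci.sh, which generates MAVEN_OPTS, the parameters the Maven build needs.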
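Here are two small illustrations around ci.sh, each under stated assumptions. The first shows a more compact way to write its defaults with bash parameter expansion. The second is a hypothetical sketch of how CI_OPT_* switches could be turned into Maven properties. lib_ci.sh is not shown in this post, so its real mapping may well differ. `-DskipTests` (Surefire) and `-DskipITs` (Failsafe) are standard Maven plugin properties.

```bash
#!/usr/bin/env bash
# 1) Compact form of ci.sh's "if [ -z ... ]; then ...; fi" defaults:
#    ${VAR:=value} assigns value when VAR is unset or empty; ':' discards the result.
apply_ci_defaults() {
  : "${CI_OPT_INFRASTRUCTURE:=private}"
  : "${CI_OPT_SITE:=true}"
  : "${CI_OPT_SONAR:=true}"
}

# 2) Hypothetical mapping from CI_OPT_* switches to Maven properties
#    (an illustration only, not the contents of lib_ci.sh).
build_maven_flags() {
  local flags=()
  if [ "${CI_OPT_TEST_SKIP:-false}" = "true" ]; then flags+=("-DskipTests"); fi
  if [ "${CI_OPT_INTEGRATION_TEST_SKIP:-false}" = "true" ]; then flags+=("-DskipITs"); fi
  if [ -n "${CI_OPT_EXTRA_MAVEN_OPTS:-}" ]; then flags+=("${CI_OPT_EXTRA_MAVEN_OPTS}"); fi
  echo "${flags[*]:-}"
}
```

The `:=` form keeps a value that the job has already exported, which matches how the `before_script` exports in .gitlab-ci.yml take precedence over ci.sh's defaults.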

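Continuous deployment pipeline

The deployment pipeline lives in a separate project rather than in each microservice's project. Committing code is fairly casual, but deploying is serious, because a deployment has real effects. So deployments are not triggered by commits: a developer triggers them manually with a click. The Jenkins pipeline pipeline.groovy is the single entry point for all CD scripts: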

```groovy
#!/usr/bin/env groovy

package pipeline

// per-project configuration
class Config {
    public static String DEPLOY_REMOTE_USER = "spec"
    public static String DEPLOY_REMOTE_HOST_PORT = "22"
    // internal private project that holds security information
    public static String PROJECT_OSS_INTERNAL = "http://gitlab.tongdao.info/home1-oss/oss-internal.git"
    // deployment information for this project
    public static String APP_DEPLOY = "http://gitlab.tongdao.info/k8s-deploy/muji-strat-k8s-deploy.git"
    public static String APP_DEPLOY_BRANCH = "develop"
    // global shared library
    public static String WGL_LIBRARIES = "http://gitlab.tongdao.info/home1-oss/jenkins-wgl.git"
    // configure global credentials in Jenkins with the admin role, and give them this credentialsId
    public static String JENKINS_CREDENTIALS_ID = "gitlab"
    public static String NODE_LABEL = "spec"
    public static String DEF_PROJECT_VERSION = "2.0.0-SNAPSHOT"
    public static String DEF_WORK_DIR = "/www/ws"
    public static String DEF_ENV = "test\ndev\nstaging"
}

// load the shared pipeline library, which contains Deploy.groovy and Utilities.groovy
library identifier: 'jenkins-wgl@master',
        retriever: modernSCM([$class: 'GitSCMSource', remote: "${Config.WGL_LIBRARIES}", credentialsId: "${Config.JENKINS_CREDENTIALS_ID}"])

def project

podTemplate(inheritFrom: 'k8s-deploy') {
    node(POD_LABEL) {
        // check out the deployment project and list the projects it contains
        stage("Initialize") {
            timestamps {
                step([$class: 'WsCleanup'])
                sh "ls -la ${pwd()}"
                git branch: "${Config.APP_DEPLOY_BRANCH}", credentialsId: "${Config.JENKINS_CREDENTIALS_ID}", url: "${Config.APP_DEPLOY}"
                def workspace = pwd()
                project = Utilities.getProjectList("${workspace}")
                echo "project is : ${project}"
            }
        }

        // collect the deployment parameters; declared outside the stage so later stages can use them
        def paramMap = [:]
        stage("Collect parameters") {
            timeout(3) { // time out after 3 minutes
                timestamps {
                    def defaultEnv = getEnv("$JOB_NAME", Config.DEF_ENV)
                    echo "envName=${defaultEnv}"
                    paramMap = input id: 'Environment_id', message: 'Customize your parameters', ok: 'Submit', parameters:
                            Utilities.getInputParam(project, Config.DEF_PROJECT_VERSION, "$JOB_BASE_NAME", defaultEnv)
                    println("param is :" + paramMap.inspect())
                }
                manager.addShortText("${paramMap.PROJECT_VERSION}")
            }
        }

        stage("Prepare resources") {
            timestamps {
                // workspace must be defined here: the variable from the first stage is local to that closure
                def workspace = pwd()
                if (fileExists("${paramMap.PROJECT}")) {
                    echo "stash project: ${paramMap.PROJECT}"
                    def envJson = readFile file: "${workspace}/${paramMap.PROJECT}/environments/environment.json"
                    paramMap = Utilities.generateParam(paramMap, envJson)
                    privateConfig(paramMap)
                    // credentials come from this deployment project by default; if missing, they come from the shared oss-internal project
                    if (fileExists("src/main/credentials/config")) {
                        dir("src/main/credentials/") {
                            stash name: "config", includes: "config"
                        }
                    }
                    // persist the parameters
                    writeFile(file: 'data.zip', text: paramMap.inspect(), encoding: 'utf-8')
                    stash name: "data.zip", includes: "data.zip"
                    stash name: "${paramMap.PROJECT}", includes: "${paramMap.PROJECT}/**/*"
                } else {
                    // stop here: the later stages cannot run without the project files
                    error("project: ${paramMap.PROJECT} in ${Config.APP_DEPLOY} does not exist, please check!")
                }
                sh "ls -la ${pwd()}"
            }
        }

        stage("Deploy service") {
            container('kubectl') {
                timestamps {
                    try {
                        step([$class: 'WsCleanup'])
                        unstash "config"
                        sh "ls -la"
                        def workspace = pwd()
                        // each sh step runs in its own shell, so "sh 'export KUBECONFIG=config'" has no effect on
                        // later steps; withEnv sets the variable for every step inside the block
                        withEnv(["KUBECONFIG=${workspace}/config"]) {
                            unstash "${paramMap.PROJECT}"
                            sh "ls -la ${workspace}"
                            def ipNode = paramMap["PRE_NODES"][0]
                            def deployParam = Utilities.generateSSHKeyParam(paramMap, ipNode)
                            dir("${paramMap.PROJECT}") {
                                Utilities.deploy(paramMap, deployParam, workspace)
                            }
                        }
                    } catch (e) {
                        def w = new StringWriter()
                        e.printStackTrace(new PrintWriter(w))
                        echo "Deployment failed, error is: ${w}"
                        throw e
                    }
                }
            }
        }

        stage("Verify rollout") {
            container('kubectl') {
                unstash "config"
                def deploy_result = "FAILURE"
                // poll up to 25 times, 3 seconds apart (about 75 seconds in total)
                for (int i = 0; i < 25; i++) {
                    sleep(time: 3, unit: "SECONDS")
                    // Jenkins runs sh with -e, so "|| true" keeps a failing kubectl from aborting the step;
                    // readyReplicas is compared because .status.replicas also counts pods that are not ready
                    deploy_result = sh(
                            script: """
                                export KUBECONFIG=config
                                kubectl config use-context ${paramMap.ENV} >> /dev/null
                                current_result=FAILURE
                                ready_replicas=`kubectl get deployments. ${paramMap.PROJECT} -n ${paramMap.ENV} -o go-template --template='{{.status.readyReplicas}}' || true`
                                desired_replicas=`kubectl get deployments. ${paramMap.PROJECT} -n ${paramMap.ENV} -o go-template --template='{{.spec.replicas}}' || true`
                                if [ -n "\$ready_replicas" ] && [ "\$ready_replicas" = "\$desired_replicas" ]; then
                                    current_result="SUCCESS"
                                fi
                                echo "\$current_result"
                            """, returnStdout: true).trim()
                    echo "------------"
                    echo "${deploy_result}"
                    echo "------------"
                    if ("SUCCESS".equalsIgnoreCase("${deploy_result}")) {
                        break
                    }
                }
                if (!"SUCCESS".equalsIgnoreCase("${deploy_result}")) {
                    // fail the build so that the "Done" stage does not report success
                    currentBuild.result = "FAILURE"
                    error("Deployment failed: ${paramMap.PROJECT} did not become ready in ${paramMap.ENV}")
                }
            }
        }

        stage("Done") {
            echo "--------------Deploy Success--------------- "
        }
    }
}

/**
 * Project-specific custom parameters
 * @param paramMap
 * @return
 */
def privateConfig(paramMap) {
    paramMap.DEPLOY_USER = Config.DEPLOY_REMOTE_USER
    paramMap.WORK_DIR = Config.DEF_WORK_DIR
}

// Derive the environment from the job name: the text after a "-" that starts with a letter, up to the next "/".
// Falls back to defEnv. @NonCPS methods must not call pipeline steps such as echo, so the caller logs the result.
@NonCPS
def getEnv(text, defEnv) {
    def pattern = ~"(?<=-)([a-zA-Z].*?)(?=/)"
    def matcher = text =~ pattern
    def envName = (matcher && matcher[0][1]) ? matcher[0][1] : defEnv
    return envName
}
```
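getEnv picks the default environment out of the Jenkins job name with the regex `(?<=-)([a-zA-Z].*?)(?=/)`, which matches the text that follows a `-`, starts with a letter, and runs up to the next `/`. Bash regexes have no lookbehind, but `-([a-zA-Z][^/]*)/` with a capture group selects the same text: the leftmost match begins at the same `-`, and `[^/]*` stops at the first `/`, just like the lazy `.*?` before `(?=/)`. The sketch below is a quick way to check job names outside Jenkins; the function name and the job names are hypothetical.

```bash
#!/usr/bin/env bash
# Bash equivalent of getEnv in pipeline.groovy: print the captured environment
# name, or the default when the job name does not match.
env_from_job_name() {
  local job_name="$1" default_env="$2"
  local re='-([a-zA-Z][^/]*)/'
  if [[ $job_name =~ $re ]]; then
    echo "${BASH_REMATCH[1]}"
  else
    echo "$default_env"
  fi
}
```

With a hypothetical job name `deploy-test/muji-strategy-produce`, `env_from_job_name 'deploy-test/muji-strategy-produce' dev` prints `test`. A name without a `/` falls back to the default.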

The entry pipeline calls the shared library Utilities.groovy, which implements the common deployment dispatch logic:

```groovy
def deploy(paramMap, deployParam, workspace) {
    println("=======new version for deploy!!!!")
    def deployObj = new DeployUtil()
    if (paramMap.PROJECT_TYPE == "jar") {
        return deployObj.deployJar(paramMap, deployParam, workspace, "-i ${workspace}/id_rsa -p " + deployParam.PORT)
    } else if (paramMap.PROJECT_TYPE == "docker-compose") {
        return deployObj.deployDockerCompose(paramMap, deployParam, workspace, "")
    } else if (paramMap.PROJECT_TYPE == "docker-k8s") {
        return deployObj.deployDockerK8s(paramMap, deployParam, workspace, "")
    } else if (paramMap.PROJECT_TYPE == "tar.gz") {
        return deployObj.deployTar(paramMap, deployParam, workspace, "-i ${workspace}/id_rsa -p " + deployParam.PORT)
    } else if (paramMap.PROJECT_TYPE == "war") {
        return deployObj.deployWar(paramMap, deployParam, workspace, "-i ${workspace}/id_rsa -p " + deployParam.PORT)
    } else if (paramMap.PROJECT_TYPE == "tgz") { // npm front-end project
        return deployObj.deployNpm(paramMap, deployParam, workspace, "-i ${workspace}/id_rsa -p " + deployParam.PORT)
    } else {
        println("not support this deploy type !please check config for your environment.json")
    }
    return null
}
```
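The dispatch above splits project types into two families. jar, tar.gz, war, and tgz receive `-i <workspace>/id_rsa -p <port>` as their extra options, while docker-compose and docker-k8s receive an empty string. Here is that rule restated in bash as a hypothetical helper; it is not part of DeployUtil.groovy, and what DeployUtil does with the options is not shown in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helper restating the PROJECT_TYPE dispatch in Utilities.groovy's
# deploy(): prints the extra options string each project type is given.
ssh_opts_for_type() {
  local project_type="$1" workspace="$2" port="$3"
  case "$project_type" in
    jar|tar.gz|war|tgz)
      # host-based types get the SSH key and port
      echo "-i ${workspace}/id_rsa -p ${port}"
      ;;
    docker-compose|docker-k8s)
      # container-based types get an empty options string
      echo ""
      ;;
    *)
      echo "not support this deploy type: ${project_type}" >&2
      return 1
      ;;
  esac
}
```

Putting the rule in one place like this would also remove the four repeated option strings in the Groovy branches.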

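Each project type takes its own branch: JAR deployment, docker-k8s deployment, WAR deployment, front-end deployment, and so on. Each branch calls the shared library DeployUtil.groovy, which in turn runs the project's deploy.sh. Developers write each project's deploy.sh themselves, usually by lightly adapting a template, so the effort is small. Here is the deploy.sh of one project: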

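```bash
start(){
    # remove the existing Deployment and any leftover pods first
    # (this causes downtime: applying the changed manifest without deleting first would let the Deployment roll out gradually)
    {
        kubectl delete deployments. -n "$PROJECT_ENV" muji-strategy-produce-server --force --grace-period=0
        sleep 5
        kubectl get po -n "$PROJECT_ENV" | grep muji-strategy-produce-server | awk '{print $1}' | xargs kubectl delete po -n "$PROJECT_ENV" --force --grace-period=0
    } || { # catch
        echo "deployment does not exist"
    }

    # exported so that the manifest templates can reference it as ${TS}
    export TS=`date +'%s'`
    yaml=`pwd`/environments/$PROJECT_ENV
    echo "$yaml"
    # render each manifest template with envsubst, then apply it
    for file in "$yaml"/*; do
        envsubst < "$file" > "${file}-deploy"
        cat "${file}-deploy"
        /bin/kubectl apply -f "${file}-deploy"
    done

    echo "start $PROJECT_NAME OK"
}
```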
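A variant of the templating loop in deploy.sh makes two deliberate changes, so it is a sketch rather than the script we run. Rendered files go to a separate directory, so templates and output never mix. envsubst also gets an explicit variable list in its SHELL-FORMAT argument, so it replaces only those variables and leaves any other `$` text in the YAML alone. The list `${PROJECT_ENV} ${TS}` and the output directory are assumptions, and envsubst comes from GNU gettext.

```bash
#!/usr/bin/env bash
# Render every template in src_dir into out_dir, substituting only PROJECT_ENV and TS.
render_manifests() {
  local src_dir="$1" out_dir="$2"
  local file
  mkdir -p "$out_dir"
  for file in "$src_dir"/*; do
    [ -f "$file" ] || continue   # also skips the literal pattern when the directory is empty
    envsubst '${PROJECT_ENV} ${TS}' < "$file" > "$out_dir/$(basename "$file")"
  done
}

# Usage inside start(): render, then apply the whole directory in one call.
#   export TS="$(date +%s)"
#   render_manifests "$(pwd)/environments/$PROJECT_ENV" "$(pwd)/rendered"
#   kubectl apply -f "$(pwd)/rendered"
```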

This project's deployment script is simply the template with the project name changed.
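After deploy.sh has applied the manifests, the verification stage of pipeline.groovy polls the Deployment up to 25 times, 3 seconds apart. Below is the same polling pattern as a bash sketch. The readiness check is passed in as a command, so the loop can be exercised without a cluster. `deployment_ready` is a hypothetical check that compares ready and desired replicas. kubectl also has a built-in way to do this wait: `kubectl rollout status deployment/<name> -n <namespace> --timeout=75s`.

```bash
#!/usr/bin/env bash
# Run a check command up to max_attempts times, sleeping interval seconds
# between attempts. Prints SUCCESS or FAILURE and returns 0 or 1 accordingly.
wait_until_ready() {
  local max_attempts="$1" interval="$2"
  shift 2
  local attempt
  for (( attempt = 1; attempt <= max_attempts; attempt++ )); do
    if "$@"; then
      echo "SUCCESS"
      return 0
    fi
    if (( attempt < max_attempts )); then sleep "$interval"; fi
  done
  echo "FAILURE"
  return 1
}

# Hypothetical readiness check (needs kubectl and a configured context): succeeds
# only when ready replicas equal desired replicas. readyReplicas is absent from
# the status while no pod is ready, so an empty value counts as "not ready".
deployment_ready() {
  local name="$1" namespace="$2" desired ready
  desired="$(kubectl get deployment "$name" -n "$namespace" -o jsonpath='{.spec.replicas}')" || return 1
  ready="$(kubectl get deployment "$name" -n "$namespace" -o jsonpath='{.status.readyReplicas}')" || return 1
  [ -n "$ready" ] && [ "$ready" = "$desired" ]
}

# Usage mirroring the pipeline (25 attempts, 3 seconds apart):
#   wait_until_ready 25 3 deployment_ready muji-strategy-produce-server "$PROJECT_ENV"
```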

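Future directions

- Add an approval flow: designate an approver and require an approval step before a go-live deployment to an environment.

- Integrate public-cloud serverless offerings.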