A Detailed Look at kfence, the Linux Memory Error Detection Tool

Based on:

Linux-5.10

Conventions:

PAGE_SIZE: 4K

Memory architecture: UMA

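0. Preface

The code discussed in this article, apart from kfence itself, is based on Linux 5.10. I recently had to port kfence to Linux 5.10, and this article takes the opportunity to document the mechanism in detail.

kfence, short for Kernel Electric-Fence, is a memory error detection mechanism introduced in Linux 5.12.

The basic principle is simple. kfence creates its own dedicated detection pool, kfence_pool, and places a fence page on each side of every data page. It uses the MMU to mark the fence pages inaccessible, so any access that runs past the boundary of a data page raises an exception immediately.

It detects the following memory errors:

- OOB: out-of-bounds access;

- UAF: use-after-free;

- CORRUPTION: memory corruption detected when the object is freed;

- INVALID: invalid access;

- INVALID_FREE: invalid free.

kfence currently detects fewer kinds of memory errors than KASAN, but it is designed with different goals:

- be enabled in production kernels;

- have near-zero performance overhead.

kfence is built on top of the slab and kmalloc mechanisms, so familiarity with both makes kfence easier to understand.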

1. Configs that kfence depends on

//Selected by default on arm64; see arch/arm64/Kconfig

CONFIG_HAVE_ARCH_KFENCE

//The core kfence config; it must be enabled manually, and every config below depends on it

CONFIG_KFENCE

------------------ All configs below depend on CONFIG_KFENCE -----------

//Depends on CONFIG_JUMP_LABEL

//Enables the static key optimization: without it, every allocation has to read kfence_allocation_gate

//and compare it with 0, which is comparatively expensive

CONFIG_KFENCE_STATIC_KEYS

//Sample interval of kfence allocations in milliseconds; the default is 100

// Setting this config to 0 disables kfence

CONFIG_KFENCE_SAMPLE_INTERVAL

//Number of objects supported by the kfence pool; the default is 255, valid range 1~65535

// Each kfence object requires two pages

CONFIG_KFENCE_NUM_OBJECTS

//Stress testing of fault handling and error reporting; default 0

CONFIG_KFENCE_STRESS_TEST_FAULTS

//Depends on CONFIG_TRACEPOINTS && CONFIG_KUNIT; enables the kfence test cases

CONFIG_KFENCE_KUNIT_TEST

2. Key data structures in kfence

2.1 __kfence_pool

char *__kfence_pool __ro_after_init;

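EXPORT_SYMBOL(__kfence_pool); /* Export for test modules. */

kfence has a dedicated memory pool, allocated from memblock before memblock hands its memory over to the buddy allocator.

The start address of the pool is stored in the global variable __kfence_pool.

The size of the pool is defined as follows:

include/linux/kfence.h

#define KFENCE_POOL_SIZE ((CONFIG_KFENCE_NUM_OBJECTS + 1) * 2 * PAGE_SIZE)

Each object takes 2 pages: one page for the object itself and one used as a guard page.

On top of the pages needed for CONFIG_KFENCE_NUM_OBJECTS objects, the kfence pool allocates 2 extra pages, page0 and page1 of the pool. Most of page0 is unused; it serves only as an extended guard page. Adding page1 as well simplifies the mapping between addresses and metadata indices.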

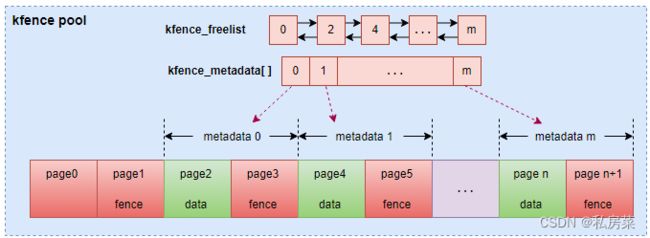

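The figure above illustrates the layout of kfence_pool:

- page0 and page1 are the two extra pages described above;

- the remaining CONFIG_KFENCE_NUM_OBJECTS * 2 pages give each object two pages: the first holds the object itself and the second is a fence page;

- kfence defines a global array kfence_metadata of length CONFIG_KFENCE_NUM_OBJECTS that manages all objects, recording each object's current state, memory address and so on;

- the metadata entries that are available in the kfence pool are kept on the list kfence_freelist;

- as the figure shows, every object page is enclosed by two guard pages.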
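As a rough illustration of the layout just described, the following user-space sketch computes the pool size and classifies each page of the pool. It is not kernel code: NUM_OBJECTS and PAGE_SZ are stand-ins for CONFIG_KFENCE_NUM_OBJECTS and PAGE_SIZE, and pool_size() and page_role() are hypothetical helper names introduced only for this example.

```c
#include <assert.h>

/* Assumed values, mirroring the defaults used in this article. */
#define NUM_OBJECTS 255UL   /* stand-in for CONFIG_KFENCE_NUM_OBJECTS */
#define PAGE_SZ     4096UL  /* stand-in for PAGE_SIZE (4K) */

enum page_role { ROLE_GUARD, ROLE_OBJECT };

/* Same arithmetic as KFENCE_POOL_SIZE: two pages per object plus two extra pages. */
static unsigned long pool_size(unsigned long num_objects, unsigned long page_size)
{
	assert(num_objects >= 1 && page_size > 0);
	return (num_objects + 1) * 2 * page_size;
}

/*
 * Role of the page at index idx within the pool (hypothetical helper).
 * Pages 0 and 1 are the leading guard pages; after that, even pages hold
 * objects and odd pages are the guard pages to their right.
 */
static enum page_role page_role(unsigned long idx)
{
	if (idx < 2 || (idx % 2))
		return ROLE_GUARD;
	return ROLE_OBJECT;
}
```

With the default values, pool_size(NUM_OBJECTS, PAGE_SZ) comes to 2097152 bytes (2M), page 2 is the first object page, and page 3 is its right-hand guard page.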

2.2 kfence_sample_interval

static unsigned long kfence_sample_interval __read_mostly = CONFIG_KFENCE_SAMPLE_INTERVAL;

This variable stores the kfence sample interval and defaults to the value of CONFIG_KFENCE_SAMPLE_INTERVAL. The kernel also allows it to be configured through a kernel parameter:

static const struct kernel_param_ops sample_interval_param_ops = {

.set = param_set_sample_interval,

.get = param_get_sample_interval,

};

module_param_cb(sample_interval, &sample_interval_param_ops, &kfence_sample_interval, 0600);

The set and get callbacks implement writing and reading the kernel parameter kfence.sample_interval, whose unit is milliseconds.

Setting kfence.sample_interval=0 disables kfence.

2.3 kfence_enabled

This variable indicates that the kfence pool was initialized successfully and that kfence is running normally.

kfence sets kfence_enabled to false in two places.

The first place:

mm/kfence/core.c

#define KFENCE_WARN_ON(cond) \

({ \

const bool __cond = WARN_ON(cond); \

if (unlikely(__cond)) \

WRITE_ONCE(kfence_enabled, false); \

__cond; \

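})

kfence has many important conditions that must never hold, and it checks them with KFENCE_WARN_ON(). If the condition evaluates to true, the macro emits a warning and sets kfence_enabled to false.

The second place:

When param_set_sample_interval() is called with a sample interval of 0, kfence is turned off.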

2.4 kfence_metadata

This is a global array of struct kfence_metadata, used to manage all kfence objects:

mm/kfence/kfence.h

struct kfence_metadata {

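struct list_head list; //links this kfence_metadata into kfence_freelist

struct rcu_head rcu_head; //used for delayed freeing

//Each kfence_metadata has its own spinlock to keep its data consistent.

//The same metadata must not be taken off the freelist twice, nor handed out by __kfence_alloc() more than once.

raw_spinlock_t lock;

//Current state of the object; defaults to UNUSED

enum kfence_object_state state;

//Address of the object. It starts out page-aligned and may be shifted within the page on allocation, but it always stays inside the same page

unsigned long addr;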

/*

* The size of the original allocation.

*/

size_t size;

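//The kmem_cache of the last allocation from this object;

//NULL if it was never allocated or the kmem_cache has been destroyed

struct kmem_cache *cache;

//Records the page that was unprotected after an invalid access

unsigned long unprotected_page;

/* Allocation and free stack information */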

struct kfence_track alloc_track;

struct kfence_track free_track;

};

2.5 kfence_freelist

/* Freelist with available objects. */

static struct list_head kfence_freelist = LIST_HEAD_INIT(kfence_freelist);

static DEFINE_RAW_SPINLOCK(kfence_freelist_lock); /* Lock protecting freelist. */

This list manages all available kfence objects.
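The code later in this article takes entries from the head of kfence_freelist on allocation and appends them at the tail on initialization and on free, which makes the list behave as a FIFO. The user-space sketch below models that behaviour with a small ring buffer. It is only an illustration: struct freelist, NUM_SLOTS and the three helpers are hypothetical names, and the kernel uses a linked list protected by kfence_freelist_lock rather than an array.

```c
#include <assert.h>

#define NUM_SLOTS 4  /* assumed tiny pool, for illustration only */

/* A fixed-size FIFO of object indices, standing in for kfence_freelist. */
struct freelist {
	int slots[NUM_SLOTS];
	int head;   /* position of the next index to hand out */
	int count;  /* number of indices currently on the list */
};

/* Put every object on the list in index order, as pool initialization does. */
static void freelist_init(struct freelist *fl)
{
	for (int i = 0; i < NUM_SLOTS; i++)
		fl->slots[i] = i;
	fl->head = 0;
	fl->count = NUM_SLOTS;
}

/* Take the first entry (allocation); returns -1 when the list is empty. */
static int freelist_take(struct freelist *fl)
{
	int idx;

	if (fl->count == 0)
		return -1;
	idx = fl->slots[fl->head];
	fl->head = (fl->head + 1) % NUM_SLOTS;
	fl->count--;
	return idx;
}

/* Append an entry at the tail (free). */
static void freelist_put(struct freelist *fl, int idx)
{
	assert(fl->count < NUM_SLOTS);
	fl->slots[(fl->head + fl->count) % NUM_SLOTS] = idx;
	fl->count++;
}
```

One consequence of this ordering is that an object that was just freed is the last one to be handed out again, so its page stays protected for as long as possible.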

2.6 kfence_allocation_gate

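This is an atomic_t variable that acts as the gate kfence opens periodically for allocations: 0 means allocation is allowed, non-zero means it is not.

Normally, when kfence_alloc() handles an allocation it reads this variable with atomic_read(); if the value is 0, allocation is allowed and kfence goes on to call __kfence_alloc().

For performance reasons, however, the kernel can use a static key instead, the variable kfence_allocation_key described in the next subsection.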
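The gate logic can be sketched in user space with C11 atomics. This is only an approximation of the idiom used in __kfence_alloc(), shown later in this article: allocation_gate, gate_open() and gate_try_pass() are hypothetical names, and the initial value of 1 (gate closed until the timer first opens it) is an assumption of this sketch.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Stand-in for kfence_allocation_gate; assumed to start closed (non-zero). */
static atomic_int allocation_gate = 1;

/* Open the gate, as the periodic timer does once per sample interval. */
static void gate_open(void)
{
	atomic_store(&allocation_gate, 0);
}

/*
 * Try to pass the gate. Only the first caller after gate_open() succeeds:
 * the cheap load rejects callers once the gate is non-zero, and the
 * increment decides the race between callers that all saw zero.
 */
static bool gate_try_pass(void)
{
	if (atomic_load(&allocation_gate) != 0)
		return false;
	/* atomic_fetch_add returns the old value; a non-zero old value means we lost the race. */
	return atomic_fetch_add(&allocation_gate, 1) == 0;
}
```

Reading before incrementing means that, once the gate is closed, later callers only perform a load and never write to the shared variable.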

2.7 kfence_allocation_key

This is the static key for kfence allocations; it requires CONFIG_KFENCE_STATIC_KEYS to be enabled.

#ifdef CONFIG_KFENCE_STATIC_KEYS

/* The static key to set up a KFENCE allocation. */

DEFINE_STATIC_KEY_FALSE(kfence_allocation_key);

#endif

This is a static_key_false key.

If CONFIG_KFENCE_STATIC_KEYS is enabled, kfence_alloc() no longer checks the value of kfence_allocation_gate but checks this key instead.

3. kfence initialization

init/main.c

static void __init mm_init(void)

{

...

kfence_alloc_pool();

report_meminit();

mem_init();

...

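}

As described in the article "buddy initialization", mm_init() is where memory initialization of the buddy system starts, and mem_init() frees memory into the buddy system one page block at a time.

The kfence pool is allocated from memblock before mem_init() is called.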

3.1 kfence_alloc_pool()

mm/kfence/core.c

void __init kfence_alloc_pool(void)

{

if (!kfence_sample_interval)

return;

__kfence_pool = memblock_alloc(KFENCE_POOL_SIZE, PAGE_SIZE);

if (!__kfence_pool)

pr_err("failed to allocate pool\n");

}

The code is straightforward:

- check whether kfence_sample_interval is 0; if it is, kfence is disabled;

- call memblock_alloc() to allocate KFENCE_POOL_SIZE bytes, aligned to PAGE_SIZE.

3.2 kfence_init()

This function is called fairly late in start_kernel(), after buddy initialization, slab initialization, workqueue initialization and so on have completed.

mm/kfence/core.c

void __init kfence_init(void)

{

/* Setting kfence_sample_interval to 0 on boot disables KFENCE. */

if (!kfence_sample_interval)

return;

if (!kfence_init_pool()) {

pr_err("%s failed\n", __func__);

return;

}

WRITE_ONCE(kfence_enabled, true);

queue_delayed_work(system_unbound_wq, &kfence_timer, 0);

pr_info("initialized - using %lu bytes for %d objects at 0x%p-0x%p\n", KFENCE_POOL_SIZE,

CONFIG_KFENCE_NUM_OBJECTS, (void *)__kfence_pool,

(void *)(__kfence_pool + KFENCE_POOL_SIZE));

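}

- As before, if the sample interval kfence_sample_interval is 0, kfence stays off;

- kfence_init_pool() is called to initialize the kfence pool;

- kfence_enabled is set to true, indicating that kfence is functional and ready to work;

- the delayed work kfence_timer is queued on system_unbound_wq; note that the delay is 0, so kfence_timer runs immediately.

Note the message printed at the end. Once the kfence pool has been initialized, dmesg shows a log line such as:

<6>[ 0.000000] kfence: initialized - using 2097152 bytes for 255 objects at 0x(____ptrval____)-0x(____ptrval____)

The system set up 255 objects and uses (255 + 1) * 2 * 4K = 2M of memory in total.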

3.2.1 kfence_init_pool()

mm/kfence/core.c

static bool __init kfence_init_pool(void)

{

unsigned long addr = (unsigned long)__kfence_pool;

struct page *pages;

int i;

//make sure __kfence_pool was allocated; kfence_alloc_pool() allocates it from memblock

if (!__kfence_pool)

return false;

//on arm64 this function simply returns true

//on x86 it uses lookup_address() to check that every page of __kfence_pool is mapped

if (!arch_kfence_init_pool())

goto err;

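//get the struct page of the first pool page, looked up in vmemmap

pages = virt_to_page(addr);

//mark the object pages of the kfence pool as slab pages

for (i = 0; i < KFENCE_POOL_SIZE / PAGE_SIZE; i++) {

if (!i || (i % 2)) //skip page 0 and odd pages, i.e. handle only the even pages

continue;

//make sure the page is not part of a compound page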

if (WARN_ON(compound_head(&pages[i]) != &pages[i]))

goto err;

__SetPageSlab(&pages[i]);

}

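//make the first two pages of the kfence pool guard pages

//this mainly clears the present bit of the corresponding PTEs, so that any CPU access to these two pages faults and enters the kfence handling path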

for (i = 0; i < 2; i++) {

if (unlikely(!kfence_protect(addr)))

goto err;

addr += PAGE_SIZE;

}

//walk all kfence objects; the kfence_metadata array tracks the state of the CONFIG_KFENCE_NUM_OBJECTS objects

for (i = 0; i < CONFIG_KFENCE_NUM_OBJECTS; i++) {

struct kfence_metadata *meta = &kfence_metadata[i];

/* initialize the kfence metadata */

INIT_LIST_HEAD(&meta->list); //initialize the list node

raw_spin_lock_init(&meta->lock); //initialize the spinlock

meta->state = KFENCE_OBJECT_UNUSED; //every object starts out UNUSED

meta->addr = addr; //record the page address of this object

list_add_tail(&meta->list, &kfence_freelist); //append the available metadata to the tail of kfence_freelist

//protect the page to the right of each object

if (unlikely(!kfence_protect(addr + PAGE_SIZE)))

goto err;

addr += 2 * PAGE_SIZE; //move on to the next object

}

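//the kfence pool is live and will never be released from this point on

//memblock_alloc() left a record of the pool in kmemleak; remove that record here so that it does not

// conflict with the allocations later returned by kfence_alloc()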

kmemleak_free(__kfence_pool);

return true;

err:

/*

* Only release unprotected pages, and do not try to go back and change

* page attributes due to risk of failing to do so as well. If changing

* page attributes for some pages fails, it is very likely that it also

* fails for the first page, and therefore expect addr==__kfence_pool in

* most failure cases.

*/

memblock_free_late(__pa(addr), KFENCE_POOL_SIZE - (addr - (unsigned long)__kfence_pool));

__kfence_pool = NULL;

return false;

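}

3.2.2 kfence_timer

After kfence_init_pool() completes successfully, kfence_init() moves on to the next step: queuing the periodic delayed work.

queue_delayed_work(system_unbound_wq, &kfence_timer, 0);

Note that the last argument is 0: since this is kfence_init(), the first run of kfence_timer happens immediately, and later runs are delayed by kfence_sample_interval.

Here is how kfence_timer is defined: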

mm/kfence/core.c

static DECLARE_DELAYED_WORK(kfence_timer, toggle_allocation_gate);

DECLARE_DELAYED_WORK() defines a delayed work item, and toggle_allocation_gate() is the handler that runs when the delay expires.

Now let's look at toggle_allocation_gate():

mm/kfence/core.c

static void toggle_allocation_gate(struct work_struct *work)

{

//first make sure kfence is functional

if (!READ_ONCE(kfence_enabled))

return;

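//set kfence_allocation_gate to 0

// this is the flag that opens the kfence pool for allocation: 0 means open, non-zero means closed

// this ensures that at most one allocation is served from the kfence pool per interval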

atomic_set(&kfence_allocation_gate, 0);

#ifdef CONFIG_KFENCE_STATIC_KEYS

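//enable the static key and wait for an allocation to happen

static_branch_enable(&kfence_allocation_key);

//sysctl_hung_task_timeout_secs is the time after which the kernel emits a hung task warning; it defaults to CONFIG_DEFAULT_HUNG_TASK_TIMEOUT

if (sysctl_hung_task_timeout_secs) {

//if allocations are infrequent, the wait here could last a long time,

// so the wait timeout is set to half of the hung task warning time,

// which keeps this long wait from triggering a hung task warning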

wait_event_idle_timeout(allocation_wait, atomic_read(&kfence_allocation_gate),

sysctl_hung_task_timeout_secs * HZ / 2);

} else {

//a hung task timeout of 0 means no limit, so it is safe to keep waiting until someone allocates

// from kfence, which sets kfence_allocation_gate to 1 and wakes up the task blocked on allocation_wait

wait_event_idle(allocation_wait, atomic_read(&kfence_allocation_gate));

}

/* disable the static key so that __kfence_alloc() is not entered */

static_branch_disable(&kfence_allocation_key);

#endif

//wait kfence_sample_interval milliseconds, then open the kfence pool for allocation again

queue_delayed_work(system_unbound_wq, &kfence_timer,

msecs_to_jiffies(kfence_sample_interval));

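}

Note that the static key requires CONFIG_KFENCE_STATIC_KEYS to be enabled.

The static key is used here mainly as a performance optimization: reading kfence_allocation_gate and comparing it with 0 on every allocation is comparatively expensive.

In addition, once this toggle run completes, it calls queue_delayed_work() again to schedule the next run, this time with a delay of kfence_sample_interval.

That concludes the analysis of kfence initialization. In summary: mm_init() calls kfence_alloc_pool() to allocate the pool from memblock; later, kfence_init() calls kfence_init_pool() to set up the guard pages and the metadata, sets kfence_enabled, and queues kfence_timer, whose handler toggle_allocation_gate() reopens the allocation gate once per sample interval.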

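4. kfence allocation

The core interface for kfence allocation is __kfence_alloc(). It is called from two places:

- kmem_cache_alloc_bulk()

- slab_alloc_node()

The first function is called from io_alloc_req(); see fs/io_uring.c.

For the second function, if we only consider the UMA call paths, the entry point is always slab_alloc(), which is called from: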

kmem_cache_alloc()

kmem_cache_alloc_trace()

__kmalloc()

For details on these functions, see the articles "slub allocator: kmem_cache_alloc" and "slub allocator: kmalloc explained".

slab_alloc() in turn calls slab_alloc_node():

mm/slub.c

static __always_inline void *slab_alloc_node(struct kmem_cache *s,

gfp_t gfpflags, int node, unsigned long addr, size_t orig_size)

{

void *object;

struct kmem_cache_cpu *c;

struct page *page;

unsigned long tid;

struct obj_cgroup *objcg = NULL;

s = slab_pre_alloc_hook(s, &objcg, 1, gfpflags);

if (!s)

return NULL;

object = kfence_alloc(s, orig_size, gfpflags);

if (unlikely(object))

goto out;

...

out:

slab_post_alloc_hook(s, objcg, gfpflags, 1, &object);

return object;

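}

The function first tries to allocate memory with kfence_alloc(); if that succeeds, the whole slab fast-path and slow-path allocation logic is skipped. That logic is not analysed here; see the article "slub allocator: kmem_cache_alloc" for details.

Now let's step into kfence_alloc().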

4.1 kfence_alloc()

include/linux/kfence.h

static __always_inline void *kfence_alloc(struct kmem_cache *s, size_t size, gfp_t flags)

{

#ifdef CONFIG_KFENCE_STATIC_KEYS

if (static_branch_unlikely(&kfence_allocation_key))

#else

if (unlikely(!atomic_read(&kfence_allocation_gate)))

#endif

return __kfence_alloc(s, size, flags);

return NULL;

}

The condition at the top was already covered in sections 2.6 and 2.7 above and is not repeated here.

Next, let's look at __kfence_alloc(), the core function of kfence allocation.

4.2 __kfence_alloc()

mm/kfence/core.c

void *__kfence_alloc(struct kmem_cache *s, size_t size, gfp_t flags)

{

//before touching kfence_allocation_gate, first check the requested size, which must not exceed one page

if (size > PAGE_SIZE)

return NULL;

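//allocations that must come from the DMA, DMA32 or HIGHMEM zones are not supported by the kfence pool,

// because the pages of the pool cannot be guaranteed to have the requested properties, for example

// to reside in DMA-able memory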

if ((flags & GFP_ZONEMASK) ||

(s->flags & (SLAB_CACHE_DMA | SLAB_CACHE_DMA32)))

return NULL;

//kfence_allocation_gate only needs to become non-zero, so there is no point in writing it again and paying for the contention

if (atomic_read(&kfence_allocation_gate) || atomic_inc_return(&kfence_allocation_gate) > 1)

return NULL;

#ifdef CONFIG_KFENCE_STATIC_KEYS

//check whether a task is blocked on allocation_wait; if so, queue an irq_work to wake it up

if (waitqueue_active(&allocation_wait)) {

/*

* Calling wake_up() here may deadlock when allocations happen

* from within timer code. Use an irq_work to defer it.

*/

irq_work_queue(&wake_up_kfence_timer_work);

}

#endif

//before allocating, check whether kfence_enabled has been cleared

if (!READ_ONCE(kfence_enabled))

return NULL;

//allocate an object from the kfence pool

return kfence_guarded_alloc(s, size, flags);

}

The main allocation function is kfence_guarded_alloc(), which is analysed in its own section below.

4.3 kfence_guarded_alloc()

mm/kfence/core.c

static void *kfence_guarded_alloc(struct kmem_cache *cache, size_t size, gfp_t gfp)

{

struct kfence_metadata *meta = NULL;

unsigned long flags;

struct page *page;

void *addr;

//take a metadata entry from kfence_freelist under the lock

raw_spin_lock_irqsave(&kfence_freelist_lock, flags);

//if kfence_freelist is not empty, take the first metadata entry

if (!list_empty(&kfence_freelist)) {

meta = list_entry(kfence_freelist.next, struct kfence_metadata, list);

list_del_init(&meta->list);

}

raw_spin_unlock_irqrestore(&kfence_freelist_lock, flags);

//check whether a metadata entry was obtained; an empty kfence_freelist means none is available

if (!meta)

return NULL;

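//try to lock meta; failing here is extremely unlikely

//when kfence reports a UAF it holds meta->lock, and the report code prints via printk, which

// calls kmalloc(), which ends up in kfence_alloc() and tries to grab the very object being reported

//to avoid a deadlock, on trylock failure the metadata just taken is put back at the tail of kfence_freelist and NULL is returned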

if (unlikely(!raw_spin_trylock_irqsave(&meta->lock, flags))) {

/*

* This is extremely unlikely -- we are reporting on a

* use-after-free, which locked meta->lock, and the reporting

* code via printk calls kmalloc() which ends up in

* kfence_alloc() and tries to grab the same object that we're

* reporting on. While it has never been observed, lockdep does

* report that there is a possibility of deadlock. Fix it by

* using trylock and bailing out gracefully.

*/

raw_spin_lock_irqsave(&kfence_freelist_lock, flags);

list_add_tail(&meta->list, &kfence_freelist);

raw_spin_unlock_irqrestore(&kfence_freelist_lock, flags);

return NULL;

}

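//meta is now locked; start processing it

//first, get the virtual address of the object page from the metadata; the function is described below

meta->addr = metadata_to_pageaddr(meta);

//on free, the object page is protected with kfence_protect() to catch UAF, so

//when the page is allocated again it has to be unprotected

//only FREED is checked because an UNUSED object is still in its initial state: its page has never been used and was never protected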

if (meta->state == KFENCE_OBJECT_FREED)

kfence_unprotect(meta->addr);

/*

* Note: for allocations made before RNG initialization, will always

* return zero. We still benefit from enabling KFENCE as early as

* possible, even when the RNG is not yet available, as this will allow

* KFENCE to detect bugs due to earlier allocations. The only downside

* is that the out-of-bounds accesses detected are deterministic for

* such allocations.

*/

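//prandom_u32_max(2) randomly returns 0 or 1: on 0 the object stays at the start of the page,

//on 1 it is moved to the right end of the page; before the RNG is initialized the result is always 0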

if (prandom_u32_max(2)) {

meta->addr += PAGE_SIZE - size;

meta->addr = ALIGN_DOWN(meta->addr, cache->align);

}

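//the final start address of the object

addr = (void *)meta->addr;

//this function is analysed in detail below

//it does the following:

// 1. picks alloc_track or free_track based on the new state; here it is always alloc_track

// 2. records the call stack of the current task in alloc_track

// 3. gets the pid of the current task and stores it in the track

// 4. writes the new state into the metadata, which now becomes ALLOCATED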

metadata_update_state(meta, KFENCE_OBJECT_ALLOCATED);

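//record the current kmem_cache in the metadata

WRITE_ONCE(meta->cache, cache);

//record the size of the object

meta->size = size;

//fill the part of the object page that lies outside the object's size bytes with an address-dependent pattern

// so that out-of-bounds writes can be detected when the object is freed

//this function is analysed in detail below

for_each_canary(meta, set_canary_byte);

//get the struct page of the object page and fill it in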

page = virt_to_page(meta->addr);

page->slab_cache = cache;

if (IS_ENABLED(CONFIG_SLUB))

page->objects = 1;

if (IS_ENABLED(CONFIG_SLAB))

page->s_mem = addr;

//metadata processing is done; unlock

raw_spin_unlock_irqrestore(&meta->lock, flags);

/* Memory initialization. */

//slab_want_init_on_alloc() returns true if, for example, gfp has __GFP_ZERO set; memzero_explicit() then zeroes the object

if (unlikely(slab_want_init_on_alloc(gfp, cache)))

memzero_explicit(addr, size);

//call the kmem_cache constructor if one is set

if (cache->ctor)

cache->ctor(addr);

if (CONFIG_KFENCE_STRESS_TEST_FAULTS && !prandom_u32_max(CONFIG_KFENCE_STRESS_TEST_FAULTS))

kfence_protect(meta->addr); /* Random "faults" by protecting the object. */

//COUNTER_ALLOCATED tracks the number of objects currently allocated; it is decremented on free

atomic_long_inc(&counters[KFENCE_COUNTER_ALLOCATED]);

//COUNTER_ALLOCS counts the total number of allocations from the kfence pool

atomic_long_inc(&counters[KFENCE_COUNTER_ALLOCS]);

return addr;

}

4.3.1 metadata_to_pageaddr()

mm/kfence/core.c

static inline unsigned long metadata_to_pageaddr(const struct kfence_metadata *meta)

{

unsigned long offset = (meta - kfence_metadata + 1) * PAGE_SIZE * 2;

unsigned long pageaddr = (unsigned long)&__kfence_pool[offset];

/* The checks do not affect performance; only called from slow-paths. */

/* Only call with a pointer into kfence_metadata. */

if (KFENCE_WARN_ON(meta < kfence_metadata ||

meta >= kfence_metadata + CONFIG_KFENCE_NUM_OBJECTS))

return 0;

/*

* This metadata object only ever maps to 1 page; verify that the stored

* address is in the expected range.

*/

if (KFENCE_WARN_ON(ALIGN_DOWN(meta->addr, PAGE_SIZE) != pageaddr))

return 0;

return pageaddr;

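}

The function returns the virtual address of the object page that belongs to a metadata entry.

The offset is derived from the position of meta in the kfence_metadata array, which gives the virtual address of the page.

Note the two uses of KFENCE_WARN_ON(), discussed in section 2.3 above: kfence expects these conditions never to hold, and if one does, kfence_enabled is set to false.

If meta lies within the kfence_metadata array and the address stored in meta->addr falls within the expected page, the page address is returned.

Question:

In my opinion the first check could be moved to the very beginning of the function, which would be slightly better for performance; that said, the comment in the code notes that the function is only called from slow paths.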

4.3.2 metadata_update_state()

mm/kfence/core.c

static noinline void metadata_update_state(struct kfence_metadata *meta,

enum kfence_object_state next)

{

struct kfence_track *track =

next == KFENCE_OBJECT_FREED ? &meta->free_track : &meta->alloc_track;

lockdep_assert_held(&meta->lock);

/*

* Skip over 1 (this) functions; noinline ensures we do not accidentally

* skip over the caller by never inlining.

*/

track->num_stack_entries = stack_trace_save(track->stack_entries, KFENCE_STACK_DEPTH, 1);

track->pid = task_pid_nr(current);

/*

* Pairs with READ_ONCE() in

* kfence_shutdown_cache(),

* kfence_handle_page_fault().

*/

WRITE_ONCE(meta->state, next);

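}

- pick the alloc or the free track for saving the call stack, depending on the state being set;

- call stack_trace_save() to save the call stack in track->stack_entries;

- call task_pid_nr() to get the pid of the current task;

- set the current state of the metadata.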

4.3.3 for_each_canary()

mm/kfence/core.c

static __always_inline void for_each_canary(const struct kfence_metadata *meta, bool (*fn)(u8 *))

{

//get the start address of the object's page by aligning down to the page size

const unsigned long pageaddr = ALIGN_DOWN(meta->addr, PAGE_SIZE);

unsigned long addr;

lockdep_assert_held(&meta->lock);

/*

* We'll iterate over each canary byte per-side until fn() returns

* false. However, we'll still iterate over the canary bytes to the

* right of the object even if there was an error in the canary bytes to

* the left of the object. Specifically, if check_canary_byte()

* generates an error, showing both sides might give more clues as to

* what the error is about when displaying which bytes were corrupted.

*/

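//iterate over the canary bytes to the left and to the right of the object, on each side until fn() returns false

//the canary bytes on the right are always iterated, even if the left side hit an error

//check_canary_byte() reports which byte was corrupted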

for (addr = pageaddr; addr < meta->addr; addr++) {

if (!fn((u8 *)addr))

break;

}

/* Apply to right of object. */

for (addr = meta->addr + meta->size; addr < pageaddr + PAGE_SIZE; addr++) {

if (!fn((u8 *)addr))

break;

}

}

The second parameter is a callback; kfence uses only two:

- on alloc it is set_canary_byte(), which sets a canary byte;

- on free it is check_canary_byte(), which checks for memory corruption.

mm/kfence/core.c

static inline bool set_canary_byte(u8 *addr)

{

*addr = KFENCE_CANARY_PATTERN(addr);

return true;

}

mm/kfence/kfence.h

#define KFENCE_CANARY_PATTERN(addr) ((u8)0xaa ^ (u8)((unsigned long)(addr) & 0x7))

set_canary_byte() writes an address-dependent pattern into the byte.

mm/kfence/core.c

static inline bool check_canary_byte(u8 *addr)

{

if (likely(*addr == KFENCE_CANARY_PATTERN(addr)))

return true;

atomic_long_inc(&counters[KFENCE_COUNTER_BUGS]);

kfence_report_error((unsigned long)addr, false, NULL, addr_to_metadata((unsigned long)addr),

KFENCE_ERROR_CORRUPTION);

return false;

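}

check_canary_byte() determines whether a canary byte has been corrupted.

If the byte no longer matches its pattern, for instance after an out-of-bounds write, it:

- increments the KFENCE_COUNTER_BUGS counter;

- calls kfence_report_error() to report a KFENCE_ERROR_CORRUPTION error for the metadata.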
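The canary mechanism can be tried out in user space. The sketch below fills the unused part of a simulated page with the same pattern expression as KFENCE_CANARY_PATTERN and then looks for the first damaged byte. It is a simplification under stated assumptions: PAGE_SZ, canary_pattern(), fill_canaries() and first_corrupted() are hypothetical names, an ordinary array stands in for the object page, and no error reporting is modelled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SZ 4096  /* stand-in for PAGE_SIZE */

/* Same expression as KFENCE_CANARY_PATTERN: 0xaa XOR the low 3 address bits. */
static uint8_t canary_pattern(const uint8_t *addr)
{
	return (uint8_t)(0xaa ^ ((uintptr_t)addr & 0x7));
}

/* Write canaries into every byte of the page outside [obj_off, obj_off + size). */
static void fill_canaries(uint8_t *page, size_t obj_off, size_t size)
{
	assert(obj_off + size <= PAGE_SZ);
	for (size_t i = 0; i < obj_off; i++)
		page[i] = canary_pattern(&page[i]);
	for (size_t i = obj_off + size; i < PAGE_SZ; i++)
		page[i] = canary_pattern(&page[i]);
}

/*
 * Return the page offset of the first corrupted canary byte, or -1 if all
 * canaries are intact. Unlike for_each_canary(), this simplified check
 * stops at the first mismatch instead of also scanning the other side.
 */
static long first_corrupted(const uint8_t *page, size_t obj_off, size_t size)
{
	for (size_t i = 0; i < PAGE_SZ; i++) {
		if (i >= obj_off && i < obj_off + size)
			continue;  /* bytes of the object itself are not checked */
		if (page[i] != canary_pattern(&page[i]))
			return (long)i;
	}
	return -1;
}
```

Writing a single byte just past the end of a 100-byte object placed at offset 1024 makes first_corrupted() return 1124. Because the pattern is never zero, a stray zero byte is always detected.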

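That concludes the analysis of kfence allocation. In summary: slab_alloc_node() calls kfence_alloc(); when the gate is open, __kfence_alloc() filters out unsuitable requests, closes the gate, and calls kfence_guarded_alloc(), which takes a metadata entry from kfence_freelist, places the object within its page, records the allocation track, and writes the canary bytes.

5. kfence free

The core interface for kfence free is __kfence_free(). It is called from two places:

- kmem_cache_free_bulk()

- slab_free()

Both kfree() and kmem_cache_free() call slab_free().

For details on these functions, see the articles "slub allocator: kmem_cache_free" and "slub allocator: kmalloc explained".

slab_free() in turn calls __slab_free():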

mm/slub.c

static void __slab_free(struct kmem_cache *s, struct page *page,

void *head, void *tail, int cnt,

unsigned long addr)

{

void *prior;

int was_frozen;

struct page new;

unsigned long counters;

struct kmem_cache_node *n = NULL;

unsigned long flags;

stat(s, FREE_SLOWPATH);

if (kfence_free(head))

return;

...

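}

The function starts by calling kfence_free() to determine whether the memory came from kfence. If it did, kfence frees it and the function returns; otherwise the normal slab free path continues, as described in the article "slub allocator: kmem_cache_free".

Now let's step into kfence_free().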

5.1 kfence_free()

include/linux/kfence.h

static __always_inline __must_check bool kfence_free(void *addr)

{

if (!is_kfence_address(addr))

return false;

__kfence_free(addr);

return true;

}

The function does two things:

- is_kfence_address() determines whether the memory came from the kfence pool;

- if it is kfence memory, __kfence_free() is called to free it.

5.1.1 is_kfence_address()

include/linux/kfence.h

static __always_inline bool is_kfence_address(const void *addr)

{

return unlikely((unsigned long)((char *)addr - __kfence_pool) < KFENCE_POOL_SIZE && __kfence_pool);

}

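The code is simple: if the memory came from the kfence pool, addr - __kfence_pool is its offset within the pool, which is always smaller than the pool size KFENCE_POOL_SIZE. Because the difference is cast to unsigned long, an address below the pool wraps around to a very large value and fails the comparison as well. In addition, __kfence_pool itself must not be NULL.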
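The single-comparison range check can be reproduced in user space as follows. This is a minimal sketch rather than the kernel function: in_pool() is a hypothetical name, and plain integers stand in for the pool base address and the address being tested.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Range check in the style of is_kfence_address(): one unsigned comparison
 * covers both bounds. If addr < base, addr - base wraps around to a very
 * large value and the comparison fails. A zero base (no pool) never matches.
 */
static bool in_pool(uintptr_t base, size_t pool_size, uintptr_t addr)
{
	return (addr - base) < pool_size && base != 0;
}
```

For a pool at 0x10000 with a size of 0x2000, the addresses 0x10000 and 0x11fff are inside the pool, while 0xffff (just below) and 0x12000 (just past the end) are not.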

Next, let's look at __kfence_free(), the core function of kfence free.

5.2 __kfence_free()

mm/kfence/core.c

void __kfence_free(void *addr)

{

struct kfence_metadata *meta = addr_to_metadata((unsigned long)addr);

//if the kmem_cache of this metadata has SLAB_TYPESAFE_BY_RCU set, the object must not be freed immediately;

// it is freed asynchronously once a grace period has elapsed

if (unlikely(meta->cache && (meta->cache->flags & SLAB_TYPESAFE_BY_RCU)))

call_rcu(&meta->rcu_head, rcu_guarded_free);

else

kfence_guarded_free(addr, meta, false);

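}

Three points are worth noting:

- the metadata is looked up from addr; see section 5.2.1 below;

- the kmem_cache in meta is checked for SLAB_TYPESAFE_BY_RCU;

- kfence_guarded_free() is called to free the kfence memory.

As you can see, the main free function is kfence_guarded_free(), which is analysed in its own section, 5.3, below.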

5.2.1 addr_to_metadata()

mm/kfence/core.c

static inline struct kfence_metadata *addr_to_metadata(unsigned long addr)

{

long index;

/* The checks do not affect performance; only called from slow-paths. */

if (!is_kfence_address((void *)addr))

return NULL;

/*

* May be an invalid index if called with an address at the edge of

* __kfence_pool, in which case we would report an "invalid access"

* error.

*/

index = (addr - (unsigned long)__kfence_pool) / (PAGE_SIZE * 2) - 1;

if (index < 0 || index >= CONFIG_KFENCE_NUM_OBJECTS)

return NULL;

return &kfence_metadata[index];

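}

This function is the inverse of metadata_to_pageaddr():

- first validate addr, i.e. check that it is kfence memory;

- then compute the offset of addr relative to __kfence_pool; note that the first two pages of __kfence_pool are skipped automatically;

- finally use the resulting index to look up the metadata in the kfence_metadata array.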
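The two mappings can be checked against each other with a small user-space sketch. It works on byte offsets from the start of the pool instead of real addresses; PAGE_SZ, NUM_OBJECTS, index_to_offset() and offset_to_index() are hypothetical names chosen for this example, with the default values used in this article.

```c
#include <assert.h>

#define PAGE_SZ     4096UL  /* stand-in for PAGE_SIZE */
#define NUM_OBJECTS 255L    /* stand-in for CONFIG_KFENCE_NUM_OBJECTS */

/* Offset of object idx's page from the pool start, as in metadata_to_pageaddr(). */
static unsigned long index_to_offset(long idx)
{
	assert(idx >= 0 && idx < NUM_OBJECTS);
	return (unsigned long)(idx + 1) * PAGE_SZ * 2;
}

/*
 * Object index for a byte offset into the pool, as in addr_to_metadata().
 * Returns -1 for offsets in the two leading pages or beyond the last object.
 */
static long offset_to_index(unsigned long offset)
{
	long idx = (long)(offset / (PAGE_SZ * 2)) - 1;

	if (idx < 0 || idx >= NUM_OBJECTS)
		return -1;
	return idx;
}
```

Object 0 starts at offset 8192, and every offset within that page or within the guard page to its right maps back to index 0, because the division works on two-page units.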

5.3 kfence_guarded_free()

mm/kfence/core.c

static void kfence_guarded_free(void *addr, struct kfence_metadata *meta, bool zombie)

{

struct kcsan_scoped_access assert_page_exclusive;

unsigned long flags;

raw_spin_lock_irqsave(&meta->lock, flags);

if (meta->state != KFENCE_OBJECT_ALLOCATED || meta->addr != (unsigned long)addr) {

/* Invalid or double-free, bail out. */

atomic_long_inc(&counters[KFENCE_COUNTER_BUGS]);

kfence_report_error((unsigned long)addr, false, NULL, meta,

KFENCE_ERROR_INVALID_FREE);

raw_spin_unlock_irqrestore(&meta->lock, flags);

return;

}

/* Detect racy use-after-free, or incorrect reallocation of this page by KFENCE. */

kcsan_begin_scoped_access((void *)ALIGN_DOWN((unsigned long)addr, PAGE_SIZE), PAGE_SIZE,

KCSAN_ACCESS_SCOPED | KCSAN_ACCESS_WRITE | KCSAN_ACCESS_ASSERT,

&assert_page_exclusive);

if (CONFIG_KFENCE_STRESS_TEST_FAULTS)

kfence_unprotect((unsigned long)addr); /* To check canary bytes. */

/* Restore page protection if there was an OOB access. */

if (meta->unprotected_page) {

memzero_explicit((void *)ALIGN_DOWN(meta->unprotected_page, PAGE_SIZE), PAGE_SIZE);

kfence_protect(meta->unprotected_page);

meta->unprotected_page = 0;

}

/* Check canary bytes for memory corruption. */

for_each_canary(meta, check_canary_byte);

/*

* Clear memory if init-on-free is set. While we protect the page, the

* data is still there, and after a use-after-free is detected, we

* unprotect the page, so the data is still accessible.

*/

if (!zombie && unlikely(slab_want_init_on_free(meta->cache)))

memzero_explicit(addr, meta->size);

/* Mark the object as freed. */

metadata_update_state(meta, KFENCE_OBJECT_FREED);

raw_spin_unlock_irqrestore(&meta->lock, flags);

/* Protect to detect use-after-frees. */

kfence_protect((unsigned long)addr);

kcsan_end_scoped_access(&assert_page_exclusive);

if (!zombie) {

/* Add it to the tail of the freelist for reuse. */

raw_spin_lock_irqsave(&kfence_freelist_lock, flags);

KFENCE_WARN_ON(!list_empty(&meta->list));

list_add_tail(&meta->list, &kfence_freelist);

raw_spin_unlock_irqrestore(&kfence_freelist_lock, flags);

atomic_long_dec(&counters[KFENCE_COUNTER_ALLOCATED]);

atomic_long_inc(&counters[KFENCE_COUNTER_FREES]);

} else {

/* See kfence_shutdown_cache(). */

atomic_long_inc(&counters[KFENCE_COUNTER_ZOMBIES]);

}

}

References:

https://www.kernel.org/doc/html/latest/dev-tools/kfence.html