Spring Boot Study Notes

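1. How Spring Boot Auto-Configuration Works

1.1 A First Look at Auto-Configuration

pom.xml:

- spring-boot-dependencies: the core dependencies are managed in the parent project

- Spring Boot dependencies can be declared without a version, because the parent project pins the version numbers

Starters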

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

- spring-boot-starter-web is the starter for web applications; it pulls in every dependency the web environment needs

- Spring Boot packages each functional scenario as a starter

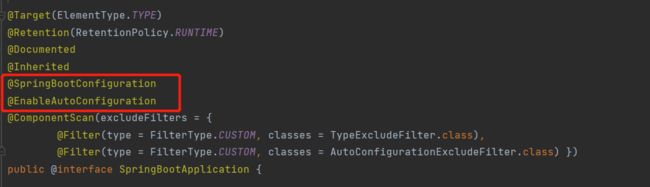

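How the @SpringBootApplication annotation works:

@SpringBootApplication: placing this annotation on a class marks it as the main configuration class of the Spring Boot application; Spring Boot starts the application by running that class's main method.

It is composed of two important annotations:

- @SpringBootConfiguration: marks a Spring Boot configuration class

- @EnableAutoConfiguration: turns on auto-configuration

1. @SpringBootConfiguration: marks a Spring Boot configuration class

@Configuration: declares a configuration class, the counterpart of a Spring XML configuration file;

@Component: shows that a configuration class is itself a component in the Spring IoC container

2. @EnableAutoConfiguration: turns on auto-configuration

Things we used to configure by hand are now configured by Spring Boot; this annotation tells Spring Boot to enable auto-configuration, and without it auto-configuration does not take effect.

It is also composed of two important annotations:

- @AutoConfigurationPackage: registers the auto-configuration package

- @Import(AutoConfigurationImportSelector.class): imports components into the container

2.1 @AutoConfigurationPackage: registers the auto-configuration package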

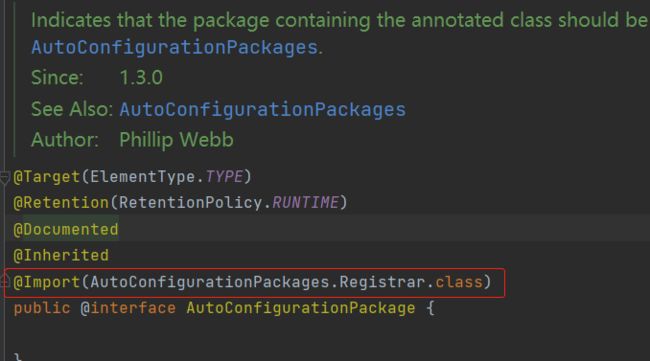

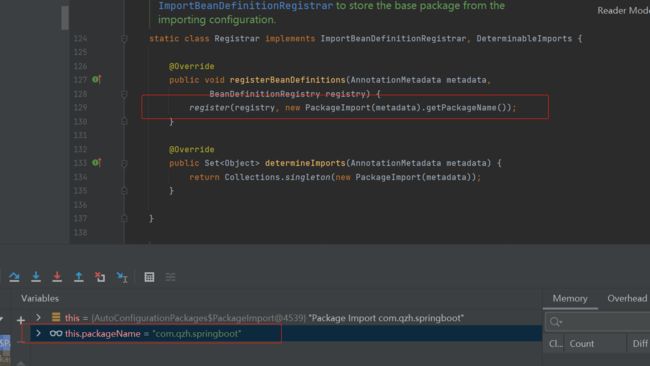

- Spring's underlying @Import annotation imports a component into the container; the component imported here is AutoConfigurationPackages.Registrar.class;

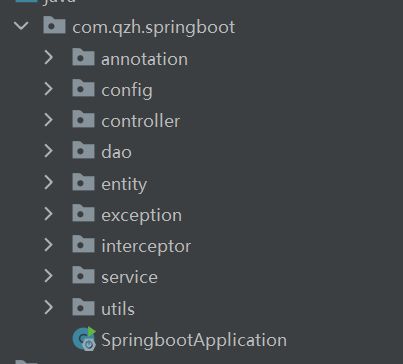

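- What AutoConfigurationPackages.Registrar.class does: it records the package of the main configuration class (the class annotated with @SpringBootApplication) as the base package, and the components in that package and all of its sub-packages are scanned into the Spring container (so a Controller placed in a package outside the startup class's package is not picked up and the project does not work as expected)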
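The package rule above can be illustrated with a few lines of plain Java. This is only a sketch of the "same package or sub-package" test, not Spring's scanner; the class name BasePackageSketch and the com.example packages are made up for the illustration.

```java
class BasePackageSketch {

    // True when candidatePackage is the base package itself or one of its sub-packages.
    static boolean isScanned(String basePackage, String candidatePackage) {
        return candidatePackage.equals(basePackage)
                || candidatePackage.startsWith(basePackage + ".");
    }

    public static void main(String[] args) {
        String base = "com.example.demo"; // hypothetical package of the startup class

        // A controller below the startup class's package is found.
        System.out.println(isScanned(base, "com.example.demo.controller")); // true

        // A controller in a sibling package is not.
        System.out.println(isScanned(base, "com.example.other.controller")); // false
    }
}
```

The check appends a dot before comparing prefixes so that a package such as com.example.demoextra is not mistaken for a sub-package of com.example.demo.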

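2.2 @Import(AutoConfigurationImportSelector.class): imports components into the container

AutoConfigurationImportSelector: the selector that decides which auto-configuration components to import

- It returns the fully qualified class names of every component to import, and those components are then added to the container;

- It imports a large number of auto-configuration classes (xxxAutoConfiguration) into the container, i.e. every component a given scenario needs, already configured;

AutoConfigurationImportSelector contains this method:

// Get the candidate configurations

protected List<String> getCandidateConfigurations(AnnotationMetadata metadata, AnnotationAttributes attributes) {

// getSpringFactoriesLoaderFactoryClass() returns the annotation class we started from,

// the one that enables auto-configuration imports: EnableAutoConfiguration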

List<String> configurations = SpringFactoriesLoader.loadFactoryNames(this.getSpringFactoriesLoaderFactoryClass(), this.getBeanClassLoader());

Assert.notEmpty(configurations, "No auto configuration classes found in META-INF/spring.factories. If you are using a custom packaging, make sure that file is correct.");

return configurations;

}


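At startup Spring Boot reads the values listed under EnableAutoConfiguration in META-INF/spring.factories on the classpath and imports them into the container as auto-configuration classes. Once those classes are active they do the configuration work we used to write by hand.

Summary:

- At startup Spring Boot reads the values listed under EnableAutoConfiguration from META-INF/spring.factories on the classpath

- Those values are imported into the container as auto-configuration classes, which then take effect and do the configuration for us;

- The complete J2EE solution and all auto-configuration live in the spring-boot-autoconfigure jar;

- It imports a large number of auto-configuration classes (xxxAutoConfiguration) into the container, i.e. every component a scenario needs, already configured;

- With auto-configuration classes in place, we no longer hand-write configuration to inject functional components;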
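The lookup summarized above can be sketched with the JDK alone, since spring.factories uses the java.util.Properties format. The snippet below is a rough illustration, not the code of SpringFactoriesLoader; FactoriesSketch and the com.example class names are hypothetical.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

class FactoriesSketch {

    // Reads a spring.factories-style text and returns the class names listed under the given key.
    static List<String> candidateConfigurations(String factoriesContent, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(factoriesContent));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> names = new ArrayList<>();
        for (String name : props.getProperty(key, "").split(",")) {
            String trimmed = name.trim();
            if (!trimmed.isEmpty()) {
                names.add(trimmed);
            }
        }
        return names;
    }

    public static void main(String[] args) {
        // A trailing backslash continues the value on the next line, as in real spring.factories files.
        String content =
                "org.springframework.boot.autoconfigure.EnableAutoConfiguration=\\\n"
              + "com.example.FooAutoConfiguration,\\\n"
              + "com.example.BarAutoConfiguration\n";

        List<String> names = candidateConfigurations(
                content, "org.springframework.boot.autoconfigure.EnableAutoConfiguration");
        System.out.println(names);
        // [com.example.FooAutoConfiguration, com.example.BarAutoConfiguration]
    }
}
```

The returned names are only candidates; whether each class actually takes effect is decided later by its conditions.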

1.2、SpringApplication.run 分析

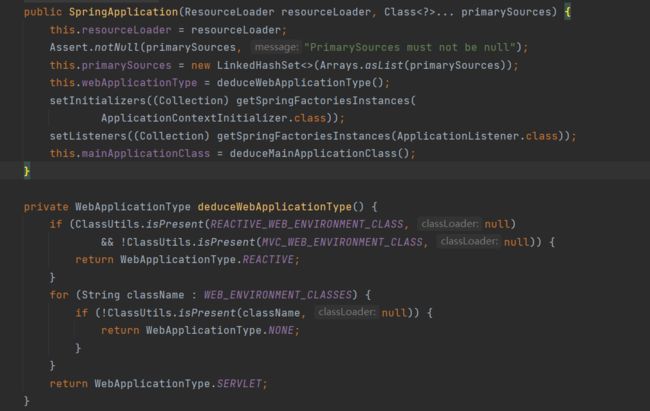

1、SpringApplication

这个类主要做了以下四件事情:

1、推断应用的类型是普通的项目还是Web项目

2、查找并加载所有可用初始化器 , 设置到initializers属性中

3、找出所有的应用程序监听器,设置到listeners属性中

4、推断并设置main方法的定义类,找到运行的主类
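Step 1 in the list above works by checking which marker classes are on the classpath. The following is a simplified sketch of that idea; the marker class names are the ones I understand Spring Boot 2.x to check and should be treated as assumptions, and WebTypeSketch is a made-up name.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.function.Predicate;

class WebTypeSketch {

    enum WebType { NONE, SERVLET, REACTIVE }

    // Decides the application type from the presence of marker classes (names are assumptions).
    static WebType deduce(Predicate<String> isPresent) {
        if (isPresent.test("org.springframework.web.reactive.DispatcherHandler")
                && !isPresent.test("org.springframework.web.servlet.DispatcherServlet")) {
            return WebType.REACTIVE;
        }
        if (!isPresent.test("javax.servlet.Servlet")) {
            return WebType.NONE;
        }
        return WebType.SERVLET;
    }

    // Classpath probe: tries to load the class without initializing it.
    static boolean onClasspath(String className) {
        try {
            Class.forName(className, false, WebTypeSketch.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Simulated classpath of a Spring MVC project.
        Set<String> mvcClasspath = new HashSet<>(Arrays.asList(
                "javax.servlet.Servlet",
                "org.springframework.web.servlet.DispatcherServlet"));
        System.out.println(deduce(mvcClasspath::contains)); // SERVLET

        // The same decision against the real classpath of this JVM.
        System.out.println(deduce(WebTypeSketch::onClasspath));
    }
}
```

Passing the presence check in as a Predicate keeps the decision logic separate from class loading, which makes it easy to try different classpaths.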

2、run流程分析

public ConfigurableApplicationContext run(String... args) {

StopWatch stopWatch = new StopWatch();

stopWatch.start();

ConfigurableApplicationContext context = null;

Collection<SpringBootExceptionReporter> exceptionReporters = new ArrayList<>();

configureHeadlessProperty();

SpringApplicationRunListeners listeners = getRunListeners(args);

listeners.starting();

try {

ApplicationArguments applicationArguments = new DefaultApplicationArguments(

args);

ConfigurableEnvironment environment = prepareEnvironment(listeners,

applicationArguments);

configureIgnoreBeanInfo(environment);

Banner printedBanner = printBanner(environment);

context = createApplicationContext();

exceptionReporters = getSpringFactoriesInstances(

SpringBootExceptionReporter.class,

new Class[] { ConfigurableApplicationContext.class }, context);

prepareContext(context, environment, listeners, applicationArguments,

printedBanner);

refreshContext(context);

afterRefresh(context, applicationArguments);

stopWatch.stop();

if (this.logStartupInfo) {

new StartupInfoLogger(this.mainApplicationClass)

.logStarted(getApplicationLog(), stopWatch);

}

listeners.started(context);

callRunners(context, applicationArguments);

}

catch (Throwable ex) {

handleRunFailure(context, ex, exceptionReporters, listeners);

throw new IllegalStateException(ex);

}

try {

listeners.running(context);

}

catch (Throwable ex) {

handleRunFailure(context, ex, exceptionReporters, null);

throw new IllegalStateException(ex);

}

return context;

}

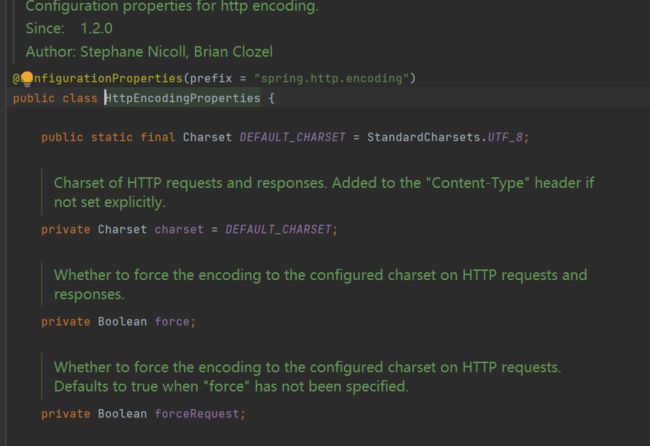

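1.3 How Auto-Configuration Works

Analyzing the mechanism

We use HttpEncodingAutoConfiguration (HTTP encoding auto-configuration) as the example

// Marks this as a configuration class; like the configuration files we used to write, it can add components to the container;

@Configuration

// Enables ConfigurationProperties support for the given class;

// open HttpProperties to see how values in the configuration file are bound to it;

// HttpProperties itself is also added to the IoC container

@EnableConfigurationProperties({HttpProperties.class})

// Built on Spring's underlying @Conditional annotation

// The conditions are evaluated, and the configuration in this class takes effect only if they hold;

// here it checks whether the application is a web application; if so, this configuration class is active

@ConditionalOnWebApplication(

type = Type.SERVLET

)

// Checks whether the class CharacterEncodingFilter is on the classpath; it is the Spring MVC filter that fixes garbled text;

@ConditionalOnClass({CharacterEncodingFilter.class})

// Checks whether the property spring.http.encoding.enabled exists in the configuration;

// the condition also holds when the property is missing,

// so the class is active by default even without spring.http.encoding.enabled=true in the configuration file;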

@ConditionalOnProperty(

prefix = "spring.http.encoding",

value = {"enabled"},

matchIfMissing = true

)

public class HttpEncodingAutoConfiguration {

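// Already mapped to the Spring Boot configuration file

private final Encoding properties;

// With a single parameterized constructor, the argument is taken from the container

public HttpEncodingAutoConfiguration(HttpProperties properties) {

this.properties = properties.getEncoding();

}

// Adds a component to the container; some of its values come from properties

@Bean

@ConditionalOnMissingBean // only if the container does not already have this component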

public CharacterEncodingFilter characterEncodingFilter() {

CharacterEncodingFilter filter = new OrderedCharacterEncodingFilter();

filter.setEncoding(this.properties.getCharset().name());

filter.setForceRequestEncoding(this.properties.shouldForce(org.springframework.boot.autoconfigure.http.HttpProperties.Encoding.Type.REQUEST));

filter.setForceResponseEncoding(this.properties.shouldForce(org.springframework.boot.autoconfigure.http.HttpProperties.Encoding.Type.RESPONSE));

return filter;

}

}

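The conditions decide whether this configuration class takes effect

- Once it takes effect, the configuration class adds components to the container;

- The properties of those components come from the corresponding properties class, and each field of that class is bound to the configuration file;

- Every property that can be set in the configuration file is wrapped in some xxxxProperties class;

- To find out what can be configured for a feature, look at that feature's properties class

Auto-configuration summary:

- Spring Boot loads a large number of auto-configuration classes at startup

- Check whether the feature we need is covered by one of Spring Boot's built-in auto-configuration classes;

- Then check which components that auto-configuration class sets up (if the component we want is there, no manual configuration is needed)

- When an auto-configuration class adds components to the container, it reads some values from a properties class; we only need to set those values in the configuration file;

xxxxAutoConfiguration: auto-configuration class; adds components to the container

xxxxProperties: wraps the related properties from the configuration file;

For reference: @Conditional

Annotations derived from @Conditional (they work the same way as Spring's native @Conditional)

Purpose: a component is added to the container, and the configuration inside takes effect, only when the condition specified by @Conditional holds;

With so many auto-configuration classes, each one takes effect only under certain conditions; in other words, many configuration classes are loaded but not all of them are active.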
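To make the conditional idea concrete, here is a minimal sketch of how a property condition with matchIfMissing can be evaluated. It mirrors the behaviour described for @ConditionalOnProperty in these notes (no havingValue set), but it is a toy and not Spring's implementation; OnPropertySketch and its method are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

class OnPropertySketch {

    // Returns true when the configuration class guarded by the condition should be active.
    static boolean matches(Map<String, String> env, String prefix, String name, boolean matchIfMissing) {
        String value = env.get(prefix + "." + name);
        if (value == null) {
            // Property absent: the outcome is whatever matchIfMissing says.
            return matchIfMissing;
        }
        // Property present: any value except "false" counts as a match.
        return !"false".equalsIgnoreCase(value);
    }

    public static void main(String[] args) {
        Map<String, String> env = new HashMap<>();

        // Nothing configured, matchIfMissing = true: active by default.
        System.out.println(matches(env, "spring.http.encoding", "enabled", true)); // true

        // Explicitly switched off in the configuration file.
        env.put("spring.http.encoding.enabled", "false");
        System.out.println(matches(env, "spring.http.encoding", "enabled", true)); // false
    }
}
```

This is why HttpEncodingAutoConfiguration is active without any entry in the configuration file, and why setting the property to false turns it off.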

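2. YAML, JSR 303 Validation, and Switching Environments

2.1 YAML

- application.properties

- Syntax: key=value

- application.yml

- Syntax: key: value (with a space after the colon)

Basic YAML syntax

Note: the syntax is strict!

1. The space cannot be omitted

2. Indentation controls nesting; entries aligned in the same left-hand column are at the same level.

3. Keys and values are case-sensitive.

/*

@ConfigurationProperties:

maps the value of every property in the configuration file onto this component;

tells Spring Boot to bind all fields of this class to the related configuration entries

prefix = "person": matches the fields one by one against the properties under person in the configuration file

*/

@Component // register as a bean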

@ConfigurationProperties(prefix = "person")

public class Person {

private String name;

private Integer age;

}

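Difference between @Value("#{}") and @Value("${}")

@Value("#{}") holds a SpEL expression, usually used to read a bean's property or call a bean's method; it can also express a constant

@Value("${}") reads a property value from the configuration (properties) file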
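As a rough picture of what the ${} form does, the toy resolver below replaces ${key} and ${key:default} with values from a map. It is a sketch under my own assumptions and far simpler than Spring's placeholder resolution; PlaceholderSketch is a made-up class, and SpEL (#{}) is not covered.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class PlaceholderSketch {

    // Matches ${key} or ${key:default}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}:]+)(?::([^}]*))?\\}");

    static String resolve(String text, Map<String, String> props) {
        Matcher m = PLACEHOLDER.matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String key = m.group(1);
            String fallback = m.group(2); // null when no default was given
            String value = props.containsKey(key) ? props.get(key) : fallback;
            if (value == null) {
                throw new IllegalArgumentException("Could not resolve placeholder '" + key + "'");
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new HashMap<>();
        props.put("person.name", "qzh");

        System.out.println(resolve("${person.name}", props));      // qzh
        System.out.println(resolve("${server.port:8080}", props)); // 8080
    }
}
```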

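2.2 JSR 303 Validation

In Spring Boot, @Validated validates data; invalid data raises an exception in a uniform way, which makes it easy to handle in a central exception handler.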

@ConfigurationProperties(prefix = "person")

@Validated // enable validation

public class Person {

@Email(message="Invalid email format") // name must be a valid email address

private String name;

}

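Common annotations

Null checks

@Null the object must be null

@NotNull the object must not be null; does not catch a zero-length string

@NotBlank strings only: the value must not be null and its trimmed length must be greater than 0 (leading and trailing whitespace is ignored)

@NotEmpty the element must be neither null nor empty

Boolean checks

@AssertTrue the Boolean must be true

@AssertFalse the Boolean must be false

Length checks

@Size(min=, max=) the size of the object (Array, Collection, Map, String) must be within the given range

@Length(min=, max=) the string length must be between min and max, inclusive

Date checks

@Past the Date or Calendar must be before the current time

@Future the Date or Calendar must be after the current time

@Pattern the String must match the given regular expression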
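The three null checks are easy to mix up, so here is a plain-Java sketch of what each one accepts for a String. These helper methods only imitate the rules listed above; they are not the Bean Validation implementation, and NullCheckSketch is a hypothetical name.

```java
class NullCheckSketch {

    // @NotNull: anything except null passes, including "".
    static boolean notNull(String s) {
        return s != null;
    }

    // @NotEmpty: must be non-null and have at least one character.
    static boolean notEmpty(String s) {
        return s != null && !s.isEmpty();
    }

    // @NotBlank: must be non-null and contain at least one non-whitespace character.
    static boolean notBlank(String s) {
        return s != null && !s.trim().isEmpty();
    }

    public static void main(String[] args) {
        String[] samples = { null, "", "   ", "a" };
        for (String s : samples) {
            System.out.println("[" + s + "] notNull=" + notNull(s)
                    + " notEmpty=" + notEmpty(s)
                    + " notBlank=" + notBlank(s));
        }
    }
}
```

A string of spaces is the case that separates @NotEmpty from @NotBlank: it passes the first and fails the second.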

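2.3 Switching Environments

A profile is Spring's support for supplying different configuration to different environments; activating a different profile switches environments quickly;

Multi-environment configuration in YAML

# choose which environment block to activate

spring:

  profiles:

    active: prod

---

server:

  port: 8083

spring:

  profiles: dev # name of this environment

---

server:

  port: 8084

spring:

  profiles: prod # name of this environment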
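The effect of the three YAML documents above can be sketched as a small merge: settings from the document without a profile apply first, then the document whose profile matches spring.profiles.active overrides them. This is a simplified model with hypothetical names (ProfileSketch, Doc), not Spring Boot's config loading.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ProfileSketch {

    // One YAML document: an optional profile name plus its flattened properties.
    static final class Doc {
        final String profile;
        final Map<String, String> props;

        Doc(String profile, Map<String, String> props) {
            this.profile = profile;
            this.props = props;
        }
    }

    // Convenience factory for a document holding a single property.
    static Doc doc(String profile, String key, String value) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put(key, value);
        return new Doc(profile, props);
    }

    static Map<String, String> effective(List<Doc> docs) {
        Map<String, String> result = new LinkedHashMap<>();
        // Documents without a profile always apply.
        for (Doc d : docs) {
            if (d.profile == null) {
                result.putAll(d.props);
            }
        }
        // The document of the active profile overrides them.
        String active = result.get("spring.profiles.active");
        for (Doc d : docs) {
            if (d.profile != null && d.profile.equals(active)) {
                result.putAll(d.props);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Doc> docs = Arrays.asList(
                doc(null, "spring.profiles.active", "prod"),
                doc("dev", "server.port", "8083"),
                doc("prod", "server.port", "8084"));
        System.out.println(effective(docs).get("server.port")); // 8084
    }
}
```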

3. Integrating JDBC, Druid, and MyBatis-Plus

3.1 Integrating JDBC

1. Add the dependencies

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

</dependency>

2. Write the yml file

spring:

  datasource:

    driver-class-name: com.mysql.cj.jdbc.Driver

    # serverTimezone=UTC fixes the time-zone error

    url: jdbc:mysql://localhost:3306/50sql?serverTimezone=UTC&useUnicode=true&characterEncoding=utf-8

    username: root

    password: 123456

3. Test with a test class

// before Spring Boot 2.2.0, use org.junit.Test

// from Spring Boot 2.2.0 on, use org.junit.jupiter.api.Test

import org.junit.jupiter.api.Test;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import javax.sql.DataSource;

import java.sql.Connection;

import java.sql.SQLException;

@SpringBootTest

class SpringbootDataJdbcApplicationTests {

// inject the data source

@Autowired

DataSource dataSource;

@Test

public void contextLoads() throws SQLException {

// print the default data source type

System.out.println(dataSource.getClass());

// obtain a connection

Connection connection = dataSource.getConnection();

System.out.println(connection);

// close the connection

connection.close();

}

}

Result: the default data source is class com.zaxxer.hikari.HikariDataSource

A global search shows that all data source auto-configuration lives in DataSourceAutoConfiguration:

@Import(

{Hikari.class, Tomcat.class, Dbcp2.class, Generic.class, DataSourceJmxConfiguration.class}

)

protected static class PooledDataSourceConfiguration {

protected PooledDataSourceConfiguration() {

}

}

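The imported classes are all nested in the DataSourceConfiguration configuration class. Spring Boot 2.2.5 uses HikariDataSource by default, whereas earlier versions such as Spring Boot 1.5 defaulted to org.apache.tomcat.jdbc.pool.DataSource;

HikariDataSource is billed as the fastest data source currently available for Java web applications, outperforming traditional connection pools such as C3P0, DBCP, and Tomcat JDBC;

spring.datasource.type selects a custom data source type; its value is the fully qualified name of the connection pool implementation to use.

About JdbcTemplate:

- Given a data source (com.zaxxer.hikari.HikariDataSource) we can obtain a database connection (java.sql.Connection), and with a connection we can work with the database through plain JDBC statements;

- Even without a third-party persistence framework such as MyBatis, Spring provides a lightweight wrapper around plain JDBC: JdbcTemplate.

- JdbcTemplate provides all the CRUD methods for database access.

- Spring Boot supplies not only a default data source but also a preconfigured JdbcTemplate in the container; developers just inject it and use it

- JdbcTemplate auto-configuration relies on the JdbcTemplateConfiguration class in the org.springframework.boot.autoconfigure.jdbc package

JdbcTemplate mainly offers these groups of methods:

- execute: runs any SQL statement, typically DDL;

- update and batchUpdate: update runs insert, update, and delete statements; batchUpdate runs batch statements;

- query and queryForXXX: run queries;

- call: runs stored procedures and functions.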

3.2 Two Ways to Integrate Druid

Some of the basic configuration parameters of com.alibaba.druid.pool.DruidDataSource:

1. Add the druid dependency

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.22</version>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

</dependency>

Write the yml file

spring:

  datasource:

    username: root

    password: 123456

    # serverTimezone=UTC fixes the time-zone error

    url: jdbc:mysql://localhost:3306/50sql?serverTimezone=UTC&useUnicode=true&characterEncoding=utf-8

    driver-class-name: com.mysql.cj.jdbc.Driver

    type: com.alibaba.druid.pool.DruidDataSource

    # Spring Boot does not inject the following properties by default; you have to bind them yourself

    # Druid-specific data source settings

    initialSize: 5

    minIdle: 5

    maxActive: 20

    maxWait: 60000

    timeBetweenEvictionRunsMillis: 60000

    minEvictableIdleTimeMillis: 300000

    validationQuery: SELECT 1 FROM DUAL

    testWhileIdle: true

    testOnBorrow: false

    testOnReturn: false

    poolPreparedStatements: true

    # filters for monitoring and statistics: stat = monitoring statistics, log4j = logging, wall = SQL injection defense

    # if running fails with java.lang.ClassNotFoundException: org.apache.log4j.Priority,

    # add the log4j dependency; Maven page: https://mvnrepository.com/artifact/log4j/log4j

    filters: stat,wall,log4j

    maxPoolPreparedStatementPerConnectionSize: 20

    useGlobalDataSourceStat: true

    connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=500

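You have to add the DruidDataSource component to the container yourself

import com.alibaba.druid.pool.DruidDataSource;

import com.alibaba.druid.support.http.StatViewServlet;

import com.alibaba.druid.support.http.WebStatFilter;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.boot.web.servlet.FilterRegistrationBean;

import org.springframework.boot.web.servlet.ServletRegistrationBean;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

import java.util.Arrays;

import java.util.HashMap;

import java.util.Map;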

@Configuration

public class DruidConfig {

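/*

Adds a custom Druid data source to the container so that Spring Boot no longer creates one automatically

Binds the druid data source properties from the global configuration file to com.alibaba.druid.pool.DruidDataSource so that they take effect

@ConfigurationProperties(prefix = "spring.datasource"): injects the values of the properties

prefixed with spring.datasource in the global configuration file into the same-named parameters of com.alibaba.druid.pool.DruidDataSource

*/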

@ConfigurationProperties(prefix = "spring.datasource")

@Bean

public DataSource druidDataSource() {

return new DruidDataSource();

}

// Configure Druid monitoring

// 1. Register the servlet for the admin console

@Bean

public ServletRegistrationBean<StatViewServlet> statViewServlet(){

// Remember to map "/druid/*"; otherwise the login page fails with too many redirects (the error was seen in Google Chrome)

ServletRegistrationBean<StatViewServlet> bean = new ServletRegistrationBean<>(new StatViewServlet(),"/druid/*");

Map<String,String> initParams = new HashMap<>();

initParams.put("loginUsername","admin");

initParams.put("loginPassword","123456");

// by default access is allowed for everyone

//initParams.put("allow","");

// initParams.put("deny","192.168.31.30");

bean.setInitParameters(initParams);

return bean;

}

// 2. Register the filter for web monitoring

@Bean

public FilterRegistrationBean<WebStatFilter> webStatFilter(){

FilterRegistrationBean<WebStatFilter> bean = new FilterRegistrationBean<>();

bean.setFilter(new WebStatFilter());

Map<String,String> initParams = new HashMap<>();

// requests to exclude from interception

initParams.put("exclusions","*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*");

bean.setInitParameters(initParams);

bean.setUrlPatterns(Arrays.asList("/*"));

return bean;

}

}

2. Add the druid-spring-boot-starter dependency

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>1.1.22</version>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

</dependency>

Write the yml file

spring:

  # database configuration, using the druid connection pool

  datasource:

    name: druidDataSource

    type: com.alibaba.druid.pool.DruidDataSource

    druid:

      driver-class-name: com.mysql.cj.jdbc.Driver

      url: jdbc:mysql://localhost:3306/50sql?serverTimezone=UTC&useUnicode=true&characterEncoding=utf-8

      username: root

      password: 123456

      # Spring Boot does not inject the following properties by default; you have to bind them yourself

      # Note: with the druid-spring-boot-starter starter no separate binding is needed

      # Druid-specific data source settings

      initialSize: 5

      minIdle: 5

      maxActive: 20

      maxWait: 60000

      timeBetweenEvictionRunsMillis: 60000

      minEvictableIdleTimeMillis: 300000

      validationQuery: SELECT 1 FROM DUAL

      testWhileIdle: true

      testOnBorrow: false

      testOnReturn: false

      poolPreparedStatements: true

      # filters for monitoring and statistics: stat = monitoring statistics, slf4j = logging (if you use log4j, remember to add the log4j dependency), wall = SQL injection defense. Do not omit this block, otherwise the SQL monitoring console cannot monitor SQL

      filter:

        slf4j:

          enabled: true

        stat:

          enabled: true

          merge-sql: true

          slow-sql-millis: 5000

        wall:

          enabled: true

      # stat-view-servlet settings

      stat-view-servlet:

        enabled: true

        login-username: admin

        login-password: 123456

        reset-enable: false

      # web-stat-filter settings

      web-stat-filter:

        enabled: true

Open the auto-configuration package com.alibaba.druid.spring.boot.autoconfigure and look at the auto-configuration class DruidDataSourceAutoConfigure

@Configuration

@ConditionalOnClass(DruidDataSource.class)

@AutoConfigureBefore(DataSourceAutoConfiguration.class)

@EnableConfigurationProperties({DruidStatProperties.class, DataSourceProperties.class})

@Import({DruidSpringAopConfiguration.class,

DruidStatViewServletConfiguration.class,

DruidWebStatFilterConfiguration.class,

DruidFilterConfiguration.class})

public class DruidDataSourceAutoConfigure {

private static final Logger LOGGER = LoggerFactory.getLogger(DruidDataSourceAutoConfigure.class);

@Bean(initMethod = "init")

@ConditionalOnMissingBean

public DataSource dataSource() {

LOGGER.info("Init DruidDataSource");

return new DruidDataSourceWrapper();

}

}

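It configures only the data source, so we continue into the other configuration classes brought in by the @Import annotation

- DruidSpringAopConfiguration.class: AOP configuration

- DruidStatViewServletConfiguration.class: monitoring configuration for the stat view servlet

- DruidWebStatFilterConfiguration.class: monitoring configuration for the web stat filter

- DruidFilterConfiguration.class: configuration for the various other filters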

DruidStatViewServletConfiguration.class

@ConditionalOnWebApplication

@ConditionalOnProperty(name = "spring.datasource.druid.stat-view-servlet.enabled", havingValue = "true")

public class DruidStatViewServletConfiguration {

private static final String DEFAULT_ALLOW_IP = "127.0.0.1";

@Bean

public ServletRegistrationBean statViewServletRegistrationBean(DruidStatProperties properties) {

DruidStatProperties.StatViewServlet config = properties.getStatViewServlet();

ServletRegistrationBean registrationBean = new ServletRegistrationBean();

registrationBean.setServlet(new StatViewServlet());

registrationBean.addUrlMappings(config.getUrlPattern() != null ? config.getUrlPattern() : "/druid/*");

if (config.getAllow() != null) {

registrationBean.addInitParameter("allow", config.getAllow());

} else {

registrationBean.addInitParameter("allow", DEFAULT_ALLOW_IP);

}

if (config.getDeny() != null) {

registrationBean.addInitParameter("deny", config.getDeny());

}

if (config.getLoginUsername() != null) {

registrationBean.addInitParameter("loginUsername", config.getLoginUsername());

}

if (config.getLoginPassword() != null) {

registrationBean.addInitParameter("loginPassword", config.getLoginPassword());

}

if (config.getResetEnable() != null) {

registrationBean.addInitParameter("resetEnable", config.getResetEnable());

}

return registrationBean;

}

}

DruidWebStatFilterConfiguration.class

@ConditionalOnWebApplication

@ConditionalOnProperty(name = "spring.datasource.druid.web-stat-filter.enabled", havingValue = "true")

public class DruidWebStatFilterConfiguration {

@Bean

public FilterRegistrationBean webStatFilterRegistrationBean(DruidStatProperties properties) {

DruidStatProperties.WebStatFilter config = properties.getWebStatFilter();

FilterRegistrationBean registrationBean = new FilterRegistrationBean();

WebStatFilter filter = new WebStatFilter();

registrationBean.setFilter(filter);

registrationBean.addUrlPatterns(config.getUrlPattern() != null ? config.getUrlPattern() : "/*");

registrationBean.addInitParameter("exclusions", config.getExclusions() != null ? config.getExclusions() : "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*");

if (config.getSessionStatEnable() != null) {

registrationBean.addInitParameter("sessionStatEnable", config.getSessionStatEnable());

}

if (config.getSessionStatMaxCount() != null) {

registrationBean.addInitParameter("sessionStatMaxCount", config.getSessionStatMaxCount());

}

if (config.getPrincipalSessionName() != null) {

registrationBean.addInitParameter("principalSessionName", config.getPrincipalSessionName());

}

if (config.getPrincipalCookieName() != null) {

registrationBean.addInitParameter("principalCookieName", config.getPrincipalCookieName());

}

if (config.getProfileEnable() != null) {

registrationBean.addInitParameter("profileEnable", config.getProfileEnable());

}

return registrationBean;

}

}

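StatViewServlet is disabled by default; once we enable it, this configuration class adds a StatViewServlet to the container with default parameters. Custom parameters can be set under the druid key. By default only the local machine (127.0.0.1) is allowed in, and the default url-pattern is /druid/*.

Likewise, WebStatFilter is disabled by default and has to be enabled. It is preconfigured with the URL pattern "/*" and excludes the druid URL pattern and some static resources: "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*".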
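To see what the exclusions string means in practice, the sketch below tests request paths against it. The matching rules here (suffix patterns such as *.js and prefix patterns such as /druid/*) are my own simplification and not Druid's actual matcher; ExclusionSketch is a hypothetical name.

```java
class ExclusionSketch {

    // Simplified matching: "*.ext" is a suffix rule, "/dir/*" is a prefix rule, anything else must match exactly.
    static boolean matches(String pattern, String path) {
        if (pattern.startsWith("*.")) {
            return path.endsWith(pattern.substring(1));
        }
        if (pattern.endsWith("/*")) {
            return path.startsWith(pattern.substring(0, pattern.length() - 1));
        }
        return path.equals(pattern);
    }

    // True when the path matches any pattern in the comma-separated exclusions list.
    static boolean isExcluded(String exclusions, String path) {
        for (String pattern : exclusions.split(",")) {
            if (matches(pattern.trim(), path)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String exclusions = "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*";

        System.out.println(isExcluded(exclusions, "/static/app.js"));    // true
        System.out.println(isExcluded(exclusions, "/druid/index.html")); // true
        System.out.println(isExcluded(exclusions, "/user/list"));        // false
    }
}
```

Requests that are excluded are not counted in the web statistics, which keeps static resources and the monitoring console itself out of the numbers.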

4. Integrating MyBatis-Plus

Add the dependency

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>latest version</version>

</dependency>

In the Spring Boot startup class, add the @MapperScan annotation to scan the DAO-layer package, or add the @Mapper annotation to each Mapper

@SpringBootApplication

@MapperScan("com.qzh.springboot.dao")

public class Application {

public static void main(String[] args) {

SpringApplication.run(Application.class, args);

}

}

Configure mybatis-plus

# mybatis-plus

mybatis-plus:

  type-aliases-package: com.qzh.springboot.entity # entity aliases

  configuration:

    map-underscore-to-camel-case: true # map underscore column names to camelCase

  mapper-locations: # location of the mapper XML files

    - classpath:mapper/*.xml

Sample Mapper file

<!DOCTYPE mapper

PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"

"http://mybatis.org/dtd/mybatis-3-mapper.dtd">

<mapper namespace="com.qzh.springboot.dao.UserMapper">

<select id="getUserById" resultType="com.qzh.springboot.entity.User">

select * from user

</select>

</mapper>

Configure the pagination plugin (requires version 3.4.0 or later)

@Configuration

public class MybatisPlusConfig {

@Bean

public MybatisPlusInterceptor mybatisPlusInterceptor() {

MybatisPlusInterceptor interceptor = new MybatisPlusInterceptor(); // the interceptor that hosts the plugins

interceptor.addInnerInterceptor(new PaginationInnerInterceptor(DbType.MYSQL)); // register one plugin, here the pagination plugin

return interceptor;

}

}

Using the mybatis-plus pagination plugin

Pagination example:

@Test

public void selectPage() {

IPage<User> page = new Page<>(3,2);

IPage<User> userList = userMapper.selectPage(page,null);

System.out.println(JSONObject.toJSONString(userList));

}
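The arithmetic behind new Page<>(3, 2), i.e. page 3 with 2 rows per page, can be sketched without the library. The helper below only shows how a page number turns into an offset and a page count; the LIMIT text is illustrative rather than the exact SQL MyBatis-Plus generates, and PageSketch is a made-up class.

```java
class PageSketch {

    // Number of rows to skip before the requested page starts.
    static long offset(long current, long size) {
        return current <= 1 ? 0 : (current - 1) * size;
    }

    // Total number of pages for the given row count, rounding up.
    static long pages(long total, long size) {
        if (size <= 0) {
            return 0;
        }
        return (total + size - 1) / size;
    }

    // Illustrative MySQL-style clause for the page.
    static String limitClause(long current, long size) {
        return "LIMIT " + offset(current, size) + "," + size;
    }

    public static void main(String[] args) {
        System.out.println(offset(3, 2));      // 4
        System.out.println(limitClause(3, 2)); // LIMIT 4,2
        System.out.println(pages(5, 2));       // 3
    }
}
```

With 5 rows in the table, page 3 of size 2 therefore holds only the fifth row.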

5. Configuring slf4j Logging

Add the log4j dependency; slf4j then logs through log4j automatically

<!--log4j-->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

</dependency>

Add the slf4j settings to application.yml

# logger

logging:

  # logging.config names the configuration file read at startup; here it is logback.xml at the classpath root

  config: classpath:logback.xml

  # logging.level sets the log output level for specific packages, e.g. the mappers

  level:

    com.qzh.springboot: debug

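log4j log levels:

- ALL: the lowest level, captures every log record

- TRACE: tracing; whenever the program advances a step you can emit a trace line

- DEBUG: fine-grained informational events that are most useful for debugging an application

- INFO: coarse-grained messages that highlight the progress of the application

- WARN: warnings; at this level WARN and the more severe levels listed after it are output

- ERROR: error messages

- FATAL: severe error events that will cause the application to exit

- OFF: the highest level, turns off all logging

Log4j recommends using only four levels; from highest to lowest priority they are ERROR, WARN, INFO, DEBUG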
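The level list works as a threshold: a message is written only if its level is at least as severe as the configured one. A small sketch of that rule, using an enum declared in the same order as the list above (LevelSketch is a hypothetical name, not a logging API):

```java
class LevelSketch {

    // Declared from least to most severe, so ordinal() can serve as the severity.
    enum Level { ALL, TRACE, DEBUG, INFO, WARN, ERROR, FATAL, OFF }

    // True when an event at eventLevel is written under the configured threshold.
    static boolean isEnabled(Level configured, Level eventLevel) {
        return eventLevel.ordinal() >= configured.ordinal();
    }

    public static void main(String[] args) {
        // With the threshold at INFO, debug output is dropped and errors are kept.
        System.out.println(isEnabled(Level.INFO, Level.DEBUG)); // false
        System.out.println(isEnabled(Level.INFO, Level.ERROR)); // true

        // OFF sits above every real level, so nothing gets through.
        System.out.println(isEnabled(Level.OFF, Level.FATAL));  // false
    }
}
```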

logback.xml:

<configuration debug="false">

<property name="LOG_HOME" value="../" />

<property resource="application.yml" />

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level %logger{20} - %msg%n</pattern>

<charset>UTF-8</charset>

</encoder>

</appender>

<appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<filter class="ch.qos.logback.classic.filter.LevelFilter">

<level>INFO</level>

<onMatch>ACCEPT</onMatch>

<onMismatch>DENY</onMismatch>

</filter>

<file>${LOG_HOME}/logs/log</file>

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<FileNamePattern>${LOG_HOME}/logs/log.%d{yyyy-MM-dd}.%i.zip</FileNamePattern>

<MaxHistory>5</MaxHistory>

<timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">

<maxFileSize>20MB</maxFileSize>

</timeBasedFileNamingAndTriggeringPolicy>

</rollingPolicy>

<append>true</append>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level %logger{50} - %msg%n</pattern>

<charset>UTF-8</charset>

</encoder>

</appender>

<root level="INFO">

<appender-ref ref="STDOUT" />

<appender-ref ref="FILE" />

</root>

<logger name="org.springframework" level="WARN"/>

<logger name="org.springframework.web" level="ERROR"/>

<logger name="org.springframework.cloud" level="WARN"/>

<logger name="org.springframework.security" level="WARN"/>

<logger name="org.springframework.cache" level="WARN"/>

<logger name="org.springframework.data.mybatis" level="DEBUG"/>

<logger name="org.springframework.aop.aspectj" level="ERROR"/>

<logger name="javax.activation" level="WARN"/>

<logger name="javax.mail" level="WARN"/>

<logger name="javax.xml.bind" level="WARN"/>

<logger name="ch.qos.logback" level="INFO"/>

<logger name="org.apache.zookeeper" level="WARN"/>

<logger name="org.apache.kafka" level="WARN"/>

<logger name="org.apache" level="WARN"/>

<logger name="org.apache.tomcat.util" level="ERROR"/>

<logger name="com.sun" level="WARN"/>

<logger name="com.alibaba" level="WARN"/>

<logger name="springfox" level="WARN"/>

<logger name="druid.sql" level="INFO"/>

<logger name="com.netflix.eureka" level="WARN"/>

<logger name="com.netflix.ribbon" level="WARN"/>

<logger name="io.github.openfeign" level="WARN"/>

<logger name="com.ctrip.framework.apollo" level="WARN"/>

<logger name="redis.clients" level="WARN"/>

<logger name="org.elasticsearch" level="WARN"/>

<logger name="org.hibernate.type.descriptor.sql.BasicBinder" level="INFO" />

<logger name="org.hibernate.type.descriptor.sql.BasicExtractor" level="DEBUG" />

<logger name="org.hibernate.SQL" level="DEBUG" />

<logger name="org.hibernate.engine.QueryParameters" level="DEBUG" />

<logger name="org.hibernate.engine.query.HQLQueryPlan" level="DEBUG" />

<logger name="com.ibatis" level="INFO"/>

<logger name="com.ibatis.common.jdbc.SimpleDataSource" level="INFO"/>

<logger name="com.ibatis.common.jdbc.ScriptRunner" level="INFO"/>

<logger name="com.ibatis.sqlmap.engine.impl.SqlMapClientDelegate" level="WARN"/>

<logger name="java.sql.Connection" level="DEBUG"/>

<logger name="java.sql.Statement" level="DEBUG"/>

<logger name="java.sql.PreparedStatement" level="DEBUG"/>

<logger name="java.sql.ResultSet" level="DEBUG"/>

<logger name="jdbc.connection" level="ERROR"/>

<logger name="jdbc.resultset" level="ERROR"/>

<logger name="jdbc.resultsettable" level="INFO"/>

<logger name="jdbc.audit" level="ERROR"/>

<logger name="jdbc.sqltiming" level="ERROR"/>

<logger name="jdbc.sqlonly" level="INFO"/>

</configuration>

Usage:

import org.junit.jupiter.api.Test;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.boot.test.context.SpringBootTest;

@SpringBootTest

public class SpringbootApplicationTests {

private static final Logger logger = LoggerFactory.getLogger(SpringbootApplicationTests.class);

@Test

public void save() {

logger.debug("===== test log at debug level =====");

logger.info("===== test log at info level =====");

logger.error("===== test log at error level =====");

logger.warn("===== test log at warn level =====");

}

}

6. Integrating Redis

Add the dependencies

<!-- redis: the lettuce connection pool needs the commons-pool2 dependency -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-pool2</artifactId>

<version>2.9.0</version>

</dependency>

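Add the Redis settings to the yml file

# these keys sit under the top-level spring: key

redis:

  # Redis host address

  host: 192.168.67.54

  port: 6379

  database: 3

  password: wlkj@1234

  timeout: 5000

  lettuce:

    pool:

      # maximum number of idle connections in the pool; the default is 8

      max-idle: 500

      # minimum number of idle connections in the pool; the default is 0

      min-idle: 50

      # -1 means no limit; once the pool has handed out maxActive jedis instances, its state is exhausted

      max-active: 1000

      # maximum time to wait for a free connection, in milliseconds; the default -1 means wait forever. When the wait is exceeded, a JedisConnectionException is thrown

      max-wait: 2000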

RedisConfig.java:

package com.qzh.springboot.config;

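import com.fasterxml.jackson.annotation.JsonAutoDetect;

import com.fasterxml.jackson.annotation.JsonTypeInfo;

import com.fasterxml.jackson.annotation.PropertyAccessor;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.jsontype.impl.LaissezFaireSubTypeValidator;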

import org.springframework.boot.autoconfigure.cache.CacheProperties;

import org.springframework.cache.annotation.CachingConfigurerSupport;

import org.springframework.cache.annotation.EnableCaching;

import org.springframework.cache.interceptor.KeyGenerator;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.data.redis.cache.RedisCacheConfiguration;

import org.springframework.data.redis.connection.RedisConnectionFactory;

import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.data.redis.serializer.*;

import java.lang.reflect.Method;

import java.util.Arrays;

/**

* @author qzh

* @date 2022/10/13 11:03 AM

* @description

*/

@Configuration

@EnableCaching // enable caching

public class RedisConfig extends CachingConfigurerSupport {

/**

* Custom serialization for redisTemplate, jedis version

* @param factory

* @return

*/

@Bean

public RedisTemplate redisTemplate(RedisConnectionFactory factory) {

RedisTemplate redisTemplate = new RedisTemplate();

redisTemplate.setConnectionFactory(factory);

// serializer for JSON objects

Jackson2JsonRedisSerializer jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);

ObjectMapper objectMapper = new ObjectMapper();

objectMapper.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);

objectMapper.activateDefaultTyping(LaissezFaireSubTypeValidator.instance,

ObjectMapper.DefaultTyping.NON_FINAL, JsonTypeInfo.As.PROPERTY);

jackson2JsonRedisSerializer.setObjectMapper(objectMapper);

// serializer for Strings

StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();

// keys use String serialization

redisTemplate.setKeySerializer(stringRedisSerializer);

// hash keys also use String serialization

redisTemplate.setHashKeySerializer(stringRedisSerializer);

// values use jackson serialization

redisTemplate.setValueSerializer(jackson2JsonRedisSerializer);

// hash values use jackson serialization

redisTemplate.setHashValueSerializer(jackson2JsonRedisSerializer);

redisTemplate.afterPropertiesSet();

return redisTemplate;

}

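/**

* Custom serialization for redisTemplate, lettuce version

* (an alternative to the jedis version above; both methods share the bean name redisTemplate, so keep only one of them)

* @param factory

* @return

*/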

@Bean

public RedisTemplate<String,Object> redisTemplate(LettuceConnectionFactory factory) {

RedisTemplate<String,Object> redisTemplate = new RedisTemplate();

redisTemplate.setConnectionFactory(factory);

// serializer for JSON objects

Jackson2JsonRedisSerializer jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);

ObjectMapper objectMapper = new ObjectMapper();

objectMapper.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);

objectMapper.activateDefaultTyping(LaissezFaireSubTypeValidator.instance,

ObjectMapper.DefaultTyping.NON_FINAL, JsonTypeInfo.As.PROPERTY);

jackson2JsonRedisSerializer.setObjectMapper(objectMapper);

// serializer for Strings

StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();

// keys use String serialization

redisTemplate.setKeySerializer(stringRedisSerializer);

// hash keys also use String serialization

redisTemplate.setHashKeySerializer(stringRedisSerializer);

// values use jackson serialization

redisTemplate.setValueSerializer(jackson2JsonRedisSerializer);

// hash values use jackson serialization

redisTemplate.setHashValueSerializer(jackson2JsonRedisSerializer);

redisTemplate.afterPropertiesSet();

return redisTemplate;

}

/**

* Configures value serialization for the Redis cache annotations, fixing garbled values

*/

@Bean

public RedisCacheConfiguration redisCacheConfiguration(CacheProperties cacheProperties) {

CacheProperties.Redis redisProperties = cacheProperties.getRedis();

RedisCacheConfiguration config = RedisCacheConfiguration.defaultCacheConfig();

// serialize values

config = config.serializeValuesWith(RedisSerializationContext.SerializationPair

.fromSerializer(new GenericJackson2JsonRedisSerializer()));

if (redisProperties.getTimeToLive() != null) {

config = config.entryTtl(redisProperties.getTimeToLive());

}

if (redisProperties.getKeyPrefix() != null) {

config = config.prefixKeysWith(redisProperties.getKeyPrefix());

}

if (!redisProperties.isCacheNullValues()) {

config = config.disableCachingNullValues();

}

if (!redisProperties.isUseKeyPrefix()) {

config = config.disableKeyPrefix();

}

return config;

}

/**

* Custom cache key generation strategy

*

* @return

*/

@Bean

@Override

public KeyGenerator keyGenerator() {

return new KeyGenerator() {

@Override

public Object generate(Object o, Method method, Object... objects) {

StringBuilder sb = new StringBuilder();

sb.append(o.getClass().

getName()).append(":")

.append(method.getName());

if (objects != null && objects.length != 0) {

sb.append(":" + Arrays.toString(objects));

}

return sb.toString();

}

};

}

}
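The key format produced by the keyGenerator above is easier to see outside Spring. The sketch below repeats the same string building with plain reflection; CacheKeySketch and its method helper are hypothetical, and java.lang.String is used as the target only because it is always available.

```java
import java.lang.reflect.Method;
import java.util.Arrays;

class CacheKeySketch {

    // Same layout as the generator above: className:methodName[:[arg1, arg2, ...]]
    static String generate(Object target, Method method, Object... params) {
        StringBuilder sb = new StringBuilder();
        sb.append(target.getClass().getName()).append(":").append(method.getName());
        if (params != null && params.length != 0) {
            sb.append(":").append(Arrays.toString(params));
        }
        return sb.toString();
    }

    // Reflection lookup that wraps the checked exception for brevity.
    static Method method(Class<?> type, String name, Class<?>... parameterTypes) {
        try {
            return type.getMethod(name, parameterTypes);
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException(e);
        }
    }

    public static void main(String[] args) {
        Method charAt = method(String.class, "charAt", int.class);
        System.out.println(generate("abc", charAt, 1)); // java.lang.String:charAt:[1]

        Method length = method(String.class, "length");
        System.out.println(generate("abc", length));    // java.lang.String:length
    }
}
```

Because the arguments go through Arrays.toString, two calls produce the same key only when their arguments have the same string form, so parameter types without a meaningful toString would need a different strategy.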

Redis utility class

package com.qzh.springboot.utils;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Component;

import org.springframework.util.CollectionUtils;

import java.util.List;

import java.util.Map;

import java.util.Set;

import java.util.concurrent.TimeUnit;

/**

* @author qzh

* @date 2022/11/1 04:06 PM

* @description Redis utility class

*/

@Component

public class RedisUtil {

@Autowired

private RedisTemplate redisTemplate;

//============================common=============================

/**

* Set the expiry time of a cache entry

* @param key the key

* @param time time in seconds

*/

public boolean expire (String key, long time) {

try {

if (time > 0) {

redisTemplate.expire(key, time, TimeUnit.SECONDS);

return true;

} else {

throw new RuntimeException("The expiry time must be greater than 0");

}

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

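/**

* Get the remaining time to live of a key

* @param key the key, must not be null

* @return time in seconds (for a key with no expiry the Redis TTL command reports -1)

*/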

public long getExpire (String key) {

return redisTemplate.getExpire(key, TimeUnit.SECONDS);

}

/**
 * Check whether a key exists.
 * @param key the key
 * @return true if the key exists, false otherwise
 */

public boolean hasKey(String key) {

try {

return redisTemplate.hasKey(key);

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

/**
 * Delete cached entries.
 * @param key one or more keys to delete
 */

@SuppressWarnings("unchecked")

public void del(String... key) {

if (key != null && key.length != 0) {

if (key.length == 1) {

redisTemplate.delete(key[0]);

} else {

redisTemplate.delete(CollectionUtils.arrayToList(key));

}

}

}

// ============================String=============================

/**
 * Get a plain cached value.
 * @param key the key
 * @return the value, or null if the key is null or absent
 */

public Object get(String key) {

return key == null ? null : redisTemplate.opsForValue().get(key);

}

/**
 * Store a plain cached value.
 * @param key the key
 * @param value the value
 * @return true on success, false on failure
 */

public boolean set(String key, Object value) {

try {

redisTemplate.opsForValue().set(key, value);

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

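/**
 * Store a plain cached value with an expiry time.
 * @param key the key
 * @param value the value
 * @param time time to live in seconds; if time is less than or equal to 0 the value is stored without expiry
 * @return true on success, false on failure
 */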

public boolean set(String key, Object value, long time) {

try {

if (time > 0) {

redisTemplate.opsForValue().set(key, value, time, TimeUnit.SECONDS);

} else {

redisTemplate.opsForValue().set(key, value);

}

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

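/**
 * Increment a numeric value.
 * @param key the key
 * @param delta amount to add (must not be negative)
 * @return the value after the increment
 */
public long incr(String key, long delta) {
    if (delta < 0) {
        throw new RuntimeException("Increment must not be negative");
    }
    return redisTemplate.opsForValue().increment(key, delta);
}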

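/**
 * Decrement a numeric value.
 * @param key the key
 * @param delta amount to subtract (must not be negative; it is negated before the call)
 * @return the value after the decrement
 */
public long decr(String key, long delta) {
    if (delta < 0) {
        throw new RuntimeException("Decrement must not be negative");
    }
    return redisTemplate.opsForValue().increment(key, -delta);
}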

// ================================Map=================================

/**
 * Get one field of a hash.
 * @param key the key; must not be null
 * @param item the hash field; must not be null
 */

public Object hget(String key, String item) {

return redisTemplate.opsForHash().get(key, item);

}

/**
 * Get all fields and values of the hash stored at a key.
 * @param key the key
 * @return the field/value pairs of the hash
 */

public Map<Object, Object> hmget(String key) {

return redisTemplate.opsForHash().entries(key);

}

/**
 * Store multiple fields in a hash.
 * @param key the key
 * @param map the field/value pairs to store
 * @return true on success, false on failure
 */

public boolean hmset(String key, Map<Object, Object> map) {

try {

redisTemplate.opsForHash().putAll(key, map);

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

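/**
 * Store multiple fields in a hash and set an expiry time on the key.
 * @param key the key
 * @param map the field/value pairs to store
 * @param time time to live in seconds; no expiry is set if time is not greater than 0
 * @return true on success, false on failure
 */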

public boolean hmset(String key, Map<Object, Object> map, long time) {

try {

redisTemplate.opsForHash().putAll(key, map);

if (time > 0 ) {

expire(key, time);

}

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

/**
 * Delete fields from a hash.
 *
 * @param key the key; must not be null
 * @param item one or more hash fields; must not be null
 */

public void hdel(String key, Object... item) {

redisTemplate.opsForHash().delete(key, item);

}

/**
 * Check whether a hash contains a given field.
 *
 * @param key the key; must not be null
 * @param item the hash field; must not be null
 * @return true if the field exists, false otherwise
 */

public boolean hHasKey(String key, String item) {

return redisTemplate.opsForHash().hasKey(key, item);

}

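/**
 * Increment a hash field. If the field does not exist it is created,
 * and the value after the increment is returned.
 *
 * @param key the key
 * @param item the hash field
 * @param by amount to add (greater than 0)
 */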

public double hincr(String key, String item, double by) {

return redisTemplate.opsForHash().increment(key, item, by);

}

/**
 * Decrement a hash field.
 *
 * @param key the key
 * @param item the hash field
 * @param by amount to subtract (a positive number; it is negated before the call)
 */

public double hdecr(String key, String item, double by) {

return redisTemplate.opsForHash().increment(key, item, -by);

}

// ============================set=============================

/**
 * Get all members of the set stored at a key.
 * @param key the key
 * @return the members, or null if the call fails
 */

public Set<Object> sGet(String key) {

try {

return redisTemplate.opsForSet().members(key);

} catch (Exception e) {

e.printStackTrace();

return null;

}

}

/**
 * Check whether a value is a member of a set.
 *
 * @param key the key
 * @param value the value to look for
 * @return true if the value is a member, false otherwise
 */

public boolean sHasKey(String key, Object value) {

try {

return redisTemplate.opsForSet().isMember(key, value);

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

/**
 * Add values to a set.
 *
 * @param key the key
 * @param values one or more values
 * @return the number of values actually added
 */

public long sSet(String key, Object... values) {

try {

return redisTemplate.opsForSet().add(key, values);

} catch (Exception e) {

e.printStackTrace();

return 0;

}

}

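/**
 * Add values to a set and set an expiry time on the key.
 *
 * @param key the key
 * @param time time to live in seconds; no expiry is set if time is not greater than 0
 * @param values one or more values
 * @return the number of values actually added
 */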

public long sSetAndTime(String key, long time, Object... values) {

try {

Long count = redisTemplate.opsForSet().add(key, values);

if (time > 0)

expire(key, time);

return count;

} catch (Exception e) {

e.printStackTrace();

return 0;

}

}

/**
 * Get the number of members in a set.
 *
 * @param key the key
 * @return the set size, or 0 if the call fails
 */

public long sGetSetSize(String key) {

try {

return redisTemplate.opsForSet().size(key);

} catch (Exception e) {

e.printStackTrace();

return 0;

}

}

/**
 * Remove the given values from a set.
 *
 * @param key the key
 * @param values one or more values
 * @return the number of values removed
 */

public long setRemove(String key, Object... values) {

try {

Long count = redisTemplate.opsForSet().remove(key, values);

return count;

} catch (Exception e) {

e.printStackTrace();

return 0;

}

}

// ===============================list=================================

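/**
 * Get a range of elements from a list.
 * @param key the key
 * @param start start index (inclusive)
 * @param end end index (inclusive); 0 to -1 returns all elements
 * @return the elements in the range, or null if the call fails
 */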

public List<Object> lGet(String key, long start, long end) {

try {

return redisTemplate.opsForList().range(key, start, end);

} catch (Exception e) {

e.printStackTrace();

return null;

}

}

/**
 * Get the length of a list.
 *
 * @param key the key
 * @return the list length, or 0 if the call fails
 */

public long lGetListSize(String key) {

try {

return redisTemplate.opsForList().size(key);

} catch (Exception e) {

e.printStackTrace();

return 0;

}

}

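/**
 * Get a list element by index.
 *
 * @param key the key
 * @param index the index; for index >= 0, 0 is the head, 1 the second element, and so on;
 *              for index < 0, -1 is the tail, -2 the second-to-last element, and so on
 */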

public Object lGetIndex(String key, long index) {

try {

return redisTemplate.opsForList().index(key, index);

} catch (Exception e) {

e.printStackTrace();

return null;

}

}

/**
 * Append a value to the tail of a list.
 *
 * @param key the key
 * @param value the value to append
 * @return true on success, false on failure
 */

public boolean lSet(String key, Object value) {

try {

redisTemplate.opsForList().rightPush(key, value);

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

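/**
 * Append a value to the tail of a list and set an expiry time on the key.
 * @param key the key
 * @param value the value to append
 * @param time time to live in seconds; no expiry is set if time is not greater than 0
 * @return true on success, false on failure
 */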

public boolean lSet(String key, Object value, long time) {

try {

redisTemplate.opsForList().rightPush(key, value);

if (time > 0)

expire(key, time);

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

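/**
 * Append all values of a Java list to the tail of a Redis list.
 *
 * @param key the key
 * @param value the values to append
 * @return true on success, false on failure
 */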

public boolean lSet(String key, List<Object> value) {

try {

redisTemplate.opsForList().rightPushAll(key, value);

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

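/**
 * Append all values of a Java list to the tail of a Redis list and set an expiry time on the key.
 *
 * @param key the key
 * @param value the values to append
 * @param time time to live in seconds; no expiry is set if time is not greater than 0
 * @return true on success, false on failure
 */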

public boolean lSet(String key, List<Object> value, long time) {

try {

redisTemplate.opsForList().rightPushAll(key, value);

if (time > 0)

expire(key, time);

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

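/**
 * Replace the list element at a given index.
 *
 * @param key the key
 * @param index the index of the element to replace
 * @param value the new value
 * @return true on success, false on failure (for example, when the index is out of range)
 */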

public boolean lUpdateIndex(String key, long index, Object value) {

try {

redisTemplate.opsForList().set(key, index, value);

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}

}

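/**
 * Remove occurrences of a value from a list.
 *
 * @param key the key
 * @param count how many occurrences to remove; a positive count removes from the head,
 *              a negative count from the tail, and 0 removes all occurrences
 * @param value the value to remove
 * @return the number of elements removed
 */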

public long lRemove(String key, long count, Object value) {

try {

Long remove = redisTemplate.opsForList().remove(key, count, value);

return remove;

} catch (Exception e) {

e.printStackTrace();

return 0;

}

}

}
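The index rules documented for `lGet` and `lGetIndex` (negative indexes count from the tail, and 0 to -1 selects everything) can be tried without a Redis server. The sketch below is a plain-Java emulation of how the Redis `LRANGE` command resolves its offsets. `ListRangeDemo` and `range` are hypothetical names for illustration only; they are not part of `RedisUtil` or Spring Data Redis.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical demo class: emulates LRANGE offset handling on an in-memory list.
class ListRangeDemo {

    // Returns the elements between start and end, both inclusive.
    // Negative offsets are counted from the end of the list (-1 is the last element).
    static <T> List<T> range(List<T> list, long start, long end) {
        int size = list.size();
        long s = start < 0 ? size + start : start;
        long e = end < 0 ? size + end : end;
        if (s < 0) {
            s = 0;               // a start before the head is clamped to the head
        }
        if (e >= size) {
            e = size - 1;        // an end past the tail is clamped to the tail
        }
        if (s > e || s >= size) {
            return new ArrayList<>();   // empty range
        }
        return new ArrayList<>(list.subList((int) s, (int) e + 1));
    }

    public static void main(String[] args) {
        List<String> letters = Arrays.asList("a", "b", "c", "d");
        System.out.println(range(letters, 0, -1));   // prints [a, b, c, d]
        System.out.println(range(letters, -2, -1));  // prints [c, d]
        System.out.println(range(letters, 1, 100));  // prints [b, c, d]
        System.out.println(range(letters, 5, 10));   // prints []
    }
}
```

Both ends of the range are inclusive, which is why `lGet(key, 0, -1)` returns the whole list rather than everything except the last element.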

7、Integrating Swagger

Import the dependencies

<!-- swagger: visit http://localhost:8080/swagger-ui.html -->

<dependency>
    <groupId>io.springfox</groupId>
    <artifactId>springfox-swagger-ui</artifactId>
    <version>2.9.2</version>
</dependency>

io.springfox

springfox-swagger2

2.9.2

io.swagger

swagger-annotations

io.swagger

swagger-models