Installing a Nacos cluster with Docker on Windows, persisting to MySQL, proxying through Nginx, and integrating Seata for distributed transactions

Network preparation

Create a Docker subnet for the containers:

docker network create --subnet 172.18.0.0/24 nacos

Network settings

| Container | IP | Host port | Container port |
|---|---|---|---|
| mysql | 172.18.0.2 | 3307 | 3306 |
| my-nacos1 | 172.18.0.3 | 8846 | 8846 |
| my-nacos2 | 172.18.0.4 | 8847 | 8847 |
| my-nacos3 | 172.18.0.5 | 8848 | 8848 |
| nginx | 172.18.0.6 | 1111 | 80 |
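Running docker network create a second time fails because the network name is already taken. The sketch below makes the step repeatable by checking with docker network inspect first. The function name ensure_network is made up for this example; the network name and subnet are the ones used above.

```shell
# Create the "nacos" network only if it does not exist yet.
# ensure_network is a hypothetical helper name, not a Docker command.
ensure_network() {
  if docker network inspect nacos >/dev/null 2>&1; then
    echo "network nacos already exists"
  else
    docker network create --subnet 172.18.0.0/24 nacos
  fi
}
```

Call it as ensure_network from Git Bash or any POSIX shell before starting the containers.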

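Creating MySQL

Start command

docker run -di \
--name=mysql \
-p 3307:3306 \
-v E:/docker-mysql/data/mysql/conf:/etc/mysql/conf.d \
-v E:/docker-mysql/data/mysql/data:/var/lib/mysql \
-v E:/docker-mysql/data/mysql/log:/var/log/mysql \
-v E:/docker-mysql/data/mysql-files:/var/lib/mysql-files \
-e MYSQL_ROOT_PASSWORD=abc123 \
--net nacos \
--ip 172.18.0.2 \
mysql:8.0.26

Parameters:

- `--name` sets the container name
- `-p` maps a host port to a container port; here host port 3307 maps to container port 3306
- `-v` mounts a host directory into the container
- `-e` sets an environment variable; here MYSQL_ROOT_PASSWORD is set to abc123
- `--net` selects the network to join
- `--ip` sets the IP address the container starts with
- `mysql:8.0.26` is the image to run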

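Navicat connection problem

Once MySQL is running, connecting with Navicat fails with error 2059 - authentication plugin 'caching_sha2_password'.

Solution

Newer MySQL versions (8 and above) use caching_sha2_password as the default authentication plugin for user accounts, and the Navicat version used here does not support it.

# Enter the mysql container
docker exec -it mysql bash

# Log in to MySQL (no space between -p and the password, otherwise abc123 is read as a database name)
mysql -u root -pabc123

# Switch the root accounts to the mysql_native_password plugin
ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY 'abc123';
ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY 'abc123';

Reconnect from Navicat and the error is gone.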
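The same fix can be applied without an interactive session by passing the statements to the mysql client through docker exec. This is only a sketch: fix_root_auth is a hypothetical helper name, and it assumes the container is called mysql and that both root accounts exist, as in the steps above.

```shell
# Switch both root accounts to mysql_native_password in one non-interactive call.
# $1 is the root password (abc123 in this article).
fix_root_auth() {
  password=$1
  docker exec mysql mysql -uroot "-p$password" -e "
    ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY '$password';
    ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY '$password';"
}
```

Usage: fix_root_auth abc123. Passing a password on the command line is acceptable for a local test setup like this one, but it does show up in the shell history.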

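Creating the nacos database

CREATE DATABASE nacos;

Find the SQL script that matches your Nacos version on the official site; version 1.4.1 is used here:

Release 1.4.1 (Jan 15, 2021) · alibaba/nacos (github.com)

Download the Windows package and unzip it, find nacos-mysql.sql under nacos/conf/, and run it in the nacos database.

MySQL is now ready!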

Deploying the Nacos cluster

Create three Nacos containers to form the cluster

# Configure the Nacos cluster

# Node 1
docker run -d \
-e PREFER_HOST_MODE=hostname \
-e MODE=cluster \
-e NACOS_APPLICATION_PORT=8846 \
-e NACOS_SERVERS="172.18.0.3:8846 172.18.0.4:8847 172.18.0.5:8848" \
-e SPRING_DATASOURCE_PLATFORM=mysql \
-e MYSQL_SERVICE_HOST=172.18.0.2 \
-e MYSQL_SERVICE_PORT=3306 \
-e MYSQL_SERVICE_USER=root \
-e MYSQL_SERVICE_PASSWORD=abc123 \
-e MYSQL_SERVICE_DB_NAME=nacos \
-e NACOS_SERVER_IP=172.18.0.3 \
--net nacos \
--ip 172.18.0.3 \
--name my-nacos1 \
-p 8846:8846 \
nacos/nacos-server:1.4.1

# Node 2
docker run -d \
-e PREFER_HOST_MODE=hostname \
-e MODE=cluster \
-e NACOS_APPLICATION_PORT=8847 \
-e NACOS_SERVERS="172.18.0.3:8846 172.18.0.4:8847 172.18.0.5:8848" \
-e SPRING_DATASOURCE_PLATFORM=mysql \
-e MYSQL_SERVICE_HOST=172.18.0.2 \
-e MYSQL_SERVICE_PORT=3306 \
-e MYSQL_SERVICE_USER=root \
-e MYSQL_SERVICE_PASSWORD=abc123 \
-e MYSQL_SERVICE_DB_NAME=nacos \
-e NACOS_SERVER_IP=172.18.0.4 \
--net nacos \
--ip 172.18.0.4 \
--name my-nacos2 \
-p 8847:8847 \
nacos/nacos-server:1.4.1

# Node 3
docker run -d \
-e PREFER_HOST_MODE=hostname \
-e MODE=cluster \
-e NACOS_APPLICATION_PORT=8848 \
-e NACOS_SERVERS="172.18.0.3:8846 172.18.0.4:8847 172.18.0.5:8848" \
-e SPRING_DATASOURCE_PLATFORM=mysql \
-e MYSQL_SERVICE_HOST=172.18.0.2 \
-e MYSQL_SERVICE_PORT=3306 \
-e MYSQL_SERVICE_USER=root \
-e MYSQL_SERVICE_PASSWORD=abc123 \
-e MYSQL_SERVICE_DB_NAME=nacos \
-e NACOS_SERVER_IP=172.18.0.5 \
--net nacos \
--ip 172.18.0.5 \
--name my-nacos3 \
-p 8848:8848 \
nacos/nacos-server:1.4.1

Parameters

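- `MODE` selects how Nacos runs: standalone for a single node, cluster for cluster mode
- `NACOS_APPLICATION_PORT` sets the port Nacos listens on inside its container
- `NACOS_SERVERS` lists the members of the cluster
- `SPRING_DATASOURCE_PLATFORM` selects the data source
- `MYSQL_SERVICE_HOST` sets the MySQL host
- `MYSQL_SERVICE_PORT` sets the MySQL port
- `MYSQL_SERVICE_USER` sets the MySQL user name
- `MYSQL_SERVICE_PASSWORD` sets the MySQL password
- `MYSQL_SERVICE_DB_NAME` sets the database Nacos connects to
- `NACOS_SERVER_IP` sets the IP address of this Nacos node
- `--net` selects the network
- `--ip` sets the container IP
- `-p` maps ports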
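The three commands above differ only in the node number, the port and the IP, so they can be derived from a single index. The following is a sketch under that assumption (ports 8846 to 8848, IPs 172.18.0.3 to 172.18.0.5); start_nacos is a hypothetical helper name, and every flag is copied from the commands above.

```shell
# Start Nacos node N (1, 2 or 3) with the same settings as the commands above.
start_nacos() {
  n=$1
  port=$((8845 + n))        # node 1 -> 8846, node 2 -> 8847, node 3 -> 8848
  ip="172.18.0.$((2 + n))"  # node 1 -> .3,   node 2 -> .4,   node 3 -> .5
  docker run -d \
    -e PREFER_HOST_MODE=hostname \
    -e MODE=cluster \
    -e NACOS_APPLICATION_PORT="$port" \
    -e NACOS_SERVERS="172.18.0.3:8846 172.18.0.4:8847 172.18.0.5:8848" \
    -e SPRING_DATASOURCE_PLATFORM=mysql \
    -e MYSQL_SERVICE_HOST=172.18.0.2 \
    -e MYSQL_SERVICE_PORT=3306 \
    -e MYSQL_SERVICE_USER=root \
    -e MYSQL_SERVICE_PASSWORD=abc123 \
    -e MYSQL_SERVICE_DB_NAME=nacos \
    -e NACOS_SERVER_IP="$ip" \
    --net nacos \
    --ip "$ip" \
    --name "my-nacos$n" \
    -p "$port:$port" \
    nacos/nacos-server:1.4.1
}
```

To start the whole cluster: for n in 1 2 3; do start_nacos "$n"; done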

启动后,查看每台机器的日志

docker logs -f my-nacos1可以看到nacos启动成功,并使用外部存储

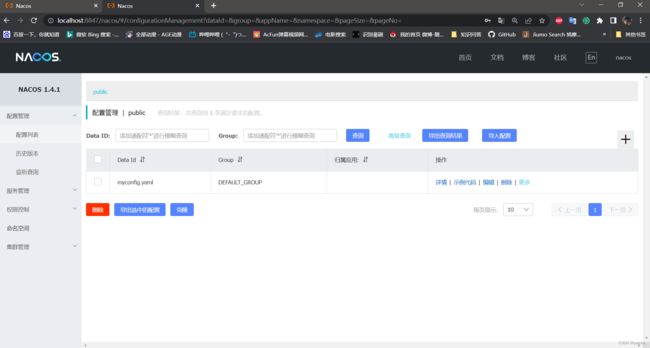

使用本地8847访问成功,同理8846,8848端口都可以成功访问

查看集群管理,三台机器都成功启动

至此,nacos集群搭建完成
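The reachability check on the three ports can also be done from the command line. A minimal sketch, assuming curl is available (Git Bash ships it) and that the console is served under /nacos/ on each mapped port; check_nacos_nodes is a made-up helper name.

```shell
# Report whether each mapped Nacos port answers an HTTP request.
check_nacos_nodes() {
  for port in 8846 8847 8848; do
    if curl -s -o /dev/null --max-time 5 "http://localhost:$port/nacos/"; then
      echo "port $port: reachable"
    else
      echo "port $port: NOT reachable"
    fi
  done
}
```

This only confirms that something answers on the port; the cluster management page remains the place to confirm that the nodes see each other.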

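Nginx proxy

Preparing the configuration files

First create a plain nginx container:

docker run --name mynginx -d nginx

Copy the relevant files to the host, edit them there, and then hand them back to the container (here by mounting them):

# Copy the static files out of the mynginx container
docker cp mynginx:/usr/share/nginx/html E:/nginx/html

# Copy the main configuration file out of the mynginx container
docker cp mynginx:/etc/nginx/nginx.conf E:/nginx/conf/nginx.conf

# Copy the default site configuration out of the mynginx container
docker cp mynginx:/etc/nginx/conf.d/default.conf E:/nginx/conf.d/default.conf

Editing the configuration file

Edit /e/nginx/conf.d/default.conf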

upstream nacosserver {

server 172.18.0.3:8846;

server 172.18.0.4:8847;

server 172.18.0.5:8848;

}

server {

listen 80;

listen [::]:80;

server_name localhost;

#access_log /var/log/nginx/host.access.log main;

location / {

#root /usr/share/nginx/html;

#index index.html index.htm;

proxy_pass http://nacosserver;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

Stop and remove the mynginx container created a moment ago.
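The stop-and-remove step has no command in the text; with the standard Docker CLI it could look like the sketch below. remove_container is a hypothetical helper name.

```shell
# Stop a container, then delete it; prints a confirmation only if both steps succeed.
remove_container() {
  docker stop "$1" >/dev/null && docker rm "$1" >/dev/null && echo "removed $1"
}
```

Usage: remove_container mynginx. The name nginx is then free for the container created in the next step.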

Recreating nginx

Create the nginx container that acts as the proxy:

docker run -it -d \
--name nginx \
--restart=on-failure:5 \
-p 1111:80 \
-v E:/nginx/html:/usr/share/nginx/html \
-v E:/nginx/conf/nginx.conf:/etc/nginx/nginx.conf \
-v E:/nginx/conf.d/default.conf:/etc/nginx/conf.d/default.conf \
-v E:/nginx/log:/var/log/nginx \
--net nacos \
--ip 172.18.0.6 \
--privileged nginx:1.21.1

Result

Nacos is now reachable through local port 1111.

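Registering a service with Nacos

I followed the 尚硅谷 Spring Cloud course, so here is the YAML configuration from it:

server:
  port: 9002

spring:
  application:
    name: nacos-payment-provider
  cloud:
    nacos:
      discovery:
        server-addr: localhost:1111
        # server-addr: localhost:8848 # Nacos address when connecting to a node directly

management:
  endpoints:
    web:
      exposure:
        include: '*'

The project starts without errors.

The Nacos web console shows the service as registered.

That completes the Nacos cluster on Docker with MySQL persistence and the Nginx proxy.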
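Besides the web console, registration can be checked through the Nacos 1.x Open API, which offers an instance list query at /nacos/v1/ns/instance/list. A small sketch, assuming authentication is left at its default (disabled) and using the Nginx address from above; list_instances is a hypothetical helper name.

```shell
# Query the registered instances of a service.
# $1 = Nacos address (host:port), $2 = service name
list_instances() {
  curl -s "http://$1/nacos/v1/ns/instance/list?serviceName=$2"
}
```

Usage: list_instances localhost:1111 nacos-payment-provider. The response is JSON; a non-empty hosts array means the service is registered.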

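Deploying Seata

Database preparation

Create a seata database in MySQL, then find the SQL script in the Seata source code and run it. This is the script for version 1.4.2: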

-- -------------------------------- The script used when storeMode is 'db' --------------------------------

-- the table to store GlobalSession data

CREATE TABLE IF NOT EXISTS `global_table`

(

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`status` TINYINT NOT NULL,

`application_id` VARCHAR(32),

`transaction_service_group` VARCHAR(32),

`transaction_name` VARCHAR(128),

`timeout` INT,

`begin_time` BIGINT,

`application_data` VARCHAR(2000),

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`xid`),

KEY `idx_gmt_modified_status` (`gmt_modified`, `status`),

KEY `idx_transaction_id` (`transaction_id`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8;

-- the table to store BranchSession data

CREATE TABLE IF NOT EXISTS `branch_table`

(

`branch_id` BIGINT NOT NULL,

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`resource_group_id` VARCHAR(32),

`resource_id` VARCHAR(256),

`branch_type` VARCHAR(8),

`status` TINYINT,

`client_id` VARCHAR(64),

`application_data` VARCHAR(2000),

`gmt_create` DATETIME(6),

`gmt_modified` DATETIME(6),

PRIMARY KEY (`branch_id`),

KEY `idx_xid` (`xid`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8;

-- the table to store lock data

CREATE TABLE IF NOT EXISTS `lock_table`

(

`row_key` VARCHAR(128) NOT NULL,

`xid` VARCHAR(128),

`transaction_id` BIGINT,

`branch_id` BIGINT NOT NULL,

`resource_id` VARCHAR(256),

`table_name` VARCHAR(32),

`pk` VARCHAR(36),

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`row_key`),

KEY `idx_branch_id` (`branch_id`)

) ENGINE = InnoDB

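DEFAULT CHARSET = utf8;

Modify config.txt in the source tree. The trailing # notes on some lines below are annotations for this article; leave them out of the real file, because everything after the = is taken as the value.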

transport.type=TCP

transport.server=NIO

transport.heartbeat=true

transport.enableClientBatchSendRequest=false

transport.threadFactory.bossThreadPrefix=NettyBoss

transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker

transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler

transport.threadFactory.shareBossWorker=false

transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector

transport.threadFactory.clientSelectorThreadSize=1

transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread

transport.threadFactory.bossThreadSize=1

transport.threadFactory.workerThreadSize=default

transport.shutdown.wait=3

service.vgroupMapping.fsp_tx_group=default #the group name after vgroupMapping must match tx-service-group in the service's YAML file

service.default.grouplist=172.24.68.53:8091 #not yet clear whether this entry is needed

service.enableDegrade=false

service.disableGlobalTransaction=false

client.rm.asyncCommitBufferLimit=10000

client.rm.lock.retryInterval=10

client.rm.lock.retryTimes=30

client.rm.lock.retryPolicyBranchRollbackOnConflict=true

client.rm.reportRetryCount=5

client.rm.tableMetaCheckEnable=false

client.rm.tableMetaCheckerInterval=60000

client.rm.sqlParserType=druid

client.rm.reportSuccessEnable=false

client.rm.sagaBranchRegisterEnable=false

client.tm.commitRetryCount=5

client.tm.rollbackRetryCount=5

client.tm.defaultGlobalTransactionTimeout=60000

client.tm.degradeCheck=false

client.tm.degradeCheckAllowTimes=10

client.tm.degradeCheckPeriod=2000

store.mode=db #changed: use database storage

store.file.dir=file_store/data

store.file.maxBranchSessionSize=16384

store.file.maxGlobalSessionSize=512

store.file.fileWriteBufferCacheSize=16384

store.file.flushDiskMode=async

store.file.sessionReloadReadSize=100

store.db.datasource=druid #use druid as the data source

store.db.dbType=mysql #database type

store.db.driverClassName=com.mysql.cj.jdbc.Driver #JDBC driver; this is the MySQL 8.0 driver class

store.db.url=jdbc:mysql://YOUR_HOST_IP:3307/seata?rewriteBatchedStatements=true&serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false #JDBC URL; MySQL runs in Docker, and this uses the host machine's IP and host port, not the container's IP and port

store.db.user=root #database user

store.db.password=abc123 #database password

store.db.minConn=5

store.db.maxConn=30

store.db.globalTable=global_table

store.db.branchTable=branch_table

store.db.queryLimit=100

store.db.lockTable=lock_table

store.db.maxWait=5000

store.redis.mode=single

store.redis.single.host=127.0.0.1

store.redis.single.port=6379

store.redis.maxConn=10

store.redis.minConn=1

store.redis.maxTotal=100

store.redis.database=0

store.redis.queryLimit=100

server.recovery.committingRetryPeriod=1000

server.recovery.asynCommittingRetryPeriod=1000

server.recovery.rollbackingRetryPeriod=1000

server.recovery.timeoutRetryPeriod=1000

server.maxCommitRetryTimeout=-1

server.maxRollbackRetryTimeout=-1

server.rollbackRetryTimeoutUnlockEnable=false

client.undo.dataValidation=true

client.undo.logSerialization=jackson

client.undo.onlyCareUpdateColumns=true

server.undo.logSaveDays=7

server.undo.logDeletePeriod=86400000

client.undo.logTable=undo_log

client.undo.compress.enable=true

client.undo.compress.type=zip

client.undo.compress.threshold=64k

log.exceptionRate=100

transport.serialization=seata

transport.compressor=none

metrics.enabled=false

metrics.registryType=compact

metrics.exporterList=prometheus

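metrics.exporterPrometheusPort=9898

Upload the configuration to Nacos with:

# -h is the host machine's IP; 1111 is the local port on which Nginx proxies Nacos
sh nacos-config.sh -h YOUR_HOST_IP -p 1111

Next, modify registry.conf and file.conf in the source tree.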
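A note on the upload step: as far as I can tell, nacos-config.sh reads config.txt line by line, strips whitespace, splits each line at the first = and publishes the key as its own configuration item with the rest as its content. That is why an inline # note would end up inside the value. The sketch below mimics that split and additionally drops a trailing # note; parse_config_line is a hypothetical helper, not part of Seata.

```shell
# Split one "key=value #note" line into key and value, separated by a tab.
parse_config_line() {
  line=${1%%#*}                                         # drop a trailing "#..." note
  line=$(printf '%s' "$line" | sed 's/[[:space:]]//g')  # strip all whitespace
  key=${line%%=*}                                       # text before the first "="
  value=${line#*=}                                      # text after the first "="
  printf '%s\t%s\n' "$key" "$value"
}
```

For example, parse_config_line 'store.mode=db #note' yields the key store.mode and the value db. Values that contain further = signs, such as the JDBC URL, stay intact because only the first = is used as the separator.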

registry.conf

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos" # use Nacos as the registry

nacos {

application = "seata-server"

serverAddr = "YOUR_HOST_IP:1111" # host machine IP and the Nginx port

group = "SEATA_GROUP"

namespace = ""

cluster = "default"

username = "nacos"

password = "nacos"

}

eureka {

serviceUrl = "http://localhost:8761/eureka"

application = "default"

weight = "1"

}

redis {

serverAddr = "localhost:6379"

db = 0

password = ""

cluster = "default"

timeout = 0

}

zk {

cluster = "default"

serverAddr = "127.0.0.1:2181"

sessionTimeout = 6000

connectTimeout = 2000

username = ""

password = ""

}

consul {

cluster = "default"

serverAddr = "127.0.0.1:8500"

aclToken = ""

}

etcd3 {

cluster = "default"

serverAddr = "http://localhost:2379"

}

sofa {

serverAddr = "127.0.0.1:9603"

application = "default"

region = "DEFAULT_ZONE"

datacenter = "DefaultDataCenter"

cluster = "default"

group = "SEATA_GROUP"

addressWaitTime = "3000"

}

file {

name = "file.conf"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "nacos" # use Nacos as the config center

nacos {

serverAddr = "YOUR_HOST_IP:1111" # host machine IP and the Nginx port

namespace = ""

group = "SEATA_GROUP"

username = "nacos"

password = "nacos"

dataId = "seataServer.properties"

}

consul {

serverAddr = "127.0.0.1:8500"

aclToken = ""

}

apollo {

appId = "seata-server"

## apolloConfigService will cover apolloMeta

apolloMeta = "http://192.168.1.204:8801"

apolloConfigService = "http://192.168.1.204:8080"

namespace = "application"

apolloAccesskeySecret = ""

cluster = "seata"

}

zk {

serverAddr = "127.0.0.1:2181"

sessionTimeout = 6000

connectTimeout = 2000

username = ""

password = ""

nodePath = "/seata/seata.properties"

}

etcd3 {

serverAddr = "http://localhost:2379"

}

file {

name = "file.conf"

}

}

file.conf

## transaction log store, only used in seata-server

store {

## store mode: file、db、redis

mode = "db" # use database storage

## rsa decryption public key

publicKey = ""

## file store property

file {

## store location dir

dir = "sessionStore"

# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions

maxBranchSessionSize = 16384

# globe session size , if exceeded throws exceptions

maxGlobalSessionSize = 512

# file buffer size , if exceeded allocate new buffer

fileWriteBufferCacheSize = 16384

# when recover batch read size

sessionReloadReadSize = 100

# async, sync

flushDiskMode = async

}

## database store property

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.

datasource = "druid"

## mysql/oracle/postgresql/h2/oceanbase etc.

dbType = "mysql"

driverClassName = "com.mysql.cj.jdbc.Driver" # JDBC driver class; this is the one for MySQL 8.0

## if using mysql to store the data, recommend add rewriteBatchedStatements=true in jdbc connection param

url = "jdbc:mysql://YOUR_HOST_IP:3307/seata?rewriteBatchedStatements=true&serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false"

user = "root"

password = "abc123"

minConn = 5

maxConn = 100

globalTable = "global_table"

branchTable = "branch_table"

lockTable = "lock_table"

queryLimit = 100

maxWait = 5000

}

## redis store property

redis {

## redis mode: single、sentinel

mode = "single"

## single mode property

single {

host = "127.0.0.1"

port = "6379"

}

## sentinel mode property

sentinel {

masterName = ""

## such as "10.28.235.65:26379,10.28.235.65:26380,10.28.235.65:26381"

sentinelHosts = ""

}

password = ""

database = "0"

minConn = 1

maxConn = 10

maxTotal = 100

queryLimit = 100

}

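}

Deploy seata-server with:

# SEATA_IP is the key setting: it must be the host machine's IP, otherwise the services cannot find seata-server later
# -v mounts the modified configuration files into the container
docker run -d \
--restart always \
--name seata-server \
-p 8091:8091 \
-e SEATA_IP=YOUR_HOST_IP \
-v E:/dtx/seata/resources:/seata-server/resources \
--net nacos \
--ip 172.18.0.8 \
seataio/seata-server:1.4.2

In the Nacos cluster console, the seata service shows up as started.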

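Running the services and integrating Seata into the business code

YAML configuration of a business service

server:
  port: 2001

spring:
  application:
    name: seata-order-service
  cloud:
    alibaba:
      seata:
        # the custom transaction group name must match the one configured for seata-server
        tx-service-group: fsp_tx_group
    nacos:
      discovery:
        server-addr: localhost:1111
  datasource:
    driver-class-name: com.mysql.cj.jdbc.Driver
    # Important: MySQL runs in Docker, so use the host machine's IP and host port here;
    # with the container's IP and port the service cannot connect to the database
    url: jdbc:mysql://YOUR_HOST_IP:3307/seata_order?rewriteBatchedStatements=true&serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false
    username: root
    password: abc123

feign:
  hystrix:
    enabled: false

logging:
  level:
    io:
      seata: info

mybatis:
  mapperLocations: classpath:mapper/*.xml

Finally, put the registry.conf written earlier into the resources directory of every business service.

To use Seata, add the @GlobalTransactional annotation to the service implementation class of the business; when an exception occurs, the changes in all participating databases are rolled back.

Start every service. If there are no errors, the Nacos cluster shows each of them as registered.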

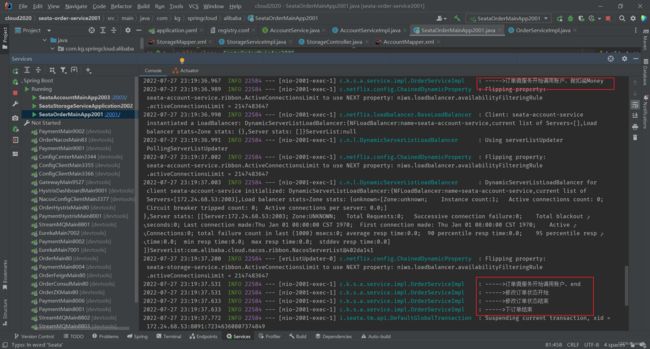

A normal call works as expected:

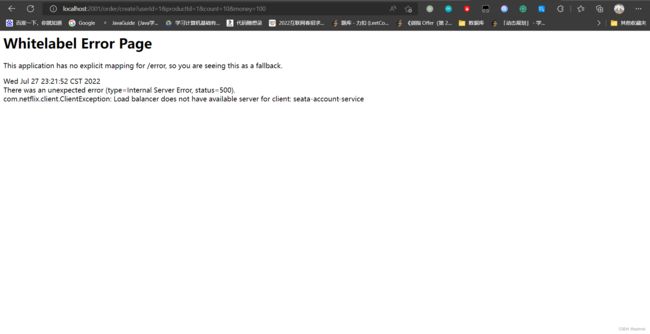

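Transaction rollback: add a timeout to AccountServiceImpl and test again. First, the call fails.

Then check the seata_order database: there is no order record with status 0, so the transaction was rolled back and the test passes.

With that, Windows + Docker + MySQL + Nacos + Nginx + Seata all work!

Afterword

Why Docker? My machine has only 16 GB of memory. If everything ran in virtual machines, the Nacos cluster, Nginx, Sentinel and the rest could not all be up at the same time, and Docker solves that nicely. Docker will also be needed at work later, so I chose to deploy everything with it here to get familiar with the whole workflow and build up some skill.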