Setting Up Nacos + Seata Distributed Transactions with Docker

- 1. Introduction

- 2. Environment setup

- 2.1 Download, install, and start Nacos

- 2.2 Download, install, and start Seata

- 2.2.1 Download the latest Seata Server from Seata Release

- 2.2.2 Edit conf/registry.conf

- 2.2.3 Edit conf/nacos-config.txt

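1. Introduction

This article shows how to integrate Spring Boot 2.0.4, Nacos 1.2.1, and the Windows build of Seata 0.9.0. Nacos serves as the registry and configuration center, and data is stored in MySQL and accessed through MyBatis.

2. Environment setup

2.1 Download, install, and start Nacos

Pull the Nacos Docker image: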

docker pull nacos/nacos-server:1.2.1

Once the pull completes, list the local images to confirm that it is there:

docker images

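If nacos/nacos-server with tag 1.2.1 appears in the list, the image was pulled successfully. Next, start a container from it in standalone mode, mapping host port 18001 to the container's port 8848: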

docker run --env MODE=standalone --name nacos -d -p 18001:8848 nacos/nacos-server:1.2.1

After it starts, check that the container is running:

docker ps

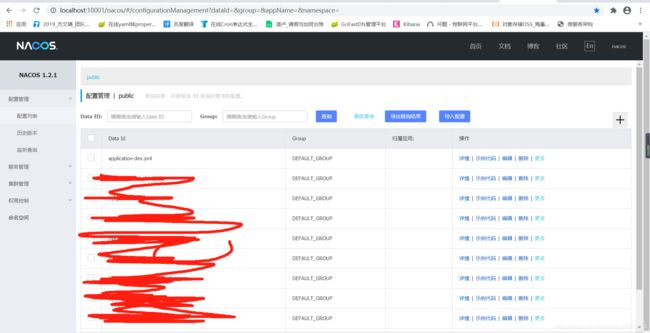
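
To check only the Nacos container rather than scanning the full docker ps output, the listing can be filtered by name. The sketch below wraps that in two small functions. The function names are made up for illustration, and the container name nacos is the one passed to --name in the docker run command above.

```shell
# Hypothetical helper: show name, status, and port mapping of the container
# started above with --name nacos. No container row is listed if it is not running.
nacos_container_status() {
  docker ps --filter "name=nacos" \
    --format 'table {{.Names}}\t{{.Status}}\t{{.Ports}}'
}

# Hypothetical helper: print the last lines of the container log,
# which is where startup errors would normally show up.
nacos_container_logs() {
  docker logs --tail 50 nacos
}
```

After sourcing the snippet, run nacos_container_status. The Ports column should include 18001->8848/tcp if the mapping took effect.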

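Because of the port mapping, the console is available at http://localhost:18001/nacos (replace localhost with the host's IP address if needed). The default username and password are both nacos.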
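
The console URL can also be probed from the command line. This is a minimal sketch that assumes curl is installed and that the host and port match the 18001:8848 mapping used above; the variable names and the check_nacos function are illustrative only.

```shell
# Assumed values: they follow the -p 18001:8848 mapping from the docker run command.
NACOS_HOST="${NACOS_HOST:-localhost}"
NACOS_PORT="${NACOS_PORT:-18001}"
NACOS_URL="http://${NACOS_HOST}:${NACOS_PORT}/nacos/"

# Hypothetical helper: print the HTTP status code returned by the console URL.
# curl prints 000 when the connection cannot be established.
check_nacos() {
  curl -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$NACOS_URL"
}
```

Running check_nacos after sourcing the snippet should print 200 once the container has finished starting. Nacos can take a little while to come up, so an early 000 is not necessarily a failure.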

Nacos is now installed and running. The next step is to install the Seata server.

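2.2 Download, install, and start Seata

2.2.1 Download the latest Seata Server from Seata Release

Download the Seata server package from the Seata Release page (this article uses 0.9.0).

2.2.2 Edit conf/registry.conf

Seata supports several backends for the registry (file, nacos, eureka, redis, zk, consul, etcd3, sofa) and for the configuration center (file, nacos, apollo, zk, consul, etcd3). This example uses nacos as the registry.

Set type to "nacos" in the registry block. The config block keeps type = "file", so the server still reads its settings from the local file.conf: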

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "nacos"

nacos {

serverAddr = "127.0.0.1:18001"

namespace = "public"

cluster = "default"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "file"

file {

name = "file.conf"

}

}

serverAddr = "127.0.0.1:18001": the address of the Nacos server

namespace = "public": the Nacos namespace; public is the default

cluster = "default": the cluster name, set to default
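
Once the Seata server has been started with this registry.conf, its registration can be checked through the Nacos instance-list HTTP endpoint. The sketch below is hedged: the service name serverAddr is an assumption about what Seata 0.9.0 registers under, so confirm the actual name in the Nacos console's service list. The variable and function names are illustrative.

```shell
# Nacos address as configured in registry.conf above.
NACOS_ADDR="${NACOS_ADDR:-127.0.0.1:18001}"

# ASSUMPTION: the service name the Seata server registers under.
# Check the service list in the Nacos console and adjust if it differs.
SEATA_SERVICE_NAME="${SEATA_SERVICE_NAME:-serverAddr}"

INSTANCE_LIST_URL="http://${NACOS_ADDR}/nacos/v1/ns/instance/list?serviceName=${SEATA_SERVICE_NAME}"

# Hypothetical helper: print the JSON instance list for the Seata service.
list_seata_instances() {
  curl -s --max-time 5 "$INSTANCE_LIST_URL"
}
```

If the server registered correctly, the JSON returned by list_seata_instances should contain a hosts entry with the Seata server's IP address and port.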

2.2.3 Edit conf/nacos-config.txt

transport {

# tcp udt unix-domain-socket

type = "TCP"

#NIO NATIVE

server = "NIO"

#enable heartbeat

heartbeat = true

#thread factory for netty

thread-factory {

boss-thread-prefix = "NettyBoss"

worker-thread-prefix = "NettyServerNIOWorker"

server-executor-thread-prefix = "NettyServerBizHandler"

share-boss-worker = false

client-selector-thread-prefix = "NettyClientSelector"

client-selector-thread-size = 1

client-worker-thread-prefix = "NettyClientWorkerThread"

# netty boss thread size,will not be used for UDT

boss-thread-size = 1

#auto default pin or 8

worker-thread-size = 8

}

shutdown {

# when destroy server, wait seconds

wait = 3

}

serialization = "seata"

compressor = "none"

}

service {

#vgroup->rgroup

vgroup_mapping.my_test_tx_group = "seata-service"

#only support single node

default.grouplist = "127.0.0.1:8091"

#degrade current not support

enableDegrade = false

#disable

disable = false

#unit ms,s,m,h,d represents milliseconds, seconds, minutes, hours, days, default permanent

max.commit.retry.timeout = "-1"

max.rollback.retry.timeout = "-1"

}

client {

async.commit.buffer.limit = 10000

lock {

retry.internal = 10

retry.times = 30

}

report.retry.count = 5

tm.commit.retry.count = 1

tm.rollback.retry.count = 1

}

## transaction log store

store {

## store mode: file、db

mode = "db"

## file store

file {

dir = "sessionStore"

# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions

max-branch-session-size = 16384

# globe session size , if exceeded throws exceptions

max-global-session-size = 512

# file buffer size , if exceeded allocate new buffer

file-write-buffer-cache-size = 16384

# when recover batch read size

session.reload.read_size = 100

# async, sync

flush-disk-mode = async

}

## database store

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.

datasource = "dbcp"

## mysql/oracle/h2/oceanbase etc.

db-type = "mysql"

driver-class-name = "com.mysql.jdbc.Driver"

url = "jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false"

user = "root"

password = "123456"

min-conn = 1

max-conn = 3

global.table = "global_table"

branch.table = "branch_table"

lock-table = "lock_table"

query-limit = 100

}

}

lock {

## the lock store mode: local、remote

mode = "remote"

local {

## store locks in user's database

}

remote {

## store locks in the seata's server

}

}

recovery {

#schedule committing retry period in milliseconds

committing-retry-period = 1000

#schedule asyn committing retry period in milliseconds

asyn-committing-retry-period = 1000

#schedule rollbacking retry period in milliseconds

rollbacking-retry-period = 1000

#schedule timeout retry period in milliseconds

timeout-retry-period = 1000

}

transaction {

undo.data.validation = true

undo.log.serialization = "jackson"

undo.log.save.days = 7

#schedule delete expired undo_log in milliseconds

undo.log.delete.period = 86400000

undo.log.table = "undo_log"

}

## metrics settings

metrics {

enabled = false

registry-type = "compact"

# multi exporters use comma divided

exporter-list = "prometheus"

exporter-prometheus-port = 9898

}

support {

## spring

spring {

# auto proxy the DataSource bean

datasource.autoproxy = false

}

}

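In vgroup_mapping.my_test_tx_group = "seata-service", the value "seata-service" is the transaction group name that the other microservices use when they register with Seata. Each microservice sets the same name in its own configuration: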

spring:
  cloud:
    alibaba:
      seata:
        # Custom transaction group name; must match the one configured in seata-server
        tx-service-group: seata-service
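
To avoid a mismatch between the two sides, the value can be read back from the server-side config file and compared with tx-service-group. This is a minimal sketch that handles the quoted key = "value" layout shown above; the function name vgroup_mapping_value is made up for illustration, and the file path is whichever config file was actually edited.

```shell
# Hypothetical helper: print the value of vgroup_mapping.<group> from a
# Seata config file that uses the key = "value" layout shown above.
#   $1 = path to the config file
#   $2 = group key, e.g. my_test_tx_group
vgroup_mapping_value() {
  sed -n "s/^[[:space:]]*vgroup_mapping\.${2}[[:space:]]*=[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$1"
}
```

For example, vgroup_mapping_value conf/file.conf my_test_tx_group (path assumed) should print the same string that the microservice uses for tx-service-group.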

The database URL, user, and password are configured as follows; change them to match your environment:

url = "jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false"

user = "root"

password = "123456"
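
Before starting the server in db mode, it can help to confirm that these connection settings work. The sketch below splits the JDBC URL into host, port, and database name and then runs a trivial query. It assumes the mysql command-line client is installed; the variable names and the check_seata_db function are illustrative.

```shell
# JDBC URL copied from the db block above.
JDBC_URL='jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&characterEncoding=utf-8&allowMultiQueries=true&useSSL=false'

# Split the URL into host, port, and database name with POSIX parameter expansion.
rest="${JDBC_URL#jdbc:mysql://}"   # 127.0.0.1:3306/seata?useUnicode=...
hostport="${rest%%/*}"             # 127.0.0.1:3306
DB_HOST="${hostport%%:*}"          # 127.0.0.1
DB_PORT="${hostport##*:}"          # 3306
db_and_query="${rest#*/}"          # seata?useUnicode=...
DB_NAME="${db_and_query%%\?*}"     # seata

# Hypothetical helper: run a trivial query against the Seata database.
# -p makes the client prompt for the password instead of taking it on the command line.
check_seata_db() {
  mysql -h "$DB_HOST" -P "$DB_PORT" -u root -p -e 'SELECT 1;' "$DB_NAME"
}
```

If check_seata_db reports an unknown database, the seata schema has not been created yet.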

In db mode the server needs three tables. The SQL script that creates them is at seata\conf\db_store.sql.

Structure of global_table:

CREATE TABLE `global_table` (

`xid` varchar(128) NOT NULL,

`transaction_id` bigint(20) DEFAULT NULL,

`status` tinyint(4) NOT NULL,

`application_id` varchar(64) DEFAULT NULL,

`transaction_service_group` varchar(64) DEFAULT NULL,

`transaction_name` varchar(64) DEFAULT NULL,

`timeout` int(11) DEFAULT NULL,

`begin_time` bigint(20) DEFAULT NULL,

`application_data` varchar(2000) DEFAULT NULL,

`gmt_create` datetime DEFAULT NULL,

`gmt_modified` datetime DEFAULT NULL,

PRIMARY KEY (`xid`),

KEY `idx_gmt_modified_status` (`gmt_modified`,`status`),

KEY `idx_transaction_id` (`transaction_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

Structure of branch_table:

CREATE TABLE `branch_table` (

`branch_id` bigint(20) NOT NULL,

`xid` varchar(128) NOT NULL,

`transaction_id` bigint(20) DEFAULT NULL,

`resource_group_id` varchar(32) DEFAULT NULL,

`resource_id` varchar(256) DEFAULT NULL,

`lock_key` varchar(128) DEFAULT NULL,

`branch_type` varchar(8) DEFAULT NULL,

`status` tinyint(4) DEFAULT NULL,

`client_id` varchar(64) DEFAULT NULL,

`application_data` varchar(2000) DEFAULT NULL,

`gmt_create` datetime DEFAULT NULL,

`gmt_modified` datetime DEFAULT NULL,

PRIMARY KEY (`branch_id`),

KEY `idx_xid` (`xid`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

Structure of lock_table:

create table `lock_table` (

`row_key` varchar(128) not null,

`xid` varchar(96),

`transaction_id` long ,

`branch_id` long,

`resource_id` varchar(256) ,

`table_name` varchar(32) ,

`pk` varchar(32) ,

`gmt_create` datetime ,

`gmt_modified` datetime,

primary key(`row_key`)

);
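
The three tables above can be created by importing the script with the mysql command-line client. This is a sketch under stated assumptions: SEATA_HOME is a hypothetical variable pointing at the unpacked Seata directory, the database is named seata as in the JDBC URL, and the function names are illustrative. Review the script before re-running it against an existing database, since it may drop and recreate the tables.

```shell
# ASSUMPTION: directory where the Seata server package was unpacked.
SEATA_HOME="${SEATA_HOME:-./seata}"
DB_STORE_SQL="${SEATA_HOME}/conf/db_store.sql"
SEATA_DB="${SEATA_DB:-seata}"

# Hypothetical helper: create the database if needed, then import the script.
# Each mysql call prompts for the root password.
init_seata_db() {
  mysql -h 127.0.0.1 -P 3306 -u root -p \
    -e "CREATE DATABASE IF NOT EXISTS \`${SEATA_DB}\` DEFAULT CHARACTER SET utf8mb4;" &&
  mysql -h 127.0.0.1 -P 3306 -u root -p "$SEATA_DB" < "$DB_STORE_SQL"
}

# Hypothetical helper: list the tables to confirm the import.
list_seata_tables() {
  mysql -h 127.0.0.1 -P 3306 -u root -p -e 'SHOW TABLES;' "$SEATA_DB"
}
```

After init_seata_db finishes, list_seata_tables should show global_table, branch_table, and lock_table.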