A chatbot or FAQ backed by a knowledge base

Background

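Recently I wanted to build a chatbot or FAQ project on top of my own knowledge base. If we are allowed to use ChatGPT (GPT-3.5 or a later model) at work in the future, we could also combine GPT's strong language understanding with the business documents retrieved for a user's question, and use both to answer users' business questions.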

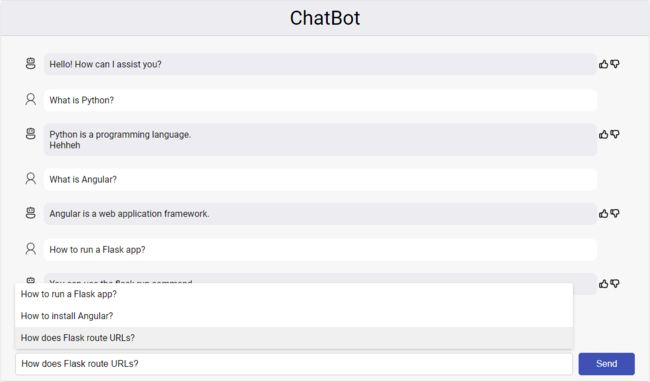

Chatbot UI

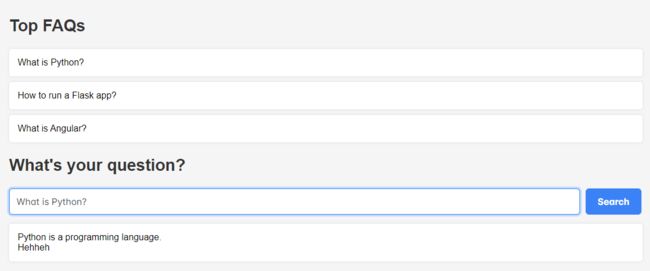

FAQ UI

Backend implementation

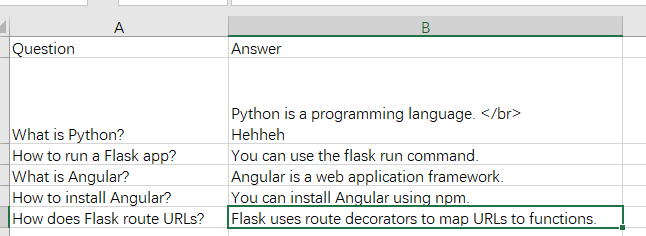

1. Build a simple Excel-based knowledge base with a Question column and an Answer column.

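2. Use knowlege_base_service.py to load every question in that knowledge base. The Excel file is read once at startup and re-read only when require_to_reload is set to True.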

```python
import pandas as pd

KNOWLEDGE_BASE_PATH = "./data/knowledge_base.xlsx"

# Loaded once when the module is imported
knowledge_base = pd.read_excel(KNOWLEDGE_BASE_PATH)
# Set to True (e.g. after editing the Excel file) to re-read it on the next access
require_to_reload = False


def get_all_questions():
    knowledge_base = get_knowlege_base()
    return knowledge_base["Question"].tolist()


def get_knowlege_base():
    global require_to_reload, knowledge_base
    if require_to_reload:
        knowledge_base = pd.read_excel(KNOWLEDGE_BASE_PATH)
        require_to_reload = False
    return knowledge_base
```

3. Create a sentence-similarity model that compares the user's question with the questions in our knowledge base.
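All three models below turn sentences into vectors and rank the knowledge-base questions by cosine similarity to the user's question, which is what scikit-learn's `cosine_similarity` computes row by row. A dependency-free sketch of the formula, for intuition only (the vectors are made up):

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector (e.g. no known words) as "no similarity"
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors, made up for illustration
query = [1.0, 1.0, 0.0]
print(round(cosine_similarity(query, [1.0, 1.0, 0.0]), 4))  # 1.0 (same direction)
print(round(cosine_similarity(query, [2.0, 2.0, 0.0]), 4))  # 1.0 (length does not matter)
print(round(cosine_similarity(query, [0.0, 0.0, 1.0]), 4))  # 0.0 (orthogonal)
```

Because only the angle matters, a short question and a long one that use the same words in the same proportions still score close to 1, which is why a fixed threshold such as 0.7 is usable across questions of different lengths.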

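Base class:

```python
class BaseSentenceSimilarityModel:
    """Interface shared by all the sentence-similarity models below."""

    def calculate_sentence_similarity(self, source_sentence, sentences_to_compare):
        # Should return one {'sentence': ..., 'score': ...} dict per compared sentence
        raise NotImplementedError("to be overridden by subclass")

    def find_most_similar_question(self, source_sentence, sentences_to_compare):
        # Should return the best-matching sentence, or '' if none is similar enough
        raise NotImplementedError("to be overridden by subclass")
```

Model 1: TF-IDF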

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity


class TFIDFModel(BaseSentenceSimilarityModel):

    def calculate_sentence_similarity(self, source_sentence, sentences_to_compare):
        # Put the user's question first so that row 0 of the matrix is the query
        sentences = [source_sentence] + sentences_to_compare

        # Fit TF-IDF on the query plus all knowledge-base questions
        vectorizer = TfidfVectorizer()
        tfidf_matrix = vectorizer.fit_transform(sentences)

        # Cosine similarity between the query (row 0) and every other row
        scores = cosine_similarity(tfidf_matrix[0], tfidf_matrix[1:]).flatten()

        return [
            {'sentence': sentence, 'score': round(float(score), 4)}
            for sentence, score in zip(sentences_to_compare, scores)
        ]

    def find_most_similar_question(self, source_sentence, sentences_to_compare):
        results = self.calculate_sentence_similarity(source_sentence, sentences_to_compare)
        most_similar_question = ''
        best_score = 0
        for result in results:
            # Keep the highest-scoring question, but only if it scores above 0.7
            if result['score'] > best_score and result['score'] > 0.7:
                best_score = result['score']
                most_similar_question = result['sentence']
        return most_similar_question
```
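TfidfVectorizer does the heavy lifting in TFIDFModel. To make its scores less of a black box, here is a dependency-free sketch of what it computes with its default settings as I understand them from the scikit-learn documentation: lowercasing, tokens of two or more word characters, smoothed idf ln((1 + n) / (1 + df)) + 1, and L2-normalized rows. The function names and sample questions are hypothetical:

```python
import math
import re
from collections import Counter

# Mirrors TfidfVectorizer's default token_pattern: words of 2+ word characters
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def tokenize(text: str) -> list[str]:
    return TOKEN_PATTERN.findall(text.lower())


def tfidf_vectors(sentences: list[str]) -> list[dict[str, float]]:
    """Sparse TF-IDF vectors (term -> weight) with smoothed idf and L2 normalization."""
    docs = [Counter(tokenize(s)) for s in sentences]
    n = len(docs)
    df = Counter(term for doc in docs for term in doc)  # number of docs containing each term
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    vectors = []
    for doc in docs:
        vec = {t: count * idf[t] for t, count in doc.items()}  # raw count * idf
        norm = math.sqrt(sum(w * w for w in vec.values()))
        vectors.append({t: w / norm for t, w in vec.items()} if norm else vec)
    return vectors


def rank_by_tfidf(query: str, questions: list[str]) -> list[tuple[str, float]]:
    vectors = tfidf_vectors([query] + questions)
    q = vectors[0]
    # Rows have unit length, so the dot product equals the cosine similarity
    scores = [sum(w * v.get(t, 0.0) for t, w in q.items()) for v in vectors[1:]]
    return sorted(zip(questions, scores), key=lambda pair: pair[1], reverse=True)


questions = ["How do I reset my password?", "Where can I download the invoice?"]
for question, score in rank_by_tfidf("reset password", questions):
    print(f"{score:.4f}  {question}")
```

Two consequences worth knowing: because TFIDFModel refits the vectorizer on the query plus the knowledge base for every request, the idf weights shift slightly with each query (fitting once on the knowledge base and calling `transform` on the query is a common alternative); and since the default token pattern does not split unsegmented Chinese text into words, Chinese questions would likely need a segmenter such as jieba before vectorizing.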

Model 2: a model based on GloVe word vectors
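The idea behind this model is to turn a sentence into a single vector by averaging the GloVe vectors of its words, then compare those averages with cosine similarity. A toy sketch of that idea with made-up 3-dimensional vectors; GloveHelper itself is not shown in the post, so skipping unknown words here is my assumption, not necessarily what it does:

```python
import math
from typing import Optional

# Toy 3-d "word vectors", made up for illustration; real GloVe vectors have 50-300 dimensions
TOY_VECTORS = {
    "reset": [0.9, 0.1, 0.0],
    "password": [0.8, 0.2, 0.1],
    "change": [0.85, 0.15, 0.05],
    "invoice": [0.0, 0.2, 0.9],
}


def sentence_vector(sentence: str, vectors: dict[str, list[float]]) -> Optional[list[float]]:
    """Average the vectors of known words; return None if no word is known."""
    known = [vectors[w] for w in sentence.lower().split() if w in vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


q = sentence_vector("reset password", TOY_VECTORS)
print(cosine(q, sentence_vector("change password", TOY_VECTORS)))
print(cosine(q, sentence_vector("invoice", TOY_VECTORS)))
```

Averaging ignores word order and lets frequent filler words dilute the signal, which is one reason sentence-level encoders such as the Universal Sentence Encoder (Model 3) tend to separate paraphrases better.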

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

# GloveHelper is the author's own helper (not shown in this post); getVector(sentence)
# returns one GloVe vector per word, which is why it is averaged with axis=0 below.


class Word2VectorModel(BaseSentenceSimilarityModel):

    def calculate_sentence_similarity(self, source_sentence, sentences_to_compare):
        sentences = [source_sentence] + sentences_to_compare
        glove_helper = GloveHelper()

        # Represent each sentence as the mean of its word vectors
        sentence_vectors = [np.mean(glove_helper.getVector(s), axis=0) for s in sentences]

        # Cosine similarity between the query (index 0) and every knowledge-base question
        scores = cosine_similarity([sentence_vectors[0]], np.array(sentence_vectors[1:])).flatten()

        return [
            {'sentence': sentence, 'score': round(float(score), 4)}
            for sentence, score in zip(sentences_to_compare, scores)
        ]

    def find_most_similar_question(self, source_sentence, sentences_to_compare):
        results = self.calculate_sentence_similarity(source_sentence, sentences_to_compare)
        most_similar_question = ''
        best_score = 0
        for result in results:
            # Keep the highest-scoring question, but only if it scores above 0.7
            if result['score'] > best_score and result['score'] > 0.7:
                best_score = result['score']
                most_similar_question = result['sentence']
        return most_similar_question
```

Model 3: the universal-sentence-encoder_4 model from TensorFlow Hub

```python
import numpy as np
import tensorflow_hub as hub
from sklearn.metrics.pairwise import cosine_similarity

enable_universal_sentence_encoder_Model = True

if enable_universal_sentence_encoder_Model:
    print('loading universal-sentence-encoder_4 model...')
    # Load the locally downloaded TF Hub model once, when the module is imported
    embed = hub.load("C:/apps/ml_model/universal-sentence-encoder_4")


class UniversalSentenceEncoderModel(BaseSentenceSimilarityModel):

    def calculate_sentence_similarity(self, source_sentence, sentences_to_compare):
        sentences = [source_sentence] + sentences_to_compare

        # USE maps each whole sentence to one 512-dim vector, so no averaging is needed
        sentence_vectors = embed(sentences).numpy()

        # Cosine similarity between the query (row 0) and every knowledge-base question
        scores = cosine_similarity(sentence_vectors[:1], sentence_vectors[1:]).flatten()

        return [
            {'sentence': sentence, 'score': round(float(score), 4)}
            for sentence, score in zip(sentences_to_compare, scores)
        ]

    def find_most_similar_question(self, source_sentence, sentences_to_compare):
        print("universal sentence encoder model....")
        results = self.calculate_sentence_similarity(source_sentence, sentences_to_compare)
        most_similar_question = ''
        best_score = 0
        for result in results:
            # USE scores run lower than TF-IDF's, so the threshold here is 0.6
            if result['score'] > best_score and result['score'] > 0.6:
                best_score = result['score']
                most_similar_question = result['sentence']
        return most_similar_question
```
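The three find_most_similar_question methods above repeat the same loop and differ only in the threshold (0.7 for TF-IDF and GloVe, 0.6 for USE). One possible refactor, a sketch rather than the author's code, hoists that loop into the base class so each model only implements calculate_sentence_similarity and sets a threshold. WordOverlapModel is a hypothetical toy stand-in (Jaccard word overlap) used only to make the sketch runnable:

```python
class BaseSentenceSimilarityModel:
    """Hypothetical refactor: subclasses implement scoring and may override threshold."""

    threshold = 0.7  # minimum score for a match to count

    def calculate_sentence_similarity(self, source_sentence, sentences_to_compare):
        raise NotImplementedError("to be overridden by subclass")

    def find_most_similar_question(self, source_sentence, sentences_to_compare):
        results = self.calculate_sentence_similarity(source_sentence, sentences_to_compare)
        # Only scores strictly above the threshold are candidates
        candidates = [r for r in results if r['score'] > self.threshold]
        if not candidates:
            return ''
        # max() returns the first of several equal scores, like the strict '>' loop
        return max(candidates, key=lambda r: r['score'])['sentence']


class WordOverlapModel(BaseSentenceSimilarityModel):
    """Toy stand-in for the real models: Jaccard overlap of lowercase words."""

    threshold = 0.5

    def calculate_sentence_similarity(self, source_sentence, sentences_to_compare):
        source = set(source_sentence.lower().split())
        results = []
        for sentence in sentences_to_compare:
            words = set(sentence.lower().split())
            union = source | words
            score = len(source & words) / len(union) if union else 0.0
            results.append({'sentence': sentence, 'score': round(score, 4)})
        return results


model = WordOverlapModel()
print(model.find_most_similar_question(
    "reset my password", ["reset my password please", "download invoice"]))
# prints: reset my password please
```

With this shape, UniversalSentenceEncoderModel would only need `threshold = 0.6`, and tuning thresholds no longer means editing three copies of the same loop.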

4. Create a REST API with Flask
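The API contract between the UI and the backend is small: POST a JSON body `{"user_message": ...}` to `/api/chat` and read `bot_response` from the JSON reply. A standard-library client sketch for exercising the endpoint without the Angular front end; the URL assumes the `app.run(port=2020, host="127.0.0.1")` settings used in the Flask code, and the function names are hypothetical:

```python
import json
import urllib.request

# Assumption: the Flask app is running locally on port 2020
API_URL = "http://127.0.0.1:2020/api/chat"


def build_chat_request(user_message: str, url: str = API_URL) -> urllib.request.Request:
    """Build the same POST the Angular client sends: JSON body {"user_message": ...}."""
    body = json.dumps({"user_message": user_message}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def ask(user_message: str) -> str:
    """Send the question and return the "bot_response" field of the JSON reply."""
    with urllib.request.urlopen(build_chat_request(user_message), timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))["bot_response"]
```

With the server running, `ask("How do I reset my password?")` returns the matched answer, or "No answer found for the question" when no knowledge-base question clears the model's threshold.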

```python
from flask import Flask, request, jsonify
from flask_cors import CORS

from knowlege_base_service import get_knowlege_base
# TFIDFModel and Word2VectorModel come from the similarity-model modules above
# (their module paths are not shown in this post).

app = Flask(__name__)
CORS(app)  # let the Angular app, served from another origin, call this API


@app.route('/')
def index():
    return 'welcome to my webpage!'


@app.route('/api/chat', methods=['POST'])
def send_message():
    # get_json(silent=True) returns None instead of raising on a missing/invalid body
    payload = request.get_json(silent=True) or {}
    user_message = payload.get('user_message', '')
    # Find the most similar question in the knowledge base and return its answer
    answer = find_most_similar_question(user_message)
    return jsonify({'bot_response': answer})


def find_most_similar_question(user_question, model='tf_idf_model'):
    knowledge_base = get_knowlege_base()
    print('model name :', model)

    if model == 'word2vector_model':
        sentence_similarity_model = Word2VectorModel()
    elif model == 'UniversalSentenceEncoder_Model':
        # Imported lazily so the TF Hub model is only loaded when it is selected
        from nlp.sentence_similarity.universal_sentence_encoder_model import UniversalSentenceEncoderModel
        sentence_similarity_model = UniversalSentenceEncoderModel()
    else:
        # 'tf_idf_model' (the default) and any unknown name use TF-IDF
        sentence_similarity_model = TFIDFModel()

    most_similar_question = sentence_similarity_model.find_most_similar_question(
        user_question, knowledge_base["Question"].tolist())

    filtered_df = knowledge_base[knowledge_base["Question"] == most_similar_question]

    # Check if any matching rows were found
    if not filtered_df.empty:
        found_answer = filtered_df.iloc[0]["Answer"]
        print("Answer:", found_answer)
        return found_answer

    print("No answer found for the question:", user_question)
    return 'No answer found for the question'


def get_top_faq():
    # Use the first three knowledge-base rows as the "top" FAQ entries
    return get_knowlege_base().head(3).to_dict(orient="records")


if __name__ == "__main__":
    app.run(port=2020, host="127.0.0.1", debug=True)
```

Frontend: Angular UI

chat.component.css

```css
.chat-container {
  max-width: 60%;
  margin: 0 auto;
  background-color: #f7f7f7;
  box-shadow: 0 4px 8px rgba(0, 0, 0, 0.2);
}

.chat-area {
  max-height: 550px;
  overflow-y: auto;
  padding: 20px;
  background-color: #f7f7f7;
  border-radius: 10px;
}

.chat-header {
  color: black;
  background-color: #ececf1;
  text-align: center;
  padding: 10px;
  border-bottom: 1px solid #ccc; /* border at the bottom */
  font-size: 35px;
}

.chat-foot {
  padding: 10px 15px;
  margin: 10px;
}

.user-bubble {
  --tw-border-opacity: 1;
  background-color: white; /* user message background color */
  border-color: rgba(255, 255, 255, var(--tw-border-opacity));
  border-radius: 10px;
  padding: 10px;
  margin: 10px 0;
  align-self: flex-end;
}

.chat-message {
  display: flex;
}

.bot-bubble {
  --tw-border-opacity: 1;
  background-color: #ececf1; /* chatbot message background color */
  border-color: rgba(255, 255, 255, var(--tw-border-opacity));
  border-radius: 10px;
  padding: 10px;
  margin: 10px 0;
  align-self: flex-start;
  justify-content: right;
}

.form-container {
  display: flex;
  align-items: center;
}

.user-input {
  flex-grow: 1;
  padding: 10px;
  border: 1px solid #ccc;
  border-radius: 5px;
  outline: none;
  font-size: 16px;
  margin-right: 10px;
}

.indented-div {
  margin-right: 10px;
  padding: 15px 1px 10px 10px;
}

/* Send button */
.send-button {
  width: 100px;
  background-color: #3f51b5;
  color: #fff;
  border: none;
  border-radius: 5px;
  padding: 10px 20px;
  font-size: 16px;
  cursor: pointer;
  transition: background-color 0.3s;
}

.send-button:hover {
  background-color: #303f9f;
}

.chat_left {
  display: flex;
  padding: 0 0 0 10px;
  margin: 1px 0;
}

.chat_right {
  width: 50px;
  margin: 20px 20px 20px 2px;
}

.chat_right i {
  color: #000;
  transition: color 0.3s;
  cursor: pointer;
}

.chat_right i:hover {
  color: darkorange;
}

/* text-suggestion.component.css */
.suggestion-container {
  position: relative;
  width: calc(100% - 110px); /* leave room for the 100px send button and 10px gap */
}

.suggestion-container ul {
  list-style: none;
  padding: 0;
  margin: 0;
  width: 100%;
  position: absolute;
  background-color: #fff;
  border-radius: 5px;
  box-shadow: 0 2px 5px rgba(0, 0, 0, 0.2); /* card-like effect */
}

.selected {
  background-color: #f0f0f0; /* highlight color */
}

.suggestion-container li {
  padding: 10px;
  cursor: pointer;
}

.suggestion-container li:hover {
  background-color: #f2f2f2; /* hover effect */
}

.category-button {
  background-color: #fff;
  color: #333;
  border: 1px solid #ccc;
  padding: 5px 10px;
  margin: 5px;
  border-radius: 5px;
  cursor: pointer;
  font-size: 15px;
  transition: background-color 0.3s, border-color 0.3s;
}

.category {
  margin-bottom: 10px;
}

.category-button.selected,
.category-button:hover {
  color: #007bff;
  border: 1px solid #007bff;
}
```

chat.component.html

ChatBot

chat.component.ts

```typescript
import { Component, ElementRef, ViewChild, AfterViewChecked, OnInit } from '@angular/core';
import { HttpClient } from '@angular/common/http';
import { DomSanitizer } from '@angular/platform-browser';
import { host } from '../app-config';

@Component({
  selector: 'app-chat',
  templateUrl: './chat.component.html',
  styleUrls: ['./chat.component.css']
})
export class ChatComponent implements AfterViewChecked, OnInit {
  @ViewChild('chatArea') private chatArea!: ElementRef;

  userMessage: string = '';
  chatMessages: any[] = [];
  suggestions: string[] = [];
  allSuggestions: string[] = [];
  showSuggestions = false;
  selectedSuggestionIndex: number = -1;
  selectedCategory: string = 'general'; // Default category

  constructor(
    private http: HttpClient,
    private sanitizer: DomSanitizer
  ) {}

  ngOnInit() {
    // Load all knowledge-base questions once, for the type-ahead suggestions
    this.http.get<string[]>(host + '/faq/all-suggestions')
      .subscribe(data => {
        this.allSuggestions = data;
      });
    // Note: chatMessages is still empty here, so this call currently changes nothing
    this.sanitizeMessages();
    this.chatMessages.push({ text: 'Hello! How can I assist you?', type: 'bot' });
  }

  selectCategory(category: string) {
    this.selectedCategory = category;
    // Implement category-specific logic or fetching here
  }

  ngAfterViewChecked() {
    this.scrollToBottom();
  }

  onKeyDown(event: KeyboardEvent) {
    const count = this.suggestions.length;
    if (count === 0) {
      return; // nothing to navigate (and `% 0` would produce NaN)
    }
    if (event.key === 'ArrowDown') {
      event.preventDefault();
      // Move down, wrapping from the last suggestion back to the first
      this.selectedSuggestionIndex = (this.selectedSuggestionIndex + 1) % count;
      this.userMessage = this.suggestions[this.selectedSuggestionIndex];
    } else if (event.key === 'ArrowUp') {
      event.preventDefault();
      // Move up, wrapping to the last suggestion; with nothing selected (-1), go to the last one
      const current = this.selectedSuggestionIndex < 0 ? 0 : this.selectedSuggestionIndex;
      this.selectedSuggestionIndex = (current - 1 + count) % count;
      this.userMessage = this.suggestions[this.selectedSuggestionIndex];
    }
  }

  onSuggestionClick(suggestion: string) {
    this.userMessage = suggestion;
    this.showSuggestions = false;
  }

  sendMessage() {
    const message = this.userMessage?.trim();
    if (!message) {
      return;
    }
    this.showSuggestions = false;
    this.chatMessages.push({ text: message, type: 'user' });
    this.userMessage = ''; // clear the input right away instead of waiting for the reply
    this.http.post<{ bot_response: string }>(host + '/api/chat', { user_message: message })
      .subscribe(response => {
        this.chatMessages.push({ text: response.bot_response, type: 'bot' });
      });
  }

  scrollToBottom() {
    try {
      this.chatArea.nativeElement.scrollTop = this.chatArea.nativeElement.scrollHeight;
    } catch (err) {}
  }

  onThumbsUpClick(message: any) {
    console.log('Thumbs up clicked for the bot message: ', message.text);
  }

  onThumbsDownClick(message: any) {
    console.log('Thumbs down clicked for the bot message: ', message.text);
  }

  // Mark bot messages as trusted HTML. bypassSecurityTrustHtml turns off Angular's
  // XSS sanitization, so only use it for HTML you control (e.g. your own knowledge base).
  sanitizeMessages() {
    for (const message of this.chatMessages) {
      if (message.type === 'bot') {
        message.text = this.sanitizer.bypassSecurityTrustHtml(message.text);
      }
    }
  }

  onQueryChange() {
    this.showSuggestions = true;
    this.selectedSuggestionIndex = -1; // the list changed, so restart keyboard navigation
    this.suggestions = this.getTop5SimilarSuggestions(this.allSuggestions, this.userMessage);
  }

  getTop5SimilarSuggestions(suggestions: string[], query: string): string[] {
    return suggestions
      .filter(suggestion => suggestion.toLowerCase().includes(query.toLowerCase()))
      // Ascending edit distance: the closest suggestions come first
      .sort((a, b) => this.calculateSimilarity(a, query) - this.calculateSimilarity(b, query))
      .slice(0, 5);
  }

  // Levenshtein (edit) distance: despite the name, a smaller value means more similar
  calculateSimilarity(suggestion: string, query: string): number {
    if (suggestion === query) return 0;
    const len1 = suggestion.length;
    const len2 = query.length;
    // matrix[i][j] = distance between the first i chars of query and the first j of suggestion
    const matrix: number[][] = [];
    for (let i = 0; i <= len2; i++) {
      matrix[i] = [i];
    }
    for (let j = 0; j <= len1; j++) {
      matrix[0][j] = j;
    }
    for (let i = 1; i <= len2; i++) {
      for (let j = 1; j <= len1; j++) {
        const cost = suggestion[j - 1] === query[i - 1] ? 0 : 1;
        matrix[i][j] = Math.min(
          matrix[i - 1][j] + 1,       // deletion
          matrix[i][j - 1] + 1,       // insertion
          matrix[i - 1][j - 1] + cost // substitution
        );
      }
    }
    return matrix[len2][len1];
  }
}
```
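getTopSimilarSuggestions keeps only the suggestions that contain the query (case-insensitively) and then orders them by Levenshtein distance, so calculateSimilarity actually returns a distance where smaller is better. For readers following the backend in Python, a port of the same ranking; it keeps only two rows of the dynamic-programming table instead of the full matrix, and the function names are my own:

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions and substitutions to turn a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def top5_similar_suggestions(suggestions: list[str], query: str) -> list[str]:
    """Same steps as getTop5SimilarSuggestions: substring filter, sort by distance, take 5."""
    q = query.lower()
    matches = [s for s in suggestions if q in s.lower()]
    # Smaller edit distance = closer to what the user typed; sorted() is stable on ties
    return sorted(matches, key=lambda s: levenshtein(s, query))[:5]


print(top5_similar_suggestions(
    ["reset password", "How do I reset my password?", "download invoice"], "reset"))
# prints: ['reset password', 'How do I reset my password?']
```

Because every suggestion already contains the query, the distance here mostly rewards shorter suggestions; each comparison costs O(len(suggestion) × len(query)), which is fine for a small FAQ list but worth debouncing on keystrokes if the knowledge base grows.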