Flink Table API and Flink SQL

Introduction

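The Flink Table API is a query API embedded in Java and Scala. It lets you compose queries from relational operators, such as selection, filter, and join, in a very intuitive way.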

Flink SQL is built on Apache Calcite, which implements the SQL standard.

At the time of writing, the Flink Table API and SQL were not yet fully mature.

Example:

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class Example {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Read the data

DataStreamSource<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

// Convert each line to a SensorReading POJO

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

});

// Create the table environment

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// Create a table from the stream

Table dataTable = tableEnv.fromDataStream(dataStream);

// Transform the table with the Table API

Table resultTable = dataTable.select("id,temperature")

.where("id = 'sensor_1'");

// Run the same query in SQL

tableEnv.createTemporaryView("sensor",dataTable);

String sql = "select id,temperature from sensor where id = 'sensor_1'";

Table resultSqlTable = tableEnv.sqlQuery(sql);

tableEnv.toAppendStream(resultTable, Row.class).print("result");

tableEnv.toAppendStream(resultSqlTable, Row.class).print("sql");

env.execute();

}

}

---------

result> sensor_1,35.8

sql> sensor_1,35.8

result> sensor_1,36.3

sql> sensor_1,36.3

result> sensor_1,32.8

sql> sensor_1,32.8

result> sensor_1,37.1

sql> sensor_1,37.1

Common operations

Creating streaming and batch environments with the old planner

package com.ts.tabletest;

import org.apache.flink.api.java.ExecutionEnvironment;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.bridge.java.BatchTableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class CommonApi {

public static void main(String[] args) {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 1.1 Streaming with the old planner

EnvironmentSettings oldStreamSettings = EnvironmentSettings.newInstance()

.useOldPlanner()

.inStreamingMode()

.build();

StreamTableEnvironment oldStreamTableEnv = StreamTableEnvironment.create(env, oldStreamSettings);

// 1.2 Batch processing with the old planner

ExecutionEnvironment batchEnv = ExecutionEnvironment.getExecutionEnvironment();

BatchTableEnvironment oldBatchTableEnv = BatchTableEnvironment.create(batchEnv);

}

}

Creating streaming and batch environments with the Blink planner

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class CommonApi {

public static void main(String[] args) {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 1.1 Streaming with the Blink planner

EnvironmentSettings blinkStreamSettings = EnvironmentSettings.newInstance()

.useBlinkPlanner()

.inStreamingMode()

.build();

StreamTableEnvironment blinkStreamTableEnv = StreamTableEnvironment.create(env, blinkStreamSettings);

// 1.2 Batch processing with the Blink planner

EnvironmentSettings blinkBatchSettings = EnvironmentSettings.newInstance()

.useBlinkPlanner()

.inBatchMode()

.build();

TableEnvironment blinkBatchTableEnv = TableEnvironment.create(blinkBatchSettings);

}

}

Creating a table

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

public class CommonApi {

public static void main(String[] args) throws Exception{

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Create a table: connect to an external system and read data

// 2.1 Read from a file

String filePath = "E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt";

tableEnv.connect( new FileSystem().path(filePath))

.withFormat( new Csv())

// Schema: declare the fields

.withSchema( new Schema()

// field name, field type

.field("id", DataTypes.STRING())

.field("timestamp", DataTypes.BIGINT())

.field("temp", DataTypes.DOUBLE())

)

.createTemporaryTable("inputTable");

Table inputTable = tableEnv.from("inputTable");

inputTable.printSchema();

tableEnv.toAppendStream(inputTable, Row.class).print();

env.execute();

}

}

----------

root

|-- id: STRING

|-- timestamp: BIGINT

|-- temp: DOUBLE

sensor_1,1547718199,35.8

sensor_6,1547718201,15.4

sensor_7,1547718202,6.7

sensor_10,1547718205,38.1

sensor_1,1547718207,36.3

sensor_1,1547718209,32.8

sensor_1,1547718212,37.1

Table API queries

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

public class CommonApi {

public static void main(String[] args) throws Exception{

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Create a table: connect to an external system and read data

// 2.1 Read from a file

String filePath = "E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt";

tableEnv.connect( new FileSystem().path(filePath))

.withFormat( new Csv())

// Schema: declare the fields

.withSchema( new Schema()

// field name, field type

.field("id", DataTypes.STRING())

.field("timestamp", DataTypes.BIGINT())

.field("temp", DataTypes.DOUBLE())

)

.createTemporaryTable("inputTable");

Table inputTable = tableEnv.from("inputTable");

// 3. Query and transform

// 3.1 Table API

// Simple transformation

Table resultTable = inputTable.select("id, temp")

.filter("id === 'sensor_6'");

// Aggregation

Table aggTable = inputTable.groupBy("id")

.select("id, id.count as count, temp.avg as avgTemp");

// Print the results

tableEnv.toAppendStream(resultTable, Row.class).print("result");

tableEnv.toRetractStream(aggTable, Row.class).print("agg");

env.execute();

}

}

----------

result> sensor_6,15.4

agg> (true,sensor_1,1,35.8)

agg> (true,sensor_6,1,15.4)

agg> (true,sensor_7,1,6.7)

agg> (true,sensor_10,1,38.1)

agg> (false,sensor_1,1,35.8)

agg> (true,sensor_1,2,36.05)

agg> (false,sensor_1,2,36.05)

agg> (true,sensor_1,3,34.96666666666666)

agg> (false,sensor_1,3,34.96666666666666)

agg> (true,sensor_1,4,35.5)

SQL queries

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

public class CommonApi {

public static void main(String[] args) throws Exception{

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Create a table: connect to an external system and read data

// 2.1 Read from a file

String filePath = "E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt";

tableEnv.connect( new FileSystem().path(filePath))

.withFormat( new Csv())

// Schema: declare the fields

.withSchema( new Schema()

// field name, field type

.field("id", DataTypes.STRING())

.field("timestamp", DataTypes.BIGINT())

.field("temp", DataTypes.DOUBLE())

)

.createTemporaryTable("inputTable");

Table inputTable = tableEnv.from("inputTable");

// 3. Query and transform

// 3.2 SQL

Table sqlResultTable = tableEnv.sqlQuery("select id, temp from inputTable where id = 'sensor_6'");

Table sqlAggTable = tableEnv.sqlQuery("select id, count(id) as cnt, avg(temp) as avgTemp from inputTable group by id");

// Print the results

tableEnv.toRetractStream(sqlAggTable, Row.class).print("sqlagg");

env.execute();

}

}

--------

sqlagg> (true,sensor_1,1,35.8)

sqlagg> (true,sensor_6,1,15.4)

sqlagg> (true,sensor_7,1,6.7)

sqlagg> (true,sensor_10,1,38.1)

sqlagg> (false,sensor_1,1,35.8)

sqlagg> (true,sensor_1,2,36.05)

sqlagg> (false,sensor_1,2,36.05)

sqlagg> (true,sensor_1,3,34.96666666666666)

sqlagg> (false,sensor_1,3,34.96666666666666)

sqlagg> (true,sensor_1,4,35.5)

Writing to a file

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.FileSystem;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

public class CommonApi {

public static void main(String[] args) throws Exception{

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Create a table: connect to an external system and read data

// 2.1 Read from a file

String filePath = "E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt";

tableEnv.connect( new FileSystem().path(filePath))

.withFormat( new Csv())

// Schema: declare the fields

.withSchema( new Schema()

// field name, field type

.field("id", DataTypes.STRING())

.field("timestamp", DataTypes.BIGINT())

.field("temp", DataTypes.DOUBLE())

)

.createTemporaryTable("inputTable");

Table inputTable = tableEnv.from("inputTable");

// 3. Query and transform

// 3.1 Table API

// Simple transformation

Table resultTable = inputTable.select("id, temp")

.filter("id === 'sensor_6'");

// Aggregation

Table aggTable = inputTable.groupBy("id")

.select("id, id.count as count, temp.avg as avgTemp");

// 4. Write to a file

// Connect to an external file and register it as the output table

String outputPath = "E:\\Demo\\flink\\src\\com\\ts\\resources\\out.txt";

tableEnv.connect(new FileSystem().path(outputPath))

.withFormat(new Csv())

.withSchema(

new Schema()

.field("id", DataTypes.STRING())

.field("temp", DataTypes.DOUBLE())

)

.createTemporaryTable("outputTable");

resultTable.insertInto("outputTable");

env.execute();

}

}

Integrating with Kafka

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.Kafka;

import org.apache.flink.table.descriptors.Schema;

public class KafkaPipeLine {

public static void main(String[] args) throws Exception {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Connect to Kafka and read data

tableEnv.connect(new Kafka()

// Kafka connector version

.version("0.11")

// Topic name

.topic("sensor")

// ZooKeeper and Kafka broker addresses

.property("zookeeper.connect", "localhost:2181")

.property("bootstrap.servers", "localhost:9092")

)

.withFormat(new Csv())

.withSchema(new Schema()

.field("id", DataTypes.STRING())

.field("timestamp", DataTypes.BIGINT())

.field("temp", DataTypes.DOUBLE())

)

.createTemporaryTable("inputTable");

// 3. Query and transform

// Simple transformation

Table sensorTable = tableEnv.from("inputTable");

Table resultTable = sensorTable.select("id, temp")

.filter("id === 'sensor_6'");

// Aggregation

Table aggTable = sensorTable.groupBy("id")

.select("id, id.count as count, temp.avg as avgTemp");

// 4. Connect to Kafka again and write the results to a different topic

tableEnv.connect(new Kafka()

.version("0.11")

.topic("sinktest")

.property("zookeeper.connect", "localhost:2181")

.property("bootstrap.servers", "localhost:9092")

)

.withFormat(new Csv())

.withSchema(new Schema()

.field("id", DataTypes.STRING())

.field("temp", DataTypes.DOUBLE())

)

.createTemporaryTable("outputTable");

resultTable.insertInto("outputTable");

env.execute();

}

}

Writing to Elasticsearch

tableEnv.connect(new Elasticsearch()

.version("6")

.host("localhost", 9200, "http")

.index("sensor")

.documentType("temp") )

.inUpsertMode()

.withFormat(new Json())

.withSchema( new Schema()

.field("id", DataTypes.STRING())

.field("count", DataTypes.BIGINT())

)

.createTemporaryTable("esOutputTable");

aggResultTable.insertInto("esOutputTable");
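`inUpsertMode()` declares that the sink receives the aggregated table as upsert and delete messages keyed by a unique key. For a result grouped by `id`, that key is `id`. The plain-Java sketch below is only a rough mental model: it applies such messages to a key-value store the way an upsert sink would. The `UpsertMessage` type is a hypothetical illustration, not part of the Elasticsearch connector. The counts come from the sample sensor data.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class UpsertSinkSketch {

    // Hypothetical change message: isUpsert == false means "delete this key".
    static final class UpsertMessage {
        final boolean isUpsert;
        final String key;
        final long count;

        UpsertMessage(boolean isUpsert, String key, long count) {
            this.isUpsert = isUpsert;
            this.key = key;
            this.count = count;
        }
    }

    // Applies messages in order: an upsert overwrites the value stored under its key,
    // a delete removes it. Only the latest value per key survives.
    static Map<String, Long> apply(List<UpsertMessage> messages) {
        Map<String, Long> store = new LinkedHashMap<>();
        for (UpsertMessage m : messages) {
            if (m.isUpsert) {
                store.put(m.key, m.count);
            } else {
                store.remove(m.key);
            }
        }
        return store;
    }

    public static void main(String[] args) {
        // Per-id counts as they evolve for the sample sensor data.
        List<UpsertMessage> messages = Arrays.asList(
                new UpsertMessage(true, "sensor_1", 1),
                new UpsertMessage(true, "sensor_6", 1),
                new UpsertMessage(true, "sensor_7", 1),
                new UpsertMessage(true, "sensor_10", 1),
                new UpsertMessage(true, "sensor_1", 2),
                new UpsertMessage(true, "sensor_1", 3),
                new UpsertMessage(true, "sensor_1", 4));
        System.out.println(apply(messages)); // {sensor_1=4, sensor_6=1, sensor_7=1, sensor_10=1}
    }
}
```

A retract stream retracts the old row before inserting the new one. In upsert mode, no `false` messages are needed: each new count for a key simply overwrites the previous document.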

Writing to MySQL

String sinkDDL=

"create table jdbcOutputTable (" +

" id varchar(20) not null, " +

" cnt bigint not null " +

") with (" +

" 'connector.type' = 'jdbc', " +

" 'connector.url' = 'jdbc:mysql://localhost:3306/test', " +

" 'connector.table' = 'sensor_count', " +

" 'connector.driver' = 'com.mysql.jdbc.Driver', " +

" 'connector.username' = 'root', " +

" 'connector.password' = '123456' )";

tableEnv.sqlUpdate(sinkDDL); // run the DDL to create the table

aggResultSqlTable.insertInto("jdbcOutputTable");

Converting a table to a stream

// Append mode: only valid when the table is modified by inserts alone

DataStream<Row> resultStream = tableEnv.toAppendStream(resultTable, Row.class);

// Retract mode: each record carries an extra Boolean flag, true for an insertion and false for a retraction (deletion)

DataStream<Tuple2<Boolean, Row>> resultTableStream = tableEnv.toRetractStream(resultTable, Row.class);

Converting a stream to a table

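// Default conversion: field names are taken from the stream's type

Table sensorTable = tableEnv.fromDataStream(dataStream);

// Fields can also be selected and renamed during the conversion

Table renamedSensorTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature");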

Creating views

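// Create a view from a stream

tableEnv.createTemporaryView("sensorView", dataStream);

// Create a view from a table (a different name, since a temporary view name can only be registered once)

tableEnv.createTemporaryView("resultView", resultTable);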

Viewing the execution plan

String explanation = tableEnv.explain(resultTable);

System.out.println(explanation);

Dynamic tables

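Dynamic tables are the core concept behind Flink's Table API and SQL support for streaming data. Unlike the static tables that represent batch data, dynamic tables change over time. They can be compared to materialized views in a traditional database.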

Real-time SQL processing differs from traditional database SQL processing in several ways:

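| Traditional database SQL processing | Real-time SQL processing |
|---|---|
| Tables hold bounded data | Real-time data is unbounded |
| A batch query has access to the complete data set | The complete data set is never available; the query has to wait for new input |
| The query terminates once processing finishes | The query keeps updating its result table from incoming data and never stops |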

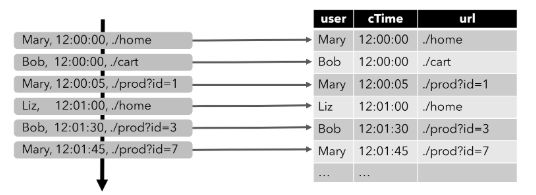

The relationship between streams, dynamic tables, and continuous queries

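A continuous query on a stream proceeds as follows:

- The stream is converted into a dynamic table.
- A continuous query is evaluated on the dynamic table, producing a new dynamic table.
- The resulting dynamic table is converted back into a stream.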

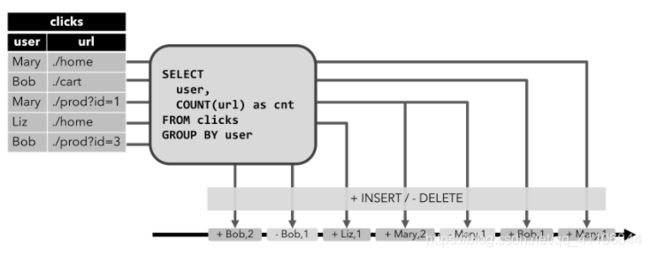

Continuous queries

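A dynamic table can be queried just like a static batch table. Querying a dynamic table produces a continuous query.

A continuous query never terminates and produces another dynamic table.

The query continuously updates its dynamic result table to reflect changes to its dynamic input table.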

![Continuous query over a dynamic table](https://i.loli.net/2021/03/19/KNflAyC8uOgTd94.png)

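Converting a DataStream to a dynamic table

To process a stream with relational queries, it must first be converted into a table.

Conceptually, each record of the stream is interpreted as an insert into the resulting table.

Converting a dynamic table to a DataStream

Like a regular database table, a dynamic table is continuously modified through inserts, updates, and deletes.

When a dynamic table is converted into a stream or written to an external system, these changes have to be encoded. Flink supports append-only, retract, and upsert encodings. The toRetractStream call used in the aggregation examples above emits an insert as (true, row). It emits an update as (false, oldRow) followed by (true, newRow).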
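The plain-Java sketch below makes the retract encoding concrete. As the seven sample readings from `sensor.txt` arrive, it maintains the result of `SELECT id, COUNT(id), AVG(temp) FROM inputTable GROUP BY id` and emits the retract messages for each change. It is an illustrative model only, not Flink's actual aggregation operator. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class RetractEncodingSketch {

    // Running aggregate for one key: count and sum, so avg = sum / count.
    static final class Acc {
        long count;
        double sum;
    }

    // Consumes (id, temp) readings one by one and maintains the dynamic result table
    // of "GROUP BY id". Every change is encoded as retract messages:
    // (false, oldRow) for the previous row of that key, then (true, newRow).
    static List<String> encode(String[] ids, double[] temps) {
        Map<String, Acc> table = new HashMap<>();
        List<String> out = new ArrayList<>();
        for (int i = 0; i < ids.length; i++) {
            Acc acc = table.get(ids[i]);
            if (acc == null) {
                acc = new Acc();
                table.put(ids[i], acc);
            } else {
                // The key already has a row in the result table: retract it first.
                out.add("(false," + ids[i] + "," + acc.count + "," + acc.sum / acc.count + ")");
            }
            acc.count++;
            acc.sum += temps[i];
            out.add("(true," + ids[i] + "," + acc.count + "," + acc.sum / acc.count + ")");
        }
        return out;
    }

    public static void main(String[] args) {
        String[] ids = {"sensor_1", "sensor_6", "sensor_7", "sensor_10", "sensor_1", "sensor_1", "sensor_1"};
        double[] temps = {35.8, 15.4, 6.7, 38.1, 36.3, 32.8, 37.1};
        encode(ids, temps).forEach(System.out::println);
    }
}
```

The messages have the same shape and order as the `agg>` and `sqlagg>` output earlier in this article. The printed averages may carry extra floating-point digits.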

Time attributes

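Time-based operations, such as windows in the Table API and SQL, need information about the time semantics and about where the time values come from.

A table can provide a logical time attribute. Table programs use it to indicate time and to access the corresponding timestamps.

A time attribute can be part of any table schema. Once defined, it can be referenced as a field and used in time-based operations.

A time attribute behaves like a regular timestamp: it can be accessed and used in computations.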

Defining processing time

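Under processing-time semantics, a table program produces results based on the local clock of the machine. This is the simplest notion of time: it requires neither timestamp extraction nor watermark generation.

When defining a schema, a processing-time field is declared by appending .proctime to a field name. A proctime attribute can only extend the physical schema with an additional logical field, so it must be defined at the end of the schema.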

- Table API

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class TimeAttributesAPI {

public static void main(String[] args) throws Exception {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Read the file into a DataStream

DataStream<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

// 3. Convert to SensorReading POJOs

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<SensorReading>(Time.seconds(2)) {

@Override

public long extractTimestamp(SensorReading element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Convert the stream to a table and append a processing-time attribute pt

Table dataTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature as temp, pt.proctime");

tableEnv.toAppendStream(dataTable,Row.class).print();

env.execute();

}

}

----------

sensor_1,1547718199,35.8,2021-03-25T09:28:10.239

sensor_6,1547718201,15.4,2021-03-25T09:28:10.239

sensor_7,1547718202,6.7,2021-03-25T09:28:10.239

sensor_10,1547718205,38.1,2021-03-25T09:28:10.239

sensor_1,1547718207,36.3,2021-03-25T09:28:10.239

sensor_1,1547718209,32.8,2021-03-25T09:28:10.239

sensor_1,1547718212,37.1,2021-03-25T09:28:10.239

- Table Schema

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.Kafka;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

public class TimeAttributesAPI {

public static void main(String[] args) throws Exception {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Connect to Kafka and read data

tableEnv.connect(new Kafka()

// Kafka connector version

.version("0.11")

// Topic name

.topic("sensor")

// ZooKeeper and Kafka broker addresses

.property("zookeeper.connect", "localhost:2181")

.property("bootstrap.servers", "localhost:9092")

)

.withFormat( new Csv() ) // the new Csv format descriptor (not OldCsv)

.withSchema( new Schema()

.field("id", DataTypes.STRING())

.field("timestamp", DataTypes.BIGINT())

.field("temperature", DataTypes.DOUBLE())

// Processing-time attribute

.field("pt", DataTypes.TIMESTAMP(3))

.proctime()

)

.createTemporaryTable( "proctimeInputTable" );

Table inputTable = tableEnv.from("proctimeInputTable");

tableEnv.toAppendStream(inputTable, Row.class).print();

env.execute();

}

}

----------

sensor_1,1547718199,35.8,2021-03-25T09:28:10.239

sensor_6,1547718201,15.4,2021-03-25T09:28:10.239

sensor_7,1547718202,6.7,2021-03-25T09:28:10.239

sensor_10,1547718205,38.1,2021-03-25T09:28:10.239

sensor_1,1547718207,36.3,2021-03-25T09:28:10.239

sensor_1,1547718209,32.8,2021-03-25T09:28:10.239

sensor_1,1547718212,37.1,2021-03-25T09:28:10.239

- SQL

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class TimeAttributesAPI {

public static void main(String[] args) throws Exception {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Define the processing-time attribute in the CREATE TABLE DDL

String sinkDDL = "create table dataTable (" +

" id varchar(20) not null, " +

" ts bigint, " +

" temperature double, " +

" pt AS PROCTIME() " +

") with (" +

" 'connector.type' = 'filesystem', " +

" 'connector.path' = 'E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt', " +

" 'format.type' = 'csv')";

tableEnv.sqlUpdate(sinkDDL);

Table inputTable = tableEnv.from("dataTable");

tableEnv.toAppendStream(inputTable,Row.class).print();

env.execute();

}

}

---------

sensor_1,1547718199,35.8,2021-03-25T09:28:10.239

sensor_6,1547718201,15.4,2021-03-25T09:28:10.239

sensor_7,1547718202,6.7,2021-03-25T09:28:10.239

sensor_10,1547718205,38.1,2021-03-25T09:28:10.239

sensor_1,1547718207,36.3,2021-03-25T09:28:10.239

sensor_1,1547718209,32.8,2021-03-25T09:28:10.239

sensor_1,1547718212,37.1,2021-03-25T09:28:10.239

Defining event time

- Table API

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class TimeAttributesAPI {

public static void main(String[] args) throws Exception {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Read the file into a DataStream

DataStream<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

// 3. Convert to SensorReading POJOs

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<SensorReading>(Time.seconds(2)) {

@Override

public long extractTimestamp(SensorReading element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Convert the stream to a table and append an event-time attribute rt

Table dataTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature as temp, rt.rowtime");

tableEnv.toAppendStream(dataTable,Row.class).print();

env.execute();

}

}

----------

sensor_1,1547718199,35.8,2019-01-17T09:43:19

sensor_6,1547718201,15.4,2019-01-17T09:43:21

sensor_7,1547718202,6.7,2019-01-17T09:43:22

sensor_10,1547718205,38.1,2019-01-17T09:43:25

sensor_1,1547718207,36.3,2019-01-17T09:43:27

sensor_1,1547718209,32.8,2019-01-17T09:43:29

sensor_1,1547718212,37.1,2019-01-17T09:43:32

- Table Schema

package com.ts.tabletest;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.DataTypes;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.table.descriptors.Csv;

import org.apache.flink.table.descriptors.Kafka;

import org.apache.flink.table.descriptors.Rowtime;

import org.apache.flink.table.descriptors.Schema;

import org.apache.flink.types.Row;

public class TimeAttributesAPI {

public static void main(String[] args) throws Exception {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Connect to Kafka and read data

tableEnv.connect(new Kafka()

// Kafka connector version

.version("0.11")

// Topic name

.topic("sensor")

// ZooKeeper and Kafka broker addresses

.property("zookeeper.connect", "localhost:2181")

.property("bootstrap.servers", "localhost:9092")

)

.withFormat( new Csv() ) // the new Csv format descriptor (not OldCsv)

.withSchema( new Schema()

.field("id", DataTypes.STRING())

.field("timestamp", DataTypes.BIGINT())

.rowtime(

new Rowtime()

.timestampsFromField("timestamp") // extract the timestamp from this field

.watermarksPeriodicBounded(1000) // the watermark lags 1 second behind the largest timestamp

)

.field("temperature", DataTypes.DOUBLE())

)

.createTemporaryTable( "rowtimeInputTable" );

Table inputTable = tableEnv.from("rowtimeInputTable");

tableEnv.toAppendStream(inputTable, Row.class).print();

env.execute();

}

}

---------

sensor_1,1547718199,35.8,2019-01-17T09:43:19

sensor_6,1547718201,15.4,2019-01-17T09:43:21

sensor_7,1547718202,6.7,2019-01-17T09:43:22

sensor_10,1547718205,38.1,2019-01-17T09:43:25

sensor_1,1547718207,36.3,2019-01-17T09:43:27

sensor_1,1547718209,32.8,2019-01-17T09:43:29

sensor_1,1547718212,37.1,2019-01-17T09:43:32

- SQL

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class TimeAttributesAPI {

public static void main(String[] args) throws Exception {

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Define the event-time attribute and watermark in the CREATE TABLE DDL

String sinkDDL=

"create table dataTable (" +

" id varchar(20) not null, " +

" ts bigint, " +

" temperature double, " +

" rt AS TO_TIMESTAMP( FROM_UNIXTIME(ts) ), " +

" watermark for rt as rt - interval '1' second" +

") with (" +

" 'connector.type' = 'filesystem', " +

" 'connector.path' = 'E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt', " +

" 'format.type' = 'csv')";

tableEnv.sqlUpdate(sinkDDL);

Table inputTable = tableEnv.from("dataTable");

tableEnv.toAppendStream(inputTable,Row.class).print();

env.execute();

}

}

---------

sensor_1,1547718199,35.8,2019-01-17T09:43:19

sensor_6,1547718201,15.4,2019-01-17T09:43:21

sensor_7,1547718202,6.7,2019-01-17T09:43:22

sensor_10,1547718205,38.1,2019-01-17T09:43:25

sensor_1,1547718207,36.3,2019-01-17T09:43:27

sensor_1,1547718209,32.8,2019-01-17T09:43:29

sensor_1,1547718212,37.1,2019-01-17T09:43:32
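The event-time examples above bound out-of-orderness in two ways: with `BoundedOutOfOrdernessTimestampExtractor(Time.seconds(2))`, and with `watermark for rt as rt - interval '1' second` in the DDL. Below is a minimal plain-Java sketch of that rule. It assumes the watermark is simply the largest timestamp seen so far minus the bound. It also shows when an event-time window `[start, end)` can close: once the watermark reaches `end - 1`. Real Flink emits watermarks periodically rather than after every record, so the sketch only models the logic. The class and method names are hypothetical.

```java
public class BoundedWatermarkSketch {

    private final long maxOutOfOrdernessMs;
    private long maxTimestampMs = Long.MIN_VALUE;

    BoundedWatermarkSketch(long maxOutOfOrdernessMs) {
        this.maxOutOfOrdernessMs = maxOutOfOrdernessMs;
    }

    // Records one event timestamp and returns the current watermark: the largest
    // timestamp seen so far minus the out-of-orderness bound. Because the maximum
    // never decreases, the watermark never moves backwards.
    long onEvent(long timestampMs) {
        maxTimestampMs = Math.max(maxTimestampMs, timestampMs);
        return maxTimestampMs - maxOutOfOrdernessMs;
    }

    // Index of the first event after which the watermark reaches windowEndMs - 1,
    // the last timestamp inside the window [start, end); -1 if the input ends first.
    static int firstEventClosingWindow(long[] timestampsMs, long boundMs, long windowEndMs) {
        BoundedWatermarkSketch wm = new BoundedWatermarkSketch(boundMs);
        for (int i = 0; i < timestampsMs.length; i++) {
            if (wm.onEvent(timestampsMs[i]) >= windowEndMs - 1) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        // Event times of the sample sensor file, converted from seconds to milliseconds.
        long[] ts = {1547718199000L, 1547718201000L, 1547718202000L, 1547718205000L,
                     1547718207000L, 1547718209000L, 1547718212000L};
        long windowEnd = 1547718200000L; // end of the window [09:43:10, 09:43:20) UTC
        System.out.println(firstEventClosingWindow(ts, 2000L, windowEnd)); // 2 (sensor_7)
        System.out.println(firstEventClosingWindow(ts, 1000L, windowEnd)); // 1 (sensor_6)
    }
}
```

With a 2 s bound, the window ending at 09:43:20 can only close once the sensor_7 reading at 09:43:22 has been seen. With the 1 s bound of the DDL, the sensor_6 reading at 09:43:21 is already enough.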

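Windows

Group windows

Group windows are defined with the window(w: GroupWindow) clause and must be given an alias with the as clause.

To group a table by window, reference the window alias in the groupBy clause just like a regular grouping field.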

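Table table = input

// Define a group window with alias w (any GroupWindow works; here a 10-minute event-time tumbling window)

.window(Tumble.over("10.minutes").on("rowtime").as("w"))

// Group by field a and window w

.groupBy("w, a")

.select("a, b.sum");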

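The Table API provides a set of predefined Window classes with specific semantics. These are translated into window operations on the underlying DataStream or DataSet.

Tumbling windows

// A 10-minute tumbling window on event time, aliased as w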

.window(Tumble.over("10.minutes").on("rowtime").as("w"))

// A 10-minute tumbling window on processing time, aliased as w

.window(Tumble.over("10.minutes").on("proctime").as("w"))

// A 10-row tumbling (count) window, ordered by processing time, aliased as w

.window(Tumble.over("10.rows").on("proctime").as("w"))

// SQL: defines a tumbling window; the first argument is the time attribute, the second is the window size

TUMBLE(time_attr, interval) TUMBLE_END(time_attr, interval)
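A tumbling window assigns each timestamp to exactly one window. The sketch below computes the window start with the same arithmetic as Flink's `TimeWindow.getWindowStartWithOffset` for an offset of 0. It then groups the sample readings by `(id, window end)`, roughly what `GROUP BY id, TUMBLE(rt, INTERVAL '10' SECOND)` computes. It is a plain-Java illustration with hypothetical names, not Flink code, and it ignores watermarks and late data.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class TumblingWindowSketch {

    // Start of the tumbling window that contains timestampMs, with windows aligned to epoch 0.
    static long windowStart(long timestampMs, long sizeMs) {
        return timestampMs - (timestampMs + sizeMs) % sizeMs;
    }

    // Groups readings by "id@windowEndMs" and keeps {count, sum of temperatures} per group.
    static Map<String, double[]> aggregate(String[] ids, long[] tsMs, double[] temps, long sizeMs) {
        Map<String, double[]> groups = new LinkedHashMap<>();
        for (int i = 0; i < ids.length; i++) {
            long end = windowStart(tsMs[i], sizeMs) + sizeMs;
            double[] acc = groups.computeIfAbsent(ids[i] + "@" + end, k -> new double[2]);
            acc[0] += 1;        // count
            acc[1] += temps[i]; // sum of temperatures
        }
        return groups;
    }

    public static void main(String[] args) {
        String[] ids = {"sensor_1", "sensor_6", "sensor_7", "sensor_10", "sensor_1", "sensor_1", "sensor_1"};
        long[] tsMs = {1547718199000L, 1547718201000L, 1547718202000L, 1547718205000L,
                       1547718207000L, 1547718209000L, 1547718212000L};
        double[] temps = {35.8, 15.4, 6.7, 38.1, 36.3, 32.8, 37.1};
        for (Map.Entry<String, double[]> e : aggregate(ids, tsMs, temps, 10_000L).entrySet()) {
            double[] acc = e.getValue();
            System.out.println(e.getKey() + " count=" + (long) acc[0] + " avg=" + acc[1] / acc[0]);
        }
    }
}
```

For the sample data, sensor_1's readings at 09:43:27 and 09:43:29 land in the window ending at 09:43:30 (`1547718210000` ms) with a count of 2. This is consistent with the `sensor_1,2,34.55` row in the example's output.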

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.Tumble;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class WindowsAPI {

public static void main(String[] args) throws Exception{

// 1. Create the environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Read the file into a DataStream

DataStream<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

// 3. Convert to SensorReading POJOs

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<SensorReading>(Time.seconds(2)) {

@Override

public long extractTimestamp(SensorReading element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Convert the stream to a table and append an event-time attribute rt

Table dataTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature as temp, rt.rowtime");

// Tumbling Windows

// table API

// Open a 10 s tumbling window on event time, aliased as tw

Table resultTable = dataTable.window(Tumble.over("10.seconds").on("rt").as("tw"))

// Group by id and window tw

.groupBy("id, tw")

// Select id, the count of id, the average temperature, and the window end time

.select("id, id.count, temp.avg, tw.end");

// Register the table as a view

tableEnv.createTemporaryView("sensor", dataTable);

// SQL

// TUMBLE(time_attr, interval) TUMBLE_END(time_attr, interval)

// Defines a tumbling window: the first argument is the time attribute, the second is the window size

Table resultSqlTable = tableEnv.sqlQuery("select id, count(id) as cnt, avg(temp) as avgTemp, tumble_end(rt, interval '10' second) " +

"from sensor group by id, tumble(rt, interval '10' second)");

tableEnv.toAppendStream(resultTable, Row.class).print("result");

tableEnv.toRetractStream(resultSqlTable, Row.class).print("sql");

env.execute();

}

}

---------

result> sensor_1,1,35.8,2019-01-17T09:43:20

result> sensor_6,1,15.4,2019-01-17T09:43:30

result> sensor_1,2,34.55,2019-01-17T09:43:30

result> sensor_10,1,38.1,2019-01-17T09:43:30

result> sensor_7,1,6.7,2019-01-17T09:43:30

result> sensor_1,1,37.1,2019-01-17T09:43:40

sql> (true,sensor_1,1,35.8,2019-01-17T09:43:20)

sql> (true,sensor_6,1,15.4,2019-01-17T09:43:30)

sql> (true,sensor_1,2,34.55,2019-01-17T09:43:30)

sql> (true,sensor_10,1,38.1,2019-01-17T09:43:30)

sql> (true,sensor_7,1,6.7,2019-01-17T09:43:30)

sql> (true,sensor_1,1,37.1,2019-01-17T09:43:40)

Sliding windows

// A 10-minute sliding window with a 5-minute slide on event time, aliased as w

.window(Slide.over("10.minutes").every("5.minutes").on("rowtime").as("w"))

// A 10-minute sliding window with a 5-minute slide on processing time, aliased as w

.window(Slide.over("10.minutes").every("5.minutes").on("proctime").as("w"))

// A 10-row sliding (count) window with a 5-row slide, ordered by processing time, aliased as w

.window(Slide.over("10.rows").every("5.rows").on("proctime").as("w"))

// SQL: defines a sliding window; the first argument is the time attribute, the second is the slide, the third is the window size

HOP(time_attr, interval, interval) HOP_END(time_attr, interval, interval)
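Unlike a tumbling window, a sliding window assigns each timestamp to `size / slide` windows. The sketch below is modelled on the assignment loop of Flink's `SlidingEventTimeWindows` with offset 0. It starts at the latest slide-aligned window start and steps back by the slide while the window still covers the timestamp. The names are hypothetical and the code is illustrative only.

```java
import java.util.ArrayList;
import java.util.List;

public class SlidingWindowSketch {

    // Returns every sliding window [start, end) that contains timestampMs, newest first.
    static List<long[]> assignWindows(long timestampMs, long sizeMs, long slideMs) {
        List<long[]> windows = new ArrayList<>();
        // Latest window start that is aligned to the slide and not after the timestamp.
        long lastStart = timestampMs - (timestampMs + slideMs) % slideMs;
        for (long start = lastStart; start > timestampMs - sizeMs; start -= slideMs) {
            windows.add(new long[] {start, start + sizeMs});
        }
        return windows;
    }

    public static void main(String[] args) {
        // sensor_1 at 1547718199 s, with a 10 s size and a 5 s slide (as in the HOP example).
        for (long[] w : assignWindows(1547718199000L, 10_000L, 5_000L)) {
            System.out.println("[" + w[0] + ", " + w[1] + ")");
        }
        // prints [1547718195000, 1547718205000) then [1547718190000, 1547718200000)
    }
}
```

With a 10 s size and a 5 s slide, the first sensor_1 reading at 09:43:19 falls into two windows, ending at 09:43:20 and 09:43:25. Each reading therefore contributes to two `hop_end` rows.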

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Slide;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class WindowsAPI {

public static void main(String[] args) throws Exception{

// 1. Create the execution environments

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Read the file into a DataStream

DataStream<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

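// 3. Convert each line to a SensorReading POJO, then assign event-time timestamps (seconds * 1000 = ms) and watermarks that tolerate 2 s of out-of-orderness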

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<SensorReading>(Time.seconds(2)) {

@Override

public long extractTimestamp(SensorReading element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Convert the stream to a table and declare rt as the event-time (rowtime) attribute

Table dataTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature as temp, rt.rowtime");

// Sliding Windows

// Table API

// open a 10 s sliding window that slides every 5 s, based on event time, alias tw

Table resultTable = dataTable.window(Slide.over("10.seconds").every("5.seconds").on("rt").as("tw"))

// group by id and the window alias tw

.groupBy("id, tw")

// select id, the count of id, the average temperature, and the window end time

.select("id, id.count, temp.avg, tw.end");

// register the table as a view so that SQL can query it

tableEnv.createTemporaryView("sensor", dataTable);

// SQL

// HOP(time_attr, interval, interval) HOP_END(time_attr, interval, interval)

// define a sliding window: the first argument is the time attribute, the second is the slide interval, the third is the window size

Table resultSqlTable = tableEnv.sqlQuery("select id, count(id) as cnt, avg(temp) as avgTemp, hop_end(rt, interval '5' second,interval '10' second) " +

"from sensor group by id, hop(rt, interval '5' second,interval '10' second)");

tableEnv.toAppendStream(resultTable, Row.class).print("result");

tableEnv.toRetractStream(resultSqlTable, Row.class).print("sql");

env.execute();

}

}

---------

result> sensor_1,1,35.8,2019-01-17T09:43:20

result> sensor_1,1,35.8,2019-01-17T09:43:25

result> sensor_6,1,15.4,2019-01-17T09:43:25

result> sensor_7,1,6.7,2019-01-17T09:43:25

result> sensor_1,2,34.55,2019-01-17T09:43:30

result> sensor_10,1,38.1,2019-01-17T09:43:30

result> sensor_7,1,6.7,2019-01-17T09:43:30

result> sensor_6,1,15.4,2019-01-17T09:43:30

result> sensor_1,3,35.4,2019-01-17T09:43:35

result> sensor_10,1,38.1,2019-01-17T09:43:35

result> sensor_1,1,37.1,2019-01-17T09:43:40

sql> (true,sensor_1,1,35.8,2019-01-17T09:43:20)

sql> (true,sensor_1,1,35.8,2019-01-17T09:43:25)

sql> (true,sensor_6,1,15.4,2019-01-17T09:43:25)

sql> (true,sensor_7,1,6.7,2019-01-17T09:43:25)

sql> (true,sensor_1,2,34.55,2019-01-17T09:43:30)

sql> (true,sensor_10,1,38.1,2019-01-17T09:43:30)

sql> (true,sensor_7,1,6.7,2019-01-17T09:43:30)

sql> (true,sensor_6,1,15.4,2019-01-17T09:43:30)

sql> (true,sensor_1,3,35.4,2019-01-17T09:43:35)

sql> (true,sensor_10,1,38.1,2019-01-17T09:43:35)

sql> (true,sensor_1,1,37.1,2019-01-17T09:43:40)

Session Windows

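// a session closes after a 10-minute gap without data, based on event time, alias w

.window(Session.withGap("10.minutes").on("rowtime").as("w"))

// a session closes after a 10-minute gap without data, based on processing time, alias w

.window(Session.withGap("10.minutes").on("proctime").as("w"))

// SQL: define a session window; the first argument is the time attribute, the second is the session gap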

SESSION(time_attr, interval) SESSION_END(time_attr, interval)
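A session window has no fixed length. For each key, it keeps growing while readings arrive within the gap, and SESSION_END is the last reading's time plus the gap. The following plain-Java sketch applies that rule per id. It is an illustration, not Flink's window-merging code. The readings are reconstructed from the printed session-window output below, with event time encoded as seconds after 09:43:00 (an assumption), and the names are hypothetical. One boundary case is not covered by the sample: a reading that arrives exactly `gap` after the previous one. This sketch extends the session in that case, and that choice is also an assumption.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of SESSION(rt, INTERVAL '10' SECOND); not Flink code.
final class SessionSketch {

    // One reading: sensor id, event time in seconds after 09:43:00 (assumed encoding), temperature.
    static final class Reading {
        final String id;
        final long ts;
        final double temp;

        Reading(String id, long ts, double temp) {
            this.id = id;
            this.ts = ts;
            this.temp = temp;
        }
    }

    // Readings reconstructed from the printed output of the window examples.
    static List<Reading> sample() {
        return Arrays.asList(
                new Reading("sensor_1", 19, 35.8),
                new Reading("sensor_6", 21, 15.4),
                new Reading("sensor_7", 22, 6.7),
                new Reading("sensor_10", 25, 38.1),
                new Reading("sensor_1", 27, 36.3),
                new Reading("sensor_1", 29, 32.8),
                new Reading("sensor_1", 32, 37.1));
    }

    // Returns "id@sessionEnd" -> {count, avg}, where sessionEnd = last reading's time + gap.
    static Map<String, double[]> sessions(List<Reading> readings, long gap) {
        Map<String, List<Reading>> byId = new LinkedHashMap<>();
        for (Reading r : readings) {
            byId.computeIfAbsent(r.id, k -> new ArrayList<>()).add(r);
        }
        Map<String, double[]> result = new LinkedHashMap<>();
        for (Map.Entry<String, List<Reading>> e : byId.entrySet()) {
            List<Reading> rs = new ArrayList<>(e.getValue());
            rs.sort((a, b) -> Long.compare(a.ts, b.ts)); // process each key in event-time order
            long count = 0;
            double sum = 0;
            long last = 0;
            for (Reading r : rs) {
                if (count > 0 && r.ts > last + gap) { // silence longer than gap: close the session
                    result.put(e.getKey() + "@" + (last + gap), new double[] {count, sum / count});
                    count = 0;
                    sum = 0;
                }
                count++;
                sum += r.temp;
                last = r.ts;
            }
            if (count > 0) { // close the still-open last session of this key
                result.put(e.getKey() + "@" + (last + gap), new double[] {count, sum / count});
            }
        }
        return result;
    }

    public static void main(String[] args) {
        sessions(sample(), 10).forEach((key, v) ->
                System.out.println(key + " count=" + (long) v[0] + " avg=" + v[1]));
    }
}
```

On the sample, sessions(sample(), 10) returns four sessions, ending at :31, :32, :35 and :42. This agrees with the session-window output below. sensor_1's readings at :19, :27, :29 and :32 are never more than 10 s apart, so they form a single session of four readings.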

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Session;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class WindowsAPI {

public static void main(String[] args) throws Exception{

// 1. Create the execution environments

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Read the file into a DataStream

DataStream<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

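// 3. Convert each line to a SensorReading POJO, then assign event-time timestamps (seconds * 1000 = ms) and watermarks that tolerate 2 s of out-of-orderness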

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<SensorReading>(Time.seconds(2)) {

@Override

public long extractTimestamp(SensorReading element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Convert the stream to a table and declare rt as the event-time (rowtime) attribute

Table dataTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature as temp, rt.rowtime");

// Session Windows

// Table API

// session window with a 10 s gap, based on event time, alias tw

Table resultTable = dataTable.window(Session.withGap("10.seconds").on("rt").as("tw"))

// group by id and the window alias tw

.groupBy("id, tw")

// select id, the count of id, the average temperature, and the window end time

.select("id, id.count, temp.avg, tw.end");

// register the table as a view so that SQL can query it

tableEnv.createTemporaryView("sensor", dataTable);

// SQL

// SESSION(time_attr, interval) SESSION_END(time_attr, interval)

// define a session window: the first argument is the time attribute, the second is the session gap

Table resultSqlTable = tableEnv.sqlQuery("select id, count(id) as cnt, avg(temp) as avgTemp, session_end(rt,interval '10' second) " +

"from sensor group by id, session(rt, interval '10' second)");

tableEnv.toAppendStream(resultTable, Row.class).print("result");

tableEnv.toRetractStream(resultSqlTable, Row.class).print("sql");

env.execute();

}

}

----------

result> sensor_6,1,15.4,2019-01-17T09:43:31

result> sensor_7,1,6.7,2019-01-17T09:43:32

result> sensor_10,1,38.1,2019-01-17T09:43:35

result> sensor_1,4,35.5,2019-01-17T09:43:42

sql> (true,sensor_6,1,15.4,2019-01-17T09:43:31)

sql> (true,sensor_7,1,6.7,2019-01-17T09:43:32)

sql> (true,sensor_10,1,38.1,2019-01-17T09:43:35)

sql> (true,sensor_1,4,35.5,2019-01-17T09:43:42)

Over Windows

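Over window aggregation comes from standard SQL (the OVER clause) and can be defined in the SELECT clause of a query.

For every input row, an over window aggregation computes an aggregate over a range of neighboring rows, so it emits one result row per input row.

In the Table API, over windows are defined with the window(w: OverWindow*) clause and referenced by their alias in the select() method:

// define an over window with alias w (partitioned by a, ordered by rowtime), then aggregate over it

Table table = input

.window(Over.partitionBy("a").orderBy("rowtime").as("w"))

.select("a, b.sum over w, c.min over w");

The Table API provides the Over class for configuring an over window's properties: the partition key, the ordering time attribute, the preceding range, and the alias.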
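The main difference from a group window is that an over window emits one row per input row. Below is a plain-Java sketch of count/avg OVER (PARTITION BY id ORDER BY rt) with an unbounded preceding range. It sorts by time, keeps a running count and sum per partition, and attaches the current totals to every row. It is an illustration, not Flink code, and it accumulates row by row (ROWS semantics). Under UNBOUNDED_RANGE, rows that share the same rt would be aggregated together, but the sample never has such ties. The sample is reconstructed from the unbounded over-window output below, with times encoded as seconds after 09:43:00 (an assumption). All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of count/avg OVER (PARTITION BY id ORDER BY rt), unbounded; not Flink code.
final class OverSketch {

    // One reading: sensor id, event time in seconds after 09:43:00 (assumed encoding), temperature.
    static final class Reading {
        final String id;
        final long ts;
        final double temp;

        Reading(String id, long ts, double temp) {
            this.id = id;
            this.ts = ts;
            this.temp = temp;
        }
    }

    // One output row per input row: the input's id and time plus the running aggregates.
    static final class OverRow {
        final String id;
        final long ts;
        final long count;
        final double avg;

        OverRow(String id, long ts, long count, double avg) {
            this.id = id;
            this.ts = ts;
            this.count = count;
            this.avg = avg;
        }
    }

    // Readings reconstructed from the printed output of the window examples.
    static List<Reading> sample() {
        return Arrays.asList(
                new Reading("sensor_1", 19, 35.8),
                new Reading("sensor_6", 21, 15.4),
                new Reading("sensor_7", 22, 6.7),
                new Reading("sensor_10", 25, 38.1),
                new Reading("sensor_1", 27, 36.3),
                new Reading("sensor_1", 29, 32.8),
                new Reading("sensor_1", 32, 37.1));
    }

    static List<OverRow> unboundedOver(List<Reading> readings) {
        List<Reading> sorted = new ArrayList<>(readings);
        sorted.sort((a, b) -> Long.compare(a.ts, b.ts)); // ORDER BY rt
        Map<String, double[]> stats = new HashMap<>(); // PARTITION BY id: id -> {count, sum}
        List<OverRow> out = new ArrayList<>();
        for (Reading r : sorted) {
            double[] s = stats.computeIfAbsent(r.id, k -> new double[2]);
            s[0] += 1; // every earlier row of the partition stays in the frame
            s[1] += r.temp;
            out.add(new OverRow(r.id, r.ts, (long) s[0], s[1] / s[0]));
        }
        return out;
    }

    public static void main(String[] args) {
        for (OverRow row : unboundedOver(sample())) {
            System.out.println(row.id + "," + row.ts + "," + row.count + "," + row.avg);
        }
    }
}
```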

Unbounded Over Windows

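// unbounded event-time over window: partitioned by a, ordered by event time, alias w; preceding(UNBOUNDED_RANGE) is the default and may be omitted

.window(Over.partitionBy("a").orderBy("rowtime").preceding(UNBOUNDED_RANGE).as("w"))

// unbounded processing-time over window: partitioned by a, ordered by processing time, alias w; preceding(UNBOUNDED_RANGE) is the default and may be omitted

.window(Over.partitionBy("a").orderBy("proctime").preceding(UNBOUNDED_RANGE).as("w"))

// unbounded event-time row-count over window: partitioned by a, ordered by event time, alias w; preceding(UNBOUNDED_ROW) must be stated, because an omitted preceding defaults to UNBOUNDED_RANGE

.window(Over.partitionBy("a").orderBy("rowtime").preceding(UNBOUNDED_ROW).as("w"))

// unbounded processing-time row-count over window: partitioned by a, ordered by processing time, alias w; preceding(UNBOUNDED_ROW) must be stated, because an omitted preceding defaults to UNBOUNDED_RANGE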

.window(Over.partitionBy("a").orderBy("proctime").preceding(UNBOUNDED_ROW).as("w"))

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Over;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class WindowsAPI {

public static void main(String[] args) throws Exception{

// 1. Create the execution environments

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Read the file into a DataStream

DataStream<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

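// 3. Convert each line to a SensorReading POJO, then assign event-time timestamps (seconds * 1000 = ms) and watermarks that tolerate 2 s of out-of-orderness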

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<SensorReading>(Time.seconds(2)) {

@Override

public long extractTimestamp(SensorReading element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Convert the stream to a table and declare rt as the event-time (rowtime) attribute

Table dataTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature as temp, rt.rowtime");

// Unbounded Over Windows

// Table API

// unbounded over window: preceding is omitted, so it defaults to UNBOUNDED_RANGE

Table overResult = dataTable.window(Over.partitionBy("id").orderBy("rt").as("ow"))

.select("id, rt, id.count over ow, temp.avg over ow");

// register the table as a view so that SQL can query it

tableEnv.createTemporaryView("sensor", dataTable);

// SQL

Table overSqlResult = tableEnv.sqlQuery("select id, rt, count(id) over ow, avg(temp) over ow " +

" from sensor window ow as (partition by id order by rt)");

tableEnv.toAppendStream(overResult, Row.class).print("result");

tableEnv.toRetractStream(overSqlResult, Row.class).print("sql");

env.execute();

}

}

----------

result> sensor_1,2019-01-17T09:43:19,1,35.8

result> sensor_1,2019-01-17T09:43:27,2,36.05

result> sensor_1,2019-01-17T09:43:29,3,34.96666666666666

result> sensor_1,2019-01-17T09:43:32,4,35.5

result> sensor_10,2019-01-17T09:43:25,1,38.1

result> sensor_7,2019-01-17T09:43:22,1,6.7

result> sensor_6,2019-01-17T09:43:21,1,15.4

sql> (true,sensor_1,2019-01-17T09:43:19,1,35.8)

sql> (true,sensor_1,2019-01-17T09:43:27,2,36.05)

sql> (true,sensor_1,2019-01-17T09:43:29,3,34.96666666666666)

sql> (true,sensor_1,2019-01-17T09:43:32,4,35.5)

sql> (true,sensor_10,2019-01-17T09:43:25,1,38.1)

sql> (true,sensor_7,2019-01-17T09:43:22,1,6.7)

sql> (true,sensor_6,2019-01-17T09:43:21,1,15.4)

Bounded Over Windows

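// bounded event-time over window: partitioned by a, ordered by event time, covering the preceding 1 minute, alias w

.window(Over.partitionBy("a").orderBy("rowtime").preceding("1.minutes").as("w"))

// bounded processing-time over window: partitioned by a, ordered by processing time, covering the preceding 1 minute, alias w

.window(Over.partitionBy("a").orderBy("proctime").preceding("1.minutes").as("w"))

// bounded event-time row-count over window: partitioned by a, ordered by event time, covering the current row plus the 10 preceding rows, alias w

.window(Over.partitionBy("a").orderBy("rowtime").preceding("10.rows").as("w"))

// bounded processing-time row-count over window: partitioned by a, ordered by processing time, covering the current row plus the 10 preceding rows, alias w

.window(Over.partitionBy("a").orderBy("proctime").preceding("10.rows").as("w"))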
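preceding("2.rows") in the Table API and ROWS BETWEEN 2 PRECEDING AND CURRENT ROW in SQL both describe a frame of up to three rows: the current row plus the two rows before it in the same partition. The plain-Java sketch below keeps that frame in a deque per id. It is an illustration, not Flink code. The readings are reconstructed from the bounded over-window output below, with event time encoded as seconds after 09:43:00 (an assumption), and the names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration of
// OVER (PARTITION BY id ORDER BY rt ROWS BETWEEN n PRECEDING AND CURRENT ROW); not Flink code.
final class BoundedOverSketch {

    // One reading: sensor id, event time in seconds after 09:43:00 (assumed encoding), temperature.
    static final class Reading {
        final String id;
        final long ts;
        final double temp;

        Reading(String id, long ts, double temp) {
            this.id = id;
            this.ts = ts;
            this.temp = temp;
        }
    }

    // Readings reconstructed from the printed output of the window examples.
    static List<Reading> sample() {
        return Arrays.asList(
                new Reading("sensor_1", 19, 35.8),
                new Reading("sensor_6", 21, 15.4),
                new Reading("sensor_7", 22, 6.7),
                new Reading("sensor_10", 25, 38.1),
                new Reading("sensor_1", 27, 36.3),
                new Reading("sensor_1", 29, 32.8),
                new Reading("sensor_1", 32, 37.1));
    }

    // Returns {count, avg} for each input row, in rt order.
    static List<double[]> rowsPreceding(List<Reading> readings, int preceding) {
        List<Reading> sorted = new ArrayList<>(readings);
        sorted.sort((a, b) -> Long.compare(a.ts, b.ts)); // ORDER BY rt
        Map<String, Deque<Double>> frames = new HashMap<>(); // PARTITION BY id
        List<double[]> out = new ArrayList<>();
        for (Reading r : sorted) {
            Deque<Double> frame = frames.computeIfAbsent(r.id, k -> new ArrayDeque<>());
            frame.addLast(r.temp);
            if (frame.size() > preceding + 1) {
                frame.removeFirst(); // keep the current row plus at most `preceding` earlier rows
            }
            double sum = 0;
            for (double t : frame) {
                sum += t;
            }
            out.add(new double[] {frame.size(), sum / frame.size()});
        }
        return out;
    }

    public static void main(String[] args) {
        for (double[] v : rowsPreceding(sample(), 2)) {
            System.out.println("count=" + (long) v[0] + " avg=" + v[1]);
        }
    }
}
```

With preceding = 2, the sensor_1 row at :32 averages only the readings at :27, :29 and :32 (count 3, average about 35.4). The unbounded example reports count 4 for that same row.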

package com.ts.tabletest;

import com.ts.flink.SensorReading;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.table.api.Over;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import org.apache.flink.types.Row;

public class WindowsAPI {

public static void main(String[] args) throws Exception{

// 1. Create the execution environments

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

// 2. Read the file into a DataStream

DataStream<String> inputStream = env.readTextFile("E:\\Demo\\flink\\src\\com\\ts\\resources\\sensor.txt");

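// 3. Convert each line to a SensorReading POJO, then assign event-time timestamps (seconds * 1000 = ms) and watermarks that tolerate 2 s of out-of-orderness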

DataStream<SensorReading> dataStream = inputStream.map(line -> {

String[] fields = line.split(",");

return new SensorReading(fields[0], new Long(fields[1]), new Double(fields[2]));

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<SensorReading>(Time.seconds(2)) {

@Override

public long extractTimestamp(SensorReading element) {

return element.getTimestamp() * 1000L;

}

});

// 4. Convert the stream to a table and declare rt as the event-time (rowtime) attribute

Table dataTable = tableEnv.fromDataStream(dataStream, "id, timestamp as ts, temperature as temp, rt.rowtime");

// Bounded Over Windows

// Table API

// bounded over window: the current row plus the 2 preceding rows (at most 3 rows per aggregate)

Table overResult = dataTable.window(Over.partitionBy("id").orderBy("rt").preceding("2.rows").as("ow"))

.select("id, rt, id.count over ow, temp.avg over ow");

// register the table as a view so that SQL can query it

tableEnv.createTemporaryView("sensor", dataTable);

// SQL

Table overSqlResult = tableEnv.sqlQuery("select id, rt, count(id) over ow, avg(temp) over ow " +

" from sensor " +

" window ow as (partition by id order by rt rows between 2 preceding and current row)");

tableEnv.toAppendStream(overResult, Row.class).print("result");

tableEnv.toRetractStream(overSqlResult, Row.class).print("sql");

env.execute();

}

}

----------

result> sensor_1,2019-01-17T09:43:19,1,35.8

result> sensor_6,2019-01-17T09:43:21,1,15.4

result> sensor_7,2019-01-17T09:43:22,1,6.7

result> sensor_10,2019-01-17T09:43:25,1,38.1

result> sensor_1,2019-01-17T09:43:27,2,36.05

result> sensor_1,2019-01-17T09:43:29,3,34.96666666666666

result> sensor_1,2019-01-17T09:43:32,3,35.4

sql> (true,sensor_1,2019-01-17T09:43:19,1,35.8)

sql> (true,sensor_6,2019-01-17T09:43:21,1,15.4)

sql> (true,sensor_7,2019-01-17T09:43:22,1,6.7)

sql> (true,sensor_10,2019-01-17T09:43:25,1,38.1)

sql> (true,sensor_1,2019-01-17T09:43:27,2,36.05)

sql> (true,sensor_1,2019-01-17T09:43:29,3,34.96666666666666)

sql> (true,sensor_1,2019-01-17T09:43:32,3,35.4)

Functions

Overview of Flink's built-in functions: https://ci.apache.org/projects/flink/flink-docs-release-1.12/zh/dev/table/functions/systemFunctions.html#comparison-functions

Common functions

Comparison functions

- SQL
  - value1 = value2: returns TRUE if value1 equals value2
  - value1 > value2: returns TRUE if value1 is greater than value2
- Table API
  - ANY1 === ANY2: returns TRUE if ANY1 equals ANY2
  - ANY1 > ANY2: returns TRUE if ANY1 is greater than ANY2

Logical functions

- SQL
  - boolean1 OR boolean2: returns TRUE if boolean1 or boolean2 is TRUE
  - boolean IS FALSE: returns TRUE if boolean is FALSE
  - NOT boolean: returns TRUE if boolean is FALSE, and FALSE if boolean is TRUE
- Table API
  - boolean1 || boolean2: returns TRUE if boolean1 or boolean2 is TRUE
  - boolean.isFalse: returns TRUE if boolean is FALSE
  - !boolean: returns TRUE if boolean is FALSE, and FALSE if boolean is TRUE
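These comparison and logical functions follow SQL's three-valued logic, where a NULL boolean counts as UNKNOWN. According to the Flink 1.12 function reference linked above, value1 = value2 returns UNKNOWN if either operand is NULL. OR returns TRUE as soon as either side is TRUE. IS FALSE never returns UNKNOWN. NOT leaves UNKNOWN as UNKNOWN. The sketch below models UNKNOWN as a Java null. It illustrates those documented rules and is not Flink code; the class name ThreeValuedLogic is hypothetical.

```java
// UNKNOWN is modelled as a Java null; an illustration of the documented rules, not Flink code.
final class ThreeValuedLogic {

    // value1 = value2: UNKNOWN if either operand is NULL.
    static Boolean eq(Object a, Object b) {
        if (a == null || b == null) {
            return null;
        }
        return a.equals(b);
    }

    // boolean1 OR boolean2: TRUE if either side is TRUE, FALSE if both are FALSE, otherwise UNKNOWN.
    static Boolean or(Boolean a, Boolean b) {
        if (Boolean.TRUE.equals(a) || Boolean.TRUE.equals(b)) {
            return Boolean.TRUE;
        }
        if (a == null || b == null) {
            return null;
        }
        return Boolean.FALSE;
    }

    // boolean IS FALSE: never UNKNOWN; TRUE only when the value is FALSE.
    static boolean isFalse(Boolean a) {
        return Boolean.FALSE.equals(a);
    }

    // NOT boolean: flips TRUE and FALSE, and leaves UNKNOWN as UNKNOWN.
    static Boolean not(Boolean a) {
        if (a == null) {
            return null;
        }
        return !a;
    }

    public static void main(String[] args) {
        Boolean unknown = eq(1, null); // comparing with NULL yields UNKNOWN
        System.out.println("1 = NULL         -> " + unknown);
        System.out.println("TRUE OR UNKNOWN  -> " + or(true, unknown));
        System.out.println("FALSE OR UNKNOWN -> " + or(false, unknown));
        System.out.println("UNKNOWN IS FALSE -> " + isFalse(unknown));
        System.out.println("NOT UNKNOWN      -> " + not(unknown));
    }
}
```

This is why a filter such as WHERE NOT (temp > 30) silently drops rows whose temp is NULL: the predicate is UNKNOWN, not TRUE.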

Arithmetic functions

- SQL
  - num1 + num2: the sum of num1 and num2
  - POWER(num1, num2): num1 raised to the power of num2
- Table API
  - num1 + num2: the sum of num1 and num2
  - num1.power(num2): num1 raised to the power of num2

String functions

- SQL
  - string1 || string2: concatenates string1 and string2
  - UPPER(string): converts string to upper case
  - CHAR_LENGTH(string): returns the number of characters in string
- Table API
  - string1 + string2: concatenates string1 and string2
  - string.upperCase(): converts string to upper case
  - string.charLength(): returns the number of characters in string

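Time functions

- SQL
  - DATE string: parses a string of the form "yyyy-MM-dd" into a SQL date
  - TIMESTAMP string: parses a string of the form "yyyy-MM-dd HH:mm:ss[.SSS]" into a SQL timestamp
  - CURRENT_TIME: returns the current SQL time in the UTC time zone
  - INTERVAL string range: parses an interval string of the form "dd hh:mm:ss.fff" into a SQL interval of milliseconds, or of the form "yyyy-mm" into a SQL interval of months (the window queries above use INTERVAL '10' SECOND)
- Table API
  - string.toDate: parses a string of the form "yyyy-MM-dd" into a SQL date
  - string.toTimestamp: parses a string of the form "yyyy-MM-dd HH:mm:ss[.SSS]" into a SQL timestamp
  - currentTime(): returns the current SQL time in the UTC time zone
  - NUMERIC.minutes: creates an interval of milliseconds for NUMERIC minutes
  - NUMERIC.days: creates an interval of milliseconds for NUMERIC days (intervals of months are created with NUMERIC.months or NUMERIC.years)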
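To show what these literal forms denote, the sketch below parses the same textual forms with java.time. Flink's data-type documentation lists java.time.LocalDate and java.time.LocalDateTime as the default Java conversion classes for DATE and TIMESTAMP. The interval helpers are hypothetical and are not Flink's parser. They cover only the "dd hh:mm:ss.fff" (milliseconds) and "yyyy-mm" (months) forms, and the millisecond helper assumes exactly three fractional digits.

```java
import java.time.Duration;
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// java.time illustration of the DATE / TIMESTAMP / INTERVAL literal forms; not Flink code.
final class TimeLiteralSketch {

    // DATE 'yyyy-MM-dd' -> LocalDate (ISO format).
    static LocalDate parseDate(String s) {
        return LocalDate.parse(s);
    }

    // TIMESTAMP 'yyyy-MM-dd HH:mm:ss.SSS' -> LocalDateTime; this sketch requires the .SSS part.
    static LocalDateTime parseTimestamp(String s) {
        return LocalDateTime.parse(s, DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS"));
    }

    // Hypothetical helper: day-time interval "d hh:mm:ss.fff" -> milliseconds.
    static long dayTimeIntervalMillis(String s) {
        String[] dayAndTime = s.trim().split(" ");
        String[] hms = dayAndTime[1].split(":");
        String[] secAndFrac = hms[2].split("\\.");
        Duration d = Duration.ofDays(Long.parseLong(dayAndTime[0]))
                .plusHours(Long.parseLong(hms[0]))
                .plusMinutes(Long.parseLong(hms[1]))
                .plusSeconds(Long.parseLong(secAndFrac[0]));
        if (secAndFrac.length > 1) {
            d = d.plusMillis(Long.parseLong(secAndFrac[1])); // assumes exactly three digits
        }
        return d.toMillis();
    }

    // Hypothetical helper: year-month interval "yyyy-mm" -> number of months.
    static long yearMonthIntervalMonths(String s) {
        String[] parts = s.trim().split("-");
        return Long.parseLong(parts[0]) * 12 + Long.parseLong(parts[1]);
    }

    // Analogue of NUMERIC.minutes: an interval of milliseconds.
    static long minutesToMillis(long n) {
        return Duration.ofMinutes(n).toMillis();
    }

    public static void main(String[] args) {
        System.out.println(parseDate("2019-01-17"));
        System.out.println(parseTimestamp("2019-01-17 09:43:19.000"));
        System.out.println(dayTimeIntervalMillis("1 02:03:04.005"));
        System.out.println(yearMonthIntervalMonths("1-06"));
        System.out.println(minutesToMillis(10));
    }
}
```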

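Aggregate functions

- SQL
  - COUNT(*): returns the number of input rows
  - SUM(expression): returns the sum of expression over all input rows
  - RANK(): returns the rank of a value within its group; tied values share a rank and leave gaps after them
  - ROW_NUMBER(): assigns each row of a window partition a unique, sequential number according to the row order, starting at 1
- Table API
  - FIELD.count: returns the number of input rows for which FIELD is not null
  - FIELD.sum: returns the sum of FIELD over all input rows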
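RANK() and ROW_NUMBER() differ only when values tie. The plain-Java sketch below numbers an already sorted array both ways. It is an illustration rather than Flink code. ROW_NUMBER() gives every row its position. RANK() gives tied values the same rank and then skips ahead. The sample temperatures and the class name RankSketch are hypothetical.

```java
import java.util.Arrays;

// Plain-Java illustration of RANK() vs ROW_NUMBER() over an already sorted partition; not Flink code.
final class RankSketch {

    // ROW_NUMBER(): 1, 2, 3, ... by position, even for tied values.
    static int[] rowNumber(double[] sorted) {
        int[] out = new int[sorted.length];
        for (int i = 0; i < sorted.length; i++) {
            out[i] = i + 1;
        }
        return out;
    }

    // RANK(): tied values share a rank, and the next distinct value jumps to its position (gaps).
    static int[] rank(double[] sorted) {
        int[] out = new int[sorted.length];
        for (int i = 0; i < sorted.length; i++) {
            out[i] = (i > 0 && sorted[i] == sorted[i - 1]) ? out[i - 1] : i + 1;
        }
        return out;
    }

    public static void main(String[] args) {
        double[] temps = {38.1, 36.3, 36.3, 35.8}; // hypothetical values, sorted descending
        System.out.println("ROW_NUMBER: " + Arrays.toString(rowNumber(temps)));
        System.out.println("RANK:       " + Arrays.toString(rank(temps)));
    }
}
```

For {38.1, 36.3, 36.3, 35.8}, the row numbers are 1, 2, 3, 4 and the ranks are 1, 2, 2, 4.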