HCIE-kubernetes(k8s)

1、kubernetes

kubernetes(k和s之间8个字母简称k8s)k8s 容器编排工具。生产环境里面运行成千上万个容器,这么多容器如果用手工管理很麻烦,这候需要一个工具对所有的容器进行编排和管理操作。docker有自己的容器编排工具swarm,安装好docker之后就自带有了swarm工具,无需额外去安装,但是k8s是一个独立的工具,需要单独部署和安装。

理解pod概念

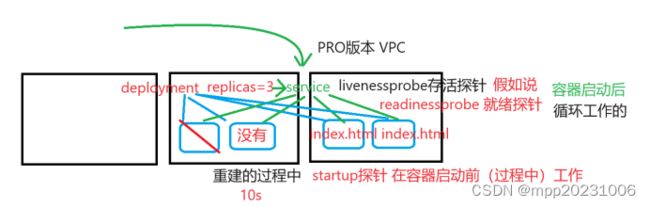

pod是k8s集群管理和调度的最小单元,默认一个POD里面运行一个容器(一个POD也能运行多个容器),把容器封装成POD,再针对POD进行调度,k8s通过控制器deployment来管理POD,控制器deployment里面有个参数replicas副本数,如果在控制器deployment里指定replicas副本数为3,这个控制器会在k8s集群中启动3个一样的POD,有可能分布在不同主机节点上,也可能在同一个主机节点上。若另一台主机上的POD的主机故障了,那运行在这个主机上的POD会消亡,控制器会删除那个故障POD在其它主机节点重新创建这个副本,保证集群有3个POD。

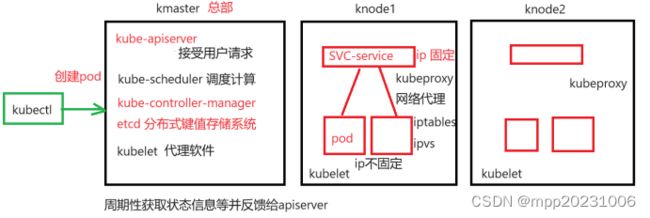

k8s框架体系

Kubernetes是一个用于容器编排和管理的开源平台,它提供了一组核心组件用于自动化容器化应用程序的部署、扩展和管理。下面是一些Kubernetes的基本组件及其作用的介绍:

Master节点:

kube-apiserver:Kubernetes API服务器,提供了Kubernetes的API接口,用于管理和控制整个集群。

kube-scheduler:负责根据资源需求和约束条件,将Pod调度到合适的节点上运行。

kube-controller-manager:包含了一组控制器,用于维护集群的状态和进行自动化的管理操作,如节点控制器、副本控制器等。

etcd:分布式键值存储系统,用于保存集群的配置数据和状态信息。

kubelet代理软件,周期性获取状态信息等并反馈给apiserver

Node节点:

kubelet:运行在每个节点上,负责管理该节点上的容器和Pod,与Master节点进行通信。

kubeproxy:负责为Pod提供网络代理和负载均衡功能,以及处理集群中的网络流量路由。

流量通过SVC对外暴露端口来到SVC,再通过kubeproxy网络代理(默认iptables模式,但实际用ipvs效率高)将流量转发到后端的POD上。

2、K8s集群搭建

Docker Engine 核心组件 Containerd,后将其开源并捐赠给CNCF(云原生计算基金会),作为独立的项目运营。也就是说 Docker Engine 里面是包含 containerd 的,安装 Docker 后,就会有 containerd。containerd 也可以进行单独安装,无需安装 Docker,yum install -y containerd。

K8s早期集成docker,调用docker engine 的containerd 来管理容器的生命周期。K8s为了兼容更多的容器运行时(比如 containerd/CRI-O),创建了一个CRI(Container Runtime Interface)容器运行时接口标准,制定标准的目的是为了实现编排器(如 Kubernetes)和其他不同的容器运行时之间交互操作。但是docker出来的比较早,它不满足这个标准,Docker Engine 本身就是一个完整的技术栈,没有实现(CRI)接口,也不可能为了迎合k8s编排工具指定的 CRI 标准而改变底层架构。k8s引入了一个临时解决方案dockershim来帮助docker通过k8s进行调用,通过dockershim可以让k8s的代理kubelet通过 CRI 来调用 Docker Engine 进行容器的操作。

k8s 在 1.7 的版本中就已经将 containerd 和 kubelet 集成使用了,最终都是要调用 containerd 这个容器运行时。如果没有使用cri-dockerd将Docker Engine 和 k8s 集成,那么docker命令是查不到k8s(crictl)的镜像,反过来也一样。

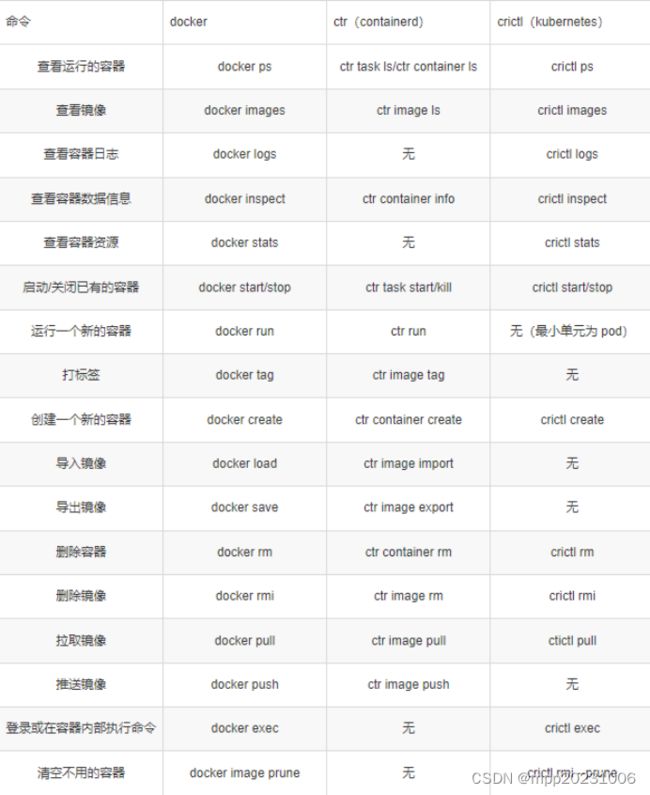

docker(docker):docker 命令行工具,无需单独安装。

ctr(containerd):containerd 命令行工具,无需单独安装,集成containerd,多用于测试或开发。

nerdctl(containerd):containerd 客户端工具,使用效果与 docker 命令的语法一致。需要单独安装。

crictl(kubernetes):遵循 CRI 接口规范的一个命令行工具,通常用它来检查和管理kubelet节点上的容器运行时和镜像。没有tag和push。

系统环境配置

环境准备,使用centos stream 8 克隆3台虚拟机(完整克隆)

master 10.1.1.200 2vcpus/8G master节点 可连通外网(NAT模式)

node1 10.1.1.201 2vcpus/8G node1节点 可连通外网

node2 10.1.1.202 2vcpus/8G node2节点 可连通外网(vcpus至少2个,建议vcpus4个、内存4G、硬盘100G)

完整克隆3台虚拟机,修改3台虚拟机主机名及ip地址。注意:修改ip地址的时候,看清楚网卡名字是什么,我这里是ifcfg-ens160,如果你是VMware17版本,安装的centos stream 8 网卡叫 ens160,如果你是VMware16版本,安装的网卡叫 ens33。

这三台都要修改主机名和ip,hostnamectl set-hostname master

cd /etc/sysconfig/network-scripts/

[root@kmaster network-scripts]# cat ifcfg-ens160

TYPE=Ethernet

BOOTPROTO=none

NAME=ens160

DEVICE=ens160

ONBOOT=yes

IPADDR=10.1.1.200

NETMASK=255.255.255.0

GATEWAY=10.1.1.2

DNS1=10.1.1.2

将Stream8-k8s-v1.27.0.sh脚本上传到三台主机家目录,三台主机都执行脚本。

注意:在脚本里面只需修改网卡名,hostip=$(ifconfig ens160 |grep -w “inet” |awk ‘{print $2}’)

[root@master ~]# chmod +x Stream8-k8s-v1.27.0.sh

[root@master ~]# ./Stream8-k8s-v1.27.0.sh

或者直接使用shell运行脚本[root@master ~]# sh Stream8-k8s-v1.27.0.sh

报错是系统默认从CentOS-Stream-Extras-common.repo下载了,把CentOS-Stream-Extras-common.repo(enabled=0)禁用就好了。

Stream8-k8s-v1.27.0.sh脚本内容如下:

#!/bin/bash

# CentOS stream 8 install kubenetes 1.27.0

# the number of available CPUs 1 is less than the required 2

# k8s 环境要求虚拟cpu数量至少2个

# 使用方法:在所有节点上执行该脚本,所有节点配置完成后,复制第11步语句,单独在master节点上进行集群初始化。

#1 rpm

echo '###00 Checking RPM###'

yum install -y yum-utils vim bash-completion net-tools wget

echo "00 configuration successful ^_^"

#Basic Information

echo '###01 Basic Information###'

hostname=`hostname`

hostip=$(ifconfig ens160 |grep -w "inet" |awk '{print $2}')

echo 'The Hostname is:'$hostname

echo 'The IPAddress is:'$hostip

#2 /etc/hosts

echo '###02 Checking File:/etc/hosts###'

hosts=$(cat /etc/hosts)

result01=$(echo $hosts |grep -w "${hostname}")

if [[ "$result01" != "" ]]

then

echo "Configuration passed ^_^"

else

echo "hostname and ip not set,configuring......"

echo "$hostip $hostname" >> /etc/hosts

echo "configuration successful ^_^"

fi

echo "02 configuration successful ^_^"

#3 firewall & selinux

echo '###03 Checking Firewall and SELinux###'

systemctl stop firewalld

systemctl disable firewalld

se01="SELINUX=disabled"

se02=$(cat /etc/selinux/config |grep -w "^SELINUX")

if [[ "$se01" == "$se02" ]]

then

echo "Configuration passed ^_^"

else

echo "SELinux Not Closed,configuring......"

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

echo "configuration successful ^_^"

fi

echo "03 configuration successful ^_^"

#4 swap

echo '###04 Checking swap###'

swapoff -a

sed -i "s/^.*swap/#&/g" /etc/fstab

echo "04 configuration successful ^_^"

#5 docker-ce

echo '###05 Checking docker###'

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

echo 'list docker-ce versions'

yum list docker-ce --showduplicates | sort -r

yum install -y docker-ce

systemctl start docker

systemctl enable docker

cat <<EOF > /etc/docker/daemon.json

{

"registry-mirrors": ["https://cc2d8woc.mirror.aliyuncs.com"]

}

EOF

systemctl restart docker

echo "05 configuration successful ^_^"

#6 iptables

echo '###06 Checking iptables###'

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf

echo "06 configuration successful ^_^"

#7 cgroup(systemd/cgroupfs)

echo '###07 Checking cgroup###'

containerd config default > /etc/containerd/config.toml

sed -i "s#registry.k8s.io/pause#registry.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

systemctl restart containerd

echo "07 configuration successful ^_^"

#8 kubenetes.repo

echo '###08 Checking repo###'

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

echo "08 configuration successful ^_^"

#9 crictl

echo "Checking crictl"

cat <<EOF > /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 5

debug: false

EOF

echo "09 configuration successful ^_^"

#10 kube1.27.0

echo "Checking kube"

yum install -y kubelet-1.27.0 kubeadm-1.27.0 kubectl-1.27.0 --disableexcludes=kubernetes

systemctl enable --now kubelet

echo "10 configuration successful ^_^"

echo "Congratulations ! The basic configuration has been completed"

#11 Initialize the cluster

# 仅在master主机上做集群初始化

# kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.27.0 --pod-network-cidr=10.244.0.0/16

集群搭建

1、k8s集群初始化,把脚本的第11条复制到master主机运行,仅在master主机上做k8s集群初始化。

kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.27.0 --pod-network-cidr=10.244.0.0/16

2、配置环境变量,k8s集群初始化后仅在master主机执行以下命令

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.1.1.200:6443 --token k5ieso.no0jtanbbgodlta7 \

--discovery-token-ca-cert-hash sha256:418a41208ad32b04f39b5ba70ca4b59084450a65d3c852b8dc2b7f53d462a540

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master ~]# echo 'export KUBECONFIG=/etc/kubernetes/admin.conf' >> /etc/profile

[root@master ~]# source /etc/profile

[root@node1 ~]# kubeadm join 10.1.1.200:6443 --token k5ieso.no0jtanbbgodlta7 --discovery-token-ca-cert-hash sha256:418a41208ad32b04f39b5ba70ca4b59084450a65d3c852b8dc2b7f53d462a540

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[WARNING Hostname]: hostname "node1" could not be reached

[WARNING Hostname]: hostname "node1": lookup node1 on 10.1.1.2:53: no such host

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@master ~]# kubectl get no

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 18m v1.27.0

[root@master ~]# kubectl get no

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 19m v1.27.0

node1 NotReady <none> 38s v1.27.0

node2 NotReady <none> 30s v1.27.0

4、安装calico网络组件(仅master节点),上传tigera-operator-3-26-1.yaml和custom-resources-3-26-1.yaml到家目录

安装calico网络组件前,集群状态为 NotReady,安装后等待一段时间,集群状态将变为 Ready。

查看集群状态[root@master ~]# kubectl get nodes 或 kubectl get no

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 97m v1.27.0

node1 NotReady <none> 78m v1.27.0

node2 NotReady <none> 78m v1.27.0

查看所有命名空间[root@master ~]# kubectl get namespaces 或 kubectl get ns

NAME STATUS AGE

default Active 97m

kube-node-lease Active 97m

kube-public Active 97m

kube-system Active 97m

安装 Tigera Calico operator

[root@master ~]# kubectl create -f tigera-operator-3-26-1.yaml 会多个tigera-operator命名空间

配置 custom-resources.yaml[root@kmaster ~]# vim custom-resources-3-26-1.yaml

更改IP地址池中的 CIDR,和k8s集群初始化的 --pod-network-cidr 参数保持一致(配置文件已做更改)cidr: 10.244.0.0/16

[root@master ~]# kubectl create -f custom-resources-3-26-1.yaml 会多个calico-system命名空间

查看所有命名空间[root@master ~]# kubectl get namespaces 或 kubectl get ns

[root@master ~]# kubectl get namespaces

NAME STATUS AGE

calico-system Active 17m

default Active 119m

kube-node-lease Active 119m

kube-public Active 119m

kube-system Active 119m

tigera-operator Active 18m

要点时间等待,要是安装有问题,如kubectl get ns查看STATUS状态为Terminating终止中,

倒着删除再执行,删除有延迟卡住的话,删除后再重启下所有节点,再执行安装操作

[root@master ~]# kubectl delete -f custom-resources-3-26-1.yaml

[root@master ~]# kubectl delete -f tigera-operator-3-26-1.yaml

动态查看calico容器状态,待全部running后,集群状态变为正常。(网络慢的话此过程可能要几小时)

[root@kmaster ~]# watch kubectl get pods -n calico-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6c677477b7-6tthc 1/1 Running 0 12h

calico-node-cdjl8 1/1 Running 0 12h

calico-node-cxcwb 1/1 Running 0 12h

calico-node-rg75v 1/1 Running 0 12h

calico-typha-69c55766b7-54ct4 1/1 Running 2 (10m ago) 12h

calico-typha-69c55766b7-nxzl9 1/1 Running 2 (10m ago) 12h

csi-node-driver-kdqqx 2/2 Running 0 12h

csi-node-driver-qrjz5 2/2 Running 0 12h

csi-node-driver-wsxx6 2/2 Running 0 12h

再次查看集群状态[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 13h v1.27.0

node1 Ready <none> 13h v1.27.0

node2 Ready <none> 13h v1.27.0

CNI Plugin,CNI网络插件,Calico通过CNI网络插件与kubelet关联,从而实现Pod网络。

Calico Node,Calico节点代理是运行在每个节点上的代理程序,负责管理节点路由信息、策略规则和创建Calico虚拟网络设备。

Calico Controller,Calico网络策略控制器。允许创建"NetworkPolicy"资源对象,并根据资源对象里面对网络策略定义,在对应节点主机上创建针对于Pod流出或流入流量的IPtables规则。

Calico Typha(可选的扩展组件),Typha是Calico的一个扩展组件,用于Calico通过Typha直接与Etcd通信,而不是通过kube-apiserver。通常当K8S的规模超过50个节点的时候推荐启用它,以降低kube-apiserver的负载。每个Pod/calico-typha可承载100~200个Calico节点的连接请求,最多不要超过200个。

自动补全,自动补全安装包在执行脚本时已安装

[root@master ~]# rpm -qa |grep bash-completion

bash-completion-2.7-5.el8.noarch

[root@master ~]# vim /etc/profile

配置文件第二行添加

source <(kubectl completion bash)

[root@master ~]# source /etc/profile

metrics-service,通过kubectl命令查询节点的cpu和内存的使用率

[root@master ~]# kubectl top nodes

error: Metrics API not available

想通过top命令查询节点的cpu和内存的使用率,必须依靠一个插件:metrics-service

下载yaml文件,官方托管地址https://github.com/kubernetes-sigs/metrics-server

安装metrics-service组件,但是默认情况下,网路问题,导致镜像无法拉取

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

国外网络不稳定建议下载到本地再上传,所以单独把components.yaml 下载过来,进行修改:改两行参数

- --kubelet-insecure-tls

image: registry.cn-hangzhou.aliyuncs.com/cloudcs/metrics-server:v0.6.2

修改后内容:

- args:

- --kubelet-insecure-tls

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --kubelet-use-node-status-port

- --metric-resolution=15s

image: registry.cn-hangzhou.aliyuncs.com/cloudcs/metrics-server:v0.6.2

将components.yaml上传到master家目录,[root@master ~]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

[root@master ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 129m 3% 1338Mi 35%

node1 59m 1% 726Mi 19%

node2 74m 1% 692Mi 18%

3、POD管理操作

了解namespace及相关操作:

一个k8s集群有多个命名空间,一个命名空间有多个pod,默认一个POD里面运行一个容器(一个POD也能运行多个容器),POD通过namespace命名空间进行隔离的,POD运行在命名空间里面。

查看所有命名空间[root@master ~]# kubectl get namespaces 或 kubectl get ns

查看当前命名空间,kubectl config get-contexts,命名空间是空的,代表default

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* kubernetes-admin@kubernetes kubernetes kubernetes-admin

查看当前命名空间的pod,kubectl get pod

查看指定命名空间pod,管理员权限可查看其它命名空间的pod,默认执行的是/etc/kubernetes/admin.conf是管理员权限,可用-n查看其它命名空间的pod

kubectl get pod -n tigera-operator

kubectl get pod -n calico-system

kubectl get pod -n kube-system

切换命名空间

kubectl config set-context --current --namespace kube-system

kubectl config set-context --current --namespace default

创建命名空间,[root@master ~]# kubectl create ns ns1

这样的话命令比较麻烦,所以这里提供一个小脚本,kubens,把它传到/bin 下面,并进行授权操作 chmod +x /bin/kubens,之后通过kubens脚本操作即可。kubens内容如下:

#!/usr/bin/env bash

#

# kubenx(1) is a utility to switch between Kubernetes namespaces.

# Copyright 2017 Google Inc.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

[[ -n $DEBUG ]] && set -x

set -eou pipefail

IFS=$'\n\t'

KUBENS_DIR="${HOME}/.kube/kubens"

usage() {

cat <<"EOF"

USAGE:

kubens : list the namespaces in the current context

kubens : change the active namespace of current context

kubens - : switch to the previous namespace in this context

kubens -h,--help : show this message

EOF

exit 1

}

current_namespace() {

local cur_ctx

cur_ctx="$(current_context)"

ns="$(kubectl config view -o=jsonpath="{.contexts[?(@.name==\"${cur_ctx}\")].context.namespace}")"

if [[ -z "${ns}" ]]; then

echo "default"

else

echo "${ns}"

fi

}

current_context() {

kubectl config view -o=jsonpath='{.current-context}'

}

get_namespaces() {

kubectl get namespaces -o=jsonpath='{range .items[*].metadata.name}{@}{"\n"}{end}'

}

escape_context_name() {

echo "${1//\//-}"

}

namespace_file() {

local ctx="$(escape_context_name "${1}")"

echo "${KUBENS_DIR}/${ctx}"

}

read_namespace() {

local f

f="$(namespace_file "${1}")"

[[ -f "${f}" ]] && cat "${f}"

return 0

}

save_namespace() {

mkdir -p "${KUBENS_DIR}"

local f saved

f="$(namespace_file "${1}")"

saved="$(read_namespace "${1}")"

if [[ "${saved}" != "${2}" ]]; then

printf %s "${2}" > "${f}"

fi

}

switch_namespace() {

local ctx="${1}"

kubectl config set-context "${ctx}" --namespace="${2}"

echo "Active namespace is \"${2}\".">&2

}

set_namespace() {

local ctx prev

ctx="$(current_context)"

prev="$(current_namespace)"

if grep -q ^"${1}"\$ <(get_namespaces); then

switch_namespace "${ctx}" "${1}"

if [[ "${prev}" != "${1}" ]]; then

save_namespace "${ctx}" "${prev}"

fi

else

echo "error: no namespace exists with name \"${1}\".">&2

exit 1

fi

}

list_namespaces() {

local yellow darkbg normal

yellow=$(tput setaf 3)

darkbg=$(tput setab 0)

normal=$(tput sgr0)

local cur_ctx_fg cur_ctx_bg

cur_ctx_fg=${KUBECTX_CURRENT_FGCOLOR:-$yellow}

cur_ctx_bg=${KUBECTX_CURRENT_BGCOLOR:-$darkbg}

local cur ns_list

cur="$(current_namespace)"

ns_list=$(get_namespaces)

for c in $ns_list; do

if [[ -t 1 && -z "${NO_COLOR:-}" && "${c}" = "${cur}" ]]; then

echo "${cur_ctx_bg}${cur_ctx_fg}${c}${normal}"

else

echo "${c}"

fi

done

}

swap_namespace() {

local ctx ns

ctx="$(current_context)"

ns="$(read_namespace "${ctx}")"

if [[ -z "${ns}" ]]; then

echo "error: No previous namespace found for current context." >&2

exit 1

fi

set_namespace "${ns}"

}

main() {

if [[ "$#" -eq 0 ]]; then

list_namespaces

elif [[ "$#" -eq 1 ]]; then

if [[ "${1}" == '-h' || "${1}" == '--help' ]]; then

usage

elif [[ "${1}" == "-" ]]; then

swap_namespace

elif [[ "${1}" =~ ^-(.*) ]]; then

echo "error: unrecognized flag \"${1}\"" >&2

usage

elif [[ "${1}" =~ (.+)=(.+) ]]; then

alias_context "${BASH_REMATCH[2]}" "${BASH_REMATCH[1]}"

else

set_namespace "${1}"

fi

else

echo "error: too many flags" >&2

usage

fi

}

main "$@"

查看当前所有的命名空间,并高亮显示目前在哪个命名空间里面,kubens

切换命名空间,[root@master ~]# kubens kube-system

Context "kubernetes-admin@kubernetes" modified.

Active namespace is "kube-system".

[root@master ~]# kubens default

Context "kubernetes-admin@kubernetes" modified.

Active namespace is "default".

pod如何创建:

在k8s集群里面,k8s调度的最小单位 pod,pod里面跑容器(containerd)。K8s调用containerd,1、可以单独安装containerd, 2、可以安装docker,docker内部包含了containerd,这里采用第二种,dockershim 这个临时过渡方案已经在k8s 1.24版本里面删除了,所以是两套系统了,那么docker命令是查不到k8s(crictl)的镜像,反过来也一样。

docker自定义镜像后push推送到仓库,再k8s从仓库拉下来用,制作镜像都是通过dockerfile的,k8s重点是对容器的管理而不是制作镜像。

创建pod,有2种方法:命令行创建、编写yaml文件(推荐)

求帮助,[root@master ~]# kubectl run --help

命令行创建一个pod,会先下载镜像再创建pod

[root@master ~]# kubectl run nginx --image=nginx

pod/nginx created

查看当前命名空间的pod[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 5s

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 2m2s

查询pod的详细信息[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 2m18s 10.244.166.131 node1 <none> <none>

kubectl run nginx2 --image nginx --image后面可以不用等号=

查询pod的详细信息[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 24m 10.244.166.131 node1 <none> <none>

nginx2 1/1 Running 0 84s 10.244.104.4 node2 <none> <none>

发现pod运行在哪个节点,其镜像就在哪个节点,可通过crictl images查得镜像。

[root@master ~]# kubectl describe pod pod1 查看pod描述信息,查看详细信息可用于排查

[root@master ~]# kubectl describe nodes node1 查看node节点描述信息

[root@master ~]# kubectl run pod2 --image nginx --image-pull-policy IfNotPresent

镜像的下载策略

Always:它每次都会联网检查最新的镜像,不管你本地有没有,都会到互联网下载。

Never:它只会使用本地镜像,从不下载

IfNotPresent:它如果检测本地没有镜像,才会联网下载。

编写yaml文件方式,--dry-run=client后面必须带等号=,不能空格

重定向输出到pod3.yaml [root@master ~]# kubectl run pod3 --image nginx --dry-run=client -o yaml > pod3.yaml

[root@master ~]# cat pod3.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod3

name: pod3

spec:

containers:

- image: nginx

name: pod3

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f pod3.yaml

pod/pod3 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 33m 10.244.166.131 node1 <none> <none>

nginx2 1/1 Running 0 10m 10.244.104.4 node2 <none> <none>

pod3 1/1 Running 0 72s 10.244.166.132 node1 <none> <none>

删除pod

[root@master ~]# kubectl delete pods nginx

pod "nginx" deleted

[root@master ~]# kubectl delete pod nginx2

pod "nginx2" deleted

[root@master ~]# kubectl delete pod/pod1

pod "pod1" deleted

[root@master ~]# kubectl delete pods/pod2

pod "pod2" deleted

[root@master ~]# kubectl delete -f pod3.yaml 执行文件删除pod(不是删除文件)

pod "pod3" deleted

镜像下载策略imagePullPolicy,当镜像标签是latest时,默认策略是Always;当镜像标签是自定义时默认策略是IfNotPresent,–image-pull-policy Never 对应yaml文件中 imagePullPolicy: Never

[root@master ~]# kubectl run pod2 --image nginx --image-pull-policy Never --dry-run=client -o yaml > pod2.yaml

Never:直接使用本地镜像(已经下载好了),不会联网去下载,那如果本地没有,则创建失败。

IfNotPresent:如果本地有,则使用本地的,如果本地没有,则联网下载。

Always:不管本地有没有,每一次都联网下载。

[root@master ~]# cat pod2.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod2

name: pod2

spec:

containers:

- image: nginx

imagePullPolicy: Never

name: pod2

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

yaml语法格式:

1.必须通过空格进行缩进,不能使用tab

2.缩进几个空格没有要求,但是每一级标题必须左对齐

一个pod多个容器,pod4中运行c1和c2容器

[root@master ~]# vim pod4.yaml

[root@master ~]# cat pod4.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod4

name: pod4

spec:

containers:

- image: nginx

imagePullPolicy: IfNotPresent

args:

- sleep

- "3600"

name: c1

resources: {}

- image: nginx

imagePullPolicy: IfNotPresent

name: c2

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f pod4.yaml

pod/pod4 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod4 2/2 Running 0 20s 10.244.166.135 node1 <none> <none>

[root@master ~]# kubectl exec -ti pod4 /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

Defaulted container "c1" out of: c1, c2

root@pod4:/# exit

exit

[root@master ~]# kubectl exec -ti pod4 -- bash 标准的,-- bash 会告诉现在默认进入哪个容器

Defaulted container "c1" out of: c1, c2

root@pod4:/# exit

exit

[root@master ~]# kubectl exec -ti pod4 -c c2 -- bash -c参数指定进入哪个容器

root@pod4:/# ls

bin boot dev docker-entrypoint.d docker-entrypoint.sh etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var

容器重启策略 restartPolicy(默认Always)

Always:不管什么情况下出现错误或退出,都会一直重启

Never:不管什么情况下出现错误或退出,都不会重启

OnFailure:如果是正常命令执行完毕正常退出,不重启;如果非正常退出或错误,会重启

Always:不管什么情况下出现错误或退出,都会一直重启

[root@master ~]# cat pod2.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod2

name: pod2

spec:

containers:

- image: nginx

imagePullPolicy: Never

args:

- sleep

- "10"

name: pod2

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f pod2.yaml

pod/pod2 created

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod2 0/1 CrashLoopBackOff 4 (32s ago) 3m3s 10.244.166.141 node1 <none> <none>

Never:不管什么情况下出现错误或退出,都不会重启

[root@master ~]# cat pod2.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod2

name: pod2

spec:

containers:

- image: nginx

imagePullPolicy: Never

args:

- sleep

- "10"

name: pod2

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Never

status: {}

[root@master ~]# kubectl apply -f pod2.yaml

pod/pod2 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod2 0/1 Completed 0 15s 10.244.166.137 node1 <none> <none>

OnFailure:如果是正常命令执行完毕退出,不重启;如果非正常退出,会重启

OnFailure 正常退出

[root@master ~]# cat pod2.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod2

name: pod2

spec:

containers:

- image: nginx

imagePullPolicy: Never

args:

- sleep

- "10"

name: pod2

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: OnFailure

status: {}

[root@master ~]# kubectl apply -f pod2.yaml

pod/pod2 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod2 0/1 Completed 0 21s 10.244.166.138 node1 <none> <none>

OnFailure 非正常退出,会反复重启

sleeperror命令错误,非正常退出,会反复重启CrashLoopBackOff

[root@master ~]# cat pod2.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod2

name: pod2

spec:

containers:

- image: nginx

imagePullPolicy: Never

args:

- sleeperror

- "10"

name: pod2

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: OnFailure

status: {}

[root@master ~]# kubectl apply -f pod2.yaml

pod/pod2 created

[root@master ~]# kubectl get pod -w 是kubectl get pod --watch简写 监测创建过程的动态更新

NAME READY STATUS RESTARTS AGE

pod2 0/1 CrashLoopBackOff 1 (8s ago) 9s

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod2 0/1 CrashLoopBackOff 6 (4m46s ago) 10m 10.244.166.139 node1 <none> <none>

标签label,标签分为主机标签和pod标签

查看主机标签,[root@master ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master Ready control-plane 19h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

node1 Ready <none> 19h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux

node2 Ready <none> 19h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux

为主机节点添加标签,[root@master ~]# kubectl label nodes node2 disk=ssd

node/node2 labeled

[root@master ~]# kubectl get nodes node2 --show-labels

NAME STATUS ROLES AGE VERSION LABELS

node2 Ready <none> 19h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disk=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux

注意:标签都是键值对,以等号=作为键值的分割。

disk=ssd aaa/bbb=ccc aaa.bbb/ccc=ddd

为主机删除标签,[root@master ~]# kubectl label nodes node2 disk-

node/node2 unlabeled

[root@master ~]# kubectl get nodes node2 --show-labels

NAME STATUS ROLES AGE VERSION LABELS

node2 Ready <none> 19h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux

为pod添加标签,pod默认有个run标签

[root@master ~]# cat pod1.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod1

aaa: bbb

ccc: memeda

name: pod1

spec:

containers:

- image: nginx

name: pod1

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f pod1.yaml

pod/pod1 created

查看pod标签[root@master ~]# kubectl get pod pod1 --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod1 1/1 Running 0 18s aaa=bbb,ccc=memeda,run=pod1

命令行给pod加标签,[root@master ~]# kubectl label pod pod1 abc=hehehe

pod/pod1 labeled

[root@master ~]# kubectl get pod pod1 --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod1 1/1 Running 0 7m19s aaa=bbb,abc=hehehe,ccc=memeda,run=pod1

为pod删除标签,[root@master ~]# kubectl label pod pod1 abc-

pod/pod1 unlabeled

[root@master ~]# kubectl label pod pod1 aaa-

pod/pod1 unlabeled

[root@master ~]# kubectl label pod pod1 ccc-

pod/pod1 unlabeled

[root@master ~]# kubectl get pod pod1 --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod1 1/1 Running 0 8m50s run=pod1

主机标签,让pod选择对应主机标签进行调度的(指定pod运行在哪个节点上)。pod标签,匹配控制器进行pod管理的。

错误理解:主机定义了一个标签disk=ssd ,pod也定义一个标签 disk=ssd,未来这个pod 一定会发放到 disk=ssd的主机上。

pod如果想要发放到对应的带标签的主机上,不是通过默认的labels标签匹配的,而是通过pod定义的一个nodeSelector选择器来匹配的。也就是说pod如果想要匹配主机标签,是要通过pod自身的 nodeSelector 选择器进行匹配的,不是通过pod自己的labels去匹配的,自己的labels更多的是为了给控制器deployment去匹配使用的。

控制器deployment通过pod的labels标签去管理pod,调度器scheduler决定pod是发放到node1主机还是node2主机。

定义pod在指定主机上运行,nodeSelector

[root@master ~]# kubectl label nodes node2 disk=ssd

node/node2 labeled

[root@master ~]# kubectl get nodes node2 --show-labels

NAME STATUS ROLES AGE VERSION LABELS

node2 Ready <none> 20h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disk=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux

[root@master ~]# cat pod1.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod1

aaa: bbb

ccc: memeda

name: pod1

spec:

nodeSelector:

disk: ssd

containers:

- image: nginx

name: pod1

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f pod1.yaml

pod/pod1 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod1 1/1 Running 0 7s 10.244.104.7 node2 <none> <none>

如果在pod里面定义了一个不存在的主机标签,直接pending挂起

[root@master ~]# cat pod1.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: pod1

aaa: bbb

ccc: memeda

name: pod1

spec:

nodeSelector:

disk: ssdaaa

containers:

- image: nginx

name: pod1

resources: {}

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f pod1.yaml

pod/pod1 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod1 0/1 Pending 0 12s <none> <none> <none> <none>

特殊标签,管理角色名称的,角色是通过一个特殊标签来管理的

master节点的control-plane角色是这个特殊标签node-role.kubernetes.io/control-plane=

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 20h v1.27.0

node1 Ready <none> 20h v1.27.0

node2 Ready <none> 20h v1.27.0

[root@master ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master Ready control-plane 20h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=

node1 Ready <none> 20h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux

node2 Ready <none> 20h v1.27.0 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disk=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux

把角色删除[root@master ~]# kubectl label nodes master node-role.kubernetes.io/control-plane-

node/master unlabeled

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready <none> 20h v1.27.0

node1 Ready <none> 20h v1.27.0

node2 Ready <none> 20h v1.27.0

添加自定义角色,[root@master ~]# kubectl label nodes master node-role.kubernetes.io/master=

node/master labeled

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 20h v1.27.0

node1 Ready <none> 20h v1.27.0

node2 Ready <none> 20h v1.27.0

[root@master ~]# kubectl label nodes node1 node-role.kubernetes.io/node1=

node/node1 labeled

[root@master ~]# kubectl label nodes node2 node-role.kubernetes.io/node2=

node/node2 labeled

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 20h v1.27.0

node1 Ready node1 20h v1.27.0

node2 Ready node2 20h v1.27.0

4、POD调度管理

cordon告警警戒

drain 包含了两个动作(cordon 告警 evicted 驱逐)

taint 污点

当创建一个pod的时候,会根据scheduler调度算法,分布在不同的节点上。

cordon(节点维护,临时拉警戒线,新的pod不可以调度到其上面),一旦对某个节点执行了cordon操作,就意味着,新的pod不可以调度到其上面,原来该节点运行的pod不受影响。SchedulingDisabled禁止调度

添加警戒cordon,[root@master ~]# kubectl cordon node1

node/node1 cordoned

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 26h v1.27.0

node1 Ready,SchedulingDisabled node1 25h v1.27.0

node2 Ready node2 25h v1.27.0

[root@master ~]# kubectl apply -f pod3.yaml

pod/pod3 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod3 1/1 Running 0 29s 10.244.104.8 node2 <none> <none>

取消警戒uncordon,[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 26h v1.27.0

node1 Ready,SchedulingDisabled node1 26h v1.27.0

node2 Ready node2 26h v1.27.0

[root@master ~]# kubectl uncordon node1

node/node1 uncordoned

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 26h v1.27.0

node1 Ready node1 26h v1.27.0

node2 Ready node2 26h v1.27.0

[root@master ~]# kubectl apply -f pod2.yaml

pod/pod2 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod2 0/1 CrashLoopBackOff 2 (16s ago) 65s 10.244.166.142 node1 <none> <none>

pod3 1/1 Running 0 5m51s 10.244.104.8 node2 <none> <none>

drain 包含了两个动作(cordon 告警 evicted 驱逐),新的容器(pod)不可以调度到其上面,把原来该节点运行的容器删除,再在其它节点上创建该容器。(驱逐不是迁移,而是删除原来的重新创建)

[root@master ~]# kubectl run pod5 --image nginx --dry-run=client -o yaml > pod5.yaml

[root@master ~]# kubectl apply -f pod5.yaml

pod/pod5 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod5 1/1 Running 0 45s 10.244.166.143 node1 <none> <none>

[root@master ~]# kubectl drain node1

node/node1 cordoned

error: unable to drain node "node1" due to error:[cannot delete DaemonSet-managed Pods (use --ignore-daemonsets to ignore): calico-system/calico-node-cdjl8, calico-system/csi-node-driver-kdqqx, kube-system/kube-proxy-dwgth, cannot delete Pods declare no controller (use --force to override): default/pod5], continuing command...

There are pending nodes to be drained:

node1

cannot delete DaemonSet-managed Pods (use --ignore-daemonsets to ignore): calico-system/calico-node-cdjl8, calico-system/csi-node-driver-kdqqx, kube-system/kube-proxy-dwgth

cannot delete Pods declare no controller (use --force to override): default/pod5

[root@master ~]# kubectl drain node1 --ignore-daemonsets --force

node/node1 already cordoned

Warning: ignoring DaemonSet-managed Pods: calico-system/calico-node-cdjl8, calico-system/csi-node-driver-kdqqx, kube-system/kube-proxy-dwgth; deleting Pods that declare no controller: default/pod5

evicting pod default/pod5

evicting pod calico-system/calico-typha-69c55766b7-54ct4

evicting pod tigera-operator/tigera-operator-5f4668786-l7kgw

evicting pod calico-apiserver/calico-apiserver-7f679fc855-g58ph

pod/tigera-operator-5f4668786-l7kgw evicted

pod/calico-typha-69c55766b7-54ct4 evicted

pod/calico-apiserver-7f679fc855-g58ph evicted

pod/pod5 evicted

node/node1 drained

[root@master ~]# kubectl get pod

No resources found in default namespace.

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 27h v1.27.0

node1 Ready,SchedulingDisabled node1 26h v1.27.0

node2 Ready node2 26h v1.27.0

[root@master ~]# kubectl uncordon node1 解除警戒

node/node1 uncordoned

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 27h v1.27.0

node1 Ready node1 26h v1.27.0

node2 Ready node2 26h v1.27.0

真正的体现驱逐的概念,可以使用deployment 控制器来控制pod。

[root@master ~]# kubectl create deployment web --image nginx --dry-run=client -o yaml > web.yaml

[root@master ~]# vim web.yaml 副本数改为5(replicas: 5),直接使用本地镜像(imagePullPolicy: Never)

[root@master ~]# cat web.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

creationTimestamp: null

labels:

app: web

name: web

spec:

replicas: 5

selector:

matchLabels:

app: web

strategy: {}

template:

metadata:

creationTimestamp: null

labels:

app: web

spec:

containers:

- image: nginx

imagePullPolicy: Never

name: nginx

resources: {}

status: {}

[root@master ~]# kubectl apply -f web.yaml

deployment.apps/web created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-74b7d5df6b-2dps5 1/1 Running 0 11s 10.244.166.146 node1 <none> <none>

web-74b7d5df6b-7bv9n 1/1 Running 0 11s 10.244.166.145 node1 <none> <none>

web-74b7d5df6b-7mc2k 1/1 Running 0 11s 10.244.104.10 node2 <none> <none>

web-74b7d5df6b-9hvfl 1/1 Running 0 11s 10.244.166.144 node1 <none> <none>

web-74b7d5df6b-h7cns 1/1 Running 0 11s 10.244.104.9 node2 <none> <none>

[root@master ~]# kubectl drain node1 --ignore-daemonsets

node/node1 cordoned

Warning: ignoring DaemonSet-managed Pods: calico-system/calico-node-cdjl8, calico-system/csi-no de-driver-kdqqx, kube-system/kube-proxy-dwgth

evicting pod default/web-74b7d5df6b-9hvfl

evicting pod calico-system/calico-typha-69c55766b7-25m7j

evicting pod default/web-74b7d5df6b-2dps5

evicting pod default/web-74b7d5df6b-7bv9n

pod/calico-typha-69c55766b7-25m7j evicted

pod/web-74b7d5df6b-7bv9n evicted

pod/web-74b7d5df6b-9hvfl evicted

pod/web-74b7d5df6b-2dps5 evicted

node/node1 drained

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-74b7d5df6b-7jnxb 1/1 Running 0 4m30s 10.244.104.13 node2 <none> <none>

web-74b7d5df6b-7mc2k 1/1 Running 0 5m14s 10.244.104.10 node2 <none> <none>

web-74b7d5df6b-8zztw 1/1 Running 0 4m30s 10.244.104.12 node2 <none> <none>

web-74b7d5df6b-9s57p 1/1 Running 0 4m30s 10.244.104.11 node2 <none> <none>

web-74b7d5df6b-h7cns 1/1 Running 0 5m14s 10.244.104.9 node2 <none> <none>

[root@master ~]# kubectl uncordon node1 解除警戒

node/node1 uncordoned

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 27h v1.27.0

node1 Ready node1 27h v1.27.0

node2 Ready node2 27h v1.27.0

taint 污点

不管pod如何调度,都不会自动调度到master节点上,为什么呢?因为master节点上默认有污点。

[root@master ~]# kubectl describe nodes master |grep -i taint

Taints: node-role.kubernetes.io/control-plane:NoSchedule

[root@master ~]# kubectl describe nodes node1 |grep -i taint

Taints: <none>

[root@master ~]# kubectl describe nodes node2 |grep -i taint

Taints: <none>

taint 污点和cordon警戒区别在哪?都是不让调度。cordon警戒了,新的pod是不能够在该节点上创建的,而taint污点,可以通过设置toleration 容忍度,来把对应的pod调度到该节点上。

增加污点,:NoSchedule这个是固定搭配

[root@master ~]# kubectl taint node node2 aaa=bbb:NoSchedule

node/node2 tainted

[root@master ~]# kubectl describe nodes node2 |grep -i taint

Taints: aaa=bbb:NoSchedule

[root@master ~]# kubectl delete -f web.yaml

deployment.apps "web" deleted

[root@master ~]# kubectl get pod -o wide

No resources found in default namespace.

[root@master ~]# kubectl apply -f web.yaml 有污点的节点node2不会运行pod

deployment.apps/web created

根据实验得出,5个pod都会运行在node1上,因为node2上存在污点了。

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-74b7d5df6b-6ln6s 1/1 Running 0 10s 10.244.166.150 node1 <none> <none>

web-74b7d5df6b-8crhm 1/1 Running 0 10s 10.244.166.147 node1 <none> <none>

web-74b7d5df6b-d8w6q 1/1 Running 0 10s 10.244.166.148 node1 <none> <none>

web-74b7d5df6b-ngk5f 1/1 Running 0 10s 10.244.166.149 node1 <none> <none>

web-74b7d5df6b-sxzb9 1/1 Running 0 10s 10.244.166.151 node1 <none> <none>

删除污点,:NoSchedule-

[root@master ~]# kubectl taint node node2 aaa=bbb:NoSchedule-

node/node2 untainted

[root@master ~]# kubectl describe nodes node2 |grep -i taint

Taints: <none>

[root@master ~]# kubectl delete -f web.yaml

deployment.apps "web" deleted

[root@master ~]# kubectl apply -f web.yaml

deployment.apps/web created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-74b7d5df6b-g29qk 1/1 Running 0 4s 10.244.104.14 node2 <none> <none>

web-74b7d5df6b-h7x9w 1/1 Running 0 4s 10.244.166.154 node1 <none> <none>

web-74b7d5df6b-n9k6c 1/1 Running 0 4s 10.244.104.15 node2 <none> <none>

web-74b7d5df6b-pwrgt 1/1 Running 0 4s 10.244.166.153 node1 <none> <none>

web-74b7d5df6b-tt9st 1/1 Running 0 4s 10.244.166.152 node1 <none> <none>

容忍度 toleration

虽然node2节点有污点,但也可以进行分配pod,在pod里面加上容忍度即可。这里容忍度是污点等于什么就行(operator: “Equal”),看官网(k8s官网有中文)

[root@master ~]# kubectl delete -f web.yaml

deployment.apps "web" deleted

[root@master ~]# kubectl taint node node2 aaa=bbb:NoSchedule

node/node2 tainted

[root@master ~]# kubectl describe nodes node2 |grep -i taint

Taints: aaa=bbb:NoSchedule

[root@master ~]# vim web.yaml 容忍污点是key: "aaa"和value: "bbb"就行(operator: "Equal")

[root@master ~]# cat web.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

creationTimestamp: null

labels:

app: web

name: web

spec:

replicas: 5

selector:

matchLabels:

app: web

strategy: {}

template:

metadata:

creationTimestamp: null

labels:

app: web

spec:

containers:

- image: nginx

imagePullPolicy: Never

name: nginx

resources: {}

tolerations:

- key: "aaa"

operator: "Equal"

value: "bbb"

effect: "NoSchedule"

status: {}

[root@master ~]# kubectl apply -f web.yaml

deployment.apps/web created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-6bcf86dff4-68vwc 1/1 Running 0 7s 10.244.166.159 node1 <none> <none>

web-6bcf86dff4-9ntmd 1/1 Running 0 7s 10.244.104.18 node2 <none> <none>

web-6bcf86dff4-bt2d4 1/1 Running 0 7s 10.244.104.19 node2 <none> <none>

web-6bcf86dff4-ls89g 1/1 Running 0 7s 10.244.166.158 node1 <none> <none>

web-6bcf86dff4-wxbwj 1/1 Running 0 7s 10.244.166.160 node1 <none> <none>

5、存储管理

存储volume,docker默认情况下,数据保存在容器层,一旦删除容器,数据也随之删除。

在k8s环境里,pod运行容器,之前也没有指定存储,当删除pod的时候,之前在pod里面写入的数据就没有了。

本地存储:emptyDir临时、hostPath永久

emptyDir 临时(不是永久的,pod删除之后,主机层随机的目录也删除)

对于emptyDir来说,会在pod所在的物理机上生成一个随机目录。pod的容器会挂载到这个随机目录上。当pod容器删除后,随机目录也会随之删除。

[root@master ~]# kubectl run podvol1 --image nginx --image-pull-policy Never --dry-run=client -o yaml > podvol1.yaml

[root@master ~]# vim podvol1.yaml

[root@master ~]# cat podvol1.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: podvol1

name: podvol1

spec:

volumes:

- name: vol1

emptyDir: {}

containers:

- image: nginx

imagePullPolicy: Never

name: podvol1

resources: {}

volumeMounts:

- name: vol1

mountPath: /abc #容器挂载目录

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f podvol1.yaml

pod/podvol1 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

podvol1 1/1 Running 0 64s 10.244.166.162 node1 <none> <none>

[root@master ~]# kubectl exec -ti pods/podvol1 -- bash

root@podvol1:/# cd /abc

root@podvol1:/abc# touch mpp.txt

root@podvol1:/abc# ls

mpp.txt

来到pod所在的node1节点上查询文件

[root@node1 ~]# find / -name mpp.txt

/var/lib/kubelet/pods/d4038fb5-ca09-4291-af0e-75a6de6ebe20/volumes/kubernetes.io~empty-dir/vol1/mpp.txt

一旦删除pod,那么pod里面的数据就随之删除,即node1节点无该文件

[root@master ~]# kubectl delete -f podvol1.yaml

pod "podvol1" deleted

[root@node1 ~]# find / -name mpp.txt

[root@node1 ~]#

hostPath(是永久的,类似docker -v /host_dir:/docker_dir主机层指定的目录)指定主机挂载目录。

[root@master ~]# cat podvol2.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: podvol2

name: podvol2

spec:

volumes:

- name: vol1

emptyDir: {}

- name: vol2

hostPath:

path: /host_dir #主机节点挂载目录

containers:

- image: nginx

imagePullPolicy: Never

name: podvol2

resources: {}

volumeMounts:

- name: vol2

mountPath: /container_dir #容器挂载目录

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f podvol2.yaml

pod/podvol2 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

podvol2 1/1 Running 0 7s 10.244.166.164 node1 <none> <none>

[root@master ~]# kubectl exec -ti pods/podvol2 -- bash

root@podvol2:/# cd /container_dir

root@podvol2:/container_dir# touch 2.txt

[root@node1 ~]# cd /host_dir

[root@node1 host_dir]# ls

2.txt

[root@master ~]# kubectl delete -f podvol2.yaml

pod "podvol2" deleted

[root@node1 host_dir]# ls

2.txt

网络存储NFS

如果上面的pod是在node1上运行的,目录也是在node1上的永久目录,但是未来该pod万一调度到了node2上面了,就找不到数据了。保存的记录就没有了。没有办法各个节点之间进行同步。可以用网络存储。

网络存储支持很多种类型 nfs/ceph/iscsi等都可以作为后端存储来使用。举例NFS。

完整克隆一台NFS服务器(也可用某台节点搭建NFS)

yum install -y yum-utils vim bash-completion net-tools wget nfs-utils 或只需yum install -y nfs-utils

[root@nfs ~]# mkdir /nfsdata

[root@nfs ~]# systemctl start nfs-server.service

[root@nfs ~]# systemctl enable nfs-server.service

[root@nfs ~]# systemctl stop firewalld.service

[root@nfs ~]# systemctl disable firewalld.service

[root@nfs ~]# setenforce 0

[root@nfs ~]# vim /etc/selinux/config

[root@nfs ~]# vim /etc/exports

[root@nfs ~]# cat /etc/exports

/nfsdata *(rw,async,no_root_squash)

[root@nfs ~]# exportfs -arv 不用重启nfs服务,配置文件就会生效

no_root_squash:登入NFS 主机使用分享目录的使用者,如果是root的话,那么对于这个分享的目录来说,他就具有root 的权限,那这个项目就不安全,不建议使用以root身份写。

exportfs命令:

-a 全部挂载或者全部卸载

-r 重新挂载

-u 卸载某一个目录

-v 显示共享目录

虽然未来的pod要连接nfs,但是真正连接nfs的是pod所在的物理主机。所以作为物理主机(客户端)也要安装nfs客户端。

[root@node1 ~]# yum install -y nfs-utils

[root@node2 ~]# yum install -y nfs-utils

客户端尝试挂载

[root@node1 ~]# mount 10.1.1.202:/nfsdata /mnt

[root@node1 ~]# df -Th

10.1.1.202:/nfsdata nfs4 96G 4.8G 91G 6% /mnt

[root@node1 ~]# umount /mnt

[root@master ~]# vim podvol3.yaml

[root@master ~]# cat podvol3.yaml

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

run: podvol3

name: podvol3

spec:

volumes:

- name: vol1

emptyDir: {}

- name: vol2

hostPath:

path: /host_data

- name: vol3

nfs:

server: 10.1.1.202 #这里用node2搭建nfs服务了

path: /nfsdata

containers:

- image: nginx

imagePullPolicy: Never

name: podvol3

resources: {}

volumeMounts:

- name: vol3

mountPath: /container_data

dnsPolicy: ClusterFirst

restartPolicy: Always

status: {}

[root@master ~]# kubectl apply -f podvol3.yaml

pod/podvol3 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod 0/1 ContainerCreating 0 6d18h <none> node1 <none> <none>

podvol3 1/1 Running 0 25m 10.244.166.167 node1 <none> <none>

注意:如果pod的status一直处于ContainerCreating状态,可能是防火墙未关闭。

[root@master ~]# kubectl -ti exec podvol3 -- bash

root@podvol3:/# df -Th

Filesystem Type Size Used Avail Use% Mounted on

10.1.1.202:/nfsdata nfs4 96G 4.8G 91G 6% /container_data

在pod中写数据

root@podvol3:/# cd /container_data

root@podvol3:/container_data# touch haha.txt

root@podvol3:/container_data# ls

haha.txt

在node1上查看物理节点是否有挂载

[root@node1 ~]# df -Th

10.1.1.202:/nfsdata nfs4 96G 4.8G 91G 6% /var/lib/kubelet/pods/fa892ec1-bee8-465d-a428-01ea4dcaf4ee/volumes/kubernetes.io~nfs/vol3

在NFS服务器查看

[root@nfs ~]# ls /nfsdata/

haha.txt

多个客户端多个用户连接同一个存储里面的数据,会导致目录重复,后者有没有可能会把整个存储或者某个数据删除掉呢?就会带来一些安全隐患。

持久化存储(存储重点)

持久化存储有两个类型:PersistentVolume/PersistentVolumeClaim,所谓的持久化存储,它只是一种k8s里面针对存储管理的一种机制。后端该对接什么存储,就对接什么存储(NFS/Ceph)。

PV和PVC它不是一种后端存储,PV未来对接的存储可以是NFS/Ceph等,它是一种pod的存储管理方式,PV和PVC是一对一的。

下面是一个存储服务器(NFS),共享多个目录(/data),管理员会在k8s集群中创建 PersistentVolume(PV),这个PV是全局可见的(整个k8s集群可见)。该PV会和存储服务器中的某个目录关联。用户要做的就是创建自己的 PVC,PVC是基于命名空间进行隔离的。PVC仅当前命名空间可见,而PV是全局可见的(整个k8s集群可见)。之后把PVC和PV一对一关联在一起。

持久卷(静态pvc):创建PV(关联后端存储),创建PVC(关联PV),创建POD(关联pvc)

创建PV,这样pv和存储目录就关联在一起了

[root@master ~]# vim pv01.yaml

[root@master ~]# cat pv01.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv01

spec:

capacity:

storage: 5Gi

volumeMode: Filesystem

accessModes:

- ReadWriteOnce

storageClassName: manual

nfs:

path: "/nfsdata"

server: 10.1.1.202

[root@master ~]# kubectl apply -f pv01.yaml

persistentvolume/pv01 created

[root@master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Available manual 10s

查看pv具体属性

[root@master ~]# kubectl describe pv pv01

claim为空的,因为PV和PVC还没有任何关联。pv是全局可见的,切换到命名空间依然可以看到pv。

[root@master ~]# kubens calico-system

[root@master ~]# kubens

calico-apiserver

calico-system

default

kube-node-lease

kube-public

kube-system

tigera-operator

[root@master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Available manual 75s

[root@master ~]# kubens default

Context "kubernetes-admin@kubernetes" modified.

Active namespace is "default".

创建PVC,pvc创建完成之后,自动完成pv关联。

[root@master ~]# vim pvc01.yaml

[root@master ~]# cat pvc01.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: pvc01

spec:

storageClassName: manual

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 5Gi

[root@master ~]# kubectl apply -f pvc01.yaml

persistentvolumeclaim/pvc01 created

[root@master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Bound default/pvc01 manual 2m16s

[root@master ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc01 Bound pv01 5Gi RWO manual 14s

PVC和PV是通过什么关联的呢?一个是accessModes,这个参数在pv和pvc里面必须一致。然后大小,pvc指定的大小必须小于等于pv的大小才能关联成功。

而且一个pv只能和一个pvc进行关联。比如切换命名空间,再次运行pvc,查看状态。就会一直处于pending状态。

[root@master ~]# kubens calico-system

Context "kubernetes-admin@kubernetes" modified.

Active namespace is "calico-system".

[root@master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Bound default/pvc01 manual 12m

[root@master ~]# kubectl get pvc

No resources found in calico-system namespace.

[root@master ~]# kubectl apply -f pvc01.yaml

persistentvolumeclaim/pvc01 created

[root@master ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc01 Pending manual 4s

谁操作的快,谁就能关联成功,而且不可控,因为命名空间相对独立,互相看不到。

这时候除了accessModes和大小,还有一个storageClassName可以控制,该参数随便自定义名称,但优先级最高。也就是pv如果定义了该参数,pvc没有该参数,即使满足了accessModes和大小也不会成功。

[root@master ~]# kubectl delete pvc pvc01

persistentvolumeclaim "pvc01" deleted

[root@master ~]# kubectl get pvc

No resources found in calico-system namespace.

[root@master ~]# kubens default

Context "kubernetes-admin@kubernetes" modified.

Active namespace is "default".

[root@master ~]# kubens

calico-apiserver

calico-system

default

kube-node-lease

kube-public

kube-system

tigera-operator

[root@master ~]# kubectl delete pvc pvc01

persistentvolumeclaim "pvc01" deleted

[root@master ~]# kubectl delete pv pv01

persistentvolume "pv01" deleted

[root@master ~]# kubectl get pvc

No resources found in default namespace.

[root@master ~]# kubectl get pv

No resources found

[root@master ~]# vim pv01.yaml

[root@master ~]# cat pv01.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv01

spec:

capacity:

storage: 5Gi

volumeMode: Filesystem

accessModes:

- ReadWriteOnce

storageClassName: aaa

nfs:

path: "/nfsdata"

server: 10.1.1.202

[root@master ~]# kubectl apply -f pv01.yaml

persistentvolume/pv01 created

[root@master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Available aaa 4s

[root@master ~]# cat pvc01.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: pvc01

spec:

storageClassName: manual

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 5Gi

[root@master ~]# kubectl apply -f pvc01.yaml

persistentvolumeclaim/pvc01 created

[root@master ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc01 Pending manual 4s

创建pod

[root@master ~]# vim podpv.yaml

[root@master ~]# cat podpv.yaml

apiVersion: v1

kind: Pod

metadata:

name: mypod

spec:

containers:

- name: myfrontend

image: nginx

imagePullPolicy: Never

volumeMounts:

- mountPath: "/var/www/html"

name: mypd

volumes:

- name: mypd

persistentVolumeClaim:

claimName: pvc01

[root@master ~]# kubectl apply -f podpv.yaml

pod/mypod created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod 1/1 Running 0 24s 10.244.166.168 node1 <none> <none>

[root@master ~]# kubectl exec -ti mypod -- bash

root@mypod:/# cd /var/www/html/

root@mypod:/var/www/html# ls

haha.txt

root@mypod:/var/www/html# touch ddd

如果写数据提示permission denied ,说明没有权限。注意 /etc/exports里面的权限要加上 no_root_squash。

root@mypod:/var/www/html# ls

haha.txt ddd

root@mypod:/var/www/html# exit

exit

静态pvc,创建PV(关联后端存储),创建PVC(关联PV),创建POD(关联pvc)

删除,POD删除,存储数据依然存在。PVC删除,存储数据依然存在。PV删除,存储数据依然存在。

删除所有资源,删除pod、pvc、pv

[root@master ~]# kubectl delete -f podpv.yaml

pod "mypod" deleted

[root@master ~]# kubectl delete pvc pvc01

persistentvolumeclaim "pvc01" deleted

[root@master ~]# kubectl delete pv pv01

persistentvolume "pv01" deleted

删除资源后,NFS数据依然存在

[root@yw ~]# ls /nfsdata/

haha.txt ddd

持久卷(静态pvc)优缺点

优点:如果误删除了pvc,那么数据依然被保留下来,不会丢数据。

缺点:如果我确实要删除pvc,那么需要手工把底层存储数据单独删除。

动态卷(动态pvc)

动态卷(动态pvc)实现随着删除PVC,底层存储数据也随之删除。

POD删除,存储数据不会删除。

PVC删除,让存储数据和PV自动删除。

动态卷(动态pvc),不需要手工单独创建pv,只需要创建pvc,那么pv会自动创建出来,只要删除pvc,pv也会随之自动删除,底层存储数据也会随之删除。

持久卷(静态pvc),pv pvc手工创建的,是静态的;直接创建pvc,pv跟着创建出这是动态卷。

查看pv时RECLAIM POLICY 有两种常用取值:Delete、Retain;

Delete:表示删除PVC的时候,PV也会一起删除,同时也删除PV所指向的实际存储空间;

Retain:表示删除PVC的时候,PV不会一起删除,而是变成Released状态等待管理员手动清理;

Delete:

优点:实现数据卷的全生命周期管理,应用删除PVC会自动删除后端云盘。能有效避免出现大量闲置云盘没有删除的情况。

缺点:删除PVC时候一起把后端云盘一起删除,如果不小心误删pvc,会出现后端数据丢失;

Retain:

优点:后端云盘需要手动清理,所以出现误删的可能性比较小;

缺点:没有实现数据卷全生命周期管理,常常会造成pvc、pv删除后,后端云盘闲置没清理,长此以往导致大量磁盘浪费。

查看当前的回收策略

[root@master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Bound default/pvc01 manual 12m

看RECLAIM POLICY,retain是不回收数据,删除pvc后,pv不可用,并长期保持released状态。

删除pvc和pv后,底层存储数据依然存在。如果想要使用pv和pvc,必须全部删除,重新创建。

[root@master ~]# kubectl delete pvc pvc01

persistentvolumeclaim "pvc01" deleted

[root@master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Released default/pvc01 manual 104s

nfs-subdir-external-provisioner是一个Kubernetes的外部卷(Persistent Volume)插件,它允许在Kubernetes集群中动态地创建和管理基于NFS共享的子目录卷。

获取 NFS Subdir External Provisioner 文件,地址:https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/tree/master/deploy

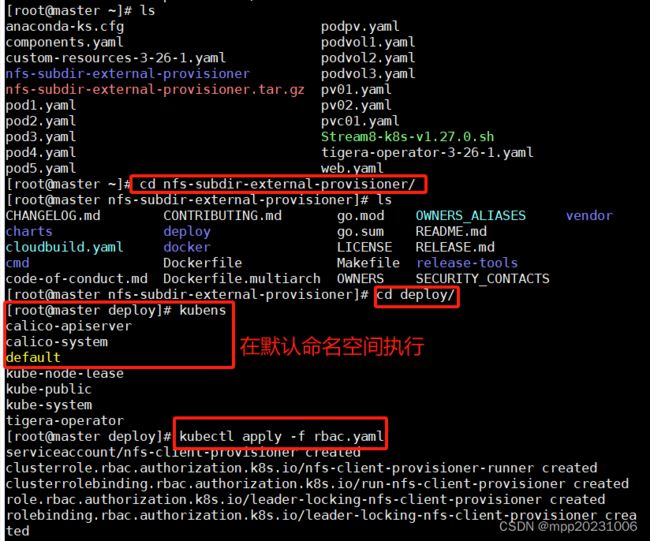

1、上传nfs-subdir-external-provisioner.tar.gz文件到mater节点家目录(已用node2节点搭建NFS服务)

设置RBAC授权[root@master ~]# tar -zxvf nfs-subdir-external-provisioner.tar.gz

这里用默认命名空间和rbac.yaml的default一致,所以rbac.yaml中不用改命名空间。

配置deployment.yaml,将镜像路径改为阿里云镜像路径和NFS共享目录修改下

image: registry.cn-hangzhou.aliyuncs.com/cloudcs/nfs-subdir-external-provisioner:v4.0.2

[root@master deploy]# kubectl apply -f deployment.yaml

deployment.apps/nfs-client-provisioner created

[root@master deploy]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-5d44b8c459-vszvz 1/1 Running 0 40s

创建storage class 存储类,才能实现动态卷供应

[root@master deploy]# kubectl get sc

No resources found

[root@master deploy]# kubectl apply -f class.yaml

storageclass.storage.k8s.io/nfs-client created

[root@master deploy]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 10s

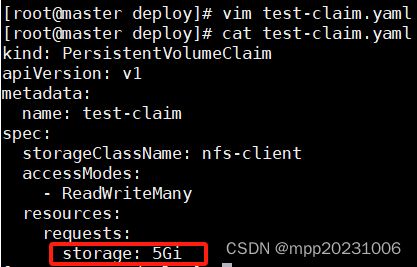

创建PVC,动态卷不用先手工创建pv,可直接创建pvc,pv会跟着创建出来。

修改test-claim.yaml,修改大小为5G

[root@master deploy]# kubectl get pv

No resources found

[root@master deploy]# kubectl get pvc

No resources found in default namespace.

[root@master deploy]# kubectl apply -f test-claim.yaml

persistentvolumeclaim/test-claim created

[root@master deploy]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-cf280353-4495-4d91-9028-966007d43238 5Gi RWX Delete Bound default/test-claim nfs-client 19s

[root@master deploy]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

test-claim Bound pvc-cf280353-4495-4d91-9028-966007d43238 5Gi RWX nfs-client 21s

nfs查看,会自动创建这个目录,后面删除也会删除这个目录

# ls /nfsdata

default-test-claim-pvc-cf280353-4495-4d91-9028-966007d43238

[root@master deploy]# vim test-pod.yaml

[root@master deploy]# cat test-pod.yaml

kind: Pod

apiVersion: v1

metadata:

name: test-pod

spec:

containers:

- name: test-pod

image: nginx

imagePullPolicy: IfNotPresent

volumeMounts:

- name: nfs-pvc

mountPath: "/abc"

restartPolicy: "Never"

volumes:

- name: nfs-pvc

persistentVolumeClaim:

claimName: test-claim

[root@master deploy]# kubectl apply -f test-pod.yaml

pod/test-pod created

[root@master deploy]# kubectl get pod -w 是kubectl get pod --watch简写 监测创建过程的动态更新

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-5d44b8c459-k27nb 1/1 Running 7 (2m20s ago) 11h

test-pod 1/1 Running 0 15s

^C[root@master deploy]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-client-provisioner-5d44b8c459-k27nb 1/1 Running 7 (2m34s ago) 11h 10.244.104.14 node2 <none> <none>

test-pod 1/1 Running 0 29s 10.244.166.140 node1 <none> <none>

[root@master deploy]# kubectl exec -ti test-pod -- bash

root@test-pod:/# cd /abc

root@test-pod:/abc# touch a.txt

root@test-pod:/abc# exit

exit

nfs查看,会有刚在容器中创建的文件

# ls /nfsdata

default-test-claim-pvc-cf280353-4495-4d91-9028-966007d43238

# cd /nfsdata/default-test-claim-pvc-cf280353-4495-4d91-9028-96600 7d43238

[root@nfs default-test-claim-pvc-cf280353-4495-4d91-9028-966007d43238]# ls

a.txt

删除PVC,发现PV和NFS存储都删除了

[root@master deploy]# kubectl delete -f test-pod.yaml

pod "test-pod" deleted

[root@master deploy]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

test-claim Bound pvc-cf280353-4495-4d91-9028-966007d43238 5Gi RWX nfs-client 21s

[root@master deploy]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-cf280353-4495-4d91-9028-966007d43238 5Gi RWX Delete Bound default/test-claim nfs-client 19s

[root@master deploy]# kubectl delete -f test-claim.yaml

persistentvolumeclaim "test-claim" deleted

[root@master deploy]# kubectl get pvc

No resources found in default namespace.

[root@master deploy]# kubectl get pv

No resources found

NFS查看底层数据也被删除了,这个目录default-test-claim-pvc-cf280353-4495-4d91-9028-966007d43238删除了

# ls /nfsdata

扩充 PVC 申领,参考官网,https://kubernetes.io/zh-cn/docs/concepts/storage/persistent-volumes/#expanding-persistent-volumes-claims

如果要为某 PVC 请求较大的存储卷,可以编辑 PVC 对象,设置一个更大的尺寸值。 这一编辑操作会触发为下层 PersistentVolume 提供存储的卷的扩充。 Kubernetes 不会创建新的 PV 卷来满足此申领的请求。 与之相反,现有的卷会被调整大小。

动态扩展,需要在存储类class.yaml里面添加一行参数

只有当PVC的存储类中将allowVolumeExpansion设置为true时,才可以扩充该PVC申领

[root@master deploy]# vim class.yaml

[root@master deploy]# cat class.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: nfs-client

provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'

parameters:

archiveOnDelete: "false"

allowVolumeExpansion: true

现在对扩充 PVC 申领的支持默认处于被启用状态,可以扩充以下类型的卷:

azureFile(已弃用)

csi

flexVolume(已弃用)

gcePersistentDisk(已弃用)

rbd

portworxVolume(已弃用)

[root@master deploy]# kubectl apply -f class.yaml

storageclass.storage.k8s.io/nfs-client configured

[root@master deploy]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate true 12h

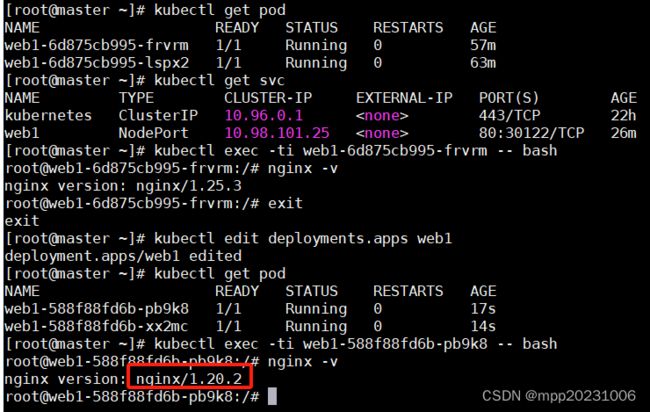

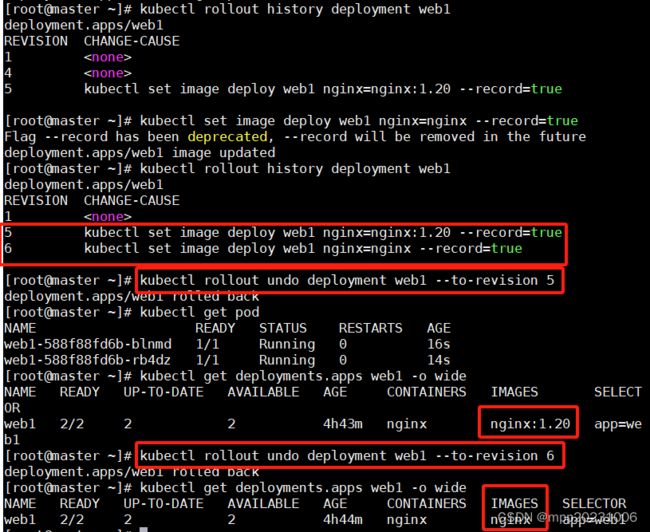

6、Deployment控制器

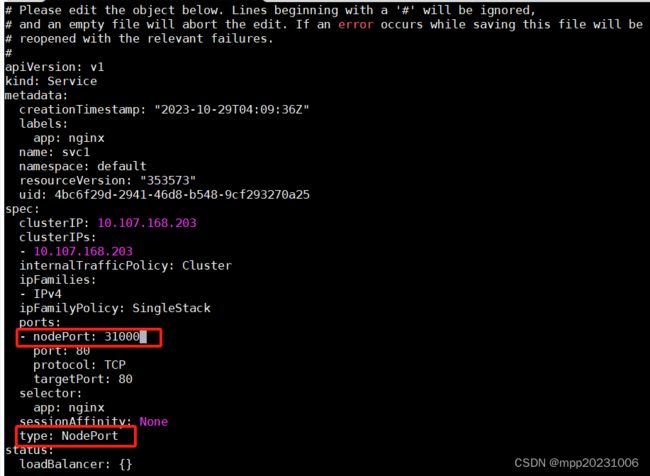

在k8s里面,最小的调度单位是pod,但是pod本身不稳定,导致系统不健壮,没有可再生性(自愈功能)。

在集群中,业务需要很多个pod,而对于这些pod的管理,k8s提供了很多控制器来管理他们,其中一个就叫deploment。

kind: Pod和 kind: Deployment(kind: DaemonSet)都是k8s中一个对象,Deploymen是pod的一个控制器,Deployment可以实现pod的高可用。

查下简称,[root@master ~]# kubectl api-resources |grep depl

deployments deploy apps/v1 true Deployment

[root@master ~]# kubectl api-resources |grep daemon

daemonsets ds apps/v1 true DaemonSet

deployment(deploy)对应cce中无状态工作负载,daemonsets(ds)对应cce中有状态工作负载

daemonset也是一种控制器,也是用来创建pod的,但是和deployment不一样,deploy需要指定副本数,每个节点上都可以运行多个副本。

daemonset不需要指定副本数,会自动的在每个节点上都创建1个副本,不可运行多个。

daemonset作用就是在每个节点上收集日志、监控和管理等。

应用场景:

网络插件的 Agent 组件,都必须运行在每一个节点上,用来处理这个节点上的容器网络。

存储插件的 Agent 组件,也必须运行在每一个节点上,用来在这个节点上挂载远程存储目录,操作容器的 Volume 目录,比如:glusterd、ceph。

监控组件和日志组件,也必须运行在每一个节点上,负责这个节点上的监控信息和日志搜集,比如:fluentd、logstash、Prometheus 等。

[root@master ~]# kubectl get ds

No resources found in default namespace.

[root@master ~]# kubectl get ds -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-proxy 3 3 3 3 3 kubernetes.io/os=linux 45h

[root@master ~]# kubectl get namespaces

NAME STATUS AGE

calico-apiserver Active 44h

calico-system Active 44h

default Active 45h

kube-node-lease Active 45h

kube-public Active 45h

kube-system Active 45h

tigera-operator Active 44h

创建daemonset

[root@master ~]# kubectl create deployment ds1 --image nginx --dry-run=client -o yaml -- sh -c "sleep 3600" > ds1.yaml

[root@master ~]# vim ds1.yaml

[root@master ~]# cat ds1.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

creationTimestamp: null

labels:

app: ds1

name: ds1

spec:

selector:

matchLabels:

app: ds1

template:

metadata:

creationTimestamp: null

labels:

app: ds1

spec:

containers:

- command:

- sh

- -c

- sleep 3600

image: nginx

name: nginx

resources: {}

1.更改kind: DaemonSet

2.删除副本数replicas

3.删除strategy

4.删除status

[root@master ~]# kubectl get ds

No resources found in default namespace.

[root@master ~]# kubectl apply -f ds1.yaml

daemonset.apps/ds1 created

[root@master ~]# kubectl get ds

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

ds1 2 2 2 2 2 <none> 7s

[root@master ~]# kubectl get ds -o wide

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

ds1 2 2 2 2 2 <none> 13s nginx nginx app=ds1

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ds1-crtl4 1/1 Running 0 29s 10.244.166.172 node1 <none> <none>

ds1-kcpjt 1/1 Running 0 29s 10.244.104.54 node2 <none> <none>

每个节点上都创建1个副本,不可运行多个。因为master上有污点,默认master不会创建pod。

[root@master ~]# kubectl describe nodes master |grep -i taint

Taints: node-role.kubernetes.io/control-plane:NoSchedule

[root@master ~]# kubectl delete ds ds1

daemonset.apps "ds1" deleted

集群中只需要告诉deploy,需要多少个pod即可,一旦某个pod宕掉,deploy会生成新的pod,保证集群中的一定存在3个pod。少一个,生成一个,多一个,删除一个。

[root@master ~]# kubectl create deployment web1 --image nginx --dry-run=client -o yaml > web1.yaml

[root@master ~]# vim web1.yaml 将副本数改为3

[root@master ~]# cat web1.yaml

apiVersion: apps/v1

kind: Deployment #指定的类型

metadata: #这个控制器web1的属性信息

creationTimestamp: null

labels:

app: web1

name: web1

spec:

replicas: 3 #pod副本数默认1 这里修改为3

selector:

matchLabels: #匹配标签 Deployment管理pod标签为web1的这些pod,这里的matchlabels 必须匹配下面labels里面的标签(下面的标签可以有多个,但至少匹配一个),如果不匹配,deploy无法管理,会直接报错。

app: web1

strategy: {}

template: #所有的副本通过这个模板创建出来的,3个副本pod按照这个template模板定义的标签labels和镜像image生成pod

metadata:

creationTimestamp: null

labels: #创建出来pod的标签信息

app: web1

spec:

containers:

- image: nginx

imagePullPolicy: IfNotPresent

name: nginx

resources: {}

status: {}

deployment能通过标签web1管理到这3个pod,selector指定的是当前deploy控制器是通过app=web1这个标签来管理和控制pod的。会监控这些pod。删除一个,就启动一个,如果不把deploy删除,那么pod是永远删除不掉的。

[root@master ~]# kubectl apply -f web1.yaml

deployment.apps/web1 created

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web1-58d8bd576b-h98jb 1/1 Running 0 11s 10.244.166.143 node1 <none> <none>

web1-58d8bd576b-nt7rs 1/1 Running 0 11s 10.244.166.142 node1 <none> <none>

web1-58d8bd576b-p8rq5 1/1 Running 0 11s 10.244.104.27 node2 <none> <none>

[root@master ~]# kubectl get pods --show-labels 查看pod标签

NAME READY STATUS RESTARTS AGE LABELS

web1-58d8bd576b-h98jb 1/1 Running 0 76s app=web1,pod-template-hash=58d8bd576b

web1-58d8bd576b-nt7rs 1/1 Running 0 76s app=web1,pod-template-hash=58d8bd576b

web1-58d8bd576b-p8rq5 1/1 Running 0 76s app=web1,pod-template-hash=58d8bd576b

查看deploy控制器

[root@master ~]# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

web1 3/3 3 3 18h

[root@master ~]# kubectl get deployments.apps -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

web1 3/3 3 3 18h nginx nginx app=web1

查看deploy详细信息

[root@master ~]# kubectl describe deployments.apps web1

现在删除node2上的pod,发现立刻又创建一个pod。删除3个pod又重新创建3个pod。

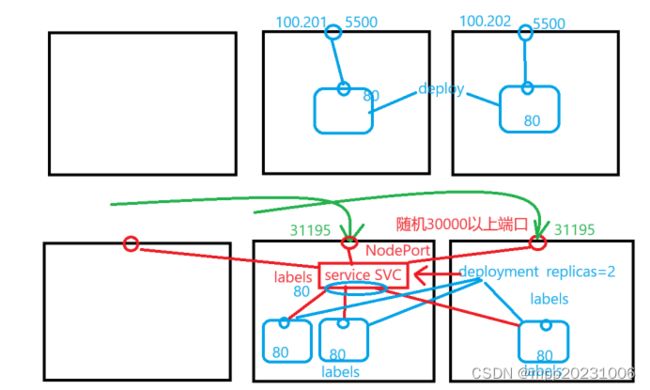

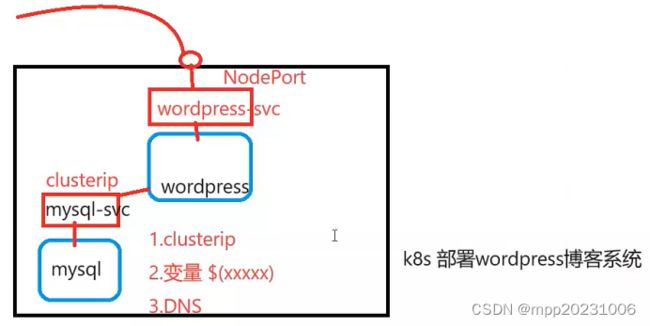

Deployment实现pod的高可用,删除3个pod后又重新创建3个pod,但这3个pod的ip变了,不会影响业务的访问,对外提供业务访问接口的不是pod,外部业务先访问service服务,service服务再将流量分摊到下面的pod,service服务的ip是固定的。

[root@master ~]# kubectl delete pod web1-58d8bd576b-p8rq5

pod "web1-58d8bd576b-p8rq5" deleted

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web1-58d8bd576b-h98jb 1/1 Running 0 159m 10.244.166.143 node1 <none> <none>

web1-58d8bd576b-nt7rs 1/1 Running 0 159m 10.244.166.142 node1 <none> <none>

web1-58d8bd576b-tbkkh 1/1 Running 0 30s 10.244.104.28 node2 <none> <none>

[root@master ~]# kubectl delete pods/web1-58d8bd576b-{h98jb,nt7rs,tbkkh}

pod "web1-58d8bd576b-h98jb" deleted

pod "web1-58d8bd576b-nt7rs" deleted

pod "web1-58d8bd576b-tbkkh" deleted

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web1-58d8bd576b-2zx9z 1/1 Running 0 6s 10.244.104.29 node2 <none> <none>

web1-58d8bd576b-km4f7 1/1 Running 0 6s 10.244.166.144 node1 <none> <none>

web1-58d8bd576b-nk69d 1/1 Running 0 6s 10.244.104.30 node2 <none> <none>

replicas副本数的修改

1、命令行修改replicas副本数

[root@master ~]# kubectl scale deployment web1 --replicas 5

deployment.apps/web1 scaled

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web1-58d8bd576b-2zx9z 1/1 Running 0 6m58s 10.244.104.29 node2 <none> <none>

web1-58d8bd576b-jt8z4 1/1 Running 0 7s 10.244.104.31 node2 <none> <none>

web1-58d8bd576b-km4f7 1/1 Running 0 6m58s 10.244.166.144 node1 <none> <none>

web1-58d8bd576b-nk69d 1/1 Running 0 6m58s 10.244.104.30 node2 <none> <none>

web1-58d8bd576b-qrqlb 1/1 Running 0 7s 10.244.166.145 node1 <none> <none>

2、在线修改pod副本数

[root@master ~]# kubectl edit deployments.apps web1

3、修改yaml文件,之后通过apply -f 更新

[root@master ~]# kubectl apply -f web1.yaml

动态扩展HPA

以上修改副本数,都是基于手工来修改的,如果面对未知的业务系统,业务并发量忽高忽低,总不能手工来来回回修改。HPA (Horizontal Pod Autoscaler) 水平自动伸缩,通过检测pod cpu的负载,解决deploy里某pod负载过高,动态伸缩pod的数量来实现负载均衡。HPA一旦监测pod负载过高,就会通知deploy,要创建更多的副本数,这样每个pod负载就会轻一些。HPA是通过metricservice组件来进行检测的,之前已经安装好了。

HPA动态的检测deployment控制器管理的pod,HPA设置CPU阈值为60%(默认CPU为80%),一旦deployment控制器管理的某个pod的CPU使用率超过60%,HPA会告诉deployment控制器扩容pod,deployment扩容pod数量受HPA限制(HPA设置的pod数量MIN 2 MAX 10),例如deployment扩容5个pod,分担了pod的压力,pod的CPU使用率会降下去,如CPU使用率降到40%,deployment就会按照最小pod数量进行缩容,CPU降到阈值以下就会释放掉刚扩容的pod。

查看节点和pod的cpu和内存

[root@master ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 158m 3% 1438Mi 37%

node1 87m 2% 1036Mi 27%

node2 102m 2% 1119Mi 29%

[root@master ~]# kubectl top pod

NAME CPU(cores) MEMORY(bytes)

web1-58d8bd576b-2gn9v 0m 4Mi

web1-58d8bd576b-2zx9z 0m 4Mi

web1-58d8bd576b-nk69d 0m 4Mi

cpu数量是如何计算

500m(m 1000)= 几个vcpus呢?

500/1000=0.5 个vcpus

1000m/1000=1 个vcpus

创建hpa

[root@master ~]# kubectl autoscale deployment web1 --min 2 --max 10 --cpu-percent 40

horizontalpodautoscaler.autoscaling/web1 autoscaled

--cpu-percent默认cpu使用率阈值默认80%,也可加内存使用率阈值检测,--min 2 --max 10 为pod最小数最大数

[root@master ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

web1 Deployment/web1 <unknown>/40% 2 10 3 88s

这个40%是针对谁的呢?没有说明,所以会显示unknown。

CPU使用率超过40%,HPA会通知deployment扩容,40%是根据多少颗cpu来的呢?

创建hpa时没有指定hpa名称会默认用deployment名称如web1,也可以用–name指定hpa名称,默认会让hpa名称和deployment名称一致,方便管理。

[root@master ~]# kubectl describe hpa web1

describe查看详细信息,获取cpu资源阈值失败了,cpu给多大没有进行初始化,无法获取cpu定量值失败。

删除hpa

[root@master ~]# kubectl delete hpa web1

horizontalpodautoscaler.autoscaling "web1" deleted

[root@master ~]# kubectl get hpa

No resources found in default namespace.

在线修改deployment

[root@master ~]# kubectl edit deployments.apps web1

创建hpa

[root@master ~]# kubectl autoscale deployment web1 --name hpa1 --min 2 --max 10 --cpu-percent 40

horizontalpodautoscaler.autoscaling/hpa1 autoscaled

[root@master ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa1 Deployment/web1 0%/40% 2 10 3 59s

测试hpa,消耗cpu

[root@master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web1-6d875cb995-72qjs 1/1 Running 0 4m49s 10.244.166.150 node1 <none> <none>

web1-6d875cb995-lspx2 1/1 Running 0 4m51s 10.244.104.32 node2 <none> <none>

web1-6d875cb995-m69j2 1/1 Running 0 4m53s 10.244.166.149 node1 <none> <none>

[root@master ~]# kubectl exec -ti web1-6d875cb995-m69j2 -- bash

root@web1-6d875cb995-m69j2:/# cat /dev/zero > /dev/null &

[1] 39

root@web1-6d875cb995-m69j2:/# cat /dev/zero > /dev/null &

[2] 40

root@web1-6d875cb995-m69j2:/# cat /dev/zero > /dev/null &

[3] 41

root@web1-6d875cb995-m69j2:/# cat /dev/zero > /dev/null &

[4] 42

root@web1-6d875cb995-m69j2:/# cat /dev/zero > /dev/null &

[5] 43

观察HPA的值

[root@master ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa1 Deployment/web1 196%/40% 2 10 3 4m39s

[root@master ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa1 Deployment/web1 85%/40% 2 10 10 5m21s

hpa设置了pod最少2个最大10个,pod按道理说应该是负载均衡才对,按理说扩展的pod应该为那一个pod分担cpu压力,但从效果上看没有分担压力。因为你这个负载只是形式上的负载均衡,来自于内部产生的负载,所以无法转移,如果是外部来源,就会负载均衡。

到这个pod所运行的节点node1上把cat停掉,ps -ef |grep cat 先查出进程id 再kill -9杀掉进程(等几分钟pod才删除)

外部流量访问主机端口会映射到service端口(集群内所有主机端口和service端口映射),service具备负载均衡能力,service会把流量分摊到service管理的不同pod上。

为了测试效果明显把cpu使用率改成10%