Installing and Using Sqoop, a Data Transfer Tool

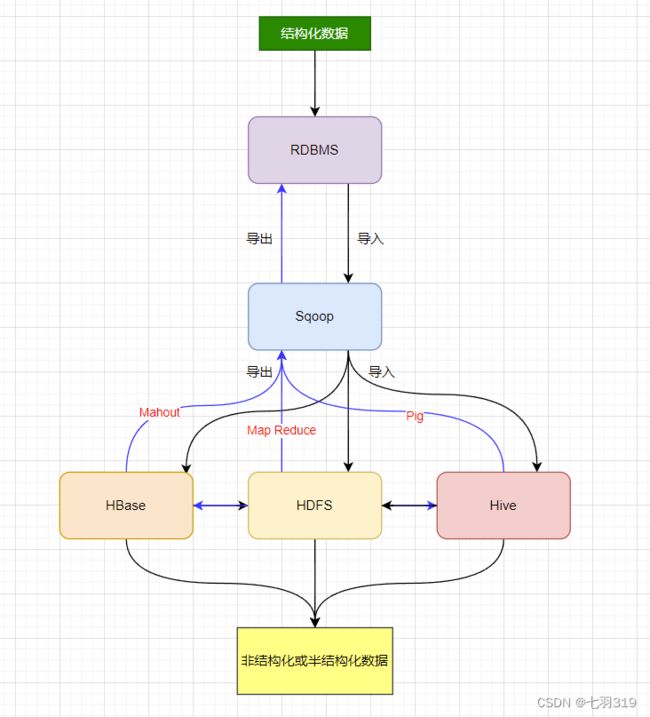

I. Sqoop Overview

Sqoop is an open-source Apache tool used mainly to transfer data between storage systems such as HDFS, Hive, and HBase and relational databases.

Import: load data from relational databases such as MySQL and Oracle into HDFS, Hive, HBase, and other storage systems.

Export: move data from HDFS, Hive, HBase, and other storage systems into relational databases such as MySQL and Oracle.

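Sqoop has an extensible framework that lets it import data from, or export data to, any external storage system that supports bulk data transfer.

Every import or export goes through a Sqoop connector, which is a modular component plugged into this framework.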

II. Installing Sqoop

1. Download, upload, and extract the package

Download URL: http://archive.apache.org/dist/sqoop/

Download sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz, upload it to /usr/local/sqoop on the hadoop0 server, and extract it there:

[root@hadoop0 sqoop]# tar -xvf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz

2. Configure environment variables

Edit /etc/profile and add the Sqoop environment variables:

export SQOOP_HOME=/usr/local/sqoop/sqoop-1.4.7.bin__hadoop-2.6.0

export PATH=$SQOOP_HOME/bin:$PATH

Run source /etc/profile so that the new variables take effect in the current shell immediately.
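To confirm that the variables are active in the current shell, a small check can be sketched as a function. check_sqoop_env is a hypothetical helper, not part of Sqoop; it only inspects SQOOP_HOME and PATH.

```shell
#!/usr/bin/env bash
# check_sqoop_env (hypothetical helper): confirm that SQOOP_HOME is set and that
# $SQOOP_HOME/bin is on PATH after running `source /etc/profile`.
check_sqoop_env() {
  if [ -z "${SQOOP_HOME:-}" ]; then
    printf 'SQOOP_HOME is not set\n'
    return 1
  fi
  # Surround PATH with colons so the first and last entries also match.
  case ":$PATH:" in
    *":$SQOOP_HOME/bin:"*)
      printf 'OK: %s/bin is on PATH\n' "$SQOOP_HOME"
      ;;
    *)
      printf '%s/bin is not on PATH\n' "$SQOOP_HOME"
      return 1
      ;;
  esac
}
```

Running check_sqoop_env right after source /etc/profile should print the OK line; otherwise the message says which part is missing.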

3. Download and upload the MySQL driver

https://downloads.mysql.com/archives/c-j/

Select a version and download it:

Upload the driver package to /usr/local/mysql on hadoop0 and extract it:

tar -zxvf mysql-connector-java-5.1.49.tar.gz

Copy the driver jar from the extracted directory to $SQOOP_HOME/lib:

cp mysql-connector-java-5.1.49/mysql-connector-java-5.1.49-bin.jar $SQOOP_HOME/lib/

Check the result:

[root@hadoop0 ~]# ll $SQOOP_HOME/lib | grep mysql

-rw-r--r-- 1 root root 1006906 Jun 14 16:03 mysql-connector-java-5.1.49-bin.jar

4. Check the Sqoop version and available commands

[root@hadoop0 ~]# sqoop version

Warning: /usr/local/sqoop/sqoop-1.4.7.bin__hadoop-2.6.0/../hcatalog does not exist! HCatalog jobs will fail.

......

2022-06-14 16:06:04,903 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

[root@hadoop0 ~]# sqoop help

.........

2022-06-14 16:07:03,430 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

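5. Import data from MySQL into HDFS

Note: the MySQL user's password must not contain the special characters "!!"; if it does, change the account's password first.

Log in to MySQL and create test data: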

mysql> create database db_test_sqoop;

Query OK, 1 row affected (0.01 sec)

mysql> use db_test_sqoop;

Database changed

mysql> create table tb_test_sqoop(id bigint(20) not null auto_increment comment 'auto-increment primary key', name varchar(32) default null comment 'name', create_time datetime default current_timestamp );

ERROR 1075 (42000): Incorrect table definition; there can be only one auto column and it must be defined as a key

The first attempt fails because MySQL requires an AUTO_INCREMENT column to be defined as a key. Adding primary key (id) fixes it:

mysql> create table tb_test_sqoop(id bigint(20) not null auto_increment comment 'auto-increment primary key', name varchar(32) default null comment 'name', create_time datetime default current_timestamp, update_time datetime default current_timestamp on update current_timestamp, primary key (id) using btree) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 ROW_FORMAT=COMPACT COMMENT='Sqoop test table' ;

Query OK, 0 rows affected (0.03 sec)

mysql> insert into tb_test_sqoop(name) values("张三");

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb_test_sqoop(name) values("李四");

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb_test_sqoop(name) values("王二");

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb_test_sqoop(name) values("花花");

Query OK, 1 row affected (0.01 sec)

mysql> insert into tb_test_sqoop(name) values("二狗");

Query OK, 1 row affected (0.00 sec)

mysql> insert into tb_test_sqoop(name) values("球球");

Query OK, 1 row affected (0.01 sec)

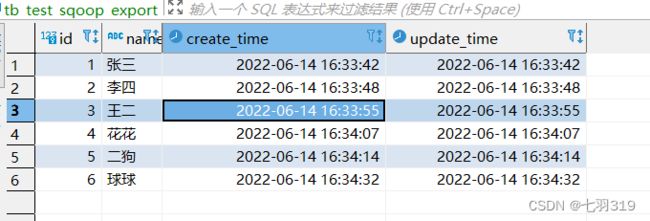

mysql> select * from tb_test_sqoop;

+----+--------+---------------------+---------------------+

| id | name | create_time | update_time |

+----+--------+---------------------+---------------------+

| 1 | 张三 | 2022-06-14 16:33:42 | 2022-06-14 16:33:42 |

| 2 | 李四 | 2022-06-14 16:33:48 | 2022-06-14 16:33:48 |

| 3 | 王二 | 2022-06-14 16:33:55 | 2022-06-14 16:33:55 |

| 4 | 花花 | 2022-06-14 16:34:07 | 2022-06-14 16:34:07 |

| 5 | 二狗 | 2022-06-14 16:34:14 | 2022-06-14 16:34:14 |

| 6 | 球球 | 2022-06-14 16:34:32 | 2022-06-14 16:34:32 |

+----+--------+---------------------+---------------------+

6 rows in set (0.00 sec)

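Newer versions of Sqoop automatically generate a Java class for the table during import and export. If the run fails with "Class xxx not found", manually copy the jar named in the log (for example /tmp/sqoop-root/compile/5f86b02068d2260bc9a4d6d08f81c0d0/tb_test_sqoop.jar) into $SQOOP_HOME/lib.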
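That manual copy can be sketched as a shell function. It assumes, based on the example path above, that Sqoop compiles into /tmp/sqoop-<user>/compile/<hash>/; copy_generated_jar is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# copy_generated_jar (hypothetical helper): copy the newest <table>.jar that Sqoop
# compiled into $SQOOP_HOME/lib.
# Assumption: the compile root is /tmp/sqoop-<user>/compile, as in the example path.
copy_generated_jar() {
  local table="$1"
  local compile_root="${2:-/tmp/sqoop-$(id -un)/compile}"
  local jar
  # Each run writes into its own hash-named subdirectory; ls -t lists newest first.
  jar=$(ls -t "$compile_root"/*/"$table".jar 2>/dev/null | head -n 1)
  if [ -z "$jar" ]; then
    printf 'no %s.jar under %s\n' "$table" "$compile_root" >&2
    return 1
  fi
  cp "$jar" "$SQOOP_HOME/lib/" && printf 'copied %s\n' "$jar"
}
```

For example, copy_generated_jar tb_test_sqoop after a failed run. Sqoop's code-generation argument --bindir <dir> (output directory for compiled objects) might avoid the copy altogether, but that is not tested in this walkthrough.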

Import command syntax:

sqoop import [GENERIC-ARGS] [TOOL-ARGS]

Common arguments:

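# Specify the JDBC connect string

--connect <jdbc-uri>

# Username

--username <username>

# Password (alternatively, -P prompts for it on the console, as in the commands below)

--password <password>

Import control arguments:

# Table name

--table <table-name>

# Number of map tasks: use 'n' map tasks to import in parallel

-m,--num-mappers <n>

Output line formatting arguments:

# Sets the field separator character, i.e. the delimiter between fields in the output ("output" here means the files written to HDFS)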

--fields-terminated-by <char>
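Sqoop can also read these arguments from an options file passed with --options-file (one option or value per line, # for comments, per the Sqoop user guide). The sketch below writes the import arguments above into such a file. The helper name and file path are assumptions, and ?useSSL=false is appended to the JDBC URL to silence the SSL warning that MySQL Connector/J prints during the job.

```shell
#!/usr/bin/env bash
# write_import_options (hypothetical helper): write the import arguments
# into a Sqoop options file, one option or value per line.
write_import_options() {
  local file="$1" host="$2" db="$3" table="$4"
  cat > "$file" <<EOF
# Sqoop options file for importing $db.$table
import
--connect
jdbc:mysql://$host:3306/$db?useSSL=false
--username
root
--table
$table
--fields-terminated-by
,
-m
1
EOF
}
```

Usage: write_import_options /tmp/import-tb_test_sqoop.txt hadoop0 db_test_sqoop tb_test_sqoop, then sqoop --options-file /tmp/import-tb_test_sqoop.txt -P. The password stays out of the file because -P prompts for it.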

Run the import:

sqoop import --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop --fields-terminated-by ',' -m 1

[root@hadoop0 ~]# sqoop import --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop --fields-terminated-by ',' -m 1

Warning: /usr/local/sqoop/sqoop-1.4.7.bin__hadoop-2.6.0/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/sqoop/sqoop-1.4.7.bin__hadoop-2.6.0/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /usr/local/sqoop/sqoop-1.4.7.bin__hadoop-2.6.0/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hadoop/hadoop-3.3.3/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hbase/hbase-2.4.12/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]

2022-06-14 17:03:58,237 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Enter password:

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/commons/lang/StringUtils

at org.apache.sqoop.tool.BaseSqoopTool.validateHiveOptions(BaseSqoopTool.java:1583)

at org.apache.sqoop.tool.ImportTool.validateOptions(ImportTool.java:1178)

at org.apache.sqoop.Sqoop.run(Sqoop.java:137)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:81)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: java.lang.ClassNotFoundException: org.apache.commons.lang.StringUtils

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 8 more

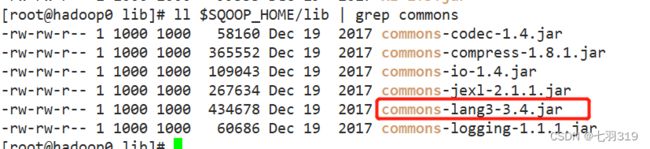

执行报错:缺少org.apache.commons.lang.StringUtils类,查看SQOOP_HOME/lib下的依赖包,发现只依赖了commons-lang3,没有commons-lang,需要手动下载依赖,然后放入SQOOP_HOME/lib目录下:

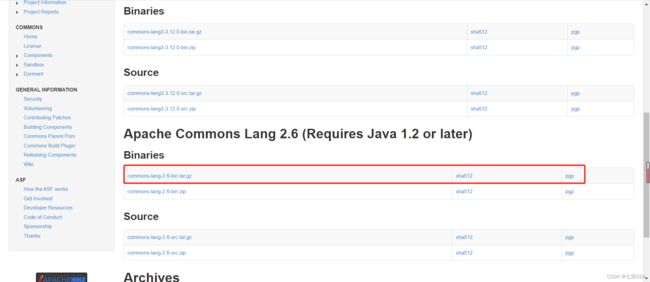

commons-lang依赖下载:https://commons.apache.org/proper/commons-lang/download_lang.cgi,选择2.6的版本下载:

下载之后上传到服务器并且解压,然后将解压后文件中的commons-lang-2.6.jar拷贝到SQOOP_HOME/lib目录下:

[root@hadoop0 commons-lang-2.6]# cp commons-lang-2.6.jar $SQOOP_HOME/lib/

[root@hadoop0 commons-lang-2.6]# ll $SQOOP_HOME/lib | grep commons

-rw-rw-r-- 1 1000 1000 58160 Dec 19 2017 commons-codec-1.4.jar

-rw-rw-r-- 1 1000 1000 365552 Dec 19 2017 commons-compress-1.8.1.jar

-rw-rw-r-- 1 1000 1000 109043 Dec 19 2017 commons-io-1.4.jar

-rw-rw-r-- 1 1000 1000 267634 Dec 19 2017 commons-jexl-2.1.1.jar

-rw-r--r-- 1 root root 284220 Jun 14 17:39 commons-lang-2.6.jar

-rw-rw-r-- 1 1000 1000 434678 Dec 19 2017 commons-lang3-3.4.jar

-rw-rw-r-- 1 1000 1000 60686 Dec 19 2017 commons-logging-1.1.1.jar
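If the server has Internet access, the jar can also be fetched from Maven Central, where commons-lang 2.6 is published under group commons-lang and artifact commons-lang. This sketch only builds the standard Maven repository URL; maven_jar_url is a hypothetical helper.

```shell
#!/usr/bin/env bash
# maven_jar_url (hypothetical helper): build the Maven Central URL of a jar
# from its group id, artifact id and version (standard repository layout).
maven_jar_url() {
  local group="$1" artifact="$2" version="$3"
  local group_path
  # Dots in the group id become directory separators.
  group_path=$(printf '%s' "$group" | tr . /)
  printf 'https://repo1.maven.org/maven2/%s/%s/%s/%s-%s.jar\n' \
    "$group_path" "$artifact" "$version" "$artifact" "$version"
}
```

For example: wget -P "$SQOOP_HOME/lib" "$(maven_jar_url commons-lang commons-lang 2.6)".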

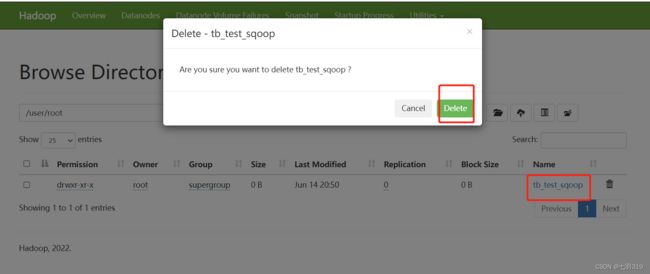

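Delete the existing output directory, either in the HDFS web UI or with the command below (an import fails if its target directory already exists), then rerun the import: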

[root@hadoop0 sbin]# hadoop fs -rm -r hdfs://hadoop0:9000/user/root/tb_test_sqoop

Deleted hdfs://hadoop0:9000/user/root/tb_test_sqoop
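To make reruns repeatable, the delete can be guarded so it only runs when the directory exists. A sketch; remove_hdfs_dir_if_exists is a hypothetical helper that relies on the hadoop CLI being on PATH.

```shell
#!/usr/bin/env bash
# remove_hdfs_dir_if_exists (hypothetical helper): delete an HDFS directory only
# if it exists, so that rerunning `sqoop import` does not hit an existing target dir.
remove_hdfs_dir_if_exists() {
  local dir="$1"
  # `hadoop fs -test -d` exits with 0 when the path is an existing directory.
  if hadoop fs -test -d "$dir"; then
    hadoop fs -rm -r "$dir"
  else
    printf 'skip: %s does not exist\n' "$dir"
  fi
}
```

For example: remove_hdfs_dir_if_exists /user/root/tb_test_sqoop. The Sqoop 1.4.6+ user guide also documents an import argument, --delete-target-dir, which deletes the target directory before importing and would make this step unnecessary.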

sqoop import --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop --fields-terminated-by ',' -m 1

[root@hadoop0 sbin]# sqoop import --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop --fields-terminated-by ',' -m 1

Warning: /usr/local/sqoop/sqoop-1.4.7.bin__hadoop-2.6.0/../hcatalog does not exist! HCatalog jobs will fail.

......

2022-06-14 20:59:48,684 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_test_sqoop` AS t LIMIT 1

2022-06-14 20:59:48,709 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_test_sqoop` AS t LIMIT 1

2022-06-14 20:59:48,718 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop/hadoop-3.3.3

Note: /tmp/sqoop-root/compile/5f86b02068d2260bc9a4d6d08f81c0d0/tb_test_sqoop.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

2022-06-14 20:59:50,129 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/5f86b02068d2260bc9a4d6d08f81c0d0/tb_test_sqoop.jar

2022-06-14 20:59:50,147 WARN manager.MySQLManager: It looks like you are importing from mysql.

2022-06-14 20:59:50,147 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

2022-06-14 20:59:50,147 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

2022-06-14 20:59:50,147 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

2022-06-14 20:59:50,155 INFO mapreduce.ImportJobBase: Beginning import of tb_test_sqoop

2022-06-14 20:59:50,156 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2022-06-14 20:59:50,318 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

2022-06-14 20:59:51,176 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

2022-06-14 20:59:51,265 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at hadoop0/192.168.147.155:8032

2022-06-14 20:59:51,842 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1655201926342_0001

Tue Jun 14 20:59:54 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

2022-06-14 20:59:54,919 INFO db.DBInputFormat: Using read commited transaction isolation

2022-06-14 20:59:55,007 INFO mapreduce.JobSubmitter: number of splits:1

2022-06-14 20:59:55,199 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1655201926342_0001

2022-06-14 20:59:55,199 INFO mapreduce.JobSubmitter: Executing with tokens: []

2022-06-14 20:59:55,615 INFO conf.Configuration: resource-types.xml not found

2022-06-14 20:59:55,615 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2022-06-14 20:59:55,967 INFO impl.YarnClientImpl: Submitted application application_1655201926342_0001

2022-06-14 20:59:56,030 INFO mapreduce.Job: The url to track the job: http://hadoop0:8088/proxy/application_1655201926342_0001/

2022-06-14 20:59:56,031 INFO mapreduce.Job: Running job: job_1655201926342_0001

2022-06-14 21:00:07,238 INFO mapreduce.Job: Job job_1655201926342_0001 running in uber mode : false

2022-06-14 21:00:07,240 INFO mapreduce.Job: map 0% reduce 0%

2022-06-14 21:00:14,458 INFO mapreduce.Job: map 100% reduce 0%

2022-06-14 21:00:15,472 INFO mapreduce.Job: Job job_1655201926342_0001 completed successfully

2022-06-14 21:00:15,591 INFO mapreduce.Job: Counters: 33

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=285639

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=318

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=4617

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4617

Total vcore-milliseconds taken by all map tasks=4617

Total megabyte-milliseconds taken by all map tasks=4727808

Map-Reduce Framework

Map input records=6

Map output records=6

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=162

CPU time spent (ms)=1780

Physical memory (bytes) snapshot=270290944

Virtual memory (bytes) snapshot=2795339776

Total committed heap usage (bytes)=181927936

Peak Map Physical memory (bytes)=270290944

Peak Map Virtual memory (bytes)=2795339776

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=318

2022-06-14 21:00:15,598 INFO mapreduce.ImportJobBase: Transferred 318 bytes in 24.4111 seconds (13.0268 bytes/sec)

2022-06-14 21:00:15,602 INFO mapreduce.ImportJobBase: Retrieved 6 records.

[root@hadoop0 sbin]#

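The Java source, compiled classes, and jar that Sqoop generated for the table are in the compile directory named in the log:

[root@hadoop0 5f86b02068d2260bc9a4d6d08f81c0d0]# pwd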

/tmp/sqoop-root/compile/5f86b02068d2260bc9a4d6d08f81c0d0

[root@hadoop0 5f86b02068d2260bc9a4d6d08f81c0d0]# ll

total 64

-rw-r--r-- 1 root root 618 Jun 14 20:59 tb_test_sqoop$1.class

-rw-r--r-- 1 root root 624 Jun 14 20:59 tb_test_sqoop$2.class

-rw-r--r-- 1 root root 630 Jun 14 20:59 tb_test_sqoop$3.class

-rw-r--r-- 1 root root 630 Jun 14 20:59 tb_test_sqoop$4.class

-rw-r--r-- 1 root root 12378 Jun 14 20:59 tb_test_sqoop.class

-rw-r--r-- 1 root root 240 Jun 14 20:59 tb_test_sqoop$FieldSetterCommand.class

-rw-r--r-- 1 root root 7250 Jun 14 20:59 tb_test_sqoop.jar

-rw-r--r-- 1 root root 16768 Jun 14 20:59 tb_test_sqoop.java

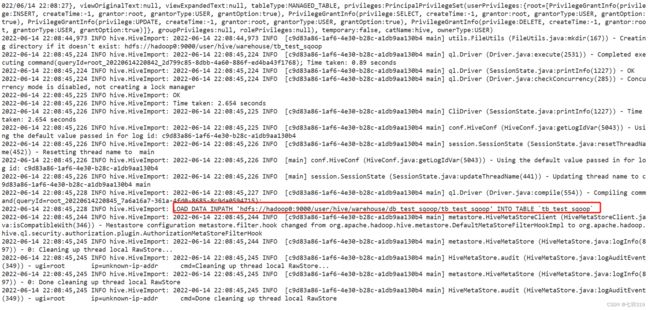

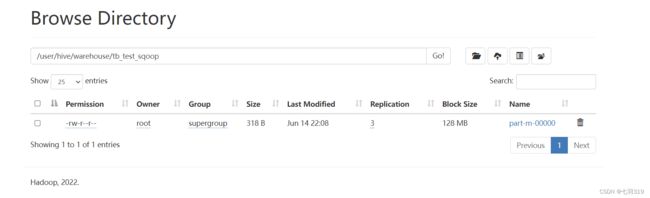
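To check the import from the command line instead of the web UI, the lines in the output files can be counted and compared with the 6 rows in MySQL. A sketch, assuming the default target directory /user/root/tb_test_sqoop used above and the part-m-* file names that map-only jobs write; count_hdfs_rows is a hypothetical helper.

```shell
#!/usr/bin/env bash
# count_hdfs_rows (hypothetical helper): count the lines in the part-m-* files
# that a map-only Sqoop import writes into its target directory.
count_hdfs_rows() {
  local dir="$1"
  # Quote the glob so HDFS, not the local shell, expands it.
  hadoop fs -cat "$dir/part-m-*" | wc -l | tr -d ' '
}
```

After the import above, count_hdfs_rows /user/root/tb_test_sqoop should print 6, provided no field contains a newline.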

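6. Import data from MySQL into Hive

To import into Hive, add the --hive-import argument; Sqoop then imports the data and loads it into Hive:

Create the directory on HDFS (optional):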

hadoop fs -mkdir /user/hive/warehouse/db_test_sqoop

Import the data (the --warehouse-dir /user/hive/warehouse/db_test_sqoop argument is optional):

sqoop import --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop --fields-terminated-by ',' -m 1 --hive-import --warehouse-dir /user/hive/warehouse/db_test_sqoop

The run fails because the HiveConf class cannot be found:

[root@hadoop0 conf]# sqoop import --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop --fields-terminated-by ',' -m 1 --hive-import --warehouse-dir /user/hive/warehouse/db_test_sqoop

2022-06-14 22:01:58,394 INFO hive.HiveImport: Loading uploaded data into Hive

2022-06-14 22:01:58,397 ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_DIR is set correctly.

2022-06-14 22:01:58,398 ERROR tool.ImportTool: Import failed: java.io.IOException: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:50)

at org.apache.sqoop.hive.HiveImport.getHiveArgs(HiveImport.java:392)

at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:379)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:81)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:264)

at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:44)

... 12 more

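Add the Hive settings to $SQOOP_HOME/conf/sqoop-env.sh. Sqoop reads sqoop-env.sh, not sqoop-env-template.sh, so create it from the template first if it does not exist yet:

[root@hadoop0 conf]# cp $SQOOP_HOME/conf/sqoop-env-template.sh $SQOOP_HOME/conf/sqoop-env.sh

[root@hadoop0 conf]# vim $SQOOP_HOME/conf/sqoop-env.sh

export HIVE_HOME=/usr/local/hive/apache-hive-3.1.3-bin

export HIVE_CONF_DIR=/usr/local/hive/apache-hive-3.1.3-bin/conf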
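The same configuration step can be scripted so that running it twice does not duplicate the lines. A sketch; setup_sqoop_env_sh is a hypothetical helper, and the Hive path is the one used in this walkthrough.

```shell
#!/usr/bin/env bash
# setup_sqoop_env_sh (hypothetical helper): make sure conf/sqoop-env.sh exists
# (Sqoop reads this file, not the template) and append the Hive settings once.
setup_sqoop_env_sh() {
  local conf_dir="$1" hive_home="$2"
  local env_sh="$conf_dir/sqoop-env.sh"
  if [ ! -f "$env_sh" ]; then
    cp "$conf_dir/sqoop-env-template.sh" "$env_sh"
  fi
  # Commented-out lines such as "#export HIVE_HOME=" do not match this pattern.
  if ! grep -q '^export HIVE_HOME=' "$env_sh"; then
    printf 'export HIVE_HOME=%s\nexport HIVE_CONF_DIR=%s/conf\n' \
      "$hive_home" "$hive_home" >> "$env_sh"
  fi
}
```

Usage: setup_sqoop_env_sh "$SQOOP_HOME/conf" /usr/local/hive/apache-hive-3.1.3-bin.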

Copy the Hive jar that contains HiveConf (hive-common) into $SQOOP_HOME/lib:

[root@hadoop0 conf]# cp $HIVE_HOME/lib/hive-common-3.1.3.jar $SQOOP_HOME/lib/

[root@hadoop0 conf]# ll $SQOOP_HOME/lib | grep hive

-rw-r--r-- 1 root root 492915 Jun 14 22:05 hive-common-3.1.3.jar

-rw-rw-r-- 1 1000 1000 1801469 Dec 19 2017 kite-data-hive-1.1.0.jar

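Delete the tb_test_sqoop directory that the failed run left on HDFS (the data had already been uploaded before the Hive step failed), then rerun the import: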

hadoop fs -rm -r hdfs://hadoop0:9000/user/hive/warehouse/db_test_sqoop/tb_test_sqoop

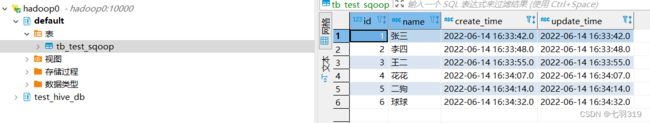

Query the Hive table remotely with the beeline command-line tool:

[root@hadoop0 conf]# beeline -u jdbc:hive2://hadoop0:10000/default -n hive -p

Connecting to jdbc:hive2://hadoop0:10000/default;user=hive

Enter password for jdbc:hive2://hadoop0:10000/default: ***********

2022-06-14 22:28:18,677 INFO jdbc.Utils: Supplied authorities: hadoop0:10000

2022-06-14 22:28:18,679 INFO jdbc.Utils: Resolved authority: hadoop0:10000

Connected to: Apache Hive (version 3.1.3)

Driver: Hive JDBC (version 2.3.9)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 2.3.9 by Apache Hive

0: jdbc:hive2://hadoop0:10000/default> show tables;

+----------------+

| tab_name |

+----------------+

| tb_test_sqoop |

+----------------+

1 row selected (0.264 seconds)

0: jdbc:hive2://hadoop0:10000/default> desc tb_test_sqoop;

+--------------+------------+----------+

| col_name | data_type | comment |

+--------------+------------+----------+

| id | bigint | |

| name | string | |

| create_time | string | |

| update_time | string | |

+--------------+------------+----------+

4 rows selected (0.091 seconds)

0: jdbc:hive2://hadoop0:10000/default> select * from tb_test_sqoop;

+-------------------+---------------------+----------------------------+----------------------------+

| tb_test_sqoop.id | tb_test_sqoop.name | tb_test_sqoop.create_time | tb_test_sqoop.update_time |

+-------------------+---------------------+----------------------------+----------------------------+

| 1 | 张三 | 2022-06-14 16:33:42.0 | 2022-06-14 16:33:42.0 |

| 2 | 李四 | 2022-06-14 16:33:48.0 | 2022-06-14 16:33:48.0 |

| 3 | 王二 | 2022-06-14 16:33:55.0 | 2022-06-14 16:33:55.0 |

| 4 | 花花 | 2022-06-14 16:34:07.0 | 2022-06-14 16:34:07.0 |

| 5 | 二狗 | 2022-06-14 16:34:14.0 | 2022-06-14 16:34:14.0 |

| 6 | 球球 | 2022-06-14 16:34:32.0 | 2022-06-14 16:34:32.0 |

+-------------------+---------------------+----------------------------+----------------------------+

6 rows selected (0.186 seconds)

0: jdbc:hive2://hadoop0:10000/default>

7. Export data from HDFS to MySQL

First create a table in the database to receive the exported data, tb_test_sqoop_export:
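The DDL for this table is not shown. Because its desc output later in this section matches tb_test_sqoop column for column, one way to create it is MySQL's CREATE TABLE ... LIKE, which copies the column definitions and indexes of an existing table. A sketch, assuming the mysql client is on PATH and the root account used above; create_export_table is a hypothetical helper.

```shell
#!/usr/bin/env bash
# create_export_table (hypothetical helper): create an empty table with the
# same structure as the source table, using MySQL's CREATE TABLE ... LIKE.
create_export_table() {
  local db="$1" src="$2" dst="$3"
  # -p without a value makes the mysql client prompt for the password.
  mysql -u root -p "$db" -e "CREATE TABLE IF NOT EXISTS \`$dst\` LIKE \`$src\`;"
}
```

Usage: create_export_table db_test_sqoop tb_test_sqoop tb_test_sqoop_export.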

View the command help:

[root@hadoop0 sqoop]# sqoop help export

sqoop export [GENERIC-ARGS] [TOOL-ARGS]

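# HDFS source path for the export: the HDFS directory to export

--export-dir <dir>

# Sets the input field separator: the field delimiter of the HDFS files being exported ("input" refers to the data Sqoop reads, not to the database table)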

--input-fields-terminated-by <char>
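Running the same export a second time would collide with existing primary keys, because the exported rows carry their id values. Sqoop's export tool documents --update-key <col> and --update-mode (updateonly or allowinsert) for this case; whether allowinsert works depends on the connector, and it is not verified in this setup. A sketch of a command builder; sqoop_export_cmd and the DRY_RUN variable (which only prints the command) are hypothetical.

```shell
#!/usr/bin/env bash
# sqoop_export_cmd (hypothetical helper): assemble the export command shown here,
# optionally with --update-key/--update-mode; DRY_RUN=1 only prints it.
sqoop_export_cmd() {
  local url="$1" table="$2" dir="$3" sep="$4" update_key="${5:-}"
  # Reuse the positional parameters as a POSIX-compatible argument list.
  set -- sqoop export --connect "$url" --username root -P \
    --table "$table" --export-dir "$dir" --input-fields-terminated-by "$sep"
  if [ -n "$update_key" ]; then
    set -- "$@" --update-key "$update_key" --update-mode allowinsert
  fi
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}
```

For example, sqoop_export_cmd jdbc:mysql://hadoop0:3306/db_test_sqoop tb_test_sqoop_export /user/hive/warehouse/tb_test_sqoop , id runs the export with updates keyed on id.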

Run the export:

sqoop export --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop_export --export-dir /user/hive/warehouse/tb_test_sqoop --input-fields-terminated-by ','

[root@hadoop0 conf]# sqoop export --connect jdbc:mysql://hadoop0:3306/db_test_sqoop --username root -P --table tb_test_sqoop_export --export-dir /user/hive/warehouse/tb_test_sqoop --input-fields-terminated-by ','

2022-06-14 23:23:47,235 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_test_sqoop_export` AS t LIMIT 1

2022-06-14 23:23:47,263 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `tb_test_sqoop_export` AS t LIMIT 1

2022-06-14 23:23:47,271 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/hadoop/hadoop-3.3.3

Note: /tmp/sqoop-root/compile/195e564b29f52726a22addac7420430c/tb_test_sqoop_export.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

2022-06-14 23:23:48,695 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/195e564b29f52726a22addac7420430c/tb_test_sqoop_export.jar

2022-06-14 23:23:48,716 INFO mapreduce.ExportJobBase: Beginning export of tb_test_sqoop_export

2022-06-14 23:23:48,716 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2022-06-14 23:23:48,886 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

2022-06-14 23:23:50,091 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

2022-06-14 23:23:50,095 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

2022-06-14 23:23:50,096 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

2022-06-14 23:23:50,195 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at hadoop0/192.168.147.155:8032

2022-06-14 23:23:50,601 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/root/.staging/job_1655201926342_0006

2022-06-14 23:23:52,398 INFO input.FileInputFormat: Total input files to process : 1

2022-06-14 23:23:52,401 INFO input.FileInputFormat: Total input files to process : 1

2022-06-14 23:23:52,487 INFO mapreduce.JobSubmitter: number of splits:4

2022-06-14 23:23:52,553 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

2022-06-14 23:23:52,674 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1655201926342_0006

2022-06-14 23:23:52,675 INFO mapreduce.JobSubmitter: Executing with tokens: []

2022-06-14 23:23:52,925 INFO conf.Configuration: resource-types.xml not found

2022-06-14 23:23:52,925 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2022-06-14 23:23:53,017 INFO impl.YarnClientImpl: Submitted application application_1655201926342_0006

2022-06-14 23:23:53,073 INFO mapreduce.Job: The url to track the job: http://hadoop0:8088/proxy/application_1655201926342_0006/

2022-06-14 23:23:53,074 INFO mapreduce.Job: Running job: job_1655201926342_0006

2022-06-14 23:24:08,561 INFO mapreduce.Job: Job job_1655201926342_0006 running in uber mode : false

2022-06-14 23:24:08,564 INFO mapreduce.Job: map 0% reduce 0%

2022-06-14 23:24:18,918 INFO mapreduce.Job: map 100% reduce 0%

2022-06-14 23:24:19,939 INFO mapreduce.Job: Job job_1655201926342_0006 completed successfully

2022-06-14 23:24:20,073 INFO mapreduce.Job: Counters: 33

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=1142272

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1525

HDFS: Number of bytes written=0

HDFS: Number of read operations=19

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=4

Data-local map tasks=4

Total time spent by all maps in occupied slots (ms)=31260

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=31260

Total vcore-milliseconds taken by all map tasks=31260

Total megabyte-milliseconds taken by all map tasks=32010240

Map-Reduce Framework

Map input records=6

Map output records=6

Input split bytes=671

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=1546

CPU time spent (ms)=8330

Physical memory (bytes) snapshot=991711232

Virtual memory (bytes) snapshot=11177525248

Total committed heap usage (bytes)=716177408

Peak Map Physical memory (bytes)=258891776

Peak Map Virtual memory (bytes)=2801577984

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

2022-06-14 23:24:20,082 INFO mapreduce.ExportJobBase: Transferred 1.4893 KB in 29.9739 seconds (50.8776 bytes/sec)

2022-06-14 23:24:20,085 INFO mapreduce.ExportJobBase: Exported 6 records.

[root@hadoop0 conf]#

Check the data in MySQL:

mysql> use db_test_sqoop;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+-------------------------+

| Tables_in_db_test_sqoop |

+-------------------------+

| tb_test_sqoop |

| tb_test_sqoop_export |

+-------------------------+

2 rows in set (0.00 sec)

mysql> desc tb_test_sqoop_export;

+-------------+-------------+------+-----+-------------------+-----------------------------+

| Field | Type | Null | Key | Default | Extra |

+-------------+-------------+------+-----+-------------------+-----------------------------+

| id | bigint(20) | NO | PRI | NULL | auto_increment |

| name | varchar(32) | YES | | NULL | |

| create_time | datetime | YES | | CURRENT_TIMESTAMP | |

| update_time | datetime | YES | | CURRENT_TIMESTAMP | on update CURRENT_TIMESTAMP |

+-------------+-------------+------+-----+-------------------+-----------------------------+

4 rows in set (0.00 sec)

mysql> select * from tb_test_sqoop_export;

+----+--------+---------------------+---------------------+

| id | name | create_time | update_time |

+----+--------+---------------------+---------------------+

| 1 | 张三 | 2022-06-14 16:33:42 | 2022-06-14 16:33:42 |

| 2 | 李四 | 2022-06-14 16:33:48 | 2022-06-14 16:33:48 |

| 3 | 王二 | 2022-06-14 16:33:55 | 2022-06-14 16:33:55 |

| 4 | 花花 | 2022-06-14 16:34:07 | 2022-06-14 16:34:07 |

| 5 | 二狗 | 2022-06-14 16:34:14 | 2022-06-14 16:34:14 |

| 6 | 球球 | 2022-06-14 16:34:32 | 2022-06-14 16:34:32 |

+----+--------+---------------------+---------------------+

6 rows in set (0.00 sec)

mysql>
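The row count can also be checked without logging in to MySQL, using sqoop eval (listed in the sqoop help output earlier) to run a query over the same JDBC connection. A sketch; count_rows_via_sqoop and DRY_RUN are hypothetical.

```shell
#!/usr/bin/env bash
# count_rows_via_sqoop (hypothetical helper): run SELECT COUNT(*) on a table
# through `sqoop eval`; with DRY_RUN=1 only print the command.
count_rows_via_sqoop() {
  local url="$1" table="$2"
  set -- sqoop eval --connect "$url" --username root -P \
    --query "SELECT COUNT(*) FROM $table"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}
```

For example, count_rows_via_sqoop jdbc:mysql://hadoop0:3306/db_test_sqoop tb_test_sqoop_export should report 6 after the export above.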