基于kubernetes组件初步部署k8s

基于k8s组件初步部署k8s

- kubernetes组件

- kubernetes简单化部署安装

-

- Master操作

-

- 环境检查

- 安装配置Containerd

-

- 安装Containerd

- 配置containerd

- 启动containerd

- 安装配置Kubeadm

-

- 安装Kubeadm

- 配置Circtl

- kubeadm配置

- 启动kubelet服务

- 拉取镜像

- 初始化集群

-

- 操作命令行

- 安装网络插件

- Node操作

-

- Node添加

- 报错解决

kubernetes组件

这里先认识一下k8s的一些组件,了解个组件的功能,有助于我们先部署安装一个简单的k8s环境,以帮助我们更好的理解k8s架构的工作和设计原理。

管理端Master组件

kube-apiserver

API 服务器是 Kubernetes 控制平面的组件, 该组件负责公开了 Kubernetes API,负责处理接受请求的工作

kube-controller-manager

负责运行控制器进程

控制器有哪些

- 节点控制器(Node Controller):负责在节点出现故障时进行通知和响应

- 任务控制器(Job Controller):监测代表一次性任务的 Job 对象,然后创建 Pods 来运行这些任务直至完成

- 端点分片控制器(EndpointSlice controller):填充端点分片(EndpointSlice)对象(以提供 Service 和 Pod 之间的链接)。

- 服务账号控制器(ServiceAccount controller):为新的命名空间创建默认的服务账号(ServiceAccount)。

kube-scheduler

负责监视新创建的、未指定运行节点(node)的 Pods, 并选择节点来让 Pod 在上面运行。

etcd

一致且高可用的键值存储,用作 Kubernetes 所有集群数据的后台数据库。

插件

(1)DNS

集群 DNS 是一个 DNS 服务器,和环境中的其他 DNS 服务器一起工作,它为 Kubernetes 服务提供 DNS 记录。

(2)Web仪表盘

Dashboard 是 Kubernetes 集群的通用的、基于 Web 的用户界面。 它使用户可以管理集群中运行的应用程序以及集群本身, 并进行故障排除

(3)容器资源监控

容器资源监控 将关于容器的一些常见的时间序列度量值保存到一个集中的数据库中, 并提供浏览这些数据的界面

(4)容器日志

集群层面日志机制负责将容器的日志数据保存到一个集中的日志存储中, 这种集中日志存储提供搜索和浏览接口

网络插件

是实现容器网络接口(CNI)规范的软件组件。它们负责为 Pod 分配 IP 地址,并使这些 Pod 能在集群内部相互通信。

节点组件

kubelet

接收一组通过各类机制提供给它的 PodSpecs, 确保这些 PodSpecs 中描述的容器处于运行状态且健康

kube-proxy

每个节点(node)上所运行的网络代理, 实现 Kubernetes 服务(Service) 概念的一部分

docker

rkt

rkt运行容器,作为docker工具的替代方案。

supervisord

fluentd

容器按持续运行的时间可划分为两类:

服务类容器

持续提供服务,一直运行:像web服务(Tomcat、Nginx、Apache),数据库服务(Mysql、Oracle、Sqlserver)、监控服务(zabbix、Prometheus)

工作类容器

一次性任务:比如批处理任务,执行完指令,容器就退出

kubernetes简单化部署安装

Master操作

环境检查

1.确保每个节点上 MAC 地址和 product_uuid 的唯一性

product_uuid 校验

[root@master ~]# cat /sys/class/dmi/id/product_uuid

8BBA4D56-0CC0-790A-81F7-B1E1D4ED40C4

获取网络接口的 MAC 地址

[root@master ~]# ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:ed:40:c4 brd ff:ff:ff:ff:ff:ff

2.确定端口未被占用

端口扫描,通的端口返回succeeded,不通的端口返回refused

[root@master ~]# nc -v -w 5 127.0.0.1 6443

Ncat: Version 7.50 ( https://nmap.org/ncat )

Ncat: Connection refused.

nc用法

-l 用于指定nc将处于侦听模式。指定该参数,则意味着nc被当作server,侦听并接受连接,而非向其它地址发起连接。

-p 暂未用到(老版本的nc可能需要在端口号前加-p参数,下面测试环境是centos6.6,nc版本是nc-1.84,未用到-p参数)

-s 指定发送数据的源IP地址,适用于多网卡机

-u 指定nc使用UDP协议,默认为TCP

-v 输出交互或出错信息,新手调试时尤为有用

-w 超时秒数,后面跟数字

-z 表示zero,表示扫描时不发送任何数据

说明:

Docker Engine 没有实现 CRI, 而这是容器运行时在 Kubernetes 中工作所需要的。 为此,必须安装一个额外的服务 cri-dockerd。 cri-dockerd 是一个基于传统的内置 Docker 引擎支持的项目, 它在 1.24 版本从 kubelet 中移除。

运行时 Unix 域套接字 containerd unix:///var/run/containerd/containerd.sockCRI-O unix:///var/run/crio/crio.sockDocker Engine(使用 cri-dockerd) unix:///var/run/cri-dockerd.sock

3.关闭Selinux

直到 kubelet对 SELinux 的支持进行升级为止

[root@master ~]# getenforce

Disabled

# 将 SELinux 设置为 permissive 模式(相当于将其禁用)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

4.禁用swap分区

vim /etc/fstab # 注释掉swap一行

安装配置Containerd

安装Containerd

新版本默认使用的运行时不再是docker,因此这里只安装containerd即可

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

sudo yum makecache fast

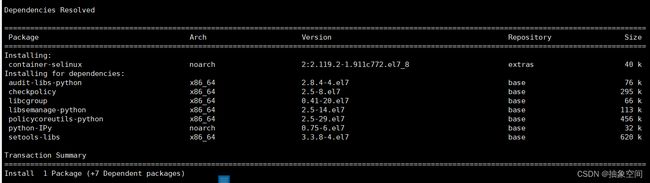

安装新版本的container-selinux

[root@master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@master ~]# yum install epel-release -y

[root@master ~]# yum clean all # 这一步必须清理yum 缓存

[root@master ~]# yum install container-selinux -y

配置yum源

修改为https

[root@master ~]# vim /etc/yum.repos.d/CentOS-Base.repo

baseurl=https://mirrors.aliyun.com/centos/$releasever/extras/$basearch/

https://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/

https://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/

安装containerd

[root@master ~]# yum install containerd.io -y

配置hosts

192.168.10.101 master

配置containerd

查看默认启用的配置

[root@master ~]# containerd config default

containerd默认配置文件内容

[root@master ~]# vim /etc/containerd/config.toml

命令行生产初始配置文件

[root@master ~]# containerd config default > /etc/containerd/config.toml

修改containerd配置文件

[root@master ~]# vim /etc/containerd/config.toml

[plugins]

...

[plugins."io.containerd.grpc.v1.cri"]

...

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6" # 配置软件源

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

...

SystemdCgroup = true # 配置cgroup类型

启动containerd

[root@master ~]# systemctl start containerd

安装配置Kubeadm

安装Kubeadm

1.配置yum软件源

官方源

cat <阿里云源

cat <2.安装kubeadm

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

配置Circtl

这时由于没有运行时的入口,我们可以修改crictl配置文件,获得containerd的sock信息

[root@master ~]# crictl config runtime-endpoint unix:///run/containerd/containerd.sock

[root@master ~]# crictl config image-endpoint unix:///run/containerd/containerd.sock

[root@master ~]# cat /etc/crictl.yaml

runtime-endpoint: "unix:///run/containerd/containerd.sock"

image-endpoint: "unix:///run/containerd/containerd.sock"

timeout: 0

debug: false

pull-image-on-create: false

disable-pull-on-run: fals

配置 cgroup 驱动程序

容器运行时和 kubelet 都具有名字为 “cgroup driver” 的属性,该属性对于在 Linux 机器上管理 CGroups 而言非常重要。

注意:你需要确保容器运行时和 kubelet 所使用的是相同的 cgroup 驱动,否则 kubelet 进程会失败

配置容器运行时 cgroup 驱动

由于 kubeadm 把 kubelet 视为一个 系统服务来管理, 所以对基于 kubeadm 的安装, 推荐使用 systemd 驱动, 不推荐 kubelet 默认的 cgroupfs 驱动。

在版本 1.22 及更高版本中,如果用户没有在 KubeletConfiguration 中设置 cgroupDriver 字段, kubeadm 会将它设置为默认值 systemd

配置示例

# kubeadm-config.yaml

kind: ClusterConfiguration

apiVersion: kubeadm.k8s.io/v1beta3

kubernetesVersion: v1.21.0

---

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

cgroupDriver: systemd

kubeadm配置

生成初始配置文件

[root@master ~]# kubeadm config print init-defaults > kubeadm.yml

查看所需镜像列表

[root@master ~]# kubeadm config images list --config kubeadm.yml

registry.k8s.io/kube-apiserver:v1.27.0

registry.k8s.io/kube-controller-manager:v1.27.0

registry.k8s.io/kube-scheduler:v1.27.0

registry.k8s.io/kube-proxy:v1.27.0

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.7-0

registry.k8s.io/coredns/coredns:v1.10.1

Kubeadm修改配置文件

[root@master ~]# vim kubeadm.yml

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.10.101 # 改为master的IP

bindPort: 6443

nodeRegistration:

criSocket: unix:///var/run/containerd/containerd.sock

imagePullPolicy: IfNotPresent

name: master # 修改名称

taints: null

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers # 改为阿里云源

kind: ClusterConfiguration

kubernetesVersion: 1.27.0

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

scheduler: {}

启动kubelet服务

目前启动kubelet报错

[root@master ~]# systemctl start kubelet

[root@master systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; disabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Fri 2023-07-07 10:53:24 CST; 1s ago

Docs: https://kubernetes.io/docs/

Process: 27867 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=1/FAILURE)

Main PID: 27867 (code=exited, status=1/FAILURE)

Jul 07 10:53:24 master systemd[1]: kubelet.service: main process exited, code=exited, status=1/FAILURE

Jul 07 10:53:24 master systemd[1]: Unit kubelet.service entered failed state.

Jul 07 10:53:24 master systemd[1]: kubelet.service failed.

拉取镜像

kubeadm config images pull --config kubeadm.yml

[root@master ~]# crictl images

IMAGE TAG IMAGE ID SIZE

registry.aliyuncs.com/google_containers/coredns v1.10.1 ead0a4a53df89 16.2MB

registry.aliyuncs.com/google_containers/etcd 3.5.7-0 86b6af7dd652c 102MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.27.0 6f707f569b572 33.4MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.27.0 95fe52ed44570 31MB

registry.aliyuncs.com/google_containers/kube-proxy v1.27.0 5f82fc39fa816 23.9MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.27.0 f73f1b39c3fe8 18.2MB

registry.aliyuncs.com/google_containers/pause 3.9 e6f1816883972 322kB

查看拉取的镜像

[root@master ~]# crictl image list

IMAGE TAG IMAGE ID SIZE

registry.aliyuncs.com/google_containers/coredns v1.10.1 ead0a4a53df89 16.2MB

registry.aliyuncs.com/google_containers/etcd 3.5.7-0 86b6af7dd652c 102MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.27.0 6f707f569b572 33.4MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.27.0 95fe52ed44570 31MB

registry.aliyuncs.com/google_containers/kube-proxy v1.27.0 5f82fc39fa816 23.9MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.27.0 f73f1b39c3fe8 18.2MB

registry.aliyuncs.com/google_containers/pause 3.9 e6f1816883972 322kB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.6 6270bb605e12e 302kB

初始化集群

[root@master ~]# kubeadm init --config=/data/kubeadm.yml --upload-certs --v=6

...

[addons] Applied essential addon: kube-proxy

I0709 13:49:53.255150 29494 loader.go:373] Config loaded from file: /etc/kubernetes/admin.conf

I0709 13:49:53.255484 29494 loader.go:373] Config loaded from file: /etc/kubernetes/admin.conf

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.10.101:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6ef00961d04157a81e8e60e04673835350a3995db28f5fb5395cfc13a21bb8f8

接下来的工作

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

查看kubelet状态:

此时kubelet已经起来了,但还是有报错:说网络还没有就绪,网络插件还未激活

[root@master ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; disabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Sun 2023-07-09 13:49:52 CST; 20min ago

Docs: https://kubernetes.io/docs/

Main PID: 29979 (kubelet)

Tasks: 11

Memory: 44.0M

CGroup: /system.slice/kubelet.service

└─29979 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --container-runtime-endpoint...

Jul 09 14:09:48 master kubelet[29979]: E0709 14:09:48.250500 29979 kubelet.go:2760] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady messag...t initialized"

Jul 09 14:09:53 master kubelet[29979]: E0709 14:09:53.251686 29979 kubelet.go:2760] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady messag...t initialized"

Jul 09 14:09:58 master kubelet[29979]: E0709 14:09:58.252762 29979 kubelet.go:2760] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady messag...t initialized"

查看启动的容器

[root@master ~]# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

f79b2b82e24aa 5f82fc39fa816 11 minutes ago Running kube-proxy 0 a05ef7d2d7a3b kube-proxy-bdzh6

f48bd5d031a38 95fe52ed44570 12 minutes ago Running kube-controller-manager 0 451a59c46907e kube-controller-manager-master

4ca2bf73d8a19 6f707f569b572 12 minutes ago Running kube-apiserver 0 e27c124e4422e kube-apiserver-master

7c6edea985069 86b6af7dd652c 12 minutes ago Running etcd 0 285d4ec481dae etcd-master

d1feeb8768dbd f73f1b39c3fe8 12 minutes ago Running kube-scheduler 0 f66a11a4ebd7f kube-scheduler-master

查看node状态

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 13m v1.27.3

操作命令行

| 命令 | docker | ctr(containerd) | crictl(kubernetes) |

|---|---|---|---|

| 查看运行的容器 | docker ps | ctr task ls/ctr container ls | crictl ps |

| 查看镜像 | docker images | ctr image ls | crictl images |

| 查看容器日志 | docker logs | 无 | crictl logs |

| 查看容器数据信息 | docker inspect | ctr container info | crictl inspect |

| 查看容器资源 | docker stats | 无 | crictl stats |

| 启动/关闭已有的容器 | docker start/stop | ctr task start/kill | crictl start/stop |

| 运行一个新的容器 | docker run | ctr run | 无(最小单元为 pod) |

| 打标签 | docker tag | ctr image tag | 无 |

| 创建一个新的容器 | docker create | ctr container create | crictl create |

| 导入镜像 | docker load | ctr image import | 无 |

| 导出镜像 | docker save | ctr image export | 无 |

| 删除容器 | docker rm | ctr container rm | crictl rm |

| 删除镜像 | docker rmi | ctr image rm | crictl rmi |

| 拉取镜像 | docker pull | ctr image pull | ctictl pull |

| 推送镜像 | docker push | ctr image push | 无 |

| 登录或在容器内部执行命令 | docker exec | 无 | crictl exec |

| 清空不用的容器 | docker image prune | 无 | crictl rmi --prune |

安装网络插件

[root@master data]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

--2023-07-09 14:34:07-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... \185.199.110.133, 185.199.109.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4615 (4.5K) [text/plain]

Saving to: ‘kube-flannel.yml’

100%[=======================================================================================================================================================================>] 4,615 --.-K/s in 0s

2023-07-09 14:34:07 (89.3 MB/s) - ‘kube-flannel.yml’ saved [4615/4615]

[root@master data]# ll

total 12

-rw-r--r-- 1 root root 840 Jul 9 13:23 kubeadm.yml

-rw-r--r-- 1 root root 4615 Jul 9 14:34 kube-flannel.yml

[root@master data]#

[root@master data]#

[root@master data]# sed -i 's/quay.io/quay-mirror.qiniu.com/g' kube-flannel.yml

[root@master data]#

[root@master data]#

[root@master data]# kubectl apply -f /data/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

Node操作

Node添加

[root@node1 ~]# kubeadm join 192.168.10.101:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:6ef00961d04157a81e8e60e04673835350a3995db28f5fb5395cfc13a21bb8f8

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

报错解决

kubeadm拉取镜像报错

[root@master ~]# kubeadm config images pull --config kubeadm.yml

failed to pull image "registry.k8s.io/kube-apiserver:v1.27.0": output: E0707 11:05:07.804670 28217 remote_image.go:171] "PullImage from image service failed" err="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/containerd/containerd.sock: connect: no such file or directory\"" image="registry.k8s.io/kube-apiserver:v1.27.0"

time="2023-07-07T11:05:07+08:00" level=fatal msg="pulling image: rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/containerd/containerd.sock: connect: no such file or directory\""

, error: exit status 1

原因:没有启动containerd服务

解决:

[root@master ~]# systemctl start containerd

报错

[root@master ~]# kubeadm config images pull --config kubeadm.yml

failed to pull image "registry.k8s.io/kube-apiserver:v1.27.0": output: time="2023-07-07T11:06:14+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/containerd/containerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

[root@master ~]# ll /var/run/containerd/containerd.sock

srw-rw---- 1 root root 0 Jul 7 11:05 /var/run/containerd/containerd.sock

crictl 是 kubernetes cri-tools 的一部分,是专门为 kubernetes 使用 containerd 而专门制作的,提供 了 Pod、容器和镜像等资源的管理命令。

需要注意的是:使用其他非 kubernetes 创建的容器、镜像,crictl 是无法看到和调试的,比如说 ctr run 在未指定 namespace 情况下运行起来的容器就无法使用 crictl 看到。当然 ctr 可以使用 -n k8s.io 指定操作的 namespace 为 k8s.io,从而可以看到/操作 kubernetes 集群中容器、镜像等资 源。可以理解为:crictl 操作的时候指定了 containerd 的 namespace 为 k8s.io。

作为接替 Docker 运行时的 Containerd 在早在 Kubernetes1.7 时就能直接与 Kubelet 集成使用,只是大部分时候我们因熟悉 Docker,在部署集群时采用了默认的 dockershim。在V1.24起的版本的 kubelet 就彻底移除了dockershim,改为默认使用Containerd了,当然也使用 cri-dockerd 适配器来将 Docker Engine 与 Kubernetes 集成

更换 Containerd 后,以往我们常用的 docker 命令也不再使用,取而代之的分别是 crictl 和 ctr 两个命令客户端。

[root@master ~]# ctr -v

ctr containerd.io 1.6.21

crictl是遵循 CRI 接口规范的一个命令行工具,通常用它来检查和管理kubelet节点上的容器运行时和镜像。ctr是containerd的一个客户端工具。ctr -v输出的是containerd的版本,crictl -v输出的是当前 k8s 的版本,从结果显而易见你可以认为crictl是用于k8s的。- 一般来说你某个主机安装了 k8s 后,命令行才会有 crictl 命令。而 ctr 是跟 k8s 无关的,你主机安装了 containerd 服务后就可以操作 ctr 命令。

初始化报错

[root@master ~]# kubeadm init --config=kubeadm.yml --upload-certs --v=6

[preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

error execution phase preflight

解决:

[root@master ~]# modprobe br_netfilter

[root@master ~]# cat /proc/sys/net/ipv4/ip_forward

0

[root@master ~]# echo 1 > /proc/sys/net/ipv4/ip_forward

[root@master ~]# cat /proc/sys/net/ipv4/ip_forward

1

参考:Kubernetes中文社区

使用crictl pull时报错:“unknown service runtime.v1alpha2.ImageService”

Containerd ctr、crictl、nerdctl 客户端命令介绍与实战操作