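Deploying Kubernetes Monitoring with kube-prometheus (Latest Version)

- Brief introduction

- Preparing the manifest files

  - 1. Modify the YAML to add persistent storage

  - 2. Install the NFS server

  - 3. Prepare the nfs-storage configuration

- Starting the services

  - 1. Deploy the CRDs

  - 2. Deploy nfs-storage

  - 3. Deploy kube-prometheus

  - 4. Deploy the ingress controller

- Access test

  - 1. Add entries to the local hosts file

  - 2. Access Prometheus

  - 3. Access Grafana

- References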

Brief introduction

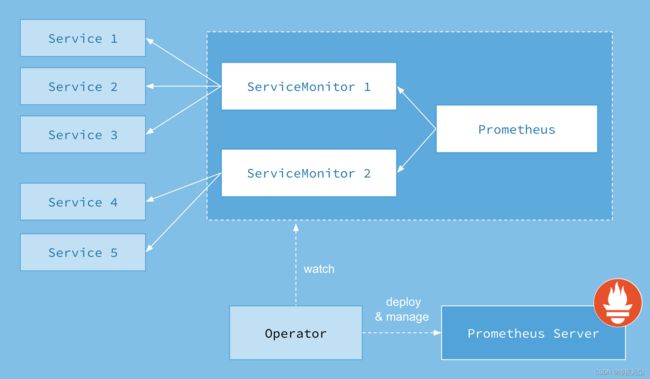

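To make Prometheus easier to use on Kubernetes, CoreOS provides an Operator, and the kube-prometheus project builds on it to offer a one-stop monitoring solution. kube-prometheus is a manifest-generation project written mainly in jsonnet: templates plus parameters are rendered into a set of YAML files. Its main purpose is to provide an out-of-the-box monitoring stack for Kubernetes clusters and for the applications running on them.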

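The project bundles the following software stack:

- The Prometheus Operator: creates the CRD-based custom resource objects

- Highly available Prometheus: a highly available Prometheus deployment

- Highly available Alertmanager: a highly available alerting component

- Prometheus node-exporter: the host-level monitoring component

- Prometheus Adapter for Kubernetes Metrics APIs: exposes custom metrics (for example, autoscaling an application based on nginx request metrics)

- kube-state-metrics: state metrics for Kubernetes resource objects

- Grafana: dashboards and visualization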

Prometheus Operator architecture diagram

Image source:

https://raw.githubusercontent.com/prometheus-operator/prometheus-operator/master/Documentation/user-guides/images/architecture.png

kube-prometheus compatibility

(https://github.com/prometheus-operator/kube-prometheus#kubernetes-compatibility-matrix) Following the compatibility matrix, this walkthrough deploys release-0.11.

| kube-prometheus stack | Kubernetes 1.18 | Kubernetes 1.19 | Kubernetes 1.20 | Kubernetes 1.21 | Kubernetes 1.22 | Kubernetes 1.23 | Kubernetes 1.24 |
|---|---|---|---|---|---|---|---|
| release-0.6 | ✗ | ✔ | ✗ | ✗ | ✗ | ✗ | ✗ |
| release-0.7 | ✗ | ✔ | ✔ | ✗ | ✗ | ✗ | ✗ |
| release-0.8 | ✗ | ✗ | ✔ | ✔ | ✗ | ✗ | ✗ |
| release-0.9 | ✗ | ✗ | ✗ | ✔ | ✔ | ✗ | ✗ |
| release-0.10 | ✗ | ✗ | ✗ | ✗ | ✔ | ✔ | ✗ |
| release-0.11 | ✗ | ✗ | ✗ | ✗ | ✗ | ✔ | ✔ |
| HEAD | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✔ |
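The matrix can also be read programmatically. The following is a minimal sketch, not part of kube-prometheus: the `COMPATIBILITY` dictionary is transcribed by hand from the table (HEAD omitted), and `compatible_releases` is a hypothetical helper name.

```python
from __future__ import annotations

# Transcribed from the compatibility matrix above (HEAD row omitted).
COMPATIBILITY = {
    "release-0.6": ["1.19"],
    "release-0.7": ["1.19", "1.20"],
    "release-0.8": ["1.20", "1.21"],
    "release-0.9": ["1.21", "1.22"],
    "release-0.10": ["1.22", "1.23"],
    "release-0.11": ["1.23", "1.24"],
}


def compatible_releases(k8s_version: str) -> list[str]:
    """Return the releases marked compatible with a Kubernetes version.

    Accepts "1.23", "v1.23.5", and similar; only major.minor is compared.
    """
    major_minor = ".".join(k8s_version.lstrip("v").split(".")[:2])
    return [rel for rel, versions in COMPATIBILITY.items() if major_minor in versions]


if __name__ == "__main__":
    # The version string would typically come from `kubectl version`.
    print(compatible_releases("v1.23.5"))
```

For a 1.23 or 1.24 cluster this returns release-0.11 among the candidates, which is why that release is used here.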

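Preparing the manifest files

Fetch the release-0.11 branch from the official repository, or download the release-0.11 archive directly.

git clone https://github.com/prometheus-operator/kube-prometheus.git

git -C kube-prometheus checkout release-0.11

# Alternatively, download the packaged archive directly

wget https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v0.11.0.tar.gz

tar -xvf v0.11.0.tar.gz

mv kube-prometheus-0.11.0 kube-prometheus

The download contains a large number of files, so it helps to sort them: create one directory per component and move the related YAML files into the matching directory.

cd kube-prometheus/manifests

mkdir -p adapter alertmanager blackbox grafana kube-state-metrics node-exporter operator other/{nfs-storage,ingress} prometheus

The final structure looks like this: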

manifests/

├── adapter

│ ├── prometheusAdapter-apiService.yaml

│ ├── prometheusAdapter-clusterRoleAggregatedMetricsReader.yaml

│ ├── prometheusAdapter-clusterRoleBindingDelegator.yaml

│ ├── prometheusAdapter-clusterRoleBinding.yaml

│ ├── prometheusAdapter-clusterRoleServerResources.yaml

│ ├── prometheusAdapter-clusterRole.yaml

│ ├── prometheusAdapter-configMap.yaml

│ ├── prometheusAdapter-deployment.yaml

│ ├── prometheusAdapter-networkPolicy.yaml

│ ├── prometheusAdapter-podDisruptionBudget.yaml

│ ├── prometheusAdapter-roleBindingAuthReader.yaml

│ ├── prometheusAdapter-serviceAccount.yaml

│ ├── prometheusAdapter-serviceMonitor.yaml

│ └── prometheusAdapter-service.yaml

├── alertmanager

│ ├── alertmanager-alertmanager.yaml

│ ├── alertmanager-networkPolicy.yaml

│ ├── alertmanager-podDisruptionBudget.yaml

│ ├── alertmanager-prometheusRule.yaml

│ ├── alertmanager-secret.yaml

│ ├── alertmanager-serviceAccount.yaml

│ ├── alertmanager-serviceMonitor.yaml

│ └── alertmanager-service.yaml

├── blackbox

│ ├── blackboxExporter-clusterRoleBinding.yaml

│ ├── blackboxExporter-clusterRole.yaml

│ ├── blackboxExporter-configuration.yaml

│ ├── blackboxExporter-deployment.yaml

│ ├── blackboxExporter-networkPolicy.yaml

│ ├── blackboxExporter-serviceAccount.yaml

│ ├── blackboxExporter-serviceMonitor.yaml

│ └── blackboxExporter-service.yaml

├── grafana

│ ├── grafana-config.yaml

│ ├── grafana-dashboardDatasources.yaml

│ ├── grafana-dashboardDefinitions.yaml

│ ├── grafana-dashboardSources.yaml

│ ├── grafana-deployment.yaml

│ ├── grafana-networkPolicy.yaml

│ ├── grafana-prometheusRule.yaml

│ ├── grafana-serviceAccount.yaml

│ ├── grafana-serviceMonitor.yaml

│ └── grafana-service.yaml

├── kube-state-metrics

│ ├── kubePrometheus-prometheusRule.yaml

│ ├── kubernetesControlPlane-prometheusRule.yaml

│ ├── kubernetesControlPlane-serviceMonitorApiserver.yaml

│ ├── kubernetesControlPlane-serviceMonitorCoreDNS.yaml

│ ├── kubernetesControlPlane-serviceMonitorKubeControllerManager.yaml

│ ├── kubernetesControlPlane-serviceMonitorKubelet.yaml

│ ├── kubernetesControlPlane-serviceMonitorKubeScheduler.yaml

│ ├── kubeStateMetrics-clusterRoleBinding.yaml

│ ├── kubeStateMetrics-clusterRole.yaml

│ ├── kubeStateMetrics-deployment.yaml

│ ├── kubeStateMetrics-networkPolicy.yaml

│ ├── kubeStateMetrics-prometheusRule.yaml

│ ├── kubeStateMetrics-serviceAccount.yaml

│ ├── kubeStateMetrics-serviceMonitor.yaml

│ └── kubeStateMetrics-service.yaml

├── node-exporter

│ ├── nodeExporter-clusterRoleBinding.yaml

│ ├── nodeExporter-clusterRole.yaml

│ ├── nodeExporter-daemonset.yaml

│ ├── nodeExporter-networkPolicy.yaml

│ ├── nodeExporter-prometheusRule.yaml

│ ├── nodeExporter-serviceAccount.yaml

│ ├── nodeExporter-serviceMonitor.yaml

│ └── nodeExporter-service.yaml

├── operator

│ ├── prometheusOperator-clusterRoleBinding.yaml

│ ├── prometheusOperator-clusterRole.yaml

│ ├── prometheusOperator-deployment.yaml

│ ├── prometheusOperator-networkPolicy.yaml

│ ├── prometheusOperator-prometheusRule.yaml

│ ├── prometheusOperator-serviceAccount.yaml

│ ├── prometheusOperator-serviceMonitor.yaml

│ └── prometheusOperator-service.yaml

├── other

│ ├── ingress

│ │ ├── deployment.yaml

│ │ └── prom-ingress.yaml

│ └── nfs-storage

│   ├── grafana-pvc.yaml

│   ├── nfs-provisioner.yaml

│   ├── nfs-rbac.yaml

│   └── nfs-storageclass.yaml

├── prometheus

│ ├── prometheus-clusterRoleBinding.yaml

│ ├── prometheus-clusterRole.yaml

│ ├── prometheus-networkPolicy.yaml

│ ├── prometheus-podDisruptionBudget.yaml

│ ├── prometheus-prometheusRule.yaml

│ ├── prometheus-prometheus.yaml

│ ├── prometheus-roleBindingConfig.yaml

│ ├── prometheus-roleBindingSpecificNamespaces.yaml

│ ├── prometheus-roleConfig.yaml

│ ├── prometheus-roleSpecificNamespaces.yaml

│ ├── prometheus-serviceAccount.yaml

│ ├── prometheus-serviceMonitor.yaml

│ └── prometheus-service.yaml

└── setup

    ├── 0alertmanagerConfigCustomResourceDefinition.yaml

    ├── 0alertmanagerCustomResourceDefinition.yaml

    ├── 0podmonitorCustomResourceDefinition.yaml

    ├── 0probeCustomResourceDefinition.yaml

    ├── 0prometheusCustomResourceDefinition.yaml

    ├── 0prometheusruleCustomResourceDefinition.yaml

    ├── 0servicemonitorCustomResourceDefinition.yaml

    ├── 0thanosrulerCustomResourceDefinition.yaml

    └── namespace.yaml

12 directories, 99 files
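The tree implies a simple rule: each upstream file goes into a directory chosen by its filename prefix. A minimal sketch of that sorting step follows; the prefix table is read off the tree, while `target_dir` and `sort_manifests` are hypothetical helper names and not part of kube-prometheus. Files under `setup/` and `other/` are left alone because only top-level `*.yaml` files are considered.

```python
import shutil
from pathlib import Path
from typing import Optional

# Filename prefix -> target directory, read off the directory tree above.
PREFIX_TO_DIR = {
    "prometheusAdapter-": "adapter",
    "alertmanager-": "alertmanager",
    "blackboxExporter-": "blackbox",
    "grafana-": "grafana",
    "kubePrometheus-": "kube-state-metrics",
    "kubernetesControlPlane-": "kube-state-metrics",
    "kubeStateMetrics-": "kube-state-metrics",
    "nodeExporter-": "node-exporter",
    "prometheusOperator-": "operator",
    "prometheus-": "prometheus",
}


def target_dir(filename: str) -> Optional[str]:
    """Return the directory a manifest belongs in, or None if no prefix matches."""
    for prefix, directory in PREFIX_TO_DIR.items():
        if filename.startswith(prefix):
            return directory
    return None


def sort_manifests(manifests: Path) -> None:
    """Move top-level *.yaml files in `manifests` into per-component directories."""
    for path in sorted(manifests.glob("*.yaml")):
        directory = target_dir(path.name)
        if directory is None:
            continue  # unknown prefix: leave the file where it is
        (manifests / directory).mkdir(parents=True, exist_ok=True)
        shutil.move(str(path), str(manifests / directory / path.name))
```

Calling `sort_manifests(Path("kube-prometheus/manifests"))` would reproduce the component directories; the trailing hyphen in each prefix keeps `prometheus-` from matching `prometheusOperator-` or `prometheusAdapter-` files.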

1. Modify the YAML to add persistent storage

manifests/prometheus/prometheus-prometheus.yaml

...
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.36.1
  # Added: persistent storage, appended at the end of spec
  retention: 3d
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: nfs-storage
        resources:
          requests:
            storage: 5Gi
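Whether 5Gi is enough for 3 days of retention depends on how many samples the cluster produces. The Prometheus storage documentation gives a rough sizing rule: needed disk space = retention time in seconds × ingested samples per second × bytes per sample, with 1 to 2 bytes per sample on average. The sketch below applies that rule; the ingestion rate of 10,000 samples per second is purely an assumed figure for illustration, and `needed_disk_bytes` is a hypothetical helper name.

```python
GIB = 1024 ** 3


def needed_disk_bytes(retention_days: float,
                      samples_per_second: float,
                      bytes_per_sample: float = 2.0) -> float:
    """Rough disk estimate: retention_seconds * samples_per_second * bytes_per_sample."""
    retention_seconds = retention_days * 24 * 60 * 60
    return retention_seconds * samples_per_second * bytes_per_sample


def fits(request_gib: float, retention_days: float, samples_per_second: float) -> bool:
    """True if the estimate stays within the requested volume size."""
    return needed_disk_bytes(retention_days, samples_per_second) <= request_gib * GIB


if __name__ == "__main__":
    # Assumed ingestion rate; measure the real one on your own cluster.
    estimate = needed_disk_bytes(retention_days=3, samples_per_second=10_000)
    print(f"{estimate / GIB:.2f} GiB needed, fits in 5Gi: {fits(5, 3, 10_000)}")
```

Under these assumptions the estimate lands just below 5 GiB, so a busier cluster would need a larger request or a shorter retention.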

manifests/grafana/grafana-deployment.yaml

...
      volumes:
      # - emptyDir: {}
      #   name: grafana-storage
      - name: grafana-storage
        persistentVolumeClaim:
          claimName: grafana-pvc

2. Install the NFS server

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
import os
import stat


def init_nfs():
    # nfs_str: export entry for the shared path
    nfs_str = "/data/nfs *(rw,sync,no_root_squash,no_subtree_check)\n"
    # Note: mode 'w' overwrites any existing /etc/exports
    with open('/etc/exports', 'w', encoding='utf8') as n:
        n.write(nfs_str)
    # exist_ok=True behaves like `mkdir -p`: create the path if it does not exist
    os.makedirs('/data/nfs', exist_ok=True)
    # Grant permissions, equivalent to: chmod 777 /data/nfs
    os.chmod('/data/nfs', stat.S_IRWXU | stat.S_IRWXG | stat.S_IRWXO)


def detect_system_type():
    # Read the distribution ID from /etc/os-release, e.g. ubuntu | centos.
    # The kernel version string from platform.uname() does not reliably name the distribution.
    with open('/etc/os-release', encoding='utf8') as f:
        for line in f:
            if line.startswith('ID='):
                return line.split('=', 1)[1].strip().strip('"').lower()
    return ''


def main():
    # system_type: operating system type, e.g. ubuntu | centos
    system_type = detect_system_type()
    if 'ubuntu' in system_type:
        # Install NFS
        os.system('apt install -y nfs-kernel-server rpcbind')
        init_nfs()
        # Start the services
        os.system('systemctl start rpcbind && systemctl start nfs-kernel-server.service')
        # Re-export and check that it succeeded; restart the server if not
        if os.system('exportfs -rv') != 0:
            os.system('/etc/init.d/nfs-kernel-server restart')
        # Enable at boot
        os.system('systemctl enable rpcbind && systemctl enable nfs-kernel-server.service')
    elif 'centos' in system_type:
        # Install NFS
        os.system('yum install -y nfs-utils rpcbind')
        init_nfs()
        # Start the services and enable them at boot
        os.system('systemctl start rpcbind && systemctl start nfs-server')
        os.system('systemctl enable rpcbind && systemctl enable nfs-server')


if __name__ == '__main__':
    main()

Use the script above to install the NFS server, or reuse an existing NFS server. NFS itself is optional: other storage backends, such as Ceph, work as well.
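When reusing an existing NFS server, overwriting `/etc/exports` would drop the exports already configured there. A minimal sketch of an append-only alternative follows, assuming the same export entry as the script; `ensure_export` is a hypothetical helper name.

```python
from pathlib import Path

# Same export entry as in the install script above.
EXPORT_ENTRY = "/data/nfs *(rw,sync,no_root_squash,no_subtree_check)"


def ensure_export(exports_file: Path, entry: str = EXPORT_ENTRY) -> bool:
    """Append `entry` to `exports_file` unless an identical line already exists.

    Returns True if the file was modified, False if the entry was present.
    """
    existing = exports_file.read_text(encoding="utf8") if exports_file.exists() else ""
    if entry in (line.strip() for line in existing.splitlines()):
        return False
    # Make sure the new entry starts on its own line.
    separator = "" if existing == "" or existing.endswith("\n") else "\n"
    with exports_file.open("a", encoding="utf8") as f:
        f.write(f"{separator}{entry}\n")
    return True
```

On a real server this would be called as `ensure_export(Path("/etc/exports"))` with root privileges, followed by `exportfs -rv` to reload the export table.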

3. Prepare the nfs-storage configuration

The NFS server must be installed beforehand, as described in the previous step.

manifests/other/nfs-storage/nfs-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: monitoring
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [ "" ]
    resources: [ "nodes" ]
    verbs: [ "get", "list", "watch" ]
  - apiGroups: [ "" ]
    resources: [ "persistentvolumes" ]
    verbs: [ "get", "list", "watch", "create", "delete" ]
  - apiGroups: [ "" ]
    resources: [ "persistentvolumeclaims" ]
    verbs: [ "get", "list", "watch", "update" ]
  - apiGroups: [ "storage.k8s.io" ]
    resources: [ "storageclasses" ]
    verbs: [ "get", "list", "watch" ]
  - apiGroups: [ "" ]
    resources: [ "events" ]
    verbs: [ "create", "update", "patch" ]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: monitoring
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: monitoring
rules:
  - apiGroups: [ "" ]
    resources: [ "endpoints" ]
    verbs: [ "get", "list", "watch", "create", "update", "patch" ]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: monitoring
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: monitoring
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

manifests/other/nfs-storage/nfs-provisioner.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: monitoring
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: nfs-client
            - name: NFS_SERVER
              value: 192.168.50.134
            - name: NFS_PATH
              value: /data/nfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.50.134
            path: /data/nfs

manifests/other/nfs-storage/nfs-storageclass.yaml

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  namespace: monitoring
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"  ## whether this is the default StorageClass
provisioner: nfs-client  ## dynamic provisioner name; must match the PROVISIONER_NAME value set in the provisioner Deployment above
reclaimPolicy: Retain  ## reclaim policy; a StorageClass defaults to Delete
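The one constraint that ties these manifests together is that the StorageClass `provisioner` field equals the `PROVISIONER_NAME` environment variable of the provisioner Deployment; if they differ, claims stay Pending. A minimal sketch of that check follows, assuming the manifests have already been loaded into plain dictionaries (for example with a YAML parser, which is not shown). The helper names are hypothetical.

```python
from typing import Optional


def provisioner_env_name(deployment: dict) -> Optional[str]:
    """Return the PROVISIONER_NAME env value from a Deployment manifest dict."""
    for container in deployment["spec"]["template"]["spec"]["containers"]:
        for env in container.get("env", []):
            if env["name"] == "PROVISIONER_NAME":
                return env.get("value")
    return None


def provisioner_matches(deployment: dict, storage_class: dict) -> bool:
    """True if the StorageClass points at the name the provisioner registers."""
    name = provisioner_env_name(deployment)
    return name is not None and name == storage_class.get("provisioner")


# Trimmed-down dictionaries mirroring the manifests above.
DEPLOYMENT = {
    "spec": {"template": {"spec": {"containers": [
        {"name": "nfs-client-provisioner",
         "env": [{"name": "PROVISIONER_NAME", "value": "nfs-client"},
                 {"name": "NFS_SERVER", "value": "192.168.50.134"},
                 {"name": "NFS_PATH", "value": "/data/nfs"}]},
    ]}}}
}
STORAGE_CLASS = {"metadata": {"name": "nfs-storage"}, "provisioner": "nfs-client"}

if __name__ == "__main__":
    print(provisioner_matches(DEPLOYMENT, STORAGE_CLASS))
```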

A PVC for Grafana is needed here. It points at the StorageClass created above, and the PV is then provisioned automatically.

manifests/other/nfs-storage/grafana-pvc.yaml

---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: grafana-pvc
  namespace: monitoring
spec:
  storageClassName: nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      # Adjust the size to your needs
      storage: 1Gi

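Starting the services

1. Deploy the CRDs

kubectl create -f setup/

Use create instead of apply here. With apply, the request is rejected because the annotations are too long (`kubectl apply --server-side` is another way around this limit): The CustomResourceDefinition "prometheuses.monitoring.coreos.com" is invalid: metadata.annotations: Too long: must have at most 262144 bytes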
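The error arises because client-side `kubectl apply` stores a JSON copy of the whole object in the `kubectl.kubernetes.io/last-applied-configuration` annotation, and the large CRDs push the annotations past the 262144-byte limit quoted in the message. The sketch below approximates that check for a manifest already loaded as a dictionary; it is an illustration of the size rule, not kubectl's actual code, and the function names are hypothetical.

```python
import json

MAX_ANNOTATIONS_BYTES = 262144  # limit quoted in the error message above


def last_applied_size(manifest: dict) -> int:
    """Approximate byte size of the JSON copy that client-side apply would store."""
    return len(json.dumps(manifest, separators=(",", ":")).encode("utf8"))


def apply_would_fit(manifest: dict) -> bool:
    """True if the serialized manifest alone stays within the annotation limit."""
    return last_applied_size(manifest) <= MAX_ANNOTATIONS_BYTES


if __name__ == "__main__":
    small = {"kind": "Namespace", "metadata": {"name": "monitoring"}}
    # Stand-in for a CRD with a very large OpenAPI schema.
    large = {"kind": "CustomResourceDefinition", "spec": {"schema": "x" * 300_000}}
    print(apply_would_fit(small), apply_would_fit(large))
```

`kubectl create` does not write this annotation, which is why it succeeds where client-side apply fails.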

Output:

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created

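2. Deploy nfs-storage

The previous steps only prepared the configuration files; the actual deployment starts here. Note:

If you use NFS for storage, do not forget to install NFS and grant permissions on the shared directory.

# Create nfs-storage and grafana-pvc

kubectl create -f other/nfs-storage/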

Output:

persistentvolumeclaim/grafana-pvc created

deployment.apps/nfs-client-provisioner created

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

storageclass.storage.k8s.io/nfs-storage created

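Check the created PVC and StorageClass, for example with `kubectl get pvc -n monitoring` and `kubectl get storageclass`:

If you look immediately after running the command, the PVC will still be in the Pending state, so give it a moment. If it stays Pending, check whether the nfs-storage provisioner pod started successfully, and make sure NFS is installed and the shared directory permissions are granted.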
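Waiting for the claim can be scripted rather than checked by hand. The sketch below polls until the PVC reports `Bound` or a timeout expires. `wait_until_bound` is a hypothetical helper that takes the phase lookup as a callable, and `kubectl_pvc_phase` shows one way to implement that lookup with `kubectl get pvc ... -o jsonpath={.status.phase}`; it assumes `kubectl` is on the PATH and configured for the cluster.

```python
import subprocess
import time
from typing import Callable


def kubectl_pvc_phase(name: str = "grafana-pvc", namespace: str = "monitoring") -> str:
    """Read .status.phase of a PVC via kubectl (needs access to the cluster)."""
    result = subprocess.run(
        ["kubectl", "get", "pvc", name, "-n", namespace,
         "-o", "jsonpath={.status.phase}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def wait_until_bound(get_phase: Callable[[], str],
                     timeout: float = 120.0,
                     interval: float = 5.0,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll `get_phase` until it returns "Bound"; return False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if get_phase() == "Bound":
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval)
```

A call such as `wait_until_bound(kubectl_pvc_phase)` returns `False` if the claim is still Pending after two minutes, which is the point at which to inspect the provisioner pod.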

3. Deploy kube-prometheus

# Start the services. I use `create` rather than `apply` here because I do not want to see the warning messages; they are harmless, but annoying

kubectl create -f adapter/ -f alertmanager/ -f blackbox/ -f grafana/ -f kube-state-metrics/ -f node-exporter/ -f operator/ -f prometheus/

Output:

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

networkpolicy.networking.k8s.io/prometheus-adapter created

poddisruptionbudget.policy/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

alertmanager.monitoring.coreos.com/main created

networkpolicy.networking.k8s.io/alertmanager-main created

poddisruptionbudget.policy/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager-main created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

networkpolicy.networking.k8s.io/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-grafana-overview created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

networkpolicy.networking.k8s.io/grafana created

prometheusrule.monitoring.coreos.com/grafana-rules created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

networkpolicy.networking.k8s.io/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

networkpolicy.networking.k8s.io/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

networkpolicy.networking.k8s.io/prometheus-operator created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

service/prometheus-operator created

serviceaccount/prometheus-operator created

servicemonitor.monitoring.coreos.com/prometheus-operator created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

networkpolicy.networking.k8s.io/prometheus-k8s created

poddisruptionbudget.policy/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

After startup, the result is as shown in the figure below:

4. Deploy the ingress controller

Prepare the ingress YAML

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.2.0/deploy/static/provider/baremetal/deploy.yaml -O deployment.yaml

manifests/other/ingress/deployment.yaml

Modify the ingress YAML. The parts that need to change are shown below.

- Add a PVC for the logs

    # add ingress logs volume-pvc
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: ingress-logs-pvc
      namespace: ingress-nginx
    spec:
      storageClassName: nfs-storage
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Gi

- Set the log paths in the ConfigMap

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.2.0
      name: ingress-nginx-controller
      namespace: ingress-nginx
    data:
      allow-snippet-annotations: "true"
      access-log-path: "/var/log/nginx/access.log"
      error-log-path: "/var/log/nginx/error.log"

- Disable the default NodePort exposure

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.2.0
      name: ingress-nginx-controller
      namespace: ingress-nginx
    spec:
      ports:
      - appProtocol: http
        name: http
        port: 80
        protocol: TCP
        targetPort: http
      - appProtocol: https
        name: https
        port: 443
        protocol: TCP
        targetPort: https
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
      # type: NodePort

- Use the host's ports and set the log output location

    ...
    spec:
      # expose ports on the host
      hostNetwork: true
      containers:
      - args:
        - /nginx-ingress-controller
        - --election-id=ingress-controller-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        - --v=2
        - --log_dir=/var/log/nginx/
        - --logtostderr=false

- Add the volume mount configuration

    ...
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
        - name: ingress-logs
          mountPath: /var/log/nginx/
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
      - name: ingress-logs
        persistentVolumeClaim:
          claimName: ingress-logs-pvc

- Write the ingress proxy rules

manifests/other/ingress/prom-ingress.yaml

    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: prom-ingress
      namespace: monitoring
    spec:
      ingressClassName: nginx
      rules:
        - host: alert.k8s.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: alertmanager-main
                    port:
                      number: 9093
        - host: grafana.k8s.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: grafana
                    port:
                      number: 3000
        - host: prom.k8s.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: prometheus-k8s
                    port:
                      number: 9090

# Create the ingress controller and the proxy rules

kubectl create -f other/ingress/

Access test

1. Add entries to the local hosts file

cat >> /etc/hosts <<EOF

192.168.50.134 alert.k8s.com grafana.k8s.com prom.k8s.com

EOF
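The Ingress routes purely on the HTTP `Host` header, so the rules can also be exercised without touching the hosts file by sending requests to the node IP with the header set explicitly. The sketch below uses only the standard library; the IP and host names are the ones used in this walkthrough, and `build_request` and `check` are hypothetical helper names.

```python
import urllib.request

NODE_IP = "192.168.50.134"  # node address used in the hosts entry above
HOSTS = ["alert.k8s.com", "grafana.k8s.com", "prom.k8s.com"]


def build_request(host: str, node_ip: str = NODE_IP) -> urllib.request.Request:
    """Build a GET request to the node IP that carries the Ingress host name."""
    return urllib.request.Request(f"http://{node_ip}/", headers={"Host": host})


def check(host: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code; raises urllib.error.URLError on failure."""
    with urllib.request.urlopen(build_request(host), timeout=timeout) as response:
        return response.status
```

Running `check(host)` for each entry in `HOSTS` from a machine that can reach the node shows whether each rule answers; an exception or a 404 from nginx points at the ingress side rather than at name resolution.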

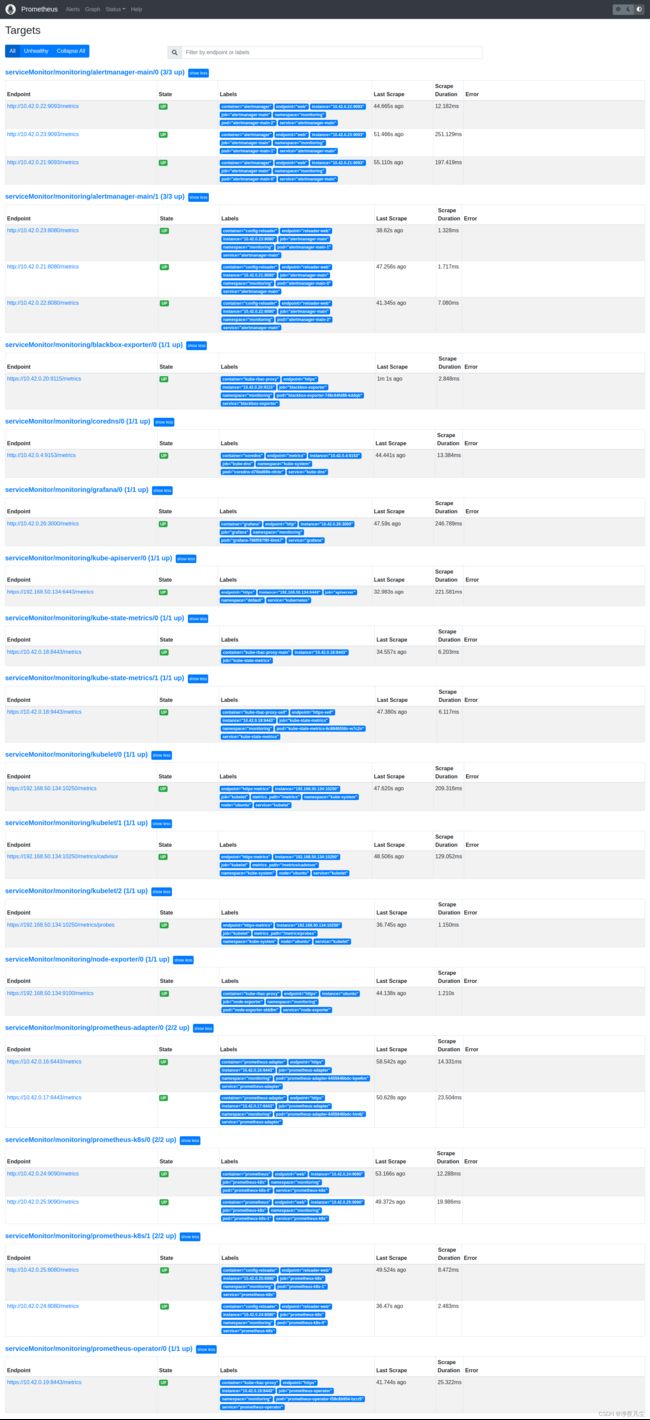

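2. Access Prometheus

http://prom.k8s.com

3. Access Grafana

http://grafana.k8s.com

The default username and password are both admin. On first login you will be asked to reset the password.

Dashboard template ID: 13105 https://grafana.com/grafana/dashboards/13105

The result looks like the figure below:

Update note: kube-prometheus has been upgraded from 0.8 to 0.11!

This completes the deployment of Prometheus monitoring on Kubernetes.

References

kube-prometheus official repository: https://github.com/prometheus-operator/kube-prometheus

Prometheus Operator documentation: https://prometheus-operator.dev/docs/prologue/introduction/

Files used in this article, on gitee: https://gitee.com/glnp/docker-case/tree/master/monitoring/prometheus/kube-prometheus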