Learning PyTorch 15: Optimizers

Optimizers

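- Official documentation

- How to construct an optimizer

- The optimizer's step method

- Code

- Running log

- How should optimization be corrected when the following problem occurs?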

Official documentation

https://pytorch.org/docs/stable/optim.html

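Questions: What is an optimizer? What does it optimize? What can optimization do, and what problem does it solve?

Answer: it optimizes the model's parameters, updating them from their gradients so that the loss decreases.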

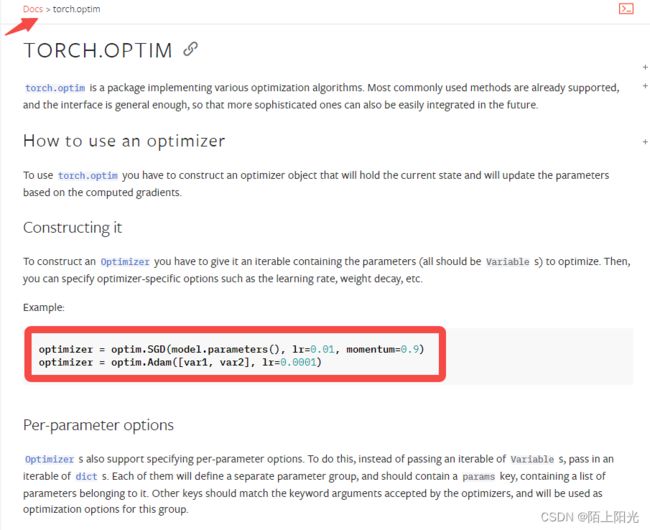

How to construct an optimizer

    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)  # momentum is a hyperparameter used by the SGD algorithm
    optimizer = optim.Adam([var1, var2], lr=0.0001)

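- Choose an optimization algorithm, such as SGD or Adam above.

- The first argument is the model parameters to optimize.

- The second and later arguments are specific to the chosen algorithm; lr (the learning rate) is used by almost every optimizer.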
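As a minimal sketch of the constructor calls above, the following builds both optimizers and reads back the hyperparameters they stored. The model (`nn.Linear(4, 2)`) and the tensors `var1` and `var2` are hypothetical stand-ins, chosen only so the snippet runs on its own.

```python
import torch
from torch import nn, optim

# Hypothetical tiny model, used only to have some parameters to optimize.
model = nn.Linear(4, 2)

# First argument: an iterable of the parameters to optimize.
# Remaining arguments: hyperparameters specific to the algorithm.
sgd = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

# An explicit list of tensors that require gradients also works.
var1 = torch.zeros(3, requires_grad=True)
var2 = torch.ones(3, requires_grad=True)
adam = optim.Adam([var1, var2], lr=0.0001)

# Hyperparameters are stored per parameter group.
sgd_group = sgd.param_groups[0]
adam_group = adam.param_groups[0]
print(sgd_group["lr"], sgd_group["momentum"], len(sgd_group["params"]))
print(adam_group["lr"], len(adam_group["params"]))
```

Reading `param_groups` this way is a quick check that the optimizer received the parameters and learning rate that were intended.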

The optimizer's step method

step() uses the gradients of the model's parameters to update those parameters once per iteration.

    for input, target in dataset:
        optimizer.zero_grad()  # required: clear the gradients from the previous iteration; otherwise they accumulate and the updates are wrong
        output = model(input)
        loss = loss_fn(output, target)
        loss.backward()
        optimizer.step()
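To make the role of the gradients concrete, here is a minimal sketch that assumes plain SGD without momentum or weight decay, where one step amounts to `w = w - lr * w.grad`. The parameter `w` and the loss are hypothetical. The last lines also show why `zero_grad()` matters: a second `backward()` adds to the gradient that is already stored.

```python
import torch
from torch import optim

# Hypothetical single parameter and loss, for illustration only.
w = torch.tensor([1.0, -2.0], requires_grad=True)
optimizer = optim.SGD([w], lr=0.1)  # plain SGD: no momentum, no weight decay

optimizer.zero_grad()      # clear gradients left from any previous iteration
loss = (w ** 2).sum()      # d(loss)/dw = 2 * w
loss.backward()            # fills w.grad

# What a plain SGD step should produce: w - lr * grad
expected = (w - 0.1 * w.grad).detach().clone()

optimizer.step()           # updates w in place using w.grad
matches = torch.allclose(w.detach(), expected)
print(matches)

# step() does not clear w.grad, so without zero_grad() the next
# backward() adds the new gradient to the old one.
(w ** 2).sum().backward()
accumulated = w.grad.clone()
print(accumulated)
```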

Alternatively, wrap the forward and backward pass in a closure and pass it to step():

    for input, target in dataset:
        def closure():
            optimizer.zero_grad()
            output = model(input)
            loss = loss_fn(output, target)
            loss.backward()
            return loss
        optimizer.step(closure)
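Some algorithms, such as `optim.LBFGS`, re-evaluate the loss several times within one step and therefore need the closure form. The following is a minimal sketch on a hypothetical least-squares problem with synthetic data; the model, data, and learning rate are assumptions made for illustration.

```python
import torch
from torch import nn, optim

torch.manual_seed(0)

# Hypothetical synthetic regression data: y = 3x + 1
x = torch.linspace(-1, 1, 20).unsqueeze(1)
y = 3 * x + 1

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = optim.LBFGS(model.parameters(), lr=0.1)

initial_loss = loss_fn(model(x), y).item()

for _ in range(20):
    def closure():
        # The optimizer may call this several times per step.
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        return loss

    optimizer.step(closure)

final_loss = loss_fn(model(x), y).item()
print(initial_loss, final_loss)
```

For optimizers such as SGD or Adam the closure is optional, so the simpler loop without it is usually enough.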

Code

    import torch
    import torchvision
    from torch import nn, optim
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    test_set = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                            download=True)
    dataloader = DataLoader(test_set, batch_size=1)


    class MySeq(nn.Module):
        def __init__(self):
            super(MySeq, self).__init__()
            self.model1 = Sequential(Conv2d(3, 32, kernel_size=5, stride=1, padding=2),
                                     MaxPool2d(2),
                                     Conv2d(32, 32, kernel_size=5, stride=1, padding=2),
                                     MaxPool2d(2),
                                     Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
                                     MaxPool2d(2),
                                     Flatten(),
                                     Linear(1024, 64),
                                     Linear(64, 10)
                                     )

        def forward(self, x):
            x = self.model1(x)
            return x


    # Define the loss function
    loss = nn.CrossEntropyLoss()

    # Build the network
    myseq = MySeq()
    print(myseq)

    # Define the optimizer
    optimizer = optim.SGD(myseq.parameters(), lr=0.001, momentum=0.9)

    for epoch in range(20):
        running_loss = 0.0
        for data in dataloader:
            imgs, targets = data
            # print(imgs.shape)
            output = myseq(imgs)
            optimizer.zero_grad()  # reset the gradients to zero every iteration; the previous gradients are of no use for this update
            result_loss = loss(output, targets)
            result_loss.backward()  # the optimizer needs each parameter's gradient, so step() must run after backward()
            optimizer.step()  # update each parameter according to its gradient
            # print(result_loss)
            # print(result_loss.grad)
            # print("ok")
            running_loss += result_loss  # adds the loss tensor itself, so the printed total carries a grad_fn
        print(running_loss)
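The totals printed by this loop are tensors that still carry autograd history, because `running_loss += result_loss` adds the loss tensor itself. If the total is only needed for logging, one common alternative is to accumulate `result_loss.item()`, which gives a plain Python float. A minimal sketch of the difference, using hypothetical stand-ins for the per-batch losses:

```python
import torch

# Hypothetical parameter and per-batch losses, for illustration only.
w = torch.tensor(2.0, requires_grad=True)
batch_losses = [w * 1.0, w * 2.0, w * 3.0]

# Accumulating the tensors keeps every batch's graph reachable from the total.
tensor_total = 0.0
for batch_loss in batch_losses:
    tensor_total += batch_loss

# Accumulating .item() keeps only the numeric value.
float_total = 0.0
for batch_loss in batch_losses:
    float_total += batch_loss.item()

print(tensor_total)  # a tensor with a grad_fn
print(float_total)   # a plain Python float
```

Switching to `.item()` changes only what is logged and how much memory is held; it does not change the parameter updates.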

Running log

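Ways to fix a loss that grows from small to large and finally becomes NaN:

- Lower the learning rate

- Use regularization techniques

- Add more training data

- Check the network architecture and activation functions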
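As a rough sketch of the first and last items, the loop below uses a lower learning rate and `ReLU` activations between the layers, and stops as soon as the loss is no longer finite. Neither change is confirmed as the cause of the NaN in this run; the model, data, and hyperparameter values are hypothetical stand-ins, not the CIFAR10 setup.

```python
import math
import torch
from torch import nn, optim

torch.manual_seed(0)

# Hypothetical small classifier with a nonlinearity between the linear layers.
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 3))
loss_fn = nn.CrossEntropyLoss()

# Assumed lower learning rate; a suitable value depends on the model and data.
optimizer = optim.SGD(model.parameters(), lr=1e-4, momentum=0.9)

# Hypothetical random data in place of a real dataset.
inputs = torch.randn(64, 8)
targets = torch.randint(0, 3, (64,))

epoch_losses = []
for epoch in range(5):
    optimizer.zero_grad()
    loss = loss_fn(model(inputs), targets)
    if not math.isfinite(loss.item()):
        # Stop early instead of continuing to train on NaN or inf.
        print(f"non-finite loss at epoch {epoch}, stopping")
        break
    loss.backward()
    optimizer.step()
    epoch_losses.append(loss.item())

print(epoch_losses)
```

Stopping at the first non-finite value makes it easier to see which epoch the divergence starts in before trying the other remedies.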

How should optimization be corrected when the following problem occurs?

    Files already downloaded and verified
    MySeq(
      (model1): Sequential(
        (0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (6): Flatten(start_dim=1, end_dim=-1)
        (7): Linear(in_features=1024, out_features=64, bias=True)
        (8): Linear(in_features=64, out_features=10, bias=True)
      )
    )
    tensor(18622.4551, grad_fn=<AddBackward0>)
    tensor(16121.4092, grad_fn=<AddBackward0>)
    tensor(15442.6416, grad_fn=<AddBackward0>)
    tensor(16387.4531, grad_fn=<AddBackward0>)
    tensor(18351.6152, grad_fn=<AddBackward0>)
    tensor(20915.9785, grad_fn=<AddBackward0>)
    tensor(23081.5254, grad_fn=<AddBackward0>)
    tensor(24841.8359, grad_fn=<AddBackward0>)
    tensor(25401.1602, grad_fn=<AddBackward0>)
    tensor(26187.4961, grad_fn=<AddBackward0>)
    tensor(28283.8633, grad_fn=<AddBackward0>)
    tensor(30156.9316, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)
    tensor(nan, grad_fn=<AddBackward0>)