Convolutional Neural Networks - Deep Learning basics with Python, TensorFlow and Keras p.3

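Welcome to a tutorial where we'll be discussing Convolutional Neural Networks (Convnets and CNNs), using one to classify dogs and cats with the dataset we built in the previous tutorial.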

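Convolutional neural networks gained their popularity through their use on image data, and they are currently among the state-of-the-art techniques for detecting what an image is, or what is contained in it.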

The basic CNN structure is as follows: Convolution -> Pooling -> Convolution -> Pooling -> Fully Connected Layer -> Output

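Convolution is the act of taking the original data and creating feature maps from it. Pooling is down-sampling, most often in the form of "max-pooling," where we select a region, take the maximum value in that region, and that value becomes the new value for the entire region. Fully Connected Layers are typical neural networks, where all of the nodes are "fully connected." The convolutional layers are not fully connected like a traditional neural network.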
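One practical consequence of convolutional layers not being fully connected is a much smaller weight count. The minimal sketch below counts trainable parameters (weights plus one bias per unit or filter) for a dense layer and for a 3x3 convolution. The 50x50 grayscale input size is an assumption for illustration, not something this tutorial specifies.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    # Every input connects to every unit, plus one bias per unit.
    return n_inputs * n_units + n_units


def conv2d_params(kernel_h: int, kernel_w: int, in_channels: int, filters: int) -> int:
    # Each filter only looks at a kernel_h x kernel_w x in_channels window,
    # and reuses the same weights at every position, plus one bias per filter.
    return kernel_h * kernel_w * in_channels * filters + filters


# Hypothetical 50x50 grayscale input feeding 256 units / 256 filters.
fc = dense_params(50 * 50 * 1, 256)
conv = conv2d_params(3, 3, 1, 256)
print(fc, conv)  # 640256 2560
```

The convolution's count does not depend on the image size at all, because the same small filter is slid across every position.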

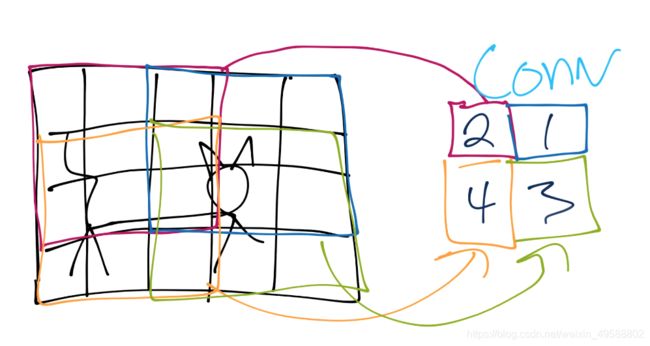

Okay, so now let's depict what's happening. We'll start with an image of a cat:

Then "convert to pixels:"

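For the purposes of this tutorial, assume each square is a pixel. Next, for the convolution step, we're going to take a certain window and find features within that window: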

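That window's features are now just a single pixel-sized feature in a new feature map, but in reality we will have multiple layers of feature maps.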
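To show how one window collapses into a single feature value, here is a minimal sketch: multiply each pixel by the matching weight of a small filter (kernel) and sum the products. The pixel values and the "vertical edge" kernel are hypothetical; a real CNN learns its kernel weights during training. Strictly speaking this is cross-correlation, which is what deep learning libraries implement under the name "convolution".

```python
def window_feature(window, kernel):
    """Collapse one window of pixels into a single feature value."""
    if len(window) != len(kernel) or any(len(r) != len(k) for r, k in zip(window, kernel)):
        raise ValueError("window and kernel must have the same shape")
    # Element-wise multiply the window by the kernel, then sum everything.
    return sum(p * w for row_p, row_w in zip(window, kernel) for p, w in zip(row_p, row_w))


window = [[0, 1, 2],
          [3, 4, 5],
          [6, 7, 8]]
# Hypothetical kernel that responds to left-to-right increases in brightness.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
print(window_feature(window, kernel))  # 6
```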

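Next, we slide that window over and continue the process. There will be some overlap, and you can determine how much you want; you just do not want to be skipping any pixels, of course.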

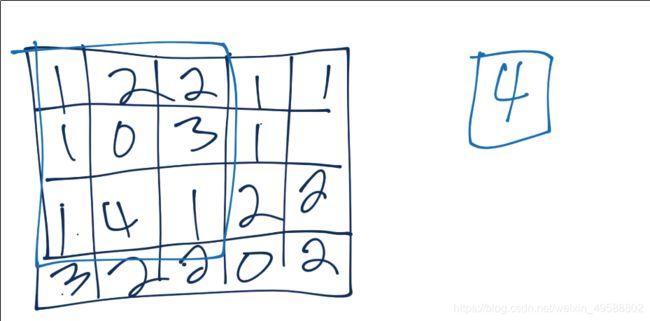
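How far the window moves each time is called the stride, and the stride controls both the overlap and whether any pixels get skipped. A minimal sketch along one axis, assuming no padding (Keras's default `"valid"` padding); the 5-pixel row and 3-pixel window are hypothetical:

```python
def output_size(n: int, window: int, stride: int) -> int:
    """Number of window positions along one axis, with no padding."""
    return (n - window) // stride + 1


def uncovered_pixels(n: int, window: int, stride: int) -> list:
    """Indices along one axis that no window position ever touches."""
    covered = set()
    for start in range(0, n - window + 1, stride):
        covered.update(range(start, start + window))
    return [i for i in range(n) if i not in covered]


n, window = 5, 3
for stride in (1, 2, 3):
    overlap = max(window - stride, 0)  # pixels shared by neighbouring windows
    print(stride, output_size(n, window, stride), overlap, uncovered_pixels(n, window, stride))
# 1 3 2 []
# 2 2 1 []
# 3 1 0 [3, 4]
```

Note the last case: even with no gap between windows, the edge pixels 3 and 4 are skipped because a full window no longer fits there.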

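Now you continue this process until you've covered the entire image, and then you will have a feature map. Typically, the feature map is just more pixel values, only a very simplified version: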
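Putting the window and the sliding together gives a whole feature map. This is a minimal pure-Python sketch (no padding, a configurable stride); the 4x4 image and the 2x2 all-ones kernel are hypothetical values chosen so the output is easy to check by hand.

```python
def convolve2d(image, kernel, stride=1):
    """Slide `kernel` over `image` and return the resulting feature map."""
    kh, kw = len(kernel), len(kernel[0])
    feature_map = []
    for top in range(0, len(image) - kh + 1, stride):
        row = []
        for left in range(0, len(image[0]) - kw + 1, stride):
            # One output value per window position: weighted sum of the window.
            row.append(sum(image[top + i][left + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        feature_map.append(row)
    return feature_map


image = [[4 * r + c for c in range(4)] for r in range(4)]  # toy 4x4 image, values 0..15
kernel = [[1, 1],
          [1, 1]]  # hypothetical kernel that sums each 2x2 window
print(convolve2d(image, kernel))            # [[10, 14, 18], [26, 30, 34], [42, 46, 50]]
print(convolve2d(image, kernel, stride=2))  # [[10, 18], [42, 50]]
```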

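From here, we do pooling. Let's say our convolution gave us the following (I forgot to put a number in the rightmost square of the second row; assume it's a 3 or less):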

Now we'll take a 3x3 pooling window:

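The most common form of pooling is "max pooling," where we simply take the maximum value in the window, and that becomes the new value for that region.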
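Max pooling with a 3x3 window can be sketched as follows, assuming non-overlapping windows (stride equal to the window size, which is also what Keras's `MaxPooling2D` does by default). The 6x6 feature map values are made up for illustration.

```python
def max_pool(feature_map, size=3, stride=None):
    """Replace each size x size region with its maximum value."""
    if stride is None:
        stride = size  # non-overlapping windows
    pooled = []
    for top in range(0, len(feature_map) - size + 1, stride):
        pooled.append([
            max(feature_map[top + i][left + j] for i in range(size) for j in range(size))
            for left in range(0, len(feature_map[0]) - size + 1, stride)
        ])
    return pooled


fmap = [
    [3, 0, 1, 2, 2, 1],
    [1, 2, 0, 0, 3, 1],
    [0, 1, 1, 5, 0, 0],
    [2, 0, 4, 1, 1, 2],
    [1, 1, 0, 0, 2, 0],
    [0, 3, 1, 2, 0, 1],
]
print(max_pool(fmap))  # [[3, 5], [4, 2]]
```

The 6x6 map shrinks to 2x2 while keeping the strongest response from each region, which is the down-sampling described above.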

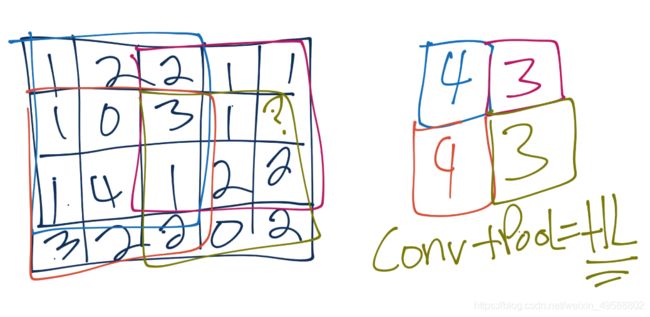

We continue this process until we've pooled the entire feature map, and end up with something like this:

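Each convolution and pooling step is a hidden layer. After this, we have a fully connected layer, followed by the output layer. The fully connected layer is your typical neural network (multilayer perceptron) type of layer, and the same goes for the output layer.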
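To see how the Conv -> Pool -> Conv -> Pool -> Fully Connected -> Output stack changes the data's shape, here is a small sketch that traces the layer sizes used in the model code of this tutorial. It assumes Keras defaults (stride 1 and "valid" padding for `Conv2D`, strides equal to `pool_size` for `MaxPooling2D`) and a hypothetical 50x50 grayscale input; the actual size depends on how the dataset was built in the previous tutorial.

```python
def trace_shapes(height, width, channels):
    """Return (layer, output shape) pairs for the Conv/Pool/Conv/Pool/Dense stack."""
    layers = [
        ("Conv2D(256, (3, 3)) + relu", "conv", 3, 256),
        ("MaxPooling2D((2, 2))", "pool", 2, None),
        ("Conv2D(256, (3, 3)) + relu", "conv", 3, 256),
        ("MaxPooling2D((2, 2))", "pool", 2, None),
    ]
    h, w, c = height, width, channels
    steps = [("input", (h, w, c))]
    for name, kind, size, filters in layers:
        if kind == "conv":
            # "valid" 3x3 convolution trims one pixel from each edge.
            h, w, c = h - size + 1, w - size + 1, filters
        else:
            # 2x2 pooling with stride 2 halves height and width (rounding down).
            h, w = h // size, w // size
        steps.append((name, (h, w, c)))
    steps.append(("Flatten", (h * w * c,)))
    steps.append(("Dense(64)", (64,)))
    steps.append(("Dense(1) + sigmoid", (1,)))
    return steps


for name, shape in trace_shapes(50, 50, 1):
    print(f"{name:28} {shape}")
```

With a 50x50 input, the feature maps go 48 -> 24 -> 22 -> 11 pixels per side, and `Flatten` turns the final 11x11x256 block into a vector of 30,976 values before the fully connected layer.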

```python
import pickle

import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Activation, Flatten
from tensorflow.keras.layers import Conv2D, MaxPooling2D

# Load the features and labels saved in the previous tutorial.
with open("X.pickle", "rb") as pickle_in:
    X = pickle.load(pickle_in)

with open("y.pickle", "rb") as pickle_in:
    y = np.array(pickle.load(pickle_in))  # Keras expects an array, not a Python list

# Scale pixel values from 0-255 down to 0-1.
X = X / 255.0

model = Sequential()

# First convolution + pooling block.
model.add(Conv2D(256, (3, 3), input_shape=X.shape[1:]))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

# Second convolution + pooling block.
model.add(Conv2D(256, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())  # this converts our 3D feature maps to 1D feature vectors

# Fully connected layer.
model.add(Dense(64))

# Output layer: one sigmoid unit for the two classes (cat vs. dog).
model.add(Dense(1))
model.add(Activation('sigmoid'))

model.compile(loss='binary_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

model.fit(X, y, batch_size=32, epochs=3, validation_split=0.3)
```

```text
Train on 17441 samples, validate on 7475 samples
Epoch 1/3
17441/17441 [==============================] - 8s 467us/step - loss: 0.6639 - acc: 0.6005 - val_loss: 0.6386 - val_acc: 0.6384
Epoch 2/3
17441/17441 [==============================] - 7s 404us/step - loss: 0.6059 - acc: 0.6749 - val_loss: 0.5673 - val_acc: 0.7025
Epoch 3/3
17441/17441 [==============================] - 7s 404us/step - loss: 0.5385 - acc: 0.7339 - val_loss: 0.5626 - val_acc: 0.7171
```

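After just three epochs, we have about 71.7% validation accuracy (val_acc: 0.7171). If we kept going, we could probably do even better, but first we should discuss how we actually know how the model is doing. To help with this, we can use TensorBoard, which comes with TensorFlow and helps you visualize your models as they are trained.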
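Before reaching for a visualization tool, you can already read a lot from the per-epoch numbers. In Keras, `model.fit` returns a `History` object whose `history` attribute is a dict of per-epoch metric lists; the key names depend on the version (this log uses `acc`/`val_acc`, newer versions use `accuracy`/`val_accuracy`). The sketch below copies the numbers from the training log above into a dict of that shape and reports the epoch with the lowest validation loss and the train-minus-validation accuracy gap per epoch. The `summarize` helper is hypothetical, written only for this illustration.

```python
# Per-epoch metrics copied from the training log above.
history = {
    "loss": [0.6639, 0.6059, 0.5385],
    "acc": [0.6005, 0.6749, 0.7339],
    "val_loss": [0.6386, 0.5673, 0.5626],
    "val_acc": [0.6384, 0.7025, 0.7171],
}


def summarize(history):
    """Return (1-based epoch with lowest val_loss, train-minus-val accuracy gaps)."""
    val_loss = history["val_loss"]
    best = min(range(len(val_loss)), key=lambda i: val_loss[i])
    gaps = [round(t - v, 4) for t, v in zip(history["acc"], history["val_acc"])]
    return best + 1, gaps


best_epoch, gaps = summarize(history)
print(best_epoch, gaps)
```

Validation loss is still falling at epoch 3, but training accuracy has just overtaken validation accuracy, which is the kind of trend worth watching as training continues.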

We'll talk about TensorBoard, as well as various tweaks to our model, in the next tutorial!

Next tutorial: Analyzing Models with TensorBoard - Deep Learning basics with Python, TensorFlow and Keras p.4