【MapReduce】MapReduce读写MySQL数据

MapReduce读写MySQL数据

- 数据

- 代码实现

-

- 自定义类来接收源数据

- 自定义类型来存储结果数据

- Mapper阶段

- Reducer阶段

- Driver阶段

- 上传运行

-

- 打包

- 上传集群运行

使用MapReduce读取MySQL的数据,完成单词的计数,并且将数据存储到MySQL的表里,并且将程序打包到集群上运行

数据

代码实现

自定义类来接收源数据

之所以使用Text.writeString(dataOutput,words);是因为dataoutput没有string类型的方法,他与直接使用dataoutput除了结果的数据类型不一样外,其他的完全一样

statement.setString(1, words);和·words = resultSet.getString(1);数字代表的是在MySQL表中的第几列

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.lib.db.DBWritable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

public class ReceiveTable implements DBWritable, Writable {

private String words;

public void write(DataOutput dataOutput) throws IOException {

Text.writeString(dataOutput,words);

}

public void readFields(DataInput dataInput) throws IOException {

words = dataInput.readUTF();

}

public void write(PreparedStatement statement) throws SQLException {

statement.setString(1, words);

}

public void readFields(ResultSet resultSet) throws SQLException {

words = resultSet.getString(1);

}

public String getWord() {

return words;

}

public void setWord(String word) {

this.words = word;

}

}

自定义类型来存储结果数据

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.mapreduce.lib.db.DBWritable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

public class SendTable implements Writable, DBWritable {

private String word;

private int count;

public void write(DataOutput dataOutput) throws IOException {

Text.writeString(dataOutput,word);

dataOutput.writeInt(this.count);

}

public void readFields(DataInput dataInput) throws IOException {

this.word = dataInput.readUTF();

this.count = dataInput.readInt();

}

public void write(PreparedStatement statement) throws SQLException {

statement.setString(1, this.word);

statement.setInt(2, this.count);

}

public void readFields(ResultSet resultSet) throws SQLException {

word = resultSet.getString(1);

count = resultSet.getInt(2);

}

public void set(String word, int count) {

this.word = word;

this.count = count;

}

public String getWord() {

return word;

}

public void setWord(String word) {

this.word = word;

}

public int getCount() {

return count;

}

public void setCount(int count) {

this.count = count;

}

}

Mapper阶段

Mapper端接受的value是自定义的接收数据类型,这是因为现在读取的信息不是文件,而是从数据库里读取,ReceiveTable会逐行读取接收数据

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class MapTest extends Mapper<LongWritable, ReceiveTable, Text, IntWritable> {

Text k = new Text();

IntWritable v = new IntWritable(1);

@Override

protected void map(LongWritable key, ReceiveTable value, Context context) throws IOException, InterruptedException {

k.set(value.getWord());

context.write(k, v);

}

}

Reducer阶段

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class RedTest extends Reducer<Text, IntWritable, SendTable, NullWritable> {

SendTable k = new SendTable();

int count = 0;

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

for (IntWritable v : values) {

count++;

}

k.set(key.toString(), count);

System.out.println(k.getWord()+":"+k.getCount());

context.write(k, NullWritable.get());

count=0;

}

}

Driver阶段

BasicConfigurator.configure();是配置日志文件conf.set("fs.defaultFS","hdfs://d:9000");设置集群信息DBConfiguration.configureDB(conf, "com.mysql.jdbc.Driver", "jdbc:mysql://192.168.0.155:3306/data", "root", "123456");配置数据库信息,几个参数分别是Configuration、Driver类、MySQL所在的主机IP和端口,用户名,密码- f1和f1分别是输入表和输出表的列名

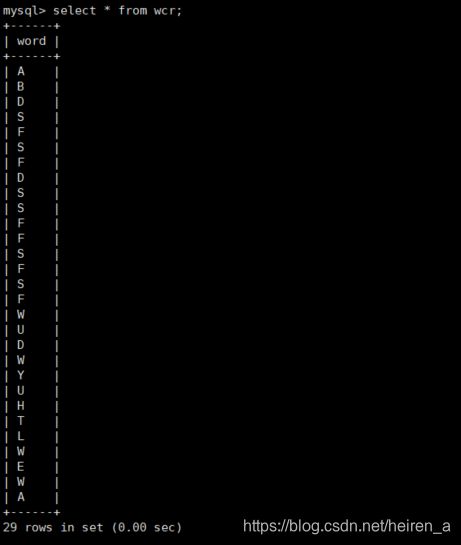

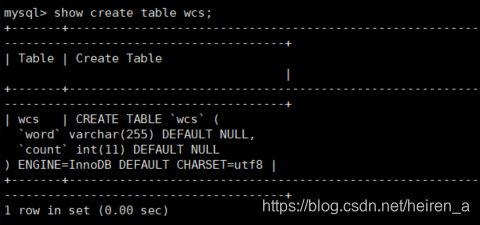

DBInputFormat.setInput(job, ReceiveTable.class, "wcr", null, "word", f1);参数分别是Job、接收数据类型、输入表名,条件语句、orderby字段名及每列列名DBOutputFormat.setOutput(job,"wcs",f2);几个参数分别是Job,输出表名,输出表的列名数组

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.db.DBConfiguration;

import org.apache.hadoop.mapreduce.lib.db.DBInputFormat;

import org.apache.hadoop.mapreduce.lib.db.DBOutputFormat;

import org.apache.log4j.BasicConfigurator;

import java.io.IOException;

public class DriTest {

public static void main(String[] args) {

try {

BasicConfigurator.configure();

Configuration conf = new Configuration();

conf.set("fs.defaultFS","hdfs://d:9000");

DBConfiguration.configureDB(conf, "com.mysql.jdbc.Driver", "jdbc:mysql://192.168.0.155:3306/data", "root", "123456");

Job job = Job.getInstance(conf,"mr");

job.setJarByClass(DriTest.class);

job.setMapperClass(MapTest.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

job.setReducerClass(RedTest.class);

job.setOutputKeyClass(SendTable.class);

job.setOutputValueClass(NullWritable.class);

String[] f1 = {"word"};

String[] f2 = {"word", "count"};

DBInputFormat.setInput(job, ReceiveTable.class, "wcr", null, "word", f1);

DBOutputFormat.setOutput(job,"wcs",f2);

System.exit(job.waitForCompletion(true) ? 0 : 1);

} catch (IOException e) {

e.printStackTrace();

} catch (InterruptedException e) {

e.printStackTrace();

} catch (ClassNotFoundException e) {

e.printStackTrace();

}

}

}

上传运行

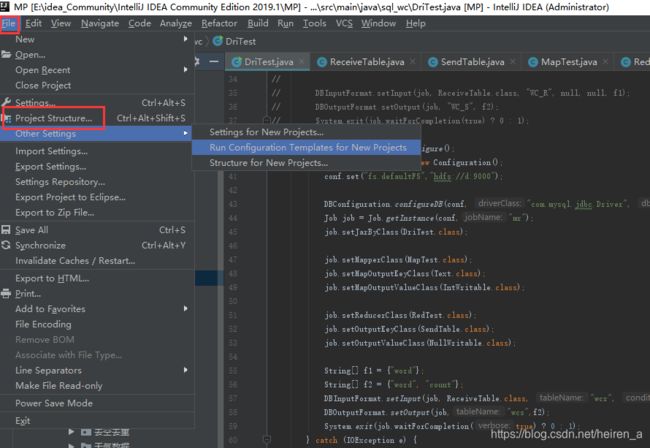

打包

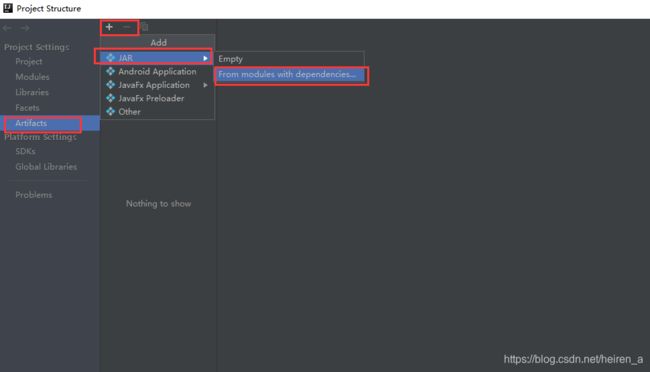

File—>Project Structure

选择你所需要的主类即可

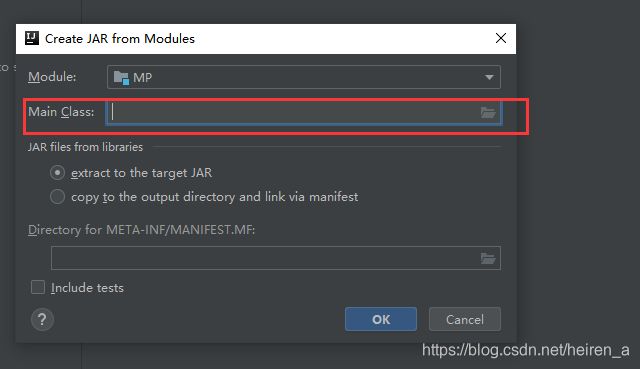

上传集群运行

hadoop jar MP.jar sql_wc.DriTest运行代码,hadoop jar jar包路径 主类绝对路径

发现报错,找不到类,原因是我们在编写代码的时候使用了第三方的jar包,但是我们在上传集群时,只是将代码打包了,而第三方jar包却没有,所以此时我们应该将第三方的jar包上传至集群的每一个节点的common目录下,common的位置在/hadoop/hadoop/share/hadoop/common/

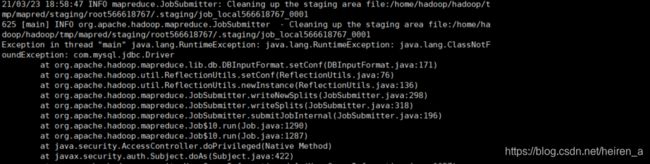

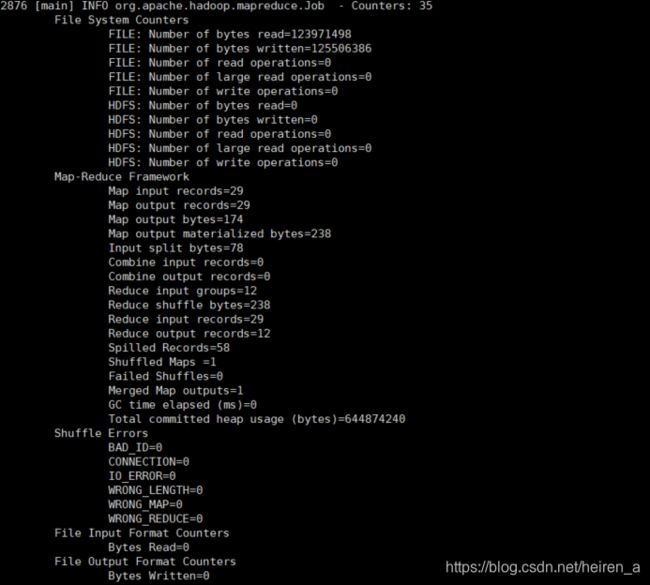

再次运行

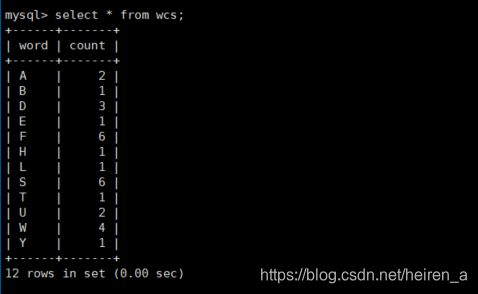

查看输出表