Kubernetes Practice Notes

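Table of Contents

- Day 2: Putting Kubernetes into Practice
- Problems with a Containers-Only Approach
- Container Orchestration Platforms
- Architecture Diagram
- Core Components
- Workflow
- Thoughts on the Architecture Design
- Practice: Cluster Installation
- Comparison of Mainstream Kubernetes Installation Methods
- Core Components
- Understanding Cluster Resources
- Using kubectl
- Practice: Managing Business Applications with Kubernetes
- The Smallest Scheduling Unit: Pod
- Why Pods Were Introduced
- Defining a Pod in YAML
- Creating and Accessing a Pod
- The Infra Container
- Viewing Pod Details
- Troubleshooting and Debugging
- Updating the Service Version
- Deleting the Pod
- Pod Data Persistence
- Adding, Removing, Changing, and Querying Labels
- Service Health Checks
- Restart Policy
- Image Pull Policy
- Pod Resource Limits
- YAML Optimization
- How to Write Resource YAML
- Pod Status and Lifecycle
- Summary
- Pod Controllers
- Workloads
- Deployment
- Creating a Deployment
- Viewing a Deployment
- Replica Guarantee Mechanism
- Pod Eviction Policy
- Service Updates
- Update Strategy
- Service Rollback
- Accessing Services in Kubernetes: Service
- Service Load Balancing with Cluster IP
- Service Discovery
- Service Load Balancing with NodePort
- kube-proxy
- Accessing Services in Kubernetes: Ingress
- Diagram
- Implementation Logic
- Installation
- Access
- Multi-Path Forwarding and Rewriting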

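Day 2: Putting Kubernetes into Practice

Problems with a Containers-Only Approach

- With a large number of business containers, how do you record and manage which containers run on which nodes and which ports they use? Do you have to log in to every machine to manage them?
- Cross-host communication: how do containers on different machines call one another? Are the iptables rules maintained by hand?
- For calls between containers on different hosts, how is the configuration written? With a hard-coded IP and port?
- How is high availability achieved? When several containers serve the same traffic, how is load balancing implemented?
- When a container's service is interrupted, how is that detected, and once detected, how is a new container started automatically?
- How is a rolling upgrade performed so that the service stays continuously available?
- …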

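Container Orchestration Platforms

- Docker Swarm
- Mesos
- Google Kubernetes

Since 2017, Kubernetes has steadily taken over the market thanks to its strong container cluster management capabilities, and it is now the dominant platform in container orchestration.

https://kubernetes.io/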

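Architecture Diagram

How would you design a container management platform?

- Cluster architecture: management nodes dispatch containers to the data nodes (kubelet)
- How to deploy business containers to the data nodes (YAML manifest files)
- With N data nodes, how to choose the most suitable node for a business container (the scheduler, kube-scheduler)
- How to run multiple replicas of a container, and how to support the one-container-per-machine model (the controller manager, controller-manager)
- How to load-balance across replicas inside the cluster (kube-proxy)

It is a distributed system with two kinds of roles: management nodes and worker nodes.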


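Core Components

- etcd: a distributed, high-performance key-value database that stores all metadata for the entire cluster.
- APIServer: the API server and access-control entry point for cluster resources; it provides the REST API and secure access control.
- Scheduler: schedules business containers onto the most suitable Node.
- Controller Manager: makes sure cluster resources run in the desired way. It contains the following controllers:
  - Replication Controller:
    - Ensures the Pod count: it makes sure the specified number of Pods is running in Kubernetes. If there are fewer than specified, it creates new ones; if there are more, it deletes the surplus, keeping the count constant.
    - Ensures Pod health: when a Pod is unhealthy, fails, or cannot serve, it kills the unhealthy Pod and creates a new one.
    - Elastic scaling: during traffic peaks or troughs, the number of Pods can be adjusted dynamically through the Replication Controller to improve resource utilization. With monitoring configured (Horizontal Pod Autoscaler), the overall resource usage of the Pods it manages is fetched from the monitoring platform on a schedule and scaling happens automatically.
    - Rolling upgrade: a smooth upgrade approach that replaces Pods step by step, keeping the system stable overall so that problems can be found and fixed at the start of the upgrade instead of spreading.
  - Node Controller: Nodes are managed by the master; a node monitors and reports the state of its containers and manages container lifecycles as the master requires.
  - ResourceQuota Controller: defines a resource quota for each namespace, limiting total resource consumption to keep cluster resources effectively used and stable.
  - Namespace Controller: namespaces are often used for multi-tenant resource isolation; different businesses can be isolated in different namespaces.
  - ServiceAccount Controller: manages ServiceAccounts in namespaces and ensures that every active namespace has a ServiceAccount named "default".
  - Token Controller:
    - Watches for ServiceAccount creation and creates a Secret for each one
    - Watches for ServiceAccount deletion and deletes all corresponding ServiceAccountToken Secrets
    - Watches for Secret creation, ensures the referenced ServiceAccount exists, and adds a token to the Secret if needed
    - Watches for Secret deletion and removes the reference from the corresponding ServiceAccount if needed
  - Service Controller: manages the cloud provider load balancers that back Services of type LoadBalancer. (A Service Account, by comparison, is what is used to access the Kubernetes API.)
  - Endpoints Controller: generates and maintains all Endpoints objects. It watches Services and their Pod replicas. If a Service is deleted, it deletes the Endpoints object with the same name. If a Service is created or modified, it obtains the matching Pod list from the Service's information and creates or updates the Service's Endpoints object.
- kubelet: the primary "node agent" running on every node; it does the heavy lifting.
  - Pod management: the kubelet periodically fetches the desired state of the Pods/containers on its node from the sources it watches (which containers to run, how many replicas, how networking and storage are configured, and so on) and calls the container platform's interfaces to reach that state.
  - Container health checks: after creating a container, the kubelet checks whether it is running normally; if the container fails, the kubelet handles it according to the Pod's restart policy.
  - Container monitoring: the kubelet monitors resource usage on its node and reports it to the master at regular intervals. The usage data comes from cAdvisor. Knowing the resource situation of every node in the cluster is essential for scheduling Pods and keeping them running.
- kube-proxy: maintains the iptables or ipvs rules on each node.
- kubectl: the command-line interface for running commands against a Kubernetes cluster. https://kubernetes.io/zh/docs/reference/kubectl/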

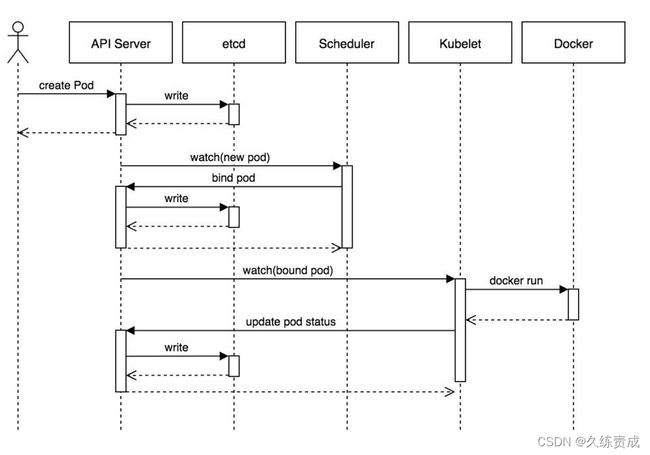

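Workflow

- The user prepares a resource file (recording the application's name, image address, and so on) and creates a Pod by calling the APIServer
- The APIServer receives the Pod creation request and writes the Pod information to etcd
- Through list-watch, the scheduler discovers the new Pod, which is not yet bound to any node
- The scheduler runs its scheduling algorithm to find the most suitable node for the Pod and calls the APIServer to update that information in etcd
- The kubelet, also through list-watch, discovers that a new Pod has been scheduled to its node. It calls the container runtime to pull the image and start the containers according to the Pod description, and generates event information
- At the same time, the container's information, events, and status are written to etcd through the APIServer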
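The steps above can be sketched as a toy simulation. This is only an illustration under stated assumptions, not how Kubernetes is implemented: the `ApiServer`, `scheduler_pass`, and `kubelet_pass` names are hypothetical, list-watch is reduced to a plain filtered list, and "most suitable node" is simplified to "most free CPU".

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Pod:
    name: str
    image: str
    node: Optional[str] = None   # stays None until the scheduler binds the Pod
    phase: str = "Pending"


@dataclass
class ApiServer:
    """Toy stand-in for APIServer + etcd: the single place where state lives."""
    pods: Dict[str, Pod] = field(default_factory=dict)

    def create(self, pod: Pod) -> None:
        self.pods[pod.name] = pod

    def list_pods(self, predicate: Callable[[Pod], bool]) -> List[Pod]:
        return [p for p in self.pods.values() if predicate(p)]


def scheduler_pass(api: ApiServer, free_cpu: Dict[str, int]) -> None:
    """Bind every unscheduled Pod to the node with the most free CPU (hypothetical rule)."""
    for pod in api.list_pods(lambda p: p.node is None):
        pod.node = max(free_cpu, key=free_cpu.get)


def kubelet_pass(api: ApiServer, node_name: str) -> None:
    """Start the Pods bound to this node that are not running yet."""
    for pod in api.list_pods(lambda p: p.node == node_name and p.phase == "Pending"):
        pod.phase = "Running"


api = ApiServer()
api.create(Pod(name="myblog", image="10.0.1.5:5000/myblog:v1"))
scheduler_pass(api, {"k8s-slave1": 2000, "k8s-slave2": 500})
kubelet_pass(api, "k8s-slave1")
print(api.pods["myblog"])
```

Note that neither the scheduler nor the kubelet function talks to the other; both only read and write state through the shared store, which mirrors the role the APIServer plays in the workflow.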

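Thoughts on the Architecture Design

- Each component has a clear responsibility (the APIServer is the entry point for all requests, the Controller Manager is the control hub, the Scheduler handles scheduling, and the kubelet runs the containers), and the components work together smoothly.
- Apart from etcd, which handles configuration management and persistence, no component stores data; every component other than etcd is stateless. The architecture therefore supports highly available deployments of Kubernetes by design.
- Because the components are stateless, upgrading, restarting, or losing a component does not affect the final state of the cluster; once the component recovers, it continues from where it left off.
- Data exchange between each component and kube-apiserver is implemented with the list-watch mechanism.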

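Practice: Cluster Installation

Comparison of Mainstream Kubernetes Installation Methods

- minikube
- Binary installation
- Installation tools such as kubeadm

kubeadm https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm/

"Kubernetes Installation Manual (Non-HA Edition)"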

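Core Components

Run as static Pods:

## etcd, apiserver, controller-manager, kube-scheduler
$ kubectl -n kube-system get po

Run as a systemd service:

$ systemctl status kubelet

kubectl: a binary command-line tool

Note: location of the other components' configuration files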

[root@k8s-master week2]# ll /etc/kubernetes/manifests/

total 16

-rw------- 1 root root 2104 Jul 9 22:55 etcd.yaml

-rw------- 1 root root 3161 Jul 9 22:55 kube-apiserver.yaml

-rw------- 1 root root 2858 Jul 9 22:55 kube-controller-manager.yaml

-rw------- 1 root root 1413 Jul 9 22:55 kube-scheduler.yaml

kube-proxy can be restarted by deleting its Pod and letting it be recreated automatically:

[root@k8s-master week2]# kubectl -n kube-system get po |grep kube-proxy

kube-proxy-4qbsc 1/1 Running 0 15h

kube-proxy-hpkd4 1/1 Running 0 15h

kube-proxy-rk2fw 1/1 Running 0 15h

[root@k8s-master week2]# kubectl -n kube-system get po -owide|grep kube-proxy

kube-proxy-4qbsc 1/1 Running 0 15h 10.0.1.6 k8s-slave1 <none> <none>

kube-proxy-hpkd4 1/1 Running 0 15h 10.0.1.5 k8s-master <none> <none>

kube-proxy-rk2fw 1/1 Running 0 15h 10.0.1.8 k8s-slave2 <none> <none>

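Understanding Cluster Resources

Components are the software installed to keep the Kubernetes platform running.

Resources define how you use the capabilities of Kubernetes. For example, Kubernetes uses Pods to manage business applications, so Pod is one type of resource in the cluster. All resource types in the cluster can be listed as follows:

# Resources with true in the NAMESPACED column need a namespace; if none is given, default is used
$ kubectl api-resources

How to understand namespaces:

A namespace is a virtual concept inside the cluster, similar to a resource pool. One pool can hold many resource types, and the vast majority of resources must belong to a namespace. After the cluster is installed and initialized, the following namespaces exist: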

$ kubectl get namespaces

NAME STATUS AGE

default Active 84m

kube-node-lease Active 84m

kube-public Active 84m

kube-system Active 84m

kubernetes-dashboard Active 71m

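# Note: do not confuse this with the namespaces Docker uses for resource isolation; in Kubernetes a namespace is a virtual concept, similar to a resource pool

- Every NAMESPACED resource needs a namespace when it is created; if none is specified, it goes into the default namespace
- Resources of the same type in the same namespace cannot share a name; resources of different types can
- Resources of the same type in different namespaces can share a name
- In real projects, namespaces with business meaning are usually created to group resources logically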
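The naming rules above amount to treating (namespace, kind, name) as the unique key of a resource. A minimal sketch, assuming a hypothetical in-memory `Store` class that has nothing to do with the real APIServer code:

```python
class Store:
    """Hypothetical store that enforces uniqueness per (namespace, kind, name)."""

    def __init__(self):
        self._objects = {}

    def create(self, kind, name, namespace="default"):
        key = (namespace, kind, name)
        if key in self._objects:
            raise ValueError(f"{kind} {name!r} already exists in namespace {namespace!r}")
        self._objects[key] = {"kind": kind, "name": name, "namespace": namespace}
        return key


store = Store()
store.create("Pod", "myblog", namespace="luffy")
store.create("Service", "myblog", namespace="luffy")   # different kind, same name: allowed
store.create("Pod", "myblog")                          # same kind and name in "default": allowed

try:
    store.create("Pod", "myblog", namespace="luffy")   # same kind, name, and namespace
except ValueError as err:
    print("rejected:", err)
```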

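Using kubectl

Like the docker command, kubectl is a command-line tool. It talks to the APIServer and ships with a rich set of subcommands. https://kubernetes.io/docs/reference/kubectl/overview/

Note: if you forget a command, use kubectl -h to view its help, for example kubectl get pod -h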

$ kubectl -h

$ kubectl get -h

$ kubectl create -h

$ kubectl create namespace -h

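Practice: Managing Business Applications with Kubernetes

The Smallest Scheduling Unit: Pod

Docker schedules containers; in a Kubernetes cluster, the smallest scheduling unit is the Pod.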


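Why Pods Were Introduced

- Decoupling from the container engine: with engines such as Docker and rkt, the platform design stays independent of any specific engine implementation.
- Multiple containers share network, storage, and process namespaces, which supports more flexible business scenarios.

Note: containers in the same Pod can reach one another directly through localhost.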

Defining a Pod in YAML

myblog/one-pod/pod.yaml

apiVersion: v1                  # API version
kind: Pod                       # resource type
metadata:                       # metadata
  name: myblog                  # Pod name
  namespace: luffy              # namespace the Pod belongs to
  labels:                       # Pod labels
    component: myblog           # i.e. the label component=myblog
spec:                           # specification
  containers:                   # container list
  - name: myblog                # container name
    image: 10.0.1.5:5000/myblog:v1   # container image
    env:                        # environment variables
    - name: MYSQL_HOST          # address of the MySQL server
      value: "127.0.0.1"        # value of the variable named above
    - name: MYSQL_PASSWD        # password
      value: "123456"
    ports:                      # exposed ports; informational only (the app listens on 8002), can be omitted
    - containerPort: 8002
  - name: mysql                 # container name
    image: mysql:5.7            # container image
    # command
    args:                       # arguments passed to the container's start command
    - --character-set-server=utf8mb4
    - --collation-server=utf8mb4_unicode_ci
    ports:                      # exposed ports
    - containerPort: 3306
    env:                        # environment variables set before the container runs
    - name: MYSQL_ROOT_PASSWORD
      value: "123456"
    - name: MYSQL_DATABASE
      value: "myblog"

{
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": {
    "name": "myblog",
    "namespace": "luffy",
    "labels": {
      "component": "myblog"
    }
  },
  "spec": {
    "containers": [
      {
        "name": "myblog",
        "image": "10.0.1.5:5000/myblog:v1",
        "env": [
          {
            "name": "MYSQL_HOST",
            "value": "127.0.0.1"
          }
        ]
      },
      {
        ...
      }
    ]
  }
}

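| apiVersion | Meaning |
|---|---|
| alpha | Early candidate version of a feature; may contain bugs and may never make it into Kubernetes |
| beta | Tested version that will eventually make it into Kubernetes, though the feature and object definitions may still change |
| stable | Stable version that is safe to use |
| v1 | First version after reaching stable; contains most of the core objects |
| apps/v1 | The most widely used version; Deployment and ReplicaSet, among others, have moved into it |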

Resource kinds and their apiVersion

| Kind | apiVersion |
|---|---|
| ClusterRoleBinding | rbac.authorization.k8s.io/v1 |
| ClusterRole | rbac.authorization.k8s.io/v1 |
| ConfigMap | v1 |
| CronJob | batch/v1beta1 (batch/v1 from Kubernetes 1.21) |
| DaemonSet | apps/v1 (extensions/v1beta1 before Kubernetes 1.16) |
| Node | v1 |
| Namespace | v1 |
| Secret | v1 |
| PersistentVolume | v1 |
| PersistentVolumeClaim | v1 |
| Pod | v1 |
| Deployment | apps/v1 (apps/v1beta1, apps/v1beta2 in older clusters) |
| Service | v1 |
| Ingress | extensions/v1beta1 (networking.k8s.io/v1 from Kubernetes 1.19) |
| ReplicaSet | apps/v1 (apps/v1beta2 in older clusters) |
| Job | batch/v1 |
| StatefulSet | apps/v1 (apps/v1beta1, apps/v1beta2 in older clusters) |

Quickly Looking Up Resources and Versions

# List all api-versions
$ kubectl api-versions

# Show details for a specific resource, for example
$ kubectl explain pod
$ kubectl explain Pod.apiVersion
$ kubectl explain Deployment.apiVersion
$ kubectl explain Service.apiVersion

Creating and Accessing a Pod

Prerequisites:

# My first Django Dockerfile
# Version 1.0

# Base image
FROM centos:centos7.5.1804

# Maintainer information
LABEL maintainer="[email protected]"

# Set environment variables
ENV LANG en_US.UTF-8
ENV LC_ALL en_US.UTF-8

# Add the yum repositories and install the system packages
RUN curl -so /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo && rpm -Uvh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm
RUN yum install -y python36 python3-devel gcc pcre-devel zlib-devel make net-tools nginx

# Working directory
WORKDIR /opt/myblog

# Copy the project files into the working directory
COPY . .

# Copy the nginx configuration file
COPY myblog.conf /etc/nginx

# Install the Python dependencies
RUN pip3 install -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com -r requirements.txt
RUN chmod +x run.sh && rm -rf ~/.cache/pip

# EXPOSE documents the port the service listens on; it does not publish the port by itself
EXPOSE 8002

# Command executed when the container starts
CMD ["./run.sh"]

See the Django project in the previous section for details.

mkdir luffy && cd luffy

git clone https://gitee.com/agagin/python-demo.git

cd python-demo/

vim Dockerfile

docker build . -t myblog:v1 -f Dockerfile

# Prepare the registry image files
[root@k8s-master 2021]# pwd
/root/2021
[root@k8s-master 2021]# ls
registry registry.tar.gz

# Start the registry service from the Docker image
docker run -d -p 5000:5000 --restart always -v /root/2021/registry:/var/lib/registry --name registry registry:2

# Create the MySQL configuration file

[root@k8s-master luffy]# mkdir mysql-images/ && cd mysql-images/

[root@k8s-master mysql-images]# cat my.cnf

[mysqld]

user=root

character-set-server=utf8

lower_case_table_names=1

[client]

default-character-set=utf8

[mysql]

default-character-set=utf8

!includedir /etc/mysql/conf.d/

!includedir /etc/mysql/mysql.conf.d/

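# Create the Dockerfile
[root@k8s-master mysql-images]# cat Dockerfile
FROM mysql:5.7
COPY my.cnf /etc/mysql/my.cnf
## CMD and ENTRYPOINT are inherited from the base image by default

# Build the local images
[root@k8s-master mysql-images]# docker build . -t 10.0.1.5:5000/mysql:5.7-utf8
[root@k8s-master mysql-images]# docker tag myblog:v1 10.0.1.5:5000/myblog:v1

# Configure the registry mirror and the private registry. Docker talks to a private registry over HTTPS by default, so every client has to list the registry under insecure-registries to use plain HTTP [required on both master and worker nodes]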

[root@k8s-master opt]# vi /etc/docker/daemon.json

[root@k8s-master opt]# cat /etc/docker/daemon.json

{

"registry-mirrors": [

"https://8xpk5wnt.mirror.aliyuncs.com"

],

"insecure-registries": [

"10.0.1.5:5000"

]

}

# Restart Docker
systemctl daemon-reload
systemctl restart docker

# Push the images
[root@k8s-master mysql-images]# docker push 10.0.1.5:5000/myblog:v1
[root@k8s-master mysql-images]# docker push 10.0.1.5:5000/mysql:5.7-utf8

# Copy the YAML file

[root@k8s-master week2]# pwd

/root/2021/week2

[root@k8s-master week2]# vim pod.yaml

## Create the namespace; a namespace is a logical resource pool
$ kubectl create namespace luffy

## Create the Pod from the given file
$ kubectl create -f pod.yaml

## View the Pod; pods can be abbreviated to po
## Every operation needs the namespace; it can be omitted only for the default namespace
$ kubectl -n luffy get pods -o wide
NAME READY STATUS RESTARTS AGE IP NODE
myblog 2/2 Running 0 3m 10.244.1.4 k8s-slave1

## Review the workflow
## Access the services through the Pod IP, on ports 3306 and 8002
$ curl 10.244.1.4:8002/blog/index/

## Enter the container and run the initialization; there is no need to run docker exec on the node itself
$ kubectl -n luffy exec -ti myblog -c myblog bash
/ # env
/ # python3 manage.py migrate
$ kubectl -n luffy exec -ti myblog -c mysql bash
/ # mysql -p123456

## Access the service again, on ports 3306 and 8002
$ curl 10.244.1.4:8002/blog/index/

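The Infra Container

Log in to the k8s-slave1 node:

$ docker ps -a |grep myblog ## three containers are found
## They are the mysql and myblog application containers plus the Infra container
## To let the containers inside a Pod communicate over localhost, every Pod starts an Infra container, and the other containers in the Pod share the Infra container's network namespace (Docker's container network mode). The Infra container only has to hold the network namespace and needs no other functionality, so its resource usage is extremely low.

## Log in to the master node and check that every container in the Pod has the same IP, which is the Pod IP
$ kubectl -n luffy exec -ti myblog -c myblog bash
/ # ifconfig
$ kubectl -n luffy exec -ti myblog -c mysql bash
/ # ifconfig

Pod container naming: k8s_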

Viewing Pod Details

## View the node the Pod was scheduled to and the Pod IP
$ kubectl -n luffy get pods -o wide

## View the full YAML
$ kubectl -n luffy get po myblog -o yaml

## View the Pod's details and events
$ kubectl -n luffy describe pod myblog

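Troubleshooting and Debugging

# Enter a container inside the Pod
$ kubectl -n <namespace> exec <pod_name> -c <container_name> -ti /bin/sh
# Parameters
# -n         the namespace
# exec -ti   open an interactive terminal
# -c         the container name

# View the logs of a container in the Pod (standard output and standard error)
$ kubectl -n <namespace> logs -f <pod_name> -c <container_name>
$ kubectl -n <namespace> logs -f --tail=100 <pod_name> -c <container_name>
# Parameters
# logs         view logs
# -f           follow the log in real time
# --tail=100   number of lines to show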

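Updating the Service Version

$ kubectl apply -f myblog-pod.yaml

# Note: when the YAML defines a bare Pod, apply can change very little. Updating the image version works, but other changes, such as modifying environment variables, fail validation

Deleting the Pod

# Delete by file
$ kubectl delete -f myblog-pod.yaml

# Delete by Pod name
$ kubectl -n <namespace> delete pod <pod_name>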

Pod Data Persistence

If the Pod is deleted, the MySQL data is lost because it lives inside the container, so the data has to be persisted.

- Mount a hostPath volume on a fixed node, pinning the Pod to that node with nodeSelector

myblog/one-pod/pod-with-volume.yaml

apiVersion: v1
kind: Pod
metadata:
  name: myblog
  namespace: luffy
  labels:
    component: myblog       # label on the Pod itself; node placement is controlled by nodeSelector below
spec:
  volumes:                  # volumes are defined at the same level as containers, so every container can reference them
  - name: mysql-data
    hostPath:
      path: /opt/mysql/data
  nodeSelector:             # schedule the Pod onto nodes carrying this label
    component: mysql
  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    env:
    - name: MYSQL_HOST      # address of the MySQL server
      value: "127.0.0.1"
    - name: MYSQL_PASSWD
      value: "123456"
    ports:
    - containerPort: 8002
  - name: mysql
    image: mysql:5.7
    args:
    - --character-set-server=utf8mb4
    - --collation-server=utf8mb4_unicode_ci
    ports:
    - containerPort: 3306
    env:
    - name: MYSQL_ROOT_PASSWORD
      value: "123456"
    - name: MYSQL_DATABASE
      value: "myblog"
    volumeMounts:           # mounts the volume; a key under the container
    - name: mysql-data      # must match the volume name defined above
      mountPath: /var/lib/mysql

Save the file as pod-with-volume.yaml and create the Pod:

## If an old Pod with the same name exists, delete it first, then create
$ kubectl -n luffy delete pod myblog
## Create
$ kubectl create -f pod-with-volume.yaml
## The Pod is now Pending
$ kubectl -n luffy get po
NAME READY STATUS RESTARTS AGE
myblog 0/2 Pending 0 32s
## Check the reason: scheduling failed because no node satisfies the node selector
$ kubectl -n luffy describe po myblog
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Warning FailedScheduling 12s (x2 over 12s) default-scheduler 0/3 nodes are available: 3 node(s) didn't match node selector.
## Label the node
$ kubectl label node k8s-slave1 component=mysql
## Check again; the Pod is now running
$ kubectl -n luffy get po
NAME READY STATUS RESTARTS AGE IP NODE
myblog 2/2 Running 0 3m54s 10.244.1.150 k8s-slave1
## On the k8s-slave1 node, inspect /opt/mysql/data
$ ll /opt/mysql/data/
total 188484
-rw-r----- 1 polkitd input 56 Mar 29 09:20 auto.cnf
-rw------- 1 polkitd input 1676 Mar 29 09:20 ca-key.pem
-rw-r--r-- 1 polkitd input 1112 Mar 29 09:20 ca.pem
drwxr-x--- 2 polkitd input 8192 Mar 29 09:20 sys
...
## Run migrate to create the database tables, then delete the Pod and recreate it to verify that the data survives
$ kubectl -n luffy exec -ti myblog python3 manage.py migrate
## Access the service: OK
$ curl 10.244.1.150:8002/blog/index/
## Delete the Pod
$ kubectl delete -f pod-with-volume.yaml
## Recreate the Pod
$ kubectl create -f pod-with-volume.yaml
## Check the Pod IP and access the service
$ kubectl -n luffy get po -o wide
NAME READY STATUS RESTARTS AGE IP NODE
myblog 2/2 Running 0 7s 10.244.1.151 k8s-slave1
## migrate was not rerun, yet the service works
$ curl 10.244.1.151:8002/blog/index/

- Use PV + PVC to connect to a distributed storage solution
  - ceph
  - glusterfs
  - nfs

Adding, Removing, Changing, and Querying Labels

# Add
$ kubectl label nodes kube-node label_key=label_value

# Query
$ kubectl get node --show-labels

# Filter by label
$ kubectl -n ns_name get po -o wide -l label_key=label_value

# Delete a label: append the label's key name followed by a minus sign
$ kubectl label nodes k8s-slave1 label_key-

# Change a label's value: add the --overwrite flag
$ kubectl label nodes k8s-slave1 label_key=apache --overwrite

# Alternatively, run kubectl edit on the node, change its configuration, then save and exit
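Equality-based label matching, as used by `-l label_key=label_value` and by nodeSelector, can be sketched in a few lines. This is a simplified illustration: the `matches` helper and the node label data are hypothetical, and real selectors also support set-based expressions that are not covered here.

```python
def matches(labels, selector):
    """Return True when every key=value pair in the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical node labels, loosely modelled on the three-node cluster in these notes.
nodes = {
    "k8s-master": {"kubernetes.io/hostname": "k8s-master"},
    "k8s-slave1": {"kubernetes.io/hostname": "k8s-slave1", "component": "mysql"},
    "k8s-slave2": {"kubernetes.io/hostname": "k8s-slave2"},
}

node_selector = {"component": "mysql"}
selected = [name for name, labels in nodes.items() if matches(labels, node_selector)]
print(selected)
```

With no node carrying `component=mysql`, the list would be empty, which corresponds to the Pending Pod and the "didn't match node selector" event seen earlier.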

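Service Health Checks

Health checks detect whether the service in a container is healthy. If it is not, Kubernetes acts according to the configured restart policy (restartPolicy). The two mechanisms can be configured independently; if neither is set, the Pod is considered healthy by default.

Two mechanisms:

- LivenessProbe

  Liveness probing determines whether the container is alive, i.e. whether the Pod is in the running state. If the LivenessProbe finds the container unhealthy, the kubelet kills the container and decides whether to restart it based on the container's restart policy. If a container has no LivenessProbe, the kubelet treats the probe result as always successful.

  ...
  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    livenessProbe:
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10   # seconds to wait after the container starts before the first probe
      periodSeconds: 10         # probe interval
      timeoutSeconds: 2         # probe timeout
  ...

- ReadinessProbe

  Readiness probing determines whether the container is serving normally, i.e. whether the container's Ready condition is True and it can accept requests. If the ReadinessProbe fails, Ready becomes False and the Endpoints Controller removes this Pod's endpoint from the endpoint list of the matching Service. No requests are routed to the Pod until a later probe succeeds.

  ...
  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    readinessProbe:
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10
      timeoutSeconds: 2
      periodSeconds: 10
  ...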

Three probe types:

- exec: runs a command to check the service; exit code 0 means the container is healthy
- httpGet: sends an HTTP request to check the service; a status code in the 200-399 range means the container is healthy
- tcpSocket: performs a TCP check against the container's IP and port; the container is healthy if the connection can be established

Example:

Full file path: myblog/one-pod/pod-with-healthcheck.yaml

  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    env:
    - name: MYSQL_HOST          # address of the MySQL server
      value: "127.0.0.1"
    - name: MYSQL_PASSWD
      value: "123456"
    ports:
    - containerPort: 8002
    livenessProbe:              # note where this is added
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10   # seconds to wait after the container starts before the first probe
      periodSeconds: 10         # probe interval
      timeoutSeconds: 2         # probe timeout
    readinessProbe:
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10
      timeoutSeconds: 2
      periodSeconds: 10

[root@k8s-master week2]# kubectl delete -f pod-with-volume.yaml

pod "myblog" deleted

[root@k8s-master week2]# vim pod-with-volume.yaml

[root@k8s-master week2]# kubectl create -f pod-with-volume.yaml

pod/myblog created

[root@k8s-master week2]# kubectl -n luffy get po -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

myblog 1/2 Running 0 29s 10.244.1.15 k8s-slave1

# Enter the container
$ kubectl -n luffy exec -ti myblog -c myblog bash
[root@myblog myblog]# python3 manage.py migrate

[root@k8s-master week2]# kubectl -n luffy get po

NAME READY STATUS RESTARTS AGE

myblog 2/2 Running 3 6m6s

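- initialDelaySeconds: how many seconds to wait after the container starts before the first probe.
- periodSeconds: how often to probe. Default 10 seconds, minimum 1 second.
- timeoutSeconds: probe timeout. Default 1 second, minimum 1 second.
- successThreshold: after a failure, the minimum number of consecutive successes for the probe to count as successful. Default 1.
- failureThreshold: after a success, the minimum number of consecutive failures for the probe to count as failed. Default 3, minimum 1.

Kubernetes starts probing 10 seconds (initialDelaySeconds) after startup by sending an HTTP request to /blog/index/ on port 8002. If the request takes longer than 2 seconds or the status code is outside 200-399, the health check fails.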
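How successThreshold and failureThreshold interact can be sketched as a small state machine. This is a simplified model written for illustration only; the `ProbeState` class is hypothetical and is not the kubelet's actual implementation.

```python
class ProbeState:
    """Tracks probe health using consecutive-result thresholds (simplified model)."""

    def __init__(self, success_threshold=1, failure_threshold=3, healthy=True):
        self.success_threshold = success_threshold
        self.failure_threshold = failure_threshold
        self.healthy = healthy
        self.streak = 0   # consecutive results that contradict the current state

    def record(self, ok):
        """Feed one probe result and return the resulting health state."""
        if ok == self.healthy:
            self.streak = 0          # result agrees with the current state
            return self.healthy
        self.streak += 1
        threshold = self.failure_threshold if self.healthy else self.success_threshold
        if self.streak >= threshold:
            self.healthy = ok        # enough consecutive contrary results: flip state
            self.streak = 0
        return self.healthy


state = ProbeState()   # defaults from the list above: successThreshold=1, failureThreshold=3
results = [state.record(ok) for ok in (False, False, True, False, False, False, True)]
print(results)
```

In this run the first two failures do not flip the state because a success interrupts the streak; only three failures in a row mark the container unhealthy, and a single success then marks it healthy again.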

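Restart Policy

A Pod's restart policy (restartPolicy) applies to all containers in the Pod, and the decision and the restart are made only by the kubelet on the Node where the Pod runs. When a container exits abnormally or fails its health check, the kubelet acts according to restartPolicy.

The restart policy can be Always, OnFailure, or Never; the default is Always.

- Always: when the container process exits, the kubelet restarts the container automatically;
- OnFailure: when the container terminates with a non-zero exit code, the kubelet restarts the container automatically;
- Never: the kubelet never restarts the container, whatever its state.

Demonstrating the restart policy:

apiVersion: v1
kind: Pod
metadata:
  name: test-restart-policy
spec:
  restartPolicy: Always
  containers:
  - name: busybox
    image: busybox
    args:
    - /bin/sh
    - -c
    - sleep 10

Result

$ kubectl apply -f test-restartpolicy.yaml

# The Pod goes through the following states
$ kubectl get po -owide -w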

test-restart-policy 0/1 Completed 0 98s 10.244.2.4 k8s-slave2

test-restart-policy 1/1 Running 1 118s 10.244.2.4 k8s-slave2

test-restart-policy 0/1 Completed 1 2m8s 10.244.2.4 k8s-slave2

test-restart-policy 0/1 CrashLoopBackOff 1 2m18s 10.244.2.4 k8s-

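- With the default policy, restartPolicy: Always, the container is restarted whether or not it exited normally
- With OnFailure:
  - if exit 1 is removed from the command so that the container's process exits normally, the container is not restarted
  - it is restarted only after an abnormal exit
- With Never, the container is not restarted once it exits

As the output shows, a container that exits normally puts the Pod in Completed status. When the container keeps exiting and being restarted, the kubelet waits with an increasing back-off between restarts, and the Pod shows CrashLoopBackOff while it waits.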
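The three policies reduce to a small decision function. A minimal sketch, assuming a hypothetical `should_restart` helper that only looks at the policy and the exit code (the real kubelet also applies back-off delays and health-check results):

```python
def should_restart(policy, exit_code):
    """Decide whether a terminated container is restarted under the given restartPolicy."""
    if policy == "Always":
        return True
    if policy == "OnFailure":
        return exit_code != 0
    if policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {policy!r}")


for policy in ("Always", "OnFailure", "Never"):
    for exit_code in (0, 1):
        print(f"{policy:<10} exit={exit_code} restart={should_restart(policy, exit_code)}")
```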

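Image Pull Policy

spec:
  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    imagePullPolicy: IfNotPresent

Sets the image pull policy. The default is IfNotPresent, except when the image tag is latest or omitted, in which case the default is Always.

- Always: always pull the image, even if it exists locally
- IfNotPresent: use the local image if it exists, otherwise pull it from the registry
- Never: use only the local image; report an error if it does not exist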

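Pod Resource Limits

To make full use of cluster resources while ensuring that important containers get enough resources to run stably throughout their lifetime, the platform needs to be able to limit Pod resources. For a Pod, the two most basic resource metrics are CPU and memory.

Kubernetes provides two kinds of parameters, requests and limits, to pre-allocate resources and cap their use.

Full file path: myblog/one-pod/pod-with-resourcelimits.yaml

...
  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    env:
    - name: MYSQL_HOST      # address of the MySQL server
      value: "127.0.0.1"
    - name: MYSQL_PASSWD
      value: "123456"
    ports:
    - containerPort: 8002
    resources:
      requests:
        memory: 100Mi
        cpu: 50m
      limits:
        memory: 500Mi
        cpu: 100m
...

Viewing resource allocation on a node:

$ kubectl describe no k8s-slave1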

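requests:

- The minimum resources the container needs; used in the scheduling phase as the basis for placing the container
- A container is scheduled onto a node only if the node's allocatable resources >= the request
- The request does not cap the resources the container can use
- requests.cpu is converted to Docker's --cpu-shares parameter, equivalent to cgroup cpu.shares (regardless of how many CPUs or cores the host has, --cpu-shares distributes CPU time proportionally)
- requests.memory has no corresponding Docker parameter; it serves only as scheduling input for Kubernetes

limits:

- The maximum resources the container can use
- A value of 0 means the resource is not limited and can be used without bound
- When a Pod's memory usage exceeds the limit, the container is OOM-killed
- When CPU usage reaches the limit, the container is not killed but is throttled so that it stays within the limit
- limits.cpu is converted to Docker's --cpu-quota parameter, equivalent to cgroup cpu.cfs_quota_us
- limits.memory is converted to Docker's --memory parameter, which caps the memory the container can use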
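The conversions from requests.cpu and limits.cpu to cgroup values can be worked through numerically. The sketch below assumes the commonly documented formulas (shares = millicores × 1024 / 1000 with a minimum of 2, quota = millicores × period / 1000 with a minimum of 1000 µs, and a default CFS period of 100 ms); the function names are hypothetical and the exact behaviour may differ between Kubernetes versions and cgroup versions.

```python
CFS_PERIOD_US = 100_000   # assumed default CFS period: 100ms, in microseconds
MIN_SHARES = 2            # assumed lower bound for cpu.shares
MIN_QUOTA_US = 1_000      # assumed lower bound for cpu.cfs_quota_us (1ms)


def cpu_shares(request_millicpu):
    """Approximate cpu.shares for a requests.cpu value given in millicores."""
    return max(MIN_SHARES, request_millicpu * 1024 // 1000)


def cpu_quota_us(limit_millicpu, period_us=CFS_PERIOD_US):
    """Approximate cpu.cfs_quota_us for a limits.cpu value given in millicores."""
    return max(MIN_QUOTA_US, limit_millicpu * period_us // 1000)


# Values from the example above: requests.cpu=50m, limits.cpu=100m
print("cpu.shares       =", cpu_shares(50))
print("cpu.cfs_quota_us =", cpu_quota_us(100))
```

Under these assumptions, a limit of 100m lets the container run for 10 ms out of every 100 ms period, i.e. 10% of one core, while the 50m request only sets a relative weight that matters when CPUs are contended.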

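CPU resources are allocated in time slices. Every operation in the system needs CPU time, so the more time slices a task is granted, the more CPU it gets.

CPU units in cgroups convert as follows:

1 CPU = 1000 millicpu (1 Core = 1000m)

Here m stands for milli, i.e. millicores. The number of CPU cores on each node in the cluster can be read with operating-system commands; multiplying it by 1000 gives the node's total CPU in millicores. For example, a node with four cores has 4000m in total.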

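The docker run options related to CPU limits are:

| Option | Description |
|---|---|
| --cpuset-cpus="" | CPUs the container may use, for example 0-3 or 0,1 |
| -c, --cpu-shares=0 | CPU share weight (relative weight) |
| --cpu-period=0 | CFS period, from 1ms to 1s, i.e. [1000, 1000000] microseconds |
| --cpu-quota=0 | CFS quota; must be at least 1ms, i.e. >= 1000; an absolute limit |

docker run -it --cpu-period=50000 --cpu-quota=25000 ubuntu:16.04 /bin/bash

This sets the CFS period to 50000 and the container's CPU quota per period to 25000, so the container gets 50% of a CPU's run time in every 50ms.

Note: exceeding the memory limit triggers the system's OOM mechanism. CPU is a compressible resource, so hitting the CPU limit does not cause the Pod to exit or be recreated.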

YAML Optimization

The complete YAML so far, myblog/one-pod/pod-completed.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: myblog
  namespace: luffy
  labels:
    component: myblog
spec:
  volumes:
  - name: mysql-data
    hostPath:
      path: /opt/mysql/data
  nodeSelector:                 # schedule the Pod onto nodes carrying this label
    component: mysql
  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    env:
    - name: MYSQL_HOST          # address of the MySQL server
      value: "127.0.0.1"
    - name: MYSQL_PASSWD
      value: "123456"
    ports:
    - containerPort: 8002
    resources:
      requests:
        memory: 100Mi
        cpu: 50m
      limits:
        memory: 500Mi
        cpu: 100m
    livenessProbe:
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10   # seconds to wait after the container starts before the first probe
      periodSeconds: 15         # probe interval
      timeoutSeconds: 2         # probe timeout
    readinessProbe:
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10
      timeoutSeconds: 2
      periodSeconds: 15
  - name: mysql
    image: mysql:5.7
    args:
    - --character-set-server=utf8mb4
    - --collation-server=utf8mb4_unicode_ci
    ports:
    - containerPort: 3306
    env:
    - name: MYSQL_ROOT_PASSWORD
      value: "123456"
    - name: MYSQL_DATABASE
      value: "myblog"
    resources:
      requests:
        memory: 100Mi
        cpu: 50m
      limits:
        memory: 500Mi
        cpu: 100m
    readinessProbe:
      tcpSocket:
        port: 3306
      initialDelaySeconds: 5
      periodSeconds: 10
    livenessProbe:
      tcpSocket:
        port: 3306
      initialDelaySeconds: 15
      periodSeconds: 20
    volumeMounts:
    - name: mysql-data
      mountPath: /var/lib/mysql

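Why optimize

- In real use, middleware such as a database is a shared resource that serves multiple projects. It should not be bundled in the same Pod as a business container, because business containers change often while the database does not need frequent iteration
- The environment variables in the YAML contain sensitive information (accounts, passwords), which is a security risk

Solving problem one: split the YAML

myblog/two-pod/mysql.yaml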

apiVersion: v1
kind: Pod
metadata:
  name: mysql
  namespace: luffy
  labels:
    component: mysql
spec:
  hostNetwork: true             # use the host network mode for the Pod, same effect as docker run --net=host
  volumes:
  - name: mysql-data
    hostPath:
      path: /opt/mysql/data
  nodeSelector:                 # schedule the Pod onto nodes carrying this label
    component: mysql
  containers:
  - name: mysql
    image: mysql:5.7
    args:
    - --character-set-server=utf8mb4
    - --collation-server=utf8mb4_unicode_ci
    ports:
    - containerPort: 3306
    env:
    - name: MYSQL_ROOT_PASSWORD
      value: "123456"
    - name: MYSQL_DATABASE
      value: "myblog"
    resources:
      requests:
        memory: 100Mi
        cpu: 50m
      limits:
        memory: 500Mi
        cpu: 100m
    readinessProbe:
      tcpSocket:
        port: 3306
      initialDelaySeconds: 5
      periodSeconds: 10
    livenessProbe:
      tcpSocket:
        port: 3306
      initialDelaySeconds: 15
      periodSeconds: 20
    volumeMounts:
    - name: mysql-data
      mountPath: /var/lib/mysql

myblog.yaml

apiVersion: v1
kind: Pod
metadata:
  name: myblog
  namespace: luffy
  labels:
    component: myblog
spec:
  containers:
  - name: myblog
    image: 10.0.1.5:5000/myblog:v1
    imagePullPolicy: IfNotPresent
    env:
    - name: MYSQL_HOST          # address of the MySQL server
      value: "10.0.1.6"
    - name: MYSQL_PASSWD
      value: "123456"
    ports:
    - containerPort: 8002
    resources:
      requests:
        memory: 100Mi
        cpu: 50m
      limits:
        memory: 500Mi
        cpu: 100m
    livenessProbe:
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10   # seconds to wait after the container starts before the first probe
      periodSeconds: 15         # probe interval
      timeoutSeconds: 2         # probe timeout
    readinessProbe:
      httpGet:
        path: /blog/index/
        port: 8002
        scheme: HTTP
      initialDelaySeconds: 10
      timeoutSeconds: 2
      periodSeconds: 15

Creating and testing

## Delete the old Pod first
$ kubectl -n luffy delete po myblog

## Create mysql and myblog separately
$ kubectl create -f mysql.yaml
$ kubectl create -f myblog.yaml

## View the Pods; note that the mysql IP is the host's IP because the network mode is host
$ kubectl -n luffy get po -o wide
NAME READY STATUS RESTARTS AGE IP NODE
myblog 1/1 Running 0 41s 10.244.1.152 k8s-slave1
mysql 1/1 Running 0 52s 172.21.51.67 k8s-slave1

## The myblog service is reachable
$ curl 10.244.1.152:8002/blog/index/

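Solving problem two: the security risk of sensitive information in environment variables

Why manage environment variables centrally

- Environment variables hold a lot of sensitive information such as accounts and passwords; exposing them directly in YAML files is a security problem
- A team usually has several projects, and they often share the same environment variables, which can then be maintained in one place
- Development, test, and production environments all have different configuration, so the YAML has to be edited for every deployment, which adds overhead

Kubernetes provides two resource types, ConfigMap and Secret, for managing application configuration centrally. They separate configuration from the image so that containerized applications stay portable.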


- ConfigMap: usually used to manage an application's configuration files or environment variables.

myblog/two-pod/configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: myblog
  namespace: luffy   # a ConfigMap can only be referenced from within its own namespace, not read from another one
data:
  MYSQL_HOST: "10.0.1.6"
  MYSQL_PORT: "3306"

Create and view the ConfigMap:

$ kubectl create -f configmap.yaml
$ kubectl -n luffy get cm myblog -oyaml

Alternatively, create it from a file on the command line, for example from configmap.txt:

$ cat configmap.txt
MYSQL_HOST=10.0.1.6
MYSQL_PORT=3306
$ kubectl -n luffy create configmap myblog --from-env-file=configmap.txt

Difference between creating from an env file and from a regular configuration file:

[root@k8s-master week2]# cat configmap.txt
MYSQL_HOST=10.0.1.6
MYSQL_PORT=3306
kubectl create configmap my-config --from-env-file=path/to/bar.env
kubectl create configmap my-config --from-file=path/to/bar
# Env-file form
[root@k8s-master week2]# kubectl -n luffy create configmap myblog --from-env-file=configmap.txt
# Regular-file form
[root@k8s-master week2]# cat config.ini
[mysql]
aaa=bbb
ccc=xxx
[root@k8s-master week2]# kubectl -n luffy create configmap demo --from-file=config.ini
kubectl -n luffy get configmap
kubectl -n luffy get configmap myblog -oyaml
kubectl -n luffy get configmap demo -oyaml
# Comparison
[root@k8s-master week2]# kubectl -n luffy get configmap myblog -oyaml
apiVersion: v1
data:
  MYSQL_HOST: 10.0.1.5
  MYSQL_PORT: "3306"
kind: ConfigMap
metadata:
  creationTimestamp: "2021-06-13T23:12:58Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:MYSQL_HOST: {}
        f:MYSQL_PORT: {}
    manager: kubectl-create
    operation: Update
    time: "2021-06-13T23:12:58Z"
  name: myblog
  namespace: luffy
  resourceVersion: "479878"
  selfLink: /api/v1/namespaces/luffy/configmaps/myblog
  uid: 4c9045fd-0cb3-4cb5-8562-7b808363c249
[root@k8s-master week2]# kubectl -n luffy get configmap demo -oyaml
apiVersion: v1
data:
  config.ini: |
    [mysql]
    aaa=bbb
    ccc=xxx
kind: ConfigMap
metadata:
  creationTimestamp: "2021-06-13T23:13:14Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:config.ini: {}
    manager: kubectl-create
    operation: Update
    time: "2021-06-13T23:13:14Z"
  name: demo
  namespace: luffy
  resourceVersion: "479916"
  selfLink: /api/v1/namespaces/luffy/configmaps/demo
  uid: a5ac2b59-ae22-4645-af1b-500b501fd15f

View the command help:

[root@k8s-master week2]# kubectl create configmap -h
Examples:
  # Create a new configmap named my-config based on folder bar
  kubectl create configmap my-config --from-file=path/to/bar

  # Create a new configmap named my-config with specified keys instead of file basenames on disk
  kubectl create configmap my-config --from-file=key1=/path/to/bar/file1.txt --from-file=key2=/path/to/bar/file2.txt

  # Create a new configmap named my-config with key1=config1 and key2=config2
  kubectl create configmap my-config --from-literal=key1=config1 --from-literal=key2=config2

  # Create a new configmap named my-config from the key=value pairs in the file
  kubectl create configmap my-config --from-file=path/to/bar

  # Create a new configmap named my-config from an env file
  kubectl create configmap my-config --from-env-file=path/to/bar.env

- Secret: manages sensitive information. Values are stored base64-encoded by default (an encoding, not encryption). There are three types.

[root@k8s-master week2]# kubectl create secret -h
Create a secret using specified subcommand.

Available Commands:
  docker-registry Create a secret for use with a Docker registry
  generic         Create a secret from a local file, directory or literal value
  tls             Create a TLS secret

Usage:
  kubectl create secret [flags] [options]

Use "kubectl <command> --help" for more information about a given command.
Use "kubectl options" for a list of global command-line options (applies to all commands).

- Service Account: used to access the Kubernetes API. It is created automatically by Kubernetes and mounted automatically into the Pod at /run/secrets/kubernetes.io/serviceaccount. After a ServiceAccount is created and a Pod specifies it as its serviceAccount, the Secret for that ServiceAccount is created automatically;
- Opaque: a base64-encoded Secret used to store passwords, keys, and similar data;
- kubernetes.io/dockerconfigjson: stores the authentication information for a private Docker registry.

myblog/two-pod/secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: myblog
  namespace: luffy
type: Opaque
data:
  MYSQL_USER: cm9vdA==      # note the -n flag: echo -n root|base64
  MYSQL_PASSWD: MTIzNDU2

Without -n, echo appends a newline, which ends up in the encoded value.

[root@k8s-master week2]# echo -n root|base64
cm9vdA==
[root@k8s-master week2]# echo -n 123456|base64
MTIzNDU2

# Decode
[root@k8s-master week2]# echo cm9vdA==|base64 -d
root
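The same encoding can be reproduced with Python's standard base64 module, which also makes the effect of a stray trailing newline visible. The helper names below are illustrative only.

```python
import base64


def encode_secret_value(value):
    """Base64-encode a string the way values under a Secret's data field are encoded."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded):
    """Reverse of encode_secret_value."""
    return base64.b64decode(encoded).decode("utf-8")


print(encode_secret_value("root"))      # matches: echo -n root|base64
print(encode_secret_value("123456"))    # matches: echo -n 123456|base64
print(encode_secret_value("root\n"))    # what echo without -n would produce
print(decode_secret_value("cm9vdA=="))
```

Because base64 is reversible by anyone, a Secret created this way is obscured rather than protected; access to the Secret object itself still has to be restricted.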

Create and view:

$ kubectl create -f secret.yaml
$ kubectl -n luffy get secret

If you prefer not to encode the values by hand, the Secret can be created as follows:

$ cat secret.txt

MYSQL_USER=root

MYSQL_PASSWD=123456

$ kubectl -n luffy create secret generic myblog --from-env-file=secret.txt

[root@k8s-master week2]# kubectl -n luffy get secret

NAME TYPE DATA AGE

default-token-thqxq kubernetes.io/service-account-token 3 87m

myblog Opaque 2 24m

[root@k8s-master week2]# kubectl -n luffy get secret myblog -oyaml

apiVersion: v1

data:

MYSQL_PASSWD: MTIzNDU2

MYSQL_USER: cm9vdA==

kind: Secret

metadata:

creationTimestamp: "2021-07-11T03:10:18Z"

managedFields:

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:data:

.: {}

f:MYSQL_PASSWD: {}

f:MYSQL_USER: {}

f:type: {}

manager: kubectl-create

operation: Update

time: "2021-07-11T03:10:18Z"

name: myblog

namespace: luffy

resourceVersion: "15853"

selfLink: /api/v1/namespaces/luffy/secrets/myblog

uid: 6da85352-0000-4127-9604-18484a8787b3

type: Opaque

The modified MySQL YAML, at myblog/two-pod/mysql-with-config.yaml:

...
spec:
  containers:
  - name: mysql
    args:
    - --character-set-server=utf8mb4
    - --collation-server=utf8mb4_unicode_ci
    env:                        # environment variables
    - name: MYSQL_USER
      valueFrom:
        secretKeyRef:
          name: myblog
          key: MYSQL_USER
    - name: MYSQL_ROOT_PASSWORD
      valueFrom:
        secretKeyRef:
          name: myblog
          key: MYSQL_PASSWD
    - name: MYSQL_DATABASE
      value: "myblog"
...

整体修改后的myblog的yaml,资源路径:myblog/two-pod/myblog-with-config.yaml

apiVersion: v1

kind: Pod

metadata:

name: myblog

namespace: luffy

labels:

component: myblog

spec:

containers:

- name: myblog

image: 10.0.1.5:5000/myblog:v1

imagePullPolicy: IfNotPresent

env:

- name: MYSQL_HOST

valueFrom:

configMapKeyRef:

name: myblog

key: MYSQL_HOST

- name: MYSQL_PORT

valueFrom:

configMapKeyRef:

name: myblog

key: MYSQL_PORT

- name: MYSQL_USER

valueFrom:

secretKeyRef:

name: myblog

key: MYSQL_USER

- name: MYSQL_PASSWD

valueFrom:

secretKeyRef:

name: myblog

key: MYSQL_PASSWD

ports:

- containerPort: 8002

resources:

requests:

memory: 100Mi

cpu: 50m

limits:

memory: 500Mi

cpu: 100m

livenessProbe:

httpGet:

path: /blog/index/

port: 8002

scheme: HTTP

initialDelaySeconds: 10 # 容器启动后第一次执行探测是需要等待多少秒

periodSeconds: 15 # 执行探测的频率

timeoutSeconds: 2 # 探测超时时间

readinessProbe:

httpGet:

path: /blog/index/

port: 8002

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 2

periodSeconds: 15

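When deploying to different environments, the Pod YAML no longer needs to change; each environment only maintains its own ConfigMap and Secret. Note that a ConfigMap or Secret cannot be referenced across namespaces, and that environment variables injected from them are not refreshed when the object is updated: the Pod has to be recreated to pick up the new values.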
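Keeping one ConfigMap per environment can be sketched as a small renderer. This is an illustration under assumptions: the ConfigMap name `myblog` and the keys `MYSQL_HOST` and `MYSQL_PORT` come from the Pod YAML above, while the helper name `render_configmap` and the host and port values are placeholders.

```shell
#!/bin/sh
# Render a ConfigMap manifest for one environment.
# Args: namespace, MySQL host, MySQL port (placeholder values below).
render_configmap() {
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: myblog
  namespace: $1
data:
  MYSQL_HOST: "$2"
  MYSQL_PORT: "$3"
EOF
}

dev_manifest=$(render_configmap luffy 10.0.1.6 3306)
printf '%s\n' "$dev_manifest"

# To apply it: printf '%s\n' "$dev_manifest" | kubectl apply -f -
# Pods that already consume these keys as env vars keep the old values
# until they are deleted and recreated.
```

Only the rendered values differ between environments; the Pod manifest that references the keys stays identical.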

[root@k8s-master week2]# vim mysql.yaml

[root@k8s-master week2]# vim myblog.yaml

[root@k8s-master week2]# kubectl -n luffy delete -f myblog.yaml

pod "myblog" deleted

[root@k8s-master week2]# kubectl -n luffy delete -f mysql.yaml

pod "mysql" deleted

[root@k8s-master week2]# kubectl -n luffy create -f myblog.yaml

[root@k8s-master week2]# kubectl -n luffy create -f mysql.yaml

[root@k8s-master week2]# kubectl -n luffy get po -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myblog 1/1 Running 0 31m 10.244.1.9 k8s-slave1

mysql 1/1 Running 0 74m 10.0.1.6 k8s-slave1

[root@k8s-master week2]# curl 10.244.1.9:8002/blog/index/

我的博客列表:</h3>

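How to write resource YAML

- Borrow from what already exists: start from resources that are already running in the cluster

  $ kubectl -n kube-system get po,deployment,ds

- Learn to search the official site, https://kubernetes.io/docs/home/

- Look fields up in the Kubernetes API reference, https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.16/#pod-v1-core

- Use kubectl explain to see what a specific field means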

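Pod status and lifecycle

The Pod statuses are listed in the table below:

| Status | Description |
|---|---|
| Pending | The API Server has created the Pod; it is waiting for the scheduler to place it |
| ContainerCreating | The image is being pulled and the containers are being started |
| Running | All containers in the Pod have been created, and at least one is running, starting, or restarting |
| Succeeded / Completed | All containers in the Pod exited successfully and will not be restarted |
| Failed / Error | All containers in the Pod have exited, and at least one exited with a failure |
| CrashLoopBackOff | A container in the Pod keeps failing to start, for example because a missing config file makes the main process fail |
| Unknown | The Pod status cannot be obtained for some reason, possibly because of network problems |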

[Figure: Pod lifecycle diagram (image not available)]

[Figure: Pod startup and shutdown sequence (image not available)]

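Init containers, typical uses:

- Verify that the components the application depends on have all started

- Fix directory permissions

- Adjust system parameters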

...

initContainers:

- command:

- /sbin/sysctl

- -w

- vm.max_map_count=262144

image: alpine:3.6

imagePullPolicy: IfNotPresent

name: elasticsearch-logging-init

resources: {}

securityContext:

privileged: true

- name: fix-permissions

image: alpine:3.6

command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]

securityContext:

privileged: true

volumeMounts:

- name: elasticsearch-logging

mountPath: /usr/share/elasticsearch/data

...

Verifying the Pod lifecycle:

apiVersion: v1

kind: Pod

metadata:

name: pod-lifecycle

namespace: luffy

labels:

component: pod-lifecycless

spec:

initContainers:

- name: init

image: busybox

command: ['sh', '-c', 'echo $(date +%s): INIT >> /loap/timing']

volumeMounts:

- mountPath: /loap

name: timing

containers:

- name: main

image: busybox

command: ['sh', '-c', 'echo $(date +%s): START >> /loap/timing;

sleep 10; echo $(date +%s): END >> /loap/timing;']

volumeMounts:

- mountPath: /loap

name: timing

livenessProbe:

exec:

command: ['sh', '-c', 'echo $(date +%s): LIVENESS >> /loap/timing']

readinessProbe:

exec:

command: ['sh', '-c', 'echo $(date +%s): READINESS >> /loap/timing']

lifecycle:

postStart:

exec:

command: ['sh', '-c', 'echo $(date +%s): POST-START >> /loap/timing']

preStop:

exec:

command: ['sh', '-c', 'echo $(date +%s): PRE-STOP >> /loap/timing']

volumes:

- name: timing

hostPath:

path: /tmp/loap

Create the Pod and test:

$ kubectl create -f pod-lifecycle.yaml

## Watch the Pod status

$ kubectl -n luffy get po -o wide -w

## On the node the Pod was scheduled to, inspect /tmp/loap/timing

$ cat /tmp/loap/timing

1585424708: INIT

1585424746: START

1585424746: POST-START

1585424754: READINESS

1585424756: LIVENESS

1585424756: END
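The gaps between phases can be read straight off this log. A minimal sketch, assuming the `timestamp: EVENT` format shown above; the helper name `elapsed` is made up, and the log lines are copied from the output rather than read from the node:

```shell
#!/bin/sh
# Timing log copied from the output above.
timing_log=$(cat <<'EOF'
1585424708: INIT
1585424746: START
1585424746: POST-START
1585424754: READINESS
1585424756: LIVENESS
1585424756: END
EOF
)

# Print the number of seconds between two named events.
elapsed() {
  printf '%s\n' "$timing_log" | awk -F': ' -v a="$1" -v b="$2" '
    $2 == a { ta = $1 }
    $2 == b { tb = $1 }
    END { print tb - ta }'
}

init_to_start=$(elapsed INIT START)
start_to_end=$(elapsed START END)

echo "init container -> main container: ${init_to_start}s"
echo "main container START -> END: ${start_to_end}s"
```

The START to END gap of 10 seconds matches the `sleep 10` in the main container's command, and POST-START carries the same timestamp as START because the hook fires as soon as the container is created.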

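The pre-stop hook only runs when the Pod is terminated deliberately (for example with kubectl delete). If the Pod goes down on its own, the hook is not executed. The container process must also be running normally at the moment the Pod is killed, otherwise the pre-stop hook is skipped.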

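Summary

- To decouple the Kubernetes platform from any specific container runtime and to allow more flexible deployments, the Pod concept was introduced

- Kubernetes defines resources in YAML; YAML maps and lists correspond to JSON objects and arrays

- Resources are operated on with kubectl apply | get | exec | logs | delete; pass the namespace with -n, otherwise the default namespace is used

- For every Pod, an Infra container is created first so that the network namespace can be shared; the other containers join its network

- livenessProbe and readinessProbe implement the liveness and readiness health checks of a Pod

- requests and limits set a container's initial resource request and its upper bound

- initContainers and lifecycle hooks run work at initialization, after container start, and before termination, which makes Pods more complete and flexible

- Writing YAML takes method; when learning Kubernetes, make a habit of consulting the official site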

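What has been done so far:

- Defined Pod.yaml with myblog and mysql packed into the same Pod, with myblog reaching mysql through localhost

- Persisted the mysql data, and added health checks and resource limits to the myblog application

- Split myblog and mysql into separately managed Pods

- The environment variables in the YAML files contained sensitive data such as plaintext accounts and passwords; ConfigMap and Secret now hold the configuration centrally

Problems when using bare Pods only:

- How to run several replicas of the application

- The Pod IP changes when the Pod is recreated, so how do external clients reach the service

- When a node running the application Pod goes down, the Pod should be started automatically on another available node in the cluster

- An application that collects node monitoring data needs a Pod on every node of the cluster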

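Pod controllers

Workload

A controller, also called a workload, is the intermediate layer that manages Pods and keeps them in the expected state. When a Pod fails, the container is first restarted according to the restart policy; if that does not help, the Pod is recreated.

[Figure: controller types (image not available)]

- ReplicaSet: creates the number of Pod replicas the user specifies, keeps the replica count at the expected value, and supports scaling up and down

- Deployment: works on top of ReplicaSet and manages stateless applications; currently the best general-purpose controller. It supports rolling updates and rollbacks and provides declarative configuration

- DaemonSet: ensures that every node in the cluster runs exactly one replica of a given Pod; typically used for system-level background tasks, such as the log collector of an EFK stack

- Job: runs to completion and then exits; no restart or recreation needed

- CronJob: controls periodic tasks that do not need to run continuously in the background

- StatefulSet: manages stateful applications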
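The "one Pod per node" case mentioned above maps to a DaemonSet. A minimal sketch under assumptions: the resource name `node-monitor` is hypothetical and `prom/node-exporter` is only a placeholder image for a monitoring agent; neither comes from the course material. The script only writes the manifest so it can be inspected before applying.

```shell
#!/bin/sh
manifest="${TMPDIR:-/tmp}/daemonset-node-monitor.yaml"

# A DaemonSet has no replica count: the controller creates one Pod per
# eligible node.
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-monitor          # hypothetical name
  namespace: luffy
spec:
  selector:
    matchLabels:
      app: node-monitor
  template:
    metadata:
      labels:
        app: node-monitor     # must match spec.selector.matchLabels
    spec:
      containers:
      - name: node-monitor
        image: prom/node-exporter   # placeholder image
        ports:
        - containerPort: 9100
EOF

echo "wrote $manifest; apply with: kubectl apply -f $manifest"
```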

Deployment

myblog/deployment/deploy-mysql.yaml

apiVersion: apps/v1          # API version
kind: Deployment             # resource type
metadata:                    # metadata
  name: mysql                # name of the Deployment
  namespace: luffy
spec:                        # specification
  replicas: 1                # number of Pod replicas
  selector:                  # label selector: which Pods this Deployment manages
    matchLabels:             # labels to match
      app: mysql             # selects Pods that carry the label app=mysql
  template:                  # Pod template
    metadata:                # metadata
      labels:                # labels given to the Pods; they must satisfy matchLabels above
        app: mysql           # defines the label app=mysql
    spec:
      hostNetwork: true
      volumes:
      - name: mysql-data
        hostPath:
          path: /opt/mysql/data
      nodeSelector:          # schedule the Pod onto nodes that carry this label
        component: mysql
      containers:
      - name: mysql
        image: mysql:5.7
        args:
        - --character-set-server=utf8mb4
        - --collation-server=utf8mb4_unicode_ci
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_USER
          valueFrom:
            secretKeyRef:
              name: myblog
              key: MYSQL_USER
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: myblog
              key: MYSQL_PASSWD
        - name: MYSQL_DATABASE
          value: "myblog"
        resources:
          requests:
            memory: 100Mi
            cpu: 50m
          limits:
            memory: 500Mi
            cpu: 100m
        readinessProbe:
          tcpSocket:
            port: 3306
          initialDelaySeconds: 5
          periodSeconds: 10
        livenessProbe:
          tcpSocket:
            port: 3306
          initialDelaySeconds: 15
          periodSeconds: 20
        volumeMounts:
        - name: mysql-data
          mountPath: /var/lib/mysql

deploy-myblog.yaml:

apiVersion: apps/v1 # 版本

kind: Deployment # 类型

metadata: #元数据

name: myblog # deployment的名称

namespace: luffy

spec: # 详细说明

replicas: 1 #指定Pod副本数

selector: #指定Pod的选择器

matchLabels: # 匹配标签,

app: myblog # 与下面选择器相对应,选择器选择 pod里面带有 app=myblog这样label的pod

template: # 模板

metadata:

labels: #给Pod打label,与matchLabels是一起使用的,意思就是给pod定义一个matchLabel相匹配的标签

app: myblog

spec: # 到这以后基本都是pod的写法,注意这两个spec的位置,空格。

containers:

- name: myblog

image: 10.0.1.5:5000/myblog:v1

imagePullPolicy: IfNotPresent

env:

- name: MYSQL_HOST

valueFrom:

configMapKeyRef:

name: myblog

key: MYSQL_HOST

- name: MYSQL_PORT

valueFrom:

configMapKeyRef:

name: myblog

key: MYSQL_PORT

- name: MYSQL_USER

valueFrom:

secretKeyRef:

name: myblog

key: MYSQL_USER

- name: MYSQL_PASSWD

valueFrom:

secretKeyRef:

name: myblog

key: MYSQL_PASSWD

ports:

- containerPort: 8002

resources:

requests:

memory: 100Mi

cpu: 50m

limits:

memory: 500Mi

cpu: 100m

livenessProbe:

httpGet:

path: /blog/index/

port: 8002

scheme: HTTP

initialDelaySeconds: 10 # 容器启动后第一次执行探测是需要等待多少秒

periodSeconds: 15 # 执行探测的频率

timeoutSeconds: 2 # 探测超时时间

readinessProbe:

httpGet:

path: /blog/index/

port: 8002

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 2

periodSeconds: 15

注:

[root@k8s-master week2]# vim mysql-deploy.yaml

[root@k8s-master week2]# vim myblog-deploy.yaml

[root@k8s-master week2]# kubectl delete -f mysql.yaml

[root@k8s-master week2]# kubectl delete -f myblog.yaml

Creating the Deployment

$ kubectl create -f deploy-mysql.yaml

$ kubectl create -f deploy-myblog.yaml

Viewing the Deployment

# kubectl api-resources

$ kubectl -n luffy get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

myblog 1/1 1 1 2m22s

mysql 1/1 1 1 2d11h

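* `NAME` lists the names of the Deployments in the cluster.

* `READY` shows the number of replicas currently running / the desired number of replicas.

* `UP-TO-DATE` shows the number of replicas that have been updated to reach the desired state.

* `AVAILABLE` shows the number of replicas available to users.

* `AGE` shows how long the application has been running.

# List the Pods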

$ kubectl -n luffy get po

NAME READY STATUS RESTARTS AGE

myblog-7c96c9f76b-qbbg7 1/1 Running 0 109s

mysql-85f4f65f99-w6jkj 1/1 Running 0 2m28s

# List the ReplicaSets

$ kubectl -n luffy get rs

# Delete a Pod and the Deployment recreates it automatically

[root@k8s-master week2]# kubectl -n luffy get po

NAME READY STATUS RESTARTS AGE

myblog-5d9b76df88-bxbfh 1/1 Running 0 5m12s

mysql-7446f4dc7b-jp2ps 1/1 Running 0 67m

[root@k8s-master week2]# kubectl delete po myblog-5d9b76df88-tbzj4 -n luffy

pod "myblog-5d9b76df88-tbzj4" deleted

[root@k8s-master week2]# kubectl -n luffy get po

NAME READY STATUS RESTARTS AGE

myblog-5d9b76df88-bxbfh 1/1 Running 0 8m20s

mysql-7446f4dc7b-jp2ps 1/1 Running 0 70m

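What if the Deployment exists but no Pod is brought up?

# Check the logs of kube-controller-manager-k8s-master. Normally it generates the Pod metadata, so look there for why none was created

$ kubectl -n kube-system logs -f kube-controller-manager-k8s-master

Interview question

# Which acts first, kube-controller-manager or the scheduler?

kube-controller-manager acts first: it is responsible for generating the Pod metadata and writing it to etcd, and only then can the scheduler schedule the Pod.

The scheduler is responsible for placing Pods; without a Pod object there is nothing to schedule.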

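Replica guarantee

The controller watches Pod status in real time and keeps the replica count at the desired value.

## Delete a Pod and watch the Pod status change

$ kubectl -n luffy delete pod myblog-7c96c9f76b-qbbg7

# Watch the Pods

$ kubectl -n luffy get pods -o wide

## Scale to two replicas. This can also be done with kubectl -n luffy edit deploy myblog, but editing the file and running apply is preferable because the YAML file then stays in sync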

$ kubectl -n luffy scale deploy myblog --replicas=2

deployment.extensions/myblog scaled

# Watch the Pods

$ kubectl -n luffy get pods -o wide

NAME READY STATUS RESTARTS AGE

myblog-7c96c9f76b-qbbg7 1/1 Running 0 11m

myblog-7c96c9f76b-s6brm 1/1 Running 0 55s

mysql-85f4f65f99-w6jkj 1/1 Running 0 11m

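Pod eviction

Kubernetes has a feature called pod eviction: in some situations, such as a node going NotReady or running short of resources, Pods are evicted from that node so they can run elsewhere. This is done to protect the workload.

- kube-controller-manager: periodically checks the status of all nodes; when a node has been NotReady for longer than a set time, all Pods on it are evicted.

  - pod-eviction-timeout: how long a node may stay NotReady before eviction starts, 5 min by default. This applies to Kubernetes versions before 1.13.

  - From 1.13 on, the cluster enables TaintBasedEvictions and TaintNodesByCondition (taint-based evictions): when a node loses contact or becomes abnormal, Kubernetes taints the node automatically and adds the following default tolerations to every Pod:

    [root@k8s-master week2]# kubectl -n luffy get po myblog-5d9b76df88-7b4qh -oyaml|grep -A 8 "tolerations"
    tolerations:
    - effect: NoExecute
      key: node.kubernetes.io/not-ready
      operator: Exists
      tolerationSeconds: 300
    - effect: NoExecute
      key: node.kubernetes.io/unreachable
      operator: Exists
      tolerationSeconds: 300

    Each Pod can therefore set its own eviction toleration time.

- kubelet: periodically checks the resources of its own node and, when they run short, evicts some Pods in priority order. The signals it watches:

  - memory.available: available memory on the node
  - nodefs.available: available space on the node's root disk
  - nodefs.inodesFree: free inodes on the node
  - imagefs.available: available space on the image storage disk
  - imagefs.inodesFree: free inodes on the image storage disk

$ df -TH

When the storage disk fills up, the kubelet checks /var/lib/docker (or wherever Docker keeps its data). Unless configured otherwise, once usage passes roughly 80% the kubelet reacts: it first garbage-collects images, deleting local images that are not in use. If that does not free enough space, it evicts Pods so that they move to other machines. This is also a way of protecting the workload; in essence the goal is to keep the business running stably.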
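Both levers described above can be sketched as configuration. This is an illustration under assumptions, not the course's own setup: the 60-second toleration is an arbitrary example, and the kubelet values are what I understand to be commonly documented defaults, so check them against your kubelet version. The script only writes the two files.

```shell
#!/bin/sh
out_dir="${TMPDIR:-/tmp}"

# 1) Pod level: shorten how long myblog Pods tolerate a not-ready or
#    unreachable node (the default shown above is 300 seconds).
cat > "$out_dir/tolerations-patch.yaml" <<'EOF'
spec:
  template:
    spec:
      tolerations:
      - key: node.kubernetes.io/not-ready
        operator: Exists
        effect: NoExecute
        tolerationSeconds: 60     # illustrative value
      - key: node.kubernetes.io/unreachable
        operator: Exists
        effect: NoExecute
        tolerationSeconds: 60     # illustrative value
EOF
# Apply with:
#   kubectl -n luffy patch deploy myblog --patch "$(cat "$out_dir/tolerations-patch.yaml")"

# 2) Node level: kubelet eviction signals and image GC thresholds.
#    Values are assumptions; merge them into the node's kubelet config file.
cat > "$out_dir/kubelet-eviction.yaml" <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
evictionHard:
  memory.available: "100Mi"
  nodefs.available: "10%"
  nodefs.inodesFree: "5%"
  imagefs.available: "15%"
imageGCHighThresholdPercent: 85   # image GC starts above this disk usage
imageGCLowThresholdPercent: 80    # image GC frees space down to this usage
EOF

echo "wrote $out_dir/tolerations-patch.yaml and $out_dir/kubelet-eviction.yaml"
```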

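Updating the service

Modify the service and retag the image to simulate a service update.

Ways to update:

- Edit the YAML file and apply the change with kubectl -n luffy apply -f deploy-myblog.yaml

- Edit the live object with kubectl -n luffy edit deploy myblog

- kubectl -n luffy set image deploy myblog myblog=10.0.1.5:5000/myblog:v2 --record

Note

kubectl -n luffy set image deploy myblog myblog=10.0.1.5:5000/myblog:v2 --record

# Arguments

# set image: set the image

# deploy myblog: on the Deployment named myblog

# myblog=10.0.1.5:5000/myblog:v2: <container name>=<image>, which selects the container whose image is replaced

# --record: record the command as the change cause; the update also works without it

Run kubectl -n luffy set image -h for details.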

Testing with a modified file:

Note

Change the page content to produce v2

[root@k8s-master ~]# vim /root/2021/python-demo/blog/templates/index.html

[root@k8s-master python-demo]# pwd

/root/2021/python-demo

[root@k8s-master python-demo]# docker build . -t 10.0.1.5:5000/myblog:v2 -f Dockerfile

[root@k8s-master python-demo]# docker push 10.0.1.5:5000/myblog:v2

[root@k8s-master python-demo]# kubectl -n luffy edit deploy myblog

deployment.apps/myblog edited

Change the image tag to v2

image: 10.0.1.5:5000/myblog:v2

Watch how the Pods change

[root@k8s-master python-demo]# kubectl -n luffy get po -owide -w

NAME READY STATUS RESTARTS AGE IP NODE

myblog-5d9b76df88-7b4qh 1/1 Running 0 29m 10.244.0.7 k8s-master

myblog-5d9b76df88-bxbfh 1/1 Running 0 36m 10.244.1.16 k8s-slave1

mysql-7446f4dc7b-jp2ps 1/1 Running 0 98m 10.0.1.6 k8s-slave1

# The update strategy can be seen in the YAML output

$ kubectl -n luffy get deploy -oyaml |more

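Update strategy

...
spec:
  replicas: 2               # number of Pod replicas
  selector:                 # Pod selector
    matchLabels:
      app: myblog
  strategy:
    rollingUpdate:
      maxSurge: 25%         # extra Pods allowed: 2*0.25=0.5, rounded up to 1, so at most 2+1=3 Pods
      maxUnavailable: 25%   # Pods allowed to be unavailable: 2*0.25=0.5, rounded down to 0, so at least 2-0=2 stay available
    type: RollingUpdate     # rolling update, the default strategy; visible via get deploy -oyaml
...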

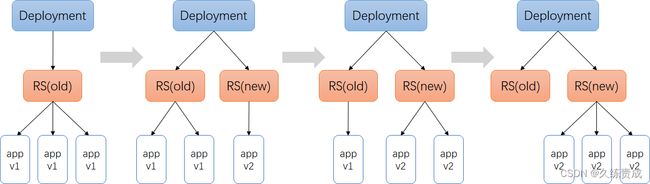


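Strategy controls:

- maxSurge: the maximum surge, i.e. how many Pods above the configured replicas may exist during the update. It can be a fixed number or a percentage and defaults to 25% of the desired Pods. Percentages are rounded up (3.4 becomes 4), so at most replicas + maxSurge Pods exist during the update

- maxUnavailable: how many Pods may be unable to serve during the update. It can be a fixed number or a percentage and defaults to 25% of the desired Pods. Percentages are rounded down (3.6 becomes 3)

During a Deployment rollout, the number of Available (Ready) Pods must not fall below desired pods number - maxUnavailable, and the number of Pods not in an abnormal state must not exceed desired pods number + maxSurge.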

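Taking myblog with the default strategy as an example, the update proceeds as follows:

- maxSurge is 25% with 2 instances; rounded up, maxSurge is 1, so at most 2+1=3 Pods may exist. A new ReplicaSet, RS-new, is created with its replica count set to 1, and the replica controller creates that new Pod

- Meanwhile maxUnavailable is 25%; 2*25% rounded down is 0, so the rollout may never have fewer than 2 available Pods. The old ReplicaSet (RS-old) is therefore left alone until the Pod managed by RS-new is Ready. At that point there are 3 Ready Pods, and since only 2 must stay available, RS-old is scaled from 2 to 1 and one old Pod is deleted

- After the old Pod is deleted the total is back to 2, leaving room for 1 more Pod under the maximum of 3. RS-new is scaled from 1 to 2 and another new Pod is created. Once both Pods managed by RS-new are Ready, RS-old is scaled from 1 to 0 and the rolling update is complete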
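The rounding in the walkthrough above can be checked with integer arithmetic. A minimal sketch in POSIX shell; the function names are made up for illustration and only the percentage form of the two settings is handled:

```shell
#!/bin/sh
# maxSurge: percentage of the replica count, rounded up.
max_surge() {
  echo $(( ($1 * $2 + 99) / 100 ))
}

# maxUnavailable: percentage of the replica count, rounded down.
max_unavailable() {
  echo $(( $1 * $2 / 100 ))
}

replicas=2
percent=25

surge=$(max_surge "$replicas" "$percent")
unavailable=$(max_unavailable "$replicas" "$percent")

echo "max total Pods during rollout: $(( replicas + surge ))"
echo "min available Pods during rollout: $(( replicas - unavailable ))"
```

For 2 replicas at 25% this prints a maximum of 3 Pods and a minimum of 2 available Pods, the bounds used in the steps above.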

# View the rolling update events

$ kubectl -n luffy describe deploy myblog

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 11s deployment-controller Scaled up replica set myblog-6cf56fc848 to 1

Normal ScalingReplicaSet 11s deployment-controller Scaled down replica set myblog-6fdcf98f9 to 1

Normal ScalingReplicaSet 11s deployment-controller Scaled up replica set myblog-6cf56fc848 to 2

Normal ScalingReplicaSet 6s deployment-controller Scaled down replica set myblog-6fdcf98f9 to 0

$ kubectl get rs

NAME DESIRED CURRENT READY AGE

myblog-6cf56fc848 2 2 2 16h

myblog-6fdcf98f9 0 0 0 16h

Besides rolling updates there is a second strategy, Recreate, which deletes the existing Pods first and then creates the new ones:

...

strategy:

type: Recreate

...

The mysql service in this course should be managed with Recreate:

$ kubectl -n luffy edit deploy mysql

...

selector:

matchLabels:

app: mysql

strategy:

type: Recreate

template:

metadata:

creationTimestamp: null

labels:

app: mysql

...

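Rolling back the service

The rolling update strategy upgrades a Deployment smoothly. If an upgrade goes wrong, the fastest and safest fix is to return to the last version that worked, and Kubernetes provides a rollback mechanism for this.

revision: every time the application is updated, Kubernetes records a version number, the revision. When an upgrade goes wrong, you can roll back to a specific revision. Kubernetes only keeps the most recent revisions; the number kept is set with spec.revisionHistoryLimit in the Deployment and defaults to 10.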

View the current history:

$ kubectl -n luffy rollout history deploy myblog ## CHANGE-CAUSE is empty

$ kubectl delete -f deploy-myblog.yaml ## delete the existing Deployment so the effect is easier to demonstrate

Record the changes:

$ kubectl apply -f deploy-myblog.yaml --record

$ kubectl -n luffy set image deploy myblog myblog=172.21.51.143:5000/myblog:v2 --record=true

View the Deployment update history:

$ kubectl -n luffy rollout history deploy myblog

deployment.extensions/myblog

REVISION CHANGE-CAUSE

1 kubectl create --filename=deploy-myblog.yaml --record=true

2 kubectl set image deploy myblog myblog=172.21.51.143:5000/demo/myblog:v1 --record=true

Roll back to a specific REVISION:

$ kubectl -n luffy rollout undo deploy myblog --to-revision=1

deployment.extensions/myblog rolled back

# Access the application to test

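Kubernetes service access: Service

So far we can use a Deployment to create a group of Pods that provide a highly available service. Each Pod gets its own Pod IP, but two problems remain:

- A Pod IP is a virtual IP visible only inside the cluster; it cannot be reached from outside.

- A Pod IP disappears when the Pod is destroyed. When a ReplicaSet scales Pods up and down, Pod IPs can change at any time, which makes the service hard to reach.

Service load balancing: Cluster IP

A Service is an abstraction over a group of Pods. It acts as a load balancer for the group and distributes requests to the Pods behind it. The Service gives this load balancer an IP, usually called the cluster IP. A Service finds its Pods through a label selector:

myblog/deployment/svc-myblog.yaml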

apiVersion: v1
kind: Service
metadata:
  name: myblog
  namespace: luffy
spec:
  ports:
  - port: 80           # port exposed on the ClusterIP; can be chosen freely
    protocol: TCP      # the protocol is TCP
    targetPort: 8002   # port inside the container
  selector:            # label selector
    app: myblog        # selects Pods labelled app=myblog
  type: ClusterIP      # common Service types: ClusterIP, NodePort, LoadBalancer

# Show the labels

[root@k8s-master ~]# kubectl -n luffy get po --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myblog-5d9b76df88-k9cqs 1/1 Running 0 110s app=myblog,pod-template-hash=5d9b76df88

myblog-5d9b76df88-wthjt 1/1 Running 0 12h app=myblog,pod-template-hash=5d9b76df88

mysql-7446f4dc7b-5t9db 1/1 Running 0 16h app=mysql,pod-template-hash=7446f4dc7b

# The selector app=myblog matches the following Pods

[root@k8s-master ~]# kubectl -n luffy get po -l app=myblog

NAME READY STATUS RESTARTS AGE

myblog-5d9b76df88-k9cqs 1/1 Running 0 3m19s

myblog-5d9b76df88-wthjt 1/1 Running 0 12h

Walkthrough:

## Alias

$ alias kd='kubectl -n luffy'

## Create the Service

$ kd create -f svc-myblog.yaml

$ kd get po --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myblog-5c97d79cdb-jn7km 1/1 Running 0 6m5s app=myblog

mysql-85f4f65f99-w6jkj 1/1 Running 0 176m app=mysql

$ kd get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

myblog ClusterIP 10.99.174.93 80/TCP 7m50s

$ kd describe svc myblog

Name: myblog

Namespace: demo

Labels:

Annotations:

Selector: app=myblog

Type: ClusterIP # the IP below is a virtual IP, reachable on port 80

IP: 10.99.174.93

Port: 80/TCP

TargetPort: 8002/TCP

Endpoints: 10.244.0.68:8002

Session Affinity: None

Events:

## Scale out the myblog service

$ kd scale deploy myblog --replicas=2

deployment.extensions/myblog scaled

## Check again

$ kd describe svc myblog

Name: myblog

Namespace: demo

Labels:

Annotations:

Selector: app=myblog

Type: ClusterIP

IP: 10.99.174.93

Port: 80/TCP

TargetPort: 8002/TCP

Endpoints: 10.244.0.68:8002,10.244.1.158:8002

Session Affinity: None

Events:

Note:

# Create the Service

[root@k8s-master week2]# kubectl -n luffy create -f svc-myblog.yaml

[root@k8s-master week2]# kubectl -n luffy get po --show-labels

NAME READY STATUS RESTARTS AGE LABELS

myblog-5d9b76df88-txhbn 1/1 Running 1 99m app=myblog,pod-template-hash=5d9b76df88

myblog-5d9b76df88-wthjt 1/1 Running 1 14h app=myblog,pod-template-hash=5d9b76df88

mysql-58d95d459c-xtcjl 1/1 Running 0 66m app=mysql,pod-template-hash=58d95d459c

[root@k8s-master week2]# kubectl -n luffy get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

myblog ClusterIP 10.104.72.57 80/TCP 102m

[root@k8s-master week2]# kubectl -n luffy get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myblog-5d9b76df88-txhbn 1/1 Running 1 100m 10.244.0.11 k8s-master

myblog-5d9b76df88-wthjt 1/1 Running 1 14h 10.244.1.20 k8s-slave1

mysql-58d95d459c-xtcjl 1/1 Running 0 66m 10.244.1.21 k8s-slave1

[root@k8s-master week2]# kubectl -n luffy describe svc myblog

Name: myblog

Namespace: luffy

Labels:

Annotations:

Selector: app=myblog

Type: ClusterIP

IP: 10.104.72.57 # requests to port 80 of this address are forwarded to the two address:port pairs listed under Endpoints below

Port: 80/TCP

TargetPort: 8002/TCP

Endpoints: 10.244.0.11:8002,10.244.1.20:8002

Session Affinity: None

Events:

# Requests to port 80 of the clusterIP are forwarded to the Endpoints addresses above, that is, to the myblog Pods

[root@k8s-master week2]# curl 10.104.72.57/blog/index/

<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="UTF-8">
<title>首页</title>
</head>
<body>
<h3>我的博客列表:</h3>
</br>
</br>
<a href=" /blog/article/edit/0 ">写博客</a>
</body>

# Delete a Pod; the recreated Pod gets a different IP address
[root@k8s-master week2]# kubectl -n luffy get po -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
myblog-5d9b76df88-txhbn 1/1 Running 1 104m 10.244.0.11 k8s-master <none> <none>
myblog-5d9b76df88-wthjt 1/1 Running 1 14h 10.244.1.20 k8s-slave1 <none> <none>
mysql-58d95d459c-xtcjl 1/1 Running 0 70m 10.244.1.21 k8s-slave1 <none> <none>
[root@k8s-master week2]# kubectl -n luffy delete po myblog-5d9b76df88-txhbn
pod "myblog-5d9b76df88-txhbn" deleted
# The IP has changed from .11 to .12
[root@k8s-master week2]# kubectl -n luffy get po -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
myblog-5d9b76df88-l69gj 1/1 Running 0 45s 10.244.0.12 k8s-master <none> <none>
myblog-5d9b76df88-wthjt 1/1 Running 1 14h 10.244.1.20 k8s-slave1 <none> <none>
mysql-58d95d459c-xtcjl 1/1 Running 0 72m 10.244.1.21 k8s-slave1 <none> <none>
# Check again: the Service backend address has also been updated to .12
[root@k8s-master week2]# kubectl -n luffy describe svc myblog
Name: myblog
Namespace: luffy
Labels: <none>
Annotations: <none>
Selector: app=myblog
Type: ClusterIP
IP: 10.104.72.57
Port: <unset> 80/TCP
TargetPort: 8002/TCP
Endpoints: 10.244.0.12:8002,10.244.1.20:8002
Session Affinity: None
Events: <none>
# The Service is bound to an Endpoints object; creating a Service creates an Endpoints object of the same name by default
[root@k8s-master week2]# kubectl -n luffy get endpoints myblog
NAME ENDPOINTS AGE
myblog 10.244.0.12:8002,10.244.1.20:8002 113m

</code></pre>

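<p>How a Service is associated with Pods:</p>

<p>When a Service object is created, an Endpoints object with the same name is created along with it. If the workload defines a readinessProbe and the probe fails, the corresponding Pod IP is removed from the endpoints list, so traffic is no longer sent to Pods that fail the health check.</p>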

<pre><code class="prism language-powershell">$ kd get endpoints myblog

NAME ENDPOINTS AGE

myblog 10<span class="token punctuation">.</span>244<span class="token punctuation">.</span>0<span class="token punctuation">.</span>68:8002<span class="token punctuation">,</span>10<span class="token punctuation">.</span>244<span class="token punctuation">.</span>1<span class="token punctuation">.</span>158:8002 7m

</code></pre>

<p>How to access the Service cluster IP:</p>

<pre><code class="prism language-powershell">$ kd get svc myblog

NAME <span class="token function">TYPE</span> CLUSTER<span class="token operator">-</span>IP EXTERNAL<span class="token operator">-</span>IP PORT<span class="token punctuation">(</span>S<span class="token punctuation">)</span> AGE

myblog ClusterIP 10<span class="token punctuation">.</span>99<span class="token punctuation">.</span>174<span class="token punctuation">.</span>93 <none> 80<span class="token operator">/</span>TCP 13m

$ curl 10<span class="token punctuation">.</span>99<span class="token punctuation">.</span>174<span class="token punctuation">.</span>93<span class="token operator">/</span>blog<span class="token operator">/</span>index<span class="token operator">/</span>

</code></pre>

<p>Create a Service for mysql:</p>

<pre><code class="prism language-yaml"><span class="token key atrule">apiVersion</span><span class="token punctuation">:</span> v1

<span class="token key atrule">kind</span><span class="token punctuation">:</span> Service

<span class="token key atrule">metadata</span><span class="token punctuation">:</span>

<span class="token key atrule">name</span><span class="token punctuation">:</span> mysql

<span class="token key atrule">namespace</span><span class="token punctuation">:</span> luffy

<span class="token key atrule">spec</span><span class="token punctuation">:</span>

<span class="token key atrule">ports</span><span class="token punctuation">:</span>

<span class="token punctuation">-</span> <span class="token key atrule">port</span><span class="token punctuation">:</span> <span class="token number">3306</span>

<span class="token key atrule">protocol</span><span class="token punctuation">:</span> TCP

<span class="token key atrule">targetPort</span><span class="token punctuation">:</span> <span class="token number">3306</span>

<span class="token key atrule">selector</span><span class="token punctuation">:</span>

<span class="token key atrule">app</span><span class="token punctuation">:</span> mysql

<span class="token key atrule">type</span><span class="token punctuation">:</span> ClusterIP

</code></pre>

<p>Access mysql:</p>

<pre><code class="prism language-powershell">$ kubectl apply <span class="token operator">-</span>f svc<span class="token operator">-</span>mysql<span class="token punctuation">.</span>yaml

<span class="token namespace">[root@k8s-master week2]</span><span class="token comment"># kubectl -n luffy get svc mysql</span>

NAME <span class="token function">TYPE</span> CLUSTER<span class="token operator">-</span>IP EXTERNAL<span class="token operator">-</span>IP PORT<span class="token punctuation">(</span>S<span class="token punctuation">)</span> AGE

mysql ClusterIP 10<span class="token punctuation">.</span>106<span class="token punctuation">.</span>120<span class="token punctuation">.</span>127 <none> 3306<span class="token operator">/</span>TCP 102m

<span class="token namespace">[root@k8s-master week2]</span><span class="token comment"># curl 10.106.120.127:3306</span>

5<span class="token punctuation">.</span>7<span class="token punctuation">.</span>34m<span class="token punctuation">]</span>

4>ÿÿaRSFqx

kMmysql_native_password<span class="token operator">!</span>ÿ<span class="token comment">#08S01Got packets out of order</span>

</code></pre>

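<p>mysql is currently deployed with hostNetwork and reached through the host IP and port. Drawbacks:</p>

<ul>

<li>With hostNetwork a large number of host ports are exposed, which is a security risk</li>

<li>Port conflicts become likely</li>

</ul>

<p>Both services belong to the k8s cluster and should use the cluster network wherever possible, so the way myblog reaches mysql can be reworked:</p>

<ul>

<li>Create a Service with a fixed clusterIP for mysql and put that clusterIP in myblog's environment variables</li>

<li>Use the cluster's service discovery so that components reach each other by Service name</li>

</ul>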

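<h6>Service discovery</h6>

<p>Inside a k8s cluster, components can communicate with each other through the names of the Services that have been defined.</p>

<p>Demonstrating service discovery:</p>

<pre><code class="prism language-powershell"><span class="token comment">## Idea: from inside the myblog container, access the services directly by Service name and check whether they are reachable</span>

<span class="token comment"># First list the Services</span>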

$ kd get svc

NAME <span class="token function">TYPE</span> CLUSTER<span class="token operator">-</span>IP EXTERNAL<span class="token operator">-</span>IP PORT<span class="token punctuation">(</span>S<span class="token punctuation">)</span> AGE

myblog ClusterIP 10<span class="token punctuation">.</span>99<span class="token punctuation">.</span>174<span class="token punctuation">.</span>93 <none> 80<span class="token operator">/</span>TCP 59m

mysql ClusterIP 10<span class="token punctuation">.</span>108<span class="token punctuation">.</span>214<span class="token punctuation">.</span>84 <none> 3306<span class="token operator">/</span>TCP 35m

<span class="token comment"># Enter the myblog container</span>

$ kd exec <span class="token operator">-</span>ti myblog<span class="token operator">-</span>5c97d79cdb<span class="token operator">-</span>j485f bash

<span class="token namespace">[root@myblog-5c97d79cdb-j485f myblog]</span><span class="token comment"># curl mysql:3306</span>

5<span class="token punctuation">.</span>7<span class="token punctuation">.</span>29 <span class="token punctuation">)</span>→ <span class="token punctuation">(</span>mysql_native_password ot packets out of order

<span class="token namespace">[root@myblog-5c97d79cdb-j485f myblog]</span><span class="token comment"># curl myblog/blog/index/</span>

我的博客列表

</code></pre>

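<p>Neither the Pod IP nor the cluster IP is fixed, but the Service name is, and it is fully portable across clusters. Components can therefore call each other by Service name, which removes most of the cost of maintaining IPs and keeps the service YAML templates simpler. The mysql and myblog deployments can be improved accordingly:</p>

<ol>

<li>mysql can drop hostNetwork, so the service is only exposed on the k8s cluster's internal network</li>

<li>The database address in the configMap can be replaced with the Service name, so the configuration stays largely unchanged across environments</li>

</ol>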

<pre><code class="prism language-powershell">Note: why does curl mysql:3306 also work from inside the myblog container?

<span class="token namespace">[root@k8s-master week2]</span><span class="token comment"># kubectl -n luffy get svc</span>

NAME <span class="token function">TYPE</span> CLUSTER<span class="token operator">-</span>IP EXTERNAL<span class="token operator">-</span>IP PORT<span class="token punctuation">(</span>S<span class="token punctuation">)</span> AGE

myblog ClusterIP 10<span class="token punctuation">.</span>104<span class="token punctuation">.</span>72<span class="token punctuation">.</span>57 <none> 80<span class="token operator">/</span>TCP 116m

mysql ClusterIP 10<span class="token punctuation">.</span>106<span class="token punctuation">.</span>120<span class="token punctuation">.</span>127 <none> 3306<span class="token operator">/</span>TCP 104m

<span class="token namespace">[root@k8s-master week2]</span><span class="token comment"># kubectl -n luffy get po</span>

NAME READY STATUS RESTARTS AGE

myblog<span class="token operator">-</span>5d9b76df88<span class="token operator">-</span>l69gj 1<span class="token operator">/</span>1 Running 0 7m43s

myblog<span class="token operator">-</span>5d9b76df88<span class="token operator">-</span>wthjt 1<span class="token operator">/</span>1 Running 1 14h

<span class="token namespace">[root@k8s-master week2]</span><span class="token comment"># kubectl -n luffy exec -ti myblog-5d9b76df88-l69gj -- bash</span>

<span class="token namespace">[root@myblog-5d9b76df88-l69gj myblog]</span><span class="token comment"># curl 10.106.120.127:3306</span>

5<span class="token punctuation">.</span>7<span class="token punctuation">.</span>34ͱxn<span class="token punctuation">)</span> ÿÿ~Y<span class="token punctuation">[</span><span class="token comment">#Nj8%|9mysql_native_password!ÿ#08S01Got packets out of order</span>

The name is resolved by trying the following search domains in order

<span class="token namespace">[root@myblog-5d9b76df88-l69gj myblog]</span><span class="token comment"># cat /etc/resolv.conf </span>

nameserver 10<span class="token punctuation">.</span>96<span class="token punctuation">.</span>0<span class="token punctuation">.</span>10 <span class="token comment"># this line is identical in every Pod; the lines below are identical for Pods in the same namespace</span>

search luffy<span class="token punctuation">.</span>svc<span class="token punctuation">.</span>cluster<span class="token punctuation">.</span>local svc<span class="token punctuation">.</span>cluster<span class="token punctuation">.</span>local cluster<span class="token punctuation">.</span>local

options ndots:5

# The search line defines a list of domain suffixes that are tried in order; if a name does not resolve with one suffix, the resolver moves on to the next

luffy.svc.cluster.local

svc.cluster.local

cluster.local
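The search-list expansion described above can be sketched in a few lines of Python. This is a simplified model of a glibc-style resolver, not the actual resolver code: the function name `candidate_names` is made up for illustration, and the only inputs are the `search` and `ndots` values shown in `/etc/resolv.conf`.

```python
def candidate_names(name, search, ndots=5):
    """Return the names a glibc-style resolver would try, in order (simplified)."""
    if name.endswith("."):
        # A trailing dot marks the name as fully qualified: no search list is used.
        return [name]
    expanded = [f"{name}.{domain}." for domain in search]
    literal = name + "."
    if name.count(".") >= ndots:
        # Enough dots: try the name as given first, then the search suffixes.
        return [literal] + expanded
    # Fewer dots than ndots: try the search suffixes first, the literal name last.
    return expanded + [literal]


# Values taken from the resolv.conf of the myblog pod.
search = ["luffy.svc.cluster.local", "svc.cluster.local", "cluster.local"]

for candidate in candidate_names("mysql", search):
    print(candidate)
```

With `ndots:5`, a short name such as `mysql` is first tried as `mysql.luffy.svc.cluster.local.`, which is why pods in the `luffy` namespace can reach the service by its bare name. Note that `mysql.luffy.svc.cluster.local` contains only four dots, so under this model it also goes through the search list before being tried as written.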

[root@myblog-5d9b76df88-l69gj myblog]# curl mysql.luffy.svc.cluster.local:3306

5.7.34K8Op RWÿÿxJQ6B?v:A42mysql_native_password!ÿ#08S01Got packets out of order

[Why does this resolve? It depends on the nameserver, and a DNS nameserver normally listens on port 53.]

[root@myblog-5d9b76df88-l69gj myblog]# curl 10.96.0.10:53

curl: (52) Empty reply from server
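The "Empty reply" is expected: the TCP connection to port 53 succeeds, but the server speaks DNS rather than HTTP, so it never returns an HTTP response. As a rough illustration of what a DNS client sends instead, the sketch below encodes a minimal A-record query in the RFC 1035 wire format. The function name `build_dns_query` and the query ID are arbitrary choices for this example, and nothing is sent over the network.

```python
import struct


def build_dns_query(name, query_id=0x1234):
    """Encode a minimal DNS query for an A record (RFC 1035 wire format)."""
    # Header: ID, flags (only "recursion desired" set), QDCOUNT=1, other counts 0.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed with its length; a zero byte ends the name.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE=1 (A record), QCLASS=1 (IN).
    return header + qname + struct.pack("!HH", 1, 1)


packet = build_dns_query("mysql.luffy.svc.cluster.local")
print(len(packet), packet.hex())

# Assumption: inside a pod, a packet like this could be sent over UDP to the
# nameserver from resolv.conf (10.96.0.10, port 53) with socket.sendto().
```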

# The port is reachable, so where does 10.96.0.10 come from?

# Flag explanation

-A queries across all namespaces; it is equivalent to --all-namespaces

[root@k8s-master ~]# kubectl get service -A|grep "10.96.0.10"

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 2d12

# How to find out which pods back this svc

[root@k8s-master ~]# kubectl -n kube-system describe svc kube-dns

Name: kube-dns

Namespace: kube-system

Labels: k8s-app=kube-dns

kubernetes.io/cluster-service=true

kubernetes.io/name=KubeDNS

Annotations: prometheus.io/port: 9153

prometheus.io/scrape: true

Selector: k8s-app=kube-dns # use this selector to look up the pods

Type: ClusterIP

IP: 10.96.0.10

Port: dns 53/UDP

TargetPort: 53/UDP

Endpoints: 10.244.0.4:53,10.244.0.5:53

Port: dns-tcp 53/TCP

TargetPort: 53/TCP

Endpoints: 10.244.0.4:53,10.244.0.5:53

Port: metrics 9153/TCP

TargetPort: 9153/TCP

Endpoints: 10.244.0.4:9153,10.244.0.5:9153

Session Affinity: None

Events: <none>

[root@k8s-master ~]# kubectl -n kube-system get po -l k8s-app=kube-dns -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-6d56c8448f-csr4b 1/1 Running 1 2d12h 10.244.0.4 k8s-master <none> <none>

coredns-6d56c8448f-jh2gm 1/1 Running 1 2d12h 10.244.0.5 k8s-master <none> <none>
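The lookup above (Service selector to matching pods) is an equality match on labels. A minimal Python sketch of that matching rule follows, assuming pods are represented as plain dictionaries. `select_pods` is a made-up helper, the pod labels are simplified to the one label used by the selector, and the `kube-proxy-example` entry is a hypothetical pod added only to show a non-match.

```python
def select_pods(selector, pods):
    """Return the names of pods whose labels contain every key/value in selector."""
    return [
        pod["name"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


pods = [
    {"name": "coredns-6d56c8448f-csr4b", "labels": {"k8s-app": "kube-dns"}},
    {"name": "coredns-6d56c8448f-jh2gm", "labels": {"k8s-app": "kube-dns"}},
    # Hypothetical pod, not taken from the cluster output above.
    {"name": "kube-proxy-example", "labels": {"k8s-app": "kube-proxy"}},
]

# Selector copied from `kubectl describe svc kube-dns`.
print(select_pods({"k8s-app": "kube-dns"}, pods))
```

The two coredns pods match, which lines up with the Endpoints 10.244.0.4 and 10.244.0.5 listed for the kube-dns Service.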

# The nameserver line is the same in every pod; the lines below it are the same for all pods in one namespace

[root@k8s-master week2]# kubectl -n luffy get pod

NAME READY STATUS RESTARTS AGE

myblog-5d9b76df88-85k24 1/1 Running 0 7m41s

myblog-5d9b76df88-t26t4 1/1 Running 0 8m48s

mysql-7446f4dc7b-7kpj8 1/1 Running 0 8m43s

[root@k8s-master week2]# kubectl -n luffy exec -ti myblog-5d9b76df88-85k24 -- cat /etc/resolv.conf

nameserver 10.96.0.10