Advanced Kubernetes: Resource Monitoring

Overview

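- Metrics-Server is the aggregator for the cluster's core monitoring data and replaces the older Heapster. It mainly collects the CPU and memory usage of the pods on each node.

- Container metrics come mainly from the cAdvisor service built into the kubelet. With Metrics-Server in place, users can read this monitoring data through the standard Kubernetes API.

- The Metrics API only serves current metric values; it does not store history.

- The Metrics API URI is /apis/metrics.k8s.io/, and the API is maintained in k8s.io/metrics.

- metrics-server must be deployed before this API can be used; it obtains its data by calling the kubelet Summary API.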
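The Metrics API can also be queried directly through the API server. The following is a minimal sketch, assuming the v1beta1 version implied by the APIService name `v1beta1.metrics.k8s.io` that appears in the deployment output later in this post; `metrics_path` is a hypothetical helper name, not part of kubectl.

```shell
#!/bin/sh
# Build the raw Metrics API path for nodes, or for the pods of one namespace.
# metrics_path is a hypothetical helper; the v1beta1 version is an assumption
# taken from the APIService name v1beta1.metrics.k8s.io.
metrics_path() {
    kind="$1"        # "nodes" or "pods"
    namespace="$2"   # optional, only used for pods
    base="/apis/metrics.k8s.io/v1beta1"
    if [ "$kind" = "pods" ] && [ -n "$namespace" ]; then
        printf '%s/namespaces/%s/pods\n' "$base" "$namespace"
    else
        printf '%s/%s\n' "$base" "$kind"
    fi
}
```

Once metrics-server is running, something like `kubectl get --raw "$(metrics_path nodes)"` should return the current node usage as JSON.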

-

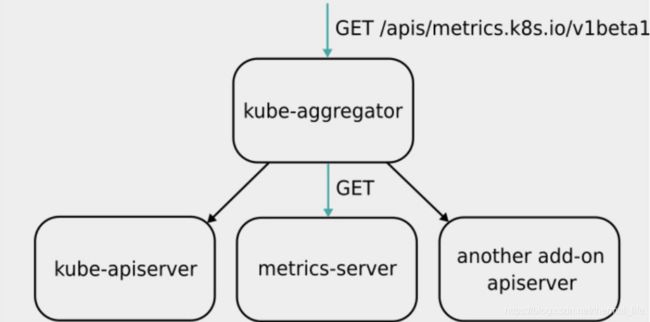

Metrics Server 并不是 kube-apiserver 的一部分,而是通过 Aggregator 这种插件机制,在独立部署的情况下同 kube-apiserver 一起统一对外服务的。

-

kube-aggregator 其实就是一个根据 URL 选择具体的 API 后端的代理服务器,就比如上图中的三个后端。

-

Metrics-server属于Core metrics(核心指标),提供API metrics.k8s.io,仅提供Node和Pod的CPU和内存使用情况。而其他Custom Metrics(自定义指标)由Prometheus等组件来完成。

-

资源下载:https://github.com/kubernetes-incubator/metrics-server

Deploying Metrics-Server

[root@server2 ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

The images referenced in the manifest need to be pushed to the Harbor registry beforehand. Then apply it:

[root@server2 ~]# kubectl apply -f components.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

[root@server2 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

metrics-server-7cdfcc6666-d7ndm 1/1 Running 0 29s

[root@server2 ~]# kubectl top nodes

error: metrics not available yet

[root@server2 ~]# kubectl top pod -n kube-system

W0710 18:58:53.377881 18235 top_pod.go:274] Metrics not available for pod kube-system/calico-kube-controllers-76d4774d89-9fp2s, age: 76h53m31.377871204s

error: Metrics not available for pod kube-system/calico-kube-controllers-76d4774d89-9fp2s, age: 76h53m31.377871204s

[root@server2 ~]# kubectl -n kube-system logs metrics-server-7cdfcc6666-d7ndm

....

lookup server2 on 10.96.0.10:53: no such host,

lookup server3 on 10.96.0.10:53: no such host,

lookup server4 on 10.96.0.10:53: no such host]

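At this point no data can be retrieved, because name resolution fails: the node names cannot be resolved. A lookup goes first to the DNS pod and is then forwarded to the DNS server configured on the node and out to the internet, which cannot resolve host names such as server2, server3, and server4, so we have to add them ourselves.

Because there is no internal DNS server, metrics-server cannot resolve the node names. We can edit the coredns ConfigMap directly and add each node's host name to a hosts block, so that every Pod can resolve the node names through CoreDNS.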

[root@server2 ~]# kubectl get cm -n kube-system

NAME DATA AGE

calico-config 4 12d

coredns 1 22d

extension-apiserver-authentication 6 22d

kube-proxy 2 22d

kubeadm-config 2 22d

kubelet-config-1.18 1 22d

[root@server2 ~]# kubectl -n kube-system edit cm coredns

...

}

ready

hosts {

172.25.254.2 server2

172.25.254.3 server3

172.25.254.4 server4

fallthrough   # if no entry matches, pass the query on to the next plugin

}

kubernetes cluster.local in-addr.arpa ip6.arpa {
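The hosts block above could be generated from a list of node addresses instead of being typed by hand. This is a minimal sketch, assuming input lines of the form `IP hostname`; `hosts_block` is a hypothetical helper name.

```shell
#!/bin/sh
# Print a CoreDNS "hosts" plugin block built from "IP hostname" pairs on stdin.
# hosts_block is a hypothetical helper, not part of CoreDNS or kubectl.
hosts_block() {
    printf 'hosts {\n'
    while read -r ip name; do
        # Skip blank lines in the input.
        if [ -n "$ip" ]; then
            printf '    %s %s\n' "$ip" "$name"
        fi
    done
    # fallthrough lets names that are not listed continue to the next plugin.
    printf '    fallthrough\n}\n'
}
```

For the three nodes in this post, `printf '172.25.254.2 server2\n172.25.254.3 server3\n172.25.254.4 server4\n' | hosts_block` prints a block that can be pasted into the Corefile.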

A different error now appears:

[root@server2 ~]# kubectl -n kube-system logs metrics-server-7cdfcc6666-zt74k

....

ps://server3:10250/stats/summary?only_cpu_and_memory=true: x509: certificate signed by unknown authority, unable to fully scrape

metrics from source kubelet_summary:server2: unable to fetch metrics from Kubelet server2 (server2): Get https://server2:10250/stats

/summary?only_cpu_and_memory=true: x509: certificate signed by unknown authority, unable to fully scrape metrics from source

kubelet_summary:server4: unable to fetch metrics from Kubelet server4 (server4): Get https://server4:10250/stats

/summary?only_cpu_and_memory=true: x509: certificate signed by unknown authority]

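The error now is x509: certificate signed by unknown authority, which means the kubelet serving certificates are not signed by a CA that metrics-server trusts.

Metrics Server supports a flag, --kubelet-insecure-tls, that skips this check, but the project states clearly that it is not recommended for production use.

So instead we enable TLS bootstrapping, so that each kubelet requests a serving certificate signed by the cluster CA: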

[root@server2 ~]# vim /var/lib/kubelet/config.yaml

...

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

serverTLSBootstrap: true   # add this parameter

[root@server2 ~]# systemctl restart kubelet.service   # restart the service

[root@server2 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-xj6zs 0s kubernetes.io/kubelet-serving system:node:server2 Pending   # waiting for our approval

[root@server2 ~]# kubectl certificate approve csr-xj6zs   # approve the request

certificatesigningrequest.certificates.k8s.io/csr-xj6zs approved

[root@server2 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-xj6zs 2m24s kubernetes.io/kubelet-serving system:node:server2 Approved,Issued

Repeat the same steps for the other two nodes.

[root@server2 ~]# scp /var/lib/kubelet/config.yaml server3:/var/lib/kubelet/config.yaml

root@server3's password:

config.yaml 100% 807 907.8KB/s 00:00

[root@server2 ~]# scp /var/lib/kubelet/config.yaml server4:/var/lib/kubelet/config.yaml

root@server4's password:

config.yaml 100% 807 854.7KB/s 00:00

After copying the file over, restart the kubelet service on both nodes.
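As an alternative to copying the whole file with scp, the setting could be added in place on each node. The sketch below is one way to do that, assuming the config file ends with a newline; `enable_server_tls_bootstrap` is a hypothetical helper name, and the kubelet still has to be restarted afterwards.

```shell
#!/bin/sh
# Make sure a kubelet config file contains "serverTLSBootstrap: true".
# enable_server_tls_bootstrap is a hypothetical helper. Running it twice
# leaves exactly one such line in the file.
enable_server_tls_bootstrap() {
    config="$1"
    if grep -q '^serverTLSBootstrap:' "$config"; then
        # Key already present: rewrite its value through a temporary file.
        tmp="$(mktemp)"
        sed 's/^serverTLSBootstrap:.*/serverTLSBootstrap: true/' "$config" > "$tmp"
        cat "$tmp" > "$config"
        rm -f "$tmp"
    else
        # Key absent: append it (assumes the file ends with a newline).
        printf 'serverTLSBootstrap: true\n' >> "$config"
    fi
}
```

On a node this might be run as `enable_server_tls_bootstrap /var/lib/kubelet/config.yaml && systemctl restart kubelet.service`.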

[root@server2 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-lfhkd 28s kubernetes.io/kubelet-serving system:node:server4 Pending

csr-pvsr7 65s kubernetes.io/kubelet-serving system:node:server3 Pending   # three certificate requests are now listed

csr-xj6zs 6m10s kubernetes.io/kubelet-serving system:node:server2 Approved,Issued

[root@server2 ~]# kubectl certificate approve csr-pvsr7

certificatesigningrequest.certificates.k8s.io/csr-pvsr7 approved

[root@server2 ~]# kubectl certificate approve csr-lfhkd   # approve both

certificatesigningrequest.certificates.k8s.io/csr-lfhkd approved

[root@server2 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-lfhkd 54s kubernetes.io/kubelet-serving system:node:server4 Approved,Issued

csr-pvsr7 91s kubernetes.io/kubelet-serving system:node:server3 Approved,Issued

csr-xj6zs 6m36s kubernetes.io/kubelet-serving system:node:server2 Approved,Issued
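With more than a few nodes, picking the pending requests out of the listing by eye becomes tedious. The following sketch filters the `kubectl get csr` output shown above; it assumes the column layout printed in this post (name first, signer third, condition last), and `pending_serving_csrs` is a hypothetical helper name.

```shell
#!/bin/sh
# Read `kubectl get csr` output on stdin and print the names of
# kubelet-serving requests whose CONDITION column is Pending.
# pending_serving_csrs is a hypothetical helper; it relies on the column
# order NAME AGE SIGNERNAME ... CONDITION seen in the listing above.
pending_serving_csrs() {
    awk 'NR > 1 && $3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}
```

A possible use is `kubectl get csr | pending_serving_csrs`, reviewing the printed names, and then passing them to `kubectl certificate approve`. Checking the REQUESTOR column before approving is still advisable, since approval hands out a certificate trusted by the cluster.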

Once all the requests are approved, the metrics become available:

[root@server2 ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

server2 122m 6% 943Mi 33%

server3 41m 4% 396Mi 20%

server4 57m 5% 548Mi 28%

[root@server2 ~]# kubectl top pod --all-namespaces

NAMESPACE NAME CPU(cores) MEMORY(bytes)

ingress-nginx ingress-nginx-controller-jskzg 1m 89Mi

kube-system calico-kube-controllers-76d4774d89-9fp2s 1m 12Mi

kube-system calico-node-bg9c4 21m 55Mi

kube-system calico-node-lvxrc 13m 50Mi

kube-system calico-node-smn7b 13m 55Mi

kube-system coredns-5fd54d7f56-2d7h9 2m 10Mi

kube-system coredns-5fd54d7f56-2q2ws 2m 11Mi

kube-system etcd-server2 14m 62Mi

kube-system kube-apiserver-server2 24m 362Mi

kube-system kube-controller-manager-server2 13m 46Mi

kube-system kube-proxy-6ldt5 1m 14Mi

kube-system kube-proxy-86ckh 1m 13Mi

kube-system kube-proxy-9nr5k 1m 14Mi

kube-system kube-scheduler-server2 3m 16Mi

kube-system metrics-server-7cdfcc6666-r2gkg 1m 10Mi

nfs-client-provisioner nfs-client-provisioner-576d464467-l8nzz 0m 0Mi
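The tabular output of `kubectl top nodes` is easy to post-process. As a small sketch, the helper below lists nodes whose CPU percentage is at or above a threshold; `busy_nodes` and the default of 80 are illustrative assumptions, and the column layout is taken from the output shown above.

```shell
#!/bin/sh
# Read `kubectl top nodes` output on stdin and print the nodes whose CPU%
# column is at or above the given threshold (default 80).
# busy_nodes is a hypothetical helper; column 3 is assumed to be CPU%.
busy_nodes() {
    threshold="${1:-80}"
    awk -v limit="$threshold" 'NR > 1 {
        pct = $3
        sub(/%/, "", pct)              # strip the trailing percent sign
        if (pct + 0 >= limit + 0) print $1
    }'
}
```

For example, `kubectl top nodes | busy_nodes 5` would print server2 and server4 for the figures above.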

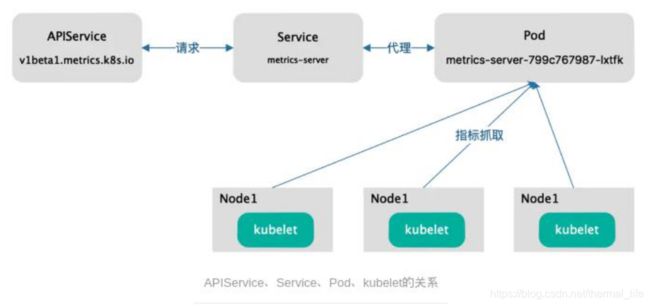

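The request flow is:

The apiserver calls the Service (svc) created for metrics-server, the Service forwards the request to the backend pod running metrics-server, and metrics-server connects to the kubelet on each node to scrape the data.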
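The first hop of this flow is the APIService object that registers metrics-server with the aggregator. The sketch below reads the output of `kubectl get apiservice` and reports whether that registration is available; it assumes the usual NAME, SERVICE, AVAILABLE, AGE column layout, and `metrics_api_available` is a hypothetical helper name.

```shell
#!/bin/sh
# Read `kubectl get apiservice` output on stdin and print the AVAILABLE
# value (for example True or False) of the metrics APIService.
# metrics_api_available is a hypothetical helper; the APIService name is the
# one created by components.yaml in this post.
metrics_api_available() {
    awk '$1 == "v1beta1.metrics.k8s.io" { print $3 }'
}
```

If `kubectl get apiservice | metrics_api_available` does not print True, the apiserver cannot reach the metrics-server Service, and `kubectl top` will fail regardless of what the kubelets report.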

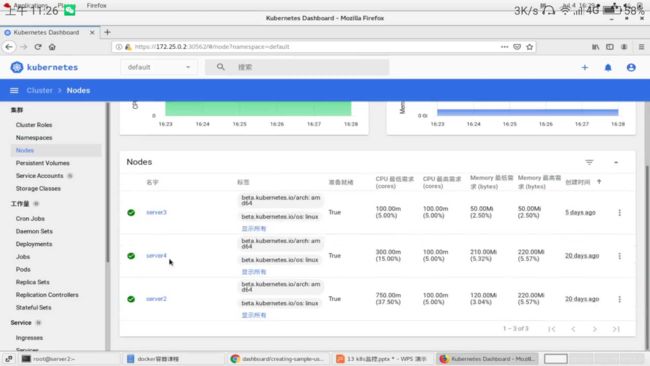

Deploying Dashboard

Running commands every time we want to inspect resources is inconvenient, so we can set up a graphical view:

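- Dashboard gives users a web UI for viewing all kinds of information about the current cluster. With Kubernetes Dashboard, users can deploy containerized applications, monitor application status, troubleshoot, and manage Kubernetes resources.

- Project page: https://github.com/kubernetes/dashboard

As before, download the YAML file first, then pull the images it references: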

[root@server2 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml

[root@server2 ~]# vim recommended.yaml

image: dashboard:v2.0.3

image: metrics-scraper:v1.0.4

[root@server2 ~]# kubectl apply -f recommended.yaml

...

[root@server2 ~]# kubectl get svc -n kubernetes-dashboard   # a new namespace was created

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.101.147.250 <none> 8000/TCP 24s

kubernetes-dashboard ClusterIP 10.104.127.48 <none> 443/TCP 24s   # exposed as ClusterIP

[root@server2 ~]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6c45d5bb84-cst5h 1/1 Running 0 31s

kubernetes-dashboard-9b9d5676f-2hs8q 1/1 Running 0 32s

How do we reach it from outside the cluster?

[root@server2 ~]# kubectl -n kubernetes-dashboard edit svc dashboard-metrics-scraper

service/dashboard-metrics-scraper edited

...

    spec:
      clusterIP: 10.101.147.250
      ports:
      - port: 8000
        protocol: TCP
        targetPort: 8000
      selector:
        k8s-app: dashboard-metrics-scraper
      sessionAffinity: None
      type: NodePort   # change the Service type to NodePort

[root@server2 ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper NodePort 10.101.147.250 <none> 8000:32379/TCP 3m7s

kubernetes-dashboard ClusterIP 10.104.127.48 <none> 443/TCP 3m7s

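The service is then reachable on port 32379 of any node. The web UI itself is served by the kubernetes-dashboard Service on port 443, so that Service needs the same NodePort change before the UI can be opened in a browser over HTTPS.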
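The node port is assigned by the cluster, so it has to be read back from the PORT(S) column after the Service type changes. A small sketch for extracting it follows; `node_port` is a hypothetical helper name, and the input format is the one shown in the `kubectl get svc` output above.

```shell
#!/bin/sh
# Extract the node port from a PORT(S) value such as "8000:32379/TCP".
# Prints nothing when the value has no node port (for example "443/TCP").
# node_port is a hypothetical helper, not part of kubectl.
node_port() {
    printf '%s\n' "$1" | sed -n 's/^[0-9]*:\([0-9]*\)\/.*/\1/p'
}
```

The Service type can also be switched without opening an editor, for instance with `kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}'`, after which `node_port` can pull the assigned port out of the listing.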

A certificate conflict may occur here; clearing the previously stored certificate resolves it.

We log in with a token.

[root@server2 ~]# kubectl -n kubernetes-dashboard describe secrets kubernetes-dashboard-token-p6c78

Name: kubernetes-dashboard-token-p6c78

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: fa94689f-ea3d-4a3c-b9b6-3035b180831f

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlBFMlhOZUItQ1d4c2NKNVNUdi1xY1o5WDA2ekJocFN3OU1FNW5URHVweXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1wNmM3OCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImZhOTQ2ODlmLWVhM2QtNGEzYy1iOWI2LTMwMzViMTgwODMxZiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.sBl-1iHaScEtFzYpLNFGZ2052rs40Kyl1L6HjsQjdyJhyAszdDKumydOunWi3Tg5_cSEFYO6lZFPPug8xaTDhdQ7yOjqi4rtQJ85e3tdVtB0lbORdD58YRdfKE4FCCkz_Tpt31X5ATtHWY2Ap09R-oxPKs5Kn4we3BP43X3T0zjGVa5_mtR-dBO1Cgy8udX5wPtlEf4UGyaRMASy_3F4R93woEZCG9Q1ZMWSVaImwR0aywb_FKfBU0UDTRfHou0l26kDJuRwkynKKFhaXc9ksVzT_-xxiDnZKSURtkxNFFIC6aNCo3rtZ65qQu8Mf_WHo_YpbyvVT9n3_xgPwbtboA

By default the dashboard has no permission to operate on the cluster, so it must be granted access:

[root@server2 ~]# vim recommended.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard-admin
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
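Whether the binding took effect can be checked by impersonating the ServiceAccount. Kubernetes identifies a ServiceAccount by a user name of the form system:serviceaccount:NAMESPACE:NAME, which is also the sub claim carried in the token above. The helper below only builds that name; `sa_user` is a hypothetical helper name.

```shell
#!/bin/sh
# Build the user name Kubernetes uses for a ServiceAccount.
# Arguments: namespace, then ServiceAccount name.
# sa_user is a hypothetical helper, not part of kubectl.
sa_user() {
    printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}
```

After the manifest has been applied again, a check such as `kubectl auth can-i list pods --all-namespaces --as "$(sa_user kubernetes-dashboard kubernetes-dashboard)"` should answer yes. Note that cluster-admin grants full control of the cluster to anyone holding this token, so a narrower role may be preferable outside a lab setup.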