Building a Kubernetes (K8S) cluster on a Mac with virtual machines (VirtualBox + CentOS 7)

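Table of Contents

- Notes
- I. Environment Preparation
  - 1. Configure the host network
  - 2. Configure disk space
  - 3. Install the VMs and configure networking
  - 4. Set up the Linux environment (on all three VMs)
- II. Install docker, kubeadm, kubelet and kubectl (on all three VMs)
  - 1. Install Docker
  - 2. kubeadm, kubelet, kubectl
- III. Deploy the k8s master (k8s-node1 is the master, so the following steps run on k8s-node1)
  - 1. Initialize the master
- IV. Deploy the k8s worker nodes
- V. KubeSphere visualization (installed on k8s-node1, the master)
  - 1. Prerequisites for installing KubeSphere
  - 2. Install Helm
    - 2-1. Install
    - 2-2. Create permissions
    - 2-3. Initialize Helm
    - 2-4. Wait for the installation to finish
  - 3. Install OpenEBS to create a LocalPV storage class
    - 3-1. Prerequisites
    - 3-2. Install OpenEBS
  - 4. Minimal KubeSphere installation
- VI. Additional notes
  - Kubernetes' default visualization tool (Dashboard)
  - get_helm.sh
  - kubesphere-minimal.yaml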

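Notes

Documentation links

- Official documentation: https://kubernetes.io/zh/docs/home/

- kubectl overview: https://kubernetes.io/zh/docs/reference/kubectl/overview/

- kubectl command reference: https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

Suggestions and other notes:

- Back up (snapshot) the VMs after each key configuration step so you can roll back if something goes wrong.

- Many of the commands in this article come from the official documentation. Where the article points to the official docs, read them alongside it.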

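I. Environment Preparation

1. Configure the host network

Set the host network address to 192.168.56.1. All VM IPs will then be 192.168.56.***.

Each VM will later get two network adapters: one for external access (NAT) and one for the internal network (host-only).

The host network address above is the one used by the internal (host-only) network.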

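2. Configure disk space

This is where the VM files are stored.

Click VirtualBox in the top-left corner -> Preferences -> Default Machine Folder.

macOS has no drive-letter partitions, so choose any location that suits you. On Windows, put it on the drive with the most free space.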

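3. Install the VMs and configure networking

For the steps to install the VMs and configure networking, see this blog post.

Set up three VMs. You can set up one and clone it for the other two, then change the static IP on each clone. Hostnames are set in the next step, so you can ignore them for now.

| Default NIC address (assigned automatically; check with ip addr) | Internal IP address (configurable) | Hostname (configurable) |
|---|---|---|
| 10.0.2.15 | 192.168.56.100 | k8s-node1 |
| 10.0.2.5 | 192.168.56.101 | k8s-node2 |
| 10.0.2.4 | 192.168.56.102 | k8s-node3 |

If all three VMs end up with the same default NIC address, the fix is described at the end of that blog post.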

4. Set up the Linux environment (required on all three VMs)

- Disable the firewall

systemctl stop firewalld # stop the firewall now

systemctl disable firewalld # keep it from starting at boot

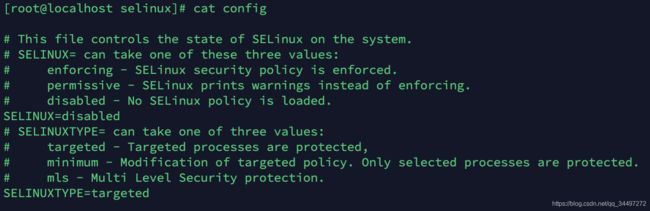

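- Disable SELinux (the default Linux security policy)

vi /etc/selinux/config

# enforcing - SELinux security policy is enforced (SELinux is enabled by default)

Change SELINUX=enforcing to SELINUX=disabled.

# or do it with a single command (only the active SELINUX= line is changed):
# sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

# also disable it for the current session
setenforce 0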
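The manual edit above can also be scripted. The sketch below assumes GNU sed, as shipped with CentOS 7. `disable_selinux_config` is a hypothetical helper name, and the example runs against a scratch file rather than the real /etc/selinux/config. It anchors the match at the start of the line, so comment lines that merely mention "enforcing" are left alone.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch the active SELINUX= line to "disabled".
# Only a line beginning with "SELINUX=" is rewritten; SELINUXTYPE= and
# comment lines are untouched. On a real node the path is /etc/selinux/config
# and the command must run as root.
disable_selinux_config() {
  local cfg="$1"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Example against a scratch copy instead of the real file
tmp_cfg=$(mktemp)
printf '%s\n' '#     enforcing - SELinux security policy is enforced.' \
  'SELINUX=enforcing' 'SELINUXTYPE=targeted' > "$tmp_cfg"
disable_selinux_config "$tmp_cfg"
cat "$tmp_cfg"
```

The config file is only read at boot, so setenforce 0 is still needed for the running system.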

- Turn off swap

swapoff -a # temporary (until the next reboot)

# permanent:
vi /etc/fstab

# comment out the swap line (usually the last line)
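Commenting out the swap entry can also be done without an editor. The sketch below assumes GNU sed and a standard fstab layout (device, mount point, filesystem type). `comment_out_swap` is a hypothetical helper, shown against a scratch file. It only touches uncommented lines whose third field is `swap`, so running it twice does not add a second `#`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prefix "#" to every active fstab entry whose
# filesystem type (third field) is "swap". Already-commented lines do not
# match because the first character must not be "#".
comment_out_swap() {
  local fstab="$1"
  sed -i -E '/^[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/#/' "$fstab"
}

# Example against a scratch file (on a node the path would be /etc/fstab)
tmp_fstab=$(mktemp)
cat > "$tmp_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
UUID=1234-abcd          /boot xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF
comment_out_swap "$tmp_fstab"
cat "$tmp_fstab"
```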

- Set the hostname

hostname # show the current hostname; it must not be localhost

# set a new hostname, using the names agreed above: k8s-node1, k8s-node2 and k8s-node3 respectively
hostnamectl set-hostname k8s-node1

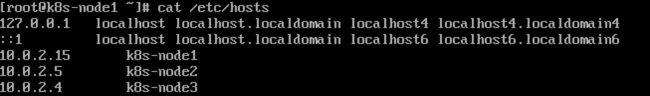

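- Edit the hosts file to map each hostname to its NIC address. Afterwards, every VM must be able to ping the others by both IP and hostname.

| Default NIC address | Internal IP address (configurable) | Hostname (configurable) |
|---|---|---|
| 10.0.2.15 | 192.168.56.100 | k8s-node1 |
| 10.0.2.5 | 192.168.56.101 | k8s-node2 |
| 10.0.2.4 | 192.168.56.102 | k8s-node3 |

vi /etc/hosts

# add the NIC-address-to-hostname mappings to the hosts file
10.0.2.15 k8s-node1
10.0.2.5 k8s-node2
10.0.2.4 k8s-node3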
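This step runs on all three VMs, so an idempotent version is convenient. The sketch below uses a hypothetical helper, `add_host_entry`, which appends an `IP HOSTNAME` line only if that hostname is not already mapped. It is shown against a scratch file. On a node you would pass /etc/hosts and run as root. The addresses are the NAT addresses used in the table above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" unless HOSTNAME already appears
# as a whole field in the file, so re-running it does not create duplicates.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # hostname must be preceded by whitespace and followed by whitespace or end of line
  if ! grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

tmp_hosts=$(mktemp)
echo "127.0.0.1 localhost" > "$tmp_hosts"
add_host_entry "$tmp_hosts" 10.0.2.15 k8s-node1
add_host_entry "$tmp_hosts" 10.0.2.5 k8s-node2
add_host_entry "$tmp_hosts" 10.0.2.4 k8s-node3
add_host_entry "$tmp_hosts" 10.0.2.15 k8s-node1   # second call is a no-op
cat "$tmp_hosts"
```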

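- Pass bridged IPv4/IPv6 traffic to the iptables chains, so that iptables rules (including the ones kube-proxy creates) also see traffic crossing the Linux bridge

# create k8s.conf with the rules
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
EOF

# apply the rules; if the output lists these settings, the configuration worked
sysctl --system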
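The net.bridge.* keys only exist while the br_netfilter kernel module is loaded. If sysctl --system reports them as missing, the module has to be loaded first (modprobe br_netfilter). The sketch below is one way to persist both pieces. `write_k8s_net_config` is a hypothetical helper that writes under a root prefix so it can be tried in a scratch directory. The /etc/modules-load.d file is read by systemd at boot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the bridge sysctl settings and a modules-load
# entry for br_netfilter under a root prefix ("/" on a real node, as root).
write_k8s_net_config() {
  local root="${1%/}"   # strip a trailing slash so "/" becomes ""
  mkdir -p "$root/etc/sysctl.d" "$root/etc/modules-load.d"
  printf '%s\n' br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
EOF
}

# Try it in a scratch directory; on a node you would run (as root):
#   write_k8s_net_config / && modprobe br_netfilter && sysctl --system
scratch=$(mktemp -d)
write_k8s_net_config "$scratch"
cat "$scratch/etc/sysctl.d/k8s.conf"
```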

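II. Install docker, kubeadm, kubelet and kubectl (required on all three VMs)

1. Install Docker

Kubernetes needs a container runtime, and this article uses Docker. For the installation steps, see this blog post.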

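2. kubeadm, kubelet, kubectl

- kubeadm: a tool for quickly bootstrapping a Kubernetes cluster
  - kubeadm init initializes the master node
  - kubeadm join adds a worker node to the cluster

- kubelet: the agent on every node; it creates pods on the node, manages networking, and so on

- kubectl: the command-line program for operating the Kubernetes cluster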

Add the Aliyun yum mirror

cat > /etc/yum.repos.d/kubernetes.repo <

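Install a specific version of kubelet, kubeadm and kubectl (without a version, the latest one is installed)

yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3

Enable start at boot

systemctl enable kubelet # start at boot

systemctl start kubelet # start now

systemctl status kubelet # check the status (until kubeadm init or kubeadm join has run, kubelet restarting repeatedly is expected)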

III. Deploy the k8s master (k8s-node1 serves as the master, so all of the following steps are run on k8s-node1)

1. Initialize the master

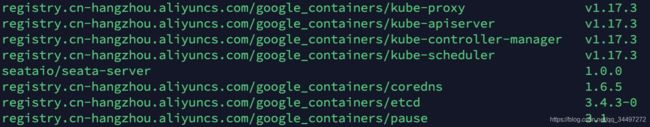

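- kubeadm init has to download many images, so pull them in advance

Create a master_images.sh script, paste the content below into it, then make it executable (chmod +x master_images.sh) and run ./master_images.sh.

The script below pulls the Docker images in a loop.

#!/bin/bash

images=(
  kube-apiserver:v1.17.3
  kube-proxy:v1.17.3
  kube-controller-manager:v1.17.3
  kube-scheduler:v1.17.3
  coredns:1.6.5
  etcd:3.4.3-0
  pause:3.1
)

for imageName in "${images[@]}"; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  # Retagging to k8s.gcr.io is not needed, because kubeadm init below is
  # pointed at the same registry with --image-repository:
  # docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
done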

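- kubeadm init

# --apiserver-advertise-address: the apiserver address; the apiserver is a master component, and every operation goes through it
# --image-repository: the default image registry is not reachable from mainland China, so use the Aliyun mirror
#   (init downloads a number of Docker images; run directly, it can fail midway without a clear reason or wait a long time, which is why the script above pulls them first)
# --kubernetes-version: the Kubernetes version
# --service-cidr: the virtual IP range for Services
# --pod-network-cidr: the IP range for pods (a pod is the smallest unit in Kubernetes and can hold several containers; pods must be able to reach each other). 10.244.0.0/16 matches the default network in flannel's kube-flannel.yml
kubeadm init \
--apiserver-advertise-address=10.0.2.15 \
--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
--kubernetes-version v1.17.3 \
--service-cidr=10.96.0.0/16 \
--pod-network-cidr=10.244.0.0/16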

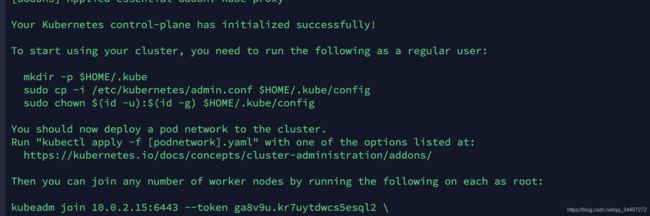

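Initialization takes a while. When it finishes, do not clear the screen; keep the output, because it is needed later.

The output contains three main parts:

1) three commands to run next;
2) instructions for setting up the pod network;
3) the command to run on the worker nodes. Its token expires (after 24 hours by default), but an expired token can be replaced.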
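If the init output was saved to a file, the worker join command can be recovered from it later. The sketch below assumes the output was captured with something like kubeadm init ... | tee kubeadm-init.log (the file name is only an example). `extract_join_cmd` is a hypothetical helper that prints the first kubeadm join command it finds, with its backslash-continued lines joined onto one line. If the token has already expired, running kubeadm token create --print-join-command on the master prints a fresh join command instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first "kubeadm join ..." command from a saved
# kubeadm init log, joining lines that end with a backslash continuation.
extract_join_cmd() {
  awk '
    /kubeadm join/ { grab = 1 }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)               # drop leading indentation
      cont = (line ~ /\\[ \t]*$/)            # 1 if the line ends with a backslash
      sub(/[ \t]*\\[ \t]*$/, "", line)       # remove the trailing backslash
      printf "%s%s", line, (cont ? " " : "\n")
      if (!cont) exit
    }
  ' "$1"
}

# Example with a log excerpt (token and hash taken from this article)
log=$(mktemp)
cat > "$log" <<'EOF'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.2.15:6443 --token ga8v9u.kr7uytdwcs5esql2 \
    --discovery-token-ca-cert-hash sha256:ca2e095ba5c07e4c798ae506419b9c54db8c44d8aceba360d757d8b3d3abe8bd
EOF
extract_join_cmd "$log"
```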

- After init finishes, run the following three commands, as the output instructs

# these commands come from the kubeadm init output
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

- As instructed, install a pod network add-on (flannel)

# install
kubectl apply -f kube-flannel.yml

# if the installation goes wrong, it can be removed with:
# kubectl delete -f kube-flannel.yml

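- Check that everything is running

kubectl get pods --all-namespaces # list the pods in all namespaces

Make sure the flannel pod is Running. Here it is kube-flannel-ds-amd64-xl5p9; the suffix differs on every cluster. This takes a little while.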
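Instead of scanning the list by eye, the output can be filtered. The sketch below is a hypothetical helper, `pods_not_running`. It reads kubectl get pods --all-namespaces output on stdin and prints every pod whose STATUS (fourth column) is neither Running nor Completed. The sample rows are illustrative, not real output from this cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: with --all-namespaces the columns are
# NAMESPACE NAME READY STATUS RESTARTS AGE; print NAMESPACE/NAME for every
# pod whose STATUS is not Running or Completed (header row skipped).
pods_not_running() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2 }'
}

# Usage on the master:
#   kubectl get pods --all-namespaces | pods_not_running
sample='NAMESPACE     NAME                          READY   STATUS     RESTARTS   AGE
kube-system   coredns-7f9c544f75-8bmr6      1/1     Running    0          5m
kube-system   kube-flannel-ds-amd64-xl5p9   0/1     Init:0/1   0          40s
kube-system   kube-proxy-2qvzs              1/1     Running    0          5m'
printf '%s\n' "$sample" | pods_not_running
```

Empty output means every pod has reached Running or Completed.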

IV. Deploy the k8s worker nodes

- On k8s-node1 (master), check the status of the master node

# make sure the master node is Ready
kubectl get nodes

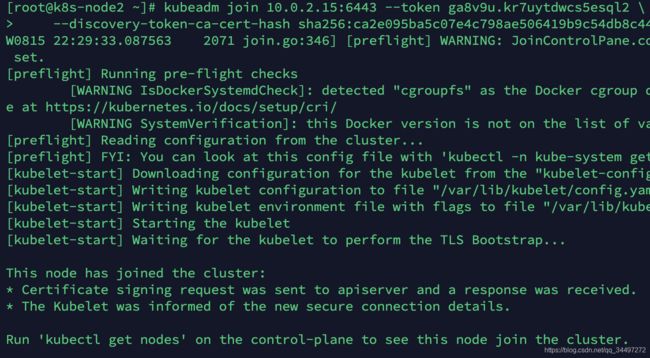

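- Join the other two VMs to the cluster (note: run the following command on the two worker nodes)

# this command comes from your own kubeadm init output; the token and hash below are the author's and will differ on your cluster
kubeadm join 10.0.2.15:6443 --token ga8v9u.kr7uytdwcs5esql2 \
--discovery-token-ca-cert-hash sha256:ca2e095ba5c07e4c798ae506419b9c54db8c44d8aceba360d757d8b3d3abe8bd

When it finishes, the output reports that the node has joined the cluster. Check it with kubectl get nodes on k8s-node1 (master).

kubectl has not been configured on the worker nodes so far, so this check has to run on the master.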

- Monitor node status

If kubectl get nodes on the master shows a node as NotReady, follow the pods' progress with the command below

# most pods will be in Init at first; wait until they change from Init to Running
watch kubectl get pod -n kube-system -o wide
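watch is interactive. For a scripted setup, a polling loop with a timeout does the same job. The sketch below is a hypothetical `wait_until` helper, not part of kubectl. It re-runs any command every INTERVAL seconds until the command succeeds or TIMEOUT seconds pass. It relies on bash's built-in SECONDS counter.

```shell
#!/usr/bin/env bash
# Hypothetical helper: re-run "$@" every INTERVAL seconds until it succeeds
# (return 0) or TIMEOUT seconds have elapsed (return 1).
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$(( SECONDS + timeout ))
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
  return 0
}

# Example (on the master): wait up to 10 minutes, checking every 10 seconds,
# for a Running flannel pod in kube-system
#   wait_until 600 10 sh -c 'kubectl get pods -n kube-system | grep -q "kube-flannel.*Running"'
```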

- The cluster is ready

# all three nodes showing Ready means the cluster is set up
kubectl get nodes
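The final check can also be made scriptable. The sketch below is a hypothetical helper, `all_nodes_ready`. It reads kubectl get nodes output on stdin and succeeds only if at least one node is listed and every node's STATUS column is exactly Ready. A cordoned node (Ready,SchedulingDisabled) therefore counts as not ready. The sample rows are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 only if there is at least one node row and
# every STATUS (second column) is exactly "Ready"; the header row is skipped.
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad++ } END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}

# Usage on the master:
#   kubectl get nodes | all_nodes_ready && echo "cluster is ready"
nodes='NAME        STATUS     ROLES    AGE   VERSION
k8s-node1   Ready      master   30m   v1.17.3
k8s-node2   Ready      <none>   10m   v1.17.3
k8s-node3   NotReady   <none>   2m    v1.17.3'
printf '%s\n' "$nodes" | all_nodes_ready || echo "some nodes are not Ready yet"
```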

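V. KubeSphere visualization (installed on k8s-node1, the master)

Notes:

- Kubernetes also ships its own visualization tool (Dashboard).

- KubeSphere is considerably more powerful.

- The default tool is briefly mentioned at the end of this article, but it has not been tested.

- The rest of this article uses KubeSphere, which covers the whole DevOps pipeline end to end and integrates many components.

- KubeSphere website: https://kubesphere.io/zh/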

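1. Prerequisites for installing KubeSphere

Official documentation: https://kubesphere.com.cn/docs/zh-CN/installation/prerequisites/

Read the Kubernetes version and hardware requirements carefully: the cluster must have more than 2 GB of available memory. Make sure your setup meets these requirements; if it does not, do not install KubeSphere.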
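A quick way to see how much memory each VM can still offer is the MemAvailable line of /proc/meminfo. The sketch below is a hypothetical helper, `mem_available_mib`, that converts that value from kB to MiB. Running it on every node and adding the results gives only a rough cluster-wide figure. The sample file and the 2048 MiB threshold are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print MemAvailable in MiB from a /proc/meminfo-style
# file (values there are in kB); defaults to the live /proc/meminfo.
mem_available_mib() {
  awk '$1 == "MemAvailable:" { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Example against a sample file; on a node just run: mem_available_mib
sample_meminfo=$(mktemp)
printf 'MemTotal:        3880412 kB\nMemFree:          512000 kB\nMemAvailable:    2621440 kB\n' > "$sample_meminfo"
mem_available_mib "$sample_meminfo"

# Illustrative threshold check for a single node (2048 MiB = 2 GB)
if (( $(mem_available_mib "$sample_meminfo") >= 2048 )); then
  echo "enough memory available on this node"
fi
```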

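2. Install Helm

2-1. Install

Guide: https://devopscube.com/install-configure-helm-kubernetes/

The steps below install Helm 2; the guide has a matching section (Installing & Configuring Helm 2).

# check whether helm is already installed
helm version

# download the install script and run it
curl -L https://git.io/get_helm.sh | bash

# the download may fail because of network problems; in that case, use the get_helm.sh shared at the end of this article and run it directly
# I used the get_helm.sh from the end of this article; the installation downloads an archive, so it can take a while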

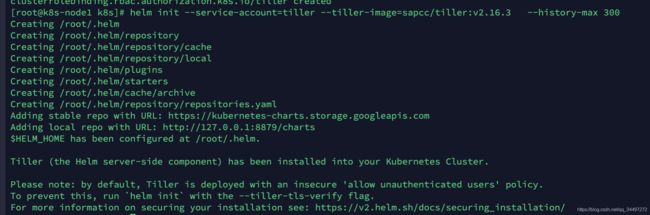

The installation log shows that both helm and tiller were installed, along with their install paths.

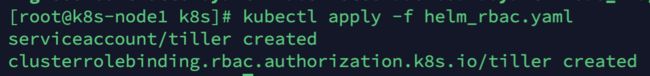

2-2. Create permissions

# create a yaml file
vi helm-rbac.yaml

# copy the content below into it (the yaml comes from the guide linked in the previous step)

# apply the configuration
kubectl apply -f helm-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system
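The same ServiceAccount and ClusterRoleBinding can also be created without a YAML file, using kubectl's imperative create subcommands. The sketch below wraps them in a hypothetical `create_tiller_rbac` function. `KUBECTL` is an assumed override variable, so the commands can be printed (KUBECTL=echo) instead of executed. Use either this or the YAML file, not both, since the second attempt would fail with "already exists".

```shell
#!/usr/bin/env bash
# Sketch: imperative equivalent of helm-rbac.yaml.
# KUBECTL is a hypothetical override; set KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

create_tiller_rbac() {
  # ServiceAccount "tiller" in kube-system
  "$KUBECTL" create serviceaccount tiller -n kube-system
  # bind it to the built-in cluster-admin ClusterRole
  "$KUBECTL" create clusterrolebinding tiller \
    --clusterrole=cluster-admin \
    --serviceaccount=kube-system:tiller
}

# On the master: create_tiller_rbac
# Dry run:       (KUBECTL=echo; create_tiller_rbac)
```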

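2-3. Initialize Helm

# --service-account: run Tiller as the tiller service account created above
# --tiller-image: the Tiller image to use
# --history-max: the maximum number of revisions kept per release
helm init --service-account=tiller --tiller-image=sapcc/tiller:v2.16.3 --history-max 300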

2-4. Wait for the installation to finish

# check whether tiller is up
kubectl get pods --all-namespaces

# if it is not ready yet, watch until the tiller pod is Running
watch kubectl get pod -n kube-system -o wide

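3. Install OpenEBS to create a LocalPV storage class

Official documentation: https://kubesphere.com.cn/docs/zh-CN/appendix/install-openebs/

3-1. Prerequisites

# show node information; k8s-node1 is the current master
kubectl get node -o wide

# check whether the master has a Taint, i.e. whether regular pods are kept off it (k8s-node1 is the master's name)
kubectl describe node k8s-node1 | grep Taint

# remove the master's Taint (k8s-node1 is the master's name)
kubectl taint nodes k8s-node1 node-role.kubernetes.io/master:NoSchedule-

# check again afterwards; it should no longer show a taint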
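The grep above only shows the first Taints line. The sketch below is a hypothetical helper, `has_noschedule_taint`, that reads kubectl describe node output on stdin. It succeeds if any taint in the Taints block (including continuation lines) ends in :NoSchedule, and it ignores NoSchedule mentions elsewhere in the output. The sample lines are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: look only inside the "Taints:" block of
# "kubectl describe node" output (the block ends at the next line that starts
# with a non-space character) and succeed if a NoSchedule taint is present.
has_noschedule_taint() {
  awk '
    /^Taints:/ { in_taints = 1 }
    in_taints && /^[^ \t]/ && !/^Taints:/ { in_taints = 0 }
    in_taints && /:NoSchedule/ { found = 1 }
    END { exit found ? 0 : 1 }
  '
}

# Usage on the master:
#   kubectl describe node k8s-node1 | has_noschedule_taint && echo "master is tainted"
printf 'Name: k8s-node1\nTaints:             node-role.kubernetes.io/master:NoSchedule\nUnschedulable:      false\n' \
  | has_noschedule_taint && echo "tainted"
```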

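3-2. Install OpenEBS

- Create the OpenEBS namespace

kubectl create ns openebs

- Helm is installed in the cluster, so install OpenEBS with Helm (the documentation above also gives a kubectl-based installation)

helm install --namespace openebs --name openebs stable/openebs --version 1.5.0

- Installing OpenEBS automatically creates 4 StorageClasses; list them

# StorageClasses are cluster-scoped, so --all-namespaces makes no difference here
kubectl get sc --all-namespaces

# "No resources found" means the deployment has not finished yet
# see which pods are still being deployed
kubectl get pods --all-namespaces

# watch until everything in the openebs namespace (-n openebs) has finished installing
watch kubectl get pod -n openebs -o wide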

- Set openebs-hostpath as the default StorageClass

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

# expected output: storageclass.storage.k8s.io/openebs-hostpath patched
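To confirm the patch took effect, note that kubectl get sc marks the default class with "(default)" after its name. The sketch below is a hypothetical helper, `default_storageclass`, that reads that output on stdin and prints the name(s) so marked. More than one line of output means several classes are flagged as default, which should be avoided. The sample rows are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the name of every StorageClass that
# "kubectl get sc" marks with "(default)" (second whitespace-separated field).
default_storageclass() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Usage on the master:
#   kubectl get sc | default_storageclass
sc='NAME                         PROVISIONER                    AGE
openebs-device               openebs.io/local               5m
openebs-hostpath (default)   openebs.io/local               5m
openebs-jiva-default         openebs.io/provisioner-iscsi   5m'
printf '%s\n' "$sc" | default_storageclass
```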

- The master's Taint was removed manually at the start, so add it back now. This keeps business workloads from being scheduled onto the master and competing for its resources.

# k8s-node1 is the master
kubectl taint nodes k8s-node1 node-role.kubernetes.io/master=:NoSchedule

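4. Minimal KubeSphere installation

Official documentation: https://kubesphere.com.cn/docs/zh-CN/installation/install-on-k8s/

A complete installation requires more than 8 available CPU cores and more than 16 GB of available memory in the cluster. This is a personal test setup, so the minimal installation is used; other components can be installed later when needed.

# As the documentation notes, if your server cannot reach GitHub, save kubesphere-minimal.yaml or kubesphere-complete-setup.yaml locally as a static file and install from that file.
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/kubesphere-minimal.yaml

# if GitHub is unreachable, follow the official instructions, or use the kubesphere-minimal.yaml at the end of this article:
# kubectl apply -f kubesphere-minimal.yaml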

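Follow the installation. It takes a while: wait until the output shown in the screenshot appears. Even then, KubeSphere is not ready to use yet.

# follow the installer log
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

# If you see: Error from server (BadRequest): container "installer" ...
# the installer is still being prepared; check with kubectl get pod --all-namespaces
# or watch it:
# watch kubectl get pod --all-namespaces -o wide
# once the ks-installer pod in kubesphere-system (ks-installer-75b8d89dff-mmtbj here) is Running, run the log command above again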

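The screenshot above shows the access address, the account and the password. The IP in that address does not work; replace it with the internal IP. The output also says that KubeSphere can only be used once all the other components are running. Use the following command to check whether anything is still installing; once everything has finished, you can log in.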

kubectl get pod --all-namespaces
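Swapping the unusable IP for the internal one is a simple text substitution. The sketch below assumes the installer prints an address of the form http://10.0.2.15:30880 (NAT IP plus the console port 30880 configured in kubesphere-minimal.yaml). `rewrite_url_host` is a hypothetical helper that replaces only the host part and keeps the scheme, port and path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the host in a URL (scheme://host[:port][/path])
# with another address, leaving everything else unchanged.
rewrite_url_host() {
  local url="$1" new_host="$2"
  printf '%s\n' "$url" | sed -E "s#^([a-zA-Z][a-zA-Z0-9+.-]*://)[^:/]+#\1${new_host}#"
}

# Example: the console address rewritten to k8s-node1's host-only IP
rewrite_url_host http://10.0.2.15:30880 192.168.56.100
```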

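VI. Additional notes

Kubernetes' default visualization tool (Dashboard)

get_helm.sh

Create a get_helm.sh file, paste the script below into it, and run it (for example, bash get_helm.sh).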

#!/usr/bin/env bash

# Copyright The Helm Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# The install script is based off of the MIT-licensed script from glide,
# the package manager for Go: https://github.com/Masterminds/glide.sh/blob/master/get

PROJECT_NAME="helm"
TILLER_NAME="tiller"

: ${USE_SUDO:="true"}
: ${HELM_INSTALL_DIR:="/usr/local/bin"}

# initArch discovers the architecture for this system.
initArch() {
  ARCH=$(uname -m)
  case $ARCH in
    armv5*) ARCH="armv5";;
    armv6*) ARCH="armv6";;
    armv7*) ARCH="arm";;
    aarch64) ARCH="arm64";;
    x86) ARCH="386";;
    x86_64) ARCH="amd64";;
    i686) ARCH="386";;
    i386) ARCH="386";;
  esac
}

# initOS discovers the operating system for this system.
initOS() {
  OS=$(echo `uname`|tr '[:upper:]' '[:lower:]')

  case "$OS" in
    # Minimalist GNU for Windows
    mingw*) OS='windows';;
  esac
}

# runs the given command as root (detects if we are root already)
runAsRoot() {
  local CMD="$*"

  if [ $EUID -ne 0 -a $USE_SUDO = "true" ]; then
    CMD="sudo $CMD"
  fi

  $CMD
}

# verifySupported checks that the os/arch combination is supported for
# binary builds.
verifySupported() {
  local supported="darwin-386\ndarwin-amd64\nlinux-386\nlinux-amd64\nlinux-arm\nlinux-arm64\nlinux-ppc64le\nwindows-386\nwindows-amd64"
  if ! echo "${supported}" | grep -q "${OS}-${ARCH}"; then
    echo "No prebuilt binary for ${OS}-${ARCH}."
    echo "To build from source, go to https://github.com/helm/helm"
    exit 1
  fi

  if ! type "curl" > /dev/null && ! type "wget" > /dev/null; then
    echo "Either curl or wget is required"
    exit 1
  fi
}

# checkDesiredVersion checks if the desired version is available.
checkDesiredVersion() {
  if [ "x$DESIRED_VERSION" == "x" ]; then
    # Get tag from release URL
    local release_url="https://github.com/helm/helm/releases"
    if type "curl" > /dev/null; then
      TAG=$(curl -Ls $release_url | grep 'href="/helm/helm/releases/tag/v2.' | grep -v no-underline | head -n 1 | cut -d '"' -f 2 | awk '{n=split($NF,a,"/");print a[n]}' | awk 'a !~ $0{print}; {a=$0}')
    elif type "wget" > /dev/null; then
      TAG=$(wget $release_url -O - 2>&1 | grep 'href="/helm/helm/releases/tag/v2.' | grep -v no-underline | head -n 1 | cut -d '"' -f 2 | awk '{n=split($NF,a,"/");print a[n]}' | awk 'a !~ $0{print}; {a=$0}')
    fi
  else
    TAG=$DESIRED_VERSION
  fi
}

# checkHelmInstalledVersion checks which version of helm is installed and
# if it needs to be changed.
checkHelmInstalledVersion() {
  if [[ -f "${HELM_INSTALL_DIR}/${PROJECT_NAME}" ]]; then
    local version=$("${HELM_INSTALL_DIR}/${PROJECT_NAME}" version -c | grep '^Client' | cut -d'"' -f2)
    if [[ "$version" == "$TAG" ]]; then
      echo "Helm ${version} is already ${DESIRED_VERSION:-latest}"
      return 0
    else
      echo "Helm ${TAG} is available. Changing from version ${version}."
      return 1
    fi
  else
    return 1
  fi
}

# downloadFile downloads the latest binary package and also the checksum
# for that binary.
downloadFile() {
  HELM_DIST="helm-$TAG-$OS-$ARCH.tar.gz"
  DOWNLOAD_URL="https://get.helm.sh/$HELM_DIST"
  CHECKSUM_URL="$DOWNLOAD_URL.sha256"
  HELM_TMP_ROOT="$(mktemp -dt helm-installer-XXXXXX)"
  HELM_TMP_FILE="$HELM_TMP_ROOT/$HELM_DIST"
  HELM_SUM_FILE="$HELM_TMP_ROOT/$HELM_DIST.sha256"
  echo "Downloading $DOWNLOAD_URL"
  if type "curl" > /dev/null; then
    curl -SsL "$CHECKSUM_URL" -o "$HELM_SUM_FILE"
  elif type "wget" > /dev/null; then
    wget -q -O "$HELM_SUM_FILE" "$CHECKSUM_URL"
  fi
  if type "curl" > /dev/null; then
    curl -SsL "$DOWNLOAD_URL" -o "$HELM_TMP_FILE"
  elif type "wget" > /dev/null; then
    wget -q -O "$HELM_TMP_FILE" "$DOWNLOAD_URL"
  fi
}

# installFile verifies the SHA256 for the file, then unpacks and
# installs it.
installFile() {
  HELM_TMP="$HELM_TMP_ROOT/$PROJECT_NAME"
  local sum=$(openssl sha1 -sha256 ${HELM_TMP_FILE} | awk '{print $2}')
  local expected_sum=$(cat ${HELM_SUM_FILE})
  if [ "$sum" != "$expected_sum" ]; then
    echo "SHA sum of ${HELM_TMP_FILE} does not match. Aborting."
    exit 1
  fi

  mkdir -p "$HELM_TMP"
  tar xf "$HELM_TMP_FILE" -C "$HELM_TMP"
  HELM_TMP_BIN="$HELM_TMP/$OS-$ARCH/$PROJECT_NAME"
  TILLER_TMP_BIN="$HELM_TMP/$OS-$ARCH/$TILLER_NAME"
  echo "Preparing to install $PROJECT_NAME and $TILLER_NAME into ${HELM_INSTALL_DIR}"
  runAsRoot cp "$HELM_TMP_BIN" "$HELM_INSTALL_DIR"
  echo "$PROJECT_NAME installed into $HELM_INSTALL_DIR/$PROJECT_NAME"
  if [ -x "$TILLER_TMP_BIN" ]; then
    runAsRoot cp "$TILLER_TMP_BIN" "$HELM_INSTALL_DIR"
    echo "$TILLER_NAME installed into $HELM_INSTALL_DIR/$TILLER_NAME"
  else
    echo "info: $TILLER_NAME binary was not found in this release; skipping $TILLER_NAME installation"
  fi
}

# fail_trap is executed if an error occurs.
fail_trap() {
  result=$?
  if [ "$result" != "0" ]; then
    if [[ -n "$INPUT_ARGUMENTS" ]]; then
      echo "Failed to install $PROJECT_NAME with the arguments provided: $INPUT_ARGUMENTS"
      help
    else
      echo "Failed to install $PROJECT_NAME"
    fi
    echo -e "\tFor support, go to https://github.com/helm/helm."
  fi
  cleanup
  exit $result
}

# testVersion tests the installed client to make sure it is working.
testVersion() {
  set +e
  HELM="$(which $PROJECT_NAME)"
  if [ "$?" = "1" ]; then
    echo "$PROJECT_NAME not found. Is $HELM_INSTALL_DIR on your "'$PATH?'
    exit 1
  fi
  set -e
  echo "Run '$PROJECT_NAME init' to configure $PROJECT_NAME."
}

# help provides possible cli installation arguments
help () {
  echo "Accepted cli arguments are:"
  echo -e "\t[--help|-h ] ->> prints this help"
  echo -e "\t[--version|-v ]"
  echo -e "\te.g. --version v2.4.0 or -v latest"
  echo -e "\t[--no-sudo] ->> install without sudo"
}

# cleanup temporary files to avoid https://github.com/helm/helm/issues/2977
cleanup() {
  if [[ -d "${HELM_TMP_ROOT:-}" ]]; then
    rm -rf "$HELM_TMP_ROOT"
  fi
}

# Execution

#Stop execution on any error
trap "fail_trap" EXIT
set -e

# Parsing input arguments (if any)
export INPUT_ARGUMENTS="${@}"
set -u
while [[ $# -gt 0 ]]; do
  case $1 in
    '--version'|-v)
      shift
      if [[ $# -ne 0 ]]; then
        export DESIRED_VERSION="${1}"
      else
        echo -e "Please provide the desired version. e.g. --version v2.4.0 or -v latest"
        exit 0
      fi
      ;;
    '--no-sudo')
      USE_SUDO="false"
      ;;
    '--help'|-h)
      help
      exit 0
      ;;
    *) exit 1
      ;;
  esac
  shift
done
set +u

initArch
initOS
verifySupported
checkDesiredVersion
if ! checkHelmInstalledVersion; then
  downloadFile
  installFile
fi
testVersion

kubesphere-minimal.yaml

Create a kubesphere-minimal.yaml file, paste the YAML below into it, and apply it with kubectl apply -f kubesphere-minimal.yaml.

---
apiVersion: v1
kind: Namespace
metadata:
  name: kubesphere-system

---
apiVersion: v1
data:
  ks-config.yaml: |
    ---

    persistence:
      storageClass: ""

    etcd:
      monitoring: False
      endpointIps: 192.168.0.7,192.168.0.8,192.168.0.9
      port: 2379
      tlsEnable: True

    common:
      mysqlVolumeSize: 20Gi
      minioVolumeSize: 20Gi
      etcdVolumeSize: 20Gi
      openldapVolumeSize: 2Gi
      redisVolumSize: 2Gi

    metrics_server:
      enabled: False

    console:
      enableMultiLogin: False  # enable/disable multi login
      port: 30880

    monitoring:
      prometheusReplicas: 1
      prometheusMemoryRequest: 400Mi
      prometheusVolumeSize: 20Gi
      grafana:
        enabled: False

    logging:
      enabled: False
      elasticsearchMasterReplicas: 1
      elasticsearchDataReplicas: 1
      logsidecarReplicas: 2
      elasticsearchMasterVolumeSize: 4Gi
      elasticsearchDataVolumeSize: 20Gi
      logMaxAge: 7
      elkPrefix: logstash
      containersLogMountedPath: ""
      kibana:
        enabled: False

    openpitrix:
      enabled: False

    devops:
      enabled: False
      jenkinsMemoryLim: 2Gi
      jenkinsMemoryReq: 1500Mi
      jenkinsVolumeSize: 8Gi
      jenkinsJavaOpts_Xms: 512m
      jenkinsJavaOpts_Xmx: 512m
      jenkinsJavaOpts_MaxRAM: 2g
      sonarqube:
        enabled: False
        postgresqlVolumeSize: 8Gi

    servicemesh:
      enabled: False

    notification:
      enabled: False

    alerting:
      enabled: False
kind: ConfigMap
metadata:
  name: ks-installer
  namespace: kubesphere-system

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ks-installer
  namespace: kubesphere-system

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  creationTimestamp: null
  name: ks-installer
rules:
- apiGroups:
  - ""
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apps
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - extensions
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - batch
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiextensions.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - tenant.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - certificates.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - devops.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.coreos.com
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - logging.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - jaegertracing.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ks-installer
subjects:
- kind: ServiceAccount
  name: ks-installer
  namespace: kubesphere-system
roleRef:
  kind: ClusterRole
  name: ks-installer
  apiGroup: rbac.authorization.k8s.io

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    app: ks-install
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ks-install
  template:
    metadata:
      labels:
        app: ks-install
    spec:
      serviceAccountName: ks-installer
      containers:
      - name: installer
        image: kubesphere/ks-installer:v2.1.1
        imagePullPolicy: "Always"