[Doris] Using Stream Load to Fix err=-235 or -215 or -238 During Doris Synchronization

Doris version: 0.15.0-rc04

Contents

- Task flow

- Error description

- Introduction to Stream Load

  - Overview

  - Supported data formats

  - Prerequisites

  - How to enable batch delete

  - Code

  - Example

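Task flow

Error description

When the MySQL side runs Delete or Update operations in bulk, it produces a large volume of Binlog events that flow into the Flink real-time synchronization job. The job assembles INSERT INTO statements and executes them in batches. Under this load, the number of data versions in Doris can exceed the maximum limit, after which further operations are rejected.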

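From the official documentation:

Q4. tablet writer write failed, tablet_id=27306172, txn_id=28573520, err=-235 or -215 or -238

This error usually occurs during data import. The error code is -235 in newer versions and may be -215 in older versions.

It means that the number of data versions of the tablet has exceeded the maximum limit (500 by default, controlled by the BE parameter max_tablet_version_num), so subsequent writes are rejected.

In the error above, for example, tablet 27306172 has exceeded the limit.

The usual cause is that imports arrive faster than background compaction can merge them, so versions pile up until they exceed the limit. In that case, first run show tablet 27306172, then run the show proc statement contained in its result to inspect each replica of the tablet.

The versionCount field in the result is the number of versions. If a replica has too many versions, lower the import frequency or stop importing, then watch whether the version count drops.

If the version count still does not drop after imports stop, go to the corresponding BE node, open the be.INFO log, and search for the tablet id and the keyword compaction to check whether compaction is running normally.

For compaction tuning, see the article on the ApacheDoris WeChat official account: Doris Best Practices: Compaction Tuning (3)

The -238 error usually appears when a single batch imports too much data, which produces too many Segment files for one tablet (200 by default, controlled by the BE parameter max_segment_num_per_rowset). In that case, reduce the amount of data per batch, or raise the BE parameter appropriately.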
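Since the -235 error comes from importing too often, one client-side way to lower the frequency is to buffer rows and send them as a single import once a row-count threshold is reached. The following is a minimal sketch of that idea, not code from the original job: `BatchBuffer`, `maxRows`, and the `sink` callback are hypothetical names, and a real Flink job would also need to flush on a timer and at checkpoints.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical helper: collects rows and hands them to a sink in batches,
// so that many rows share one import instead of triggering one import each.
class BatchBuffer<T> {
    private final int maxRows;
    private final Consumer<List<T>> sink;
    private final List<T> rows = new ArrayList<>();

    BatchBuffer(int maxRows, Consumer<List<T>> sink) {
        if (maxRows <= 0) {
            throw new IllegalArgumentException("maxRows must be positive");
        }
        this.maxRows = maxRows;
        this.sink = sink;
    }

    // Adds one row; flushes automatically when the threshold is reached.
    void add(T row) {
        rows.add(row);
        if (rows.size() >= maxRows) {
            flush();
        }
    }

    // Sends the buffered rows as one batch; does nothing if the buffer is empty.
    void flush() {
        if (rows.isEmpty()) {
            return;
        }
        sink.accept(new ArrayList<>(rows)); // copy, so the sink owns its batch
        rows.clear();
    }
}
```

The threshold trades latency for fewer imports. Keeping it moderate also matters for the -238 case, where a single batch that is too large is the problem.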

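Introduction to Stream Load

Overview

- Stream Load is a synchronous import method: the user sends an HTTP request to import a local file or a data stream into Doris. Stream Load performs the import synchronously and returns the result, so the user can tell from the response body whether the import succeeded.

- Stream Load is mainly suited to importing local files, or to importing data from a data stream through a program.

- In Stream Load, Doris selects one node as the Coordinator. This node receives the data and distributes it to the other data nodes.

- The user submits the import command over HTTP. If it is submitted to an FE, the FE forwards the request to a BE with an HTTP redirect. The user can also submit the import command directly to a specific BE.

- The final import result is returned to the user by the Coordinator BE.

- We compared Stream Load and Broker Load on import performance and development cost. Broker Load is slightly ahead on import performance, and the two show no clear difference in development cost. For large tables, Broker Load can import a whole table in one transaction, whereas Stream Load needs the data split up before import once a single table exceeds 10 GB. Because our tables are not large, we use Stream Load for now.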
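The last point says that Stream Load needs the data split up once it grows too large. One way to do that on the client is to group rows into chunks under a byte budget and send each chunk as its own import. This is only a sketch under assumptions: `LoadSplitter` and `maxBytes` are hypothetical, rows are assumed to be already serialized strings, and the right budget depends on the cluster.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that splits serialized rows into size-bounded chunks.
class LoadSplitter {

    // Groups rows into chunks whose total UTF-8 size stays at or below maxBytes.
    // A single row larger than maxBytes still gets a chunk of its own.
    static List<List<String>> split(List<String> rows, long maxBytes) {
        List<List<String>> chunks = new ArrayList<>();
        List<String> current = new ArrayList<>();
        long currentBytes = 0;
        for (String row : rows) {
            long size = row.getBytes(StandardCharsets.UTF_8).length;
            // Close the current chunk if this row would push it over the budget.
            if (!current.isEmpty() && currentBytes + size > maxBytes) {
                chunks.add(current);
                current = new ArrayList<>();
                currentBytes = 0;
            }
            current.add(row);
            currentBytes += size;
        }
        if (!current.isEmpty()) {
            chunks.add(current);
        }
        return chunks;
    }
}
```

Each chunk would then be sent with its own unique label, since every Stream Load request is a separate import.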

Supported data formats

- Stream Load currently supports two data formats: CSV (text) and JSON.

Prerequisites

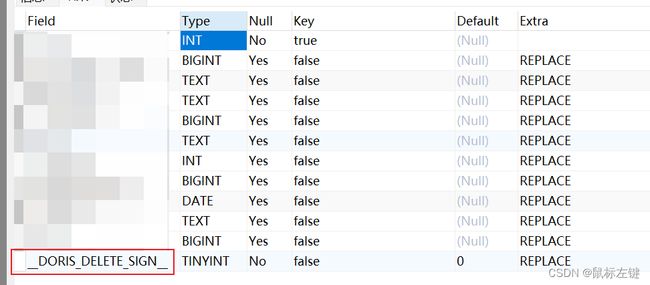

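- Confirm that the table supports batch delete. Setting a session variable makes the hidden columns visible:

Run SET show_hidden_columns=true and then desc tablename. If the output contains the __DORIS_DELETE_SIGN__ column, batch delete is supported; otherwise it is not.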
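The check above can also be done from a program over Doris's MySQL-protocol connection. The sketch below is an illustration, not part of the original article: `BatchDeleteCheck` is a hypothetical class, it assumes a JDBC `Connection` to the FE has already been opened, that `tableName` is a trusted identifier, and that the first column of the desc output is the column name.

```java
import java.sql.Connection;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that looks for the hidden delete-sign column.
class BatchDeleteCheck {
    static final String DELETE_SIGN = "__DORIS_DELETE_SIGN__";

    // True if the column names (as listed by desc) include the hidden column.
    static boolean supportsBatchDelete(List<String> columnNames) {
        return columnNames.contains(DELETE_SIGN);
    }

    // Runs the two statements from the text on an existing connection.
    // Assumption: the first result column of "DESC" holds the column name.
    static boolean supportsBatchDelete(Connection conn, String tableName) throws SQLException {
        List<String> names = new ArrayList<>();
        try (Statement st = conn.createStatement()) {
            st.execute("SET show_hidden_columns=true");
            try (ResultSet rs = st.executeQuery("DESC " + tableName)) {
                while (rs.next()) {
                    names.add(rs.getString(1));
                }
            }
        }
        return supportsBatchDelete(names);
    }
}
```

Both statements run on the same `Statement`, and therefore the same session, which matters because show_hidden_columns is a session variable.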

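How to enable batch delete

Batch delete can be enabled in either of two ways:

- Add enable_batch_delete_by_default=true to the FE configuration file and restart the FE. All tables created afterwards support batch delete. The option defaults to false.

- If the FE configuration above has not been changed, or for existing tables that do not support batch delete, use the following statement:

ALTER TABLE tablename ENABLE FEATURE "BATCH_DELETE" enables batch delete. The operation is essentially a schema change. It returns immediately, and show alter table column shows whether it has completed.

Code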

```java
import com.alibaba.fastjson.JSONObject;
import com.platform.common.model.RespContent;
import lombok.extern.slf4j.Slf4j;

import java.io.BufferedOutputStream;
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Base64;
import java.util.Calendar;
import java.util.List;
import java.util.UUID;

@Slf4j
public class DorisUltils {

    // Response statuses that count as a completed import
    private static final List<String> DORIS_SUCCESS_STATUS = Arrays.asList("Success", "Publish Timeout");

    // URL template: FE host:port, database, table
    public static String loadUrlStr = "http://%s/api/%s/%s/_stream_load";

    public static void loadBatch(String data, String jsonformat, String columns, String loadUrlStr, String mergeType, String db, String tbl, String authEncoding) {
        Calendar calendar = Calendar.getInstance();
        // Import label; must be globally unique
        String label = String.format("flink_import_%s%02d%02d_%02d%02d%02d_%s",
                calendar.get(Calendar.YEAR), calendar.get(Calendar.MONTH) + 1, calendar.get(Calendar.DAY_OF_MONTH),
                calendar.get(Calendar.HOUR_OF_DAY), calendar.get(Calendar.MINUTE), calendar.get(Calendar.SECOND),
                UUID.randomUUID().toString().replaceAll("-", ""));
        HttpURLConnection feConn = null;
        HttpURLConnection beConn = null;
        try {
            // Build the request and send it to the FE
            feConn = getConnection(loadUrlStr, label, columns, jsonformat, mergeType, authEncoding);
            int status = feConn.getResponseCode();
            // The FE answers with 307 TEMPORARY_REDIRECT and the location of a BE
            if (status != 307) {
                log.warn("status is not TEMPORARY_REDIRECT 307, status: {}, url: {}", status, loadUrlStr);
                return;
            }
            String location = feConn.getHeaderField("Location");
            if (location == null) {
                log.warn("redirect location is null");
                return;
            }
            // Build the request for the BE location returned by the FE
            beConn = getConnection(location, label, columns, jsonformat, mergeType, authEncoding);
            // Send the data to the BE
            try (OutputStream bos = new BufferedOutputStream(beConn.getOutputStream())) {
                bos.write(data.getBytes(StandardCharsets.UTF_8));
            }
            // Read the response
            status = beConn.getResponseCode();
            StringBuilder response = new StringBuilder();
            try (BufferedReader br = new BufferedReader(
                    new InputStreamReader(beConn.getInputStream(), StandardCharsets.UTF_8))) {
                String line;
                while ((line = br.readLine()) != null) {
                    response.append(line);
                }
            }
            String respContent = response.toString();
            RespContent respContentResult = JSONObject.parseObject(respContent, RespContent.class);
            // Check the result
            if (status == 200) {
                if (!checkStreamLoadStatus(respContentResult)) {
                    log.warn("Stream {} '{}.{}' Data Fail: {}, url: {}, reason: {}", mergeType, db, tbl, data, loadUrlStr, respContent);
                } else {
                    log.info("Stream {} '{}.{}' Data Success: {} rows in {} ms, data: {}", mergeType, db, tbl, respContentResult.getNumberLoadedRows(), respContentResult.getLoadTimeMs(), data);
                }
            } else {
                log.warn("Stream Load Request failed: {}, message: {}", status, respContentResult.getMessage());
            }
        } catch (Exception e) {
            // Log the label together with the exception and its stack trace
            log.warn("Stream Load failed with label: " + label, e);
        } finally {
            if (feConn != null) {
                feConn.disconnect();
            }
            if (beConn != null) {
                beConn.disconnect();
            }
        }
    }

    // Builds the HTTP connection with the Stream Load headers
    private static HttpURLConnection getConnection(String urlStr, String label, String columns, String jsonformat, String mergeType, String authEncoding) throws IOException {
        URL url = new URL(urlStr); // e.g. http://192.168.1.1:8030/api/db_name/table_name/_stream_load
        HttpURLConnection conn = (HttpURLConnection) url.openConnection();
        // Redirects are handled manually so the headers can be re-sent to the BE
        conn.setInstanceFollowRedirects(false);
        conn.setRequestMethod("PUT");
        conn.setRequestProperty("Authorization", "Basic " + authEncoding);
        conn.addRequestProperty("Expect", "100-continue");
        conn.addRequestProperty("Content-Type", "text/plain; charset=UTF-8");
        conn.addRequestProperty("label", label);
        conn.addRequestProperty("max_filter_ratio", "0");
        conn.addRequestProperty("strict_mode", "false");
        conn.addRequestProperty("columns", columns); // e.g. id,field_1,field_2,field_3
        conn.addRequestProperty("format", "json");
        // conn.addRequestProperty("jsonpaths", jsonformat);
        conn.addRequestProperty("strip_outer_array", "true");
        conn.addRequestProperty("merge_type", mergeType); // e.g. APPEND (add) or DELETE (delete)
        conn.setDoOutput(true);
        conn.setDoInput(true);
        return conn;
    }

    /**
     * Checks whether a Stream Load succeeded.
     *
     * @param respContent the response returned by Stream Load (parsed from JSON)
     * @return true if the status is a success status and every row was loaded
     */
    public static boolean checkStreamLoadStatus(RespContent respContent) {
        return DORIS_SUCCESS_STATUS.contains(respContent.getStatus())
                && respContent.getNumberTotalRows() == respContent.getNumberLoadedRows();
    }

    public static void main(String[] args) {
        // Placeholder credentials; replace with a real Doris account
        String username = "your_user";
        String password = "your_password";
        String authEncoding = Base64.getEncoder().encodeToString(String.format("%s:%s", username, password).getBytes(StandardCharsets.UTF_8));
        loadBatch("[{\"a\":\"1\",\"b\":\"2\"},{\"a\":\"3\",\"b\":\"4\"}]", "", "a,b", "http://192.168.1.1:8030/api/db_name/table_name/_stream_load", "APPEND", "db_name", "table_name", authEncoding);
    }
}
```

```java
package com.platform.common.model;

// Bean holding the fields of the Stream Load response that the caller checks
public class RespContent {

    private String status;
    private long numberLoadedRows;
    private long numberTotalRows;
    private String message;
    private long loadTimeMs;

    // No-arg constructor so a JSON library can create the bean and fill it through the setters
    public RespContent() {
    }

    public RespContent(String status, long numberLoadedRows, long numberTotalRows, String message, long loadTimeMs) {
        this.status = status;
        this.numberLoadedRows = numberLoadedRows;
        this.numberTotalRows = numberTotalRows;
        this.message = message;
        this.loadTimeMs = loadTimeMs;
    }

    public String getStatus() {
        return status;
    }

    public void setStatus(String status) {
        this.status = status;
    }

    public String getMessage() {
        return message;
    }

    public void setMessage(String message) {
        this.message = message;
    }

    public long getNumberLoadedRows() {
        return numberLoadedRows;
    }

    public void setNumberLoadedRows(long numberLoadedRows) {
        this.numberLoadedRows = numberLoadedRows;
    }

    public long getNumberTotalRows() {
        return numberTotalRows;
    }

    public void setNumberTotalRows(long numberTotalRows) {
        this.numberTotalRows = numberTotalRows;
    }

    public long getLoadTimeMs() {
        return loadTimeMs;
    }

    public void setLoadTimeMs(long loadTimeMs) {
        this.loadTimeMs = loadTimeMs;
    }
}
```

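Notes:

- The assembled data must have the form [{"a":"1","b":"2"},{"a":"3","b":"4"}].

- "strict_mode" = "false" or "true": whether strict mode is on. With true, for example, a time-typed column cannot be given null, only a valid time value.

- "merge_type" = "DELETE" or "APPEND": whether the rows are deleted or added.

- "label" must be unique.

- The FE forwards each request to a BE for execution, so port 8030 must be open on the FE machines and port 8040 on the BE machines.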
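The first and third notes can be sketched as two small helpers: one that assembles rows into the required JSON array, and one that picks merge_type from the kind of change event. This is an illustration under assumptions, not the original job's code: `StreamLoadPayload` and the operation names ("INSERT", "UPDATE", "DELETE") are hypothetical, rows are assumed to be flat maps of string values, and sending an update as APPEND assumes a table model in which a later row with the same key replaces the earlier one.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper for building the Stream Load request body.
class StreamLoadPayload {

    // Escapes the characters that must not appear raw inside a JSON string.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Serializes rows as one JSON array of flat objects; null values become JSON null.
    static String toJsonArray(List<Map<String, String>> rows) {
        List<String> objects = new ArrayList<>();
        for (Map<String, String> row : rows) {
            List<String> fields = new ArrayList<>();
            for (Map.Entry<String, String> e : row.entrySet()) {
                String value = e.getValue() == null ? "null" : "\"" + escape(e.getValue()) + "\"";
                fields.add("\"" + escape(e.getKey()) + "\":" + value);
            }
            objects.add("{" + String.join(",", fields) + "}");
        }
        return "[" + String.join(",", objects) + "]";
    }

    // Hypothetical mapping: delete events use DELETE, everything else uses APPEND.
    static String mergeTypeFor(String operation) {
        return "DELETE".equalsIgnoreCase(operation) ? "DELETE" : "APPEND";
    }
}
```

Because merge_type is a request header, one request carries either appends or deletes, so a batch would be grouped by operation before it is sent. A JSON library such as the fastjson dependency already used in the code above could replace the hand-written serialization.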

Example

Data source:

```json
[
  {
    "a": "name1",
    "b": "17868"
  },
  {
    "a": "name2",
    "b": "17870"
  }
]
```

Response:

```json
{
  "TxnId": 7149254,
  "Label": "flink_import_20220728_173414_073e6992f5504e408bf530154ad5808d",
  "Status": "Success",
  "Message": "OK",
  "NumberTotalRows": 800,
  "NumberLoadedRows": 800,
  "NumberFilteredRows": 0,
  "NumberUnselectedRows": 0,
  "LoadBytes": 12801,
  "LoadTimeMs": 42,
  "BeginTxnTimeMs": 0,
  "StreamLoadPutTimeMs": 0,
  "ReadDataTimeMs": 0,
  "WriteDataTimeMs": 22,
  "CommitAndPublishTimeMs": 19,
  "ErrorURL": "http://192.168.1.1:8042/api/_load_error_log?file=__shard_0/error_log_insert_stmt_db18266d4d9b4ee5-abb00ddd64bdf005_db18266d4d9b4ee5_abb00ddd64bdf005"
}
```

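Fields of the Stream Load result:

- TxnId: the transaction ID of the import. Users do not need to be aware of it.

- Label: the import label, specified by the user or generated by the system.

- Status: the completion status of the import.

  - "Success": the import succeeded.

  - "Publish Timeout": the import has also completed, but the data may become visible with a delay. No retry is needed.

  - "Label Already Exists": the label is a duplicate and must be changed.

  - "Fail": the import failed.

- Message: the import error message.

- NumberTotalRows: the total number of rows processed by the import.

- NumberLoadedRows: the number of rows imported successfully.

- NumberFilteredRows: the number of rows that failed the data quality check.

- NumberUnselectedRows: the number of rows filtered out by the where condition.

- LoadBytes: the number of bytes imported.

- LoadTimeMs: the time taken to complete the import, in milliseconds.

- BeginTxnTimeMs: the time spent asking the FE to begin a transaction, in milliseconds.

- StreamLoadPutTimeMs: the time spent asking the FE for the execution plan of the import, in milliseconds.

- ReadDataTimeMs: the time spent reading the data, in milliseconds.

- WriteDataTimeMs: the time spent writing the data, in milliseconds.

- CommitAndPublishTimeMs: the time spent asking the FE to commit and publish the transaction, in milliseconds.

- ErrorURL: if there are data quality problems, open this URL to see the specific rows in error.

Note: Because Stream Load is a synchronous import method, Doris does not record the import information in the system, and users cannot look up a Stream Load asynchronously through an import-viewing command. The caller must read the return value of the import request to get the result.

Doris documentation: https://doris.apache.org/zh-CN/docs/data-operate/import/import-way/stream-load-manual/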

注意:由于 Stream load 是同步的导入方式,所以并不会在 Doris 系统中记录导入信息,用户无法异步的通过查看导入命令看到 Stream load。使用时需监听创建导入请求的返回值获取导入结果。
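Since the response is the only record of the import, the caller has to turn its Status value into a decision on the spot. A minimal sketch of such a mapping follows; `LoadOutcome` and its `Action` values are hypothetical, and retrying after "Fail" is an assumed policy rather than something the Doris documentation prescribes.

```java
// Hypothetical helper that maps a Stream Load status to a follow-up action.
class LoadOutcome {

    enum Action { DONE, CHANGE_LABEL, RETRY }

    // Interprets the "Status" field of a Stream Load response.
    static Action decide(String status) {
        // Both statuses mean the import has completed; no retry is needed.
        if ("Success".equals(status) || "Publish Timeout".equals(status)) {
            return Action.DONE;
        }
        // The label was used before; a new label is required.
        if ("Label Already Exists".equals(status)) {
            return Action.CHANGE_LABEL;
        }
        // "Fail" or anything unexpected: treated here as retryable (assumed policy).
        return Action.RETRY;
    }
}
```

In practice the decision would also take NumberFilteredRows and ErrorURL into account, because a load can complete while still rejecting some rows.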

doris文档地址 :https://doris.apache.org/zh-CN/docs/data-operate/import/import-way/stream-load-manual/