Quick single-node Ceph (Octopus) deployment on CentOS 7


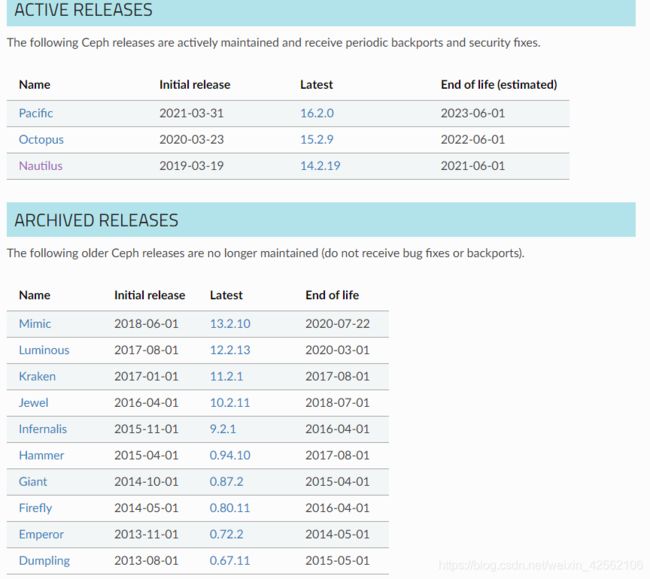

Upstream has stopped maintaining many older releases, so this post has been updated.

https://docs.ceph.com/en/latest/releases/

- 1 Start the deployment: configure the Aliyun repositories

rm /etc/yum.repos.d/* -rf

curl http://mirrors.aliyun.com/repo/Centos-7.repo > /etc/yum.repos.d/Centos-7.repo

curl http://mirrors.aliyun.com/repo/epel-7.repo > /etc/yum.repos.d/epel.repo

cat <<END >/etc/yum.repos.d/ceph.repo

[Ceph-SRPMS]

name=Ceph SRPMS packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/SRPMS/

enabled=1

gpgcheck=0

type=rpm-md

[Ceph-aarch64]

name=Ceph aarch64 packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/aarch64/

enabled=1

gpgcheck=0

type=rpm-md

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/

enabled=1

gpgcheck=0

type=rpm-md

[Ceph-x86_64]

name=Ceph x86_64 packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-octopus/el7/x86_64/

enabled=1

gpgcheck=0

type=rpm-md

END

yum clean all

yum makecache

systemctl stop firewalld
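The four repository stanzas above differ only in the architecture directory, so the file can also be generated with a loop. The following is a minimal sketch, not part of the original procedure; `render_ceph_repo` and its two arguments are hypothetical names introduced here for illustration.

```shell
# Print a ceph.repo body for one release and distribution.
# render_ceph_repo is an illustrative helper, not part of any Ceph tooling.
render_ceph_repo() {
    release=$1   # e.g. octopus
    dist=$2      # e.g. el7
    for arch in SRPMS aarch64 noarch x86_64; do
        printf '[Ceph-%s]\n' "$arch"
        printf 'name=Ceph %s packages\n' "$arch"
        printf 'baseurl=https://mirrors.aliyun.com/ceph/rpm-%s/%s/%s/\n' "$release" "$dist" "$arch"
        printf 'enabled=1\ngpgcheck=0\ntype=rpm-md\n\n'
    done
}

# Preview the result on stdout before writing it anywhere.
render_ceph_repo octopus el7
```

Redirecting the output to /etc/yum.repos.d/ceph.repo would produce the same stanzas as the heredoc, and switching to another release would only require changing the first argument.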

- 2 Bind the hostname

echo 192.168.8.137 $HOSTNAME >> /etc/hosts
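Running the `echo ... >> /etc/hosts` line more than once leaves duplicate entries. A possible guard is sketched below; `add_host_entry` is a hypothetical helper, and its optional third argument exists only so that it can be tried on a scratch file instead of the live /etc/hosts.

```shell
# Append "ip name" to a hosts file only if that name is not mapped yet.
# add_host_entry is an illustrative helper; the file defaults to /etc/hosts.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # Look for the name as a whole field on any non-comment line.
    if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Try it on a temporary file rather than the real one.
tmp_hosts=$(mktemp)
add_host_entry 192.168.8.137 node137 "$tmp_hosts"
add_host_entry 192.168.8.137 node137 "$tmp_hosts"   # second call adds nothing
cat "$tmp_hosts"
```

On the real host the call would be `add_host_entry 192.168.8.137 "$HOSTNAME"`.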

- 3 Install the packages and initialize the cluster

yum install ceph ceph-radosgw ceph-deploy -y

yum install python-setuptools -y

mkdir -p /data/ceph-cluster && cd /data/ceph-cluster

ceph-deploy new $HOSTNAME

- 4 Update the default configuration file

echo osd pool default size = 1 >> ceph.conf

echo osd crush chooseleaf type = 0 >> ceph.conf

echo osd max object name len = 256 >> ceph.conf

echo osd journal size = 128 >> ceph.conf
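For reference, the four overrides mean roughly the following: `osd pool default size = 1` keeps a single copy of each object, `osd crush chooseleaf type = 0` lets CRUSH place data across OSDs rather than across hosts, `osd max object name len` caps object name length, and `osd journal size` is given in MB. The sketch below appends them only when a key is not already present, so it can be re-run safely; `append_single_node_opts` is a hypothetical name, and the demonstration writes to a temporary file rather than the real ceph.conf.

```shell
# Append each single-node override unless the key is already set in the file.
# append_single_node_opts is an illustrative helper; the keys and values are
# the ones used in the step above.
append_single_node_opts() {
    conf=$1
    while IFS='|' read -r key value; do
        grep -q "^$key[[:space:]]*=" "$conf" 2>/dev/null && continue
        printf '%s = %s\n' "$key" "$value" >> "$conf"
    done <<'EOF'
osd pool default size|1
osd crush chooseleaf type|0
osd max object name len|256
osd journal size|128
EOF
}

# Demonstrate on a scratch file; running it twice does not duplicate lines.
scratch_conf=$(mktemp)
append_single_node_opts "$scratch_conf"
append_single_node_opts "$scratch_conf"
cat "$scratch_conf"
```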

- 5 Initialize the monitor, mgr, admin and mds nodes

ceph-deploy mon create-initial

ceph-deploy admin $HOSTNAME

ceph-deploy mgr create $HOSTNAME

ceph-deploy mds create $HOSTNAME

- 6 Add a disk

ceph-deploy disk zap $HOSTNAME /dev/sdb

ceph-deploy osd create --data /dev/sdb $HOSTNAME

[root@node137 ceph]# ceph -s | tail -n 10

mgr: node137(active, since 69m)

mds: mycephfs:1 {0=node137=up:active}

osd: 1 osds: 1 up (since 71m), 1 in (since 78m)

data:

pools: 4 pools, 51 pgs

objects: 24 objects, 11 KiB

usage: 1.0 GiB used, 19 GiB / 20 GiB avail

pgs: 51 active+clean
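Because `ceph-deploy disk zap` destroys whatever is on the target device, it may be worth checking first that the device is not mounted. The following is only a sketch under simple assumptions: `device_is_mounted` is a hypothetical helper that does a prefix match against the mount table, so it will not notice LVM or other indirect uses of the disk.

```shell
# Succeed if the device, or any partition of it, appears as a mount source.
# device_is_mounted is an illustrative helper; the mount table defaults to
# /proc/mounts and can be replaced by a sample file for testing.
device_is_mounted() {
    dev=$1
    mounts=${2:-/proc/mounts}
    awk -v d="$dev" 'index($1, d) == 1 { found = 1 } END { exit !found }' "$mounts" 2>/dev/null
}

if device_is_mounted /dev/sdb; then
    echo "/dev/sdb is mounted; refusing to zap" >&2
else
    echo "/dev/sdb is not mounted"
fi
```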

- 7 Create the Ceph filesystem

[root@node137 ceph-cluster]# ceph osd pool create cephfs_data 12

pool 'cephfs_data' created

[root@node137 ceph-cluster]# ceph osd pool create cephfs_metadata 6

pool 'cephfs_metadata' created

[root@node137 ceph-cluster]# ceph fs new mycephfs cephfs_metadata cephfs_data

new fs with metadata pool 3 and data pool 2

Note: ceph.client.admin.keyring must be copied to the /etc/ceph/ directory on the client before the client can mount the filesystem.

# Copy between servers

scp ceph.client.admin.keyring [email protected]:/etc/ceph/

The file storage configuration is now complete.


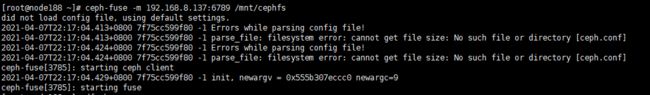

1 Install the client

yum install -y ceph-fuse
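With ceph-fuse installed and the keyring in /etc/ceph/, the filesystem can be mounted from the client. The sketch below only prints the command for review. It assumes the monitor is reachable at the address from step 2 on the default port 6789, and `/mnt/cephfs` is a hypothetical mount point that would need to exist; `cephfs_mount_cmd` is a name introduced here.

```shell
# Build the ceph-fuse command line for a monitor address and a mount point.
# The function prints the command instead of running it.
cephfs_mount_cmd() {
    mon_host=$1
    mount_point=$2
    printf 'ceph-fuse -m %s:6789 %s\n' "$mon_host" "$mount_point"
}

cephfs_mount_cmd 192.168.8.137 /mnt/cephfs
```

Once the printed command looks right, it can be run as root on the client.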

[root@node137 ceph]# ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 20 GiB 19 GiB 4.4 MiB 1.0 GiB 5.02

TOTAL 20 GiB 19 GiB 4.4 MiB 1.0 GiB 5.02

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

device_health_metrics 1 1 0 B 0 0 B 0 18 GiB

cephfs_data 2 12 0 B 0 0 B 0 18 GiB

cephfs_metadata 3 6 11 KiB 23 576 KiB 0 18 GiB

rbd_pool 4 32 19 B 1 64 KiB 0 18 GiB

- Using RBD block storage

1 Create and initialize the pool

[root@node137 ceph]# ceph osd pool create rbd_pool 10 10

pool 'rbd_pool' created

[root@node137 ceph]# rbd pool init rbd_pool

2 Create the block device

rbd create -p rbd_pool --image rbd-demon.img --size 2G

List the images

[root@node137 ceph]# rbd -p rbd_pool ls

rbd-demon.img

Show the image details

[root@node137 ceph]# rbd info rbd_pool/rbd-demon.img

rbd image 'rbd-demon.img':

size 2 GiB in 512 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 5e7e4a6d5ae2

block_name_prefix: rbd_data.5e7e4a6d5ae2

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Wed Apr 7 23:08:06 2021

access_timestamp: Wed Apr 7 23:08:06 2021

modify_timestamp: Wed Apr 7 23:08:06 2021
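The figure "2 GiB in 512 objects" follows from the object size: order 22 means each object is 2^22 bytes (4 MiB), and 2 GiB divided by 4 MiB is 512. A small sketch of that arithmetic, with `rbd_object_count` as a hypothetical helper name:

```shell
# Compute how many objects an image of size_gib GiB occupies at a given order.
# rbd_object_count is an illustrative helper, not an rbd subcommand.
rbd_object_count() {
    size_gib=$1
    order=$2
    object_bytes=1
    i=0
    # Raise 2 to the power of "order" by repeated doubling.
    while [ "$i" -lt "$order" ]; do
        object_bytes=$((object_bytes * 2))
        i=$((i + 1))
    done
    echo $((size_gib * 1024 * 1024 * 1024 / object_bytes))
}

rbd_object_count 2 22   # prints 512
```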

Since this storage is provided for use by k8s, the setup ends here.

The following demonstrates deleting an image.

# Create

[root@node137 ceph]# rbd create -p rbd_pool --image rbd-demonxxx.img --size 1G

[root@node137 ceph]# rbd -p rbd_pool ls

rbd-demon.img

rbd-demonxxx.img

# Delete

[root@node137 ceph]# rbd rm -p rbd_pool --image rbd-demonxxx.img

Removing image: 100% complete...done.

[root@node137 ceph]# rbd -p rbd_pool ls

rbd-demon.img

### Clearing the warnings

[root@node137 ceph]# ceph -s

cluster:

id: 6514a383-fa74-43b1-9333-82d53c4bacd5

health: HEALTH_WARN

Module 'restful' has failed dependency: No module named 'pecan' <<<<----- warning

4 pool(s) have no replicas configured

2 pool(s) have non-power-of-two pg_num

services:

mon: 1 daemons, quorum node137 (age 107m)

mgr: node137(active, since 105m)

mds: mycephfs:1 {0=node137=up:active}

osd: 1 osds: 1 up (since 107m), 1 in (since 114m)

data:

pools: 4 pools, 51 pgs

objects: 29 objects, 11 KiB

usage: 1.0 GiB used, 19 GiB / 20 GiB avail

pgs: 51 active+clean

The warning details indicate that the pecan package needs to be installed.

[root@node137 ceph]# ceph health detail

HEALTH_WARN Module 'restful' has failed dependency: No module named 'pecan'; 4 pool(s) have no replicas configured; 2 pool(s) have non-power-of-two pg_num

[WRN] MGR_MODULE_DEPENDENCY: Module 'restful' has failed dependency: No module named 'pecan'

Module 'restful' has failed dependency: No module named 'pecan'

[WRN] POOL_NO_REDUNDANCY: 4 pool(s) have no replicas configured

pool 'device_health_metrics' has no replicas configured

pool 'cephfs_data' has no replicas configured

pool 'cephfs_metadata' has no replicas configured

pool 'rbd_pool' has no replicas configured

[WRN] POOL_PG_NUM_NOT_POWER_OF_TWO: 2 pool(s) have non-power-of-two pg_num

pool 'cephfs_data' pg_num 12 is not a power of two

pool 'cephfs_metadata' pg_num 6 is not a power of two
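The POOL_PG_NUM_NOT_POWER_OF_TWO warning comes from the pg_num values 12 and 6 chosen when the CephFS pools were created. One way to pick replacement values is to round each up to the next power of two. The sketch below does that and only prints the resulting `ceph osd pool set` commands rather than running them; `next_pow2` is a hypothetical helper.

```shell
# Round a positive integer up to the nearest power of two.
next_pow2() {
    n=$1
    p=1
    while [ "$p" -lt "$n" ]; do
        p=$((p * 2))
    done
    echo "$p"
}

# Pool names and current pg_num values are taken from the health output above.
for pool_pg in cephfs_data:12 cephfs_metadata:6; do
    pool=${pool_pg%%:*}
    pg=${pool_pg##*:}
    echo "ceph osd pool set $pool pg_num $(next_pow2 "$pg")"
done
```

This prints commands that set cephfs_data to 16 and cephfs_metadata to 8, which can be reviewed before running them on the cluster.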

Install the missing packages

pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple pecan

pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple werkzeug
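If other mgr modules report missing dependencies, the module names can be pulled out of the health text instead of being read by eye. A minimal sketch, assuming the message format shown in the output above; `missing_py_modules` is a hypothetical helper that reads the text on stdin.

```shell
# Read `ceph health detail` text on stdin and print each missing Python
# module name once. missing_py_modules is an illustrative helper.
missing_py_modules() {
    sed -n "s/.*No module named '\([^']*\)'.*/\1/p" | sort -u
}

# Sample line copied from the health output above.
printf '%s\n' "[WRN] MGR_MODULE_DEPENDENCY: Module 'restful' has failed dependency: No module named 'pecan'" | missing_py_modules
```

On a live cluster the input would come from `ceph health detail | missing_py_modules`.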

The pecan warning is gone (the service ages below show that the daemons had been restarted):

[root@node137 ~]# ceph -s

cluster:

id: 6514a383-fa74-43b1-9333-82d53c4bacd5

health: HEALTH_WARN

4 pool(s) have no replicas configured

2 pool(s) have non-power-of-two pg_num

services:

mon: 1 daemons, quorum node137 (age 114s)

mgr: node137(active, since 92s)

mds: mycephfs:1 {0=node137=up:active}

osd: 1 osds: 1 up (since 106s), 1 in (since 118m)

data:

pools: 4 pools, 51 pgs

objects: 29 objects, 18 KiB

usage: 1.0 GiB used, 19 GiB / 20 GiB avail

pgs: 51 active+clean

io:

client: 170 B/s wr, 0 op/s rd, 0 op/s wr