LangChain 2: Modular Prompt Templates and a Streamlit Website for Naming Animals

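The previous section used LangChain to generate names for animals.

However, naming a different animal still meant editing the source code every time, so this section uses a modular prompt template instead.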

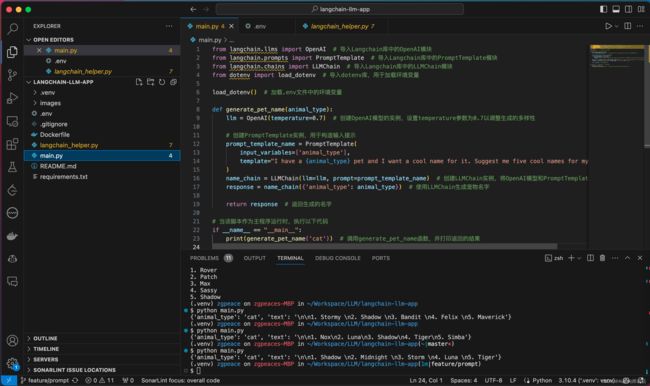

1. Add a PromptTemplate

```python
from langchain.llms import OpenAI  # OpenAI LLM wrapper from LangChain
from langchain.prompts import PromptTemplate  # prompt template class
from langchain.chains import LLMChain  # chain that combines an LLM with a prompt
from dotenv import load_dotenv  # loads environment variables from a .env file

load_dotenv()  # load variables such as OPENAI_API_KEY from .env

def generate_pet_name(animal_type):
    # temperature=0.7 allows some variety in the generated names
    llm = OpenAI(temperature=0.7)

    # Prompt template with a single input variable, animal_type
    prompt_template_name = PromptTemplate(
        input_variables=['animal_type'],
        template="I have a {animal_type} pet and I want a cool name for it. Suggest me five cool names for my pet."
    )

    # Combine the model and the prompt template into a chain
    name_chain = LLMChain(llm=llm, prompt=prompt_template_name)

    # Run the chain; the result is a dict with the input and the generated 'text'
    response = name_chain({'animal_type': animal_type})
    return response

# Run this only when the script is executed directly
if __name__ == "__main__":
    print(generate_pet_name('cat'))
```
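To show what the template buys us, here is a standard-library-only sketch of the idea behind PromptTemplate: the prompt text is written once with an `{animal_type}` placeholder, and only the value changes per call. It does not use or reproduce LangChain's implementation; `template_variables` and `render` are hypothetical helpers built on `string.Formatter` and `str.format` (PromptTemplate's default `f-string` format uses the same placeholder syntax).

```python
from string import Formatter

# Same template text as in generate_pet_name above.
TEMPLATE = ("I have a {animal_type} pet and I want a cool name for it. "
            "Suggest me five cool names for my pet.")


def template_variables(template: str) -> set:
    """Return the names of all {placeholders} in a str.format-style template."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def render(template: str, **values: str) -> str:
    """Hypothetical helper: fill the template, rejecting missing or unexpected variables."""
    expected = template_variables(template)
    provided = set(values)
    missing, extra = expected - provided, provided - expected
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)}, unexpected={sorted(extra)}")
    return template.format(**values)


print(render(TEMPLATE, animal_type="cat"))
print(render(TEMPLATE, animal_type="dog"))  # a new animal needs no source edit
```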

Run and output (two runs, the second with `'cow'` passed instead of `'cat'`):

```
$ python main.py
{'animal_type': 'cat', 'text': '\n\n1. Shadow \n2. Midnight \n3. Storm \n4. Luna \n5. Tiger'}

$ python main.py
{'animal_type': 'cow', 'text': '\n\n1. Milky\n2. Mooly\n3. Bessie\n4. Daisy\n5. Buttercup'}
```
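The `text` field is the raw completion: a numbered list preceded by blank lines. If you want the names as a Python list, a minimal sketch, assuming the model keeps the "N. Name" format shown above (nothing guarantees it will), could look like this; `parse_names` is a hypothetical helper:

```python
import re

# Raw 'text' value copied from the cat run above.
raw = '\n\n1. Shadow \n2. Midnight \n3. Storm \n4. Luna \n5. Tiger'


def parse_names(text: str) -> list:
    """Turn 'N. Name' lines into a list of names; lines without a leading number are skipped."""
    names = []
    for line in text.splitlines():
        match = re.match(r"\s*\d+[.)]\s*(.+?)\s*$", line)
        if match:
            names.append(match.group(1))
    return names


print(parse_names(raw))  # → ['Shadow', 'Midnight', 'Storm', 'Luna', 'Tiger']
```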

2. Add a new parameter, pet_color

```python
from langchain.llms import OpenAI  # OpenAI LLM wrapper from LangChain
from langchain.prompts import PromptTemplate  # prompt template class
from langchain.chains import LLMChain  # chain that combines an LLM with a prompt
from dotenv import load_dotenv  # loads environment variables from a .env file

load_dotenv()  # load variables such as OPENAI_API_KEY from .env

def generate_pet_name(animal_type, pet_color):
    # temperature=0.7 allows some variety in the generated names
    llm = OpenAI(temperature=0.7)

    # Two input variables; each must appear as a {placeholder} in the template text,
    # otherwise its value never reaches the model
    prompt_template_name = PromptTemplate(
        input_variables=['animal_type', 'pet_color'],
        template="I have a {animal_type} pet and I want a cool name for it, it is {pet_color} in color. Suggest me five cool names for my pet."
    )

    # Combine the model and the prompt template into a chain
    name_chain = LLMChain(llm=llm, prompt=prompt_template_name)

    # Run the chain with both variables
    response = name_chain({'animal_type': animal_type, 'pet_color': pet_color})
    return response

# Run this only when the script is executed directly
if __name__ == "__main__":
    print(generate_pet_name('cow', 'black'))
```

Output:

```
$ python main.py
{'animal_type': 'cow', 'pet_color': 'black', 'text': '\n\n1. Daisy\n2. Maverick\n3. Barnaby\n4. Bessie\n5. Bossy'}
```
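Listing `pet_color` in `input_variables` does not by itself put the color into the prompt; the value only reaches the model if the `template` string contains a `{pet_color}` placeholder. A stdlib-only sketch of a check for declared-but-unused variables follows; `unused_variables` is a hypothetical helper, not a LangChain function, and the `with_color` wording is just one option.

```python
from string import Formatter


def unused_variables(template: str, input_variables: list) -> list:
    """Return declared input variables that have no {placeholder} in the template."""
    placeholders = {field for _, field, _, _ in Formatter().parse(template) if field}
    return [name for name in input_variables if name not in placeholders]


without_color = ("I have a {animal_type} pet and I want a cool name for it. "
                 "Suggest me five cool names for my pet.")
with_color = ("I have a {animal_type} pet and I want a cool name for it, "
              "it is {pet_color} in color. Suggest me five cool names for my pet.")

print(unused_variables(without_color, ["animal_type", "pet_color"]))  # → ['pet_color']
print(unused_variables(with_color, ["animal_type", "pet_color"]))     # → []
```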

3. Refactor the code

Move the logic into langchain_helper.py and clear out main.py.

4. Generate a web page with Streamlit

main.py:

```python
import langchain_helper as lch
import streamlit as st

st.title("Pets name generator")
```

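Add the Python framework's bin directory to PATH in ~/.zshrc so the shell can find the `streamlit` command:

```shell
export PATH="/Library/Frameworks/Python.framework/Versions/3.10/bin:$PATH"
```

Then reload the configuration:

```shell
source ~/.zshrc
```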
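The framework path above is specific to a python.org Python 3.10 install on macOS. To find the directory where pip places console scripts such as `streamlit` for whichever interpreter you are running, and to check whether `streamlit` is already on PATH, here is a small stdlib sketch. It assumes a default install scheme; `pip install --user` and some distro Pythons put scripts elsewhere. `scripts_dir` and `streamlit_on_path` are hypothetical helper names.

```python
import shutil
import sysconfig


def scripts_dir() -> str:
    """Directory for console scripts (e.g. `streamlit`) under the default install scheme."""
    return sysconfig.get_path("scripts")


def streamlit_on_path() -> bool:
    """True if a `streamlit` executable can be found on the current PATH."""
    return shutil.which("streamlit") is not None


print("scripts dir:", scripts_dir())
print("streamlit on PATH:", streamlit_on_path())
```

Alternatively, `python -m streamlit run main.py` starts the app through the interpreter and avoids the PATH lookup altogether.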

Then start the app:

```
$ streamlit run main.py

Welcome to Streamlit!

If you’d like to receive helpful onboarding emails, news, offers, promotions,
and the occasional swag, please enter your email address below. Otherwise,
leave this field blank.

Email:

You can find our privacy policy at https://streamlit.io/privacy-policy

Summary:
- This open source library collects usage statistics.
- We cannot see and do not store information contained inside Streamlit apps,
  such as text, charts, images, etc.
- Telemetry data is stored in servers in the United States.
- If you'd like to opt out, add the following to ~/.streamlit/config.toml,
  creating that file if necessary:

  [browser]
  gatherUsageStats = false

You can now view your Streamlit app in your browser.

Local URL: http://localhost:8501
Network URL: http://192.168.50.10:8501

For better performance, install the Watchdog module:

$ xcode-select --install
$ pip install watchdog
```

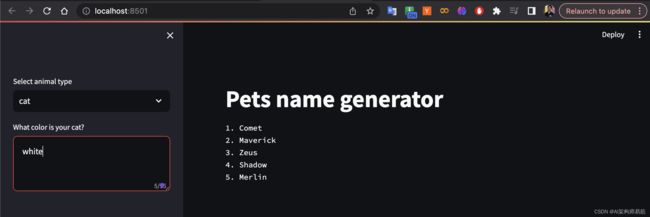

5. Connect the Streamlit page inputs to LangChain to generate names

main.py:

```python
import langchain_helper as lch  # helper module that defines generate_pet_name
import streamlit as st

st.title("Pets name generator")  # page title

# Sidebar: choose the animal type
animal_type = st.sidebar.selectbox("Select animal type", ["dog", "cat", "cow", "horse", "pig", "sheep"])

# Sidebar: text area for the pet's color; the label mentions the chosen animal
if animal_type in ['dog', 'cat', 'cow', 'horse', 'pig', 'sheep']:
    pet_color = st.sidebar.text_area(label=f"What color is your {animal_type}?", max_chars=15)
else:
    # Generic label; only reached if this list and the selectbox options diverge
    pet_color = st.sidebar.text_area(label="What color is your pet?", max_chars=15)

# Once a color has been entered, generate names and display them
if pet_color:
    response = lch.generate_pet_name(animal_type, pet_color)
    st.text(response['pet_name'])  # 'pet_name' is the output_key set in langchain_helper.py
```
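`if pet_color:` is true for any non-empty string, so a color consisting only of spaces or newlines would still trigger a model call. Pulling the widget logic into plain functions makes such cases easy to check without running Streamlit. A minimal sketch; `ANIMALS`, `color_label` and `should_generate` are hypothetical names, and one option is to guard the call with `if should_generate(pet_color):`.

```python
ANIMALS = ["dog", "cat", "cow", "horse", "pig", "sheep"]


def color_label(animal_type: str) -> str:
    """Label for the color text area, mirroring the if/else in main.py."""
    if animal_type in ANIMALS:
        return f"What color is your {animal_type}?"
    return "What color is your pet?"


def should_generate(pet_color: str) -> bool:
    """Only call the model when the color contains something other than whitespace."""
    return bool(pet_color.strip())


print(color_label("cow"))        # → What color is your cow?
print(should_generate("   "))    # → False
print(should_generate("black"))  # → True
```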

langchain_helper.py implementation:

```python
from langchain.llms import OpenAI  # OpenAI LLM wrapper from LangChain
from langchain.prompts import PromptTemplate  # prompt template class
from langchain.chains import LLMChain  # chain that combines an LLM with a prompt
from dotenv import load_dotenv  # loads environment variables from a .env file

load_dotenv()  # load variables such as OPENAI_API_KEY from .env

def generate_pet_name(animal_type, pet_color):
    # temperature=0.7 allows some variety in the generated names
    llm = OpenAI(temperature=0.7)

    # Two input variables, both used as placeholders in the template text
    prompt_template_name = PromptTemplate(
        input_variables=['animal_type', 'pet_color'],
        template="I have a {animal_type} pet and I want a cool name for it, it is {pet_color} in color. Suggest me five cool names for my pet."
    )

    # output_key='pet_name' stores the generated text under response['pet_name'] instead of 'text'
    name_chain = LLMChain(llm=llm, prompt=prompt_template_name, output_key='pet_name')

    # Run the chain with both variables
    response = name_chain({'animal_type': animal_type, 'pet_color': pet_color})
    return response

# Run this only when the script is executed directly
if __name__ == "__main__":
    print(generate_pet_name('cow', 'black'))
```
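main.py reads `response['pet_name']` rather than `response['text']` because of `output_key='pet_name'`. The responses printed earlier show that calling the chain returns the input variables together with the output, which is stored under `text` by default. Below is a toy, offline sketch of that behavior; `MiniChain` and `fake_model` are hypothetical and are not LangChain classes.

```python
from typing import Callable, Dict


class MiniChain:
    """Hypothetical stand-in for LLMChain: fill a template, call a model, return inputs plus one output."""

    def __init__(self, model: Callable[[str], str], template: str, output_key: str = "text"):
        self.model = model
        self.template = template
        self.output_key = output_key

    def __call__(self, inputs: Dict[str, str]) -> Dict[str, str]:
        prompt = self.template.format(**inputs)
        # Input keys come back next to the generated text, as in the printed responses.
        return {**inputs, self.output_key: self.model(prompt)}


def fake_model(prompt: str) -> str:
    """Offline fake LLM so the sketch runs without an API key; it ignores the prompt."""
    return "\n\n1. Ink\n2. Domino"


TEMPLATE = "I have a {animal_type} pet and it is {pet_color} in color. Suggest me five cool names for my pet."
inputs = {"animal_type": "cow", "pet_color": "black"}

print(list(MiniChain(fake_model, TEMPLATE)(inputs)))                         # → ['animal_type', 'pet_color', 'text']
print(list(MiniChain(fake_model, TEMPLATE, output_key="pet_name")(inputs)))  # → ['animal_type', 'pet_color', 'pet_name']
```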

References

- https://github.com/zgpeace/pets-name-langchain/tree/feature/prompt

- https://youtu.be/lG7Uxts9SXs?si=H1CISGkoYiKRSF5V

- Streamlit - https://streamlit.io