Protein Structure Representation Learning by Geometric Pretraining


Abstract

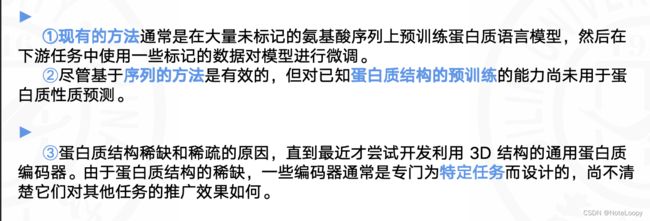

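Learning effective protein representations is critical in a variety of biological tasks, such as predicting protein function or structure. Existing approaches usually pretrain protein language models on a large number of unlabeled amino acid sequences and then finetune the models with some labeled data in downstream tasks. Despite the effectiveness of sequence-based approaches, the power of pretraining on the smaller number of known protein structures has not been explored for protein property prediction, even though protein structures are known to be determinants of protein function. In this paper, we propose to pretrain protein representations according to their 3D structures. We first present a simple yet effective encoder to learn the geometric features of proteins, and we pretrain the protein graph encoder by leveraging multiview contrastive learning and different self-prediction tasks.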

1. Introduction

Proteins are workhorses of the cell and are implicated in a broad range of applications ranging from therapeutics to materials. They consist of a linear chain of amino acids (residues) folding into a specific 3D conformation. Due to the advent of low-cost sequencing technologies, a massive volume of new protein sequences has been discovered in recent years. As the function annotation of a new protein sequence remains costly and time-consuming, accurate and efficient in silico protein function annotation methods are needed to bridge the existing sequence-function gap.


As a large number of protein functions are governed by their folded structures, several data-driven approaches rely on learning representations of the protein structures, which then can be used for a variety of tasks such as protein design, structure classification, model quality assessment, and function prediction. Unfortunately, due to the challenge of experimental protein structure determination, the number of reported protein structures is orders of magnitude lower than the size of datasets in other machine learning application domains. For example, 182K structures exist in the Protein Data Bank (PDB), versus 47M protein sequences in Pfam and 10M annotated images in ImageNet.


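In other words, compared with datasets in other domains, relatively little protein structure data is available for training and research, which poses challenges when developing machine learning models.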

As a remedy to this problem, recent works have leveraged the large volume of unlabeled protein sequence data to learn effective representations of known proteins (Bepler & Berger, 2019; 2021; Rives et al., 2021; Elnaggar et al., 2021). A number of studies have pretrained protein encoders on millions of sequences via self-supervised learning (Hadsell et al., 2006; Devlin et al., 2018; Chen et al., 2020). However, these methods neither directly capture nor leverage the available protein structural information, which is known to be a determinant of protein function.


To better utilize this critical structural information, several structure-based protein encoders have been proposed. However, due to the scarcity of protein structures, these encoders are often specifically designed for individual tasks, **and it is unclear how well they generalize to other tasks.** Because of this scarcity and sparsity of protein structures, few attempts had been made until recently to develop universal protein encoders that exploit 3D structures. Thanks to recent advances in highly accurate deep learning-based protein structure prediction methods, it is now possible to efficiently predict the structures of a large number of protein sequences with reasonable confidence.

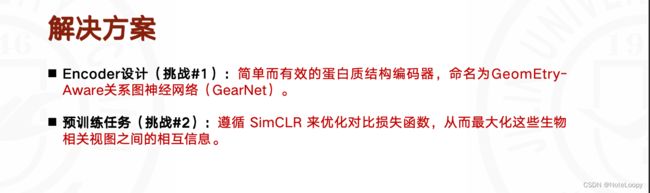

Motivated by this development, **we develop a universal protein encoder pretrained on the largest possible number of protein structures (both experimental and predicted) that is able to generalize to a variety of property prediction tasks.** We propose a simple yet effective structure-based encoder called GeomEtry-Aware Relational Graph Neural Network (GearNet), which encodes spatial information by adding different types of sequential or structural edges and then performs relational message passing on protein residue graphs. Inspired by recent geometry-based encoders for small molecules (Klicpera et al., 2020), we propose an edge message passing mechanism to enhance the protein structure encoder.

We further introduce different geometric pretraining methods for learning the protein structure encoder by following popular self-supervised learning frameworks such as contrastive learning and self-prediction. In the contrastive learning scenario, we aim to maximize the similarity between the learned representations of different augmented views from the same protein, while minimizing the similarity between those from different proteins. For self-prediction, the model performs masked prediction of different geometric or biochemical properties, such as residue types, Euclidean distances, angles and dihedral angles, during training.


Extensive experiments on several existing benchmarks, including Enzyme Commission number prediction (Gligorijević et al., 2021), Gene Ontology term prediction (Gligorijević et al., 2021), fold classification (Hou et al., 2018) and reaction classification (Hermosilla et al., 2021), verify that GearNet augmented with edge message passing consistently and significantly outperforms existing protein encoders on most tasks in a supervised setting. Further, by employing the proposed pretraining methods, our model trained on fewer than a million samples achieves state-of-the-art results on different tasks, even better than sequence-based encoders pretrained on million- or billion-scale datasets.


2. Related Work

Previous works seek to learn protein representations based on different modalities of proteins, including amino acid sequences (Rao et al., 2019; Elnaggar et al., 2021; Rives et al., 2021), multiple sequence alignments (MSAs) (Rao et al., 2021; Biswas et al., 2021; Meier et al., 2021) and protein structures (Hermosilla et al., 2021; Gligorijević et al., 2021; Somnath et al., 2021). These works share the common goal of learning informative protein representations that can benefit various downstream applications, like predicting protein function (Gligorijević et al., 2021; Rives et al., 2021) and protein-protein interaction (Wang et al., 2019), as well as designing protein sequences (Biswas et al., 2021).


2.1. Sequence-Based Methods

Sequence-based protein representation learning is mainly inspired by the methods of modeling natural language sequences. Recent methods aim to capture the biochemical and co-evolutionary knowledge underlying a large-scale protein sequence corpus by self-supervised pretraining, and such knowledge is then transferred to specific downstream tasks by finetuning. Typical pretraining objectives explored in existing methods include next amino acid prediction (Alley et al., 2019; Elnaggar et al., 2021), masked language modeling (MLM) (Rao et al., 2019; Elnaggar et al., 2021; Rives et al., 2021), pairwise MLM (He et al., 2021) and contrastive predictive coding (CPC) (Lu et al., 2020). Compared to sequence-based approaches that learn in the whole sequence space, MSA-based methods (Rao et al., 2021; Biswas et al., 2021; Meier et al., 2021) leverage the sequences within a protein family to capture the conserved and variable regions of homologous sequences, which imply specific structures and functions of the protein family.


2.2. Structure-Based Methods

Although sequence-based methods pretrained on large-scale databases perform well, structure-based methods should be, in principle, a better solution to learning an informative protein representation, as the function of a protein is determined by its structure. This line of works seeks to encode spatial information in protein structures by 3D CNNs (Derevyanko et al., 2018) or graph neural networks (GNNs) (Gligorijević et al., 2021; Baldassarre et al., 2021; Jing et al., 2021). Among these methods, IEConv (Hermosilla et al., 2021) tries to fit the inductive bias of protein structure modeling by introducing a graph convolution layer that incorporates intrinsic and extrinsic distances between nodes. Another potential direction is to extract features from protein surfaces (Gainza et al., 2020; Sverrisson et al., 2021; Dai & Bailey-Kellogg, 2021). Somnath et al. (2021) combined the advantages of both worlds and proposed a parameter-efficient multi-scale model. Besides, there are also works that enhance pretrained sequence-based models by incorporating structural information in the pretraining stage (Bepler & Berger, 2021) or finetuning stage (Wang et al., 2021).


**Despite progress in the design of structure-based encoders, there are few works focusing on structure-based pretraining for proteins.** To the best of our knowledge, the only attempt is a concurrent work (Hermosilla & Ropinski, 2022), which applies a contrastive learning method on an encoder (Hermosilla et al., 2021) relying on cumbersome convolutional and pooling layers. Compared with these existing works, our proposed encoder is conceptually simpler and more effective on many different tasks, thanks to the proposed relational graph convolutional layer and edge message passing layer, which efficiently capture sequential and structural information. Furthermore, we introduce five structure-based pretraining methods within the contrastive learning and self-prediction frameworks, which can serve as a solid starting point for enabling self-supervised learning on protein structures.

3. Structure-Based Protein Encoder

Existing protein encoders are either designed for specific tasks or cumbersome to pretrain due to their dependency on computationally expensive convolutions. In contrast, here we propose a simple yet effective protein structure encoder, named GeomEtry-Aware Relational Graph Neural Network (GearNet). We utilize edge message passing, which is novel in the field of protein structure modeling, to enhance the effectiveness of GearNet; previous works (Hermosilla et al., 2021; Somnath et al., 2021) only consider message passing among residues or atoms.


3.1. Geometry-Aware Relational Graph Neural Network

Given protein structures, our model aims to learn representations encoding their spatial and chemical information. These representations should be invariant under translations and rotations in 3D space. To achieve this requirement, we first construct our protein graph based on the spatial features invariant under these transformations.


Protein graph construction. We represent the structure of a protein as a residue-level relational graph G = (V, E, R), where V and E denote the sets of nodes and edges, respectively, and R is the set of edge types. We use (i, j, r) to denote the edge from node i to node j with type r. We use n and m to denote the number of nodes and edges, respectively. In this work, each node in the protein graph represents the alpha carbon of a residue, and the 3D coordinates of all nodes are $x \in \mathbb{R}^{n \times 3}$. We use $f_i$ and $f_{(i,j,r)}$ to denote the features of node i and edge (i, j, r), respectively, in which residue types, sequential distances and spatial distances are considered.


Then, we add three different types of directed edges into our graphs: sequential edges, radius edges and K-nearest neighbor edges. Among these, sequential edges will be further divided into 5 types of edges based on the relative sequential distance d ∈ {−2, −1, 0, 1, 2} between two end nodes, where we add sequential edges only between the nodes within the sequential distance of 2. These edge types reflect different geometric properties, which all together yield a comprehensive featurization of proteins. More details of the graph and feature construction process can be found in Appendix C.1.

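
A minimal Python sketch of this graph construction, assuming alpha-carbon coordinates as input. Relations 0 to 4 are the five sequential edge types (offset d = j − i, shifted by 2), relation 5 is a radius edge and relation 6 a K-nearest-neighbor edge, giving |R| = 7. The defaults `radius=10.0`, `k=10` and the filter `min_seq_dist=5`, which drops spatial edges between residues that are close in sequence, are hypothetical values for illustration; Appendix C.1 holds the actual settings.

```python
import math

def build_protein_graph(coords, radius=10.0, k=10, min_seq_dist=5):
    """Sketch of a residue-level relational graph with 7 edge types.

    coords: list of (x, y, z) alpha-carbon coordinates, one per residue.
    Returns sorted directed edges (i, j, r) from node i to node j:
      r = 0..4 -> sequential edge with offset d = j - i in {-2, ..., 2} (r = d + 2)
      r = 5    -> radius edge (spatial distance below `radius`)
      r = 6    -> k-nearest-neighbour edge
    radius, k and min_seq_dist are hypothetical defaults, not the paper's values.
    """
    n = len(coords)
    edges = set()
    # Sequential edges: one relation per relative offset d (d = 0 is a self-loop).
    for i in range(n):
        for d in range(-2, 3):
            j = i + d
            if 0 <= j < n:
                edges.add((i, j, d + 2))
    for i in range(n):
        # All other residues, sorted by Euclidean distance to residue i.
        by_dist = sorted((math.dist(coords[i], coords[j]), j) for j in range(n) if j != i)
        # Assumption: spatial edges are only kept between residues at least
        # min_seq_dist apart in sequence, so they focus on long-range contacts.
        for dist, j in by_dist:
            if dist < radius and abs(i - j) >= min_seq_dist:
                edges.add((i, j, 5))
        for _, j in by_dist[:k]:
            if abs(i - j) >= min_seq_dist:
                edges.add((i, j, 6))
    return sorted(edges)
```

For a straight chain of eight residues spaced 3.8 Å apart, only the sequential edges and the kNN edges between residues at least five positions apart survive; no pair is both within 10 Å and far enough apart in sequence to receive a radius edge.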

Relational graph convolutional layer. Upon the protein graphs defined above, we utilize a GNN to derive per-residue and whole-protein representations. One simple example of GNNs is the GCN (Kipf & Welling, 2017), where messages are computed by multiplying node features with a convolutional kernel matrix shared among all edges. To increase the capacity in protein structure modeling, IEConv (Hermosilla et al., 2021) proposed to apply a learnable kernel function on edge features. In this way, m different kernel matrices can be applied on different edges, which achieves good performance but induces high memory costs.


To balance model capacity and memory cost, we use a relational graph convolutional neural network (Schlichtkrull et al., 2018) to learn graph representations, where a convolutional kernel matrix is shared within each edge type and there are |R| different kernel matrices in total. Formally, the relational graph convolutional layer used in our model is defined as

$$
\begin{aligned}
h^{(0)}_i &= f_i,\\
u^{(l)}_i &= \sigma\Big(\mathrm{BN}\Big(\sum_{r \in R} W_r \sum_{j \in N_r(i)} h^{(l-1)}_j\Big)\Big),\\
h^{(l)}_i &= h^{(l-1)}_i + u^{(l)}_i.
\end{aligned}
$$

Specifically, we use the node features $f_i$ as initial representations. Then, given the representation $h^{(l-1)}_i$ of node i from the (l−1)-th layer, we compute the updated node representation $u^{(l)}_i$ by aggregating features from the neighboring nodes $N_r(i)$, where $N_r(i) = \{j \in V \mid (j, i, r) \in E\}$ denotes the neighborhood of node i under edge type r, and $W_r$ denotes the learnable convolutional kernel matrix for edge type r. Here BN denotes a batch normalization layer, and we use a ReLU function as the activation $\sigma(\cdot)$. Finally, we obtain $h^{(l)}_i$ by adding $u^{(l)}_i$ to $h^{(l-1)}_i$, i.e., a residual connection from the previous layer.

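
The layer can be sketched in plain Python as follows. This is an illustration, not the authors' implementation: batch normalization here has no learnable scale or shift, ReLU plays the role of σ, and edges are stored as (j, i, r) so that j ∈ N_r(i). By linearity, summing W_r h_j over every incoming edge of node i equals the double sum in the layer equation.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def batch_norm(rows, eps=1e-5):
    """Normalise each feature over all nodes (no learnable scale/shift in this sketch)."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / n + eps) for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]

def relational_conv(h, edges, weights):
    """One relational graph convolution with a residual connection.

    h: list of n node vectors h^(l-1), all of dimension d.
    edges: directed edges (j, i, r); each one puts j into N_r(i).
    weights: dict mapping relation r to its d x d kernel W_r (shared by all type-r edges).
    """
    d = len(h[0])
    agg = [[0.0] * d for _ in h]
    # sum_r W_r sum_{j in N_r(i)} h_j, computed edge by edge.
    for j, i, r in edges:
        agg[i] = [a + m for a, m in zip(agg[i], matvec(weights[r], h[j]))]
    u = [[max(0.0, x) for x in row] for row in batch_norm(agg)]  # u = ReLU(BN(.))
    return [[a + b for a, b in zip(hi, ui)] for hi, ui in zip(h, u)]  # h^(l) = h^(l-1) + u
```

Only |R| kernels are stored, however many edges the graph has, which is the memory trade-off against per-edge kernels described above.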

3.2. Edge Message Passing Layer

As in the literature of molecular representation learning, many geometric encoders show benefits from explicitly modeling interactions between edges. For example, DimeNet (Klicpera et al., 2020) uses a 2D spherical Fourier-Bessel basis function to represent angles between two edges and pass messages between edges. AlphaFold2 (Jumper et al., 2021) leverages the triangle attention designed for transformers to model pair representations. Inspired by this observation, we propose a variant of GearNet enhanced with an edge message passing layer, named as GearNet-Edge. The edge message passing layer can be seen as a sparse version of the pair representation update designed for graph neural networks. The main objective is to model the dependency between different interactions of a residue with other sequentially or spatially adjacent residues.


Formally, we first construct a relational graph G′ = (V′, E′, R′) among edges, which is also known as the line graph in the literature (Harary & Norman, 1960). Each node in G′ corresponds to an edge in the original graph. G′ links edge (i, j, r1) in the original graph to edge (w, k, r2) if and only if j = w and i ≠ k. The type of this edge is determined by the angle between (i, j, r1) and (w, k, r2). The angular information reflects the relative position of the two edges, which determines the strength of their interaction. For example, edges separated by a smaller angle point in closer directions and are thus likely to share stronger interactions. To save the memory cost of computing a large number of kernel matrices, we discretize the range [0, π] into 8 bins and use the index of the bin as the edge type. Then, we apply a similar relational graph convolutional network on G′ to obtain the message for each edge. Formally, the edge message passing layer is defined as

$$
\begin{aligned}
m^{(0)}_{(i,j,r_1)} &= f_{(i,j,r_1)},\\
m^{(l)}_{(i,j,r_1)} &= \sigma\Big(\mathrm{BN}\Big(\sum_{r \in R'} W'_r \sum_{(w,k,r_2) \in N'_r((i,j,r_1))} m^{(l-1)}_{(w,k,r_2)}\Big)\Big).
\end{aligned}
$$

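Explanation of the terms:

- $m^{(0)}_{(i,j,r_1)}$ is the initial message of edge (i, j, r1), i.e., its edge feature $f_{(i,j,r_1)}$; i and j are the end residues of the edge and r1 is its type.
- $m^{(l)}_{(i,j,r_1)}$ is the representation of the same edge after the l-th layer.
- σ is the activation function, which introduces non-linearity.
- BN is batch normalization, which normalizes the inputs to stabilize and speed up training.
- $\sum_{r \in R'} W'_r \sum_{(w,k,r_2) \in N'_r((i,j,r_1))} m^{(l-1)}_{(w,k,r_2)}$ aggregates the messages of the edges adjacent to (i, j, r1) in the line graph G′: $N'_r((i,j,r_1))$ is the set of its neighbors connected by a G′ edge of type (angle bin) r, and each type has its own kernel $W'_r$.

Overall, each layer updates the representation of every edge by aggregating the representations of its neighboring edges in G′, in the same way the relational graph convolutional layer updates nodes, with batch normalization and the activation function applied on top.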
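
The sketch below, assuming edges stored as (i, j, r) tuples from node i to node j, builds the line graph G′ and applies one edge message passing layer. The angle between linked edges (i, j, r1) and (j, k, r2) is measured here at the shared residue j, between the vectors pointing to i and to k; this angle convention is an assumption. Messages flow along each G′ edge (a, b, t), so edge a belongs to N′_t(b). As in the equation, there is no residual term; batch normalization omits learnable parameters and ReLU stands in for σ.

```python
import math

def angle_at(a, b, c):
    """Angle in [0, pi] at point b between the vectors b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(p * q for p, q in zip(v1, v2))
    cross = (v1[1] * v2[2] - v1[2] * v2[1],
             v1[2] * v2[0] - v1[0] * v2[2],
             v1[0] * v2[1] - v1[1] * v2[0])
    return math.atan2(math.hypot(*cross), dot)

def build_line_graph(coords, edges, num_bins=8):
    """Edges (a, b, t) of G': edge a = (i, j, r1) links to edge b = (j, k, r2) when i != k.

    t is the index of the angle bin, with [0, pi] split into num_bins equal bins.
    """
    outgoing = {}  # node w -> indices of original edges leaving w
    for b, (w, _, _) in enumerate(edges):
        outgoing.setdefault(w, []).append(b)
    line_edges = []
    for a, (i, j, _) in enumerate(edges):
        for b in outgoing.get(j, []):
            k = edges[b][1]
            if k == i:
                continue
            theta = angle_at(coords[i], coords[j], coords[k])
            line_edges.append((a, b, min(int(theta / math.pi * num_bins), num_bins - 1)))
    return line_edges

def edge_message_layer(messages, line_edges, weights, eps=1e-5):
    """m^(l)_b = ReLU(BN(sum_t W'_t sum_{a in N'_t(b)} m^(l-1)_a)) for every edge b."""
    n, d = len(messages), len(messages[0])
    agg = [[0.0] * d for _ in range(n)]
    for a, b, t in line_edges:
        msg = [sum(w * x for w, x in zip(row, messages[a])) for row in weights[t]]
        agg[b] = [s + m for s, m in zip(agg[b], msg)]
    # Batch normalisation over edges, per feature, without learnable affine terms.
    cols = list(zip(*agg))
    means = [sum(c) / n for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / n + eps) for c, m in zip(cols, means)]
    return [[max(0.0, (x - m) / s) for x, m, s in zip(row, means, stds)] for row in agg]
```

Because only 8 angle bins exist, the layer stores 8 kernels $W'_t$ no matter how many edge pairs the line graph contains.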

Notably, it is a novel idea to use relational message passing to model different spatial interactions among residues. In addition, to the best of our knowledge, this is the first work that explores edge message passing for macromolecular representation learning. Compared with the triangle attention in AlphaFold2, our method considers angular information to model different types of interactions between edges, which is more efficient for sparse edge message passing.


Invariance of GearNet and GearNet-Edge. The graph construction process and input features of GearNet and GearNet-Edge only rely on features (distances and angles) invariant to translation, rotation and reflection. Besides, the message passing layers use pre-defined edge types and other 3D information invariantly. Therefore, GearNet and GearNet-Edge can achieve E(3)-invariance.

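
A quick numerical check of the invariance argument, under the simplifying assumption that the geometric inputs are pairwise distances: applying a rotation, a reflection and a translation to the coordinates leaves every pairwise distance unchanged, so a graph and features built only from such quantities are unchanged as well. The helper names are hypothetical.

```python
import math

def rigid_transform(coords, theta, shift, reflect=False):
    """Rotate about the z-axis by theta, optionally mirror z, then translate by shift."""
    c, s = math.cos(theta), math.sin(theta)
    out = []
    for x, y, z in coords:
        x, y = c * x - s * y, s * x + c * y
        if reflect:
            z = -z
        out.append((x + shift[0], y + shift[1], z + shift[2]))
    return out

def pairwise_distances(coords):
    """All pairwise Euclidean distances between residues."""
    return [[math.dist(p, q) for q in coords] for p in coords]
```

Comparing `pairwise_distances(coords)` with `pairwise_distances(rigid_transform(coords, 0.7, (3.0, -2.0, 5.0), reflect=True))` gives matching matrices up to floating-point rounding, even though every coordinate has moved.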

4. Geometric Pretraining Methods

In this section, we study how to boost protein representation learning via self-supervised pretraining on a massive collection of unlabeled protein structures. Despite the efficacy of self-supervised pretraining in many domains, applying it to protein representation learning is nontrivial due to the difficulty of capturing both biochemical and spatial information in protein structures. To address this challenge, we first introduce our multiview contrastive learning method with novel augmentation functions to discover correlated co-occurrences of protein substructures and align their representations in the latent space. Furthermore, four self-prediction baselines are also proposed for pretraining structure-based encoders.


4.1. Multiview Contrastive Learning

It is known that structural motifs within folded protein structures are biologically related (Mackenzie & Grigoryan, 2017) and protein substructures can reflect the evolution history and functions of proteins (Ponting & Russell, 2002). Inspired by recent contrastive learning methods (Chen et al., 2020; He et al., 2020), our framework aims to preserve the similarity between these correlated substructures before and after mapping to a low-dimensional latent space. Specifically, using a similarity measurement defined in the latent space, biologically-related substructures are embedded close to each other while unrelated ones are mapped far apart. Figure 1 illustrates the high-level idea.


Constructing views that reflect protein substructures. Given a protein graph G, we consider two different sampling schemes for constructing views. The first one is subsequence cropping, which randomly samples a left residue l and a right residue r and takes all residues ranging from l to r. This sampling scheme aims to capture protein domains, i.e., consecutive protein subsequences that recur in different proteins and indicate their functions (Ponting & Russell, 2002). However, simply sampling protein subsequences cannot fully utilize the 3D structural information in protein data. Therefore, we further introduce a subspace cropping scheme to discover spatially correlated structural motifs: we randomly sample a residue p as the center and select all residues within a Euclidean ball of predefined radius d. For both sampling schemes, we take the corresponding subgraphs of the protein residue graph G = (V, E, R). Specifically, the subsequence graph $G^{(\mathrm{seq})}_{l,r}$ and the subspace graph $G^{(\mathrm{space})}_{p,d}$ can be written as:

$$
\begin{aligned}
V^{(\mathrm{seq})}_{l,r} &= \{\, i \in V \mid l \le i \le r \,\}, &
E^{(\mathrm{seq})}_{l,r} &= \{\, (i, j, t) \in E \mid i, j \in V^{(\mathrm{seq})}_{l,r} \,\},\\
V^{(\mathrm{space})}_{p,d} &= \{\, i \in V \mid \lVert x_i - x_p \rVert_2 \le d \,\}, &
E^{(\mathrm{space})}_{p,d} &= \{\, (i, j, t) \in E \mid i, j \in V^{(\mathrm{space})}_{p,d} \,\},
\end{aligned}
$$

with $G^{(\mathrm{seq})}_{l,r} = (V^{(\mathrm{seq})}_{l,r}, E^{(\mathrm{seq})}_{l,r}, R)$ and $G^{(\mathrm{space})}_{p,d} = (V^{(\mathrm{space})}_{p,d}, E^{(\mathrm{space})}_{p,d}, R)$; the edge type is written t here to avoid a clash with the right residue r.

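This describes the subgraphs of the protein residue graph G = (V, E, R) that correspond to the two sampling schemes. Specifically, the subsequence graph $G^{(\mathrm{seq})}_{l,r}$ and the subspace graph $G^{(\mathrm{space})}_{p,d}$ are constructed as follows:

- Subsequence graph $G^{(\mathrm{seq})}_{l,r}$:
  - $V^{(\mathrm{seq})}_{l,r}$ contains all nodes of G whose sequence positions lie between l and r.
  - $E^{(\mathrm{seq})}_{l,r}$ contains all edges of G connecting two nodes in $V^{(\mathrm{seq})}_{l,r}$.
  - R is the set of edge types of the original graph G.
  - Hence $G^{(\mathrm{seq})}_{l,r} = (V^{(\mathrm{seq})}_{l,r}, E^{(\mathrm{seq})}_{l,r}, R)$.
- Subspace graph $G^{(\mathrm{space})}_{p,d}$:
  - $V^{(\mathrm{space})}_{p,d}$ contains all nodes of G within Euclidean distance d of the center residue p.
  - $E^{(\mathrm{space})}_{p,d}$ contains all edges of G connecting two nodes in $V^{(\mathrm{space})}_{p,d}$.
  - R is the set of edge types of the original graph G.
  - Hence $G^{(\mathrm{space})}_{p,d} = (V^{(\mathrm{space})}_{p,d}, E^{(\mathrm{space})}_{p,d}, R)$.

The two subgraphs differ in how nodes are selected: the subsequence graph $G^{(\mathrm{seq})}_{l,r}$ is based on positions along the protein sequence, while the subspace graph $G^{(\mathrm{space})}_{p,d}$ is based on positions in 3D space.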

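Examples:

- Subsequence graph $G^{(\mathrm{seq})}_{l,r}$: given a stretch of residues from l to r in the protein sequence, the subsequence graph contains all nodes in this stretch and the edges between them. For example, with l = 10 and r = 20, $G^{(\mathrm{seq})}_{l,r}$ contains all residues at sequence positions 10 through 20.
- Subspace graph $G^{(\mathrm{space})}_{p,d}$: given a center residue p and a distance threshold d, the subspace graph contains all nodes within distance d of p and the edges between them. For example, with d = 5 Å, $G^{(\mathrm{space})}_{p,d}$ contains all residues whose alpha carbons lie within 5 Å of residue p.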

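Why does the subspace graph capture more structural information?

- The subspace graph is defined by the protein's 3D structure, so it captures the protein's conformation and spatial relationships, including residues that are far apart in sequence but close in space.
- Different choices of the center p and threshold d can locate and extract different structural regions of interest, which makes the subspace graph flexible.
- Since protein structure is closely tied to biological function, building views this way focuses on specific structural elements and helps in understanding the function and activity of the protein.

In short, the subsequence graph reflects the sequential order of a protein, while the subspace graph reflects its structure in 3D space; combining the two allows a more complete analysis of protein structure and function.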
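
Both cropping schemes can be sketched in Python as below. The defaults of 50 residues and a radius of 15 (assumed to be in Å) are the values the authors report using in practice in the discussion of substructure sizes; the helper names and the renumbering of kept nodes are illustrative assumptions. `induced_subgraph` keeps exactly the edges whose two endpoints survive the crop, matching the definitions of the subsequence and subspace edge sets.

```python
import math
import random

def subsequence_crop(num_residues, length=50, rng=None):
    """Residue indices l..r of a random window of `length` consecutive residues."""
    rng = rng if rng is not None else random
    if num_residues <= length:
        return list(range(num_residues))
    l = rng.randrange(num_residues - length + 1)
    return list(range(l, l + length))

def subspace_crop(coords, radius=15.0, rng=None):
    """Indices of residues within `radius` of a randomly chosen centre residue p."""
    rng = rng if rng is not None else random
    p = rng.randrange(len(coords))
    return [i for i, x in enumerate(coords) if math.dist(x, coords[p]) <= radius]

def induced_subgraph(nodes, edges):
    """Edges (i, j, t) whose two endpoints were both kept, with nodes renumbered.

    The edge-type set R of the original graph is reused unchanged.
    """
    keep = sorted(set(nodes))
    remap = {old: new for new, old in enumerate(keep)}
    return [(remap[i], remap[j], t) for i, j, t in edges if i in remap and j in remap]
```

For example, `induced_subgraph([1, 2, 5], [(0, 1, 0), (1, 2, 0), (2, 5, 1)])` drops the edge touching residue 0 and renumbers the rest to `[(0, 1, 0), (1, 2, 1)]`.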

Contrastive learning. We follow SimCLR (Chen et al., 2020) to optimize a contrastive loss function and thus maximize the mutual information between these biologically correlated views. For each protein G, we sample two views Gx and Gy by first randomly choosing one sampling scheme for extracting substructures and then randomly selecting one of the two noise functions with equal probability. We compute the graph representations hx and hy of the two views using our structure-based encoder. Then, a two-layer MLP projection head is applied to map the representations to a lower-dimensional space, denoted as zx and zy. Finally, an InfoNCE loss function is defined by distinguishing views from the same or different proteins using their similarities (Oord et al., 2018). For a positive pair x and y, we treat views from other proteins in the same mini-batch as negative pairs. Mathematically, the loss function for a positive pair of views x and y can be written as:


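$$
\mathcal{L}_{x,y} = -\log \frac{\exp\big(\mathrm{sim}(z_x, z_y)/\tau\big)}{\sum_{k=1}^{2B} \mathbb{1}_{[k \neq x]} \exp\big(\mathrm{sim}(z_x, z_k)/\tau\big)},
$$

where:

- $\mathcal{L}_{x,y}$ is the loss for the positive pair of views x and y.
- −log turns the softmax probability assigned to the positive pair into a loss that is minimized when this probability approaches 1.
- The numerator $\exp(\mathrm{sim}(z_x, z_y)/\tau)$ scores the positive pair; $\mathrm{sim}(\cdot, \cdot)$ is a similarity measure such as cosine similarity.
- The denominator sums over all 2B views in a mini-batch of B proteins, excluding x itself via the indicator $\mathbb{1}_{[k \neq x]}$; it therefore contains the positive view y and the 2B − 2 negative views from other proteins.
- τ > 0 is the temperature hyperparameter, which controls how sharp the similarity distribution is.

Overall, minimizing this loss pulls $z_x$ and $z_y$ together while pushing $z_x$ away from the views of other proteins in the batch, which encourages more discriminative representations.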
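
The loss can be sketched in plain Python. This assumes cosine similarity for sim(·, ·), a batch laid out so that views 2i and 2i + 1 come from the same protein, and a hypothetical default temperature `tau=0.07`; the maximum logit is subtracted before exponentiating for numerical stability. `batch_loss` averages $\mathcal{L}_{x,y}$ and $\mathcal{L}_{y,x}$ over all positive pairs, as SimCLR does.

```python
import math

def cosine(a, b):
    """Cosine similarity, used here as sim(., .)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def info_nce(z, x, y, tau=0.07):
    """L_{x,y} for the positive pair (x, y) among the 2B projected views z."""
    # Every view except x itself (including the positive y) enters the denominator.
    logits = [cosine(z[x], z[k]) / tau for k in range(len(z)) if k != x]
    top = max(logits)  # subtract the max before exp() for numerical stability
    log_denominator = top + math.log(sum(math.exp(v - top) for v in logits))
    return log_denominator - cosine(z[x], z[y]) / tau

def batch_loss(z, tau=0.07):
    """Mean of L_{x,y} and L_{y,x} over all positive pairs (2i, 2i + 1) in the batch."""
    pairs = [(2 * i, 2 * i + 1) for i in range(len(z) // 2)]
    total = sum(info_nce(z, a, b, tau) + info_nce(z, b, a, tau) for a, b in pairs)
    return total / (2 * len(pairs))
```

With two proteins whose two views coincide exactly, `batch_loss` at τ = 1 equals log(1 + 2/e); swapping views across proteins raises it, which is the signal the encoder is trained on.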

Sizes of sampled substructures. The design of our random sampling scheme aims to extract biologically meaningful substructures for contrastive learning. To attain this goal, it is of critical importance to determine the sampling length r − l for subsequence cropping and radius d for subspace cropping. On the one hand, we need sufficiently long subsequences and large subspaces to ensure that the sampled substructures can meaningfully reflect the whole protein structure and thus share high mutual information with each other. On the other hand, if the sampled substructures are too large, the generated views will be so similar that the contrastive learning problem becomes trivial. Furthermore, large substructures limit the batch size used in contrastive learning, which has been shown to be harmful for the performance (Chen et al., 2020).


To study the effect of the sizes of sampled substructures, we report experimental results on Enzyme Commission (abbr. EC, details in Sec. 5.1) using Multiview Contrast with the subsequence and subspace cropping functions, respectively. To exclude the influence of noise functions, we only use the identity transformation in both settings. The results are plotted in Figure 2. For both subsequence and subspace cropping, as the sampled substructures become larger, performance first rises and then drops beyond a certain size. This phenomenon agrees with our analysis above. In practice, we set the subsequence length to 50 residues and the subspace cropping radius to 15 Å, which are large enough to capture most structural motifs according to statistics in previous studies (Tateno et al., 1997).


In Appendix J, we visualize the representations of the pretrained model with this method and assign familial labels based on domain annotations. We can observe clear separation based on the familial classification of the proteins in the dataset, which proves the effectiveness of our pretraining method.


4.2. Straightforward Baselines: Self-Prediction Methods

Another line of model pre-training research is based on the recent progress of self-prediction methods in natural language processing (Devlin et al., 2018; Brown et al., 2020). Along that line, given a protein, our objective can be formulated as predicting one part of the protein given the remainder of the structure. Here, we propose four self-supervised tasks based on physicochemical or geometric properties: residue types, distances, angles and dihedrals.


The four methods perform masked prediction on single residues, residue pairs, triplets and quadruples, respectively. Masked residue type prediction is widely used for pretraining protein language models (Bepler & Berger, 2021) and is also known as masked inverse folding in the protein community (Yang et al., 2022). Distances, angles and dihedrals have been shown to be important features that reflect the relative positions of residues (Klicpera et al., 2020). For angle and dihedral prediction, we sample adjacent edges to better capture local structural information. Since angular values are more sensitive to errors in protein structures than distances, we use discretized values for prediction. The four objectives are summarized in Table 1, and details are discussed in Appendix D.


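
The geometric targets of the pair, triplet and quadruple tasks can be computed as below. This is a sketch under stated assumptions: the dihedral uses the common atan2 formulation about the bond from residue j to residue k, and `num_bins=8` for discretizing angles and dihedrals is a hypothetical choice (the exact settings are in Appendix D). Selecting and masking the residues or edges, and the prediction heads, are not shown.

```python
import math

def _sub(a, b):
    return [p - q for p, q in zip(a, b)]

def _dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def distance(xi, xj):
    """Residue-pair target: Euclidean distance."""
    return math.dist(xi, xj)

def angle(xi, xj, xk):
    """Residue-triplet target: angle at xj, in [0, pi]."""
    v1, v2 = _sub(xi, xj), _sub(xk, xj)
    return math.atan2(math.hypot(*_cross(v1, v2)), _dot(v1, v2))

def dihedral(xi, xj, xk, xl):
    """Residue-quadruple target: dihedral angle about the j-k axis, in [-pi, pi]."""
    b0, b1, b2 = _sub(xj, xi), _sub(xk, xj), _sub(xl, xk)
    n1, n2 = _cross(b0, b1), _cross(b1, b2)  # normals of the two planes
    b1_unit = [c / math.hypot(*b1) for c in b1]
    return math.atan2(_dot(_cross(n1, n2), b1_unit), _dot(n1, n2))

def to_bin(value, low, high, num_bins=8):
    """Discretise a value in [low, high] into one of num_bins class labels."""
    return min(int((value - low) / (high - low) * num_bins), num_bins - 1)
```

For instance, `to_bin(angle(xi, xj, xk), 0.0, math.pi)` yields a class label for the angle task, and `to_bin(dihedral(...), -math.pi, math.pi)` one for the dihedral task; distances can be regressed directly.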