Presto: Running a Hive Standalone Metastore in Docker to Manage MinIO (S3)

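Starting with Hive 3.0.0, the Hive Metastore can run on its own, independently of the rest of Hive, and act as a central metadata store for various data sources. This article shows how to run the Hive Standalone Metastore with Docker and, using Presto's Hive connector as an example, how to manage data stored in MinIO (S3-compatible object storage) through the Hive Metastore.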

Components and versions used in this article:

| Component | Version |
| --- | --- |
| Hive Standalone Metastore | 3.1.2 |
| Hadoop | 3.2.2 |
| MySQL | 5.7.35 |
| Presto | 0.261 |
| MinIO | 8.3.3 |

If you have not installed MinIO yet, see: https://min.io/download

For installing MySQL, see: https://lrting-top.blog.csdn.net/article/details/120424755

For installing Presto, see: https://blog.csdn.net/weixin_39636364/article/details/120518455

Writing the Dockerfile

The Hive Metastore needs a relational database to store its metadata. This article uses MySQL for that purpose, with the following settings:

- MySQL version:5.7.35

- hostname:192.168.1.15

- port:3306

- username:root

- password:Pass-123-root

- database:metastore

In addition, as mentioned above, this Hive Metastore will manage the metadata of data stored in MinIO, so you also need to provide MinIO's connection settings:

- fs.s3a.endpoint:http://192.168.1.15:9000

- fs.s3a.path.style.access:true

- fs.s3a.connection.ssl.enabled:false

- fs.s3a.access.key:minio

- fs.s3a.secret.key:minio123
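To illustrate what `fs.s3a.path.style.access` changes, the sketch below builds the URL an S3 client would address for one object in each addressing style. With path-style access the bucket is part of the URL path. With virtual-hosted style the bucket becomes part of the hostname, which would need DNS for a name like `hive-storage.192.168.1.15`, and a plain IP endpoint does not provide that. The function `s3_object_url` and the object key are hypothetical. This only illustrates the two URL shapes and is not S3A's actual request code.

```python
from urllib.parse import quote, urlsplit


def s3_object_url(endpoint: str, bucket: str, key: str, path_style: bool) -> str:
    """Return the URL addressing bucket/key on an S3-compatible endpoint.

    path_style=True  -> http://host:port/bucket/key  (fs.s3a.path.style.access=true)
    path_style=False -> http://bucket.host:port/key  (virtual-hosted style)
    """
    parts = urlsplit(endpoint)
    encoded_key = quote(key, safe="/")  # percent-encode the key, keeping "/" separators
    if path_style:
        return f"{parts.scheme}://{parts.netloc}/{bucket}/{encoded_key}"
    return f"{parts.scheme}://{bucket}.{parts.netloc}/{encoded_key}"


if __name__ == "__main__":
    endpoint = "http://192.168.1.15:9000"  # fs.s3a.endpoint (SSL disabled, hence http)
    print(s3_object_url(endpoint, "hive-storage", "sample_table/part-0", path_style=True))
    print(s3_object_url(endpoint, "hive-storage", "sample_table/part-0", path_style=False))
```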

Build the Hive Metastore configuration file, metastore-site.xml, from the settings above:

```xml
<configuration>
  <property>
    <name>fs.s3a.access.key</name>
    <value>minio</value>
  </property>
  <property>
    <name>fs.s3a.secret.key</name>
    <value>minio123</value>
  </property>
  <property>
    <name>fs.s3a.connection.ssl.enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>fs.s3a.path.style.access</name>
    <value>true</value>
  </property>
  <property>
    <name>fs.s3a.endpoint</name>
    <value>http://192.168.1.15:9000</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <!-- "&" must be written as "&amp;" inside XML -->
    <value>jdbc:mysql://192.168.1.15:3306/metastore?useSSL=false&amp;serverTimezone=UTC</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>Pass-123-root</value>
  </property>
  <property>
    <name>hive.metastore.event.db.notification.api.auth</name>
    <value>false</value>
  </property>
  <property>
    <name>metastore.thrift.uris</name>
    <value>thrift://localhost:9083</value>
    <description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>
  </property>
  <property>
    <name>metastore.task.threads.always</name>
    <value>org.apache.hadoop.hive.metastore.events.EventCleanerTask</value>
  </property>
  <property>
    <name>metastore.expression.proxy</name>
    <value>org.apache.hadoop.hive.metastore.DefaultPartitionExpressionProxy</value>
  </property>
  <property>
    <name>metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
  </property>
</configuration>
```
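Hand-written XML makes it easy to drop a closing tag. It is also easy to forget that the `&` in the JDBC URL must be escaped as `&amp;`. The sketch below generates an equivalent metastore-site.xml from a dictionary using the standard-library `xml.etree.ElementTree`, which escapes special characters automatically. It assumes Python 3.9+ for `ET.indent`. The values mirror the settings listed above, and the `description` element is omitted. `build_site_xml` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Settings from this article; adjust hosts and credentials to your environment.
PROPERTIES = {
    "fs.s3a.access.key": "minio",
    "fs.s3a.secret.key": "minio123",
    "fs.s3a.connection.ssl.enabled": "false",
    "fs.s3a.path.style.access": "true",
    "fs.s3a.endpoint": "http://192.168.1.15:9000",
    "javax.jdo.option.ConnectionURL":
        "jdbc:mysql://192.168.1.15:3306/metastore?useSSL=false&serverTimezone=UTC",
    "javax.jdo.option.ConnectionDriverName": "com.mysql.jdbc.Driver",
    "javax.jdo.option.ConnectionUserName": "root",
    "javax.jdo.option.ConnectionPassword": "Pass-123-root",
    "hive.metastore.event.db.notification.api.auth": "false",
    "metastore.thrift.uris": "thrift://localhost:9083",
    "metastore.task.threads.always": "org.apache.hadoop.hive.metastore.events.EventCleanerTask",
    "metastore.expression.proxy": "org.apache.hadoop.hive.metastore.DefaultPartitionExpressionProxy",
    "metastore.warehouse.dir": "/user/hive/warehouse",
}


def build_site_xml(props: dict) -> str:
    """Return a Hadoop-style <configuration> document with one <property> per entry."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        # ElementTree escapes "&", "<" and ">" in text when serializing.
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_site_xml(PROPERTIES))
```

Redirecting the script's output to a file produces the configuration for the Docker build. For example, use `python gen_site.py > metastore-site.xml`; the script name is arbitrary.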

To build the Hive Metastore image, you also need to download the following packages and JAR file:

- hive-standalone-metastore-3.1.2-bin.tar.gz

- hadoop-3.2.2.tar.gz

- mysql-connector-java-5.1.49.jar

This article assumes the packages above are hosted on an HTTP server (192.168.1.15:11180, under the /downloads/ path).
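The article does not say which HTTP server hosts the packages. One possible setup, which is an assumption and not the author's, uses Python's standard library to serve a directory whose `downloads/` subfolder holds the three files. That layout matches the `/downloads/...` URLs in the Dockerfile. `make_server` is a hypothetical helper.

```python
import functools
import http.server


def make_server(root_dir: str, port: int) -> http.server.ThreadingHTTPServer:
    """Serve root_dir over HTTP on all interfaces.

    A file at root_dir/downloads/<name> is served as http://<host>:<port>/downloads/<name>,
    the URL shape requested by the Dockerfile's wget commands.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=root_dir)
    return http.server.ThreadingHTTPServer(("0.0.0.0", port), handler)
```

For instance, `make_server("/srv/packages", 11180).serve_forever()` would serve the URLs the Dockerfile requests. It must run on 192.168.1.15, with the three files in `/srv/packages/downloads/`; `/srv/packages` is an arbitrary example path. `python3 -m http.server 11180 --directory /srv/packages` does the same from the command line. Neither performs any authentication, so use them only on a trusted build network.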

The complete Dockerfile:

```dockerfile
FROM centos:centos7

RUN yum install -y wget java-1.8.0-openjdk-devel && yum clean all

# host:port of the HTTP server that hosts the packages
ARG HTTP_SERVER_HOSTNAME_PORT=192.168.1.15:11180

WORKDIR /install

# Hive Standalone Metastore
RUN wget http://${HTTP_SERVER_HOSTNAME_PORT}/downloads/hive-standalone-metastore-3.1.2-bin.tar.gz
RUN tar zxvf hive-standalone-metastore-3.1.2-bin.tar.gz
RUN rm -rf hive-standalone-metastore-3.1.2-bin.tar.gz
RUN mv apache-hive-metastore-3.1.2-bin metastore

# Hadoop 3.2.2; the Guava and S3A jars below are copied from it
RUN wget http://${HTTP_SERVER_HOSTNAME_PORT}/downloads/hadoop-3.2.2.tar.gz
RUN tar zxvf hadoop-3.2.2.tar.gz
RUN rm -rf hadoop-3.2.2.tar.gz
RUN mv hadoop-3.2.2 hadoop

# MySQL JDBC driver
RUN wget http://${HTTP_SERVER_HOSTNAME_PORT}/downloads/mysql-connector-java-5.1.49.jar
RUN cp mysql-connector-java-5.1.49.jar ./metastore/lib

ENV JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk
ENV HADOOP_HOME=/install/hadoop

# Replace the metastore's Guava 19 with Hadoop's Guava 27 and add the S3A jars
RUN rm -f /install/metastore/lib/guava-19.0.jar \
    && cp ${HADOOP_HOME}/share/hadoop/common/lib/guava-27.0-jre.jar /install/metastore/lib \
    && cp ${HADOOP_HOME}/share/hadoop/tools/lib/hadoop-aws-3.2.2.jar /install/metastore/lib \
    && cp ${HADOOP_HOME}/share/hadoop/tools/lib/aws-java-sdk-bundle-*.jar /install/metastore/lib

# copy Hive metastore configuration file
COPY metastore-site.xml /install/metastore/conf/

# Hive metastore data folder
VOLUME ["/user/hive/warehouse"]

WORKDIR /install/metastore

# Initialize the metastore schema in MySQL (runs at image build time)
RUN bin/schematool -initSchema -dbType mysql

CMD ["/install/metastore/bin/start-metastore"]
```
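In a shell-form `RUN` line, a `${NAME}` that was never declared expands to an empty string. A misspelled `ARG` name therefore only shows up later, as a failed `wget` of a URL such as `http:///downloads/...`. The sketch below is a rough heuristic check, a hypothetical helper and not a Docker feature. It lists variables that are referenced before any `ARG` or `ENV` declares them. It does not know about variables inherited from the base image (such as `PATH`), Docker's predefined proxy ARGs, or shell variables set inside a `RUN` command, so treat its output as hints.

```python
import re
import sys

# ${NAME} or $NAME references; NAME must start with a letter or underscore.
_REF = re.compile(r"\$\{?([A-Za-z_][A-Za-z0-9_]*)")
# ARG NAME[=default] or ENV NAME=value (only the first name on a line is recorded).
_DECL = re.compile(r"^\s*(ARG|ENV)\s+([A-Za-z_][A-Za-z0-9_]*)", re.IGNORECASE)


def undeclared_vars(dockerfile_text: str) -> list:
    """List variables referenced in a Dockerfile before any ARG/ENV declares them (heuristic)."""
    declared = set()
    missing = []
    for line in dockerfile_text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # skip comment lines
        decl = _DECL.match(line)
        # On an ARG/ENV line, only the value part can reference other variables.
        body = line[decl.end():] if decl else line
        for name in _REF.findall(body):
            if name not in declared and name not in missing:
                missing.append(name)
        if decl:
            declared.add(decl.group(2))
    return missing


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python check_dockerfile_vars.py Dockerfile
    with open(sys.argv[1], encoding="utf-8") as f:
        for name in undeclared_vars(f.read()):
            print("undeclared:", name)
```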

Building the Docker image

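Because the Dockerfile runs `schematool -initSchema` during the build, the MySQL server at 192.168.1.15:3306 must be reachable from the build environment. The `metastore` database must also already exist. Put metastore-site.xml and the Dockerfile in the same directory, change into that directory, and run: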

```shell
docker build . -t minio-hive-standalone-metastore:v1.0
```

Running the Hive Metastore

```shell
docker run -d -p 9083:9083/tcp --name minio-hive-metastore minio-hive-standalone-metastore:v1.0
```
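`docker run -d` returns as soon as the container starts, but the metastore may need a few more seconds before it listens on port 9083. Below is a minimal polling sketch that uses only the Python standard library. It checks only that the TCP port accepts connections, not that the Thrift service answers requests correctly. `wait_for_port` is a hypothetical helper.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, or False after timeout seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Run it from the machine that will host Presto, against the Docker host's address. On the Docker host itself, connections to `localhost` may be accepted by Docker's userland proxy before the metastore is actually listening. If it returns `False`, `docker logs minio-hive-metastore` shows the metastore's startup output.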

Testing the Hive Metastore with Presto

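If you have not installed Presto yet, first install it by following https://blog.csdn.net/weixin_39636364/article/details/120518455. Then change the Hive catalog configuration as follows and start the Presto server. A typical location is `etc/catalog/hive.properties`; the file name determines the catalog name `hive` used in the SQL below. Replace `URL` in `hive.metastore.uri` with the address of the host running the metastore container, and in `hive.s3.endpoint` with the address of the MinIO server.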

```properties
connector.name=hive-hadoop2
hive.metastore.uri=thrift://URL:9083
hive.metastore.username=metastore
hive.s3.aws-access-key=minio
hive.s3.aws-secret-key=minio123
hive.s3.endpoint=http://URL:9000
hive.s3.path-style-access=true
```
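The S3 settings appear twice. The metastore reads them from metastore-site.xml, and Presto's Hive connector reads them from the catalog file. Both must point at the same MinIO with the same credentials. The sketch below compares the pairs of properties that should carry equal values. The property names come from the two files above. The pairing, the helper names and the simplified properties parser (no escapes or line continuations) are assumptions of this sketch. Values are compared as literal strings, so `http://URL:9000` and `http://192.168.1.15:9000` are reported as different even if they point to the same host.

```python
import sys
import xml.etree.ElementTree as ET

# (metastore-site.xml property, Presto Hive catalog property) pairs expected to hold equal values.
PAIRS = [
    ("fs.s3a.endpoint", "hive.s3.endpoint"),
    ("fs.s3a.access.key", "hive.s3.aws-access-key"),
    ("fs.s3a.secret.key", "hive.s3.aws-secret-key"),
    ("fs.s3a.path.style.access", "hive.s3.path-style-access"),
]


def parse_site_xml(text: str) -> dict:
    """Map each <property>'s <name> to its <value>."""
    root = ET.fromstring(text)
    return {p.findtext("name"): p.findtext("value") for p in root.findall("property")}


def parse_properties(text: str) -> dict:
    """Simplified key=value parser: skips blank lines and #/! comments; no escapes or continuations."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def mismatches(site_xml: str, catalog_text: str) -> list:
    """Return (site key, catalog key, site value, catalog value) for every pair that differs."""
    site = parse_site_xml(site_xml)
    catalog = parse_properties(catalog_text)
    return [
        (s_key, c_key, site.get(s_key), catalog.get(c_key))
        for s_key, c_key in PAIRS
        if site.get(s_key) != catalog.get(c_key)
    ]


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python check_s3_settings.py metastore-site.xml hive.properties
    with open(sys.argv[1], encoding="utf-8") as f_xml, open(sys.argv[2], encoding="utf-8") as f_props:
        for row in mismatches(f_xml.read(), f_props.read()):
            print("mismatch:", row)
```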

Open the Presto CLI and list the catalogs:

```sql
show catalogs;
```

Output:

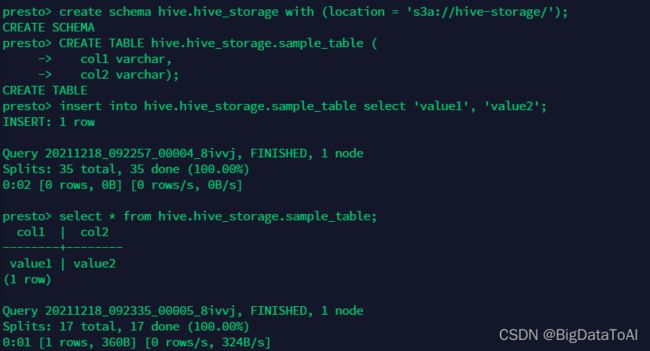

Create a schema:

Assuming a bucket named hive-storage already exists in MinIO, create the schema with:

```sql
create schema hive.hive_storage with (location = 's3a://hive-storage/');
```

Create a table in this schema:

```sql
CREATE TABLE hive.hive_storage.sample_table (
    col1 varchar,
    col2 varchar);
```

Insert data into the table:

```sql
insert into hive.hive_storage.sample_table select 'value1', 'value2';
```

Query the data:

```sql
select * from hive.hive_storage.sample_table;
```
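The same statements can also be sent from a script. The sketch below assumes a DB-API 2.0 connection, such as the one returned by the presto-python-client package (`prestodb`); `run_statements` is a hypothetical helper. It calls `fetchall()` after every statement, as the presto-python-client examples do. That client retrieves results and follows query progress page by page, so an INSERT whose results are never fetched may not run to completion.

```python
# Statements from this section, without trailing semicolons: the Presto CLI strips them,
# but a client library sends the text as-is and the server may reject a trailing ";".
STATEMENTS = [
    "CREATE SCHEMA hive.hive_storage WITH (location = 's3a://hive-storage/')",
    "CREATE TABLE hive.hive_storage.sample_table (col1 varchar, col2 varchar)",
    "INSERT INTO hive.hive_storage.sample_table SELECT 'value1', 'value2'",
    "SELECT * FROM hive.hive_storage.sample_table",
]


def run_statements(conn, statements):
    """Execute each statement on a DB-API 2.0 connection and return the rows of each one."""
    results = []
    for sql in statements:
        cur = conn.cursor()
        cur.execute(sql)
        # Fetch every result, even for DDL and INSERT; presto-python-client
        # retrieves results page by page while fetching.
        results.append(cur.fetchall())
    return results
```

For example, open a connection with `conn = prestodb.dbapi.connect(host="localhost", port=8080, user="presto", catalog="hive")` and then call `run_statements(conn, STATEMENTS)`. The host, port and user are assumptions about your Presto coordinator. The CREATE statements fail if the schema or table already exists.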

Results of all the operations: