Elasticsearch Analyzer Plugins and Bulk Document Operations

I. The IK analyzer plugin

Elasticsearch can be extended through plugins. Here we install the IK Chinese analysis plugin offline.

1. Offline installation

Download the matching plugin release locally, unzip it, upload it manually to Elasticsearch's plugins directory, and then restart the ES instance.

Note:

- The IK analyzer version must match the Elasticsearch version exactly; otherwise Elasticsearch will fail to start.

- Since v5.0.0, IK no longer provides an analyzer or tokenizer named ik; use ik_smart or ik_max_word instead.

1) Download the IK Chinese analysis plugin

IK analyzer on GitHub: https://github.com/medcl/elasticsearch-analysis-ik

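2) Upload and unzip the plugin

When I installed ES with Docker, I mounted a data volume at /opt/soft/elasticsearch/plugins.

So I created a folder named analysis-ik in that directory, put the zip package into analysis-ik, unzipped it, and then deleted the zip.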

```
[root@centos7 analysis-ik]# ls
commons-codec-1.9.jar    config                                httpclient-4.5.2.jar  plugin-descriptor.properties
commons-logging-1.2.jar  elasticsearch-analysis-ik-7.17.4.jar  httpcore-4.4.4.jar    plugin-security.policy
```
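Because a version mismatch keeps Elasticsearch from starting, it can help to check the plugin's metadata before restarting. The listing above includes plugin-descriptor.properties, which records the Elasticsearch version the plugin was built for under the elasticsearch.version key. The Python sketch below parses that file's contents and compares the value with a given ES version; the function names and the sample descriptor excerpt are illustrative assumptions, not part of the IK distribution.

```python
def parse_properties(text: str) -> dict:
    """Parse simple key=value lines of a Java .properties file (no escapes or continuations)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines ('#' or '!').
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def plugin_matches_es(descriptor_text: str, es_version: str) -> bool:
    """Return True if the plugin was built for exactly this Elasticsearch version."""
    props = parse_properties(descriptor_text)
    return props.get("elasticsearch.version") == es_version


# Hypothetical excerpt of analysis-ik/plugin-descriptor.properties
sample = """
# Elasticsearch plugin descriptor
name=analysis-ik
version=7.17.4
elasticsearch.version=7.17.4
"""

print(plugin_matches_es(sample, "7.17.4"))  # True
print(plugin_matches_es(sample, "7.17.5"))  # False
```

In practice you would read the real file from the analysis-ik directory and take the running version from the version.number field returned by GET /.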

3) Restart ES

Enter the ES container, copy the analysis-ik folder into /opt/bitnami/elasticsearch/plugins/, and then restart the ES container.

```
I have no name!@5474ad4715c6:/usr/share/elasticsearch/plugins$ cp -rp ./analysis-ik/ /opt/bitnami/elasticsearch/plugins/
```

4) List the installed plugins

Go to /opt/bitnami/elasticsearch/bin/ and run ./elasticsearch-plugin list to see the installed plugins:

```
I have no name!@5474ad4715c6:/$ cd /opt/bitnami/elasticsearch/bin/
I have no name!@5474ad4715c6:/opt/bitnami/elasticsearch/bin$ ./elasticsearch-plugin list
analysis-ik
repository-s3
```

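2. Testing IK tokenization

IK provides two segmentation modes, ik_smart and ik_max_word:

- ik_smart: the coarsest-grained split

- ik_max_word: the finest-grained split

- The IK analyzer also supports custom extension dictionaries.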

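What is the difference between ik_max_word and ik_smart?

- ik_smart: splits text at the coarsest granularity. For example, "中华人民共和国国歌" (the national anthem of the People's Republic of China) becomes "中华人民共和国, 国歌". It is suited to phrase queries.

- ik_max_word: splits text at the finest granularity, exhausting the possible word combinations. For example, "中华人民共和国国歌" becomes "中华人民共和国, 中华人民, 中华, 华人, 人民共和国, 人民, 人, 民, 共和国, 共和, 和, 国国, 国歌" (the exact tokens depend on the IK version and its dictionary; compare the actual output in 2.2). It is suited to term queries.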
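To make the coarse/fine distinction concrete, the toy Python sketch below contrasts two strategies over a small hand-written dictionary: greedy forward longest-match, which yields non-overlapping words (roughly the ik_smart behavior), and listing every dictionary word found at every start position (roughly ik_max_word). This only illustrates the idea; IK's real segmenter, dictionaries, and ambiguity handling are more involved, and the dictionary here is an assumption chosen to cover the example.

```python
# Toy dictionary (assumption): chosen to cover the "中华人民共和国国歌" example.
DICT = {"中华人民共和国", "中华人民", "中华", "华人", "人民共和国",
        "人民", "共和国", "共和", "国歌"}
MAX_LEN = max(len(w) for w in DICT)


def coarse_split(text: str) -> list:
    """Greedy forward longest match: non-overlapping tokens (ik_smart-like)."""
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate first; fall back to a single character.
        for n in range(min(MAX_LEN, len(text) - i), 0, -1):
            piece = text[i:i + n]
            if n == 1 or piece in DICT:
                tokens.append(piece)
                i += n
                break
    return tokens


def fine_split(text: str) -> list:
    """Every dictionary word at every start offset, longest first (ik_max_word-like)."""
    tokens = []
    for i in range(len(text)):
        for n in range(min(MAX_LEN, len(text) - i), 0, -1):
            piece = text[i:i + n]
            if piece in DICT:
                tokens.append(piece)
    return tokens


text = "中华人民共和国国歌"
print(coarse_split(text))  # ['中华人民共和国', '国歌']
print(fine_split(text))
# ['中华人民共和国', '中华人民', '中华', '华人', '人民共和国', '人民', '共和国', '共和', '国歌']
```

The fine-grained list overlaps heavily, which is why it suits exact-token (term) matching, while the coarse list keeps whole words for phrase-style matching.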

2.1 Coarsest-grained split

```
# ik_smart: coarsest-grained split
POST _analyze
{
  "analyzer":"ik_smart",
  "text":"中华人民共和国国歌"
}

# Result
{
  "tokens" : [
    { "token" : "中华人民共和国", "start_offset" : 0, "end_offset" : 7, "type" : "CN_WORD", "position" : 0 },
    { "token" : "国歌", "start_offset" : 7, "end_offset" : 9, "type" : "CN_WORD", "position" : 1 }
  ]
}
```

2.2 Finest-grained split

```
# ik_smart: coarsest-grained split
POST _analyze
{
  "analyzer":"ik_smart",
  "text":"中华人民共和国"
}

# ik_max_word: finest-grained split
POST _analyze
{
  "analyzer":"ik_max_word",
  "text":"中华人民共和国国歌"
}
```

```
# Result (ik_max_word)
{
  "tokens" : [
    { "token" : "中华人民共和国", "start_offset" : 0, "end_offset" : 7, "type" : "CN_WORD", "position" : 0 },
    { "token" : "中华人民", "start_offset" : 0, "end_offset" : 4, "type" : "CN_WORD", "position" : 1 },
    { "token" : "中华", "start_offset" : 0, "end_offset" : 2, "type" : "CN_WORD", "position" : 2 },
    { "token" : "华人", "start_offset" : 1, "end_offset" : 3, "type" : "CN_WORD", "position" : 3 },
    { "token" : "人民共和国", "start_offset" : 2, "end_offset" : 7, "type" : "CN_WORD", "position" : 4 },
    { "token" : "人民", "start_offset" : 2, "end_offset" : 4, "type" : "CN_WORD", "position" : 5 },
    { "token" : "共和国", "start_offset" : 4, "end_offset" : 7, "type" : "CN_WORD", "position" : 6 },
    { "token" : "共和", "start_offset" : 4, "end_offset" : 6, "type" : "CN_WORD", "position" : 7 },
    { "token" : "国", "start_offset" : 6, "end_offset" : 7, "type" : "CN_CHAR", "position" : 8 },
    { "token" : "国歌", "start_offset" : 7, "end_offset" : 9, "type" : "CN_WORD", "position" : 9 }
  ]
}
```

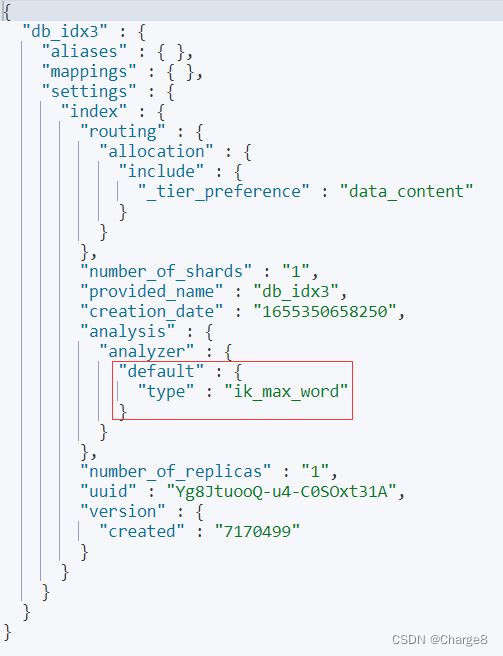

3. Setting the analyzer for an index

When creating an index, we can make the IK analyzer the index's default analyzer.

```
PUT /db_idx3
{
  "settings":{
    "index":{
      "analysis.analyzer.default.type":"ik_max_word"
    }
  }
}
```
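The key analysis.analyzer.default.type uses Elasticsearch's flat (dotted) settings notation, which Elasticsearch treats the same as the nested form {"analysis": {"analyzer": {"default": {"type": "ik_max_word"}}}} under index. The small Python sketch below expands dotted keys into nested objects to show the equivalence; the helper expand_dotted is hypothetical and only manipulates JSON locally.

```python
import json


def expand_dotted(settings: dict) -> dict:
    """Turn {"a.b.c": v} into {"a": {"b": {"c": v}}}, recursing into nested dicts."""
    result = {}
    for key, value in settings.items():
        if isinstance(value, dict):
            value = expand_dotted(value)
        node = result
        *parents, leaf = key.split(".")
        for part in parents:
            # Reuse an existing branch so keys sharing a prefix are merged.
            node = node.setdefault(part, {})
        node[leaf] = value
    return result


flat = {"settings": {"index": {"analysis.analyzer.default.type": "ik_max_word"}}}
nested = expand_dotted(flat)
print(json.dumps(nested, indent=2))
```

To see what ES actually stored, GET /db_idx3/_settings returns the nested form, and adding ?flat_settings=true returns the dotted form.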

1) Insert some documents

```
PUT /db_idx3/_doc/1
{
  "name":"赵云",
  "sex":1,
  "age":18,
  "desc":"王者打野位"
}

PUT /db_idx3/_doc/2
{
  "name":"韩信",
  "sex":1,
  "age":19,
  "desc":"打野野王"
}

PUT /db_idx3/_doc/3
{
  "name":"赵子龙",
  "sex":1,
  "age":20,
  "desc":"常山五虎赵子龙"
}
```

2) Analyzing a desc value with ik_max_word (finest-grained split)

```
# ik_max_word: finest-grained split
POST _analyze
{
  "analyzer":"ik_max_word",
  "text":"王者打野位"
}

# Result
{
  "tokens" : [
    { "token" : "王者", "start_offset" : 0, "end_offset" : 2, "type" : "CN_WORD", "position" : 0 },
    { "token" : "打野", "start_offset" : 2, "end_offset" : 4, "type" : "CN_WORD", "position" : 1 },
    { "token" : "位", "start_offset" : 4, "end_offset" : 5, "type" : "CN_CHAR", "position" : 2 }
  ]
}
```

3) Term query with highlighting

```
GET /db_idx3/_search
{
  "query":{
    "term":{
      "desc": {
        "value":"打野"
      }
    }
  },
  "highlight": {
    "fields": {
      "*":{}
    }
  }
}
```
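A term query does not analyze its input: it looks up "打野" as-is among the tokens stored for the desc field. Because ik_max_word indexed "王者打野位" as 王者 / 打野 / 位 (see the _analyze output above), document 1 matches. The Python sketch below models this with a tiny in-memory inverted index built from that token list; it is a simplified model of exact-token lookup, not how Lucene stores postings, and only document 1's tokens are taken from this article.

```python
from collections import defaultdict

# Tokens for doc 1's desc, copied from the ik_max_word _analyze output above.
analyzed_desc = {1: ["王者", "打野", "位"]}

# Inverted index: token -> set of document ids containing it.
inverted = defaultdict(set)
for doc_id, tokens in analyzed_desc.items():
    for token in tokens:
        inverted[token].add(doc_id)


def term_query(value: str) -> set:
    """Exact lookup of the unanalyzed value, like an Elasticsearch term query."""
    # dict.get does not trigger defaultdict's factory, so misses add no entries.
    return inverted.get(value, set())


print(term_query("打野"))      # {1}
print(term_query("打"))        # set(): "打" alone was never indexed as a token
print(term_query("王者打野"))  # set(): the whole phrase is not a single token
```

A match query, by contrast, runs the query text through the analyzer first, so a phrase like "王者打野" can still match once it is broken into tokens.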

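II. Bulk document operations

The bulk API lets you perform multiple create, index, update, or delete requests in a single step:

- One API call can operate on different indices

- The failure of a single operation does not affect the others

- The response includes the result of every individual operation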
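Since one failed action does not abort the others, a client should inspect the response body rather than rely on the HTTP status alone. The bulk response carries a top-level errors flag and an items array with one entry per action, keyed by the action name and containing status and, on failure, an error object. The Python sketch below collects the failed items; the helper failed_items is hypothetical, and the sample response is hand-written for illustration and trimmed to the relevant fields.

```python
def failed_items(response: dict) -> list:
    """Return (action, _id, status, error type) for every failed bulk item."""
    if not response.get("errors"):
        return []
    failures = []
    for item in response["items"]:
        # Each item has exactly one key: the action name (create/index/update/delete).
        action, result = next(iter(item.items()))
        if "error" in result:
            failures.append((action, result["_id"], result["status"], result["error"]["type"]))
    return failures


# Hand-written, trimmed sample: the first create conflicts, the second succeeds.
sample_response = {
    "took": 5,
    "errors": True,
    "items": [
        {"create": {"_index": "db_idx4", "_id": "1", "status": 409,
                    "error": {"type": "version_conflict_engine_exception"}}},
        {"create": {"_index": "db_idx4", "_id": "2", "status": 201, "result": "created"}},
    ],
}

print(failed_items(sample_response))
# [('create', '1', 409, 'version_conflict_engine_exception')]
```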

Basic format:

```
{ action: { metadata }}\n
{ request body }\n
{ action: { metadata }}\n
{ request body }\n
...
```
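Each action line and each body line is a separate single-line JSON object, and the payload must end with a newline: the request is newline-delimited JSON, not one JSON document. A delete action has no body line. The Python sketch below assembles such a payload from (action, metadata, source) tuples; the helper build_bulk_body is hypothetical, and actually sending it (POST _bulk with Content-Type: application/x-ndjson) is left out.

```python
import json


def build_bulk_body(operations) -> str:
    """Serialize (action, metadata, source) tuples into an NDJSON _bulk payload.

    source is None for delete, which has no request-body line.
    """
    lines = []
    for action, metadata, source in operations:
        # No indent: each JSON object must stay on one line.
        lines.append(json.dumps({action: metadata}, ensure_ascii=False))
        if source is not None:
            lines.append(json.dumps(source, ensure_ascii=False))
    # Every line, including the last, must end with a newline.
    return "\n".join(lines) + "\n"


ops = [
    ("create", {"_index": "db_idx4", "_id": 1}, {"id": 1, "name": "赵子龙1"}),
    ("update", {"_index": "db_idx4", "_id": 1}, {"doc": {"age": 99}}),
    ("delete", {"_index": "db_idx4", "_id": 1}, None),
]
print(build_bulk_body(ops), end="")
```

ensure_ascii=False keeps the Chinese field values readable in the payload; escaped \uXXXX sequences would be equally valid JSON.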

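Note: the create, index, delete, and update action types can also be mixed in a single request. A delete action has no request body line. The examples below keep "_type":"_doc" in the metadata; specifying a type is deprecated in 7.x (where the type is always _doc), can be omitted, and is no longer accepted in 8.x.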

1. create: batch-create documents

```
POST _bulk
{"create":{"_index":"db_idx4", "_type":"_doc", "_id":1}}
{"id":1,"name":"赵子龙1","sex":1,"age":18,"desc":"常山五虎赵子龙1"}
{"create":{"_index":"db_idx4", "_type":"_doc", "_id":2}}
{"id":2,"name":"赵子龙2","sex":1,"age":19,"desc":"常山五虎赵子龙2"}
```

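- If the document does not exist, it is created.

- If the document already exists, that action fails with a "document already exists" error (version_conflict_engine_exception, status 409).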

2. index: create or fully replace

```
POST _bulk
{"index":{"_index":"db_idx4", "_type":"_doc", "_id":2}}
{"id":2,"name":"赵子龙222","sex":1,"desc":"常山五虎赵子龙222"}
{"index":{"_index":"db_idx4", "_type":"_doc", "_id":3}}
{"id":3,"name":"赵子龙3","sex":1,"age":20,"desc":"常山五虎赵子龙3"}
```

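- If the document does not exist, it is created.

- If the document already exists, it is replaced in full; for example, document 2 above no longer has an age field after this request.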

3. update: batch partial updates

```
POST _bulk
{"update":{"_index":"db_idx4", "_type":"_doc", "_id":2}}
{"doc":{"age":99, "desc":"常山五虎赵子龙update", "title":"五虎上将"}}
{"update":{"_index":"db_idx4", "_type":"_doc", "_id":3}}
{"doc":{"desc":"常山五虎赵子龙3update"}}
```

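- A document with that id must already exist (otherwise the action fails with document_missing_exception); only the supplied fields are changed.

- Fields in doc that the original document lacks (such as title above) are added.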
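The create/index/update rules above behave like a small state machine over a document store. The Python sketch below is an in-memory model only (no Elasticsearch involved) that mimics the described outcomes: create fails on an existing id, index replaces the whole source, and update merges fields and fails on a missing id. The status codes follow Elasticsearch's usual 201/200/409/404 responses, but the apply function itself is hypothetical and ignores details such as no-op updates.

```python
def apply(store: dict, action: str, doc_id, body: dict):
    """Apply one bulk-style action to an in-memory store; return (status, outcome)."""
    exists = doc_id in store
    if action == "create":
        if exists:
            return 409, "version_conflict_engine_exception"  # document already exists
        store[doc_id] = dict(body)
        return 201, "created"
    if action == "index":
        store[doc_id] = dict(body)  # full replacement: fields missing from body are dropped
        return (200, "updated") if exists else (201, "created")
    if action == "update":
        if not exists:
            return 404, "document_missing_exception"
        store[doc_id].update(body["doc"])  # shallow merge; new fields are added
        return 200, "updated"
    raise ValueError(f"unsupported action: {action}")


store = {}
print(apply(store, "create", 2, {"id": 2, "name": "赵子龙2", "age": 19}))  # (201, 'created')
print(apply(store, "create", 2, {"id": 2}))  # (409, 'version_conflict_engine_exception')
print(apply(store, "index", 2, {"id": 2, "name": "赵子龙222"}))  # (200, 'updated')
print(store[2])  # {'id': 2, 'name': '赵子龙222'}  (age is gone)
print(apply(store, "update", 2, {"doc": {"age": 99, "title": "五虎上将"}}))  # (200, 'updated')
print(apply(store, "update", 9, {"doc": {"age": 1}}))  # (404, 'document_missing_exception')
```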

4. delete: batch delete

```
POST _bulk
{"delete":{"_index":"db_idx4", "_type":"_doc", "_id":2}}
{"delete":{"_index":"db_idx4", "_type":"_doc", "_id":3}}
```

5. Combining actions

```
POST _bulk
{"create":{"_index":"db_idx4", "_type":"_doc", "_id":2}}
{"id":2,"name":"赵子龙2","sex":1,"age":19,"desc":"常山五虎赵子龙2"}
{"index":{"_index":"db_idx4", "_type":"_doc", "_id":3}}
{"id":3,"name":"赵子龙3","sex":1,"age":20,"desc":"常山五虎赵子龙3"}
{"update":{"_index":"db_idx4", "_type":"_doc", "_id":3}}
{"doc":{"age":99, "desc":"常山五虎赵子龙update", "title":"五虎上将"}}
{"delete":{"_index":"db_idx4", "_type":"_doc", "_id":2}}
```

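6. Batch reads

ES offers two batch read APIs: _mget and _msearch.

- _mget: requires the document IDs; it can fetch from different indices and can restrict the returned _source fields.

- _msearch: runs several searches, each with its own query conditions, in one request.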

6.1 _mget

```
# 1. Fetch documents by ID across different indices and types
GET _mget
{
  "docs":[
    {
      "_index":"db_idx4",
      "_type":"_doc",
      "_id":1
    },
    {
      "_index":"db_idx4",
      "_type":"_doc",
      "_id":3
    }
  ]
}
```

```
# 2. Fetch documents by ID from a single index
GET /db_idx4/_mget
{
  "docs":[
    {"_id":1},
    {"_id":3}
  ]
}
```

```
# 3. Simplified form of the previous request
GET /db_idx4/_mget
{
  "ids":[1,3]
}
```
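An _mget response returns one entry per requested ID, in request order, and an ID that does not exist is reported with "found": false instead of failing the whole request. The Python sketch below splits such a response into found sources and missing IDs; the helper split_mget is hypothetical, and the sample response is hand-written and trimmed, with ID 2 assumed missing.

```python
def split_mget(response: dict):
    """Return ({_id: _source} for found docs, [ids that were not found])."""
    found, missing = {}, []
    for doc in response["docs"]:
        if doc.get("found"):
            found[doc["_id"]] = doc["_source"]
        else:
            missing.append(doc["_id"])
    return found, missing


# Hand-written, trimmed sample: ID 1 exists, ID 2 does not.
sample = {
    "docs": [
        {"_index": "db_idx4", "_id": "1", "found": True,
         "_source": {"id": 1, "name": "赵子龙1"}},
        {"_index": "db_idx4", "_id": "2", "found": False},
    ]
}

found, missing = split_mget(sample)
print(found)    # {'1': {'id': 1, 'name': '赵子龙1'}}
print(missing)  # ['2']
```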

6.2 _msearch

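The _msearch request format is similar to bulk. Each search takes two objects:

- The first sets the index (and type); when querying the index given in the URL, an empty object {} is enough.

- The second is the query itself, written the same way as a _search body.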

```
GET /db_idx4/_msearch
{}
{"query": {"match_all":{}}, "from" : 0, "size" : 2}

GET _msearch
{"index":"db_idx4"}
{"query": {"match_all" : {}}, "size" : 1}
```
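As with bulk, an _msearch body is newline-delimited JSON made of header/query pairs and must end with a newline. The Python sketch below builds the body of the second request above from a list of (header, query) pairs; the helper build_msearch_body is hypothetical, and an empty header {} means "use the index in the URL".

```python
import json


def build_msearch_body(searches) -> str:
    """Serialize (header, query) pairs into an NDJSON _msearch payload."""
    lines = []
    for header, query in searches:
        lines.append(json.dumps(header))  # e.g. {} or {"index": "db_idx4"}
        lines.append(json.dumps(query))   # same syntax as a _search body
    return "\n".join(lines) + "\n"


body = build_msearch_body([
    ({"index": "db_idx4"}, {"query": {"match_all": {}}, "size": 1}),
])
print(body, end="")
# {"index": "db_idx4"}
# {"query": {"match_all": {}}, "size": 1}
```

The response contains a responses array with one search result per pair, in request order.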
