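MIT 6.824 Distributed Systems Lab 2D: Raft

Lab 2D is the final stage of Lab 2. It adds a snapshot mechanism. Before you start, I recommend reading up on when and how snapshots are taken in this lab, otherwise you will run into all sorts of problems. For example, during testing I found the leader inexplicably deadlocked, and the tester reported that the lastApplied index did not match the CommandIndex.

The code changes for this lab are fairly extensive, because they touch the indexing of rf.log and the log replication logic. Writing Lab 2D felt much like the earlier labs: run the tests, find a bug, patch it, repeat.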

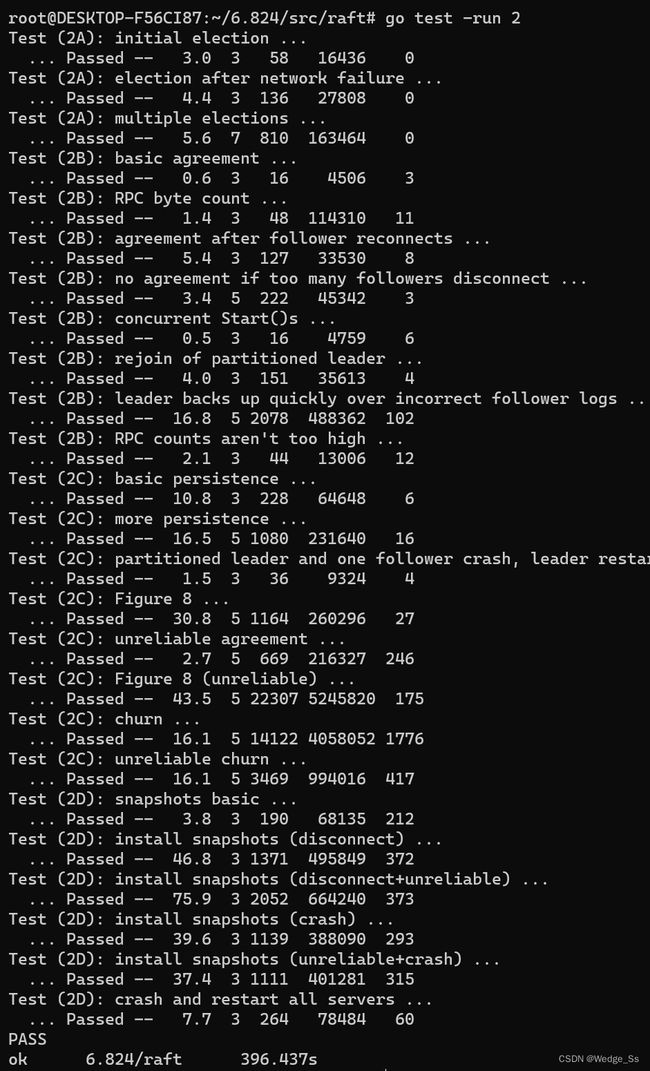

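In addition, this lab requires passing all of the Lab 2A, 2B, 2C, and 2D tests. Along the way I found a few bugs left over from earlier stages and fixed them too; in the end all tests passed.

Introduction

Lab 2D asks us to implement snapshots: a node saves its state after applying commands and discards the log entries that the snapshot covers. This keeps the log from taking up too much memory on each node, and it means a restarted node does not have to replay a large number of log entries from the beginning. It only needs to load the snapshot and replay the entries that follow it.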

go test -run 2 # runs all of the Lab 2 tests

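Notes (hints from the lab handout)

1. The handout suggests first adding the lastIncludedIndex variable, modifying the code to work with it, and then re-running the Lab 2B and 2C tests.

2. Because some log entries are discarded after a snapshot, we need to record the index of the last entry covered by the snapshot, lastIncludedIndex, and use it to locate the entry with a given index inside the rf.log slice.

3. When a follower's log lags so far behind that some of the entries it is missing have already been folded into the leader's snapshot, the leader must send that follower an InstallSnapshot RPC.

4. The paper sends a snapshot in chunks, but this lab does not need that, so the offset mechanism should not be implemented here.

5. After a snapshot, each node must discard the log entries that precede it so that their memory can be released; once discarded, those entries should no longer be reachable.

6. After rf.log has been trimmed, the leader still has to send prevLogIndex and prevLogTerm in AppendEntries RPCs. Be careful here: a snapshot may leave rf.log with no entries at all, in which case these values must come from lastIncludedIndex and lastIncludedTerm. For that reason lastIncludedIndex and lastIncludedTerm must also be persisted.

7. When the lab is complete, the code should pass not only the Lab 2D tests but all of the Lab 2 tests in a single run.

8. The handout advises against implementing CondInstallSnapshot; implementing the InstallSnapshot RPC is enough.

Those are the hints from the handout. In the implementation sections below I also note the situations I ran into and the points that need attention.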

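Tasks in this lab

- Adjust the Raft struct
- Adjust the persist function
- Adjust the readPersist function
- Implement the Snapshot function
- Implement the InstallSnapshot RPC
- Adjust the AppendEntries function
- Adjust the Start function
- Adjust the ticker function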

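Implementation

Note: almost every place that accesses an entry through the rf.log slice now needs rf.lastIncludedIndex. Starting from the code of the earlier stages, expressions such as rf.log[j] and rf.log[rf.lastApplied] must become rf.log[j-rf.lastIncludedIndex] and rf.log[rf.lastApplied-rf.lastIncludedIndex].

Adjusting the Raft struct

With snapshots, rf.log can be trimmed, so we need to record lastIncludedIndex and lastIncludedTerm: the index and term of the last log entry that the snapshot replaced. Every lookup of a given index in rf.log uses them. For example, the entry with index n is rf.log[n-rf.lastIncludedIndex]. I therefore add both variables directly to the Raft struct, where the rf.mu mutex guards them against concurrent access from different goroutines.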
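The index translation described above can be wrapped in one helper so that the subtraction is not repeated at every call site. The following is a minimal sketch, not part of the lab skeleton or of my code below: the helper name slot is hypothetical, Command is simplified to a string, and position 0 of the slice is assumed to hold a placeholder entry.

```go
package main

import "fmt"

// LogEntry mirrors the shape used in this post; Command is simplified to a string.
type LogEntry struct {
	Index   int
	Term    int
	Command string
}

// slot (hypothetical helper) converts a logical Raft index into a position in
// the trimmed slice. Position 0 is a placeholder, so only positions >= 1 hold
// real entries. It returns false when the entry was compacted into the
// snapshot or lies past the end of the log.
func slot(log []LogEntry, lastIncludedIndex, index int) (int, bool) {
	pos := index - lastIncludedIndex
	if pos < 1 || pos >= len(log) {
		return 0, false
	}
	return pos, true
}

func main() {
	// The snapshot covers indexes 1..5; entries 6 and 7 follow the placeholder.
	log := []LogEntry{{}, {Index: 6, Term: 2, Command: "x"}, {Index: 7, Term: 3, Command: "y"}}
	if pos, ok := slot(log, 5, 7); ok {
		fmt.Println(pos, log[pos].Command)
	}
	_, ok := slot(log, 5, 4)
	fmt.Println(ok)
}
```

Returning a boolean instead of indexing directly turns an out-of-range access into a condition the caller has to handle, which is where most of my Lab 2D panics came from.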

The code is as follows:

type Raft struct {

mu sync.Mutex // Lock to protect shared access to this peer's state

peers []*labrpc.ClientEnd // RPC end points of all peers

persister *Persister // Object to hold this peer's persisted state

me int // this peer's index into peers[]

dead int32 // set by Kill()

// Your data here (2A, 2B, 2C).

// Look at the paper's Figure 2 for a description of what

// state a Raft server must maintain.

peerNum int

// persistent state

currentTerm int

voteFor int

log []LogEntry

lastIncludedIndex int

lastIncludedTerm int

// volatile state

commitIndex int

lastApplied int

// lastHeardTime time.Time

state string

lastLogIndex int

lastLogTerm int

// send each committed command to applyCh

applyCh chan ApplyMsg

// Candidate synchronize election with condition variable

mesMutex sync.Mutex // used to lock variable opSelect

messageCond *sync.Cond

// opSelect == 1 -> start election, opSelect == -1 -> stay still, opSelect == 2 -> be a leader, opSelect == 3 -> election timeout

opSelect int

// special state for leader

nextIndex []int

matchIndex []int

}

Adjusting the persist function

lastIncludedIndex and lastIncludedTerm must be persisted. Note also that the node's log is no longer complete; it is the log as trimmed by snapshots.
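To see why the encode order and the decode order must agree, here is a minimal round-trip sketch. It uses the standard library's encoding/gob as a stand-in for the lab's labgob, and the names persistentState, encodeState, and decodeState are hypothetical; Command is simplified to a string so that no gob type registration is needed.

```go
package main

import (
	"bytes"
	"encoding/gob"
	"fmt"
)

type LogEntry struct {
	Index   int
	Term    int
	Command string
}

// persistentState (hypothetical) groups the five values persisted in Lab 2D.
type persistentState struct {
	CurrentTerm       int
	VoteFor           int
	Log               []LogEntry
	LastIncludedIndex int
	LastIncludedTerm  int
}

// encodeState writes the fields one by one; the decoder must use the same order.
func encodeState(s persistentState) ([]byte, error) {
	var buf bytes.Buffer
	enc := gob.NewEncoder(&buf)
	fields := []interface{}{s.CurrentTerm, s.VoteFor, s.Log, s.LastIncludedIndex, s.LastIncludedTerm}
	for _, f := range fields {
		if err := enc.Encode(f); err != nil {
			return nil, err
		}
	}
	return buf.Bytes(), nil
}

// decodeState reads the fields back in exactly the order they were written.
func decodeState(data []byte) (persistentState, error) {
	var s persistentState
	dec := gob.NewDecoder(bytes.NewReader(data))
	targets := []interface{}{&s.CurrentTerm, &s.VoteFor, &s.Log, &s.LastIncludedIndex, &s.LastIncludedTerm}
	for _, t := range targets {
		if err := dec.Decode(t); err != nil {
			return persistentState{}, err
		}
	}
	return s, nil
}

func main() {
	in := persistentState{
		CurrentTerm:       4,
		VoteFor:           1,
		Log:               []LogEntry{{}, {Index: 11, Term: 4, Command: "put"}},
		LastIncludedIndex: 10,
		LastIncludedTerm:  3,
	}
	data, err := encodeState(in)
	if err != nil {
		panic(err)
	}
	out, err := decodeState(data)
	if err != nil {
		panic(err)
	}
	fmt.Println(out.LastIncludedIndex, out.LastIncludedTerm, len(out.Log))
}
```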

The code is as follows:

func (rf *Raft) persist() {

// Your code here (2C).

// Example:

// w := new(bytes.Buffer)

// e := labgob.NewEncoder(w)

// e.Encode(rf.xxx)

// e.Encode(rf.yyy)

// data := w.Bytes()

// rf.persister.SaveRaftState(data)

w := new(bytes.Buffer)

e := labgob.NewEncoder(w)

e.Encode(rf.currentTerm)

e.Encode(rf.voteFor)

e.Encode(rf.log)

e.Encode(rf.lastIncludedIndex)

e.Encode(rf.lastIncludedTerm)

data := w.Bytes()

rf.persister.SaveRaftState(data)

// fmt.Printf("%v raft%d persists its state, term:%d, voteFor:%d, logLength:%d\n", time.Now(), rf.me, rf.currentTerm, rf.voteFor, len(rf.log))

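}

Adjusting the readPersist function

Note:

Before snapshots, a node restarting after a crash sets rf.commitIndex and rf.lastApplied to 0. Its log is untrimmed and complete, so as the leader advances commitIndex the node re-applies the commands in its log and recovers its state.

With snapshots, the log has been trimmed, so a restarting node first loads the snapshot to recover its state. It then reads the persisted lastIncludedIndex and lastIncludedTerm, which tell it which entries were trimmed and which commitIndex and lastApplied the snapshot state corresponds to. So if a snapshot exists, commitIndex and lastApplied must not be 0 after a restart.

In addition, the snapshot may have trimmed away every entry, so lastLogIndex and lastLogTerm cannot be obtained from rf.log. In that case they should equal lastIncludedIndex and lastIncludedTerm.

These are the points where the function needs to change.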
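The recovery rules above fit into one small pure function. This is a sketch under the same assumption as the rest of the post (position 0 of the log is a placeholder, so a length of 1 means "no real entries"); the names volatileState and recoverVolatile are hypothetical.

```go
package main

import "fmt"

type LogEntry struct {
	Index   int
	Term    int
	Command string
}

// volatileState (hypothetical) holds the values that are rebuilt after a restart.
type volatileState struct {
	CommitIndex  int
	LastApplied  int
	LastLogIndex int
	LastLogTerm  int
}

// recoverVolatile derives the volatile state from what was persisted.
// Everything starts at the snapshot boundary; if real entries remain after
// the placeholder, the last of them supplies lastLogIndex and lastLogTerm.
func recoverVolatile(log []LogEntry, lastIncludedIndex, lastIncludedTerm int) volatileState {
	v := volatileState{
		CommitIndex:  lastIncludedIndex,
		LastApplied:  lastIncludedIndex,
		LastLogIndex: lastIncludedIndex,
		LastLogTerm:  lastIncludedTerm,
	}
	if n := len(log); n > 1 {
		v.LastLogIndex = log[n-1].Index
		v.LastLogTerm = log[n-1].Term
	}
	return v
}

func main() {
	// Snapshot through index 10 (term 3); the log was trimmed to the placeholder only.
	fmt.Printf("%+v\n", recoverVolatile([]LogEntry{{}}, 10, 3))
	// Same snapshot, but entries 11 and 12 survive after it.
	log := []LogEntry{{}, {Index: 11, Term: 3}, {Index: 12, Term: 4}}
	fmt.Printf("%+v\n", recoverVolatile(log, 10, 3))
}
```

With no snapshot at all (both lastIncluded values 0) the function returns all zeros for a placeholder-only log, which matches the pre-snapshot restart behavior.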

The code is as follows:

func (rf *Raft) readPersist(data []byte) {

if data == nil || len(data) < 1 { // bootstrap without any state?

return

}

r := bytes.NewBuffer(data)

d := labgob.NewDecoder(r)

var term int

var voteFor int

var log []LogEntry

var lastIncludedIndex int

var lastIncludedTerm int

if d.Decode(&term) != nil || d.Decode(&voteFor) != nil || d.Decode(&log) != nil || d.Decode(&lastIncludedIndex) != nil || d.Decode(&lastIncludedTerm) != nil {

// fmt.Printf("Error: raft%d readPersist.", rf.me)

} else {

rf.mu.Lock()

rf.currentTerm = term

rf.voteFor = voteFor

rf.log = log

rf.lastIncludedIndex = lastIncludedIndex

rf.lastIncludedTerm = lastIncludedTerm

rf.commitIndex = lastIncludedIndex

rf.lastApplied = lastIncludedIndex

var logLength = len(rf.log)

// fmt.Printf("%v raft%d readPersist, term:%d, voteFor:%d, logLength:%d, lastIncludedIndex:%d, lastIncludedTerm:%d\n", time.Now(), rf.me, rf.currentTerm, rf.voteFor, logLength, lastIncludedIndex, lastIncludedTerm)

if logLength == 1 {

rf.lastLogIndex = rf.lastIncludedIndex

rf.lastLogTerm = rf.lastIncludedTerm

} else {

rf.lastLogTerm = rf.log[logLength-1].Term

rf.lastLogIndex = rf.log[logLength-1].Index

}

rf.mu.Unlock()

}

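}

Implementing the Snapshot function

This function is called locally on a node to create a new snapshot.

The flow is: check whether the requested snapshot is stale and return immediately if so; then trim the node's log according to the index the snapshot covers. Creating the snapshot changes state that must be persisted (rf.log, rf.lastIncludedIndex, rf.lastIncludedTerm), so the updated state has to be saved along with the snapshot. The handout recommends persister.SaveStateAndSnapshot for this.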
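The trimming step can be sketched as a pure function that computes the position arithmetically instead of scanning the log. This is an illustration rather than the code I submitted: the name compact is hypothetical, and it assumes position 0 is a placeholder and that entries are stored contiguously. The kept entries are copied into a fresh slice so that the old backing array, and with it the discarded entries, can be garbage collected.

```go
package main

import "fmt"

type LogEntry struct {
	Index   int
	Term    int
	Command string
}

// compact (hypothetical) returns the log that remains after snapshotting
// through index, plus the term of the entry at index. ok is false when the
// request is stale (index already inside the snapshot) or beyond the log.
func compact(log []LogEntry, lastIncludedIndex, index int) (newLog []LogEntry, term int, ok bool) {
	pos := index - lastIncludedIndex
	if pos < 1 || pos >= len(log) {
		return log, 0, false
	}
	term = log[pos].Term
	// Fresh slice: one placeholder followed by the entries after pos.
	newLog = make([]LogEntry, 1, len(log)-pos)
	newLog = append(newLog, log[pos+1:]...)
	return newLog, term, true
}

func main() {
	log := []LogEntry{{}, {Index: 1, Term: 1}, {Index: 2, Term: 1}, {Index: 3, Term: 2}, {Index: 4, Term: 2}, {Index: 5, Term: 3}}
	trimmed, term, ok := compact(log, 0, 3)
	fmt.Println(ok, term, len(trimmed), trimmed[1].Index)
	// A second request for an older index is stale and leaves the log alone.
	_, _, ok = compact(trimmed, 3, 2)
	fmt.Println(ok)
}
```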

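Note: in 6.824 the tester takes a snapshot every 10 commands. A node therefore must not hold rf.mu while it sends a committed command to applyCh. While the command is being delivered to applyCh, the tester may call Snapshot, and my Snapshot also needs rf.mu. The node would deadlock: Snapshot cannot acquire rf.mu, while the sender cannot go on applying commands (or do anything else) until Snapshot returns.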
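The locking pattern can be tried out in isolation. The sketch below is a toy model, not Raft: node, snapshot, applyUpTo, and runDemo are hypothetical names, the channel carries plain integers, and the consumer goroutine plays the tester by calling snapshot after every tenth message. The key point is that applyUpTo releases the mutex around each channel send.

```go
package main

import (
	"fmt"
	"sync"
)

// node is a toy stand-in for a Raft peer.
type node struct {
	mu          sync.Mutex
	lastApplied int
	snapshots   int
	applyCh     chan int
}

// snapshot stands in for Raft.Snapshot: it needs the mutex.
func (n *node) snapshot() {
	n.mu.Lock()
	n.snapshots++
	n.mu.Unlock()
}

// applyUpTo delivers indexes lastApplied+1..commit on applyCh and drops the
// lock around every send, so the receiver is free to call snapshot.
func (n *node) applyUpTo(commit int) {
	n.mu.Lock()
	for n.lastApplied < commit {
		n.lastApplied++
		msg := n.lastApplied
		n.mu.Unlock()
		n.applyCh <- msg
		n.mu.Lock()
	}
	n.mu.Unlock()
}

// runDemo applies commit messages while a consumer snapshots every 10th one.
func runDemo(commit int) (applied, snaps int) {
	n := &node{applyCh: make(chan int)}
	done := make(chan struct{})
	go func() {
		defer close(done)
		for i := range n.applyCh {
			if i%10 == 0 {
				n.snapshot()
			}
		}
	}()
	n.applyUpTo(commit)
	close(n.applyCh)
	<-done
	n.mu.Lock()
	defer n.mu.Unlock()
	return n.lastApplied, n.snapshots
}

func main() {
	fmt.Println(runDemo(25))
}
```

If the Unlock and Lock around the send are removed, the consumer blocks inside snapshot after message 10 while the sender blocks on the send of message 11 with the mutex held, which is the deadlock described above.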

func (rf *Raft) Snapshot(index int, snapshot []byte) {

// Your code here (2D).

rf.mu.Lock()

if index <= rf.lastIncludedIndex {

rf.mu.Unlock()

return

}

// fmt.Printf("%v raft%d persists and creates a snapshot from %d to %d\n", time.Now(), rf.me, rf.lastIncludedIndex, index)

for cutIndex, val := range rf.log {

if val.Index == index {

rf.lastIncludedIndex = index

rf.lastIncludedTerm = val.Term

rf.log = rf.log[cutIndex+1:]

var tempLogArray []LogEntry = make([]LogEntry, 1)

// make sure the log array is valid starting with index=1

rf.log = append(tempLogArray, rf.log...)

}

}

w := new(bytes.Buffer)

e := labgob.NewEncoder(w)

e.Encode(rf.currentTerm)

e.Encode(rf.voteFor)

e.Encode(rf.log)

e.Encode(rf.lastIncludedIndex)

e.Encode(rf.lastIncludedTerm)

data := w.Bytes()

rf.persister.SaveStateAndSnapshot(data, snapshot)

rf.mu.Unlock()

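}

Implementing the InstallSnapshot RPC

Once the leader has trimmed its log, some followers may be missing entries that the leader's snapshot has already absorbed. During log replication the leader must then call this RPC to send its snapshot to such a follower.

Implementing the InstallSnapshot RPC also requires defining the argument and reply structs used by the call.

Struct definitions for the InstallSnapshot RPC

The two structs follow directly from the paper: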

type InstallSnapshotArgs struct {

Term int

LeaderId int

LastIncludedIndex int

LastIncludedTerm int

Data []byte

}

type InstallSnapshotReply struct {

Term int

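}

Designing the InstallSnapshot function

Note: check whether the incoming snapshot is stale, so that an old snapshot does not replace a newer local one and roll the data back.

Also note that a node receiving a snapshot is in one of two situations. (1) args.LastIncludedIndex is greater than the node's lastLogIndex: the node simply deletes its whole log and updates its local state from args; its lastLogIndex becomes args.LastIncludedIndex and its lastLogTerm becomes args.LastIncludedTerm. (2) args.LastIncludedIndex is not greater than the node's lastLogIndex: the node only trims the entries up to and including LastIncludedIndex and leaves lastLogIndex unchanged.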
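The stale check and the two cases can be sketched as a method on a stripped-down follower. This is an illustration with hypothetical names (follower, install); it leaves out locking, persistence, and the ApplyMsg, and it assumes a placeholder at position 0 and contiguous entries. It follows the two cases exactly as described above; the paper additionally compares the term of the local entry at the snapshot index before keeping the suffix.

```go
package main

import "fmt"

type LogEntry struct {
	Index   int
	Term    int
	Command string
}

// follower (hypothetical) carries only the fields the snapshot touches.
type follower struct {
	log               []LogEntry
	lastIncludedIndex int
	lastIncludedTerm  int
	lastLogIndex      int
	lastLogTerm       int
	commitIndex       int
	lastApplied       int
}

// install applies a snapshot ending at (snapIndex, snapTerm).
// It returns false and changes nothing when the snapshot is stale.
func (f *follower) install(snapIndex, snapTerm int) bool {
	if snapIndex <= f.lastIncludedIndex {
		return false
	}
	if f.lastLogIndex < snapIndex {
		// Case 1: the snapshot reaches past the local log; drop everything.
		f.log = make([]LogEntry, 1)
		f.lastLogIndex, f.lastLogTerm = snapIndex, snapTerm
	} else {
		// Case 2: keep the entries that follow the snapshot.
		pos := snapIndex - f.lastIncludedIndex
		kept := make([]LogEntry, 1, len(f.log)-pos)
		f.log = append(kept, f.log[pos+1:]...)
	}
	f.lastIncludedIndex, f.lastIncludedTerm = snapIndex, snapTerm
	// Everything inside a snapshot is committed and applied.
	if f.lastApplied < snapIndex {
		f.lastApplied = snapIndex
	}
	if f.commitIndex < snapIndex {
		f.commitIndex = snapIndex
	}
	return true
}

func main() {
	f := &follower{
		log:          []LogEntry{{}, {Index: 1, Term: 1}, {Index: 2, Term: 1}, {Index: 3, Term: 2}, {Index: 4, Term: 2}},
		lastLogIndex: 4,
		lastLogTerm:  2,
	}
	fmt.Println(f.install(2, 1), len(f.log), f.lastLogIndex)  // case 2
	fmt.Println(f.install(10, 3), len(f.log), f.lastLogIndex) // case 1
	fmt.Println(f.install(5, 2))                              // stale
}
```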

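The handout states that when a node receives an InstallSnapshot, it must put the snapshot into an ApplyMsg and send that on applyCh. If the node only updates its local state from the received data without sending an ApplyMsg carrying the snapshot on applyCh, the tests fail.

As noted above, when the ApplyMsg is sent on applyCh the node must not hold rf.mu, to avoid a deadlock with the tester's call to Snapshot.

Those are the points to watch. The implementation is as follows: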

func (rf *Raft) InstallSnapshot(args *InstallSnapshotArgs, reply *InstallSnapshotReply) {

rf.mu.Lock()

reply.Term = rf.currentTerm

if reply.Term > args.Term {

rf.mu.Unlock()

return

}

if args.LastIncludedIndex > rf.lastIncludedIndex {

// fmt.Printf("%v raft%v install snapshot:%d to %d from leader%d \n", time.Now(), rf.me, rf.lastIncludedIndex, args.LastIncludedIndex, args.LeaderId)

rf.lastIncludedIndex = args.LastIncludedIndex

rf.lastIncludedTerm = args.LastIncludedTerm

if rf.lastLogIndex < args.LastIncludedIndex {

rf.lastLogIndex = args.LastIncludedIndex

rf.lastLogTerm = args.LastIncludedTerm

rf.log = rf.log[0:1]

} else {

for cutIndex, val := range rf.log {

if val.Index == args.LastIncludedIndex {

rf.log = rf.log[cutIndex+1:]

var tempLogArray []LogEntry = make([]LogEntry, 1)

// make sure the log array is valid starting with index=1

rf.log = append(tempLogArray, rf.log...)

}

}

}

if rf.lastApplied < rf.lastIncludedIndex {

rf.lastApplied = rf.lastIncludedIndex

}

if rf.commitIndex < rf.lastIncludedIndex {

rf.commitIndex = rf.lastIncludedIndex

}

w := new(bytes.Buffer)

e := labgob.NewEncoder(w)

e.Encode(rf.currentTerm)

e.Encode(rf.voteFor)

e.Encode(rf.log)

e.Encode(rf.lastIncludedIndex)

e.Encode(rf.lastIncludedTerm)

data := w.Bytes()

rf.persister.SaveStateAndSnapshot(data, args.Data)

var snapApplyMsg ApplyMsg

snapApplyMsg.SnapshotValid = true

snapApplyMsg.SnapshotIndex = args.LastIncludedIndex

snapApplyMsg.SnapshotTerm = args.LastIncludedTerm

snapApplyMsg.Snapshot = args.Data

// fmt.Printf("%v raft%d LII:%d, LIT:%d, LLI:%d, LLT:%d, LA:%d, CI:%d, len:%d\n", time.Now(), rf.me, rf.lastIncludedIndex, rf.lastIncludedTerm, rf.lastLogIndex, rf.lastLogTerm, rf.lastApplied, rf.commitIndex, len(rf.log))

rf.mu.Unlock()

rf.applyCh <- snapApplyMsg

} else {

// fmt.Printf("%v raft%v recv an out of date snapshot:%d to %d from leader%d \n", time.Now(), rf.me, rf.lastIncludedIndex, args.LastIncludedIndex, args.LeaderId)

rf.mu.Unlock()

}

}

Designing the sendInstallSnapshot function

This function is just the wrapper the leader uses to call InstallSnapshot; model it on the earlier send functions.

func (rf *Raft) sendInstallSnapshot(server int, args *InstallSnapshotArgs, reply *InstallSnapshotReply) bool {

ok := rf.peers[server].Call("Raft.InstallSnapshot", args, reply)

return ok

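}

Adjusting the AppendEntries function

When a follower searches for the log replication match point against the args sent by the leader, it starts logIndex at the last entry of its log and checks whether that entry is the match point; if not, it decrements logIndex and continues. If the search reaches logIndex = 0, so that not even the first entry held in memory matches, there are two cases. (1) The node has never taken a snapshot, so its log is untrimmed and none of its entries are valid. Replication must start from scratch: the follower reports the match point index=0, Term=0 so that the leader sends the log from the beginning. (2) The node has taken a snapshot. It must then compare lastIncludedIndex and lastIncludedTerm with args.PrevLogIndex and args.PrevLogTerm. If they match, the entries after the match point are copied straight into rf.log. If they do not match, the follower replies with lastIncludedTerm and lastIncludedIndex. Every entry in a snapshot is committed, so the leader must have it too, which makes this an exact match point for the leader.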
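For comparison, here is how the snapshot boundary enters the plain consistency check from Figure 2 of the paper. Note that this is the standard check, not the backward search my code below performs; termAt and prevMatches are hypothetical names, and a placeholder at position 0 is assumed. The boundary entry (lastIncludedIndex, lastIncludedTerm) is treated as a virtual entry that can still be compared even though it is no longer in the slice.

```go
package main

import "fmt"

type LogEntry struct {
	Index   int
	Term    int
	Command string
}

// termAt (hypothetical) returns the term known for a logical index.
// The snapshot boundary answers for index == lastIncludedIndex; anything
// older has been compacted and anything past the end is unknown.
func termAt(log []LogEntry, lastIncludedIndex, lastIncludedTerm, index int) (int, bool) {
	switch {
	case index == lastIncludedIndex:
		return lastIncludedTerm, true
	case index > lastIncludedIndex && index-lastIncludedIndex < len(log):
		return log[index-lastIncludedIndex].Term, true
	default:
		return 0, false
	}
}

// prevMatches reports whether the follower holds an entry at prevIndex whose
// term equals prevTerm, which is the acceptance condition for AppendEntries.
func prevMatches(log []LogEntry, lastIncludedIndex, lastIncludedTerm, prevIndex, prevTerm int) bool {
	t, ok := termAt(log, lastIncludedIndex, lastIncludedTerm, prevIndex)
	return ok && t == prevTerm
}

func main() {
	// Snapshot through index 5 (term 2); entries 6 and 7 remain.
	log := []LogEntry{{}, {Index: 6, Term: 2}, {Index: 7, Term: 3}}
	fmt.Println(prevMatches(log, 5, 2, 5, 2)) // matches the snapshot boundary
	fmt.Println(prevMatches(log, 5, 2, 7, 2)) // term differs at index 7
	fmt.Println(prevMatches(log, 5, 2, 3, 1)) // index 3 was compacted away
}
```

With no snapshot, both boundary values are 0, so prevIndex 0 with prevTerm 0 matches, which corresponds to the "start from scratch" case above.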

func (rf *Raft) AppendEntries(args *AppendEntriesArgs, reply *AppendEntriesReply) {

rf.mu.Lock()

defer rf.mu.Unlock()

reply.Term = rf.currentTerm

if args.Term < rf.currentTerm {

reply.Success = false

} else {

// fmt.Printf("raft%d receive ae from leader%d\n", rf.me, args.LeaderId)

if args.Entries == nil {

// if the args.Entries is empty, it means that the ae message is a heartbeat message.

if args.LeaderCommit > rf.commitIndex {

// fmt.Printf("%v raft%d update commitIndex from %d to %d\n", time.Now(), rf.me, rf.commitIndex, args.LeaderCommit)

rf.commitIndex = args.LeaderCommit

for rf.lastApplied < rf.commitIndex {

rf.lastApplied++

var applyMsg = ApplyMsg{}

applyMsg.Command = rf.log[rf.lastApplied-rf.lastIncludedIndex].Command

applyMsg.CommandIndex = rf.log[rf.lastApplied-rf.lastIncludedIndex].Index

applyMsg.CommandValid = true

// fmt.Printf("%v raft%d insert the msg%d into applyCh\n", time.Now(), rf.me, rf.lastApplied)

rf.mu.Unlock()

rf.applyCh <- applyMsg

// fmt.Printf("%v raft%d insert the msg%d into applyCh\n", time.Now(), rf.me, rf.lastApplied)

rf.mu.Lock()

}

}

reply.Success = true

} else {

// if the args.Entries is not empty, it means that we should update our entries to be aligned with leader's.

var match bool = false

if args.PrevLogTerm > rf.lastLogTerm {

reply.Term = rf.lastLogTerm

// fmt.Printf("%v 1 raft%d prevIndex:%d lastIndex:%d\n", time.Now(), rf.me, args.PrevLogIndex, rf.lastLogIndex)

reply.Success = false

} else if args.PrevLogTerm == rf.lastLogTerm {

if args.PrevLogIndex <= rf.lastLogIndex {

match = true

} else {

reply.Term = rf.lastLogTerm

reply.ConflictIndex = rf.lastLogIndex

// fmt.Printf("%v 2 raft%d prevIndex:%d lastIndex:%d\n", time.Now(), rf.me, args.PrevLogIndex, rf.lastLogIndex)

reply.Success = false

}

} else if args.PrevLogTerm < rf.lastLogTerm {

// ---------------key region--------------

var logIndex = len(rf.log) - 1

for logIndex >= 0 {

if rf.log[logIndex].Term > args.PrevLogTerm {

logIndex--

continue

}

if rf.log[logIndex].Term == args.PrevLogTerm {

reply.Term = args.PrevLogTerm

if rf.log[logIndex].Index >= args.PrevLogIndex {

match = true

} else {

// fmt.Printf("%v 3 raft%d prevIndex:%d lastIndex:%d\n", time.Now(), rf.me, args.PrevLogIndex, rf.lastLogIndex)

reply.ConflictIndex = rf.log[logIndex].Index

reply.Success = false

}

break

}

if logIndex == 0 && rf.lastIncludedIndex != 0 {

if rf.lastIncludedTerm == args.PrevLogTerm && rf.lastIncludedIndex == args.PrevLogIndex {

match = true

} else {

reply.Success = false

// fmt.Printf("%v 4 raft%d prevIndex:%d lastIndex:%d\n", time.Now(), rf.me, args.PrevLogIndex, rf.lastLogIndex)

reply.Term = rf.lastIncludedTerm

reply.ConflictIndex = rf.lastIncludedIndex

}

}

if rf.log[logIndex].Term < args.PrevLogTerm {

reply.Term = rf.log[logIndex].Term

// fmt.Printf("%v 5 raft%d prevIndex:%d lastIndex:%d\n", time.Now(), rf.me, args.PrevLogIndex, rf.lastLogIndex)

reply.Success = false

break

}

}

}

if match {

// Notice!!

// we need to consider a special situation: followers may receive an older log replication request, and followers should do nothing at that time

// so followers should ignore out-of-date log replication requests; otherwise they would synchronize with them and delete their latest entries

var length = len(args.Entries)

var index = args.PrevLogIndex + length

reply.Success = true

if index < rf.lastLogIndex {

// check if the ae is out-of-date

if index <= rf.lastIncludedIndex || args.Entries[length-1].Term == rf.log[index-rf.lastIncludedIndex].Term {

// fmt.Printf("%v raft%d receive a out-of-date ae and do nothing. prevLogIndex:%d, length:%d from leader%d\n", time.Now(), rf.me, args.PrevLogIndex, length, args.LeaderId)

return

}

}

// fmt.Printf("%v raft%d recv preIndex:%d,len:%d,leader:%d\n", time.Now(), rf.me, args.PrevLogIndex, length, args.LeaderId)

if args.PrevLogIndex+1 < rf.lastIncludedIndex {

for cutIndex, val := range args.Entries {

if val.Index == rf.lastIncludedIndex {

rf.log = make([]LogEntry, 1)

rf.log = append(rf.log, args.Entries[cutIndex+1:]...)

}

}

} else {

rf.log = rf.log[:args.PrevLogIndex+1-rf.lastIncludedIndex]

rf.log = append(rf.log, args.Entries...)

}

// fmt.Printf("%v raft%d log:%v\n", time.Now(), rf.me, rf.log)

var logLength = len(rf.log)

rf.lastLogIndex = rf.log[logLength-1].Index

rf.lastLogTerm = rf.log[logLength-1].Term

rf.persist()

}

}

if rf.currentTerm < args.Term {

// fmt.Printf("%v raft%d update term from %d to %d\n", time.Now(), rf.me, rf.currentTerm, args.Term)

}

rf.currentTerm = args.Term

rf.state = "follower"

rf.changeOpSelect(-1)

rf.messageCond.Broadcast()

}

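}

Adjusting the Start function

The changes here mainly handle followers whose logs lag so far behind that they need an InstallSnapshot.

Note: Start contains a loop that repeatedly calls sendAppendEntries to replicate the log to a follower. Each iteration releases and re-acquires the lock, and the node's state may have changed by the time the lock is held again. In Lab 2D the extra change to consider is a snapshot trimming rf.log. Likewise, after sendAppendEntries returns and the lock is re-acquired, check whether rf.lastIncludedIndex has changed before doing anything with rf.log; otherwise accesses to rf.log will go wrong.

So the following can happen: while the leader is searching for a follower's match point, it enters the next iteration and finds that it has taken a snapshot in the meantime. rf.log has been trimmed and nextIndex is now no greater than rf.lastIncludedIndex, meaning the match point must lie inside the snapshot. In that case the leader sends the snapshot directly.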
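The decision made at the top of each iteration can be sketched as a pure function. This is a simplified illustration, not the Start code below (which handles more edge cases): plan and planFor are hypothetical names, position 0 is assumed to be a placeholder, and nextIndex is assumed to be at most lastLogIndex+1. The function must be evaluated while holding the lock, after every re-acquisition, because lastIncludedIndex may have moved.

```go
package main

import "fmt"

type LogEntry struct {
	Index   int
	Term    int
	Command string
}

// plan (hypothetical) describes what the leader should send to one follower.
type plan struct {
	SendSnapshot bool
	PrevLogIndex int
	PrevLogTerm  int
	Entries      []LogEntry
}

// planFor decides between InstallSnapshot and AppendEntries for a follower
// whose next needed entry is nextIndex.
func planFor(log []LogEntry, lastIncludedIndex, lastIncludedTerm, nextIndex int) plan {
	if nextIndex <= lastIncludedIndex {
		// The entry the follower needs lives only in the snapshot.
		return plan{SendSnapshot: true}
	}
	prev := nextIndex - 1
	p := plan{PrevLogIndex: prev}
	if prev == lastIncludedIndex {
		// The previous entry is the snapshot boundary, not a slice element.
		p.PrevLogTerm = lastIncludedTerm
	} else {
		p.PrevLogTerm = log[prev-lastIncludedIndex].Term
	}
	// Copy the suffix so the RPC arguments do not alias rf.log.
	p.Entries = append([]LogEntry(nil), log[nextIndex-lastIncludedIndex:]...)
	return p
}

func main() {
	// Snapshot through index 5 (term 2); entries 6 and 7 remain.
	log := []LogEntry{{}, {Index: 6, Term: 2}, {Index: 7, Term: 3}}
	fmt.Printf("%+v\n", planFor(log, 5, 2, 4))
	fmt.Printf("%+v\n", planFor(log, 5, 2, 6))
	fmt.Printf("%+v\n", planFor(log, 5, 2, 7))
}
```

Copying the entries rather than slicing rf.log directly avoids sharing the backing array with a goroutine that sends the RPC after the lock has been released.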

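In addition, after the leader has sent a snapshot and the follower has updated its state, the leader must update its own record of that follower: both rf.matchIndex and rf.nextIndex.

In the normal case, when the leader searches for the replication match point with a follower it also decrements nextIndex step by step. If it finds nextIndex-1-rf.lastIncludedIndex == 0 and rf.lastIncludedIndex != 0, then even the first entry in the leader's local log is not the match point and the leader has trimmed rf.log with a snapshot. It must then check whether the last entry in the snapshot matches. If not, it first sends the snapshot to bring the lagging follower up to date and afterwards sends the entries it still holds locally.

Whenever Start hits the edge case nextIndex-1-rf.lastIncludedIndex == 0, we have to take into account that a snapshot may have trimmed rf.log.

Also, when Start applies committed messages locally by sending them on applyCh, it must not hold rf.mu; the consequences were described above.

The implementation is as follows: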

func (rf *Raft) Start(command interface{}) (int, int, bool) {

index := -1

term := -1

isLeader := true

// Your code here (2B).

_, isLeader = rf.GetState()

if !isLeader {

return index, term, isLeader

}

rf.mu.Lock()

// var length = len(rf.log)

// index = rf.log[length-1].Index + 1

rf.lastLogTerm = rf.currentTerm

rf.lastLogIndex = rf.nextIndex[rf.me]

index = rf.nextIndex[rf.me]

term = rf.lastLogTerm

var peerNum = rf.peerNum

var entry = LogEntry{Index: index, Term: term, Command: command}

rf.log = append(rf.log, entry)

// if command == 0 {

// fmt.Printf("%v leader%d send a command:%v to update followers' log, index:%d term:%d\n", time.Now(), rf.me, command, index, term)

// } else {

// fmt.Printf("%v leader%d receive a command:%v, index:%d term:%d\n", time.Now(), rf.me, command, index, term)

// }

// fmt.Printf("%v leader%d receive a command:%v, index:%d term:%d\n", time.Now(), rf.me, command, index, term)

rf.matchIndex[rf.me] = index

rf.nextIndex[rf.me] = index + 1

rf.persist()

// rf.mu.Unlock()

for i := 0; i < peerNum; i++ {

if i == rf.me {

continue

}

// rf.mu.Lock()

go func(id int, nextIndex int) {

var args = &AppendEntriesArgs{}

rf.mu.Lock()

if rf.currentTerm > term {

rf.mu.Unlock()

return

}

if rf.nextIndex[id] > nextIndex+1 {

// an out-of-date goroutine should not send the RPC, to save network bandwidth

rf.mu.Unlock()

return

}

args.Entries = make([]LogEntry, 0)

// if rf.nextIndex[id] < index {

// for j := rf.nextIndex[id] + 1; j <= index; j++ {

// args.Entries = append(args.Entries, rf.log[j])

// }

// }

if nextIndex < index {

for j := nextIndex + 1; j <= index; j++ {

args.Entries = append(args.Entries, rf.log[j-rf.lastIncludedIndex])

}

}

args.Term = term

args.LeaderId = rf.me

rf.mu.Unlock()

for {

var reply = &AppendEntriesReply{}

rf.mu.Lock()

if rf.currentTerm > term {

// fmt.Printf("%v raft%d is no longer leader and stop sending log to raft%d\n", time.Now(), rf.me, id)

rf.mu.Unlock()

return

}

if nextIndex <= rf.lastIncludedIndex {

if nextIndex != rf.lastIncludedIndex {

rf.mu.Unlock()

return

}

// fmt.Printf("%v leader%d send installsnapshot to raft%d 674\n", time.Now(), rf.me, id)

var snapArgs InstallSnapshotArgs

var snapReply InstallSnapshotReply

snapArgs.Term = rf.currentTerm

snapArgs.LastIncludedIndex = rf.lastIncludedIndex

snapArgs.LastIncludedTerm = rf.lastIncludedTerm

snapArgs.LeaderId = rf.me

snapArgs.Data = rf.persister.ReadSnapshot()

rf.mu.Unlock()

var count = 0

for {

if count == 3 {

return

}

if rf.sendInstallSnapshot(id, &snapArgs, &snapReply) {

break

}

count++

}

rf.mu.Lock()

if rf.currentTerm < snapReply.Term {

rf.currentTerm = snapReply.Term

rf.state = "follower"

rf.voteFor = -1

// fmt.Printf("%v raft%d sendInstallSnapshot finds a higher term, updates its term to %d\n", time.Now(), rf.me, snapReply.Term)

} else {

if rf.matchIndex[id] < snapArgs.LastIncludedIndex {

rf.matchIndex[id] = snapArgs.LastIncludedIndex

}

if rf.nextIndex[id] <= snapArgs.LastIncludedIndex {

rf.nextIndex[id] = snapArgs.LastIncludedIndex + 1

}

}

rf.mu.Unlock()

return

}

if nextIndex-1-rf.lastIncludedIndex == 0 && rf.lastIncludedIndex != 0 {

args.PrevLogIndex = rf.lastIncludedIndex

args.PrevLogTerm = rf.lastIncludedTerm

} else {

args.PrevLogIndex = rf.log[nextIndex-1-rf.lastIncludedIndex].Index

args.PrevLogTerm = rf.log[nextIndex-1-rf.lastIncludedIndex].Term

}

// fmt.Printf(" 679---nextIndex=%d, rf.lastIncludedIndex=%d\n", nextIndex, rf.lastIncludedIndex)

// args.PrevLogIndex = rf.log[nextIndex-1-rf.lastIncludedIndex].Index

// args.PrevLogTerm = rf.log[nextIndex-1-rf.lastIncludedIndex].Term

args.Entries = rf.log[nextIndex-rf.lastIncludedIndex : index+1-rf.lastIncludedIndex]

// args.Entries = append([]LogEntry{rf.log[nextIndex]}, args.Entries...)

// fmt.Printf("%v leader%d send log:%d-%d to raft%d\n", time.Now(), rf.me, nextIndex, index, id)

rf.mu.Unlock()

var count = 0

for {

if count == 3 {

return

}

// if sendAE failed, retry (up to 3 attempts in total)

if rf.sendAppendEntries(id, args, reply) {

break

}

count++

}

rf.mu.Lock()

if reply.Term > args.Term {

// fmt.Printf("%v when sending log leader%d find a higher term, term:%d\n", time.Now(), rf.me, args.Term)

if reply.Term > rf.currentTerm {

rf.currentTerm = reply.Term

rf.state = "follower"

rf.voteFor = -1

// fmt.Printf("%v raft%d sendAppendEntreis finds a higher term, updates its term to %d\n", time.Now(), rf.me, reply.Term)

rf.mu.Unlock()

break

}

// fmt.Printf("%v goroutine (term:%d, raft%d send log to raft%d) is out of date. Stop the goroutine.\n", time.Now(), args.Term, rf.me, id)

rf.mu.Unlock()

break

}

var ifSendInstallSnapshot bool

if !reply.Success {

// fmt.Printf("%v fail nextIndex:%d prevIndex:%d prevTerm:%d reply.Term:%d\n", time.Now(), nextIndex, args.PrevLogIndex, args.PrevLogTerm, reply.Term)

if nextIndex <= rf.lastIncludedIndex {

ifSendInstallSnapshot = true

} else if rf.log[nextIndex-1-rf.lastIncludedIndex].Term > reply.Term {

for rf.log[nextIndex-1-rf.lastIncludedIndex].Term > reply.Term {

nextIndex--

if nextIndex-1-rf.lastIncludedIndex == 0 && rf.lastIncludedIndex != 0 {

if rf.lastIncludedTerm != reply.Term {

// fmt.Printf("%v leader%d send installsnapshot to raft%d 750\n", time.Now(), rf.me, id)

ifSendInstallSnapshot = true

}

}

}

if reply.ConflictIndex != 0 {

nextIndex = reply.ConflictIndex + 1

if nextIndex <= rf.lastIncludedIndex {

// fmt.Printf("%v leader%d send installsnapshot to raft%d 758\n", time.Now(), rf.me, id)

ifSendInstallSnapshot = true

}

}

} else {

if reply.ConflictIndex != 0 {

nextIndex = reply.ConflictIndex + 1

if nextIndex <= rf.lastIncludedIndex {

// fmt.Printf("%v leader%d send installsnapshot to raft%d 766\n", time.Now(), rf.me, id)

ifSendInstallSnapshot = true

}

} else {

nextIndex--

}

}

if ifSendInstallSnapshot {

var snapArgs InstallSnapshotArgs

var snapReply InstallSnapshotReply

snapArgs.Term = rf.currentTerm

snapArgs.LastIncludedIndex = rf.lastIncludedIndex

snapArgs.LastIncludedTerm = rf.lastIncludedTerm

snapArgs.LeaderId = rf.me

snapArgs.Data = rf.persister.ReadSnapshot()

rf.mu.Unlock()

var count = 0

for {

if count == 3 {

return

}

if rf.sendInstallSnapshot(id, &snapArgs, &snapReply) {

break

}

count++

}

rf.mu.Lock()

if rf.currentTerm < snapReply.Term {

rf.currentTerm = snapReply.Term

rf.state = "follower"

rf.voteFor = -1

// fmt.Printf("%v raft%d sendInstallSnapshot finds a higher term, updates its term to %d\n", time.Now(), rf.me, snapReply.Term)

} else {

if rf.matchIndex[id] < snapArgs.LastIncludedIndex {

rf.matchIndex[id] = snapArgs.LastIncludedIndex

}

if rf.nextIndex[id] <= snapArgs.LastIncludedIndex {

rf.nextIndex[id] = snapArgs.LastIncludedIndex + 1

}

if index > snapArgs.LastIncludedIndex+1 {

nextIndex = snapArgs.LastIncludedIndex + 1

} else {

rf.mu.Unlock()

return

}

}

}

// fmt.Printf("%v leader%d try sending nextIndex:%d log to follower%d\n", time.Now(), rf.me, nextIndex, id)

// nextIndex--

if nextIndex == 0 {

// fmt.Printf("Error:leader%d send log to raft%d, length:%d \n", rf.me, id, len(args.Entries))

rf.mu.Unlock()

break

}

rf.mu.Unlock()

} else {

if rf.matchIndex[id] < index {

// fmt.Printf("%v leader%d send log from %d to %d to raft%d\n", time.Now(), rf.me, nextIndex, index, id)

rf.matchIndex[id] = index

} else {

// fmt.Printf("%v leader%d send out of date log from %d to %d to raft%d\n", time.Now(), rf.me, nextIndex, index, id)

rf.mu.Unlock()

return

}

// check whether a majority of the raft nodes have reached agreement

var mp = make(map[int]int)

for _, val := range rf.matchIndex {

mp[val]++

}

var tempArray = make([]num2num, 0)

for k, v := range mp {

tempArray = append(tempArray, num2num{key: k, val: v})

}

// sort.Slice(tempArray, func(i, j int) bool {

// return tempArray[i].val > tempArray[j].val

// })

sort.Slice(tempArray, func(i, j int) bool {

return tempArray[i].key > tempArray[j].key

})

var voteAddNum = 0

for j := 0; j < len(tempArray); j++ {

if tempArray[j].val+voteAddNum >= (rf.peerNum/2)+1 {

if rf.commitIndex < tempArray[j].key {

// fmt.Printf("%v %d nodes have received msg%d, leader%d update commitIndex from %d to %d\n", time.Now(), tempArray[j].val+voteAddNum, tempArray[j].key, rf.me, rf.commitIndex, tempArray[j].key)

rf.commitIndex = tempArray[j].key

for rf.lastApplied < rf.commitIndex {

rf.lastApplied++

var applyMsg = ApplyMsg{}

applyMsg.Command = rf.log[rf.lastApplied-rf.lastIncludedIndex].Command

applyMsg.CommandIndex = rf.log[rf.lastApplied-rf.lastIncludedIndex].Index

applyMsg.CommandValid = true

// fmt.Printf("%v leader%d insert the msg%d into applyCh\n", time.Now(), rf.me, rf.lastApplied)

rf.mu.Unlock()

rf.applyCh <- applyMsg

rf.mu.Lock()

}

break

}

}

voteAddNum += tempArray[j].val

}

rf.mu.Unlock()

break

}

time.Sleep(10 * time.Millisecond)

}

}(i, rf.nextIndex[i])

// we update the nextIndex array at first time, even if the follower hasn't received the msg.

if index+1 > rf.nextIndex[i] {

rf.nextIndex[i] = index + 1

}

// rf.mu.Unlock()

}

rf.mu.Unlock()

return index, term, isLeader

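}

Adjusting the ticker function

This needs a change because my earlier code did not handle snapshots; my implementation here is somewhat unusual.

The situation is as follows: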

rf.nextIndex[i] = rf.log[length-1].Index + 1

if length == 1 && rf.lastIncludedIndex != 0 {

rf.nextIndex[i] = rf.lastIncludedIndex + 1

} else {

rf.nextIndex[i] = rf.log[length-1].Index + 1

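}

Here nextIndex is assigned by looking at the last entry in rf.log, but a snapshot may have left rf.log without any entries, so that case has to be handled. Nothing else changed.

Test results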