Installing and Setting Up a Spark on YARN Cluster

Note: Spark on YARN requires a working JDK and Hadoop environment. See the earlier JDK and Hadoop installation guides for how to set those up.

1. Extract the Spark installation package

[root@master /]# tar -zxvf /h3cu/spark-3.1.1-bin-hadoop3.2.tgz -C /usr/local/src/

2. Go to the src directory

[root@master /]# cd /usr/local/src/

[root@master src]# ls

hadoop hbase jdk spark-3.1.1-bin-hadoop3.2 zk

[root@master src]# mv spark-3.1.1-bin-hadoop3.2/ spark # rename the directory to spark

[root@master src]# ls # check the result

hadoop hbase jdk spark zk
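
Steps 1 and 2 can also be combined into one small helper. The following is a minimal sketch, assuming the archive contains a single top-level directory; `install_spark` is a hypothetical name used only for illustration, not something Spark provides.

```shell
# Hypothetical helper: extract a Spark tarball into a target directory
# and rename its top-level folder to "spark".
install_spark() {
  tarball="$1"   # e.g. /h3cu/spark-3.1.1-bin-hadoop3.2.tgz
  dest="$2"      # e.g. /usr/local/src
  if [ -e "$dest/spark" ]; then
    echo "$dest/spark already exists, nothing done" >&2
    return 1
  fi
  # Name of the top-level directory inside the archive (assumed to be unique).
  top=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  tar -zxf "$tarball" -C "$dest" || return 1
  if [ "$top" != "spark" ]; then
    mv "$dest/$top" "$dest/spark"
  fi
}
```

It would be called as `install_spark /h3cu/spark-3.1.1-bin-hadoop3.2.tgz /usr/local/src`. The helper refuses to run when the target directory already exists, so running it twice does not nest one copy inside the other.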

3. Add environment variables

[root@master src]# vi /etc/profile

# Append the following at the end of the file

export SPARK_HOME=/usr/local/src/spark

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
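
Editing the file by hand works, but running the step twice would duplicate the lines. A minimal sketch of an idempotent append is shown here; `add_spark_env` is a hypothetical helper name, and the check assumes the variable is declared with a line that starts with `export SPARK_HOME=`.

```shell
# Hypothetical helper: append the Spark variables to a profile file
# only if SPARK_HOME is not already exported there.
add_spark_env() {
  profile="$1"      # e.g. /etc/profile
  spark_home="$2"   # e.g. /usr/local/src/spark
  if grep -q '^export SPARK_HOME=' "$profile" 2>/dev/null; then
    echo "SPARK_HOME already set in $profile"
    return 0
  fi
  {
    echo "export SPARK_HOME=$spark_home"
    # Single quotes keep $PATH and $SPARK_HOME unexpanded in the file.
    echo 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'
  } >> "$profile"
}
```

For this guide the call would be `add_spark_env /etc/profile /usr/local/src/spark`.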

[root@master src]# source /etc/profile # apply the environment variables

4. Go to Spark's conf directory

[root@master src]# cd spark/conf/

[root@master conf]# ls

fairscheduler.xml.template metrics.properties.template spark-env.sh.template

log4j.properties.template spark-defaults.conf.template workers.template

[root@master conf]# mv spark-env.sh.template spark-env.sh # rename the template

[root@master conf]# vi spark-env.sh

# Append the following at the end of the file

export JAVA_HOME=/usr/local/src/jdk

export HADOOP_HOME=/usr/local/src/hadoop

export YARN_CONF_DIR=/usr/local/src/hadoop/etc/hadoop

export SPARK_MASTER_HOST=master

[root@master conf]# mv workers.template workers # rename the template

[root@master conf]# vi workers

# Delete the existing localhost entry and add

master

slave1

slave2
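
The same workers file can be produced without an editor. This is only a sketch; `write_workers` is a hypothetical helper, and it overwrites any existing `workers` file in the given directory.

```shell
# Hypothetical helper: write one hostname per line into <conf_dir>/workers,
# replacing whatever the file contained before.
write_workers() {
  conf_dir="$1"
  shift
  printf '%s\n' "$@" > "$conf_dir/workers"
}
```

For the three nodes used here: `write_workers /usr/local/src/spark/conf master slave1 slave2`.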

5. Distribute to the other nodes

[root@master conf]# scp /etc/profile slave1:/etc/

profile 100% 2388 1.4MB/s 00:00

[root@master conf]# scp /etc/profile slave2:/etc/

profile 100% 2388 1.0MB/s 00:00

[root@master conf]# scp -r /usr/local/src/spark/ slave1:/usr/local/src/

[root@master conf]# scp -r /usr/local/src/spark/ slave2:/usr/local/src/
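
Copying to each host by hand makes it easy to repeat or skip one. The copies above can be sketched as a loop; `distribute_spark` and the `DRY_RUN` switch are hypothetical names introduced here, and the sketch assumes SSH access from master to each slave is already configured.

```shell
# Hypothetical helper: copy /etc/profile and the Spark directory to each host.
# When DRY_RUN is set to a non-empty value, the scp commands are only printed.
distribute_spark() {
  src="$1"   # e.g. /usr/local/src/spark
  shift
  for host in "$@"; do
    ${DRY_RUN:+echo} scp /etc/profile "$host:/etc/"
    ${DRY_RUN:+echo} scp -r "$src" "$host:/usr/local/src/"
  done
}
```

Setting `DRY_RUN=1` before calling `distribute_spark /usr/local/src/spark slave1 slave2` prints the four commands without running them, which is a cheap way to check the host list first.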

On each of the other two virtual machines, run source /etc/profile so that the environment variables take effect.

6. Test (SparkPi)

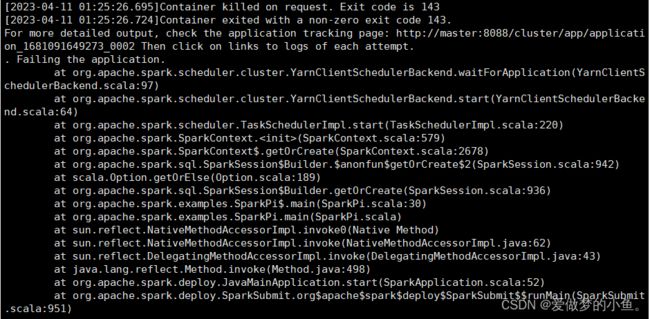

[root@master conf]# spark-submit --master yarn --class org.apache.spark.examples.SparkPi /usr/local/src/spark/examples/jars/spark-examples_2.12-3.1.1.jar

7. An error occurs

For more detailed output, check the application tracking page: http://master:8088/cluster/app/application_1681091649273_0002 Then click on links to logs of each attempt.

. Failing the application.
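
Besides the tracking page, the container logs can usually be pulled on the command line with `yarn logs -applicationId`. The sketch below extracts the application ID from a tracking URL and passes it on; both function names are hypothetical, and `yarn logs` only returns output when YARN log aggregation is enabled on the cluster.

```shell
# Hypothetical helper: pull the application ID out of a tracking URL.
app_id_from_url() {
  printf '%s\n' "$1" | grep -o 'application_[0-9]*_[0-9]*'
}

# Hypothetical helper: fetch the aggregated logs for that application.
show_app_logs() {
  yarn logs -applicationId "$(app_id_from_url "$1")"
}
```

For the failure above, `show_app_logs http://master:8088/cluster/app/application_1681091649273_0002` would request the logs of `application_1681091649273_0002`.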

8. Fix: add the following properties to Hadoop's yarn-site.xml, then restart Hadoop

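<property>
  <name>yarn.nodemanager.pmem-check-enabled</name>
  <value>false</value>
</property>

<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>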
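
A misspelled property name is silently ignored by Hadoop, so it can help to read the values back before restarting. The following is a rough sketch, not a real XML parser: `yarn_prop` is a hypothetical helper that assumes each `<value>` sits on the line directly after its `<name>`.

```shell
# Hypothetical helper: print the value of one property from a yarn-site.xml
# file, assuming <name> and <value> are on adjacent lines.
yarn_prop() {
  file="$1"   # e.g. /usr/local/src/hadoop/etc/hadoop/yarn-site.xml
  name="$2"   # e.g. yarn.nodemanager.vmem-check-enabled
  grep -F -A1 "<name>$name</name>" "$file" \
    | sed -n 's:.*<value>\(.*\)</value>.*:\1:p'
}
```

Calling `yarn_prop /usr/local/src/hadoop/etc/hadoop/yarn-site.xml yarn.nodemanager.pmem-check-enabled` should print `false` once the edit is in place. Because these checks are performed by the NodeManagers, the edited file presumably also has to be present on slave1 and slave2 before YARN is restarted (for example with `stop-yarn.sh` followed by `start-yarn.sh`).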

9. A new error appears

This happens because Hadoop was shut down abnormally, which left HDFS in safe mode.

Leave safe mode:

[root@master conf]# hadoop dfsadmin -safemode leave

WARNING: Use of this script to execute dfsadmin is deprecated.

WARNING: Attempting to execute replacement "hdfs dfsadmin" instead.

Safe mode is OFF
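
As the warning in the output says, `hdfs dfsadmin` is the current form of the command. A small sketch that first checks the state and only forces an exit when safe mode is actually on might look like this; `leave_safemode_if_on` is a hypothetical name. The NameNode normally leaves safe mode by itself once enough blocks have been reported, so forcing it is best kept for cases like this one where it stays stuck.

```shell
# Hypothetical helper: leave HDFS safe mode only if it is currently on.
leave_safemode_if_on() {
  state=$(hdfs dfsadmin -safemode get)
  case "$state" in
    *"Safe mode is ON"*) hdfs dfsadmin -safemode leave ;;
    *) echo "$state" ;;
  esac
}
```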

10. Resolved

2023-04-11 01:36:31,187 INFO cluster.YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool

2023-04-11 01:36:31,201 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 4.576 s

2023-04-11 01:36:31,210 INFO scheduler.DAGScheduler: Job 0 is finished. Cancelling potential speculative or zombie tasks for this job

2023-04-11 01:36:31,211 INFO cluster.YarnScheduler: Killing all running tasks in stage 0: Stage finished

2023-04-11 01:36:31,218 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 4.814020 s

Pi is roughly 3.142955714778574 ###############

2023-04-11 01:36:31,369 INFO server.AbstractConnector: Stopped Spark@50d68830{HTTP/1.1, (http/1.1)}{0.0.0.0:4040}

2023-04-11 01:36:31,390 INFO ui.SparkUI: Stopped Spark web UI at http://master.mynetwork2:4040

2023-04-11 01:36:31,404 INFO cluster.YarnClientSchedulerBackend: Interrupting monitor thread

2023-04-11 01:36:31,475 INFO cluster.YarnClientSchedulerBackend: Shutting down all executors

2023-04-11 01:36:31,479 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down

2023-04-11 01:36:31,515 INFO cluster.YarnClientSchedulerBackend: YARN client scheduler backend Stopped

2023-04-11 01:36:31,597 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
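
The result line is easy to miss among the INFO messages. A minimal sketch for printing only the result follows, assuming client deploy mode (as in the log above) and Spark's default log4j console appender, which writes to stderr while SparkPi prints its result to stdout. `run_sparkpi` and the `SPARK_SUBMIT` override are hypothetical names introduced here, and the default of 10 slices is an arbitrary choice.

```shell
# Hypothetical helper: run SparkPi on YARN and print only the result line.
# Log output on stderr is discarded, so errors are hidden as well.
run_sparkpi() {
  "${SPARK_SUBMIT:-spark-submit}" --master yarn --deploy-mode client \
    --class org.apache.spark.examples.SparkPi \
    "$SPARK_HOME/examples/jars/spark-examples_2.12-3.1.1.jar" "${1:-10}" \
    2>/dev/null | grep 'Pi is roughly'
}
```

If the function prints nothing, rerun the plain `spark-submit` command to see the full log, since the sketch throws stderr away.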