Speeding up data queries in a Spring Boot project with docker-compose, Elasticsearch, Kibana, Logstash and MySQL


1. Pull the Logstash, Kibana, Elasticsearch and MySQL images

# Pull the images with docker pull

docker pull docker.io/logstash:7.6.2

docker pull docker.io/kibana:7.6.2

docker pull docker.io/elasticsearch:7.6.2

docker pull mysql:5.7

-------------------------------------------------------------------------------------------------------------------

List the images with docker images:

docker.io/logstash 7.6.2 fa5b3b1e9757 16 months ago 813 MB

docker.io/kibana 7.6.2 f70986bc5191 16 months ago 1.01 GB

docker.io/elasticsearch 7.6.2 f29a1ee41030 16 months ago 791 MB

docker.io/mobz/elasticsearch-head 5 b19a5c98e43b 4 years ago 824 MB

mysql 5.7 8cf625070931 2 weeks ago 448MB

2. Create the MySQL table

-------------------------------------------------------------------------------------------------------------------

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------

-- Table structure for T_MESSAGES_PUSH

-- ----------------------------

DROP TABLE IF EXISTS `T_MESSAGES_PUSH`;

CREATE TABLE `T_MESSAGES_PUSH` (
  `ID` int(11) NOT NULL AUTO_INCREMENT,
  `MESSAGE_ID` varchar(32) NOT NULL COMMENT 'Message ID',
  `TITLE` varchar(64) DEFAULT NULL COMMENT 'Subject',
  `SEND_TYPE` varchar(2) DEFAULT NULL COMMENT 'Send method',
  `CONTENT` varchar(255) NOT NULL COMMENT 'Message body',
  `CATE_GORY` varchar(2) DEFAULT NULL COMMENT 'Message category',
  `STATUS` varchar(2) DEFAULT NULL COMMENT 'Message status: 0 = normal, 1 = expired',
  `EFFECTIVE_START_TIME` date NOT NULL COMMENT 'Start of validity period',
  `EFFECTIVE_END_TIME` date NOT NULL COMMENT 'End of validity period',
  `PUSHER` varchar(16) DEFAULT NULL COMMENT 'Pusher',
  `RECEIVER` varchar(16) DEFAULT NULL COMMENT 'Receiver',
  `IS_CONSUME_STATUS` varchar(2) DEFAULT NULL COMMENT 'Consumption status: 0 = success, 1 = failure',
  PRIMARY KEY (`ID`)
) ENGINE=InnoDB AUTO_INCREMENT=3 DEFAULT CHARSET=utf8;

-------------------------------------------------------------------------------------------------------------------

3. Create the local directories and files to mount into the containers

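3.1 Create the local files to mount into the Logstash container

# Create the local files to mount. I simply used docker cp to copy them out of the logstash container and use those copies as the local mount files.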

docker cp logstash:/usr/share/logstash/config/ /Users/jiajie/software/elasticsearch/logstash/

docker cp logstash:/usr/share/logstash/pipeline/ /Users/jiajie/software/elasticsearch/logstash/

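docker cp logstash:/usr/share/logstash/logstash-core/lib/jars/ /Users/jiajie/software/elasticsearch/logstash/

Note: Logstash needs the mysql-connector-java JAR in order to connect to MySQL.

For MySQL 5.7 you can use mysql-connector-java-8.0.24.jar. Put it in the local jars directory copied above so that it appears under /usr/share/logstash/logstash-core/lib/jars/ inside the container.

Download: https://mvnrepository.com/artifact/mysql/mysql-connector-java

3.2 Edit the local logstash.yml that is mounted into the container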

vi /Users/jiajie/software/elasticsearch/logstash/config/logstash.yml

--------------------------------------------------------------------

# Allow access from outside the container

http.host: "0.0.0.0"

# Elasticsearch address. A public IP works too; here I use the container's internal IP.

xpack.monitoring.elasticsearch.hosts: [ "http://172.19.0.2:9200" ]

--------------------------------------------------------------------

3.3 Edit the local logstash.conf that is mounted into the container

vi /Users/jiajie/software/elasticsearch/logstash/pipeline/logstash.conf

--------------------------------------------------------------

# logstash.conf
# Sync source
input {
  jdbc {
    type => "t_messages_push"
    # Database address
    jdbc_connection_string => "jdbc:mysql://106.15.51.1:3307/umz-messages?serverTimezone=Asia/Shanghai&characterEncoding=utf8&useSSL=false"
    jdbc_user => "root"      # database user
    jdbc_password => "root"  # database password
    jdbc_driver_library => "/usr/share/logstash/logstash-core/lib/jars/mysql-connector-java-8.0.24.jar"
    # Driver class name used by Connector/J 8.x
    jdbc_driver_class => "com.mysql.cj.jdbc.Driver"
    jdbc_paging_enabled => "true"
    jdbc_page_size => "50000"
    jdbc_default_timezone => "Asia/Shanghai"
    # SQL for syncing a single table.
    # For several tables, point to a SQL file instead: statement_filepath => "/Users/jiajie/umz.sql"
    statement => "select t1.ID as id,t1.MESSAGE_ID as messageId,t1.TITLE as title,t1.SEND_TYPE as sendType,t1.CONTENT as content,t1.CATE_GORY as category,t1.`STATUS` as status,
    t1.EFFECTIVE_START_TIME as effectiveStartTime,t1.EFFECTIVE_END_TIME as effectiveEndTime,t1.PUSHER as pusher,t1.RECEIVER as receiver,t1.IS_CONSUME_STATUS as isConsumeStatus
    from T_MESSAGES_PUSH t1"
    clean_run => false
    # Sync interval in cron syntax: every 5 minutes
    schedule => "*/5 * * * *"
  }
}

# Output target
output {
  # Events whose type is t_messages_push
  if [type] == "t_messages_push" {
    elasticsearch {
      # Elasticsearch address. A public IP works too; here I use the container's internal IP.
      hosts => "172.19.0.2:9200"
      # Index name
      index => "t_messages_push"
      # Index type
      document_type => "messages"
      # Unique document ID; must not repeat
      document_id => "%{id}"
    }
  }
  if [type] == "merchant_shop" {
    elasticsearch {
      hosts => "172.19.0.2:9200"
      index => "merchant_shop"
      document_type => "shop"
      document_id => "%{id}"
    }
  }
  stdout {
    codec => json_lines  # log output format
  }
}
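With schedule set to */5 * * * * and a statement that selects the whole table, every run re-sends every row. Because document_id is set to %{id}, a re-sent row replaces the document that already has that ID rather than adding a duplicate. The following is a minimal in-memory sketch of that behaviour in plain Java; the class FullSyncSketch and its map-based "index" are hypothetical stand-ins and do not use Logstash or an Elasticsearch client.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical stand-in for an index whose documents are addressed by ID. */
final class FullSyncSketch {
    // Maps document ID -> document body, like an index keyed by _id.
    private final Map<String, String> index = new LinkedHashMap<>();

    /** Writes every row under its ID; a row with an existing ID overwrites it. */
    void sync(Map<Integer, String> rows) {
        rows.forEach((id, title) -> index.put(String.valueOf(id), title));
    }

    int size() {
        return index.size();
    }

    String get(String id) {
        return index.get(id);
    }

    public static void main(String[] args) {
        FullSyncSketch es = new FullSyncSketch();
        Map<Integer, String> rows = Map.of(1, "payment", 2, "payment");
        es.sync(rows); // first scheduled run
        es.sync(rows); // second run re-sends the same rows
        System.out.println(es.size()); // prints 2
    }
}
```

Without a fixed document ID, each run would instead create new documents, so the index would grow on every schedule tick.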

----------------------------------------------------------------

3.4 Create the local kibana.yml that is mounted into the container

vi /Users/jiajie/software/elasticsearch/kibana/kibana.yml

------------------------------------------------------------------

server.port: 5601

# Server host

server.host: "0.0.0.0"

# Elasticsearch address

elasticsearch.hosts: ["http://172.19.0.2:9200"]

# Chinese localization of the UI

i18n.locale: "zh-CN"

------------------------------------------------------------------

4. Create the docker-compose.yml file

----------------------------------------------------------------------------------------

version: "3"  # compose file format version
services:
  elasticsearch:  # service name (not the container name)
    image: docker.io/elasticsearch:7.6.2  # image to use
    ports:
      - "9200:9200"  # published ports, like the -p option of docker run
      - "9300:9300"
    restart: "always"  # restart policy that keeps the service running; recommended for production
    container_name: elasticsearch  # container name
    environment:
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"  # set the JVM heap explicitly to avoid running out of memory
      - "discovery.type=single-node"
  kibana:  # service name (not the container name)
    image: docker.io/kibana:7.6.2  # image to use
    ports:
      - "5601:5601"  # published ports, like the -p option of docker run
    restart: "always"  # restart policy that keeps the service running; recommended for production
    container_name: kibana  # container name
    environment:
      # Kibana is a Node.js application, so this JVM option has no effect on it
      - "ES_JAVA_OPTS=-Xms215m -Xmx215m"
      # XPACK_MONITORING_ELASTICSEARCH_HOSTS: http://172.19.0.2:9200
    # mounted file
    volumes:
      - /Users/jiajie/software/elasticsearch/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
    depends_on:  # start after the elasticsearch service
      - elasticsearch
  logstash:  # service name (not the container name)
    image: logstash:7.6.2  # image to use
    ports:
      # - "9600:9600"
      - "5044:5044"  # published ports, like the -p option of docker run
    restart: "always"  # restart policy that keeps the service running; recommended for production
    container_name: logstash  # container name
    environment:
      - "LS_JAVA_OPTS=-Xms215m -Xmx215m"  # Logstash reads its JVM options from LS_JAVA_OPTS
    privileged: true
    volumes:  # map local files into the container; to change the configuration later, edit the local files and restart the service
      - /Users/jiajie/software/elasticsearch/logstash/config/:/usr/share/logstash/config/
      - /Users/jiajie/software/elasticsearch/logstash/pipeline/:/usr/share/logstash/pipeline/
      - /Users/jiajie/software/elasticsearch/logstash/jars/:/usr/share/logstash/logstash-core/lib/jars/
    depends_on:  # start after the elasticsearch service
      - elasticsearch

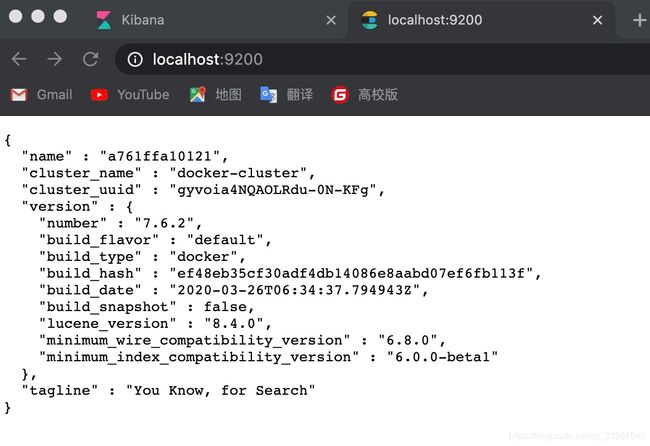

---------------------------------------------------------------------------------------------------------------

5. Start the containers

From the directory that contains docker-compose.yml, run docker-compose up -d to start everything in the background.

# Kibana URL

http://localhost:5601/app/kibana#/dev_tools/console

# Elasticsearch URL

http://localhost:9200/

6. Install the es-head plugin

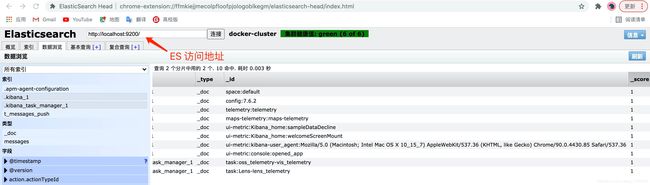

Option 1: add the extension directly in Chrome. Unzip ffmkiejjmecolpfloofpjologoblkegm.zip and install it.

Option 2: install it with Docker instead.

docker pull docker.io/mobz/elasticsearch-head:5

Access URL:

chrome-extension://ffmkiejjmecolpfloofpjologoblkegm/elasticsearch-head/index.html

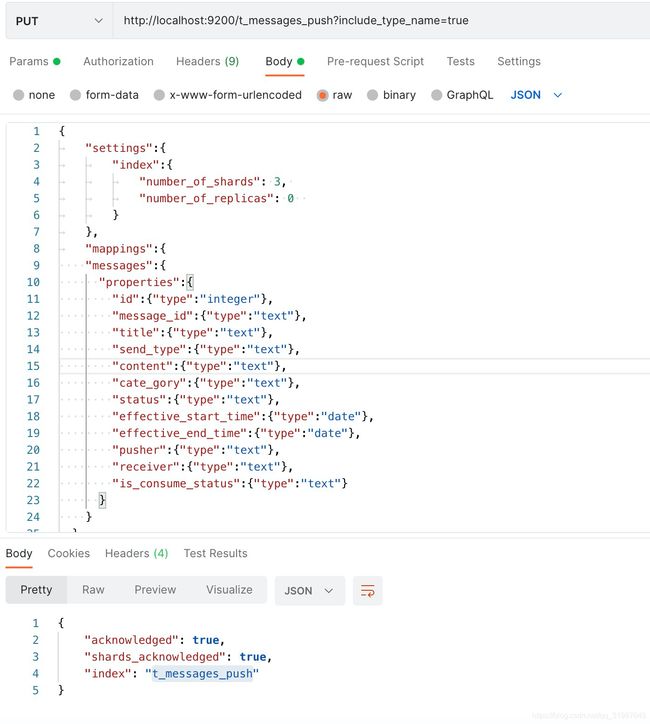

When connecting, enter the address of your own Elasticsearch instance.

7. Create the index

PUT http://localhost:9200/t_messages_push?include_type_name=true

{
  "settings": {
    "index": {
      "number_of_shards": 3,
      "number_of_replicas": 0
    }
  },
  "mappings": {
    "messages": {
      "properties": {
        "id": {"type": "integer"},
        "messageid": {"type": "text"},
        "title": {"type": "text"},
        "sendtype": {"type": "text"},
        "content": {"type": "text"},
        "category": {"type": "text"},
        "status": {"type": "text"},
        "effectivestarttime": {"type": "date"},
        "effectiveendtime": {"type": "date"},
        "pusher": {"type": "text"},
        "receiver": {"type": "text"},
        "isconsumestatus": {"type": "text"}
      }
    }
  }
}

The property names match the lowercase field names that Logstash writes (messageid, sendtype and so on), so the mapping applies to the synced documents.

You can send this request with Postman or from Kibana.

8. Test

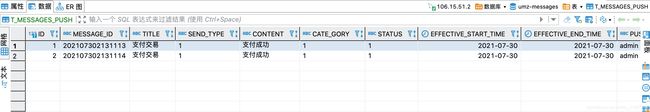

8.1 Insert rows into the table

# Insert rows into the database

-- ----------------------------

-- Records of T_MESSAGES_PUSH

-- ----------------------------

BEGIN;

INSERT INTO `T_MESSAGES_PUSH` VALUES (1, '202107302131113', '支付交易', '1', '支付成功', '1', '1', '2021-07-30', '2021-07-30', 'admin', 'admin', '0');

INSERT INTO `T_MESSAGES_PUSH` VALUES (2, '202107302131114', '支付交易', '1', '支付成功', '1', '1', '2021-07-30', '2021-07-30', 'admin', 'admin', '0');

COMMIT;

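SET FOREIGN_KEY_CHECKS = 1;

8.2 Check the Logstash container's log output

# Enter the container

docker exec -it logstash /bin/bash

# Or follow the container's output directly

docker logs -f logstash

8.3 View the data in Kibana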

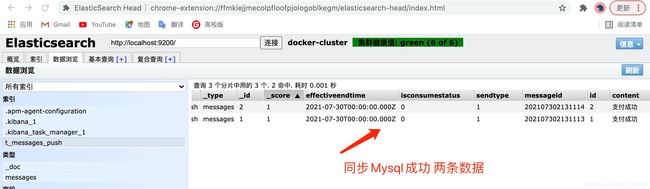

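8.4 View the data in es-head

9. Integrate Elasticsearch into the Spring Boot project

9.1 Add the dependency to pom.xml

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
</dependency>

9.2 application.yml

spring:
  elasticsearch:
    rest:
      uris: http://localhost:9200  # Elasticsearch address

9.3 Write the test controller and service classes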

-------------------------------------------------------------------------------------------------------------

package com.microservice.messages.soa.basics.web;

import com.microservice.common.ApiResult;
import com.microservice.common.BaseResult;
import com.microservice.common.ResultCode;
import com.microservice.messages.soa.basics.service.MessagePushService;
import com.microservice.messages.soa.basics.vo.MessagesPushVo;
import io.swagger.annotations.Api;
import io.swagger.annotations.ApiOperation;
import lombok.extern.slf4j.Slf4j;
import org.springframework.web.bind.annotation.*;

import javax.annotation.Resource;

/**
 * @author JIAJIE
 * @version 1.0.0
 * @ClassName MessageController.java
 * @Description TODO
 * @createTime 2021-07-28 15:52:00
 */
@Slf4j
@Api(tags = "Message management")
@RestController
@RequestMapping(value = "/umz-messages/basics/message")
public class MessageController extends BaseResult {

    @Resource
    private MessagePushService messagePushService;

    /**
     * Looks up a message by its message ID.
     *
     * @param messageId message ID
     * @return the matching message wrapped in an ApiResult
     */
    @ApiOperation(value = "Look up a message by its message ID")
    @RequestMapping(value = "/getMessagesPush", method = RequestMethod.GET)
    public ApiResult getMessagesPush(@RequestParam(value = "messageId", required = true) String messageId) {
        MessagesPushVo messagesPushVo = null;
        try {
            messagesPushVo = messagePushService.getMessagesPushList(messageId);
        } catch (Exception e) {
            // Log at error level and keep the stack trace
            log.error("===[Exception]===", e);
            return error(ResultCode.SYSTEM_EXCEPTION.getCode(), ResultCode.SYSTEM_EXCEPTION.getValue());
        }
        return success(messagesPushVo);
    }
}
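To try the endpoint without the Swagger page, a request can be built with the JDK's own HTTP client. This is only a sketch under assumptions: the base URL http://localhost:8083 is taken from the doc.html address used in step 10, the class name MessageClientSketch is hypothetical, and the response is printed as raw text because the JSON shape of ApiResult is not shown in this post.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

final class MessageClientSketch {
    // Assumed base URL; the port matches the doc.html address of this project.
    static final String BASE = "http://localhost:8083/umz-messages/basics/message";

    /** Builds the GET request for /getMessagesPush with a URL-encoded messageId. */
    static HttpRequest buildRequest(String messageId) {
        String query = "messageId=" + URLEncoder.encode(messageId, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create(BASE + "/getMessagesPush?" + query))
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Requires the Spring Boot application to be running locally.
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(buildRequest("202107302131113"), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

The message ID used in main is one of the two rows inserted in step 8.1.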

-----------------------------------------------------------------------------------------------------------------------------------------

package com.microservice.messages.soa.basics.service;

import com.microservice.messages.soa.basics.vo.MessagesPushVo;

import java.io.IOException;

/**
 * @author JIAJIE
 * @version 1.0.0
 * @ClassName MessagePushService.java
 * @Description TODO
 * @createTime 2021-07-28 15:27:00
 */
public interface MessagePushService {

    /**
     * Looks up a message by its message ID.
     *
     * @param messageId message ID
     * @return the matching message, or null if none is found
     */
    MessagesPushVo getMessagesPushList(String messageId) throws IOException;
}

-----------------------------------------------------------------------------------------------------------------------------------------

package com.microservice.messages.soa.basics.service.impl;

import com.alibaba.fastjson.JSON;
import com.microservice.common.IndexEnum;
import com.microservice.messages.soa.base.dao.BaseService;
import com.microservice.messages.soa.basics.dao.MessageRepository;
import com.microservice.messages.soa.basics.service.MessagePushService;
import com.microservice.messages.soa.basics.vo.MessagesPushVo;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.index.query.TermQueryBuilder;
import org.elasticsearch.search.SearchHit;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import java.io.IOException;
import java.util.Objects;

/**
 * @author JIAJIE
 * @version 1.0.0
 * @ClassName MessagePushServiceImpl.java
 * @Description TODO
 * @createTime 2021-07-28 15:40:00
 */
@Service
public class MessagePushServiceImpl extends BaseService implements MessagePushService {

    @Autowired
    private MessageRepository messageRepository;

    // Field names must be lowercase to match the fields that Logstash writes to the index
    public static final String[] MESSAGE = {"id", "messageid", "title", "sendtype", "content", "category", "status",
            "effectivestarttime", "effectiveendtime", "pusher", "receiver", "isconsumestatus"};

    @Override
    public MessagesPushVo getMessagesPushList(String messageId) throws IOException {
        // Exact-match (term) query, using the field name as it is indexed (lowercase)
        TermQueryBuilder query = QueryBuilders.termQuery("messageid", messageId);
        // Run the search
        SearchHit[] hits = queryInfo(IndexEnum.MESSAGES_PUSH, query, MESSAGE, null).getHits().getHits();
        if (Objects.isNull(hits) || hits.length == 0) {
            return null;
        }
        // Convert the first hit's _source JSON into the view object
        String result = hits[0].getSourceAsString();
        return JSON.parseObject(result, MessagesPushVo.class);
    }
}
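The field names in MESSAGE are lowercase because the Logstash jdbc input lowercases column names by default (its lowercase_column_names option defaults to true), so the alias messageId in the SQL statement is indexed as messageid. The sketch below only illustrates that renaming in plain Java; the class name AliasLowercaseSketch is hypothetical and nothing here calls Logstash.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

final class AliasLowercaseSketch {
    /** Lowercases each column alias, mirroring lowercase_column_names => true. */
    static List<String> toFieldNames(List<String> aliases) {
        List<String> fields = new ArrayList<>();
        for (String alias : aliases) {
            fields.add(alias.toLowerCase(Locale.ROOT));
        }
        return fields;
    }

    public static void main(String[] args) {
        List<String> aliases = List.of("id", "messageId", "sendType", "isConsumeStatus");
        System.out.println(toFieldNames(aliases));
        // prints [id, messageid, sendtype, isconsumestatus]
    }
}
```

Setting lowercase_column_names => false in the jdbc input should keep the camelCase aliases instead; in that case the index mapping, the field list and the view object would all have to use the camelCase names.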

-----------------------------------------------------------------------------------------------------------------------------------------

package com.microservice.messages.soa.base.dao;

import com.microservice.common.IndexEnum;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.Nullable;
import org.elasticsearch.common.unit.TimeValue;
import org.elasticsearch.index.query.QueryBuilder;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.springframework.beans.factory.annotation.Autowired;

import java.io.IOException;

/**
 * @author JIAJIE
 * @version 1.0.0
 * @ClassName BaseService.java
 * @Description java es
 * @createTime 2021-07-30 20:18:00
 */
public abstract class BaseService {

    /** Elasticsearch client */
    @Autowired
    private RestHighLevelClient restHighLevelClient;

    /** Default query timeout: 60 seconds */
    protected static final int DEF_TIMEOUT = 60;

    /**
     * Generic query.
     *
     * @param indexEnum index enum
     * @param query     QueryBuilder
     * @param includes  fields to include
     * @param excludes  fields to exclude
     * @return SearchResponse
     * @throws IOException if the request fails
     */
    public SearchResponse queryInfo(IndexEnum indexEnum, QueryBuilder query,
                                    @Nullable String[] includes, @Nullable String[] excludes) throws IOException {
        // Same behaviour as query(); kept as a separate entry point
        return query(indexEnum, query, includes, excludes);
    }

    /**
     * @param indexEnum index enum
     * @param query     QueryBuilder
     * @param includes  fields to include
     * @param excludes  fields to exclude
     * @return SearchResponse
     * @throws IOException if the request fails
     */
    public SearchResponse query(IndexEnum indexEnum, QueryBuilder query,
                                @Nullable String[] includes, @Nullable String[] excludes) throws IOException {
        // Search request against the index
        SearchRequest request = new SearchRequest(indexEnum.getCode());
        SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
        // The actual query
        sourceBuilder.query(query);
        sourceBuilder.timeout(TimeValue.timeValueSeconds(DEF_TIMEOUT));
        // Fields to return
        sourceBuilder.fetchSource(includes, excludes);
        request.source(sourceBuilder);
        // Run the search
        return restHighLevelClient.search(request, RequestOptions.DEFAULT);
    }
}

9.4 Entity class

-----------------------------------------------------------------------------------------------------------------

package com.microservice.messages.soa.basics.vo;

import com.fasterxml.jackson.annotation.JsonFormat;

import io.swagger.annotations.ApiModelProperty;

import lombok.Data;

import org.springframework.data.annotation.Id;

import org.springframework.data.elasticsearch.annotations.DateFormat;

import org.springframework.data.elasticsearch.annotations.Field;

import org.springframework.data.elasticsearch.annotations.FieldType;

import java.util.Date;

/**

* @author JIAJIE

* @version 1.0.0

* @ClassName MessagesPushDto.java

* @Description TODO

* @createTime 2021年07月30日 20:09:00

*/

@Data

public class MessagesPushVo {

private Integer id;

@ApiModelProperty(value = "消息ID")

private String messageid;

@ApiModelProperty(value = "标题")

private String title;

@ApiModelProperty(value = "发送方式")

private String sendtype;

@ApiModelProperty(value = "消息主体")

private String content;

@ApiModelProperty(value = "消息类别")

private String category;

@ApiModelProperty(value = "消息状态 0正常,1过期")

private String status;

@ApiModelProperty(value = "有效期开始时间")

@JsonFormat(pattern = "yyyy-MM-dd HH:mm:ss", timezone = "GMT+8")

private Date effectivestarttime;

@ApiModelProperty(value = "有效期结束时间")

@JsonFormat(pattern = "yyyy-MM-dd HH:mm:ss", timezone = "GMT+8")

private Date effectiveendtime;

@ApiModelProperty(value = "推送人")

private String pusher;

@ApiModelProperty(value = "接收人")

private String receiver;

@ApiModelProperty(value = "消息消费状态 0成功,1失败")

private String isconsumestatus;

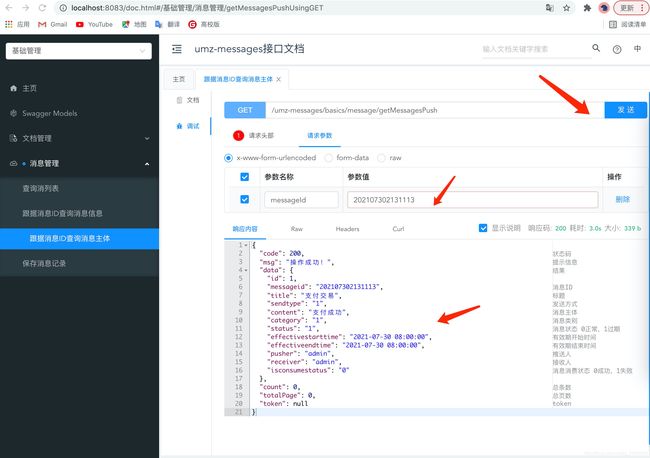

}10.运行springboot项目测试

http://localhost:8083/doc.html

That completes the integration of the whole project.