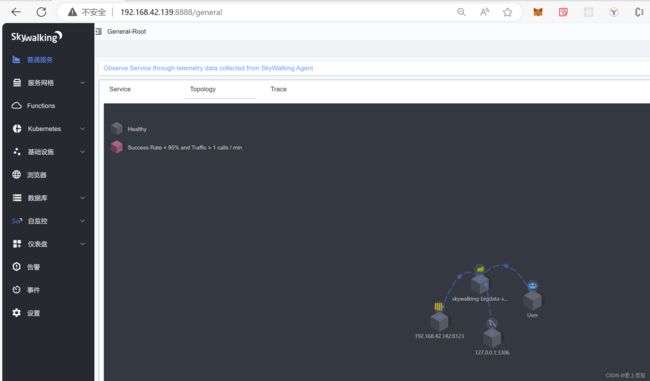

SkyWalking 9.0.0: Enabling Self-Monitoring and Configuring a Cluster

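1. Introduction to SkyWalking

SkyWalking is an open-source project created by Wu Sheng (吴晟), an open-source enthusiast from China, and donated to the Apache Incubator. In December 2017 it became the first individually contributed project from China to enter the Apache Incubator, and on April 17, 2019 it graduated from the incubator to become an Apache top-level project. SkyWalking currently offers agents for Java, .NET, Node.js, Go, Python, and other languages, and can store its data in MySQL, Elasticsearch, and other backends. Like Pinpoint, SkyWalking is non-intrusive to business code. Its agents collect data at a somewhat coarser granularity than Pinpoint's, but its performance is excellent. SkyWalking's strengths are strong growth, an active community, complete Chinese documentation (so Chinese-speaking users face no language barrier), and agents for multiple languages. It also supports many frameworks, including ones developed in China such as Dubbo and SOFARPC, as well as gRPC, and developers keep contributing plugins so that more components can be integrated with SkyWalking seamlessly.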

2. Cluster modes supported by SkyWalking

2.1 ZooKeeper

2.2 Kubernetes

2.3 Consul

2.4 etcd

2.5 Nacos
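
The active mode is picked by the `selector` entry of the `cluster` block in application.yml, which is written in the `${ENV_NAME:default}` placeholder form used throughout that file (for example `${SW_CLUSTER:nacos}`). The following is a minimal Python sketch of how such a placeholder can be resolved, assuming that the environment variable wins when it is set and that the text after the first colon is the fallback. `resolve_placeholder` is a hypothetical helper for illustration, not SkyWalking's own code.

```python
import os
import re

# Matches a whole value of the form ${NAME:default}; the default may be empty.
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*):(.*)\}")


def resolve_placeholder(value, env=None):
    """Return the environment value for NAME if set, otherwise the default."""
    env = os.environ if env is None else env
    match = _PLACEHOLDER.fullmatch(value)
    if match is None:
        return value  # plain value, nothing to resolve
    name, default = match.groups()
    return env.get(name, default)


if __name__ == "__main__":
    # No override: the default after the colon is used.
    print(resolve_placeholder("${SW_CLUSTER:nacos}", env={}))
    # With an override: the environment variable takes precedence.
    print(resolve_placeholder("${SW_CLUSTER:nacos}", env={"SW_CLUSTER": "zookeeper"}))
```

Under this assumption, the same application.yml could be switched to another cluster mode by exporting `SW_CLUSTER` before starting the OAP server, without editing the file.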

3. SkyWalking cluster configuration

3.1 Edit application.yml on the first server

[root@node1 config]# cat application.yml

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

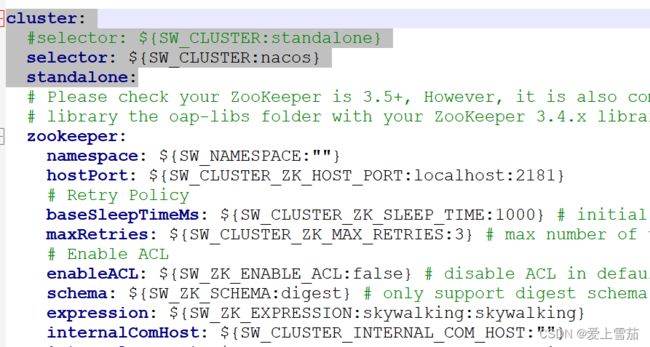

cluster:
  selector: ${SW_CLUSTER:nacos}
  #selector: ${SW_CLUSTER:standalone}
  standalone:
  # Please check your ZooKeeper is 3.5+, However, it is also compatible with ZooKeeper 3.4.x. Replace the ZooKeeper 3.5+
  # library the oap-libs folder with your ZooKeeper 3.4.x library.
  zookeeper:
    namespace: ${SW_NAMESPACE:""}
    hostPort: ${SW_CLUSTER_ZK_HOST_PORT:localhost:2181}
    # Retry Policy
    baseSleepTimeMs: ${SW_CLUSTER_ZK_SLEEP_TIME:1000} # initial amount of time to wait between retries
    maxRetries: ${SW_CLUSTER_ZK_MAX_RETRIES:3} # max number of times to retry
    # Enable ACL
    enableACL: ${SW_ZK_ENABLE_ACL:false} # disable ACL in default
    schema: ${SW_ZK_SCHEMA:digest} # only support digest schema
    expression: ${SW_ZK_EXPRESSION:skywalking:skywalking}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}
  kubernetes:
    namespace: ${SW_CLUSTER_K8S_NAMESPACE:default}
    labelSelector: ${SW_CLUSTER_K8S_LABEL:app=collector,release=skywalking}
    uidEnvName: ${SW_CLUSTER_K8S_UID:SKYWALKING_COLLECTOR_UID}
  consul:
    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    # Consul cluster nodes, example: 10.0.0.1:8500,10.0.0.2:8500,10.0.0.3:8500
    hostPort: ${SW_CLUSTER_CONSUL_HOST_PORT:localhost:8500}
    aclToken: ${SW_CLUSTER_CONSUL_ACLTOKEN:""}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}
  etcd:
    # etcd cluster nodes, example: 10.0.0.1:2379,10.0.0.2:2379,10.0.0.3:2379
    endpoints: ${SW_CLUSTER_ETCD_ENDPOINTS:localhost:2379}
    namespace: ${SW_CLUSTER_ETCD_NAMESPACE:/skywalking}
    serviceName: ${SW_CLUSTER_ETCD_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    authentication: ${SW_CLUSTER_ETCD_AUTHENTICATION:false}
    user: ${SW_CLUSTER_ETCD_USER:}
    password: ${SW_CLUSTER_ETCD_PASSWORD:}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}

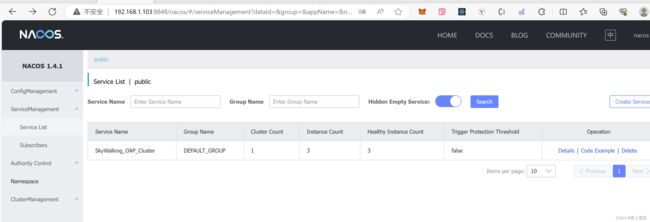

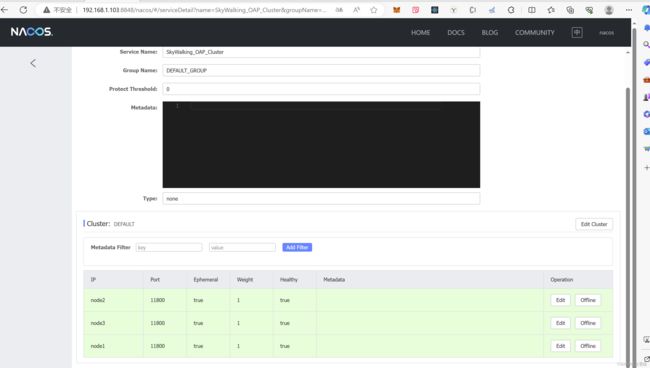

  nacos:
    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    hostPort: ${SW_CLUSTER_NACOS_HOST_PORT:192.168.1.103:8848}
    # Nacos Configuration namespace
    namespace: ${SW_CLUSTER_NACOS_NAMESPACE:"public"}
    # Nacos auth username
    username: ${SW_CLUSTER_NACOS_USERNAME:""}
    password: ${SW_CLUSTER_NACOS_PASSWORD:""}
    # Nacos auth accessKey
    accessKey: ${SW_CLUSTER_NACOS_ACCESSKEY:""}
    secretKey: ${SW_CLUSTER_NACOS_SECRETKEY:""}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}

core:
  selector: ${SW_CORE:default}
  default:
    # Mixed: Receive agent data, Level 1 aggregate, Level 2 aggregate
    # Receiver: Receive agent data, Level 1 aggregate
    # Aggregator: Level 2 aggregate
    role: ${SW_CORE_ROLE:Mixed} # Mixed/Receiver/Aggregator
    restHost: ${SW_CORE_REST_HOST:node1}
    restPort: ${SW_CORE_REST_PORT:12800}
    restContextPath: ${SW_CORE_REST_CONTEXT_PATH:/}
    restMinThreads: ${SW_CORE_REST_JETTY_MIN_THREADS:1}
    restMaxThreads: ${SW_CORE_REST_JETTY_MAX_THREADS:200}
    restIdleTimeOut: ${SW_CORE_REST_JETTY_IDLE_TIMEOUT:30000}
    restAcceptQueueSize: ${SW_CORE_REST_JETTY_QUEUE_SIZE:0}
    httpMaxRequestHeaderSize: ${SW_CORE_HTTP_MAX_REQUEST_HEADER_SIZE:8192}
    gRPCHost: ${SW_CORE_GRPC_HOST:node1}
    gRPCPort: ${SW_CORE_GRPC_PORT:11800}
    maxConcurrentCallsPerConnection: ${SW_CORE_GRPC_MAX_CONCURRENT_CALL:0}
    maxMessageSize: ${SW_CORE_GRPC_MAX_MESSAGE_SIZE:0}
    gRPCThreadPoolQueueSize: ${SW_CORE_GRPC_POOL_QUEUE_SIZE:-1}
    gRPCThreadPoolSize: ${SW_CORE_GRPC_THREAD_POOL_SIZE:-1}
    gRPCSslEnabled: ${SW_CORE_GRPC_SSL_ENABLED:false}
    gRPCSslKeyPath: ${SW_CORE_GRPC_SSL_KEY_PATH:""}
    gRPCSslCertChainPath: ${SW_CORE_GRPC_SSL_CERT_CHAIN_PATH:""}
    gRPCSslTrustedCAPath: ${SW_CORE_GRPC_SSL_TRUSTED_CA_PATH:""}
    downsampling:
      - Hour
      - Day
    # Set a timeout on metrics data. After the timeout has expired, the metrics data will automatically be deleted.
    enableDataKeeperExecutor: ${SW_CORE_ENABLE_DATA_KEEPER_EXECUTOR:true} # Turn it off then automatically metrics data delete will be close.
    dataKeeperExecutePeriod: ${SW_CORE_DATA_KEEPER_EXECUTE_PERIOD:5} # How often the data keeper executor runs periodically, unit is minute
    recordDataTTL: ${SW_CORE_RECORD_DATA_TTL:3} # Unit is day
    metricsDataTTL: ${SW_CORE_METRICS_DATA_TTL:7} # Unit is day
    # The period of L1 aggregation flush to L2 aggregation. Unit is ms.
    l1FlushPeriod: ${SW_CORE_L1_AGGREGATION_FLUSH_PERIOD:500}
    # The threshold of session time. Unit is ms. Default value is 70s.
    storageSessionTimeout: ${SW_CORE_STORAGE_SESSION_TIMEOUT:70000}
    # The period of doing data persistence. Unit is second.Default value is 25s
    persistentPeriod: ${SW_CORE_PERSISTENT_PERIOD:25}
    # Cache metrics data for 1 minute to reduce database queries, and if the OAP cluster changes within that minute,
    # the metrics may not be accurate within that minute.
    enableDatabaseSession: ${SW_CORE_ENABLE_DATABASE_SESSION:true}
    topNReportPeriod: ${SW_CORE_TOPN_REPORT_PERIOD:10} # top_n record worker report cycle, unit is minute
    # Extra model column are the column defined by in the codes, These columns of model are not required logically in aggregation or further query,
    # and it will cause more load for memory, network of OAP and storage.
    # But, being activated, user could see the name in the storage entities, which make users easier to use 3rd party tool, such as Kibana->ES, to query the data by themselves.
    activeExtraModelColumns: ${SW_CORE_ACTIVE_EXTRA_MODEL_COLUMNS:false}
    # The max length of service + instance names should be less than 200
    serviceNameMaxLength: ${SW_SERVICE_NAME_MAX_LENGTH:70}
    instanceNameMaxLength: ${SW_INSTANCE_NAME_MAX_LENGTH:70}
    # The max length of service + endpoint names should be less than 240
    endpointNameMaxLength: ${SW_ENDPOINT_NAME_MAX_LENGTH:150}
    # Define the set of span tag keys, which should be searchable through the GraphQL.
    searchableTracesTags: ${SW_SEARCHABLE_TAG_KEYS:http.method,status_code,db.type,db.instance,mq.queue,mq.topic,mq.broker}
    # Define the set of log tag keys, which should be searchable through the GraphQL.
    searchableLogsTags: ${SW_SEARCHABLE_LOGS_TAG_KEYS:level}
    # Define the set of alarm tag keys, which should be searchable through the GraphQL.
    searchableAlarmTags: ${SW_SEARCHABLE_ALARM_TAG_KEYS:level}
    # The number of threads used to prepare metrics data to the storage.
    prepareThreads: ${SW_CORE_PREPARE_THREADS:2}
    # Turn it on then automatically grouping endpoint by the given OpenAPI definitions.
    enableEndpointNameGroupingByOpenapi: ${SW_CORE_ENABLE_ENDPOINT_NAME_GROUPING_BY_OPAENAPI:true}

storage:

selector: ${SW_STORAGE:mysql}

elasticsearch:

namespace: ${SW_NAMESPACE:""}

clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}

protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}

connectTimeout: ${SW_STORAGE_ES_CONNECT_TIMEOUT:3000}

socketTimeout: ${SW_STORAGE_ES_SOCKET_TIMEOUT:30000}

responseTimeout: ${SW_STORAGE_ES_RESPONSE_TIMEOUT:15000}

numHttpClientThread: ${SW_STORAGE_ES_NUM_HTTP_CLIENT_THREAD:0}

user: ${SW_ES_USER:""}

password: ${SW_ES_PASSWORD:""}

trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}

trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}

secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.

dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.

indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes

indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes

# Super data set has been defined in the codes, such as trace segments.The following 3 config would be improve es performance when storage super size data in es.

superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represent the number of days in the super size dataset record index, the default value is the same as dayStep when the value is less than 0

superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # This factor provides more shards for the super data set, shards number = indexShardsNumber * superDatasetIndexShardsFactor. Also, this factor effects Zipkin and Jaeger traces.

superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represent the replicas number in the super size dataset record index, the default value is 0.

indexTemplateOrder: ${SW_STORAGE_ES_INDEX_TEMPLATE_ORDER:0} # the order of index template

bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests

# flush the bulk every 10 seconds whatever the number of requests

# INT(flushInterval * 2/3) would be used for index refresh period.

flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}

concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests

resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}

metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:10000}

scrollingBatchSize: ${SW_STORAGE_ES_SCROLLING_BATCH_SIZE:5000}

segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}

profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}

oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # the oap analyzer.

oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # the oap log analyzer. It could be customized by the ES analyzer configuration to support more language log formats, such as Chinese log, Japanese log and etc.

advanced: ${SW_STORAGE_ES_ADVANCED:""}

h2:

driver: ${SW_STORAGE_H2_DRIVER:org.h2.jdbcx.JdbcDataSource}

url: ${SW_STORAGE_H2_URL:jdbc:h2:mem:skywalking-oap-db;DB_CLOSE_DELAY=-1}

user: ${SW_STORAGE_H2_USER:sa}

metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:100}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:1}

mysql:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://192.168.1.103:3306/swtest?rewriteBatchedStatements=true"}

dataSource.user: ${SW_DATA_SOURCE_USER:root}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

tidb:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://localhost:4000/tidbswtest?rewriteBatchedStatements=true"}

dataSource.user: ${SW_DATA_SOURCE_USER:root}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:""}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

dataSource.useAffectedRows: ${SW_DATA_SOURCE_USE_AFFECTED_ROWS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

influxdb:

# InfluxDB configuration

url: ${SW_STORAGE_INFLUXDB_URL:http://localhost:8086}

user: ${SW_STORAGE_INFLUXDB_USER:root}

password: ${SW_STORAGE_INFLUXDB_PASSWORD:}

database: ${SW_STORAGE_INFLUXDB_DATABASE:skywalking}

actions: ${SW_STORAGE_INFLUXDB_ACTIONS:1000} # the number of actions to collect

duration: ${SW_STORAGE_INFLUXDB_DURATION:1000} # the time to wait at most (milliseconds)

batchEnabled: ${SW_STORAGE_INFLUXDB_BATCH_ENABLED:true}

fetchTaskLogMaxSize: ${SW_STORAGE_INFLUXDB_FETCH_TASK_LOG_MAX_SIZE:5000} # the max number of fetch task log in a request

connectionResponseFormat: ${SW_STORAGE_INFLUXDB_CONNECTION_RESPONSE_FORMAT:MSGPACK} # the response format of connection to influxDB, cannot be anything but MSGPACK or JSON.

postgresql:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:postgresql://localhost:5432/skywalking"}

dataSource.user: ${SW_DATA_SOURCE_USER:postgres}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

zipkin-elasticsearch:

namespace: ${SW_NAMESPACE:""}

clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}

protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}

trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}

trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}

dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.

indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes

indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes

# Super data set has been defined in the codes, such as trace segments.The following 3 config would be improve es performance when storage super size data in es.

superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represent the number of days in the super size dataset record index, the default value is the same as dayStep when the value is less than 0

superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # This factor provides more shards for the super data set, shards number = indexShardsNumber * superDatasetIndexShardsFactor. Also, this factor effects Zipkin and Jaeger traces.

superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represent the replicas number in the super size dataset record index, the default value is 0.

user: ${SW_ES_USER:""}

password: ${SW_ES_PASSWORD:""}

secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.

bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests

# flush the bulk every 10 seconds whatever the number of requests

# INT(flushInterval * 2/3) would be used for index refresh period.

flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}

concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests

resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}

metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}

segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}

profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}

oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # the oap analyzer.

oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # the oap log analyzer. It could be customized by the ES analyzer configuration to support more language log formats, such as Chinese log, Japanese log and etc.

advanced: ${SW_STORAGE_ES_ADVANCED:""}

iotdb:

host: ${SW_STORAGE_IOTDB_HOST:127.0.0.1}

rpcPort: ${SW_STORAGE_IOTDB_RPC_PORT:6667}

username: ${SW_STORAGE_IOTDB_USERNAME:root}

password: ${SW_STORAGE_IOTDB_PASSWORD:root}

storageGroup: ${SW_STORAGE_IOTDB_STORAGE_GROUP:root.skywalking}

sessionPoolSize: ${SW_STORAGE_IOTDB_SESSIONPOOL_SIZE:8} # If it's zero, the SessionPool size will be 2*CPU_Cores

fetchTaskLogMaxSize: ${SW_STORAGE_IOTDB_FETCH_TASK_LOG_MAX_SIZE:1000} # the max number of fetch task log in a request

agent-analyzer:

selector: ${SW_AGENT_ANALYZER:default}

default:

# The default sampling rate and the default trace latency time configured by the 'traceSamplingPolicySettingsFile' file.

traceSamplingPolicySettingsFile: ${SW_TRACE_SAMPLING_POLICY_SETTINGS_FILE:trace-sampling-policy-settings.yml}

slowDBAccessThreshold: ${SW_SLOW_DB_THRESHOLD:default:200,mongodb:100} # The slow database access thresholds. Unit ms.

forceSampleErrorSegment: ${SW_FORCE_SAMPLE_ERROR_SEGMENT:true} # When sampling mechanism active, this config can open(true) force save some error segment. true is default.

segmentStatusAnalysisStrategy: ${SW_SEGMENT_STATUS_ANALYSIS_STRATEGY:FROM_SPAN_STATUS} # Determine the final segment status from the status of spans. Available values are `FROM_SPAN_STATUS` , `FROM_ENTRY_SPAN` and `FROM_FIRST_SPAN`. `FROM_SPAN_STATUS` represents the segment status would be error if any span is in error status. `FROM_ENTRY_SPAN` means the segment status would be determined by the status of entry spans only. `FROM_FIRST_SPAN` means the segment status would be determined by the status of the first span only.

# Nginx and Envoy agents can't get the real remote address.

# Exit spans with the component in the list would not generate the client-side instance relation metrics.

noUpstreamRealAddressAgents: ${SW_NO_UPSTREAM_REAL_ADDRESS:6000,9000}

meterAnalyzerActiveFiles: ${SW_METER_ANALYZER_ACTIVE_FILES:datasource,threadpool,satellite,spring-sleuth} # Which files could be meter analyzed, files split by ","

log-analyzer:

selector: ${SW_LOG_ANALYZER:default}

default:

lalFiles: ${SW_LOG_LAL_FILES:default}

malFiles: ${SW_LOG_MAL_FILES:""}

event-analyzer:

selector: ${SW_EVENT_ANALYZER:default}

default:

receiver-sharing-server:

selector: ${SW_RECEIVER_SHARING_SERVER:default}

default:

# For Jetty server

restHost: ${SW_RECEIVER_SHARING_REST_HOST:node1}

restPort: ${SW_RECEIVER_SHARING_REST_PORT:0}

restContextPath: ${SW_RECEIVER_SHARING_REST_CONTEXT_PATH:/}

restMinThreads: ${SW_RECEIVER_SHARING_JETTY_MIN_THREADS:1}

restMaxThreads: ${SW_RECEIVER_SHARING_JETTY_MAX_THREADS:200}

restIdleTimeOut: ${SW_RECEIVER_SHARING_JETTY_IDLE_TIMEOUT:30000}

restAcceptQueueSize: ${SW_RECEIVER_SHARING_JETTY_QUEUE_SIZE:0}

httpMaxRequestHeaderSize: ${SW_RECEIVER_SHARING_HTTP_MAX_REQUEST_HEADER_SIZE:8192}

# For gRPC server

gRPCHost: ${SW_RECEIVER_GRPC_HOST:node1}

gRPCPort: ${SW_RECEIVER_GRPC_PORT:0}

maxConcurrentCallsPerConnection: ${SW_RECEIVER_GRPC_MAX_CONCURRENT_CALL:0}

maxMessageSize: ${SW_RECEIVER_GRPC_MAX_MESSAGE_SIZE:0}

gRPCThreadPoolQueueSize: ${SW_RECEIVER_GRPC_POOL_QUEUE_SIZE:0}

gRPCThreadPoolSize: ${SW_RECEIVER_GRPC_THREAD_POOL_SIZE:0}

gRPCSslEnabled: ${SW_RECEIVER_GRPC_SSL_ENABLED:false}

gRPCSslKeyPath: ${SW_RECEIVER_GRPC_SSL_KEY_PATH:""}

gRPCSslCertChainPath: ${SW_RECEIVER_GRPC_SSL_CERT_CHAIN_PATH:""}

gRPCSslTrustedCAsPath: ${SW_RECEIVER_GRPC_SSL_TRUSTED_CAS_PATH:""}

authentication: ${SW_AUTHENTICATION:""}

receiver-register:

selector: ${SW_RECEIVER_REGISTER:default}

default:

receiver-trace:

selector: ${SW_RECEIVER_TRACE:default}

default:

receiver-jvm:

selector: ${SW_RECEIVER_JVM:default}

default:

receiver-clr:

selector: ${SW_RECEIVER_CLR:default}

default:

receiver-profile:

selector: ${SW_RECEIVER_PROFILE:default}

default:

receiver-zabbix:

selector: ${SW_RECEIVER_ZABBIX:default}

default:

port: ${SW_RECEIVER_ZABBIX_PORT:10051}

host: ${SW_RECEIVER_ZABBIX_HOST:node1}

activeFiles: ${SW_RECEIVER_ZABBIX_ACTIVE_FILES:agent}

service-mesh:

selector: ${SW_SERVICE_MESH:default}

default:

envoy-metric:

selector: ${SW_ENVOY_METRIC:default}

default:

acceptMetricsService: ${SW_ENVOY_METRIC_SERVICE:true}

alsHTTPAnalysis: ${SW_ENVOY_METRIC_ALS_HTTP_ANALYSIS:""}

alsTCPAnalysis: ${SW_ENVOY_METRIC_ALS_TCP_ANALYSIS:""}

# `k8sServiceNameRule` allows you to customize the service name in ALS via Kubernetes metadata,

# the available variables are `pod`, `service`, f.e., you can use `${service.metadata.name}-${pod.metadata.labels.version}`

# to append the version number to the service name.

# Be careful, when using environment variables to pass this configuration, use single quotes(`''`) to avoid it being evaluated by the shell.

k8sServiceNameRule: ${K8S_SERVICE_NAME_RULE:"${pod.metadata.labels.(service.istio.io/canonical-name)}"}

prometheus-fetcher:
  selector: ${SW_PROMETHEUS_FETCHER:default}
  default:
    enabledRules: ${SW_PROMETHEUS_FETCHER_ENABLED_RULES:"self"}
    maxConvertWorker: ${SW_PROMETHEUS_FETCHER_NUM_CONVERT_WORKER:-1}
    active: ${SW_PROMETHEUS_FETCHER_ACTIVE:true}

kafka-fetcher:

selector: ${SW_KAFKA_FETCHER:-}

default:

bootstrapServers: ${SW_KAFKA_FETCHER_SERVERS:localhost:9092}

namespace: ${SW_NAMESPACE:""}

partitions: ${SW_KAFKA_FETCHER_PARTITIONS:3}

replicationFactor: ${SW_KAFKA_FETCHER_PARTITIONS_FACTOR:2}

enableNativeProtoLog: ${SW_KAFKA_FETCHER_ENABLE_NATIVE_PROTO_LOG:true}

enableNativeJsonLog: ${SW_KAFKA_FETCHER_ENABLE_NATIVE_JSON_LOG:true}

isSharding: ${SW_KAFKA_FETCHER_IS_SHARDING:false}

consumePartitions: ${SW_KAFKA_FETCHER_CONSUME_PARTITIONS:""}

kafkaHandlerThreadPoolSize: ${SW_KAFKA_HANDLER_THREAD_POOL_SIZE:-1}

kafkaHandlerThreadPoolQueueSize: ${SW_KAFKA_HANDLER_THREAD_POOL_QUEUE_SIZE:-1}

receiver-meter:

selector: ${SW_RECEIVER_METER:default}

default:

receiver-otel:

selector: ${SW_OTEL_RECEIVER:default}

default:

enabledHandlers: ${SW_OTEL_RECEIVER_ENABLED_HANDLERS:"oc"}

enabledOcRules: ${SW_OTEL_RECEIVER_ENABLED_OC_RULES:"istio-controlplane,k8s-node,oap,vm"}

receiver-zipkin:

selector: ${SW_RECEIVER_ZIPKIN:-}

default:

host: ${SW_RECEIVER_ZIPKIN_HOST:0.0.0.0}

port: ${SW_RECEIVER_ZIPKIN_PORT:9411}

contextPath: ${SW_RECEIVER_ZIPKIN_CONTEXT_PATH:/}

jettyMinThreads: ${SW_RECEIVER_ZIPKIN_JETTY_MIN_THREADS:1}

jettyMaxThreads: ${SW_RECEIVER_ZIPKIN_JETTY_MAX_THREADS:200}

jettyIdleTimeOut: ${SW_RECEIVER_ZIPKIN_JETTY_IDLE_TIMEOUT:30000}

jettyAcceptorPriorityDelta: ${SW_RECEIVER_ZIPKIN_JETTY_DELTA:0}

jettyAcceptQueueSize: ${SW_RECEIVER_ZIPKIN_QUEUE_SIZE:0}

instanceNameRule: ${SW_RECEIVER_ZIPKIN_INSTANCE_NAME_RULE:[spring.instance_id,node_id]}

receiver-browser:

selector: ${SW_RECEIVER_BROWSER:default}

default:

# The sample rate precision is 1/10000. 10000 means 100% sample in default.

sampleRate: ${SW_RECEIVER_BROWSER_SAMPLE_RATE:10000}

receiver-log:

selector: ${SW_RECEIVER_LOG:default}

default:

query:

selector: ${SW_QUERY:graphql}

graphql:

# Enable the log testing API to test the LAL.

# NOTE: This API evaluates untrusted code on the OAP server.

# A malicious script can do significant damage (steal keys and secrets, remove files and directories, install malware, etc).

# As such, please enable this API only when you completely trust your users.

enableLogTestTool: ${SW_QUERY_GRAPHQL_ENABLE_LOG_TEST_TOOL:false}

# Maximum complexity allowed for the GraphQL query that can be used to

# abort a query if the total number of data fields queried exceeds the defined threshold.

maxQueryComplexity: ${SW_QUERY_MAX_QUERY_COMPLEXITY:100}

# Allow user add, disable and update UI template

enableUpdateUITemplate: ${SW_ENABLE_UPDATE_UI_TEMPLATE:false}

alarm:

selector: ${SW_ALARM:default}

default:

telemetry:
  selector: ${SW_TELEMETRY:prometheus}
  none:
  prometheus:
    host: ${SW_TELEMETRY_PROMETHEUS_HOST:node1}
    port: ${SW_TELEMETRY_PROMETHEUS_PORT:1234}
    sslEnabled: ${SW_TELEMETRY_PROMETHEUS_SSL_ENABLED:false}
    sslKeyPath: ${SW_TELEMETRY_PROMETHEUS_SSL_KEY_PATH:""}
    sslCertChainPath: ${SW_TELEMETRY_PROMETHEUS_SSL_CERT_CHAIN_PATH:""}

configuration:

selector: ${SW_CONFIGURATION:none}

none:

grpc:

host: ${SW_DCS_SERVER_HOST:""}

port: ${SW_DCS_SERVER_PORT:80}

clusterName: ${SW_DCS_CLUSTER_NAME:SkyWalking}

period: ${SW_DCS_PERIOD:20}

apollo:

apolloMeta: ${SW_CONFIG_APOLLO:http://localhost:8080}

apolloCluster: ${SW_CONFIG_APOLLO_CLUSTER:default}

apolloEnv: ${SW_CONFIG_APOLLO_ENV:""}

appId: ${SW_CONFIG_APOLLO_APP_ID:skywalking}

period: ${SW_CONFIG_APOLLO_PERIOD:60}

zookeeper:

period: ${SW_CONFIG_ZK_PERIOD:60} # Unit seconds, sync period. Default fetch every 60 seconds.

namespace: ${SW_CONFIG_ZK_NAMESPACE:/default}

hostPort: ${SW_CONFIG_ZK_HOST_PORT:localhost:2181}

# Retry Policy

baseSleepTimeMs: ${SW_CONFIG_ZK_BASE_SLEEP_TIME_MS:1000} # initial amount of time to wait between retries

maxRetries: ${SW_CONFIG_ZK_MAX_RETRIES:3} # max number of times to retry

etcd:

period: ${SW_CONFIG_ETCD_PERIOD:60} # Unit seconds, sync period. Default fetch every 60 seconds.

endpoints: ${SW_CONFIG_ETCD_ENDPOINTS:http://localhost:2379}

namespace: ${SW_CONFIG_ETCD_NAMESPACE:/skywalking}

authentication: ${SW_CONFIG_ETCD_AUTHENTICATION:false}

user: ${SW_CONFIG_ETCD_USER:}

password: ${SW_CONFIG_ETCD_password:}

consul:

# Consul host and ports, separated by comma, e.g. 1.2.3.4:8500,2.3.4.5:8500

hostAndPorts: ${SW_CONFIG_CONSUL_HOST_AND_PORTS:1.2.3.4:8500}

# Sync period in seconds. Defaults to 60 seconds.

period: ${SW_CONFIG_CONSUL_PERIOD:60}

# Consul aclToken

aclToken: ${SW_CONFIG_CONSUL_ACL_TOKEN:""}

k8s-configmap:

period: ${SW_CONFIG_CONFIGMAP_PERIOD:60}

namespace: ${SW_CLUSTER_K8S_NAMESPACE:default}

labelSelector: ${SW_CLUSTER_K8S_LABEL:app=collector,release=skywalking}

nacos:

# Nacos Server Host

serverAddr: ${SW_CONFIG_NACOS_SERVER_ADDR:192.168.1.103}

# Nacos Server Port

port: ${SW_CONFIG_NACOS_SERVER_PORT:8848}

# Nacos Configuration Group

group: ${SW_CONFIG_NACOS_SERVER_GROUP:skywalking}

# Nacos Configuration namespace

namespace: ${SW_CONFIG_NACOS_SERVER_NAMESPACE:}

# Unit seconds, sync period. Default fetch every 60 seconds.

period: ${SW_CONFIG_NACOS_PERIOD:60}

# Nacos auth username

username: ${SW_CONFIG_NACOS_USERNAME:""}

password: ${SW_CONFIG_NACOS_PASSWORD:""}

# Nacos auth accessKey

accessKey: ${SW_CONFIG_NACOS_ACCESSKEY:""}

secretKey: ${SW_CONFIG_NACOS_SECRETKEY:""}

exporter:

selector: ${SW_EXPORTER:-}

grpc:

targetHost: ${SW_EXPORTER_GRPC_HOST:127.0.0.1}

targetPort: ${SW_EXPORTER_GRPC_PORT:9870}

health-checker:

selector: ${SW_HEALTH_CHECKER:-}

default:

checkIntervalSeconds: ${SW_HEALTH_CHECKER_INTERVAL_SECONDS:5}

configuration-discovery:

selector: ${SW_CONFIGURATION_DISCOVERY:default}

default:

disableMessageDigest: ${SW_DISABLE_MESSAGE_DIGEST:false}

receiver-event:

selector: ${SW_RECEIVER_EVENT:default}

default:

receiver-ebpf:

selector: ${SW_RECEIVER_EBPF:default}

default:
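
Two settings in the file above relate to the self-monitoring mentioned in the title: the `telemetry` selector is set to `prometheus` (with host `node1` and port `1234`), and `prometheus-fetcher` is switched on with the `self` rule. The Python sketch below checks those two conditions on already-resolved values. The dictionary layout and the name `self_observability_enabled` are assumptions made for illustration. They are not a format or API that SkyWalking defines.

```python
def self_observability_enabled(config):
    """Check the two settings this walkthrough changes for self-monitoring.

    `config` is a hypothetical dict of already-resolved values,
    keyed by the module names used in application.yml.
    """
    telemetry_ok = config.get("telemetry", {}).get("selector") == "prometheus"
    fetcher = config.get("prometheus-fetcher", {})
    # Assumption: a selector of "-" means the module is switched off,
    # as with kafka-fetcher and receiver-zipkin in the file above.
    fetcher_on = fetcher.get("selector", "-") != "-"
    rules = [rule.strip() for rule in fetcher.get("enabledRules", "").split(",")]
    return telemetry_ok and fetcher_on and "self" in rules


# Values taken from node1's application.yml as shown above.
node1 = {
    "telemetry": {"selector": "prometheus", "host": "node1", "port": 1234},
    "prometheus-fetcher": {"selector": "default", "enabledRules": "self"},
}

if __name__ == "__main__":
    print(self_observability_enabled(node1))
```

A check like this is only a convenience for comparing nodes. Whether metrics actually show up still depends on the OAP server starting with these modules.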

3.2 Edit webapp.yml on the first server

[root@node1 webapp]# cat webapp.yml

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

server:
  port: 8888

spring:
  cloud:
    gateway:
      routes:
        - id: oap-route
          uri: lb://oap-service
          predicates:
            - Path=/graphql/**
    discovery:
      client:
        simple:
          instances:
            oap-service:
              - uri: http://node1:12800
              - uri: http://node2:12800
              - uri: http://node3:12800
            # - uri: http://:
            # - uri: http://:

  mvc:
    throw-exception-if-no-handler-found: true

  web:
    resources:
      add-mappings: true

management:
  server:
    base-path: /manage
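
The `lb://oap-service` route above is resolved against the three `oap-service` instances listed under `discovery.client.simple.instances`, so UI queries to `/graphql` can reach any of the OAP nodes. As a rough illustration of what spreading requests across those nodes means, here is a Python sketch of plain round-robin selection. That the gateway rotates in exactly this order is an assumption, and `next_oap` is a hypothetical name.

```python
from itertools import cycle

# The three OAP REST endpoints listed in webapp.yml above.
OAP_INSTANCES = [
    "http://node1:12800",
    "http://node2:12800",
    "http://node3:12800",
]

# Endless iterator that walks the list and wraps around at the end.
_rotation = cycle(OAP_INSTANCES)


def next_oap():
    """Return the next OAP endpoint in round-robin order."""
    return next(_rotation)


if __name__ == "__main__":
    # Four requests: the fourth goes back to the first endpoint.
    for _ in range(4):
        print(next_oap() + "/graphql")
```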

3.3 Edit application.yml on the second server

[root@node2 config]# cat application.yml

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

cluster:
  selector: ${SW_CLUSTER:nacos}
  #selector: ${SW_CLUSTER:standalone}
  standalone:
  # Please check your ZooKeeper is 3.5+, However, it is also compatible with ZooKeeper 3.4.x. Replace the ZooKeeper 3.5+
  # library the oap-libs folder with your ZooKeeper 3.4.x library.
  zookeeper:
    namespace: ${SW_NAMESPACE:""}
    hostPort: ${SW_CLUSTER_ZK_HOST_PORT:localhost:2181}
    # Retry Policy
    baseSleepTimeMs: ${SW_CLUSTER_ZK_SLEEP_TIME:1000} # initial amount of time to wait between retries
    maxRetries: ${SW_CLUSTER_ZK_MAX_RETRIES:3} # max number of times to retry
    # Enable ACL
    enableACL: ${SW_ZK_ENABLE_ACL:false} # disable ACL in default
    schema: ${SW_ZK_SCHEMA:digest} # only support digest schema
    expression: ${SW_ZK_EXPRESSION:skywalking:skywalking}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}
  kubernetes:
    namespace: ${SW_CLUSTER_K8S_NAMESPACE:default}
    labelSelector: ${SW_CLUSTER_K8S_LABEL:app=collector,release=skywalking}
    uidEnvName: ${SW_CLUSTER_K8S_UID:SKYWALKING_COLLECTOR_UID}
  consul:
    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    # Consul cluster nodes, example: 10.0.0.1:8500,10.0.0.2:8500,10.0.0.3:8500
    hostPort: ${SW_CLUSTER_CONSUL_HOST_PORT:localhost:8500}
    aclToken: ${SW_CLUSTER_CONSUL_ACLTOKEN:""}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}
  etcd:
    # etcd cluster nodes, example: 10.0.0.1:2379,10.0.0.2:2379,10.0.0.3:2379
    endpoints: ${SW_CLUSTER_ETCD_ENDPOINTS:localhost:2379}
    namespace: ${SW_CLUSTER_ETCD_NAMESPACE:/skywalking}
    serviceName: ${SW_CLUSTER_ETCD_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    authentication: ${SW_CLUSTER_ETCD_AUTHENTICATION:false}
    user: ${SW_CLUSTER_ETCD_USER:}
    password: ${SW_CLUSTER_ETCD_PASSWORD:}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}
  nacos:
    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    hostPort: ${SW_CLUSTER_NACOS_HOST_PORT:192.168.1.103:8848}
    # Nacos Configuration namespace
    namespace: ${SW_CLUSTER_NACOS_NAMESPACE:"public"}
    # Nacos auth username
    username: ${SW_CLUSTER_NACOS_USERNAME:""}
    password: ${SW_CLUSTER_NACOS_PASSWORD:""}
    # Nacos auth accessKey
    accessKey: ${SW_CLUSTER_NACOS_ACCESSKEY:""}
    secretKey: ${SW_CLUSTER_NACOS_SECRETKEY:""}
    internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}
    internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}

core:

selector: ${SW_CORE:default}

default:

# Mixed: Receive agent data, Level 1 aggregate, Level 2 aggregate

# Receiver: Receive agent data, Level 1 aggregate

# Aggregator: Level 2 aggregate

role: ${SW_CORE_ROLE:Mixed} # Mixed/Receiver/Aggregator

restHost: ${SW_CORE_REST_HOST:node2}

restPort: ${SW_CORE_REST_PORT:12800}

restContextPath: ${SW_CORE_REST_CONTEXT_PATH:/}

restMinThreads: ${SW_CORE_REST_JETTY_MIN_THREADS:1}

restMaxThreads: ${SW_CORE_REST_JETTY_MAX_THREADS:200}

restIdleTimeOut: ${SW_CORE_REST_JETTY_IDLE_TIMEOUT:30000}

restAcceptQueueSize: ${SW_CORE_REST_JETTY_QUEUE_SIZE:0}

httpMaxRequestHeaderSize: ${SW_CORE_HTTP_MAX_REQUEST_HEADER_SIZE:8192}

gRPCHost: ${SW_CORE_GRPC_HOST:node2}

gRPCPort: ${SW_CORE_GRPC_PORT:11800}

maxConcurrentCallsPerConnection: ${SW_CORE_GRPC_MAX_CONCURRENT_CALL:0}

maxMessageSize: ${SW_CORE_GRPC_MAX_MESSAGE_SIZE:0}

gRPCThreadPoolQueueSize: ${SW_CORE_GRPC_POOL_QUEUE_SIZE:-1}

gRPCThreadPoolSize: ${SW_CORE_GRPC_THREAD_POOL_SIZE:-1}

gRPCSslEnabled: ${SW_CORE_GRPC_SSL_ENABLED:false}

gRPCSslKeyPath: ${SW_CORE_GRPC_SSL_KEY_PATH:""}

gRPCSslCertChainPath: ${SW_CORE_GRPC_SSL_CERT_CHAIN_PATH:""}

gRPCSslTrustedCAPath: ${SW_CORE_GRPC_SSL_TRUSTED_CA_PATH:""}

downsampling:

- Hour

- Day

# Set a timeout on metrics data. After the timeout has expired, the metrics data will automatically be deleted.

enableDataKeeperExecutor: ${SW_CORE_ENABLE_DATA_KEEPER_EXECUTOR:true} # Turn it off then automatically metrics data delete will be close.

dataKeeperExecutePeriod: ${SW_CORE_DATA_KEEPER_EXECUTE_PERIOD:5} # How often the data keeper executor runs periodically, unit is minute

recordDataTTL: ${SW_CORE_RECORD_DATA_TTL:3} # Unit is day

metricsDataTTL: ${SW_CORE_METRICS_DATA_TTL:7} # Unit is day

# The period of L1 aggregation flush to L2 aggregation. Unit is ms.

l1FlushPeriod: ${SW_CORE_L1_AGGREGATION_FLUSH_PERIOD:500}

# The threshold of session time. Unit is ms. Default value is 70s.

storageSessionTimeout: ${SW_CORE_STORAGE_SESSION_TIMEOUT:70000}

# The period of doing data persistence. Unit is second.Default value is 25s

persistentPeriod: ${SW_CORE_PERSISTENT_PERIOD:25}

# Cache metrics data for 1 minute to reduce database queries, and if the OAP cluster changes within that minute,

# the metrics may not be accurate within that minute.

enableDatabaseSession: ${SW_CORE_ENABLE_DATABASE_SESSION:true}

topNReportPeriod: ${SW_CORE_TOPN_REPORT_PERIOD:10} # top_n record worker report cycle, unit is minute

# Extra model column are the column defined by in the codes, These columns of model are not required logically in aggregation or further query,

# and it will cause more load for memory, network of OAP and storage.

# But, being activated, user could see the name in the storage entities, which make users easier to use 3rd party tool, such as Kibana->ES, to query the data by themselves.

activeExtraModelColumns: ${SW_CORE_ACTIVE_EXTRA_MODEL_COLUMNS:false}

# The max length of service + instance names should be less than 200

serviceNameMaxLength: ${SW_SERVICE_NAME_MAX_LENGTH:70}

instanceNameMaxLength: ${SW_INSTANCE_NAME_MAX_LENGTH:70}

# The max length of service + endpoint names should be less than 240

endpointNameMaxLength: ${SW_ENDPOINT_NAME_MAX_LENGTH:150}

# Define the set of span tag keys, which should be searchable through the GraphQL.

searchableTracesTags: ${SW_SEARCHABLE_TAG_KEYS:http.method,status_code,db.type,db.instance,mq.queue,mq.topic,mq.broker}

# Define the set of log tag keys, which should be searchable through the GraphQL.

searchableLogsTags: ${SW_SEARCHABLE_LOGS_TAG_KEYS:level}

# Define the set of alarm tag keys, which should be searchable through the GraphQL.

searchableAlarmTags: ${SW_SEARCHABLE_ALARM_TAG_KEYS:level}

# The number of threads used to prepare metrics data to the storage.

prepareThreads: ${SW_CORE_PREPARE_THREADS:2}

# Turn it on then automatically grouping endpoint by the given OpenAPI definitions.

enableEndpointNameGroupingByOpenapi: ${SW_CORE_ENABLE_ENDPOINT_NAME_GROUPING_BY_OPAENAPI:true}

storage:

selector: ${SW_STORAGE:mysql}

elasticsearch:

namespace: ${SW_NAMESPACE:""}

clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}

protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}

connectTimeout: ${SW_STORAGE_ES_CONNECT_TIMEOUT:3000}

socketTimeout: ${SW_STORAGE_ES_SOCKET_TIMEOUT:30000}

responseTimeout: ${SW_STORAGE_ES_RESPONSE_TIMEOUT:15000}

numHttpClientThread: ${SW_STORAGE_ES_NUM_HTTP_CLIENT_THREAD:0}

user: ${SW_ES_USER:""}

password: ${SW_ES_PASSWORD:""}

trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}

trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}

secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.

dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.

indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes

indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes

# Super data set has been defined in the codes, such as trace segments.The following 3 config would be improve es performance when storage super size data in es.

superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represent the number of days in the super size dataset record index, the default value is the same as dayStep when the value is less than 0

superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # This factor provides more shards for the super data set, shards number = indexShardsNumber * superDatasetIndexShardsFactor. Also, this factor effects Zipkin and Jaeger traces.

superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represent the replicas number in the super size dataset record index, the default value is 0.

indexTemplateOrder: ${SW_STORAGE_ES_INDEX_TEMPLATE_ORDER:0} # the order of index template

bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests

# flush the bulk every 10 seconds whatever the number of requests

# INT(flushInterval * 2/3) would be used for index refresh period.

flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}

concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests

resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}

metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:10000}

scrollingBatchSize: ${SW_STORAGE_ES_SCROLLING_BATCH_SIZE:5000}

segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}

profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}

oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # the oap analyzer.

oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # the oap log analyzer. It could be customized by the ES analyzer configuration to support more language log formats, such as Chinese log, Japanese log and etc.

advanced: ${SW_STORAGE_ES_ADVANCED:""}

h2:

driver: ${SW_STORAGE_H2_DRIVER:org.h2.jdbcx.JdbcDataSource}

url: ${SW_STORAGE_H2_URL:jdbc:h2:mem:skywalking-oap-db;DB_CLOSE_DELAY=-1}

user: ${SW_STORAGE_H2_USER:sa}

metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:100}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:1}

mysql:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://192.168.1.103:3306/swtest?rewriteBatchedStatements=true"}

dataSource.user: ${SW_DATA_SOURCE_USER:root}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

tidb:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://localhost:4000/tidbswtest?rewriteBatchedStatements=true"}

dataSource.user: ${SW_DATA_SOURCE_USER:root}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:""}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

dataSource.useAffectedRows: ${SW_DATA_SOURCE_USE_AFFECTED_ROWS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

influxdb:

# InfluxDB configuration

url: ${SW_STORAGE_INFLUXDB_URL:http://localhost:8086}

user: ${SW_STORAGE_INFLUXDB_USER:root}

password: ${SW_STORAGE_INFLUXDB_PASSWORD:}

database: ${SW_STORAGE_INFLUXDB_DATABASE:skywalking}

actions: ${SW_STORAGE_INFLUXDB_ACTIONS:1000} # the number of actions to collect

duration: ${SW_STORAGE_INFLUXDB_DURATION:1000} # the time to wait at most (milliseconds)

batchEnabled: ${SW_STORAGE_INFLUXDB_BATCH_ENABLED:true}

fetchTaskLogMaxSize: ${SW_STORAGE_INFLUXDB_FETCH_TASK_LOG_MAX_SIZE:5000} # the max number of fetch task log in a request

connectionResponseFormat: ${SW_STORAGE_INFLUXDB_CONNECTION_RESPONSE_FORMAT:MSGPACK} # the response format of connection to influxDB, cannot be anything but MSGPACK or JSON.

postgresql:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:postgresql://localhost:5432/skywalking"}

dataSource.user: ${SW_DATA_SOURCE_USER:postgres}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

zipkin-elasticsearch:

namespace: ${SW_NAMESPACE:""}

clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}

protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}

trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}

trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}

dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.

indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes

indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes

# Super data set has been defined in the codes, such as trace segments.The following 3 config would be improve es performance when storage super size data in es.

superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represent the number of days in the super size dataset record index, the default value is the same as dayStep when the value is less than 0

superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # This factor provides more shards for the super data set, shards number = indexShardsNumber * superDatasetIndexShardsFactor. Also, this factor effects Zipkin and Jaeger traces.

superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represent the replicas number in the super size dataset record index, the default value is 0.

user: ${SW_ES_USER:""}

password: ${SW_ES_PASSWORD:""}

secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.

bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests

# flush the bulk every 10 seconds whatever the number of requests

# INT(flushInterval * 2/3) would be used for index refresh period.

flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}

concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests

resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}

metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}

segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}

profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}

oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # the oap analyzer.

oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # the oap log analyzer. It could be customized by the ES analyzer configuration to support more language log formats, such as Chinese log, Japanese log and etc.

advanced: ${SW_STORAGE_ES_ADVANCED:""}

iotdb:

host: ${SW_STORAGE_IOTDB_HOST:127.0.0.1}

rpcPort: ${SW_STORAGE_IOTDB_RPC_PORT:6667}

username: ${SW_STORAGE_IOTDB_USERNAME:root}

password: ${SW_STORAGE_IOTDB_PASSWORD:root}

storageGroup: ${SW_STORAGE_IOTDB_STORAGE_GROUP:root.skywalking}

sessionPoolSize: ${SW_STORAGE_IOTDB_SESSIONPOOL_SIZE:8} # If it's zero, the SessionPool size will be 2*CPU_Cores

fetchTaskLogMaxSize: ${SW_STORAGE_IOTDB_FETCH_TASK_LOG_MAX_SIZE:1000} # the max number of fetch task log in a request

agent-analyzer:

selector: ${SW_AGENT_ANALYZER:default}

default:

# The default sampling rate and the default trace latency time configured by the 'traceSamplingPolicySettingsFile' file.

traceSamplingPolicySettingsFile: ${SW_TRACE_SAMPLING_POLICY_SETTINGS_FILE:trace-sampling-policy-settings.yml}

slowDBAccessThreshold: ${SW_SLOW_DB_THRESHOLD:default:200,mongodb:100} # The slow database access thresholds. Unit ms.

forceSampleErrorSegment: ${SW_FORCE_SAMPLE_ERROR_SEGMENT:true} # When sampling mechanism active, this config can open(true) force save some error segment. true is default.

segmentStatusAnalysisStrategy: ${SW_SEGMENT_STATUS_ANALYSIS_STRATEGY:FROM_SPAN_STATUS} # Determine the final segment status from the status of spans. Available values are `FROM_SPAN_STATUS` , `FROM_ENTRY_SPAN` and `FROM_FIRST_SPAN`. `FROM_SPAN_STATUS` represents the segment status would be error if any span is in error status. `FROM_ENTRY_SPAN` means the segment status would be determined by the status of entry spans only. `FROM_FIRST_SPAN` means the segment status would be determined by the status of the first span only.

# Nginx and Envoy agents can't get the real remote address.

# Exit spans with the component in the list would not generate the client-side instance relation metrics.

noUpstreamRealAddressAgents: ${SW_NO_UPSTREAM_REAL_ADDRESS:6000,9000}

meterAnalyzerActiveFiles: ${SW_METER_ANALYZER_ACTIVE_FILES:datasource,threadpool,satellite,spring-sleuth} # Which files could be meter analyzed, files split by ","

log-analyzer:

selector: ${SW_LOG_ANALYZER:default}

default:

lalFiles: ${SW_LOG_LAL_FILES:default}

malFiles: ${SW_LOG_MAL_FILES:""}

event-analyzer:

selector: ${SW_EVENT_ANALYZER:default}

default:

receiver-sharing-server:

selector: ${SW_RECEIVER_SHARING_SERVER:default}

default:

# For Jetty server

restHost: ${SW_RECEIVER_SHARING_REST_HOST:node2}

restPort: ${SW_RECEIVER_SHARING_REST_PORT:0}

restContextPath: ${SW_RECEIVER_SHARING_REST_CONTEXT_PATH:/}

restMinThreads: ${SW_RECEIVER_SHARING_JETTY_MIN_THREADS:1}

restMaxThreads: ${SW_RECEIVER_SHARING_JETTY_MAX_THREADS:200}

restIdleTimeOut: ${SW_RECEIVER_SHARING_JETTY_IDLE_TIMEOUT:30000}

restAcceptQueueSize: ${SW_RECEIVER_SHARING_JETTY_QUEUE_SIZE:0}

httpMaxRequestHeaderSize: ${SW_RECEIVER_SHARING_HTTP_MAX_REQUEST_HEADER_SIZE:8192}

# For gRPC server

gRPCHost: ${SW_RECEIVER_GRPC_HOST:node2}

gRPCPort: ${SW_RECEIVER_GRPC_PORT:0}

maxConcurrentCallsPerConnection: ${SW_RECEIVER_GRPC_MAX_CONCURRENT_CALL:0}

maxMessageSize: ${SW_RECEIVER_GRPC_MAX_MESSAGE_SIZE:0}

gRPCThreadPoolQueueSize: ${SW_RECEIVER_GRPC_POOL_QUEUE_SIZE:0}

gRPCThreadPoolSize: ${SW_RECEIVER_GRPC_THREAD_POOL_SIZE:0}

gRPCSslEnabled: ${SW_RECEIVER_GRPC_SSL_ENABLED:false}

gRPCSslKeyPath: ${SW_RECEIVER_GRPC_SSL_KEY_PATH:""}

gRPCSslCertChainPath: ${SW_RECEIVER_GRPC_SSL_CERT_CHAIN_PATH:""}

gRPCSslTrustedCAsPath: ${SW_RECEIVER_GRPC_SSL_TRUSTED_CAS_PATH:""}

authentication: ${SW_AUTHENTICATION:""}

receiver-register:

selector: ${SW_RECEIVER_REGISTER:default}

default:

receiver-trace:

selector: ${SW_RECEIVER_TRACE:default}

default:

receiver-jvm:

selector: ${SW_RECEIVER_JVM:default}

default:

receiver-clr:

selector: ${SW_RECEIVER_CLR:default}

default:

receiver-profile:

selector: ${SW_RECEIVER_PROFILE:default}

default:

receiver-zabbix:

selector: ${SW_RECEIVER_ZABBIX:default}

default:

port: ${SW_RECEIVER_ZABBIX_PORT:10051}

host: ${SW_RECEIVER_ZABBIX_HOST:node2}

activeFiles: ${SW_RECEIVER_ZABBIX_ACTIVE_FILES:agent}

service-mesh:

selector: ${SW_SERVICE_MESH:default}

default:

envoy-metric:

selector: ${SW_ENVOY_METRIC:default}

default:

acceptMetricsService: ${SW_ENVOY_METRIC_SERVICE:true}

alsHTTPAnalysis: ${SW_ENVOY_METRIC_ALS_HTTP_ANALYSIS:""}

alsTCPAnalysis: ${SW_ENVOY_METRIC_ALS_TCP_ANALYSIS:""}

# `k8sServiceNameRule` allows you to customize the service name in ALS via Kubernetes metadata,

# the available variables are `pod`, `service`, f.e., you can use `${service.metadata.name}-${pod.metadata.labels.version}`

# to append the version number to the service name.

# Be careful, when using environment variables to pass this configuration, use single quotes(`''`) to avoid it being evaluated by the shell.

k8sServiceNameRule: ${K8S_SERVICE_NAME_RULE:"${pod.metadata.labels.(service.istio.io/canonical-name)}"}

prometheus-fetcher:

selector: ${SW_PROMETHEUS_FETCHER:default}

default:

enabledRules: ${SW_PROMETHEUS_FETCHER_ENABLED_RULES:"self"}

maxConvertWorker: ${SW_PROMETHEUS_FETCHER_NUM_CONVERT_WORKER:-1}

active: ${SW_PROMETHEUS_FETCHER_ACTIVE:true}

kafka-fetcher:

selector: ${SW_KAFKA_FETCHER:-}

default:

bootstrapServers: ${SW_KAFKA_FETCHER_SERVERS:localhost:9092}

namespace: ${SW_NAMESPACE:""}

partitions: ${SW_KAFKA_FETCHER_PARTITIONS:3}

replicationFactor: ${SW_KAFKA_FETCHER_PARTITIONS_FACTOR:2}

enableNativeProtoLog: ${SW_KAFKA_FETCHER_ENABLE_NATIVE_PROTO_LOG:true}

enableNativeJsonLog: ${SW_KAFKA_FETCHER_ENABLE_NATIVE_JSON_LOG:true}

isSharding: ${SW_KAFKA_FETCHER_IS_SHARDING:false}

consumePartitions: ${SW_KAFKA_FETCHER_CONSUME_PARTITIONS:""}

kafkaHandlerThreadPoolSize: ${SW_KAFKA_HANDLER_THREAD_POOL_SIZE:-1}

kafkaHandlerThreadPoolQueueSize: ${SW_KAFKA_HANDLER_THREAD_POOL_QUEUE_SIZE:-1}

receiver-meter:

selector: ${SW_RECEIVER_METER:default}

default:

receiver-otel:

selector: ${SW_OTEL_RECEIVER:default}

default:

enabledHandlers: ${SW_OTEL_RECEIVER_ENABLED_HANDLERS:"oc"}

enabledOcRules: ${SW_OTEL_RECEIVER_ENABLED_OC_RULES:"istio-controlplane,k8s-node,oap,vm"}

receiver-zipkin:

selector: ${SW_RECEIVER_ZIPKIN:-}

default:

host: ${SW_RECEIVER_ZIPKIN_HOST:0.0.0.0}

port: ${SW_RECEIVER_ZIPKIN_PORT:9411}

contextPath: ${SW_RECEIVER_ZIPKIN_CONTEXT_PATH:/}

jettyMinThreads: ${SW_RECEIVER_ZIPKIN_JETTY_MIN_THREADS:1}

jettyMaxThreads: ${SW_RECEIVER_ZIPKIN_JETTY_MAX_THREADS:200}

jettyIdleTimeOut: ${SW_RECEIVER_ZIPKIN_JETTY_IDLE_TIMEOUT:30000}

jettyAcceptorPriorityDelta: ${SW_RECEIVER_ZIPKIN_JETTY_DELTA:0}

jettyAcceptQueueSize: ${SW_RECEIVER_ZIPKIN_QUEUE_SIZE:0}

instanceNameRule: ${SW_RECEIVER_ZIPKIN_INSTANCE_NAME_RULE:[spring.instance_id,node_id]}

receiver-browser:

selector: ${SW_RECEIVER_BROWSER:default}

default:

# The sample rate precision is 1/10000. 10000 means 100% sample in default.

sampleRate: ${SW_RECEIVER_BROWSER_SAMPLE_RATE:10000}

receiver-log:

selector: ${SW_RECEIVER_LOG:default}

default:

query:

selector: ${SW_QUERY:graphql}

graphql:

# Enable the log testing API to test the LAL.

# NOTE: This API evaluates untrusted code on the OAP server.

# A malicious script can do significant damage (steal keys and secrets, remove files and directories, install malware, etc).

# As such, please enable this API only when you completely trust your users.

enableLogTestTool: ${SW_QUERY_GRAPHQL_ENABLE_LOG_TEST_TOOL:false}

# Maximum complexity allowed for the GraphQL query that can be used to

# abort a query if the total number of data fields queried exceeds the defined threshold.

maxQueryComplexity: ${SW_QUERY_MAX_QUERY_COMPLEXITY:100}

# Allow user add, disable and update UI template

enableUpdateUITemplate: ${SW_ENABLE_UPDATE_UI_TEMPLATE:false}

alarm:

selector: ${SW_ALARM:default}

default:

telemetry:

selector: ${SW_TELEMETRY:prometheus}

none:

prometheus:

host: ${SW_TELEMETRY_PROMETHEUS_HOST:node2}

port: ${SW_TELEMETRY_PROMETHEUS_PORT:1234}

sslEnabled: ${SW_TELEMETRY_PROMETHEUS_SSL_ENABLED:false}

sslKeyPath: ${SW_TELEMETRY_PROMETHEUS_SSL_KEY_PATH:""}

sslCertChainPath: ${SW_TELEMETRY_PROMETHEUS_SSL_CERT_CHAIN_PATH:""}

configuration:

selector: ${SW_CONFIGURATION:none}

none:

grpc:

host: ${SW_DCS_SERVER_HOST:""}

port: ${SW_DCS_SERVER_PORT:80}

clusterName: ${SW_DCS_CLUSTER_NAME:SkyWalking}

period: ${SW_DCS_PERIOD:20}

apollo:

apolloMeta: ${SW_CONFIG_APOLLO:http://localhost:8080}

apolloCluster: ${SW_CONFIG_APOLLO_CLUSTER:default}

apolloEnv: ${SW_CONFIG_APOLLO_ENV:""}

appId: ${SW_CONFIG_APOLLO_APP_ID:skywalking}

period: ${SW_CONFIG_APOLLO_PERIOD:60}

zookeeper:

period: ${SW_CONFIG_ZK_PERIOD:60} # Unit seconds, sync period. Default fetch every 60 seconds.

namespace: ${SW_CONFIG_ZK_NAMESPACE:/default}

hostPort: ${SW_CONFIG_ZK_HOST_PORT:localhost:2181}

# Retry Policy

baseSleepTimeMs: ${SW_CONFIG_ZK_BASE_SLEEP_TIME_MS:1000} # initial amount of time to wait between retries

maxRetries: ${SW_CONFIG_ZK_MAX_RETRIES:3} # max number of times to retry

etcd:

period: ${SW_CONFIG_ETCD_PERIOD:60} # Unit seconds, sync period. Default fetch every 60 seconds.

endpoints: ${SW_CONFIG_ETCD_ENDPOINTS:http://localhost:2379}

namespace: ${SW_CONFIG_ETCD_NAMESPACE:/skywalking}

authentication: ${SW_CONFIG_ETCD_AUTHENTICATION:false}

user: ${SW_CONFIG_ETCD_USER:}

password: ${SW_CONFIG_ETCD_password:}

consul:

# Consul host and ports, separated by comma, e.g. 1.2.3.4:8500,2.3.4.5:8500

hostAndPorts: ${SW_CONFIG_CONSUL_HOST_AND_PORTS:1.2.3.4:8500}

# Sync period in seconds. Defaults to 60 seconds.

period: ${SW_CONFIG_CONSUL_PERIOD:60}

# Consul aclToken

aclToken: ${SW_CONFIG_CONSUL_ACL_TOKEN:""}

k8s-configmap:

period: ${SW_CONFIG_CONFIGMAP_PERIOD:60}

namespace: ${SW_CLUSTER_K8S_NAMESPACE:default}

labelSelector: ${SW_CLUSTER_K8S_LABEL:app=collector,release=skywalking}

nacos:

# Nacos Server Host

serverAddr: ${SW_CONFIG_NACOS_SERVER_ADDR:192.168.1.103}

# Nacos Server Port

port: ${SW_CONFIG_NACOS_SERVER_PORT:8848}

# Nacos Configuration Group

group: ${SW_CONFIG_NACOS_SERVER_GROUP:skywalking}

# Nacos Configuration namespace

namespace: ${SW_CONFIG_NACOS_SERVER_NAMESPACE:}

# Unit seconds, sync period. Default fetch every 60 seconds.

period: ${SW_CONFIG_NACOS_PERIOD:60}

# Nacos auth username

username: ${SW_CONFIG_NACOS_USERNAME:""}

password: ${SW_CONFIG_NACOS_PASSWORD:""}

# Nacos auth accessKey

accessKey: ${SW_CONFIG_NACOS_ACCESSKEY:""}

secretKey: ${SW_CONFIG_NACOS_SECRETKEY:""}

exporter:

selector: ${SW_EXPORTER:-}

grpc:

targetHost: ${SW_EXPORTER_GRPC_HOST:127.0.0.1}

targetPort: ${SW_EXPORTER_GRPC_PORT:9870}

health-checker:

selector: ${SW_HEALTH_CHECKER:-}

default:

checkIntervalSeconds: ${SW_HEALTH_CHECKER_INTERVAL_SECONDS:5}

configuration-discovery:

selector: ${SW_CONFIGURATION_DISCOVERY:default}

default:

disableMessageDigest: ${SW_DISABLE_MESSAGE_DIGEST:false}

receiver-event:

selector: ${SW_RECEIVER_EVENT:default}

default:

receiver-ebpf:

selector: ${SW_RECEIVER_EBPF:default}

default:
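The listing above lost its indentation when it was pasted, which makes the handful of settings that matter hard to spot. The fragment below is a condensed sketch of the values this walkthrough relies on for node2. Every value is copied from the listing; the nesting is an assumption based on how the stock SkyWalking 9.0.0 application.yml is laid out, and all other keys are omitted.

```yaml
# Condensed view of the node2 settings; nesting assumed from the stock 9.0.0 file.
cluster:
  selector: ${SW_CLUSTER:nacos}            # every OAP node registers with the same Nacos
  nacos:
    serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}
    hostPort: ${SW_CLUSTER_NACOS_HOST_PORT:192.168.1.103:8848}
    namespace: ${SW_CLUSTER_NACOS_NAMESPACE:"public"}

core:
  selector: ${SW_CORE:default}
  default:
    restHost: ${SW_CORE_REST_HOST:node2}   # node-specific: node3 uses node3 here
    restPort: ${SW_CORE_REST_PORT:12800}
    gRPCHost: ${SW_CORE_GRPC_HOST:node2}   # node-specific
    gRPCPort: ${SW_CORE_GRPC_PORT:11800}

storage:
  selector: ${SW_STORAGE:mysql}            # all nodes share one MySQL database
  mysql:
    properties:
      jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://192.168.1.103:3306/swtest?rewriteBatchedStatements=true"}
      dataSource.user: ${SW_DATA_SOURCE_USER:root}
      dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}

receiver-sharing-server:
  selector: ${SW_RECEIVER_SHARING_SERVER:default}
  default:
    restHost: ${SW_RECEIVER_SHARING_REST_HOST:node2}   # node-specific
    gRPCHost: ${SW_RECEIVER_GRPC_HOST:node2}           # node-specific

receiver-zabbix:
  selector: ${SW_RECEIVER_ZABBIX:default}
  default:
    host: ${SW_RECEIVER_ZABBIX_HOST:node2}             # node-specific

# Self-monitoring: telemetry exposes the OAP's own metrics,
# and prometheus-fetcher reads them back through the "self" rule.
prometheus-fetcher:
  selector: ${SW_PROMETHEUS_FETCHER:default}
  default:
    enabledRules: ${SW_PROMETHEUS_FETCHER_ENABLED_RULES:"self"}
    active: ${SW_PROMETHEUS_FETCHER_ACTIVE:true}

telemetry:
  selector: ${SW_TELEMETRY:prometheus}
  prometheus:
    host: ${SW_TELEMETRY_PROMETHEUS_HOST:node2}        # node-specific
    port: ${SW_TELEMETRY_PROMETHEUS_PORT:1234}
```

Only the lines marked node-specific should differ between servers; the cluster, storage, and self-monitoring selectors need to be identical on every node. Because telemetry binds to the node's hostname here, it is worth checking that the target configured in the `self` fetcher rule file points at an address the OAP can actually reach on port 1234.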

3.4. Modify webapp.yml on the second server

[root@node1 webapp]# cat webapp.yml

server:
  port: 8888

spring:
  cloud:
    gateway:
      routes:
        - id: oap-route
          uri: lb://oap-service
          predicates:
            - Path=/graphql/**
    discovery:
      client:
        simple:
          instances:
            oap-service:
              - uri: http://node1:12800
              - uri: http://node2:12800
              - uri: http://node3:12800
            # - uri: http://:
            # - uri: http://:

  mvc:
    throw-exception-if-no-handler-found: true

  web:
    resources:
      add-mappings: true

management:
  server:
    base-path: /manage

3.5. Modify application.yml on the third server

[root@node3 config]# cat application.yml

cluster:

selector: ${SW_CLUSTER:nacos}

#selector: ${SW_CLUSTER:standalone}

standalone:

# Please check your ZooKeeper is 3.5+, However, it is also compatible with ZooKeeper 3.4.x. Replace the ZooKeeper 3.5+

# library the oap-libs folder with your ZooKeeper 3.4.x library.

zookeeper:

namespace: ${SW_NAMESPACE:""}

hostPort: ${SW_CLUSTER_ZK_HOST_PORT:localhost:2181}

# Retry Policy

baseSleepTimeMs: ${SW_CLUSTER_ZK_SLEEP_TIME:1000} # initial amount of time to wait between retries

maxRetries: ${SW_CLUSTER_ZK_MAX_RETRIES:3} # max number of times to retry

# Enable ACL

enableACL: ${SW_ZK_ENABLE_ACL:false} # disable ACL in default

schema: ${SW_ZK_SCHEMA:digest} # only support digest schema

expression: ${SW_ZK_EXPRESSION:skywalking:skywalking}

internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}

internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}

kubernetes:

namespace: ${SW_CLUSTER_K8S_NAMESPACE:default}

labelSelector: ${SW_CLUSTER_K8S_LABEL:app=collector,release=skywalking}

uidEnvName: ${SW_CLUSTER_K8S_UID:SKYWALKING_COLLECTOR_UID}

consul:

serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}

# Consul cluster nodes, example: 10.0.0.1:8500,10.0.0.2:8500,10.0.0.3:8500

hostPort: ${SW_CLUSTER_CONSUL_HOST_PORT:localhost:8500}

aclToken: ${SW_CLUSTER_CONSUL_ACLTOKEN:""}

internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}

internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}

etcd:

# etcd cluster nodes, example: 10.0.0.1:2379,10.0.0.2:2379,10.0.0.3:2379

endpoints: ${SW_CLUSTER_ETCD_ENDPOINTS:localhost:2379}

namespace: ${SW_CLUSTER_ETCD_NAMESPACE:/skywalking}

serviceName: ${SW_CLUSTER_ETCD_SERVICE_NAME:"SkyWalking_OAP_Cluster"}

authentication: ${SW_CLUSTER_ETCD_AUTHENTICATION:false}

user: ${SW_CLUSTER_ETCD_USER:}

password: ${SW_CLUSTER_ETCD_PASSWORD:}

internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}

internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}

nacos:

serviceName: ${SW_SERVICE_NAME:"SkyWalking_OAP_Cluster"}

hostPort: ${SW_CLUSTER_NACOS_HOST_PORT:192.168.1.103:8848}

# Nacos Configuration namespace

namespace: ${SW_CLUSTER_NACOS_NAMESPACE:"public"}

# Nacos auth username

username: ${SW_CLUSTER_NACOS_USERNAME:""}

password: ${SW_CLUSTER_NACOS_PASSWORD:""}

# Nacos auth accessKey

accessKey: ${SW_CLUSTER_NACOS_ACCESSKEY:""}

secretKey: ${SW_CLUSTER_NACOS_SECRETKEY:""}

internalComHost: ${SW_CLUSTER_INTERNAL_COM_HOST:""}

internalComPort: ${SW_CLUSTER_INTERNAL_COM_PORT:-1}

core:

selector: ${SW_CORE:default}

default:

# Mixed: Receive agent data, Level 1 aggregate, Level 2 aggregate

# Receiver: Receive agent data, Level 1 aggregate

# Aggregator: Level 2 aggregate

role: ${SW_CORE_ROLE:Mixed} # Mixed/Receiver/Aggregator

restHost: ${SW_CORE_REST_HOST:node3}

restPort: ${SW_CORE_REST_PORT:12800}

restContextPath: ${SW_CORE_REST_CONTEXT_PATH:/}

restMinThreads: ${SW_CORE_REST_JETTY_MIN_THREADS:1}

restMaxThreads: ${SW_CORE_REST_JETTY_MAX_THREADS:200}

restIdleTimeOut: ${SW_CORE_REST_JETTY_IDLE_TIMEOUT:30000}

restAcceptQueueSize: ${SW_CORE_REST_JETTY_QUEUE_SIZE:0}

httpMaxRequestHeaderSize: ${SW_CORE_HTTP_MAX_REQUEST_HEADER_SIZE:8192}

gRPCHost: ${SW_CORE_GRPC_HOST:node3}

gRPCPort: ${SW_CORE_GRPC_PORT:11800}

maxConcurrentCallsPerConnection: ${SW_CORE_GRPC_MAX_CONCURRENT_CALL:0}

maxMessageSize: ${SW_CORE_GRPC_MAX_MESSAGE_SIZE:0}

gRPCThreadPoolQueueSize: ${SW_CORE_GRPC_POOL_QUEUE_SIZE:-1}

gRPCThreadPoolSize: ${SW_CORE_GRPC_THREAD_POOL_SIZE:-1}

gRPCSslEnabled: ${SW_CORE_GRPC_SSL_ENABLED:false}

gRPCSslKeyPath: ${SW_CORE_GRPC_SSL_KEY_PATH:""}

gRPCSslCertChainPath: ${SW_CORE_GRPC_SSL_CERT_CHAIN_PATH:""}

gRPCSslTrustedCAPath: ${SW_CORE_GRPC_SSL_TRUSTED_CA_PATH:""}

downsampling:

- Hour

- Day

# Set a timeout on metrics data. After the timeout has expired, the metrics data will automatically be deleted.

enableDataKeeperExecutor: ${SW_CORE_ENABLE_DATA_KEEPER_EXECUTOR:true} # Turn it off then automatically metrics data delete will be close.

dataKeeperExecutePeriod: ${SW_CORE_DATA_KEEPER_EXECUTE_PERIOD:5} # How often the data keeper executor runs periodically, unit is minute

recordDataTTL: ${SW_CORE_RECORD_DATA_TTL:3} # Unit is day

metricsDataTTL: ${SW_CORE_METRICS_DATA_TTL:7} # Unit is day

# The period of L1 aggregation flush to L2 aggregation. Unit is ms.

l1FlushPeriod: ${SW_CORE_L1_AGGREGATION_FLUSH_PERIOD:500}

# The threshold of session time. Unit is ms. Default value is 70s.

storageSessionTimeout: ${SW_CORE_STORAGE_SESSION_TIMEOUT:70000}

# The period of data persistence. Unit is second. Default value is 25s.

persistentPeriod: ${SW_CORE_PERSISTENT_PERIOD:25}

# Cache metrics data for 1 minute to reduce database queries, and if the OAP cluster changes within that minute,

# the metrics may not be accurate within that minute.

enableDatabaseSession: ${SW_CORE_ENABLE_DATABASE_SESSION:true}

topNReportPeriod: ${SW_CORE_TOPN_REPORT_PERIOD:10} # top_n record worker report cycle, unit is minute

# Extra model columns are columns defined in the code. They are not logically required for aggregation or further queries,

# and activating them puts more load on the memory and network of the OAP and on the storage.

# Once activated, however, users can see the names in the storage entities, which makes it easier to query the data with 3rd party tools such as Kibana->ES.

activeExtraModelColumns: ${SW_CORE_ACTIVE_EXTRA_MODEL_COLUMNS:false}

# The max length of service + instance names should be less than 200

serviceNameMaxLength: ${SW_SERVICE_NAME_MAX_LENGTH:70}

instanceNameMaxLength: ${SW_INSTANCE_NAME_MAX_LENGTH:70}

# The max length of service + endpoint names should be less than 240

endpointNameMaxLength: ${SW_ENDPOINT_NAME_MAX_LENGTH:150}
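
# Worked check with the defaults above (illustrative arithmetic, not part of the stock file):

#   service + instance: 70 + 70 = 140, below the 200 limit

#   service + endpoint: 70 + 150 = 220, below the 240 limit

# Raising any one of the three values means re-checking both sums.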

# Define the set of span tag keys, which should be searchable through the GraphQL.

searchableTracesTags: ${SW_SEARCHABLE_TAG_KEYS:http.method,status_code,db.type,db.instance,mq.queue,mq.topic,mq.broker}

# Define the set of log tag keys, which should be searchable through the GraphQL.

searchableLogsTags: ${SW_SEARCHABLE_LOGS_TAG_KEYS:level}

# Define the set of alarm tag keys, which should be searchable through the GraphQL.

searchableAlarmTags: ${SW_SEARCHABLE_ALARM_TAG_KEYS:level}

# The number of threads used to prepare metrics data to the storage.

prepareThreads: ${SW_CORE_PREPARE_THREADS:2}

# When turned on, endpoints are grouped automatically by the given OpenAPI definitions.

enableEndpointNameGroupingByOpenapi: ${SW_CORE_ENABLE_ENDPOINT_NAME_GROUPING_BY_OPAENAPI:true}

storage:

selector: ${SW_STORAGE:mysql}

elasticsearch:

namespace: ${SW_NAMESPACE:""}

clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}

protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}

connectTimeout: ${SW_STORAGE_ES_CONNECT_TIMEOUT:3000}

socketTimeout: ${SW_STORAGE_ES_SOCKET_TIMEOUT:30000}

responseTimeout: ${SW_STORAGE_ES_RESPONSE_TIMEOUT:15000}

numHttpClientThread: ${SW_STORAGE_ES_NUM_HTTP_CLIENT_THREAD:0}

user: ${SW_ES_USER:""}

password: ${SW_ES_PASSWORD:""}

trustStorePath: ${SW_STORAGE_ES_SSL_JKS_PATH:""}

trustStorePass: ${SW_STORAGE_ES_SSL_JKS_PASS:""}

secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.

dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.

indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:1} # Shard number of new indexes

indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:1} # Replicas number of new indexes

# Super datasets, such as trace segments, are defined in the code. The following 3 settings improve ES performance when storing super-size data in ES.

superDatasetDayStep: ${SW_SUPERDATASET_STORAGE_DAY_STEP:-1} # Represent the number of days in the super size dataset record index, the default value is the same as dayStep when the value is less than 0

superDatasetIndexShardsFactor: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_SHARDS_FACTOR:5} # This factor provides more shards for the super data set, shards number = indexShardsNumber * superDatasetIndexShardsFactor. Also, this factor affects Zipkin and Jaeger traces.

superDatasetIndexReplicasNumber: ${SW_STORAGE_ES_SUPER_DATASET_INDEX_REPLICAS_NUMBER:0} # Represent the replicas number in the super size dataset record index, the default value is 0.
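
# Worked example with the values in this file (illustrative, not part of the stock file):

#   super dataset shards = indexShardsNumber * superDatasetIndexShardsFactor = 1 * 5 = 5

#   super dataset replicas = 0, and superDatasetDayStep = -1 falls back to dayStep = 1

# Note that these settings only apply when the storage selector is elasticsearch; this file selects mysql.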

indexTemplateOrder: ${SW_STORAGE_ES_INDEX_TEMPLATE_ORDER:0} # the order of index template

bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:5000} # Execute the async bulk record data every ${SW_STORAGE_ES_BULK_ACTIONS} requests

# Flush the bulk every flushInterval seconds (15 by default), regardless of the number of requests.

# INT(flushInterval * 2/3) would be used for index refresh period.

flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:15}
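
# Worked example (illustrative, not part of the stock file): with flushInterval = 15,

# the index refresh period is INT(15 * 2/3) = 10 seconds.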

concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests

resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}

metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:10000}

scrollingBatchSize: ${SW_STORAGE_ES_SCROLLING_BATCH_SIZE:5000}

segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}

profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}

oapAnalyzer: ${SW_STORAGE_ES_OAP_ANALYZER:"{\"analyzer\":{\"oap_analyzer\":{\"type\":\"stop\"}}}"} # the oap analyzer.

oapLogAnalyzer: ${SW_STORAGE_ES_OAP_LOG_ANALYZER:"{\"analyzer\":{\"oap_log_analyzer\":{\"type\":\"standard\"}}}"} # The OAP log analyzer. It can be customized through the ES analyzer configuration to support logs in more languages, such as Chinese or Japanese.

advanced: ${SW_STORAGE_ES_ADVANCED:""}

h2:

driver: ${SW_STORAGE_H2_DRIVER:org.h2.jdbcx.JdbcDataSource}

url: ${SW_STORAGE_H2_URL:jdbc:h2:mem:skywalking-oap-db;DB_CLOSE_DELAY=-1}

user: ${SW_STORAGE_H2_USER:sa}

metadataQueryMaxSize: ${SW_STORAGE_H2_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:100}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:1}

mysql:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://192.168.1.103:3306/swtest?rewriteBatchedStatements=true"}

dataSource.user: ${SW_DATA_SOURCE_USER:root}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

tidb:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:mysql://localhost:4000/tidbswtest?rewriteBatchedStatements=true"}

dataSource.user: ${SW_DATA_SOURCE_USER:root}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:""}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

dataSource.useAffectedRows: ${SW_DATA_SOURCE_USE_AFFECTED_ROWS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}

maxSizeOfArrayColumn: ${SW_STORAGE_MAX_SIZE_OF_ARRAY_COLUMN:20}

numOfSearchableValuesPerTag: ${SW_STORAGE_NUM_OF_SEARCHABLE_VALUES_PER_TAG:2}

maxSizeOfBatchSql: ${SW_STORAGE_MAX_SIZE_OF_BATCH_SQL:2000}

asyncBatchPersistentPoolSize: ${SW_STORAGE_ASYNC_BATCH_PERSISTENT_POOL_SIZE:4}

influxdb:

# InfluxDB configuration

url: ${SW_STORAGE_INFLUXDB_URL:http://localhost:8086}

user: ${SW_STORAGE_INFLUXDB_USER:root}

password: ${SW_STORAGE_INFLUXDB_PASSWORD:}

database: ${SW_STORAGE_INFLUXDB_DATABASE:skywalking}

actions: ${SW_STORAGE_INFLUXDB_ACTIONS:1000} # the number of actions to collect

duration: ${SW_STORAGE_INFLUXDB_DURATION:1000} # the time to wait at most (milliseconds)

batchEnabled: ${SW_STORAGE_INFLUXDB_BATCH_ENABLED:true}

fetchTaskLogMaxSize: ${SW_STORAGE_INFLUXDB_FETCH_TASK_LOG_MAX_SIZE:5000} # the max number of fetch task log in a request

connectionResponseFormat: ${SW_STORAGE_INFLUXDB_CONNECTION_RESPONSE_FORMAT:MSGPACK} # the response format of connection to influxDB, cannot be anything but MSGPACK or JSON.

postgresql:

properties:

jdbcUrl: ${SW_JDBC_URL:"jdbc:postgresql://localhost:5432/skywalking"}

dataSource.user: ${SW_DATA_SOURCE_USER:postgres}

dataSource.password: ${SW_DATA_SOURCE_PASSWORD:123456}

dataSource.cachePrepStmts: ${SW_DATA_SOURCE_CACHE_PREP_STMTS:true}

dataSource.prepStmtCacheSize: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_SIZE:250}

dataSource.prepStmtCacheSqlLimit: ${SW_DATA_SOURCE_PREP_STMT_CACHE_SQL_LIMIT:2048}

dataSource.useServerPrepStmts: ${SW_DATA_SOURCE_USE_SERVER_PREP_STMTS:true}

metadataQueryMaxSize: ${SW_STORAGE_MYSQL_QUERY_MAX_SIZE:5000}