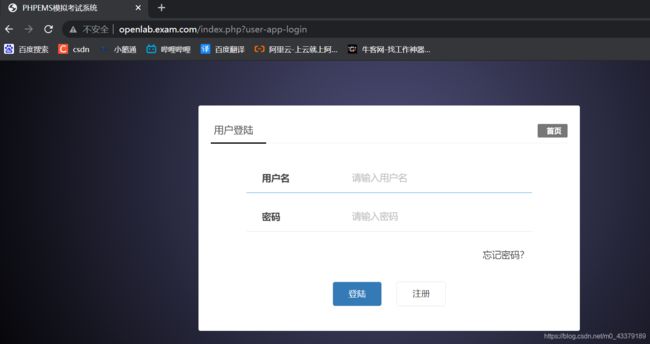

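鸥鹏 Exam System Launch Project

1. Project Background and Requirements Analysis

1.1 Project Background

鸥鹏 is a well-known high-tech enterprise focused on the information industry and a specialist in IT project management, project development, and IT human-resources consulting.

As its business has grown, the company has moved the monitoring of students' learning progress from offline to online in order to improve learning outcomes. It now needs a web site architecture for an exam system that can serve more than ten thousand users online at the same time.

1.2 Requirements Analysis

To let 10,000+ users at the 鸥鹏 center, the Xi'an branch, and campuses in other provinces study and take exams online at the same time, this project designs the server architecture for an exam system that serves campuses nationwide.

2. Project Construction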

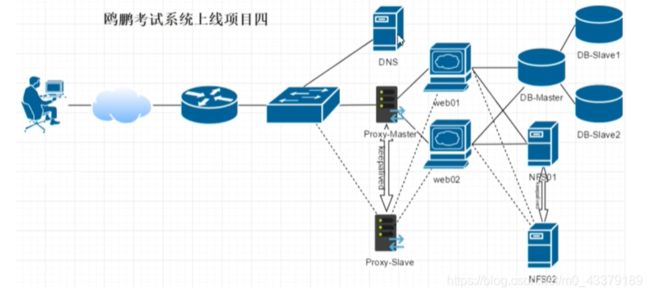

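Architecture diagram

With 10,000+ users accessing the site at the same time, a single application server acting as the site's entry point comes under increasing load. To raise concurrency, a cluster of web servers shares the requests: a load balancer at the front end schedules user requests and distributes them to the back-end web servers according to the distribution policy, and the web servers use NFS as shared storage.

This design has three single points of failure: the database, the shared storage, and the load-balancing node.

Therefore: the database uses master-slave replication with read/write splitting; the shared storage runs on two machines made highly available with keepalived; and load balancing runs on two machines made highly available with keepalived.

Host resource plan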

| IP address | Hostname | Role |
|---|---|---|
| 192.168.233.132 | zljt-host-233.132.openlab.com | nginx+nfs+keepalived |
| 192.168.233.133 | zljt-host-233.133.openlab.com | nginx+nfs+keepalived |
| 192.168.233.100 | openlab.exam.com | VIP (nginx) |
| 192.168.233.200 | nfs.exam.com | VIP (nfs) |
| 192.168.233.134 | zljt-host-233.134.openlab.com | dns+db_master |
| 192.168.233.135 | zljt-host-233.135.openlab.com | web01+php+db_slave1 |
| 192.168.233.136 | zljt-host-233.136.openlab.com | web02+php+db_slave2 |
| 192.168.233.137 | zljt-host-233.137.openlab.com | mycat |

2.1 Initial System Setup

Perform these steps on all hosts.

1. Stop the firewall

# systemctl stop firewalld

# systemctl disable firewalld

2. Disable SELinux

# sed -i '/^SELINUX=/ cSELINUX=disabled' /etc/selinux/config

# setenforce 0

3. Set the hostname

# hostnamectl set-hostname xxx

4. Install common packages

# yum install bash-completion net-tools wget lrzsz psmisc vim dos2unix unzip

5. Download the Aliyun EPEL repo file to /etc/yum.repos.d/

# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

6. Configure /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.233.132 zljt-host-233.132.openlab.com

192.168.233.133 zljt-host-233.133.openlab.com

192.168.233.134 zljt-host-233.134.openlab.com

192.168.233.135 zljt-host-233.135.openlab.com

192.168.233.136 zljt-host-233.136.openlab.com

192.168.233.137 zljt-host-233.137.openlab.com

192.168.233.100 openlab.exam.com

192.168.233.200 nfs.exam.com

Test:

[root@zljt-host-233 ~]# ping -c 2 zljt-host-233.137.openlab.com

PING zljt-host-233.137.openlab.com (192.168.233.137) 56(84) bytes of data.

64 bytes from zljt-host-233.137.openlab.com (192.168.233.137): icmp_seq=1 ttl=64 time=1.31 ms

64 bytes from zljt-host-233.137.openlab.com (192.168.233.137): icmp_seq=2 ttl=64 time=0.484 ms

--- zljt-host-233.137.openlab.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.484/0.900/1.316/0.416 ms
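Because the same hosts file is copied to every machine, a mistyped address (for example a `/` in place of a `.`) would break name resolution everywhere. The following is a minimal sketch of a pre-check, not part of the original procedure; the function names `is_ipv4` and `check_hosts` are hypothetical helpers introduced only for illustration.

```shell
#!/bin/sh
# is_ipv4 (hypothetical helper): succeed only if $1 is a dotted-quad IPv4
# address with exactly four numeric fields, each in the range 0-255.
is_ipv4() {
    case "$1" in
        *[!0-9.]*|*..*|.*|*.|'') return 1 ;;
    esac
    old_ifs=$IFS; IFS=.
    set -- $1          # split the address on dots
    IFS=$old_ifs
    [ "$#" -eq 4 ] || return 1
    for octet in "$@"; do
        [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
    done
    return 0
}

# check_hosts (hypothetical helper): read hosts-file lines on stdin and report
# every line whose first field is not a valid IPv4 address.
# Blank lines, comments, and IPv6 entries (containing ':') are skipped.
check_hosts() {
    while read -r addr rest; do
        case "$addr" in ''|'#'*|*:*) continue ;; esac
        is_ipv4 "$addr" || echo "bad address: $addr"
    done
}
```

Running `check_hosts < /etc/hosts` before distributing the file prints nothing when every IPv4 entry is well formed.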

7. Synchronize time across all servers: host 132 acts as the time server, and the other five machines synchronize from it.

132:

[root@zljt-host-233 ~]# vim /etc/chrony.conf

server ntp1.aliyun.com iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

allow 192.168.233.0/24

[root@zljt-host-233 ~]# systemctl restart chronyd

[root@zljt-host-233 ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 120.25.115.20 2 6 33 3 -414us[ -595us] +/- 45ms

133-137:

[root@zljt-host-233 ~]# vim /etc/chrony.conf

server zljt-host-233.132.openlab.com iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

[root@zljt-host-233 ~]# systemctl restart chronyd

[root@zljt-host-233 ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* zljt-host-233.132.openla> 3 6 17 3 +20us[ +618us] +/- 53ms
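In the `chronyc sources` output, a line starting with `^*` marks the source that chronyd is currently synchronized to. A small sketch of how that check could be scripted on each node follows; the function name `has_selected_source` is a hypothetical helper, not something chrony provides.

```shell
#!/bin/sh
# has_selected_source (hypothetical helper): read `chronyc sources` output on
# stdin and succeed if any line begins with "^*", the marker chronyc prints
# for the currently selected time source.
has_selected_source() {
    grep -q '^\^\*'
}

# Example use on a node (commented out so the sketch has no side effects):
#   chronyc sources | has_selected_source && echo "time source selected"
```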

2.2 Configure DNS

DNS runs on host 134.

1. Install BIND

yum install bind bind-utils -y

2. Edit the main DNS configuration file

[root@zljt-host-233 ~]# vim /etc/named.conf

options {

listen-on port 53 { 192.168.233.134; };

//listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

recursing-file "/var/named/data/named.recursing";

secroots-file "/var/named/data/named.secroots";

allow-query { any; };

forwarders { 8.8.8.8; };

[root@zljt-host-233 ~]# named-checkconf   # check the configuration syntax

[root@zljt-host-233 ~]# systemctl enable named --now   # start now and enable at boot

Point the host's DNS at this server:

[root@zljt-host-233 ~]# nmcli con mod ens33 ipv4.dns 192.168.233.134

[root@zljt-host-233 ~]# systemctl restart network

Test forwarding:

[root@zljt-host-233 ~]# dig -t A www.12306.cn +short

www.12306.cn.lxdns.com.

113.142.80.253

113.142.80.254

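3. Configure the forward lookup zones

Host domain (openlab.com)

The host domain is unrelated to the business domain, and keeping the two separate is recommended.

The host domain is effectively a private domain: it is not resolvable on the Internet and serves only the local (internal) network.

Business domain (exam.com)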

[root@zljt-host-233 ~]# vim /etc/named.rfc1912.zones

zone "openlab.com" IN {

type master;

file "openlab.com.zone";

allow-update { 192.168.233.134; };

};

zone "exam.com" IN {

type master;

file "exam.com.zone";

allow-update { 192.168.233.134; };

};

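Custom zone database files

A zone file is normally a plain-text file that contains only resource records, directives (such as $TTL), and comments.

Its location is given in the zone configuration, either as an absolute path or as a path relative to /var/named. Pay attention to the file's ownership and permissions.

Copying a template and editing it is recommended.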

[root@zljt-host-233 ~]# cd /var/named

[root@zljt-host-233 named]# ls

data dynamic named.ca named.empty named.localhost named.loopback slaves

[root@zljt-host-233 named]# cp -p named.localhost openlab.com.zone

[root@zljt-host-233 named]# vim openlab.com.zone

$TTL 1D

@ IN SOA dns.openlab.com. admin.openlab.com. (

2021081301 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS dns

dns A 192.168.233.134

zljt-host-233.132 A 192.168.233.132

zljt-host-233.133 A 192.168.233.133

zljt-host-233.134 A 192.168.233.134

zljt-host-233.135 A 192.168.233.135

zljt-host-233.136 A 192.168.233.136

zljt-host-233.137 A 192.168.233.137

[root@zljt-host-233 named]# cp -p named.localhost exam.com.zone

[root@zljt-host-233 named]# vim exam.com.zone

$TTL 1D

@ IN SOA dns.exam.com. admin.exam.com. (

2021081301 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS dns

dns A 192.168.233.134

openlab A 192.168.233.100

nfs A 192.168.233.200

4. Restart named and verify that the changes took effect

[root@zljt-host-233 named]# named-checkconf

[root@zljt-host-233 named]# named-checkzone openlab.com openlab.com.zone

zone openlab.com/IN: loaded serial 2021081301

OK

[root@zljt-host-233 named]# named-checkzone exam.com exam.com.zone

zone exam.com/IN: loaded serial 2021081301

OK

[root@zljt-host-233 named]# systemctl restart named

Test:

[root@zljt-host-233 named]# dig -t A openlab.exam.com +short

192.168.233.100

[root@zljt-host-233 named]# dig -t A nfs.exam.com +short

192.168.233.200

[root@zljt-host-233 named]# dig -t A zljt-host-233.133.openlab.com +short

192.168.233.133

[root@zljt-host-233 named]# dig -t A zljt-host-233.137.openlab.com +short

192.168.233.137

Set the DNS server to 192.168.233.134 on all nodes:

[root@zljt-host-233 ~]# nmcli con mod ens33 ipv4.dns 192.168.233.134

[root@zljt-host-233 ~]# systemctl restart network

2.3 Configure Apache

Configure Apache and PHP on web01:

1. Install httpd and the PHP packages

yum install httpd php php-mysql php-gd php-fpm -y

2. Edit the Apache configuration file httpd.conf

[root@zljt-host-233 ~]# cd /etc/httpd/conf

[root@zljt-host-233 conf]# ls

httpd.conf magic

[root@zljt-host-233 conf]# cp httpd.conf{,.bak}

[root@zljt-host-233 conf]# vim httpd.conf

# Hand .php requests to the PHP engine instead of offering the file for download

AddType application/x-httpd-php .php

AddType application/x-httpd-php-source .phps

# Make index.php the default directory index page

DirectoryIndex index.php index.html

# If php-fpm listens on a TCP socket, append this to the end of httpd.conf:

<FilesMatch \.php$>

SetHandler "proxy:fcgi://127.0.0.1:9000"

</FilesMatch>

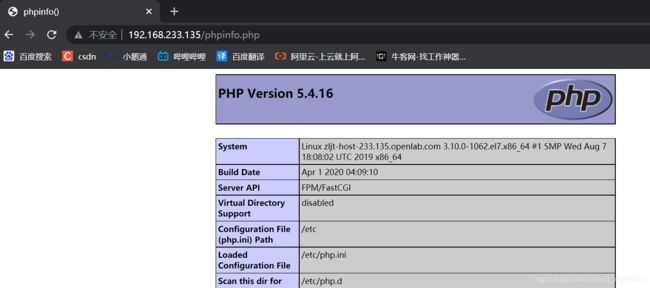

3. Start the services and test that Apache and PHP work together

[root@zljt-host-233 conf]# httpd -t

Syntax OK

[root@zljt-host-233 conf]# systemctl enable httpd.service php-fpm.service --now

Created symlink from /etc/systemd/system/multi-user.target.wants/httpd.service to /usr/lib/systemd/system/httpd.service.

Created symlink from /etc/systemd/system/multi-user.target.wants/php-fpm.service to /usr/lib/systemd/system/php-fpm.service.

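Prepare a phpinfo.php file:

# echo "<?php phpinfo(); ?>" > /var/www/html/phpinfo.php

Test in a browser by requesting phpinfo.php from web01.

Note: delete phpinfo.php once testing is complete.

# rm -f /var/www/html/phpinfo.php

The Apache and PHP configuration on web02 is the same as on web01 and is omitted here.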

2.3 Configure the Database

1. Install MariaDB on the three machines db-master (233.134), db-slave1 (233.135), and db-slave2 (233.136), and perform the initial setup.

1. Install MariaDB

[root@zljt-host-233 ~]# yum install -y mariadb-server

[root@zljt-host-233 ~]# systemctl enable mariadb --now

Created symlink from /etc/systemd/system/multi-user.target.wants/mariadb.service to /usr/lib/systemd/system/mariadb.service.

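2. Secure the database

# mysql_secure_installation

Set the root password, disable remote root login, and remove the anonymous users and the test database.

When that is done, test the database connection: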

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'select version();'

+----------------+

| version() |

+----------------+

| 5.5.68-MariaDB |

+----------------+

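2. Configure master-slave replication

The three machines form one master and two slaves.

Master: [IP 192.168.233.134, port 3306]

Slave1: [IP 192.168.233.135, port 3306]

Slave2: [IP 192.168.233.136, port 3306]

On the master:

1. Set server-id and enable the binary log

Edit /etc/my.cnf and add the following under [mysqld]: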

log_bin = mysql-bin

server-id = 134

character-set-server=utf8 # support Chinese characters

binlog-ignore-db='information_schema mysql performance_schema' # databases to exclude from the binary log

2. Restart the database:

[root@zljt-host-233 ~]# systemctl restart mariadb

[root@zljt-host-233 ~]# mysql -uroot -p123456

3. Create the replication account

MariaDB [(none)]> grant replication slave on *.* to 'rep'@"192.168.233.%" identified by "123456";

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> show grants for 'rep'@'192.168.233.%';

+----------------------------------------------------------------------------------------------------------------------------+

| Grants for rep@192.168.233.% |

+----------------------------------------------------------------------------------------------------------------------------+

| GRANT REPLICATION SLAVE ON *.* TO 'rep'@'192.168.233.%' IDENTIFIED BY PASSWORD '*6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9' |

+----------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

4. Check the master status

This shows the current binary log file name and position, which the slaves need:

MariaDB [(none)]> show master status;

+------------------+----------+--------------+---------------------------------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+------------------+----------+--------------+---------------------------------------------+

| mysql-bin.000001 | 398 | | information_schema mysql performance_schema |

+------------------+----------+--------------+---------------------------------------------+

1 row in set (0.00 sec)

On the slaves:

On 233.135:

1. Set server-id and enable the binary log

Edit /etc/my.cnf and add the following under [mysqld]:

server_id=135

character-set-server=utf8

log-slave-updates

log_bin = mysql-bin

expire_logs_days = 7

Restart the database:

[root@zljt-host-233 ~]# systemctl restart mariadb

2. Point the slave at the master

MariaDB [(none)]> change master to

-> master_host='192.168.233.134',

-> master_port=3306,

-> master_user='rep',

-> master_password='123456',

-> master_log_file='mysql-bin.000001',

-> master_log_pos=398;

Query OK, 0 rows affected (0.05 sec)

3. Start replication

MariaDB [(none)]> start slave;

Query OK, 0 rows affected (0.00 sec)

4. Check the status

MariaDB [(none)]> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.233.134

Master_User: rep

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000001

Read_Master_Log_Pos: 398

Relay_Log_File: mariadb-relay-bin.000002

Relay_Log_Pos: 529

Relay_Master_Log_File: mysql-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes
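Replication is healthy only when both `Slave_IO_Running` and `Slave_SQL_Running` report `Yes`. The sketch below shows one way this check could be automated by parsing the `show slave status\G` output; `replication_ok` is a hypothetical helper name, and the usage line assumes the root password used in this document.

```shell
#!/bin/sh
# replication_ok (hypothetical helper): read `show slave status\G` output on
# stdin and succeed only if both replication threads report "Yes".
replication_ok() {
    awk -F': *' '
        /Slave_IO_Running:/  { gsub(/[ \t\r]+$/, "", $2); io  = $2 }
        /Slave_SQL_Running:/ { gsub(/[ \t\r]+$/, "", $2); sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Example use on a slave (commented out so the sketch has no side effects):
#   mysql -uroot -p123456 -e 'show slave status\G' | replication_ok \
#       || echo "replication is not running"
```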

The configuration on 233.136 is the same as on 135, except that server_id is 136; the steps are omitted.

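3. Configure read/write splitting

This is configured on 233.137 using mycat.

1. Deploy the JDK

Mycat is written in Java and needs a Java runtime, so it depends on the JDK.

Upload the JDK package and install it: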

# yum localinstall jdk-8u144-linux-x64.rpm

Test:

[root@zljt-host-233 ~]# java -version

java version "1.8.0_144"

Java(TM) SE Runtime Environment (build 1.8.0_144-b01)

Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)

2. Install mycat

Download mycat:

[root@zljt-host-233 ~]# wget -c http://dl.mycat.org.cn/1.6.7.4/Mycat-server-1.6.7.4-release/Mycat-server-1.6.7.4-release-20200105164103-linux.tar.gz

[root@zljt-host-233 ~]# tar xf Mycat-server-1.6.7.4-release-20200105164103-linux.tar.gz -C /usr/local/

[root@zljt-host-233 ~]# ln -sv /usr/local/mycat/bin/mycat /usr/sbin/

‘/usr/sbin/mycat’ -> ‘/usr/local/mycat/bin/mycat’

3. Configure read/write splitting in schema.xml

[root@zljt-host-233 conf]# cp schema.xml{,.bak}

[root@zljt-host-233 conf]# vim schema.xml

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="TESTDB" checkSQLschema="true" sqlMaxLimit="100" randomDataNode="dn1" dataNode="dn1">

</schema>

<dataNode name="dn1" dataHost="localhost1" database="exam" />

<dataHost name="localhost1" maxCon="1000" minCon="10" balance="1"

writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<!-- can have multi write hosts -->

<writeHost host="hostM1" url="192.168.233.134:3306" user="mycat"

password="123456">

<readHost host="db-slave1" url="192.168.233.135:3306" user="mycat" password="123456" />

<readHost host="db-slave2" url="192.168.233.136:3306" user="mycat" password="123456" />

</writeHost>

</dataHost>

</mycat:schema>

Set the data port in server.xml:

[root@zljt-host-233 conf]# vim server.xml

<property name="serverPort">3306</property>

<property name="managerPort">9066</property>

4. Create the database and the management user

Create the database on db-master (233.134):

MariaDB [(none)]> CREATE DATABASE exam CHARACTER SET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.01 sec)

Check on a slave:

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'show databases;' | grep exam

exam

Grant privileges on the master:

MariaDB [(none)]>grant all on exam.* to mycat@"192.168.233.%" identified by "123456";

MariaDB [(none)]> flush privileges;

Grant privileges on each of the two slaves:

MariaDB [(none)]> grant select on exam.* to 'mycat'@'192.168.233.%' identified by '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> flush privileges;

Query OK, 0 rows affected (0.00 sec)

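5. Start mycat

Method 1: # mycat console    # start mycat in the foreground, which makes the startup messages easy to read

Method 2: # mycat start

Method 3: # startup_nowrap.sh    # start through the service script

Use mycat console for the first start.

Once everything looks correct, use mycat start.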

[root@zljt-host-233 conf]# mycat console

Running Mycat-server...

wrapper | --> Wrapper Started as Console

wrapper | Launching a JVM...

jvm 1 | Wrapper (Version 3.2.3) http://wrapper.tanukisoftware.org

jvm 1 | Copyright 1999-2006 Tanuki Software, Inc. All Rights Reserved.

jvm 1 |

jvm 1 | MyCAT Server startup successfully. see logs in logs/mycat.log

[root@zljt-host-233 conf]# mycat start

Starting Mycat-server...

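Enable start at boot:

[root@zljt-host-233 conf]# echo "/usr/local/mycat/bin/mycat start" >> /etc/rc.local

Make sure /etc/rc.local actually runs at boot:

[root@zljt-host-233 conf]# chmod +x /etc/rc.d/rc.local

Note: unless /etc/rc.d/rc.local is executable, the commands added to it are not run at boot.

6. mycat management commands and monitoring

Like other databases, mycat has its own management and monitoring interface: connect to port 9066 with the mysql command-line client and run the management SQL statements, or connect remotely over JDBC.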

[root@zljt-host-233 ~]# mysql -uuser -puser -h192.168.233.137 -P9066 -DTESTDB

List mycat's data nodes:

MySQL [TESTDB]> show @@datanode;

+------+-----------------+-------+-------+--------+------+------+---------+------------+----------+---------+---------------+

| NAME | DATHOST | INDEX | TYPE | ACTIVE | IDLE | SIZE | EXECUTE | TOTAL_TIME | MAX_TIME | MAX_SQL | RECOVERY_TIME |

+------+-----------------+-------+-------+--------+------+------+---------+------------+----------+---------+---------------+

| dn1 | localhost1/exam | 0 | mysql | 0 | 10 | 1000 | 67 | 0 | 0 | 0 | -1 |

+------+-----------------+-------+-------+--------+------+------+---------+------------+----------+---------+---------------+

1 row in set (0.00 sec)

MySQL [TESTDB]> show @@heartbeat;

+-----------+-------+-----------------+------+---------+-------+--------+---------+--------------+---------------------+-------+

| NAME | TYPE | HOST | PORT | RS_CODE | RETRY | STATUS | TIMEOUT | EXECUTE_TIME | LAST_ACTIVE_TIME | STOP |

+-----------+-------+-----------------+------+---------+-------+--------+---------+--------------+---------------------+-------+

| hostM1 | mysql | 192.168.233.134 | 3306 | 1 | 0 | idle | 30000 | 1,1,1 | 2021-08-13 20:53:28 | false |

| db-slave1 | mysql | 192.168.233.135 | 3306 | 1 | 0 | idle | 30000 | 1,1,1 | 2021-08-13 20:53:28 | false |

| db-slave2 | mysql | 192.168.233.136 | 3306 | 1 | 0 | idle | 30000 | 1,1,1 | 2021-08-13 20:53:28 | false |

+-----------+-------+-----------------+------+---------+-------+--------+---------+--------------+---------------------+-------+

3 rows in set (0.02 sec)

An RS_CODE of 1 means the host is healthy.

Connect to the data port and test:

[root@zljt-host-233 ~]# mysql -uuser -puser -h192.168.233.137 -P3306 -DTESTDB

MySQL [TESTDB]> select @@server_id;

+-------------+

| @@server_id |

+-------------+

| 136 |

+-------------+

1 row in set (0.09 sec)

MySQL [TESTDB]> select @@server_id;

+-------------+

| @@server_id |

+-------------+

| 135 |

+-------------+

1 row in set (0.01 sec)

MySQL [TESTDB]> select @@server_id;

+-------------+

| @@server_id |

+-------------+

| 135 |

+-------------+

1 row in set (0.00 sec)

The queries above return a slave's server_id.

Next, query the master's server_id by running the same statement inside a transaction:

MySQL [TESTDB]> begin;

Query OK, 0 rows affected (0.01 sec)

MySQL [TESTDB]> select @@server_id;

+-------------+

| @@server_id |

+-------------+

| 134 |

+-------------+

1 row in set (0.00 sec)

MySQL [TESTDB]> commit;

Query OK, 0 rows affected (0.01 sec)
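To see how reads are spread across the back ends, the `select @@server_id` query can be repeated and the answers counted. This is only a sketch under the assumptions used above (the mycat account `user`/`user` and the data port 3306); `tally_server_ids` is a hypothetical helper name.

```shell
#!/bin/sh
# tally_server_ids (hypothetical helper): read one server_id per line on stdin
# and print "<server_id> <count>" for each distinct id, sorted by id.
tally_server_ids() {
    sort -n | uniq -c | awk '{ print $2, $1 }'
}

# Example use from any host that can reach mycat (commented out so the sketch
# has no side effects). -N suppresses the column header in the mysql output.
#   for i in 1 2 3 4 5 6; do
#       mysql -uuser -puser -h192.168.233.137 -P3306 -DTESTDB -N \
#             -e 'select @@server_id'
#   done | tally_server_ids
```

With reads outside a transaction, only the slave ids (135 and 136) would be expected in the tally.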

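2.4 Configure NFS Shared Storage

The storage is built on drbd + nfs.

1. Configure drbd

Prerequisite: add a 16 GB disk to every storage machine.

The disk is /dev/sdb; a 2 GB partition is enough for testing.

1. Set up passwordless SSH trust between the nodes:

On 132: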

[root@zljt-host-233 ~]# ssh-keygen -f ~/.ssh/id_rsa -P '' -q

[root@zljt-host-233 ~]# ssh-copy-id 192.168.233.133

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.233.133 (192.168.233.133)' can't be established.

ECDSA key fingerprint is SHA256:tgsl6rLpV3RW5wFwnQriY3fp54OKTrhf21+VB/DVf0w.

ECDSA key fingerprint is MD5:e4:d5:0a:5b:93:28:a9:ce:32:30:d0:29:94:b2:a9:75.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.233.133's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.233.133'"

and check to make sure that only the key(s) you wanted were added.

133:

[root@zljt-host-233 ~]# ssh-keygen -f ~/.ssh/id_rsa -P '' -q

[root@zljt-host-233 ~]# ssh-copy-id 192.168.233.132

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.233.132 (192.168.233.132)' can't be established.

ECDSA key fingerprint is SHA256:tgsl6rLpV3RW5wFwnQriY3fp54OKTrhf21+VB/DVf0w.

ECDSA key fingerprint is MD5:e4:d5:0a:5b:93:28:a9:ce:32:30:d0:29:94:b2:a9:75.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.233.132's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.233.132'"

and check to make sure that only the key(s) you wanted were added.

2. Install drbd:

# rpm -Uvh https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# yum install -y drbd84-utils kmod-drbd84

3. Upgrade the kernel:

yum -y install kernel-devel kernel kernel-headers

Load the module:

[root@zljt-host-233 ~]# modprobe drbd

[root@zljt-host-233 ~]# lsmod | grep drbd

drbd 397041 0

libcrc32c 12644 2 xfs,drbd

4. Edit the global drbd configuration file:

[root@zljt-host-233 drbd.d]# ls

global_common.conf

[root@zljt-host-233 drbd.d]# cp global_common.conf{,.bak}

[root@zljt-host-233 drbd.d]# vim global_common.conf

global {

usage-count yes;

udev-always-use-vnr; # treat implicit the same as explicit volumes

}

common {

protocol C;

handlers {

pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";

}

startup {

# wfc-timeout degr-wfc-timeout outdated-wfc-timeout wait-after-sb

}

options {

}

disk {

on-io-error detach;

}

net {

cram-hmac-alg "sha1";

shared-secret "nfs-HA";

}

syncer { rate 1000M; }

}

[root@zljt-host-233 drbd.d]# vi nfs.res

resource nfs {

meta-disk internal;

device /dev/drbd0;

disk /dev/sdb;

on zljt-host-233.132.openlab.com {

address 192.168.233.132:7789;

}

on zljt-host-233.133.openlab.com {

address 192.168.233.133:7789;

}

}

Copy the configuration files to host 133:

[root@zljt-host-233 drbd.d]# scp * zljt-host-233.133.openlab.com:/etc/drbd.d/

5. Start drbd

Create the service user and set permissions:

useradd -M -s /sbin/nologin haclient

chgrp haclient /lib/drbd/drbdsetup-84

chmod o-x /lib/drbd/drbdsetup-84

chmod u+s /lib/drbd/drbdsetup-84

chgrp haclient /usr/sbin/drbdmeta

chmod o-x /usr/sbin/drbdmeta

chmod u+s /usr/sbin/drbdmeta

Initialize the resource: drbdadm create-md nfs

[root@zljt-host-233 ~]# drbdadm create-md nfs

initializing activity log

initializing bitmap (512 KB) to all zero

Writing meta data...

New drbd meta data block successfully created.

success

Start drbd: systemctl enable --now drbd

Promote one node to DRBD primary (run on one node only):

[root@zljt-host-233 ~]# drbdadm primary --force nfs

Check the status:

[root@zljt-host-233 ~]# drbdadm status

nfs role:Primary

disk:UpToDate

peer role:Secondary

replication:SyncSource peer-disk:Inconsistent done:3.29
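In the status above the peer disk is still `Inconsistent`, which means the initial synchronization has not finished. A minimal sketch of a check that could be run before relying on failover follows; `drbd_in_sync` is a hypothetical helper name, and the parsing assumes the `drbdadm status` layout shown above.

```shell
#!/bin/sh
# drbd_in_sync (hypothetical helper): read `drbdadm status` output on stdin and
# succeed only if both the local disk and the peer disk are UpToDate.
drbd_in_sync() {
    awk '
        /^[ \t]*disk:UpToDate/ { local_ok = 1 }   # local disk line
        /peer-disk:UpToDate/   { peer_ok  = 1 }   # peer disk state
        END { exit !(local_ok && peer_ok) }
    '
}

# Example use (commented out so the sketch has no side effects):
#   until drbdadm status nfs | drbd_in_sync; do sleep 10; done
```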

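2. Configure nfs

1. Install nfs-utils

yum install nfs-utils rpcbind -y

2. Create the application directory /webdata

# mkdir /webdata

3. Format the drbd device (primary node only)

mkfs.xfs /dev/drbd0

4. Mount the drbd device (primary node only)

mount /dev/drbd0 /webdata/

5. Upload the web application and unpack it (primary node only)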

[root@zljt-host-233 ~]# unzip phpems_zxmnks_v6.0.zip -d /webdata/

[root@zljt-host-233 ~]# cd /webdata/

[root@zljt-host-233 webdata]# ls

help phpems

[root@zljt-host-233 webdata]# rm -rf /webdata/help/

6. Configure the export

[root@zljt-host-233 ~]# vim /etc/exports

/webdata 192.168.233.0/24(sync,rw)

7. Start nfs

Create the apache user. Its UID must match the apache UID on web01 and web02, so check that value first:

[root@zljt-host-233 ~]# id -u apache

48

[root@zljt-host-233 ~]# useradd apache -u 48 -M -s /sbin/nologin

Change the owner to apache:

[root@zljt-host-233 ~]# chown -R apache /webdata/

[root@zljt-host-233 ~]# systemctl enable rpcbind nfs-server --now

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

2.5 Configure the Front-End nginx Load Balancer

1. Install nginx

[root@zljt-host-233 ~]# yum install http://nginx.org/packages/centos/7Server/x86_64/RPMS/nginx-1.14.2-1.el7_4.ngx.x86_64.rpm

2. Edit the configuration file

[root@zljt-host-233 ~]# cd /etc/nginx/conf.d/

[root@zljt-host-233 conf.d]# cp default.conf{,.bak}

[root@zljt-host-233 conf.d]# vim default.conf

upstream webpool {

server 192.168.233.135 weight=1 max_fails=2 fail_timeout=30s;

server 192.168.233.136 weight=1 max_fails=2 fail_timeout=30s;

}

server {

listen 80;

server_name openlab.exam.com;

location / {

proxy_pass http://webpool;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

Copy the configuration files to 133:

[root@zljt-host-233 conf.d]# scp * zljt-host-233.133.openlab.com:/etc/nginx/conf.d/

default.conf 100% 393 494.9KB/s 00:00

default.conf.bak 100% 1093 1.1MB/s 00:00

3. Start the service

Check the syntax:

[root@zljt-host-233 conf.d]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@zljt-host-233 conf.d]# systemctl enable nginx --now

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

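2.6 Configure keepalived High Availability

Overview:

keepalived is installed on 233.132 and 233.133 with two instances. Each node is master for one instance and backup for the other, so both servers are put to use.

For the nginx load-balancing instance, 233.132 is MASTER and 233.133 is BACKUP.

For the nfs storage instance, 233.133 is MASTER and 233.132 is BACKUP.

1. Download

# wget -c http://www.nosuchhost.net/~cheese/fedora/packages/epel-7/x86_64/keepalived-2.0.6-1.el7.x86_64.rpm

2. Install keepalived

# yum localinstall keepalived-2.0.6-1.el7.x86_64.rpm

On 233.132:

3. Edit the main configuration file: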

[root@zljt-host-233 ~]# cd /etc/keepalived/

[root@zljt-host-233 keepalived]# ls

keepalived.conf

[root@zljt-host-233 keepalived]# cp keepalived.conf{,.bak}

[root@zljt-host-233 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

vrrp_script check_nfs {

script "/etc/keepalived/check_nfs.sh"

interval 2

}

vrrp_instance VI_nginx {

state MASTER

interface ens33

virtual_router_id 132

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_nginx

}

virtual_ipaddress {

192.168.233.100

}

}

vrrp_instance VI_nfs {

state BACKUP

interface ens33

virtual_router_id 133

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_nfs

}

notify_stop /etc/keepalived/notify_stop.sh

notify_master /etc/keepalived/notify_master.sh

notify_backup /etc/keepalived/notify_backup.sh

virtual_ipaddress {

192.168.233.200

}

}

4. Write the scripts:

[root@zljt-host-233 keepalived]# vim check_nginx.sh

#!/bin/bash

# Logic: if no nginx process exists, try to start nginx; if it still cannot start, exit 1 so that the VIP fails over.

A=`ps -C nginx --no-header |wc -l`

if [ $A -eq 0 ];then

systemctl start nginx

sleep 3

if [ `ps -C nginx --no-header |wc -l` -eq 0 ]

then

exit 1

else

exit 0

fi

fi

[root@zljt-host-233 keepalived]# vim check_nfs.sh

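#!/bin/bash

# Logic: if nfs is not running, restart it; if it still is not running, unmount the drbd device, demote drbd to secondary, and stop keepalived so that the VIP moves to the peer.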

/sbin/service nfs status &>/dev/null

if [ $? -ne 0 ];then

/sbin/service nfs restart

/sbin/service nfs status &>/dev/null

if [ $? -ne 0 ];then

umount /dev/drbd0

drbdadm secondary nfs

systemctl stop keepalived

fi

fi

notify_backup.sh

notify_master.sh

notify_stop.sh

The three scripts above are run by keepalived on state changes and keep drbd and nfs running on the same node.

[root@zljt-host-233 keepalived]# vim notify_backup.sh

#!/bin/bash

log_dir=/etc/keepalived/logs

[ -d ${log_dir} ] || mkdir -p ${log_dir}

time=`date "+%F %T"`

echo -e "$time ------notify_backup------\n" >> ${log_dir}/notify_backup.log

/sbin/service nfs stop &>> ${log_dir}/notify_backup.log

/bin/umount /dev/drbd0 &>> ${log_dir}/notify_backup.log

/sbin/drbdadm secondary nfs &>> ${log_dir}/notify_backup.log

echo -e "\n" >> ${log_dir}/notify_backup.log

[root@zljt-host-233 keepalived]# vim notify_master.sh

#!/bin/bash

log_dir=/etc/keepalived/logs

time=`date "+%F %T"`

[ -d ${log_dir} ] || mkdir -p ${log_dir}

echo -e "$time ------notify_master------\n" >> ${log_dir}/notify_master.log

/sbin/drbdadm primary nfs &>> ${log_dir}/notify_master.log

/bin/mount /dev/drbd0 /webdata &>> ${log_dir}/notify_master.log

/sbin/service nfs restart &>> ${log_dir}/notify_master.log

echo -e "\n" >> ${log_dir}/notify_master.log

[root@zljt-host-233 keepalived]# vim notify_stop.sh

#!/bin/bash

time=`date "+%F %T"`

log_dir=/etc/keepalived/logs

[ -d ${log_dir} ] || mkdir -p ${log_dir}

echo -e "$time ------notify_stop------\n" >> ${log_dir}/notify_stop.log

/sbin/service nfs stop &>> ${log_dir}/notify_stop.log

/bin/umount /webdata &>> ${log_dir}/notify_stop.log

/sbin/drbdadm secondary nfs &>> ${log_dir}/notify_stop.log

echo -e "\n" >> ${log_dir}/notify_stop.log

5. The configuration on 233.133 is almost the same as on 132

Copy the files from 132 and then adjust them:

[root@zljt-host-233 keepalived]# scp * zljt-host-233.133.openlab.com:/etc/keepalived/

check_nfs.sh 100% 239 157.9KB/s 00:00

check_nginx.sh 100% 202 121.8KB/s 00:00

keepalived.conf 100% 1080 1.3MB/s 00:00

keepalived.conf.bak 100% 3550 4.0MB/s 00:00

notify_backup.sh 100% 403 418.3KB/s 00:00

notify_master.sh 100% 412 818.2KB/s 00:00

notify_stop.sh

6. On 133, edit the main configuration file (change state and priority)

[root@zljt-host-233 keepalived]# vim keepalived.conf

vrrp_instance VI_nginx {

state BACKUP

interface ens33

virtual_router_id 132

priority 80

vrrp_instance VI_nfs {

state MASTER

interface ens33

virtual_router_id 133

priority 100

7. Make all scripts executable:

[root@zljt-host-233 keepalived]# chmod +x *.sh

[root@zljt-host-233 keepalived]# ls

check_nfs.sh check_nginx.sh keepalived.conf keepalived.conf.bak notify_backup.sh notify_master.sh notify_stop.sh

8. Start the service

[root@zljt-host-233 keepalived]# systemctl enable keepalived.service --now

Test: check the IP addresses

On 132:

[root@zljt-host-233 keepalived]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5d:33:fc brd ff:ff:ff:ff:ff:ff

inet 192.168.233.132/24 brd 192.168.233.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.233.100/32 scope global ens33

valid_lft forever preferred_lft forever

On 133:

[root@zljt-host-233 keepalived]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5b:58:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.233.133/24 brd 192.168.233.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.233.200/32 scope global ens33

valid_lft forever preferred_lft forever
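The output above shows each VIP on its MASTER node: 192.168.233.100 on 132 and 192.168.233.200 on 133. The same check could be scripted, for example to confirm where a VIP lands after a failover test. This is a sketch only; `has_vip` is a hypothetical helper name.

```shell
#!/bin/sh
# has_vip (hypothetical helper): read `ip a` output on stdin and succeed if the
# address given as $1 is configured on some interface.
# -F makes grep treat the dots in the address literally.
has_vip() {
    grep -qF "inet $1/"
}

# Example use on 132 or 133 (commented out so the sketch has no side effects):
#   ip a | has_vip 192.168.233.100 && echo "this node holds the nginx VIP"
```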

2.7 Configure the Web Application

Mount the nfs storage on both web servers.

1. Install nfs

[root@zljt-host-233 ~]# yum install nfs-utils -y

2. Mount the nfs storage

[root@zljt-host-233 ~]# vim /etc/fstab

nfs.exam.com:/webdata /var/www/html nfs defaults,_netdev 0 0

[root@zljt-host-233 ~]# mount -a

3. Configure the application

[root@zljt-host-233 ~]# cd /var/www/html/

[root@zljt-host-233 html]# ls

phpems

[root@zljt-host-233 html]# cd phpems/

[root@zljt-host-233 phpems]# ls

api app data files index.php lib pe6.sql tasks 数据库升级.txt

[root@zljt-host-233 phpems]# mysql -uroot -p123456 -h192.168.233.137 TESTDB < pe6.sql

[root@zljt-host-233 webdata]# cd phpems/

[root@zljt-host-233 phpems]# ls

api app data files index.php lib pe6.sql tasks 数据库升级.txt

[root@zljt-host-233 phpems]# vim lib/config.inc.php

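define('DB','TESTDB');//MySQL database name

define('DH','192.168.233.137');//MySQL host (here the mycat node)

define('DU','root');//MySQL user name

define('DP','123456');//MySQL user password

define('DTH','x2_');//table prefix; leave unchanged

4. Configure the virtual host

Configure this on both web01 and web02; the two cluster members use the same configuration.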

[root@zljt-host-233 ~]# vim /etc/httpd/conf.d/vhost.conf

<VirtualHost *:80>

DocumentRoot /var/www/html/phpems

ServerName openlab.exam.com

</VirtualHost>

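5. Restart the web services

[root@zljt-host-233 ~]# systemctl restart httpd php-fpm

6. Add the name resolution to the Windows hosts file

192.168.233.100 openlab.exam.com

Building on the existing project, add a second application, phpoa, served at phpoa.exam.com.

1. DNS configuration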

[root@zljt-host-233 ~]# cd /var/named/

[root@zljt-host-233 named]# vim exam.com.zone

$TTL 1D

@ IN SOA dns.exam.com. admin.exam.com. (

2021081302 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS dns

dns A 192.168.233.134

openlab A 192.168.233.100

phpoa A 192.168.233.100

nfs A 192.168.233.200

Check the zone file for errors:

[root@zljt-host-233 named]# named-checkzone exam.com exam.com.zone

zone exam.com/IN: loaded serial 2021081302

OK

Restart the service:

[root@zljt-host-233 named]# systemctl restart named

Test:

[root@zljt-host-233 named]# nslookup phpoa.exam.com

Server: 192.168.233.134

Address: 192.168.233.134#53

Name: phpoa.exam.com

Address: 192.168.233.100
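Note that the zone serial was raised from 2021081301 to 2021081302 when the phpoa record was added; the serial should increase on every zone change. A minimal sketch of computing the next serial in the YYYYMMDDNN style used here follows. `next_serial` is a hypothetical helper, and it assumes fewer than 100 changes per day and a current serial that is not dated in the future.

```shell
#!/bin/sh
# next_serial (hypothetical helper): print the next zone serial in YYYYMMDDNN form.
#   $1 = current serial, $2 = today's date as YYYYMMDD (defaults to `date +%Y%m%d`)
# If the current serial is already from today, increment it; otherwise start
# today's sequence at 01.
next_serial() {
    current=$1
    today=${2:-$(date +%Y%m%d)}
    if [ "${current%??}" = "$today" ]; then
        echo $((current + 1))
    else
        echo "${today}01"
    fi
}
```

For example, `next_serial 2021081301 20210813` yields the serial used in the updated exam.com.zone above.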

Optionally, add the entry to the hosts file on every machine:

vim /etc/hosts

192.168.233.100 openlab.exam.com phpoa.exam.com

2. Database configuration

1> Prepare the oa database

On the master:

mysql -uroot -p123456 -e 'create database oa;'

On a slave, check that it has replicated:

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'show databases;'

+--------------------+

| Database |

+--------------------+

| information_schema |

| exam |

| mysql |

| oa |

| performance_schema |

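+--------------------+

2> Grant privileges to the mycat user: all privileges on the master, read-only on the slaves.

On the master:

mysql -uroot -p123456 -e 'grant all on oa.* to mycat@"192.168.233.%" identified by "123456";'

On the slaves:

The slaves have been given all privileges on oa, which is too broad: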

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'show grants for mycat@"192.168.233.%";'

+------------------------------------------------------------------------------------------------------------------+

| Grants for mycat@192.168.233.% |

+------------------------------------------------------------------------------------------------------------------+

| GRANT USAGE ON *.* TO 'mycat'@'192.168.233.%' IDENTIFIED BY PASSWORD '*6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9' |

| GRANT SELECT ON `exam`.* TO 'mycat'@'192.168.233.%' |

| GRANT ALL PRIVILEGES ON `oa`.* TO 'mycat'@'192.168.233.%' |

+------------------------------------------------------------------------------------------------------------------+

Drop the user and grant again

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'drop user mycat@"192.168.233.%";'

Grant only the SELECT privilege

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'grant select on oa.* to mycat@"192.168.233.%" identified by "123456";'

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'show grants for mycat@"192.168.233.%";'

+------------------------------------------------------------------------------------------------------------------+

| Grants for mycat@192.168.233.% |

+------------------------------------------------------------------------------------------------------------------+

| GRANT USAGE ON *.* TO 'mycat'@'192.168.233.%' IDENTIFIED BY PASSWORD '*6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9' |

| GRANT SELECT ON `exam`.* TO 'mycat'@'192.168.233.%' |

| GRANT SELECT ON `oa`.* TO 'mycat'@'192.168.233.%' |

+------------------------------------------------------------------------------------------------------------------+
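The check above is done by eye. A minimal sketch of automating it on a replica, assuming "read-only" means every GRANT statement is either USAGE or SELECT; `grants_read_only` is a hypothetical helper that reads the `show grants` output on stdin:

```shell
# grants_read_only: reads `show grants` output on stdin.
# Succeeds only if every GRANT statement is exactly USAGE or SELECT.
# Note: input without any GRANT line also succeeds (nothing to object to).
grants_read_only() {
  # The second grep succeeds if some GRANT line is NOT read-only; "!" inverts that.
  ! grep 'GRANT ' | grep -Evq 'GRANT (USAGE|SELECT) ON '
}
```

It could be used as `mysql -uroot -p123456 -e 'show grants for mycat@"192.168.233.%";' | grants_read_only && echo "mycat is read-only"`. Fed the first output above (with ALL PRIVILEGES on oa) it fails; fed the second it succeeds.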

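3> Extension: configure Mycat read/write splitting for multiple databases (on 137)

Step 1: add a logical schema, OA

Edit server.xml; multiple logical schemas are separated by commas.

<property name="schemas">TESTDB,OA</property>

Step 2: configure schema.xml

Each logical schema maps to one dataNode

Each dataNode maps to one physical database

Reusing the dataHost is recommended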

[root@zljt-host-233 conf]# vim schema.xml

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="TESTDB" checkSQLschema="true" sqlMaxLimit="100" randomDataNode="dn1" dataNode="dn1">

</schema>

<schema name="OA" checkSQLschema="true" sqlMaxLimit="100" randomDataNode="dn2" dataNode="dn2">

</schema>

<dataNode name="dn1" dataHost="localhost1" database="exam" />

<dataNode name="dn2" dataHost="localhost1" database="oa" />

<dataHost name="localhost1" maxCon="1000" minCon="10" balance="1"

writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<!-- can have multi write hosts -->

<writeHost host="hostM1" url="192.168.233.134:3306" user="mycat"

password="123456">

<readHost host="db-slave1" url="192.168.233.135:3306" user="mycat" password="123456" />

<readHost host="db-slave2" url="192.168.233.136:3306" user="mycat" password="123456" />

</writeHost>

</dataHost>

</mycat:schema>
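Since each logical schema must point at a dataNode that is actually defined, a quick consistency check before restarting Mycat can catch typos. A minimal sketch, assuming every `<schema>` and `<dataNode>` opening tag sits on a single line and each schema names exactly one dataNode; `undefined_datanodes` is a hypothetical helper, not a Mycat tool:

```shell
# undefined_datanodes FILE
# Prints each dataNode name that a <schema> refers to but no <dataNode> defines.
undefined_datanodes() (
  file=$1
  # Names declared by <dataNode name="..."> elements.
  defined=$(grep -o '<dataNode [^>]*' "$file" | grep -o ' name="[^"]*"' | cut -d'"' -f2)
  # dataNode="..." attributes on <schema> elements; the leading space and the
  # lower-case "d" keep randomDataNode="..." from matching.
  grep -o '<schema [^>]*' "$file" | grep -o ' dataNode="[^"]*"' | cut -d'"' -f2 | sort -u |
  while read -r node; do
    printf '%s\n' "$defined" | grep -qxF "$node" || printf '%s\n' "$node"
  done
)
```

Running `undefined_datanodes schema.xml` in the Mycat conf directory should print nothing when the file is consistent.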

Restart Mycat:

[root@zljt-host-233 conf]# mycat restart

Stopping Mycat-server...

Mycat-server was not running.

Starting Mycat-server...

3. Shared storage (on the primary node, 133)

Upload phpoa and extract it to /webdata

# unzip PHPOA_v4.0.zip -d /webdata/

# cd /webdata/

# mv PHPOA_v4.0/www/ phpoa

# rm -rf PHPOA_v4.0/

# chown -R apache *

4. Application servers

Configure the virtual hosts on both web servers

# umount /var/www/html

# mount -a

# vim /etc/httpd/conf.d/vhost.conf

<VirtualHost *:80>

DocumentRoot /var/www/html/phpems

ServerName openlab.exam.com

</VirtualHost>

<VirtualHost *:80>

DocumentRoot /var/www/html/phpoa

ServerName phpoa.exam.com

</VirtualHost>

Restart the services:

systemctl restart httpd.service php-fpm.service

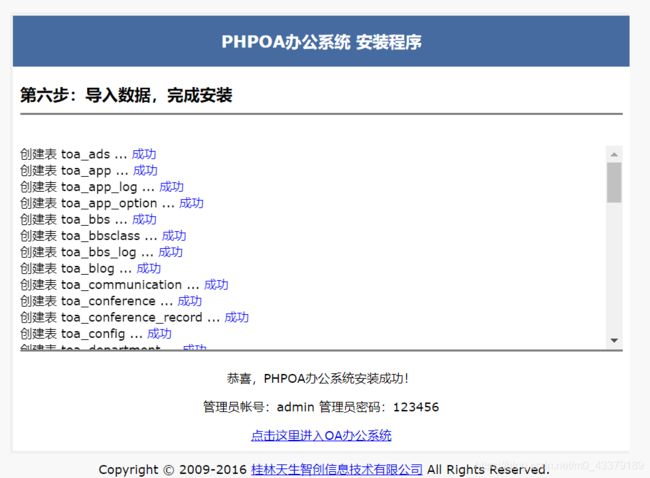

在线安装

http://phpoa.exam.com/install/install.php

如下图:

3. Overall project testing

Functional tests:

1. Database tests

Master-replica synchronization

On 136:

[root@zljt-host-233 ~]# mysql -uroot -p123456 -e 'show slave status\G' | egrep "Slave_IO_Running|Slave_SQL_Running"

Slave_IO_Running: Yes

Slave_SQL_Running: Yes
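The same check can be turned into a pass/fail test for use in a script or monitoring job. A minimal sketch; `replication_healthy` is a hypothetical helper that reads the `show slave status\G` output on stdin:

```shell
# replication_healthy: reads `show slave status\G` output on stdin.
# Succeeds only if both the I/O thread and the SQL thread report "Yes".
replication_healthy() {
  # ": Yes" directly after "Running" keeps Slave_SQL_Running_State from matching.
  [ "$(grep -Ec '^ *Slave_(IO|SQL)_Running: Yes *$')" -eq 2 ]
}
```

For example: `mysql -uroot -p123456 -e 'show slave status\G' | replication_healthy || echo "replication is broken"`.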

[root@zljt-host-233 ~]# mysql -uuser -puser -h192.168.233.137 -P9066 -DOA -e 'show @@datasource'

+----------+-----------+-------+-----------------+------+------+--------+------+------+---------+-----------+------------+

| DATANODE | NAME | TYPE | HOST | PORT | W/R | ACTIVE | IDLE | SIZE | EXECUTE | READ_LOAD | WRITE_LOAD |

+----------+-----------+-------+-----------------+------+------+--------+------+------+---------+-----------+------------+

| dn1 | hostM1 | mysql | 192.168.233.134 | 3306 | W | 0 | 10 | 1000 | 428 | 0 | 146 |

| dn1 | db-slave1 | mysql | 192.168.233.135 | 3306 | R | 0 | 10 | 1000 | 981 | 704 | 0 |

| dn1 | db-slave2 | mysql | 192.168.233.136 | 3306 | R | 0 | 0 | 1000 | 2 | 0 | 0 |

| dn2 | hostM1 | mysql | 192.168.233.134 | 3306 | W | 0 | 10 | 1000 | 428 | 0 | 146 |

| dn2 | db-slave1 | mysql | 192.168.233.135 | 3306 | R | 0 | 10 | 1000 | 981 | 704 | 0 |

| dn2 | db-slave2 | mysql | 192.168.233.136 | 3306 | R | 0 | 0 | 1000 | 2 | 0 | 0 |

+----------+-----------+-------+-----------------+------+------+--------+------+------+---------+-----------+------------+

Heartbeat check:

[root@zljt-host-233 ~]# mysql -uuser -puser -h192.168.233.137 -P9066 -DOA -e 'show @@heartbeat'

+-----------+-------+-----------------+------+---------+-------+--------+---------+--------------+---------------------+-------+

| NAME | TYPE | HOST | PORT | RS_CODE | RETRY | STATUS | TIMEOUT | EXECUTE_TIME | LAST_ACTIVE_TIME | STOP |

+-----------+-------+-----------------+------+---------+-------+--------+---------+--------------+---------------------+-------+

| hostM1 | mysql | 192.168.233.134 | 3306 | 1 | 0 | idle | 30000 | 4,2,2 | 2021-08-15 17:05:48 | false |

| db-slave1 | mysql | 192.168.233.135 | 3306 | 1 | 0 | idle | 30000 | 2,1,2 | 2021-08-15 17:05:48 | false |

| db-slave2 | mysql | 192.168.233.136 | 3306 | -1 | 2 | idle | 30000 | 3,4,4 | 2021-08-15 17:05:48 | false |

+-----------+-------+-----------------+------+---------+-------+--------+---------+--------------+---------------------+-------+
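In the output above, hostM1 and db-slave1 report RS_CODE 1 while db-slave2 reports -1 with RETRY 2. Assuming RS_CODE 1 denotes a healthy heartbeat, the sketch below lists the hosts that need a closer look. `failed_heartbeats` is a hypothetical helper; it expects the tab-separated format that the mysql client emits when its output is piped rather than the boxed table shown above:

```shell
# failed_heartbeats: reads tab-separated `show @@heartbeat` output on stdin
# (header on the first line, RS_CODE in the fifth column) and prints the
# NAME of every host whose RS_CODE is not 1.
failed_heartbeats() {
  awk -F'\t' 'NR > 1 && $5 != 1 { print $1 }'
}
```

Usage: `mysql -uuser -puser -h192.168.233.137 -P9066 -DOA -e 'show @@heartbeat' | failed_heartbeats`. With the rows shown above, this would print db-slave2.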

2. Storage tests

1> DRBD test

[root@zljt-host-233 ~]# cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2020-04-05 02:58:18

0: cs:Connected ro:Secondary/Primary ds:UpToDate/UpToDate C r-----

ns:0 nr:77449 dw:77449 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

[root@zljt-host-233 ~]# drbdadm role nfs

Secondary/Primary

[root@zljt-host-233 ~]# drbdadm dstate nfs

UpToDate/UpToDate
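The two properties being checked here are the connection state (cs:Connected) and the disk state on both sides (ds:UpToDate/UpToDate). A minimal sketch that tests both at once from the /proc/drbd text; `drbd_in_sync` is a hypothetical helper, and it assumes the DRBD 8.4 status format shown above:

```shell
# drbd_in_sync: reads /proc/drbd content on stdin.
# Succeeds only if there is at least one resource line and every resource line
# shows cs:Connected and ds:UpToDate/UpToDate.
drbd_in_sync() {
  awk '/ cs:/ {
         n++
         if ($0 !~ /cs:Connected / || $0 !~ /ds:UpToDate\/UpToDate/) bad++
       }
       END { exit !(n > 0 && bad == 0) }'
}
```

Usage: `drbd_in_sync < /proc/drbd && echo "DRBD is connected and up to date"`.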

2> NFS test

[root@zljt-host-233 ~]# showmount -e localhost

Export list for localhost:

/webdata 192.168.233.0/24

3> High-availability tests

Check the VIP

[root@zljt-host-233 ~]# ip a | grep 192.168.233.200

inet 192.168.233.200/32 scope global ens33
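In the grep pattern above the dots match any character. That is harmless here, but a fixed-string match anchored on `inet` and the trailing slash is slightly stricter and is easy to reuse when checking both nodes during failover. A minimal sketch; `holds_vip` is a hypothetical helper that reads `ip a` output on stdin:

```shell
# holds_vip VIP: reads `ip a` (or `ip -4 addr show`) output on stdin.
# Succeeds if VIP appears as an inet address; -F matches the dots literally.
holds_vip() {
  grep -qF "inet $1/"
}
```

Usage: `ip a | holds_vip 192.168.233.200 && echo "this node holds the NFS VIP"`.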

Check the mount

[root@zljt-host-233 ~]# df -h | grep webdata

/dev/drbd0 16G 135M 16G 1% /webdata

Test stopping nfs on the primary node

[root@zljt-host-233 ~]# systemctl stop nfs

[root@zljt-host-233 ~]# systemctl is-active nfs

active # restarted almost immediately after being stopped

Test stopping keepalived (the VIP fails over, and /webdata is mounted on the other node)

[root@zljt-host-233 ~]# systemctl stop keepalived.service

[root@zljt-host-233 ~]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: inactive (dead) since Sun 2021-08-15 17:20:32 CST; 12s ago

Process: 948 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 965 (code=exited, status=0/SUCCESS)

Aug 15 13:31:32 zljt-host-233.133.openlab.com Keepalived_vrrp[968]: (VI_nfs) Sending/queueing gratuitous ARPs on ens33 for 192.168.233.200

Aug 15 13:31:32 zljt-host-233.133.openlab.com Keepalived_vrrp[968]: Sending gratuitous ARP on ens33 for 192.168.233.200

Aug 15 13:31:32 zljt-host-233.133.openlab.com Keepalived_vrrp[968]: Sending gratuitous ARP on ens33 for 192.168.233.200

Aug 15 13:31:32 zljt-host-233.133.openlab.com Keepalived_vrrp[968]: Sending gratuitous ARP on ens33 for 192.168.233.200

Aug 15 13:31:32 zljt-host-233.133.openlab.com Keepalived_vrrp[968]: Sending gratuitous ARP on ens33 for 192.168.233.200

Aug 15 17:20:31 zljt-host-233.133.openlab.com Keepalived[965]: Stopping

Aug 15 17:20:31 zljt-host-233.133.openlab.com systemd[1]: Stopping LVS and VRRP High Availability Monitor...

Aug 15 17:20:31 zljt-host-233.133.openlab.com Keepalived_vrrp[968]: (VI_nfs) sent 0 priority

Aug 15 17:20:31 zljt-host-233.133.openlab.com Keepalived_vrrp[968]: (VI_nfs) removing VIPs.

Aug 15 17:20:32 zljt-host-233.133.openlab.com systemd[1]: Stopped LVS and VRRP High Availability Monitor.

[root@zljt-host-233 ~]# ip a # the VIP is gone; it has moved to the other node (132)

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5b:58:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.233.133/24 brd 192.168.233.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::92bc:148d:88a2:7992/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::27a4:93e8:109e:904/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Check on 132:

[root@zljt-host-233 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5d:33:fc brd ff:ff:ff:ff:ff:ff

inet 192.168.233.132/24 brd 192.168.233.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.233.100/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.233.200/32 scope global ens33

keepalived is configured in preemptive mode, so restarting keepalived on the node that was just stopped brings the VIP back to it

[root@zljt-host-233 ~]# systemctl restart keepalived.service

[root@zljt-host-233 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5b:58:69 brd ff:ff:ff:ff:ff:ff

inet 192.168.233.133/24 brd 192.168.233.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.233.200/32 scope global ens33

valid_lft forever preferred_lft forever

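Non-preemptive mode is recommended in production

3. Application server tests

The application servers use the shared back-end storage; test by accessing each of the two application servers separately.

Check the NFS mount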

[root@zljt-host-233 ~]# df -h /var/www/html/

Filesystem Size Used Avail Use% Mounted on

nfs.exam.com:/webdata 16G 135M 16G 1% /var/www/html

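4. Load-balancing tests

During the test, temporarily disconnect the shared storage and give each node different web page content, so that the scheduling algorithm can be observed.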
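One way to observe the scheduling is to request the site repeatedly through the VIP and count how often each node answers. A minimal sketch, assuming each node's test page consists of a single word such as web01 or web02 (hypothetical page contents); `tally_backends` is a hypothetical helper, and its fetch command is a parameter so the loop can be tried without a live cluster:

```shell
# tally_backends URL COUNT [FETCH_CMD]
# Requests URL COUNT times and prints "<body> <hits>" per distinct response body.
# FETCH_CMD defaults to "curl -s".
tally_backends() (
  url=$1; count=$2; fetch=${3:-curl -s}
  i=0
  while [ "$i" -lt "$count" ]; do
    $fetch "$url"   # intentionally unquoted so "curl -s" splits into command and flag
    echo            # guarantee a line break between bodies
    i=$((i + 1))
  done | sed '/^$/d' | sort | uniq -c | awk '{ print $2, $1 }'
)
```

For example, `tally_backends http://openlab.exam.com/ 100` prints one line per node with the number of requests it served. A roughly even split would be consistent with round-robin scheduling, while a single line would suggest session persistence or a hash-based policy.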