Residual Network (ResNet)

Paper: Deep Residual Learning for Image Recognition, https://arxiv.org/abs/1512.03385

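When deeper networks are able to start converging, a degradation problem is exposed: as network depth increases, accuracy saturates (which might be unsurprising) and then degrades rapidly. This degradation is not caused by overfitting. Adding more layers to a suitably deep model leads to higher training error.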

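The residual structure was proposed to deal with the problems that appear as networks get deeper, above all degradation. The paper notes that vanishing and exploding gradients are already largely handled by normalized initialization and batch normalization.

The residual structure changes what the layers learn. A stack of layers would normally fit a desired mapping H(x) directly. In a residual block, the layers fit the residual F(x) = H(x) - x, and the input is added back through a shortcut, so the block outputs F(x) + x. If the identity mapping is close to optimal, the layers only need to push F(x) toward zero. The shortcut also gives gradients a direct path to earlier layers.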
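A minimal PyTorch sketch of this idea follows. It assumes a two-convolution residual branch like the basic block used later in this article, and the class name `Residual` is only illustrative. The scale of the last BatchNorm is set to zero, so the branch outputs F(x) = 0 and the block reduces to ReLU(x). That equals x for non-negative inputs. In other words, a residual block can represent the identity trivially, so stacking more of them need not make the network harder to fit.

```python
import torch
import torch.nn as nn


class Residual(nn.Module):
    """Illustrative residual block: y = ReLU(F(x) + x) with an identity shortcut."""

    def __init__(self, channels):
        super().__init__()
        # Residual branch F(x): conv-BN-ReLU-conv-BN, shape-preserving
        self.f = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(),
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
        )

    def forward(self, x):
        return torch.relu(self.f(x) + x)  # add the shortcut, then apply ReLU


block = Residual(8)
nn.init.zeros_(block.f[4].weight)  # last BN scale = 0 (its bias is already 0), so F(x) = 0
x = torch.rand(2, 8, 5, 5)         # non-negative input, so ReLU(x) == x
y = block(x)
print(torch.equal(y, x))           # True: the block acts as the identity
```

Zeroing the last BatchNorm scale is a known initialization trick; torchvision's ResNet exposes it as `zero_init_residual`. Here it is used only to make the identity case visible.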

Architecture:

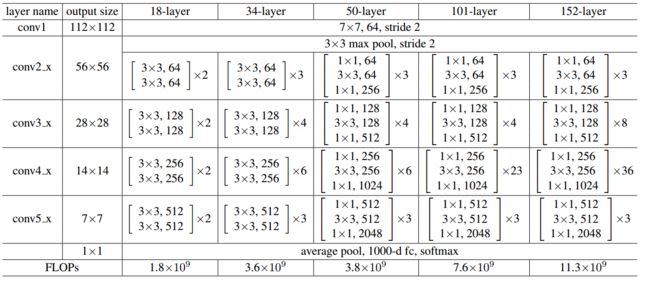

The ResNet family is summarized in the table below:

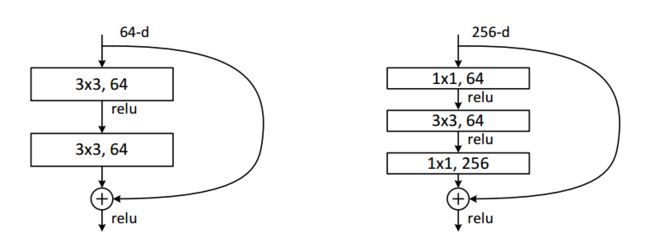
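The depth in each model name counts the weighted layers on the main path:

- the stem convolution;
- every convolution inside the residual blocks (two per basic block, three per bottleneck block);
- the final fully connected layer.

Projection shortcuts are not counted. Here is a small plain-Python check using the per-stage block counts from the table:

```python
# Blocks per stage (conv2_x..conv5_x) and convolutions per block, per the ResNet table
CONFIGS = {
    "resnet18": ([2, 2, 2, 2], 2),   # basic block: two 3x3 convs
    "resnet34": ([3, 4, 6, 3], 2),
    "resnet50": ([3, 4, 6, 3], 3),   # bottleneck block: 1x1, 3x3, 1x1 convs
    "resnet101": ([3, 4, 23, 3], 3),
    "resnet152": ([3, 8, 36, 3], 3),
}


def depth(blocks_per_stage, convs_per_block):
    """Stem conv + residual-block convs + final fc layer."""
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


for name, (blocks, convs) in CONFIGS.items():
    print(name, depth(blocks, convs))  # 18, 34, 50, 101, 152
```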

Residual blocks. Left: a building block for ResNet-34 (on 56×56 feature maps). Right: a "bottleneck" building block for ResNet-50/101/152.
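This arithmetic shows why the bottleneck design is cheap. Count only convolution weights, with no biases and BatchNorm ignored. A basic block on 64 channels and a bottleneck block on 256 channels (reduced to 64 inside) then have roughly the same cost. A basic block applied directly to 256 channels would be about 17 times larger. A plain-Python sketch:

```python
def conv_params(c_in, c_out, k):
    """Number of weights in a k x k convolution without bias."""
    return c_in * c_out * k * k


def basic_block(c):
    # two 3x3 convs, c -> c -> c
    return 2 * conv_params(c, c, 3)


def bottleneck_block(c, mid):
    # 1x1 reduce (c -> mid), 3x3 (mid -> mid), 1x1 restore (mid -> c)
    return conv_params(c, mid, 1) + conv_params(mid, mid, 3) + conv_params(mid, c, 1)


print(basic_block(64))            # 73728
print(bottleneck_block(256, 64))  # 69632
print(basic_block(256))           # 1179648
```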

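Example network architectures for ImageNet. Left: the VGG-19 model (19.6 billion FLOPs) as a reference. Middle: a plain network with 34 parameter layers (3.6 billion FLOPs). Right: a residual network with 34 parameter layers (3.6 billion FLOPs). The dotted shortcuts increase dimensions.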
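The paper considers two ways to implement a dotted (dimension-increasing) shortcut:

- (A) Keep the identity mapping, subsample with stride 2 and zero-pad the extra channels. This adds no parameters.
- (B) Use a 1×1 convolution with stride 2 as a projection. The code in this article uses this option.

Below is a minimal sketch of both. It assumes a 64-channel 56×56 input entering a 128-channel stage, and the function name `shortcut_a` is illustrative:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def shortcut_a(x, out_channels):
    """Option A: parameter-free shortcut (stride-2 subsampling + zero channel padding)."""
    x = x[:, :, ::2, ::2]              # take every other row and column
    extra = out_channels - x.size(1)
    # F.pad pads the last dims first: (W_left, W_right, H_top, H_bottom, C_front, C_back)
    return F.pad(x, (0, 0, 0, 0, 0, extra))


# Option B: projection shortcut (1x1 conv with stride 2, followed by BN)
shortcut_b = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=1, stride=2, bias=False),
    nn.BatchNorm2d(128),
)

x = torch.randn(2, 64, 56, 56)
a = shortcut_a(x, 128)
b = shortcut_b(x)
print(a.shape, b.shape)  # both torch.Size([2, 128, 28, 28])
```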

Code

(1)ResNet18
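The ResNet-18 below is the CIFAR-10 variant: a 3×3 stem with stride 1, no max-pooling and `num_classes=10`. The `F.avg_pool2d(out, 4)` in its forward pass assumes a 4×4 map after `layer4`, which means 32×32 inputs. A plain-Python trace of the spatial size, using the standard convolution output-size formula (the helper names are illustrative):

```python
def conv_out(size, kernel, stride, padding=0):
    """Spatial output size of a convolution/pooling layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def flat_features(input_size, channels=512):
    size = conv_out(input_size, 3, 1, 1)       # conv1: 3x3, stride 1, padding 1
    for stride in [1, 2, 2, 2]:                # first conv of layer1..layer4; later convs keep the size
        size = conv_out(size, 3, stride, 1)
    size = conv_out(size, 4, 4)                # F.avg_pool2d(out, 4): stride defaults to kernel size
    return channels * size * size              # length of the vector fed to self.fc


print(flat_features(32))   # 512: matches nn.Linear(512, num_classes)
print(flat_features(224))  # 25088: would not match self.fc
```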

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ResidualBlock(nn.Module):
    """Basic residual block: two 3x3 convolutions plus a shortcut."""

    def __init__(self, inchannel, outchannel, stride=1):
        super(ResidualBlock, self).__init__()
        # Residual branch F(x)
        self.left = nn.Sequential(
            nn.Conv2d(inchannel, outchannel, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(outchannel),
            nn.ReLU(inplace=True),
            nn.Conv2d(outchannel, outchannel, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(outchannel)
        )
        # Identity shortcut by default; a 1x1 projection when the shape changes
        self.shortcut = nn.Sequential()
        if stride != 1 or inchannel != outchannel:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, outchannel, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(outchannel)
            )

    def forward(self, x):
        out = self.left(x)
        out = out + self.shortcut(x)
        out = F.relu(out)
        return out


class ResNet(nn.Module):
    """CIFAR-style ResNet: 3x3 stem with stride 1 and no max-pooling; expects 32x32 inputs."""

    def __init__(self, ResidualBlock, num_classes=10):
        super(ResNet, self).__init__()
        self.inchannel = 64
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU()
        )
        self.layer1 = self.make_layer(ResidualBlock, 64, 2, stride=1)
        self.layer2 = self.make_layer(ResidualBlock, 128, 2, stride=2)
        self.layer3 = self.make_layer(ResidualBlock, 256, 2, stride=2)
        self.layer4 = self.make_layer(ResidualBlock, 512, 2, stride=2)
        self.fc = nn.Linear(512, num_classes)

    def make_layer(self, block, channels, num_blocks, stride):
        # Only the first block of a stage downsamples; the others use stride 1
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.inchannel, channels, stride))
            self.inchannel = channels
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv1(x)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = F.avg_pool2d(out, 4)  # 4x4 feature map -> 1x1 for 32x32 inputs
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out


def ResNet18():
    return ResNet(ResidualBlock)
```

(2)ResNet34
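The hand-written `layer1` to `layer4` below follow the ResNet-34 configuration of [3, 4, 6, 3] basic blocks. The first block of each stage after the first halves the resolution and doubles the channels, so it needs a projection shortcut (`SpecialBlock`). Every other block keeps the identity shortcut (`CommonBlock`). A plain-Python sketch that derives this layout; the helper `stage_spec` is hypothetical and not part of the code below:

```python
def stage_spec(blocks=(3, 4, 6, 3), channels=(64, 128, 256, 512), in_ch=64):
    """Return (in_channels, out_channels, stride, block_type) for every residual block."""
    spec = []
    for stage, (out_ch, n) in enumerate(zip(channels, blocks)):
        for i in range(n):
            stride = 2 if stage > 0 and i == 0 else 1   # downsample at the start of stages 2 to 4
            needs_projection = stride != 1 or in_ch != out_ch
            spec.append((in_ch, out_ch, stride, "SpecialBlock" if needs_projection else "CommonBlock"))
            in_ch = out_ch
    return spec


spec = stage_spec()
for row in spec:
    print(row)
```

The 16 blocks contribute 32 convolutions; adding the stem convolution and the final classifier gives the "34" in the name.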

```python
import torch.nn as nn
import torch.nn.functional as F


class CommonBlock(nn.Module):
    """Basic block with an identity shortcut; input and output shapes must match."""

    def __init__(self, in_channel, out_channel, stride):
        super(CommonBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channel, out_channel, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        # Only the first conv may change the resolution; the second always uses stride 1
        self.conv2 = nn.Conv2d(out_channel, out_channel, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)

    def forward(self, x):
        identity = x
        x = F.relu(self.bn1(self.conv1(x)), inplace=True)
        x = self.bn2(self.conv2(x))
        x += identity  # identity shortcut
        return F.relu(x, inplace=True)


class SpecialBlock(nn.Module):
    """Basic block with a 1x1 projection shortcut, used when channels or resolution change.

    stride is a pair: stride[0] for conv1 and the shortcut, stride[1] for conv2.
    """

    def __init__(self, in_channel, out_channel, stride):
        super(SpecialBlock, self).__init__()
        self.change_channel = nn.Sequential(
            nn.Conv2d(in_channel, out_channel, kernel_size=1, stride=stride[0], padding=0, bias=False),
            nn.BatchNorm2d(out_channel)
        )
        self.conv1 = nn.Conv2d(in_channel, out_channel, kernel_size=3, stride=stride[0], padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.conv2 = nn.Conv2d(out_channel, out_channel, kernel_size=3, stride=stride[1], padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)

    def forward(self, x):
        identity = self.change_channel(x)  # projection shortcut
        x = F.relu(self.bn1(self.conv1(x)), inplace=True)
        x = self.bn2(self.conv2(x))
        x += identity
        return F.relu(x, inplace=True)


class ResNet34(nn.Module):
    def __init__(self, classes_num):
        super(ResNet34, self).__init__()
        # ImageNet-style stem: 7x7 conv with stride 2, then 3x3 max-pool with stride 2
        self.prepare = nn.Sequential(
            nn.Conv2d(3, 64, 7, 2, 3),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2, 1)
        )
        self.layer1 = nn.Sequential(
            CommonBlock(64, 64, 1),
            CommonBlock(64, 64, 1),
            CommonBlock(64, 64, 1)
        )
        self.layer2 = nn.Sequential(
            SpecialBlock(64, 128, [2, 1]),
            CommonBlock(128, 128, 1),
            CommonBlock(128, 128, 1),
            CommonBlock(128, 128, 1)
        )
        self.layer3 = nn.Sequential(
            SpecialBlock(128, 256, [2, 1]),
            CommonBlock(256, 256, 1),
            CommonBlock(256, 256, 1),
            CommonBlock(256, 256, 1),
            CommonBlock(256, 256, 1),
            CommonBlock(256, 256, 1)
        )
        self.layer4 = nn.Sequential(
            SpecialBlock(256, 512, [2, 1]),
            CommonBlock(512, 512, 1),
            CommonBlock(512, 512, 1)
        )
        self.pool = nn.AdaptiveAvgPool2d(output_size=(1, 1))
        # Dropout MLP head (the paper uses global average pooling and a single fc layer)
        self.fc = nn.Sequential(
            nn.Dropout(p=0.5),
            nn.Linear(512, 256),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),
            nn.Linear(256, classes_num)
        )

    def forward(self, x):
        x = self.prepare(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.pool(x)
        x = x.reshape(x.shape[0], -1)
        x = self.fc(x)
        return x
```

(3)ResNet50
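`Res50` below works in three steps:

- It keeps ResNet-50 up to `layer2`, which gives output stride 8 and 512 channels.
- It rebuilds `layer3` with stride 1, so the features stay at 1/8 resolution.
- It maps the features to a single-channel output, which is upsampled by 8 back to the input size.

Copying the pretrained `layer3` weights into the stride-1 copy works because convolution weight shapes do not depend on stride. The plain-Python sketch below lists the weight shapes of the first bottleneck of `layer3` for both strides. The helper `bottleneck_shapes` is illustrative, and its keys follow the attribute names of the `Bottleneck` class below.

```python
def bottleneck_shapes(inplanes, planes, stride, expansion=4):
    """Conv weight shapes (out, in, kH, kW) of one Bottleneck block."""
    shapes = {
        "conv1.weight": (planes, inplanes, 1, 1),
        "conv2.weight": (planes, planes, 3, 3),        # stride changes how the kernel slides, not its size
        "conv3.weight": (planes * expansion, planes, 1, 1),
    }
    if stride != 1 or inplanes != planes * expansion:
        shapes["downsample.0.weight"] = (planes * expansion, inplanes, 1, 1)
    return shapes


pretrained_first_block = bottleneck_shapes(512, 256, stride=2)  # torchvision ResNet-50 layer3[0]
rebuilt_first_block = bottleneck_shapes(512, 256, stride=1)     # Res50's own_reslayer_3[0]
print(pretrained_first_block == rebuilt_first_block)            # True
```

The stride-1 block still needs a `downsample` projection, because 512 input channels do not match the 1024 output channels.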

```python
import torch.nn as nn
import torch.nn.functional as F
from torchvision import models


class Res50(nn.Module):
    """ResNet-50 truncated after layer3 (output stride 8) with a 1x1 convolutional head."""

    def __init__(self, pretrained=True):
        super(Res50, self).__init__()
        # Head: 1024 -> 128 -> 1 channels, 1x1 convs each followed by ReLU
        # (the original used a custom Conv2d(..., same_padding=True, NL='relu') wrapper)
        self.de_pred = nn.Sequential(
            nn.Conv2d(1024, 128, kernel_size=1), nn.ReLU(inplace=True),
            nn.Conv2d(128, 1, kernel_size=1), nn.ReLU(inplace=True),
        )
        # Only de_pred exists at this point, so only the head is initialized here
        self._initialize_weights()

        # Newer torchvision versions prefer weights=models.ResNet50_Weights.IMAGENET1K_V1
        res = models.resnet50(pretrained=pretrained)
        # conv1 ... layer2: output stride 8, 512 channels
        self.frontend = nn.Sequential(
            res.conv1, res.bn1, res.relu, res.maxpool, res.layer1, res.layer2
        )
        # Rebuild layer3 with stride 1 (keeps output stride 8) and copy the pretrained weights
        self.own_reslayer_3 = make_res_layer(Bottleneck, 256, 6, stride=1)
        self.own_reslayer_3.load_state_dict(res.layer3.state_dict())

    def forward(self, x):
        x = self.frontend(x)
        x = self.own_reslayer_3(x)
        x = self.de_pred(x)
        x = F.interpolate(x, scale_factor=8)  # F.upsample is deprecated
        return x

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                m.weight.data.normal_(0.0, std=0.01)
                if m.bias is not None:
                    m.bias.data.fill_(0)
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.fill_(0)


def make_res_layer(block, planes, blocks, stride=1):
    downsample = None
    inplanes = 512  # channels produced by ResNet-50 layer2
    if stride != 1 or inplanes != planes * block.expansion:
        # 1x1 projection so the shortcut matches the block output
        downsample = nn.Sequential(
            nn.Conv2d(inplanes, planes * block.expansion, kernel_size=1, stride=stride, bias=False),
            nn.BatchNorm2d(planes * block.expansion),
        )
    layers = []
    layers.append(block(inplanes, planes, stride, downsample))
    inplanes = planes * block.expansion
    for i in range(1, blocks):
        layers.append(block(inplanes, planes))
    return nn.Sequential(*layers)


class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, inplanes, planes, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                               padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, planes * self.expansion, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)
        out = self.conv3(out)
        out = self.bn3(out)
        if self.downsample is not None:
            residual = self.downsample(x)
        out += residual
        out = self.relu(out)
        return out
```

(4)ResNet101
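The ResNet-101 below is a DeepLab-style backbone. Its `os` (output stride) argument sets some stage strides to 1 and compensates with dilation (atrous) rates. Its `layer4` is a multi-grid unit whose per-block rates are `blocks[i] * rate`. The plain-Python sketch below mirrors the `strides`, `rates` and `blocks` tables in the constructor; the helper `backbone_config` is illustrative.

```python
def backbone_config(os):
    """Return (output stride, per-stage dilation rates, layer4 per-block rates)."""
    if os == 16:
        strides, rates, multi_grid = [1, 2, 2, 1], [1, 1, 1, 2], [1, 2, 4]
    elif os == 8:
        strides, rates, multi_grid = [1, 2, 1, 1], [1, 1, 2, 2], [1, 2, 1]
    else:
        raise NotImplementedError(os)
    output_stride = 2 * 2                  # conv1 (stride 2) and maxpool (stride 2)
    for s in strides:                      # layer1..layer4
        output_stride *= s
    layer4_rates = [g * rates[3] for g in multi_grid]
    return output_stride, rates, layer4_rates


print(backbone_config(16))  # (16, [1, 1, 1, 2], [2, 4, 8])
print(backbone_config(8))   # (8, [1, 1, 2, 2], [2, 4, 2])
```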

```python
# -*- coding: utf-8 -*-
# @Author: Song Dejia
# @Date: 2018-10-21 12:58:05
# @Last Modified by: Song Dejia
# @Last Modified time: 2018-10-23 14:47:57
import math

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.utils.model_zoo as model_zoo


class Bottleneck(nn.Module):
    """
    Bottleneck blocks are built by _make_layer.
    Channel flow:
        inplanes -> planes -> expansion * planes   main branch (out1)
        inplanes -> expansion * planes             shortcut branch (res)
    Since bottlenecks are cascaded, inplanes = expansion * planes after the first block,
    so the overall shape is expansion * planes -> planes -> expansion * planes.
    Notes:
    1. The output is ReLU(out1 + res).
    2. Unlike a plain bottleneck, the 3x3 conv has a configurable stride and dilation rate.
    3. Whether input and output shapes match depends on stride (x = input size):
       out: floor((x + 2*rate - (2*rate + 1)) / stride) + 1 = floor((x - 1) / stride) + 1
       res: floor((x - 1) / stride) + 1
    """
    expansion = 4

    def __init__(self, inplanes, planes, stride=1, rate=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(planes)
        # Dilated 3x3 conv; padding=rate keeps the size unchanged when stride=1
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride, dilation=rate, padding=rate, bias=False)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(planes * 4)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride
        self.rate = rate

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)
        out = self.conv3(out)
        out = self.bn3(out)
        if self.downsample is not None:
            residual = self.downsample(x)
        out += residual
        out = self.relu(out)
        return out


class ResNet(nn.Module):
    def __init__(self, nInputChannels, block, layers, os=16, pretrained=False):
        super(ResNet, self).__init__()
        self.inplanes = 64
        # Per-stage strides and dilation rates for the requested output stride (os);
        # blocks holds the multi-grid rate multipliers for layer4
        if os == 16:
            strides = [1, 2, 2, 1]
            rates = [1, 1, 1, 2]
            blocks = [1, 2, 4]
        elif os == 8:
            strides = [1, 2, 1, 1]
            rates = [1, 1, 2, 2]
            blocks = [1, 2, 1]
        else:
            raise NotImplementedError

        # Modules
        self.conv1 = nn.Conv2d(nInputChannels, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0], stride=strides[0], rate=rates[0])   # 64, 3 blocks
        self.layer2 = self._make_layer(block, 128, layers[1], stride=strides[1], rate=rates[1])  # 128, 4 blocks
        self.layer3 = self._make_layer(block, 256, layers[2], stride=strides[2], rate=rates[2])  # 256, 23 blocks
        self.layer4 = self._make_MG_unit(block, 512, blocks=blocks, stride=strides[3], rate=rates[3])
        self._init_weight()
        if pretrained:
            self._load_pretrained_model()

    def _make_layer(self, block, planes, blocks, stride=1, rate=1):
        """
        block: the Bottleneck class (not instantiated)
        planes: base channel count (each block outputs planes * block.expansion channels)
        blocks: number of blocks
        """
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion),
            )
        layers = []
        layers.append(block(self.inplanes, planes, stride, rate, downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            # Keep the stage's dilation rate in every block, not only the first
            layers.append(block(self.inplanes, planes, rate=rate))
        return nn.Sequential(*layers)

    def _make_MG_unit(self, block, planes, blocks=[1, 2, 4], stride=1, rate=1):
        # Multi-grid unit: block i uses dilation blocks[i] * rate
        downsample = None
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(planes * block.expansion),
            )
        layers = []
        layers.append(block(self.inplanes, planes, stride, rate=blocks[0] * rate, downsample=downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, len(blocks)):
            layers.append(block(self.inplanes, planes, stride=1, rate=blocks[i] * rate))
        return nn.Sequential(*layers)

    def forward(self, input):
        x = self.conv1(input)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        x = self.layer1(x)
        low_level_feat = x  # layer1 output, returned as low-level features
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        return x, low_level_feat

    def _init_weight(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                # m.weight.data.normal_(0, math.sqrt(2. / n))
                torch.nn.init.kaiming_normal_(m.weight)
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()

    def _load_pretrained_model(self):
        pretrain_dict = model_zoo.load_url('https://download.pytorch.org/models/resnet101-5d3b4d8f.pth')
        model_dict = {}
        state_dict = self.state_dict()
        for k, v in pretrain_dict.items():
            # Copy only parameters that exist here with the same shape
            # (conv1 is skipped when nInputChannels != 3; fc.* does not exist in this backbone)
            if k in state_dict and v.shape == state_dict[k].shape:
                model_dict[k] = v
        state_dict.update(model_dict)
        self.load_state_dict(state_dict)


def ResNet101(nInputChannels=3, os=16, pretrained=False):
    model = ResNet(nInputChannels, Bottleneck, [3, 4, 23, 3], os, pretrained=pretrained)
    return model
```

(5)ResNet152

```python
import torch
import torch.nn as nn
from torch.hub import load_state_dict_from_url

model_urls = {'resnet152': 'https://download.pytorch.org/models/resnet152-b121ed2d.pth'}


def conv3_3(in_planes, out_planes, stride=1, groups=1, dilation=1):
    """3x3 convolution. bias=False because a BN layer follows, which makes a bias term unnecessary."""
    return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                     padding=dilation, groups=groups, bias=False, dilation=dilation)


def conv1_1(in_planes, out_planes, stride=1):
    """1x1 convolution."""
    return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)
```
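The `bias=False` in these helpers follows from how BatchNorm works. In training mode, BatchNorm subtracts the per-channel batch mean, so any constant per-channel bias added by the convolution is cancelled and only wastes parameters. Here is a quick check, assuming a BatchNorm2d in training mode (the default for a freshly constructed module):

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(4, 8, 10, 10)

conv_with_bias = nn.Conv2d(8, 16, kernel_size=3, padding=1, bias=True)
conv_no_bias = nn.Conv2d(8, 16, kernel_size=3, padding=1, bias=False)
with torch.no_grad():
    conv_no_bias.weight.copy_(conv_with_bias.weight)  # identical weights, only the bias differs

bn = nn.BatchNorm2d(16)  # training mode: normalizes with the current batch statistics
max_diff = (bn(conv_with_bias(x)) - bn(conv_no_bias(x))).abs().max().item()
print(max_diff)  # on the order of float32 rounding error
```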

```python
class Bottleneck(nn.Module):
    """ResNet unit. Networks with 50 or more layers add 1x1 convolutions that first reduce and
    then restore the channel count, mainly to cut the number of parameters."""
    expansion = 4

    def __init__(self, inplanes, planes, stride=1, downsample=None, groups=1,
                 base_width=64, dilation=1, norm_layer=None):
        super(Bottleneck, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        # By default the 3x3 conv is a single group (width = planes). With several groups,
        # each group gets planes * base_width / 64 channels (width_per_group in the first stage).
        width = int(planes * (base_width / 64.)) * groups
        self.conv1 = conv1_1(inplanes, width)
        self.bn1 = norm_layer(width)
        self.conv2 = conv3_3(width, width, stride, groups, dilation)
        self.bn2 = norm_layer(width)
        self.conv3 = conv1_1(width, planes * self.expansion)
        self.bn3 = norm_layer(planes * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        # Place to add an SENet block:
        # self.se = SELayer(planes * 4, reduction)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x
        # 1x1 convolution (reduce channels)
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        # 3x3 convolution
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)
        # 1x1 convolution (restore channels)
        out = self.conv3(out)
        out = self.bn3(out)
        # Place to add an SENet block:
        # out = self.se(out)
        if self.downsample is not None:
            identity = self.downsample(x)
        out += identity
        out = self.relu(out)
        return out
```
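The `Bottleneck` above marks where an SE block would go (`self.se = SELayer(planes * 4, reduction)` and `out = self.se(out)`), but the source defines neither `SELayer` nor `reduction`. The sketch below is a minimal Squeeze-and-Excitation layer under an assumption: it follows the standard SENet design, with global average pooling, two fully connected layers with reduction ratio 16, and a sigmoid gate. It keeps the input shape, so it could sit just before `out += identity`.

```python
import torch
import torch.nn as nn


class SELayer(nn.Module):
    """Squeeze-and-Excitation sketch: rescale channels using globally pooled statistics."""

    def __init__(self, channels, reduction=16):
        super().__init__()
        self.pool = nn.AdaptiveAvgPool2d(1)       # squeeze: N x C x 1 x 1
        self.fc = nn.Sequential(
            nn.Linear(channels, channels // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(channels // reduction, channels, bias=False),
            nn.Sigmoid(),                          # excitation: one weight in (0, 1) per channel
        )

    def forward(self, x):
        n, c, _, _ = x.shape
        w = self.fc(self.pool(x).view(n, c)).view(n, c, 1, 1)
        return x * w                               # broadcast the channel weights over H x W


se = SELayer(256)
x = torch.randn(2, 256, 14, 14)
y = se(x)
print(y.shape)  # torch.Size([2, 256, 14, 14])
```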

```python
class ResNet(nn.Module):
    def __init__(self, block, layers, num_classes=1000, groups=1, width_per_group=64,
                 norm_layer=None):
        super(ResNet, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer
        self.inplanes = 64
        self.dilation = 1
        self.groups = groups
        self.base_width = width_per_group
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = norm_layer(self.inplanes)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

    def _make_layer(self, block, planes, blocks, stride=1, dilate=False):
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            # Replace the stride with dilation (keeps the spatial resolution)
            self.dilation *= stride
            stride = 1
        if stride != 1 or self.inplanes != planes * block.expansion:
            # Project the shortcut so that both its spatial size and channel count
            # match the block output
            downsample = nn.Sequential(
                conv1_1(self.inplanes, planes * block.expansion, stride),
                norm_layer(planes * block.expansion),
            )
        # This part is a bit convoluted: add the first block, then set the input width
        # to planes * 4 and add the remaining blocks.
        # self.inplanes starts at 64 and becomes planes * 4 after each stage's first block.
        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample, self.groups,
                            self.base_width, previous_dilation, norm_layer))
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.inplanes, planes, groups=self.groups,
                                base_width=self.base_width, dilation=self.dilation,
                                norm_layer=norm_layer))
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        x = self.fc(x)
        return x


def _resnet(arch, block, layers, pretrained, progress, **kwargs):
    model = ResNet(block, layers, **kwargs)
    if pretrained:
        state_dict = load_state_dict_from_url(model_urls[arch], progress=progress)
        model.load_state_dict(state_dict)
    return model


def resnet152(pretrained=False, progress=True, **kwargs):
    return _resnet('resnet152', Bottleneck, [3, 8, 36, 3], pretrained, progress, **kwargs)
```
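The `groups` and `width_per_group` arguments of `ResNet` only change the width of the grouped 3×3 convolution inside each `Bottleneck`, via `width = int(planes * (base_width / 64.)) * groups`. The block output stays at `planes * 4`. The plain-Python sketch below computes the per-stage widths. It uses the settings torchvision uses for two of its variants: groups=32 with width_per_group=4 for `resnext50_32x4d`, and width_per_group=128 for `wide_resnet50_2`. The helper `bottleneck_widths` is illustrative.

```python
def bottleneck_widths(groups=1, width_per_group=64, planes=(64, 128, 256, 512)):
    """Width of the 3x3 conv in each stage, as computed in Bottleneck.__init__."""
    return [int(p * (width_per_group / 64.)) * groups for p in planes]


print(bottleneck_widths())                              # [64, 128, 256, 512]   standard ResNet
print(bottleneck_widths(groups=32, width_per_group=4))  # [128, 256, 512, 1024] ResNeXt 32x4d
print(bottleneck_widths(width_per_group=128))           # [128, 256, 512, 1024] Wide ResNet-50-2
```

For example, `ResNet(Bottleneck, [3, 4, 6, 3], groups=32, width_per_group=4)` with the classes above would build a ResNeXt-50 (32x4d).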