Installing a Slurm 23.11.0 cluster on Debian 11.5


Purpose

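Slurm (Simple Linux Utility for Resource Management, http://slurm.schedmd.com/ ) is an open-source, fault-tolerant, and highly scalable resource manager and job scheduler for Linux clusters and supercomputers. A supercomputing system can use Slurm to manage resources and jobs so that jobs do not interfere with one another and the system runs more efficiently. Every job, whether for debugging or for production computation, is submitted with the interactive parallel command srun, the batch command sbatch, or the allocation command salloc; once submitted, its status can be queried with the related commands.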
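As a rough illustration of the batch path, the sketch below writes a minimal job script and lists, in comments, how it might be submitted and tracked. The file name `hello.sbatch`, the job name, and the output pattern are placeholders chosen for this example; the partition `compute` is the one defined in slurm.conf later in this guide.

```shell
#!/bin/bash
# Write a minimal batch script; hello.sbatch is a placeholder name.
cat > hello.sbatch <<'EOF'
#!/bin/bash
#SBATCH --job-name=hello
#SBATCH --partition=compute
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --output=hello-%j.out

# Each srun inside a batch script launches one job step.
srun hostname
EOF

# On a working cluster the script would then be submitted and tracked with:
#   sbatch hello.sbatch
#   squeue -u "$USER"
#   scancel <jobid>
```

Nothing is submitted by the block itself, so it can be tried on a machine without Slurm to inspect the generated file.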

Architecture

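Slurm uses the slurmctld service (daemon) as the central manager that monitors resources and jobs; for higher availability, a backup manager can also be configured. Each compute node runs the slurmd daemon, which behaves like a remote shell: it waits for a job, executes it, returns the status, and waits for more work. The slurmdbd (Slurm DataBase Daemon) daemon is optional but recommended (accounting can also be written to plain text files, among other options); it can record accounting information for multiple Slurm-managed clusters in a single database. The optional slurmrestd (Slurm REST API Daemon) service allows interaction with Slurm through a REST API, and every feature has a corresponding API.

The user tools include srun to run jobs, scancel to terminate queued or running jobs, sinfo to view system state, squeue to view job state, and sacct to view information about running or finished jobs and job steps. The sview command displays system and job state graphically (optionally including the network topology). scontrol is the administrative tool for monitoring and modifying the cluster's configuration and state. The database is managed with sacctmgr, which identifies clusters, valid users, valid accounting accounts, and so on.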


- SlurmDBD Node
  - slurm-smd
  - slurm-smd-slurmdbd
- Head Node (slurmctld node)
  - slurm-smd
  - slurm-smd-slurmctld
- Compute Nodes (slurmd node)
  - slurm-smd
  - slurm-smd-slurmd

192.168.86.134 slurm-head # Control node (Head Node)

192.168.86.135 slurm-db # Database node (SlurmDBD Node)

192.168.86.136 slurm-compute # Compute node (Compute Nodes)

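Note: on an older server that already runs other services, you can leave the hostname unchanged; just substitute the server's actual name wherever these names appear below.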

Set the hostnames

# 192.168.86.134

hostnamectl set-hostname slurm-head

# 192.168.86.135

hostnamectl set-hostname slurm-db

# 192.168.86.136

hostnamectl set-hostname slurm-compute

Edit /etc/hosts

#192.168.86.134 - 192.168.86.136

echo "192.168.86.134 slurm-head

192.168.86.135 slurm-db

192.168.86.136 slurm-compute" >>/etc/hosts

cat /etc/hosts
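Running the `echo ... >>/etc/hosts` step twice leaves duplicate entries. A minimal sketch of an idempotent alternative follows; the helper name `add_host_entry` is made up for this example and is not part of any tool.

```shell
#!/bin/bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Append "IP NAME" to FILE unless a non-comment line already lists NAME.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Compare NAME against every hostname field (fields 2..NF) exactly.
    if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root, on every node):
#   add_host_entry /etc/hosts 192.168.86.134 slurm-head
#   add_host_entry /etc/hosts 192.168.86.135 slurm-db
#   add_host_entry /etc/hosts 192.168.86.136 slurm-compute
```

The helper only checks whether the name is present; it does not correct an existing entry that points to a different address.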

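Change the Debian apt sources

Note: the entries below target the bookworm suite (Debian 12). On Debian 11 the suite is named bullseye, and it has no non-free-firmware component, so adjust the lines to match the release actually installed.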

#192.168.86.134 - 192.168.86.136

mv /etc/apt/sources.list{,.bak}

echo "deb http://mirrors.tuna.tsinghua.edu.cn/debian/ bookworm main contrib non-free non-free-firmware

# deb-src http://mirrors.tuna.tsinghua.edu.cn/debian/ bookworm main contrib non-free non-free-firmware

deb http://mirrors.tuna.tsinghua.edu.cn/debian/ bookworm-updates main contrib non-free non-free-firmware

# deb-src http://mirrors.tuna.tsinghua.edu.cn/debian/ bookworm-updates main contrib non-free non-free-firmware

deb http://mirrors.tuna.tsinghua.edu.cn/debian/ bookworm-backports main contrib non-free non-free-firmware

# deb-src http://mirrors.tuna.tsinghua.edu.cn/debian/ bookworm-backports main contrib non-free non-free-firmware

deb https://security.debian.org/debian-security bookworm-security main contrib non-free non-free-firmware

# deb-src https://security.debian.org/debian-security bookworm-security main contrib non-free non-free-firmware" >/etc/apt/sources.list

apt update

apt -y install vim wget

Synchronize the time

#192.168.86.134 - 192.168.86.136

apt update

apt install ntpdate -y

ntpdate ntp1.aliyun.com

Passwordless SSH login

#192.168.86.134 - 192.168.86.136

cd

ssh-keygen

sed -i 's/#PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config

systemctl restart ssh

#192.168.86.134

ssh-copy-id slurm-head

ssh-copy-id slurm-db

ssh-copy-id slurm-compute

Install munge

#192.168.86.134 - 192.168.86.136

export MUNGEUSER=1120

groupadd -g $MUNGEUSER munge

useradd -m -c "MUNGE Uid 'N' Gid Emporium" -d /var/lib/munge -u $MUNGEUSER -g munge -s /sbin/nologin munge

3. Install munge and the build dependencies

#192.168.86.134 - 192.168.86.136

apt-get install -y munge libmunge-dev libmunge2 rng-tools make hwloc libhwloc-dev git gcc build-essential fakeroot devscripts debhelper libncurses-dev libgtk2.0-dev libpam0g-dev libperl-dev liblua5.3-dev dh-exec librrd-dev libipmimonitoring-dev hdf5-helpers libfreeipmi-dev libhdf5-dev man2html libcurl4-openssl-dev libpmix-dev libhttp-parser-dev libyaml-dev libjson-c-dev libjwt-dev liblz4-dev libdbus-1-dev librdkafka-dev libreadline-dev perl

#192.168.86.135

apt-get install mariadb-server libmariadb-dev-compat libmariadb-dev -y

4. Add the configuration file (the munge key)

#192.168.86.134

rngd -r /dev/urandom

dd if=/dev/urandom bs=1 count=1024 > /etc/munge/munge.key

chown munge: /etc/munge/munge.key

chmod 400 /etc/munge/munge.key

chown -R munge: /var/lib/munge

chown -R munge: /var/run/munge

chown -R munge: /var/log/munge

scp /etc/munge/munge.key root@slurm-db:/etc/munge/

scp /etc/munge/munge.key root@slurm-compute:/etc/munge/

6. Start the service

systemctl restart munge

systemctl enable munge

systemctl status munge

#192.168.86.135 - 192.168.86.136

rngd -r /dev/urandom

chmod 700 /etc/munge

chown -R munge: /etc/munge

chown -R munge: /var/lib/munge

chown -R munge: /var/run/munge

chown -R munge: /var/log/munge

systemctl start munge

systemctl enable munge

systemctl status munge
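Before moving on to Slurm, it may be worth confirming that every node can decode a credential created on the head node, since that only works when all nodes share the same munge.key. The sketch below wraps the standard `munge -n | unmunge` round trip; the helper names `run_check` and `check_munge` and the `DRY_RUN` switch are inventions of this example.

```shell
#!/bin/bash
# run_check CMD  (hypothetical helper)
# Print the command instead of running it when DRY_RUN=1.
run_check() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '+ %s\n' "$1"
    else
        bash -c "$1"
    fi
}

# check_munge [HOST...]  (hypothetical helper)
# Encode and decode a credential locally, then decode one on each HOST.
check_munge() {
    run_check 'munge -n | unmunge' || return 1
    local host
    for host in "$@"; do
        run_check "munge -n | ssh $host unmunge" || return 1
    done
}

# Example (on slurm-head, once munge is running on all nodes):
#   check_munge slurm-db slurm-compute
# Preview the commands without running them:
#   DRY_RUN=1 check_munge slurm-db slurm-compute
```

A failed remote decode usually points at a key mismatch or clock skew between the nodes, which is why the time synchronization step comes first.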

Install Slurm

# Add the slurm user

#192.168.86.134 - 192.168.86.136

groupadd slurm

useradd -r -M -g slurm slurm

## Build and install from source

#192.168.86.134 - 192.168.86.136

wget https://download.schedmd.com/slurm/slurm-23.11.0.tar.bz2

tar -xf slurm-23.11.0.tar.bz2

cd slurm-23.11.0/

./configure --enable-debug --prefix=/usr/local/slurm

make && make install

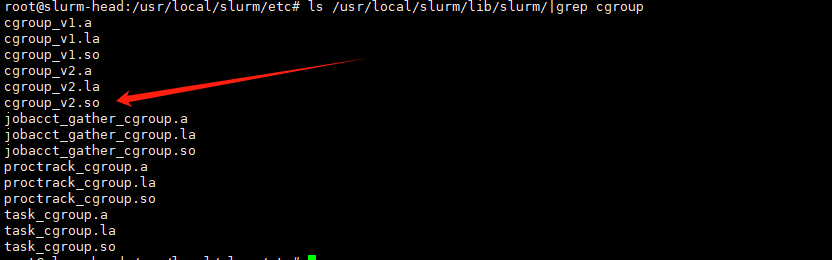

# Check that the MySQL accounting plugin was built

#192.168.86.135

ls /usr/local/slurm/lib/slurm | grep accounting_storage | grep mysql

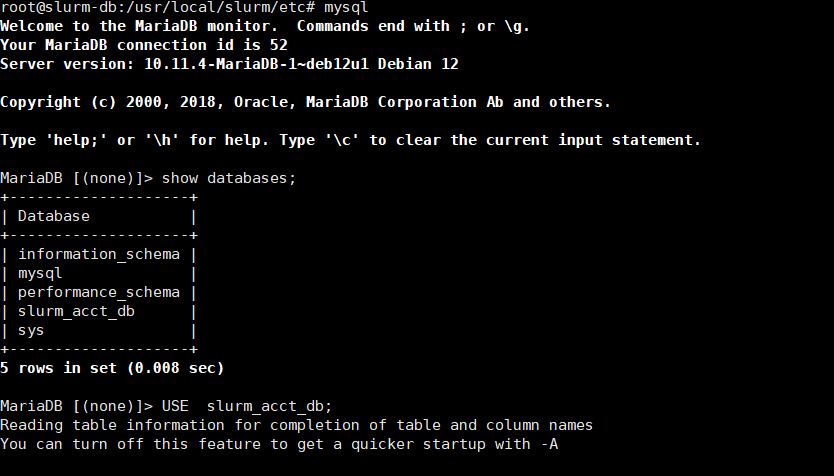

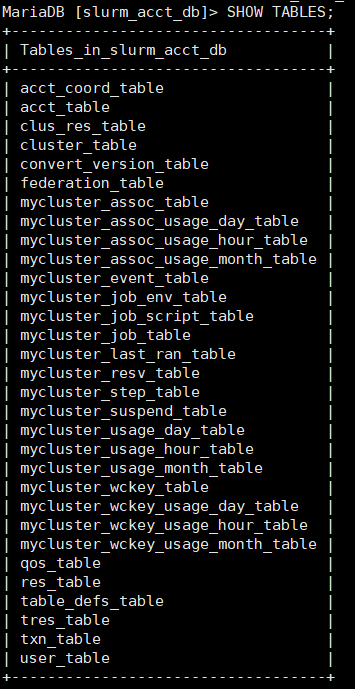
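The same check can cover both plugins whose absence causes the failures described in the pitfalls at the end of this guide. This is a small sketch only; the function name `missing_plugins` is hypothetical, and accounting_storage_mysql.so is only needed on the node that runs slurmdbd.

```shell
#!/bin/bash
# missing_plugins DIR  (hypothetical helper)
# Print each plugin this guide relies on that is absent from DIR.
# Returns 1 if anything is missing, 0 otherwise.
missing_plugins() {
    local dir=$1 plugin missing=0
    for plugin in cgroup_v2.so accounting_storage_mysql.so; do
        if [ ! -f "$dir/$plugin" ]; then
            echo "missing: $plugin"
            missing=1
        fi
    done
    return "$missing"
}

# Example:
#   missing_plugins /usr/local/slurm/lib/slurm
```

If a plugin is reported missing, the remedy given in the pitfalls is to install the build dependencies and rebuild.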

## Start MariaDB, log in, and create the database

systemctl start mariadb

mysql -u root

CREATE DATABASE slurm_acct_db;

CREATE USER 'slurm'@'192.168.86.135';

SET PASSWORD FOR 'slurm'@'192.168.86.135' = PASSWORD('mypassword');

GRANT ALL PRIVILEGES ON slurm_acct_db.* TO 'slurm'@'192.168.86.135';

FLUSH PRIVILEGES;

-- Same session: the database already exists, so only add access from the head node (192.168.86.134)

CREATE USER 'slurm'@'192.168.86.134';

SET PASSWORD FOR 'slurm'@'192.168.86.134' = PASSWORD('mypassword');

GRANT ALL PRIVILEGES ON slurm_acct_db.* TO 'slurm'@'192.168.86.134';

FLUSH PRIVILEGES;

EXIT;
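The two grant blocks differ only in the host address, so the statements could be generated rather than typed twice. The following is a sketch under assumptions: `slurm_grant_sql` is a made-up helper, it uses `CREATE USER IF NOT EXISTS ... IDENTIFIED BY` instead of the separate `SET PASSWORD` statement above, and it assumes the password contains no single quotes.

```shell
#!/bin/bash
# slurm_grant_sql PASSWORD HOST...  (hypothetical helper)
# Print SQL that creates the accounting database once and grants the
# slurm user access from each HOST.
slurm_grant_sql() {
    local password=$1 host
    shift
    echo "CREATE DATABASE IF NOT EXISTS slurm_acct_db;"
    for host in "$@"; do
        echo "CREATE USER IF NOT EXISTS 'slurm'@'$host' IDENTIFIED BY '$password';"
        echo "GRANT ALL PRIVILEGES ON slurm_acct_db.* TO 'slurm'@'$host';"
    done
    echo "FLUSH PRIVILEGES;"
}

# Example (on slurm-db); review the output before piping it to the server:
#   slurm_grant_sql mypassword 192.168.86.134 192.168.86.135
#   slurm_grant_sql mypassword 192.168.86.134 192.168.86.135 | mysql -u root
```

Because of `IF NOT EXISTS`, rerunning the output is harmless, but it will not change the password of a user that already exists.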

## Create the log directory and set its ownership

mkdir -p /var/log/slurm/

chown slurm: /var/log/slurm/

## Write the configuration file

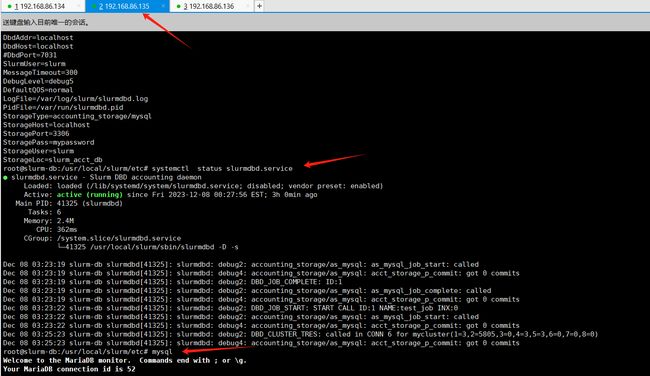

vim /usr/local/slurm/etc/slurmdbd.conf

AuthType=auth/munge

DbdAddr=localhost

DbdHost=localhost

#DbdPort=7031

SlurmUser=slurm

MessageTimeout=300

DebugLevel=debug5

DefaultQOS=normal

LogFile=/var/log/slurm/slurmdbd.log

PidFile=/var/run/slurmdbd.pid

StorageType=accounting_storage/mysql

StorageHost=localhost

StoragePort=3306

StoragePass=mypassword

StorageUser=slurm

StorageLoc=slurm_acct_db

## Set the file ownership and permissions

chown slurm: /usr/local/slurm/etc/slurmdbd.conf

chmod 600 /usr/local/slurm/etc/slurmdbd.conf
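The owner and mode matter here because slurmdbd.conf stores StoragePass in plain text. A quick way to confirm the result before starting the service might look like this; `conf_is_private` is a hypothetical helper, and `stat -c` assumes GNU coreutils as shipped with Debian.

```shell
#!/bin/bash
# conf_is_private FILE OWNER  (hypothetical helper)
# Succeed only if FILE is owned by OWNER and has mode 600.
conf_is_private() {
    local file=$1 owner=$2 actual
    # %U = owner name, %a = permission bits in octal (GNU stat).
    actual=$(stat -c '%U %a' "$file") || return 1
    [ "$actual" = "$owner 600" ]
}

# Example:
#   conf_is_private /usr/local/slurm/etc/slurmdbd.conf slurm && echo ok
```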

systemctl start slurmdbd

systemctl status slurmdbd

systemctl enable slurmdbd

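#192.168.86.134 - 192.168.86.136 ## Control node or compute node; run from the slurm-23.11.0 source directory

# On slurm-db, run these steps before writing slurmdbd.conf: they install slurmdbd.service, and the last cp would otherwise overwrite a finished slurmdbd.conf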

cp -rf etc/ /usr/local/slurm/

cp etc/slurm*.service /lib/systemd/system/

cd /usr/local/slurm/etc

cp slurm.conf.example slurm.conf

cp cgroup.conf.example cgroup.conf

cp slurmdbd.conf.example slurmdbd.conf

## Edit the cgroup configuration

echo "CgroupMountpoint=/sys/fs/cgroup" >> cgroup.conf

cat cgroup.conf
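Which cgroup plugin slurmd needs depends on the hierarchy mounted at /sys/fs/cgroup. The filesystem type reported there is a common way to tell the versions apart: `cgroup2fs` indicates the unified v2 hierarchy, while `tmpfs` indicates v1 (or a hybrid layout). The function name below is made up for this sketch.

```shell
#!/bin/bash
# cgroup_version FSTYPE  (hypothetical helper)
# Map the filesystem type of /sys/fs/cgroup to a cgroup version label.
cgroup_version() {
    case $1 in
        cgroup2fs) echo v2 ;;        # unified hierarchy
        tmpfs)     echo v1 ;;        # legacy or hybrid: controllers mounted under a tmpfs
        *)         echo unknown ;;
    esac
}

# Example:
#   cgroup_version "$(stat -fc %T /sys/fs/cgroup)"
```

On a v2 system the cgroup_v2.so plugin has to exist in the Slurm plugin directory, which is the subject of the first pitfall below.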

vim slurm.conf

ClusterName=myCluster

SlurmctldHost=slurm-head

#SlurmctldHost=

#

MpiDefault=none

ProctrackType=proctrack/cgroup

ReturnToService=1

SlurmctldPidFile=/var/run/slurmctld.pid

SlurmctldPort=6817

SlurmdPidFile=/var/run/slurmd.pid

SlurmdPort=6818

SlurmdSpoolDir=/var/spool/slurmd

SlurmdUser=root

StateSaveLocation=/var/spool/slurmctld

SwitchType=switch/none

TaskPlugin=task/affinity

#TaskPlugin=task/cgroup

#

#

# TIMERS

InactiveLimit=0

KillWait=30

MinJobAge=300

SlurmctldTimeout=120

SlurmdTimeout=300

Waittime=0

# SCHEDULING

SchedulerType=sched/backfill

SelectType=select/cons_tres

SelectTypeParameters=CR_Core_Memory

#

#

# JOB PRIORITY

AccountingStorageEnforce=qos,limits

AccountingStorageHost=slurm-db

AccountingStoragePass=/var/run/munge/munge.socket.2

AccountingStorageType=accounting_storage/slurmdbd

AccountingStorageUser=slurm

#AccountingStorageTRES=gres/gpu

JobCompHost=slurm-db

JobCompLoc=slurm_acct_db

JobCompPass=mypassword

JobCompType=jobcomp/none

JobCompUser=slurm

JobAcctGatherFrequency=30

JobAcctGatherType=jobacct_gather/linux

SlurmctldDebug=info

SlurmctldLogFile=/var/log/slurm/slurmctld.log

SlurmdDebug=info

SlurmdLogFile=/var/log/slurm/slurmd.log

#GresTypes=gpu

NodeName=slurm-head RealMemory=1935 State=UNKNOWN

NodeName=slurm-db RealMemory=1935 State=UNKNOWN

NodeName=slurm-compute RealMemory=1935 State=UNKNOWN

PartitionName=compute Nodes=slurm-head,slurm-compute Default=YES MaxTime=168:00:00 State=UP
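RealMemory is given in megabytes and should not exceed what the node really has. One way to get a starting value is to convert MemTotal from /proc/meminfo, as sketched below with a hypothetical helper named `real_memory_mb`; running `slurmd -C` on the node is an alternative, since it prints the hardware configuration slurmd detects. The number Slurm itself reports may differ slightly from this calculation.

```shell
#!/bin/bash
# real_memory_mb [MEMINFO]  (hypothetical helper)
# Print MemTotal from MEMINFO (default /proc/meminfo) converted from kB
# to whole megabytes, rounded down.
real_memory_mb() {
    awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# Example (on each node):
#   echo "NodeName=$(hostname -s) RealMemory=$(real_memory_mb) State=UNKNOWN"
```

Choosing a value a little below the detected amount leaves headroom and avoids the node being rejected for reporting less memory than configured.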

## Create the log and spool files and set their ownership

mkdir -p /var/log/slurm/

touch /var/log/slurm/slurmctld.log

chown slurm: /var/log/slurm/slurmctld.log

chmod u+rw /var/log/slurm/slurmctld.log

touch /var/log/slurm/slurmd.log

chown slurm: /var/log/slurm/slurmd.log

chmod u+rw /var/log/slurm/slurmd.log

mkdir -p /var/spool/slurmctld

chown slurm: /var/spool/slurmctld

mkdir -p /var/spool/slurmd

chown slurm: /var/spool/slurmd

chown slurm: /var/log/slurm/

systemctl restart slurmctld

systemctl status slurmctld

systemctl restart slurmd

systemctl status slurmd

systemctl enable slurmctld

systemctl enable slurmd

***If something fails, run the commands below to read the logs, then see the Pitfalls section further down.***

journalctl -xeu slurmd

journalctl -xeu slurmctld

journalctl -xeu slurmdbd

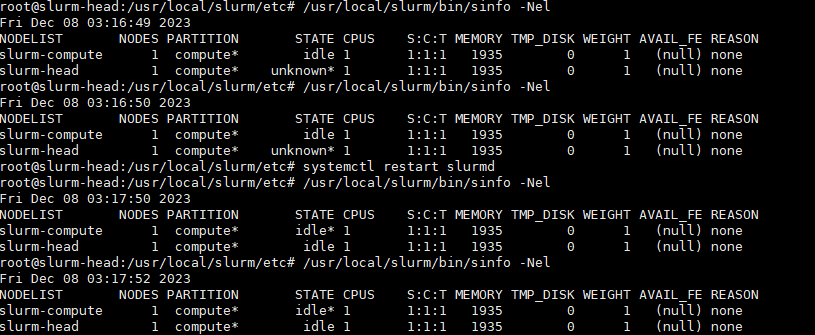

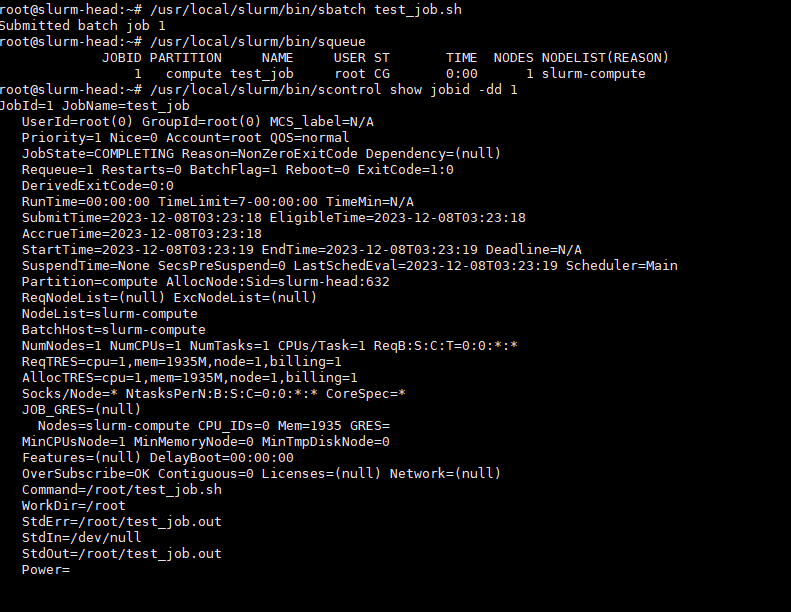

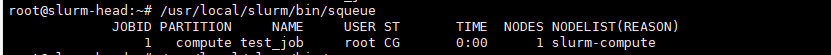

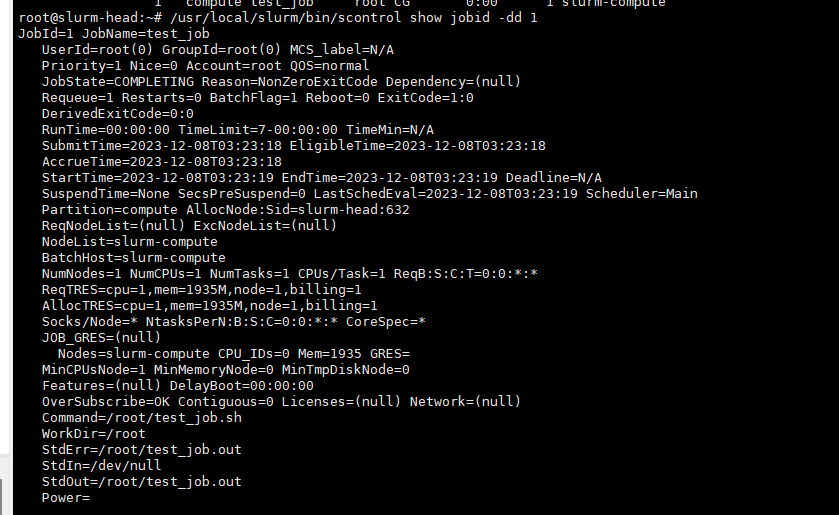

Results

- Verify the cluster status

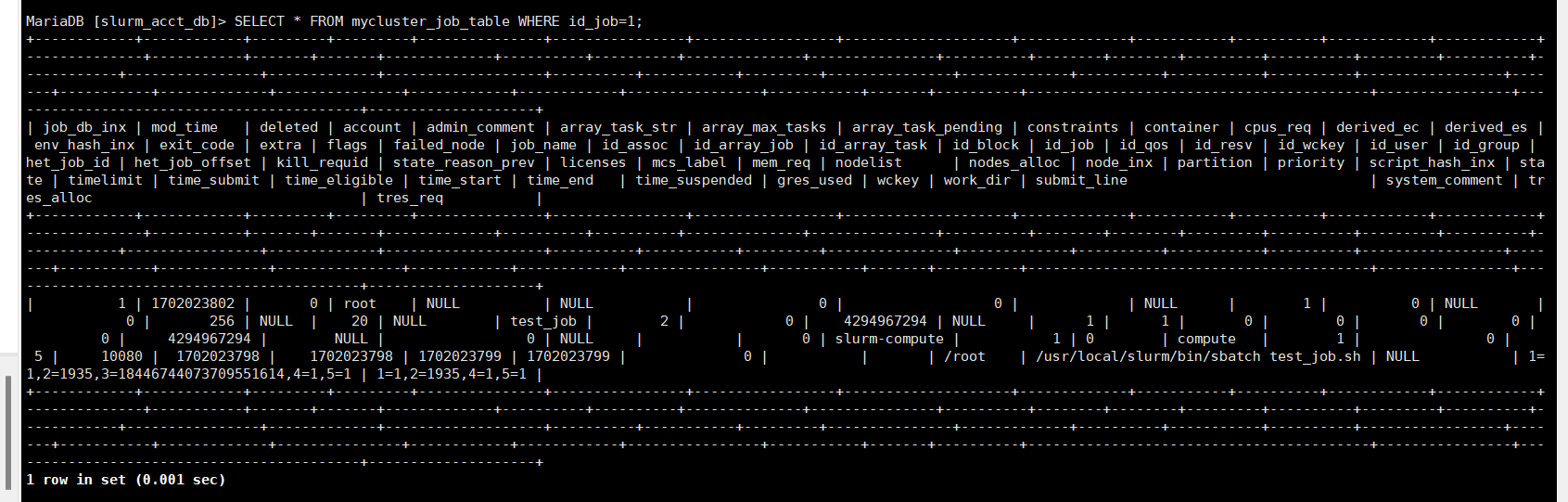

- Verify that jobs run

- Verify database writes (communication with slurmdbd on 192.168.86.135)
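The three checks above could be run with commands along the following lines. This is a sketch rather than the exact commands behind the original screenshots; the `run` and `verify_cluster` helpers and the `DRY_RUN` switch are inventions of this example.

```shell
#!/bin/bash
# run CMD [ARG...]  (hypothetical helper)
# Echo the command, then execute it unless DRY_RUN=1.
run() {
    printf '+ %s\n' "$*"
    [ "${DRY_RUN:-0}" = "1" ] || "$@"
}

# verify_cluster  (hypothetical helper)
verify_cluster() {
    run sinfo                                     # cluster status: partitions and node states
    run srun -N1 hostname                         # job run: one trivial step on one node
    run sacct -X --format=JobID,JobName,State     # accounting: job records read through slurmdbd
    run sacctmgr show cluster                     # accounting: clusters known to the database
}

# Example (on slurm-head):
#   verify_cluster
# Preview only:
#   DRY_RUN=1 verify_cluster
```

If the sacct output lists the job that srun just ran, the path from slurmctld through slurmdbd to MariaDB is working.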

Pitfalls

- Couldn't find the specified plugin name for cgroup/v2 looking at all files

░░ The job identifier is 1747.

Dec 07 06:28:09 slurm-head slurmd[59887]: slurmd: error: Couldn't find the specified plugin name for cgroup/v2 looking at all files

Dec 07 06:28:09 slurm-head slurmd[59887]: slurmd: error: cannot find cgroup plugin for cgroup/v2

Dec 07 06:28:09 slurm-head slurmd[59887]: slurmd: error: cannot create cgroup context for cgroup/v2

Dec 07 06:28:09 slurm-head slurmd[59887]: slurmd: error: Unable to initialize cgroup plugin

Dec 07 06:28:09 slurm-head slurmd[59887]: slurmd: error: slurmd initialization failed

Dec 07 06:28:09 slurm-head systemd[1]: slurmd.service: Main process exited, code=exited, status=1/FAILURE

░░ Subject: Unit process exited

This happens because /usr/local/slurm/lib/slurm/cgroup_v2.so is missing, presumably because a build dependency was not installed when ./configure ran. Most of the advice found online for this error is of little use.

Fix:

# Run the following command in full

apt-get install -y munge libmunge-dev libmunge2 rng-tools make hwloc libhwloc-dev git gcc build-essential fakeroot devscripts debhelper libncurses-dev libgtk2.0-dev libpam0g-dev libperl-dev liblua5.3-dev dh-exec librrd-dev libipmimonitoring-dev hdf5-helpers libfreeipmi-dev libhdf5-dev man2html libcurl4-openssl-dev libpmix-dev libhttp-parser-dev libyaml-dev libjson-c-dev libjwt-dev liblz4-dev libdbus-1-dev librdkafka-dev libreadline-dev perl

# Then reconfigure and rebuild

cd slurm-23.11.0/

make uninstall

make clean

./configure --enable-debug --prefix=/usr/local/slurm

make && make install

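- slurmdbd fails to start even after the database settings have been ruled out

MariaDB/MySQL (including the development packages, such as libmariadb-dev above) must be installed before running make. This applies even if the database server does not run on the same machine as slurmdbd; otherwise the build omits a component:

/usr/local/slurm/lib/slurm/accounting_storage_mysql.so is missing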

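- error: cgroup namespace 'freezer' not mounted. aborting

This means you have taken a wrong turn and are not using the configuration from this guide. First apply the fix for "Couldn't find the specified plugin name for cgroup/v2 looking at all files" above, then set up cgroup.conf as follows: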

#CgroupAutomount=yes

CgroupMountpoint=/sys/fs/cgroup

ConstrainCores=yes

ConstrainDevices=yes

ConstrainRAMSpace=yes

ConstrainSwapSpace=yes