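A Simple Introduction to SkyWalking

Table of Contents

- Getting Started with SkyWalking
  - Background
  - Installing and Starting Elasticsearch
  - Installing and Starting SkyWalking
  - Configuring the Agent for Spring Cloud Microservices
  - Viewing Spring Cloud Call Chains
    - Monitoring a Single Microservice
    - Monitoring Calls Between Microservices
    - Call Chains Across All Microservices
  - Stopping SkyWalking
  - Stopping Elasticsearch
  - Summary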

Getting Started with SkyWalking

Background

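In production you may be running dozens, hundreds, or even thousands of microservice instances. If one of them goes down and the problem cannot be located and reported quickly, the damage to the business can be huge. Microservices therefore need monitoring and alerting, including monitoring of their call chains, so that problems can be pinpointed fast.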

Installing and Starting Elasticsearch

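- Installation commands (the commands below are for macOS; on Linux you can use yum, and on Windows you can simply download the installer for the matching version from the Elasticsearch website)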

brew update

brew tap elastic/tap

brew search elasticsearch

brew install elastic/tap/elasticsearch-full

- Start Elasticsearch

brew services start elasticsearch-full

- Check that it started successfully

Visit localhost:9200 and check whether a response similar to the following is returned:

{
  "name" : "******",
  "cluster_name" : "elasticsearch_name",
  "cluster_uuid" : "rp73VaY8RRCgQrl4M5uR9A",
  "version" : {
    "number" : "7.7.1",
    "build_flavor" : "default",
    "build_type" : "tar",
    "build_hash" : "ad56dce891c901a492bb1ee393f12dfff473a423",
    "build_date" : "2020-05-28T16:30:01.040088Z",
    "build_snapshot" : false,
    "lucene_version" : "8.5.1",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}

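If nothing is returned, look for the error messages in the Elasticsearch log files. If you don't know where the logs are stored, check the path.logs setting in elasticsearch.yml; its value is the log directory.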
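If you want to run this check from a script, for example before starting SkyWalking, here is a minimal bash sketch that reads the JSON above on stdin and prints the Elasticsearch major version, so you can confirm it is 7 and matches the ES7 SkyWalking distribution used below. It only greps the "number" field instead of properly parsing JSON, and es_major_version is a name made up for this example.

```bash
# Sketch: print the major version ("7" for "7.7.1") from the JSON returned by
# GET http://localhost:9200, read on stdin.
es_major_version() {
  # Match only the "number" field; the other *_version fields have other keys.
  grep -o '"number"[[:space:]]*:[[:space:]]*"[0-9][0-9.]*' \
    | head -n 1 \
    | sed 's/.*"\([0-9]*\)\..*/\1/'   # keep the digits before the first "."
}

# Usage (requires curl and a running Elasticsearch):
#   [ "$(curl -s http://localhost:9200 | es_major_version)" = "7" ] && echo "ES 7 is up"
```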

Installing and Starting SkyWalking

1. Download the matching version from the SkyWalking website. Here the Binary Distribution for ElasticSearch 7 is used, because the Elasticsearch installed above is version 7.

2. Extract the archive and edit the configuration

2.1 Extract the archive

tar -zxvf apache-skywalking-apm-es7-7.0.0.tar.gz

2.2 Go into the extracted folder and edit config/application.yml:

# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements.  See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership.  The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License.  You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# Cluster: there is no cluster locally, so the default standalone mode is used and the other cluster configurations were removed
cluster:
  selector: ${SW_CLUSTER:standalone}
  standalone:
core:
  selector: ${SW_CORE:default}
  default:
    # Mixed: Receive agent data, Level 1 aggregate, Level 2 aggregate
    # Receiver: Receive agent data, Level 1 aggregate
    # Aggregator: Level 2 aggregate
    role: ${SW_CORE_ROLE:Mixed} # Mixed/Receiver/Aggregator
    restHost: ${SW_CORE_REST_HOST:0.0.0.0}
    restPort: ${SW_CORE_REST_PORT:12800}
    restContextPath: ${SW_CORE_REST_CONTEXT_PATH:/}
    gRPCHost: ${SW_CORE_GRPC_HOST:0.0.0.0}
    gRPCPort: ${SW_CORE_GRPC_PORT:11800}
    gRPCSslEnabled: ${SW_CORE_GRPC_SSL_ENABLED:false}
    gRPCSslKeyPath: ${SW_CORE_GRPC_SSL_KEY_PATH:""}
    gRPCSslCertChainPath: ${SW_CORE_GRPC_SSL_CERT_CHAIN_PATH:""}
    gRPCSslTrustedCAPath: ${SW_CORE_GRPC_SSL_TRUSTED_CA_PATH:""}
    downsampling:
      - Hour
      - Day
      - Month
    # Set a timeout on metrics data. After the timeout has expired, the metrics data will automatically be deleted.
    enableDataKeeperExecutor: ${SW_CORE_ENABLE_DATA_KEEPER_EXECUTOR:true} # Turn it off then automatically metrics data delete will be close.
    dataKeeperExecutePeriod: ${SW_CORE_DATA_KEEPER_EXECUTE_PERIOD:5} # How often the data keeper executor runs periodically, unit is minute
    recordDataTTL: ${SW_CORE_RECORD_DATA_TTL:90} # Unit is minute
    minuteMetricsDataTTL: ${SW_CORE_MINUTE_METRIC_DATA_TTL:90} # Unit is minute
    hourMetricsDataTTL: ${SW_CORE_HOUR_METRIC_DATA_TTL:36} # Unit is hour
    dayMetricsDataTTL: ${SW_CORE_DAY_METRIC_DATA_TTL:45} # Unit is day
    monthMetricsDataTTL: ${SW_CORE_MONTH_METRIC_DATA_TTL:18} # Unit is month
    # Cache metric data for 1 minute to reduce database queries, and if the OAP cluster changes within that minute,
    # the metrics may not be accurate within that minute.
    enableDatabaseSession: ${SW_CORE_ENABLE_DATABASE_SESSION:true}
    topNReportPeriod: ${SW_CORE_TOPN_REPORT_PERIOD:10} # top_n record worker report cycle, unit is minute
    # Extra model column are the column defined by in the codes, These columns of model are not required logically in aggregation or further query,
    # and it will cause more load for memory, network of OAP and storage.
    # But, being activated, user could see the name in the storage entities, which make users easier to use 3rd party tool, such as Kibana->ES, to query the data by themselves.
    activeExtraModelColumns: ${SW_CORE_ACTIVE_EXTRA_MODEL_COLUMNS:false}
storage:
  selector: ${SW_STORAGE:elasticsearch7}
  elasticsearch7:
    nameSpace: ${SW_NAMESPACE:"elasticsearch7"}
    clusterNodes: ${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}
    protocol: ${SW_STORAGE_ES_HTTP_PROTOCOL:"http"}
    # trustStorePath: ${SW_SW_STORAGE_ES_SSL_JKS_PATH:"../es_keystore.jks"}
    # trustStorePass: ${SW_SW_STORAGE_ES_SSL_JKS_PASS:""}
    enablePackedDownsampling: ${SW_STORAGE_ENABLE_PACKED_DOWNSAMPLING:true} # Hour and Day metrics will be merged into minute index.
    dayStep: ${SW_STORAGE_DAY_STEP:1} # Represent the number of days in the one minute/hour/day index.
    user: ${SW_ES_USER:""}
    password: ${SW_ES_PASSWORD:""}
    secretsManagementFile: ${SW_ES_SECRETS_MANAGEMENT_FILE:""} # Secrets management file in the properties format includes the username, password, which are managed by 3rd party tool.
    indexShardsNumber: ${SW_STORAGE_ES_INDEX_SHARDS_NUMBER:2}
    indexReplicasNumber: ${SW_STORAGE_ES_INDEX_REPLICAS_NUMBER:0}
    # Those data TTL settings will override the same settings in core module.
    recordDataTTL: ${SW_STORAGE_ES_RECORD_DATA_TTL:7} # Unit is day
    otherMetricsDataTTL: ${SW_STORAGE_ES_OTHER_METRIC_DATA_TTL:45} # Unit is day
    monthMetricsDataTTL: ${SW_STORAGE_ES_MONTH_METRIC_DATA_TTL:18} # Unit is month
    # Batch process setting, refer to https://www.elastic.co/guide/en/elasticsearch/client/java-api/5.5/java-docs-bulk-processor.html
    bulkActions: ${SW_STORAGE_ES_BULK_ACTIONS:1000} # Execute the bulk every 1000 requests
    flushInterval: ${SW_STORAGE_ES_FLUSH_INTERVAL:10} # flush the bulk every 10 seconds whatever the number of requests
    concurrentRequests: ${SW_STORAGE_ES_CONCURRENT_REQUESTS:2} # the number of concurrent requests
    resultWindowMaxSize: ${SW_STORAGE_ES_QUERY_MAX_WINDOW_SIZE:10000}
    metadataQueryMaxSize: ${SW_STORAGE_ES_QUERY_MAX_SIZE:5000}
    segmentQueryMaxSize: ${SW_STORAGE_ES_QUERY_SEGMENT_SIZE:200}
    profileTaskQueryMaxSize: ${SW_STORAGE_ES_QUERY_PROFILE_TASK_SIZE:200}
    advanced: ${SW_STORAGE_ES_ADVANCED:""}
receiver-sharing-server:
  selector: ${SW_RECEIVER_SHARING_SERVER:default}
  default:
    authentication: ${SW_AUTHENTICATION:""}
receiver-register:
  selector: ${SW_RECEIVER_REGISTER:default}
  default:
receiver-trace:
  selector: ${SW_RECEIVER_TRACE:default}
  default:
    bufferPath: ${SW_RECEIVER_BUFFER_PATH:../trace-buffer/} # Path to trace buffer files, suggest to use absolute path
    bufferOffsetMaxFileSize: ${SW_RECEIVER_BUFFER_OFFSET_MAX_FILE_SIZE:100} # Unit is MB
    bufferDataMaxFileSize: ${SW_RECEIVER_BUFFER_DATA_MAX_FILE_SIZE:500} # Unit is MB
    bufferFileCleanWhenRestart: ${SW_RECEIVER_BUFFER_FILE_CLEAN_WHEN_RESTART:false}
    sampleRate: ${SW_TRACE_SAMPLE_RATE:10000} # The sample rate precision is 1/10000. 10000 means 100% sample in default.
    slowDBAccessThreshold: ${SW_SLOW_DB_THRESHOLD:default:200,mongodb:100} # The slow database access thresholds. Unit ms.
receiver-jvm:
  selector: ${SW_RECEIVER_JVM:default}
  default:
receiver-clr:
  selector: ${SW_RECEIVER_CLR:default}
  default:
receiver-profile:
  selector: ${SW_RECEIVER_PROFILE:default}
  default:
service-mesh:
  selector: ${SW_SERVICE_MESH:default}
  default:
    bufferPath: ${SW_SERVICE_MESH_BUFFER_PATH:../mesh-buffer/} # Path to trace buffer files, suggest to use absolute path
    bufferOffsetMaxFileSize: ${SW_SERVICE_MESH_OFFSET_MAX_FILE_SIZE:100} # Unit is MB
    bufferDataMaxFileSize: ${SW_SERVICE_MESH_BUFFER_DATA_MAX_FILE_SIZE:500} # Unit is MB
    bufferFileCleanWhenRestart: ${SW_SERVICE_MESH_BUFFER_FILE_CLEAN_WHEN_RESTART:false}
istio-telemetry:
  selector: ${SW_ISTIO_TELEMETRY:default}
  default:
envoy-metric:
  selector: ${SW_ENVOY_METRIC:default}
  default:
    alsHTTPAnalysis: ${SW_ENVOY_METRIC_ALS_HTTP_ANALYSIS:""}
receiver_zipkin:
  selector: ${SW_RECEIVER_ZIPKIN:-}
  default:
    host: ${SW_RECEIVER_ZIPKIN_HOST:0.0.0.0}
    port: ${SW_RECEIVER_ZIPKIN_PORT:9411}
    contextPath: ${SW_RECEIVER_ZIPKIN_CONTEXT_PATH:/}
receiver_jaeger:
  selector: ${SW_RECEIVER_JAEGER:-}
  default:
    gRPCHost: ${SW_RECEIVER_JAEGER_HOST:0.0.0.0}
    gRPCPort: ${SW_RECEIVER_JAEGER_PORT:14250}
query:
  selector: ${SW_QUERY:graphql}
  graphql:
    path: ${SW_QUERY_GRAPHQL_PATH:/graphql}
alarm:
  selector: ${SW_ALARM:default}
  default:
telemetry:
  selector: ${SW_TELEMETRY:none}
  none:
  prometheus:
    host: ${SW_TELEMETRY_PROMETHEUS_HOST:0.0.0.0}
    port: ${SW_TELEMETRY_PROMETHEUS_PORT:1234}
  so11y:
    prometheusExporterEnabled: ${SW_TELEMETRY_SO11Y_PROMETHEUS_ENABLED:true}
    prometheusExporterHost: ${SW_TELEMETRY_PROMETHEUS_HOST:0.0.0.0}
    prometheusExporterPort: ${SW_TELEMETRY_PROMETHEUS_PORT:1234}
receiver-so11y:
  selector: ${SW_RECEIVER_SO11Y:-}
  default:
# Configuration center: none is used here, so the extra configurations were removed
configuration:
  selector: ${SW_CONFIGURATION:none}
  none:
exporter:
  selector: ${SW_EXPORTER:-}
  grpc:
    targetHost: ${SW_EXPORTER_GRPC_HOST:127.0.0.1}
    targetPort: ${SW_EXPORTER_GRPC_PORT:9870}

Configuration notes:

cluster: several registries can be chosen for cluster coordination; the default is standalone (a single node).

core: left at the default configuration.

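storage: where trace data is stored so that it can be queried and displayed. H2, MySQL, ES, and InfluxDB are supported. recordDataTTL controls how long records are kept; it defaults to 7 days.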

Here es7 is chosen and the other storage options are commented out; elasticsearch7 is then set as the storage selector.

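All other unnecessary configuration has been removed. If you want to configure a cluster or a configuration center, or use H2, MySQL, etc. for storage, add the corresponding sections back from the official configuration.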
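Every value in the application.yml above has the form ${ENV_NAME:default}. As I understand it, SkyWalking uses the environment variable when it is set and otherwise falls back to the text after the first colon (the real loader may also consult other sources, such as JVM system properties). The bash function below only mimics that rule so you can see what a given line resolves to; resolve_placeholder is a name made up for this example and is not part of SkyWalking.

```bash
# Sketch: mimic how a ${NAME:default} value from application.yml resolves,
# looking only at environment variables (illustration, not SkyWalking's code).
resolve_placeholder() {
  local expr="$1"
  local inner="${expr:2}"      # drop the leading "${"
  inner="${inner%?}"           # drop the trailing "}"
  local name="${inner%%:*}"    # variable name: text before the first ":"
  local default=""
  if [[ "$inner" == *:* ]]; then
    default="${inner#*:}"      # default: everything after the first ":"
  fi
  local value="${!name:-}"     # indirect lookup of the variable named $name
  printf '%s\n' "${value:-$default}"   # set and non-empty wins, else default
}

# Usage:
#   resolve_placeholder '${SW_STORAGE:elasticsearch7}'
#   SW_STORAGE_ES_CLUSTER_NODES=es-host:9200 \
#     resolve_placeholder '${SW_STORAGE_ES_CLUSTER_NODES:localhost:9200}'
```

Splitting at the first colon only is what keeps defaults like localhost:9200 or default:200,mongodb:100 intact. In practice the same rule means a setting such as the Elasticsearch address can be overridden at startup, e.g. SW_STORAGE_ES_CLUSTER_NODES=es-host:9200 ./oapService.sh, without editing the file (es-host is a placeholder).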

3. Start OAP and the SkyWalking UI

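cd bin

# Start OAP
./oapService.sh

# Start the SkyWalking UI
./webappService.sh

Visit localhost:8080. **What if port 8080 is already in use?** Open webapp.yml in the webapp folder and change the port. Once started, the SkyWalking UI dashboard is displayed.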

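Remember to switch on the auto-refresh toggle and set the start of the time range below it; SkyWalking will then refresh the collected data every 6 seconds.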
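As far as I can tell, oapService.sh and webappService.sh return as soon as the Java processes are launched, so OAP may still be initializing (for example creating its Elasticsearch indices) when you start the microservices. A small bash sketch that polls a port until it accepts connections can bridge that gap. wait_for_port is a name invented for this example; it relies on bash's /dev/tcp redirection, not on any SkyWalking tool.

```bash
# Sketch: block until host:port accepts TCP connections or TIMEOUT seconds pass.
# Relies on bash's built-in /dev/tcp redirection (bash only).
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-60}"
  local waited=0
  while (( waited < timeout )); do
    # The redirection succeeds only if the TCP connect succeeds; the subshell
    # exits right away and closes the connection again.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "timed out after ${timeout}s waiting for ${host}:${port}" >&2
  return 1
}

# Usage after running ./oapService.sh and ./webappService.sh from bin:
#   wait_for_port 127.0.0.1 11800 120   # OAP gRPC port, used by the agents
#   wait_for_port 127.0.0.1 12800 120   # OAP REST port
#   wait_for_port 127.0.0.1 8080 120    # SkyWalking UI
```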

Configuring the Agent for Spring Cloud Microservices

1. Using the Eureka registry as an example, here is how to configure the agent.

1.1 In IDEA, add the following to VM options under Run/Debug Configurations

# The path after -javaagent is the location of skywalking-agent.jar

-javaagent:/Users/jacksparrow414/skywalking/apache-skywalking-apm-bin-es7/agent/skywalking-agent.jar

1.2 Add two entries under Environment variables

SW_AGENT_NAME=eureka;SW_AGENT_COLLECTOR_BACKEND_SERVICES=127.0.0.1:11800

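SW_AGENT_NAME is the service name. It can be anything you like, but all instances of the same service use the same name. SW_AGENT_COLLECTOR_BACKEND_SERVICES is the address of the OAP gRPC port (11800 in application.yml).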

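1.3 Configure gateway (the gateway), one-service (service 1, with two instances, DemoClientone and DemoClientthree), and two-service (service 2) in the same way. Both service 1 and service 2 connect to a local MySQL database on port 3306. That makes 5 applications in total, started in this order: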

Registry -> gateway -> service 1 (two instances) -> service 2

Then start nginx.
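The IDEA settings in 1.1 and 1.2 boil down to one JVM flag plus two environment variables, so the same setup also works from a terminal. Below is a bash sketch, assuming each application is packaged as an executable Spring Boot jar; the jar file names and --server.port values are hypothetical, and run_with_agent is a helper invented for this example. With DRY_RUN=1 it only prints the command it would run.

```bash
# Sketch: start an app outside IDEA with the SkyWalking agent attached.
# AGENT_JAR is the path from step 1.1; jar names/ports below are hypothetical.
AGENT_JAR=/Users/jacksparrow414/skywalking/apache-skywalking-apm-bin-es7/agent/skywalking-agent.jar
COLLECTOR=127.0.0.1:11800   # OAP gRPC address, same as step 1.2

# run_with_agent SERVICE_NAME APP_JAR [extra app args...]
run_with_agent() {
  local service_name="$1" app_jar="$2"
  shift 2
  local cmd=(java "-javaagent:${AGENT_JAR}" -jar "$app_jar" "$@")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    # Print for inspection only (arguments are not re-quoted)
    echo "SW_AGENT_NAME=${service_name} SW_AGENT_COLLECTOR_BACKEND_SERVICES=${COLLECTOR} ${cmd[*]}"
    return 0
  fi
  # Same environment variables as step 1.2, scoped to this java process
  SW_AGENT_NAME="$service_name" \
    SW_AGENT_COLLECTOR_BACKEND_SERVICES="$COLLECTOR" \
    "${cmd[@]}"
}

# Start order from step 1.3; in practice wait until each app is up before the next.
#   run_with_agent eureka      eureka.jar &
#   run_with_agent gateway     gateway.jar &
#   run_with_agent one-service one-service.jar --server.port=8081 &   # DemoClientone
#   run_with_agent one-service one-service.jar --server.port=8083 &   # DemoClientthree
#   run_with_agent two-service two-service.jar &
```

Both one-service instances get the same SW_AGENT_NAME on purpose, which matches the rule in 1.2 that instances of one service share a name.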

Why start nginx?

Because the actual request path is:

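Web browser / app / H5 mini program -> sends a request -> nginx reverse proxy -> forwards it to the gateway -> the gateway routes by API path -> to the matching microservice instance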

1.4 To check whether the agent is active: when each application starts, the console first prints the following

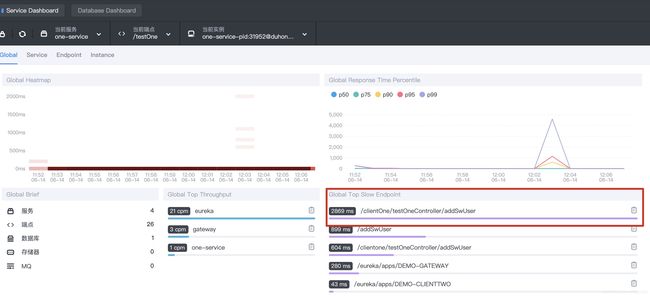

As you can see, there are 4 applications and 1 database, which is correct.
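Besides the console output, the agent writes its own log file, which is the first place to look when an application does not show up in the UI. The bash sketch below assumes the default location, agent/logs/skywalking-api.log next to skywalking-agent.jar, and the default log pattern in which each line starts with the log level; adjust both if your agent.config changes the logging settings. agent_log_errors is a hypothetical helper name.

```bash
# Sketch: count ERROR lines in the SkyWalking agent log.
# Assumes each log line starts with its level (e.g. "ERROR 2020-06-01 ...").
agent_log_errors() {
  local log="${1:?usage: agent_log_errors PATH_TO_skywalking-api.log}"
  if [[ ! -f "$log" ]]; then
    echo "no agent log at ${log}" >&2
    return 2
  fi
  # grep -c prints 0 and exits 1 when nothing matches; treat that as success
  grep -c '^ERROR' "$log" || true
}

# Usage (default agent log location assumed):
#   agent_log_errors /Users/jacksparrow414/skywalking/apache-skywalking-apm-bin-es7/agent/logs/skywalking-api.log
```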

Viewing Spring Cloud Call Chains

Monitoring a Single Microservice

- Call the addSwUser endpoint of DemoClientone, one instance of one-service, to insert a row.

After a short while, the following information appears in the SkyWalking UI

Click Mysql/JDBC/PrepareStatement/execute in the trace to see the details of the SQL call.

What if you want to see the parameters of the executed SQL?

Set the following parameter to true in agent.config (in the agent's config folder):

plugin.mysql.trace_sql_parameters=${SW_MYSQL_TRACE_SQL_PARAMETERS:true}

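After changing it, restart the application and call the endpoint again; the SQL call in the trace now shows the parameters as well.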
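If you prefer to script the agent.config change rather than edit it by hand, here is a bash sketch that sets a key in the properties file, replacing an existing (possibly commented-out) line or appending one if none exists. set_agent_option is a hypothetical helper; it uses awk and a temporary file so it behaves the same with macOS (BSD) and GNU tools.

```bash
# Sketch: set KEY=VALUE in a properties file such as agent/config/agent.config.
# An existing line for KEY (even behind a leading "#") is replaced in place;
# otherwise the line is appended at the end.
set_agent_option() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)" || return 1
  awk -v key="$key" -v value="$value" '
    {
      line = $0
      sub(/^[ \t]*#[ \t]*/, "", line)            # look through a leading "#"
      if (!done && index(line, key "=") == 1) {  # line starts with "KEY="
        print key "=" value
        done = 1
        next
      }
      print
    }
    END { if (!done) print key "=" value }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"     # cat keeps the original file mode
  rm -f "$tmp"
}

# Usage (single quotes keep ${...} literal):
#   set_agent_option agent/config/agent.config plugin.mysql.trace_sql_parameters \
#     '${SW_MYSQL_TRACE_SQL_PARAMETERS:true}'
```

Because the value uses the ${SW_MYSQL_TRACE_SQL_PARAMETERS:...} form, setting the environment variable SW_MYSQL_TRACE_SQL_PARAMETERS=true next to SW_AGENT_NAME should have the same effect without touching the file, provided that line is present and not commented out.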

Monitoring Calls Between Microservices

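- For a call between microservices, an external request goes to two-service, and DemoClienttwo load-balances its requests across the two instances of one-service (DemoClientone and DemoClientthree).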

After a short while, the following information appears in the SkyWalking UI

As you can see, the full call chain is gateway -> two-service -> one-service.

Click Mysql/JDBC/PrepareStatement/execute in the trace to see the details of the SQL call.

Call Chains Across All Microservices

Stopping SkyWalking

The official distribution does not ship a shutdown script (see issue 4698), so the processes have to be killed manually.

1. Stop the OAP server (the backend the agents report to)

# We configured 11800, so find the process using port 11800

lsof -i:11800

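# Kill that process by its PID (34956 here; use the PID printed by lsof). pkill matches process names, not PIDs, so use kill

kill -9 34956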

2. Stop the SkyWalking UI

# The UI listens on port 8080 by default; kill expects a PID, so look up the PID on that port with lsof -t

kill -9 $(lsof -ti:8080)
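Both manual steps follow the same pattern: find the PID listening on a port, then kill it. The bash sketch below does both, sending a normal SIGTERM first and falling back to kill -9 only if the process is still alive a few seconds later. stop_port is a hypothetical helper; like the commands above, it relies on lsof.

```bash
# Sketch: stop whatever is listening on a TCP port, gently first.
stop_port() {
  local port="$1" pids pid
  # -t: print PIDs only; -sTCP:LISTEN: the listening server, not its clients
  pids="$(lsof -t -iTCP:"$port" -sTCP:LISTEN 2>/dev/null)"
  if [[ -z "$pids" ]]; then
    echo "nothing is listening on port ${port}" >&2
    return 1
  fi
  for pid in $pids; do
    kill "$pid" 2>/dev/null          # SIGTERM lets the JVM shut down cleanly
  done
  sleep 3
  for pid in $pids; do
    # kill -0 sends no signal; it only checks whether the process still exists
    if kill -0 "$pid" 2>/dev/null; then
      kill -9 "$pid"
    fi
  done
}

# Usage:
#   stop_port 11800   # OAP (the same process also serves REST on 12800)
#   stop_port 8080    # SkyWalking UI
```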

Stopping Elasticsearch

brew services stop elasticsearch-full

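Summary

- We quickly used SkyWalking to do basic monitoring of a Spring Cloud microservice architecture.

- How do you use the alerting feature?

- The calls above are only between microservices, and fairly simple. For microservice clusters, such as a registry cluster, a gateway cluster, and business clusters (one real business service running on multiple instance nodes), how can the services in a cluster be integrated with SkyWalking smoothly and be monitored?

- For cluster configuration, how can a configuration center be used to keep the configuration simple and easy to use?

- Since the gateway cluster may sit behind an Nginx cluster, how can the Nginx cluster also be brought under SkyWalking's monitoring?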