Decoding and playing a TS stream with FFmpeg + SDL in VS2017+

VS2017 environment setup

Download both the FFmpeg dev package and the shared package.

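In the project properties, under VC++ Directories, add the include folder from the dev package to Include Directories and its lib folder to Library Directories.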

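Next, copy the eight DLL files from the bin folder of the shared package into the project's Debug output folder, that is, the folder that contains the .exe.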

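Then, in the project property pages under Linker > Input > Additional Dependencies, add the import libraries that correspond to the eight DLLs you just copied (the .lib files from the dev package, not the DLL names). Keep any entries that are already there: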

avcodec.lib;avdevice.lib;avfilter.lib;avformat.lib;avutil.lib;postproc.lib;swresample.lib;swscale.lib;

SDL is set up in Visual Studio the same way.

Things to watch out for:

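The FFmpeg build must match the platform you build for: use the 64-bit FFmpeg for an x64 target and the 32-bit FFmpeg for an x86 (Win32) target.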

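If your project references 32-bit DLLs (built with C++), you can only target the 32-bit platform; otherwise you will also get errors. The whole project must use one platform consistently.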


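To check whether the environment is configured, include the headers: if Visual Studio shows no red squiggles, the include directories are set up correctly. The library settings are only confirmed once a program that calls FFmpeg functions links and runs.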

extern "C" {

#include "libavutil/imgutils.h"

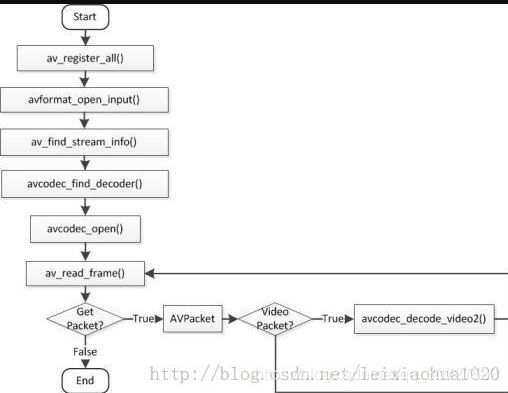

#include av_register_all();

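2. Open the video file or stream

avformat_open_input(&p_fmt_ctx, url, NULL, NULL) opens url (a file path or stream address), reads its header into p_fmt_ctx and returns a negative value on failure.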

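3. Read the stream information

avformat_find_stream_info(p_fmt_ctx, NULL) reads some packets to fill in the parameters of each stream, which matters for formats without a global header such as MPEG-TS.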

4. Find the first video stream and take the decoder parameters (codecpar) from it

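    int v_idx = -1;
    double frame_rate = 0.0;
    for (unsigned int i = 0; i < p_fmt_ctx->nb_streams; i++)
    {
        if (p_fmt_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            v_idx = (int)i;
            printf("Found a video stream, index %d\n", v_idx);
            // avg_frame_rate is an AVRational: integer division would truncate
            // 30000/1001 to 29 and divide by zero when the rate is unknown (den == 0)
            AVRational fr = p_fmt_ctx->streams[i]->avg_frame_rate;
            frame_rate = fr.den ? av_q2d(fr) : 0.0;
            break;
        }
    }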
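
Because avg_frame_rate is a rational number, computing it as num / den in integer arithmetic truncates (the NTSC rate 30000/1001 becomes 29) and fails when the denominator is 0. FFmpeg's av_q2d() does the division in floating point. The sketch below mirrors that conversion in plain C so it compiles without FFmpeg, and derives a per-frame delay that a simple SDL player could pass to SDL_Delay(). The struct rational type, both function names and the 40 ms fallback are hypothetical choices for illustration.

```c
/* Hypothetical stand-in for FFmpeg's AVRational { int num; int den; }. */
struct rational {
    int num;
    int den;
};

/* Like av_q2d() (num / den computed in floating point), but returns 0.0
   instead of inf/NaN when den is 0, i.e. when the frame rate is unknown. */
double rational_to_double(struct rational r)
{
    if (r.den == 0)
        return 0.0;
    return (double)r.num / (double)r.den;
}

/* Milliseconds to wait between frames, rounded to the nearest millisecond.
   Falls back to a hypothetical default of 40 ms (25 fps) when the rate is unknown. */
int frame_delay_ms(struct rational frame_rate)
{
    double fps = rational_to_double(frame_rate);
    if (fps <= 0.0)
        return 40;
    return (int)(1000.0 / fps + 0.5);
}
```

For 30000/1001 this gives about 29.97 fps and a 33 ms delay, and for 25/1 a 40 ms delay. A fixed per-frame sleep ignores the time spent decoding, so it only approximates real-time playback; players that need accurate timing schedule frames by their timestamps instead.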

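Alternatively, call

av_find_best_stream(p_fmt_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0), which returns the index of the best video stream or a negative error code.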

5. Find the decoder

avcodec_find_decoder(p_fmt_ctx->streams[v_idx]->codecpar->codec_id)

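6. Open the decoder

First allocate a codec context with avcodec_alloc_context3(p_codec) and copy the stream parameters into it with avcodec_parameters_to_context(p_codec_ctx, p_fmt_ctx->streams[v_idx]->codecpar), then open it:

avcodec_open2(p_codec_ctx, p_codec, NULL)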

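7. Allocate memory for the decoded frame

av_frame_alloc() (older tutorials use avcodec_alloc_frame(), which has been removed from current FFmpeg). The packet that av_read_frame() fills can be allocated with av_packet_alloc().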

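8. Repeatedly read packets from the stream

av_read_frame(p_fmt_ctx, p_packet) returns one compressed packet per call and a negative value at end of input or on error. A TS stream also carries audio and other packets, so only pass on packets whose stream_index equals v_idx, and release each packet with av_packet_unref() after use.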

9. Send the packet to the decoder

avcodec_send_packet(p_codec_ctx, p_packet) // older versions: avcodec_decode_video2()

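10. Receive the decoder output and process the video frame; each successful call yields one decoded frame

avcodec_receive_frame(p_codec_ctx, p_frame) // older versions: avcodec_decode_video2()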

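The old decoding function avcodec_decode_video2() was split into avcodec_send_packet() and avcodec_receive_frame() in FFmpeg 3.1. One packet can produce zero or more frames: avcodec_receive_frame() returns AVERROR(EAGAIN) when the decoder needs more input and AVERROR_EOF once it has been fully drained, so call it in a loop after every send. At the end of the input, send a NULL packet to flush out frames still buffered inside the decoder.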
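
avcodec_send_packet() and avcodec_receive_frame() behave like a small state machine. The sketch below simulates that contract in plain C so it compiles without FFmpeg. struct mock_decoder, the MOCK_* status codes (standing in for 0, AVERROR(EAGAIN) and AVERROR_EOF) and decode_all() are all hypothetical, and the fixed two-frame delay is an assumption chosen to imitate a decoder that holds frames back. It shows the calling pattern: after each send, receive until EAGAIN; at the end of input, send a NULL packet and receive until EOF.

```c
#include <stdio.h>

/* Hypothetical status codes standing in for 0, AVERROR(EAGAIN) and AVERROR_EOF. */
enum { MOCK_OK = 0, MOCK_EAGAIN = -1, MOCK_EOF = -2 };

#define MOCK_DELAY 2 /* assumed number of frames the mock holds back */

/* Hypothetical mock decoder: each packet becomes one frame, but a frame is only
   released once MOCK_DELAY newer packets have arrived, or while draining. */
struct mock_decoder {
    int buffered[MOCK_DELAY + 1]; /* packet ids waiting to become frames */
    int count;                    /* number of buffered entries */
    int draining;                 /* set after a NULL (flush) packet */
};

/* Mirrors the avcodec_send_packet() contract: pkt == NULL starts draining. */
int mock_send_packet(struct mock_decoder *d, const int *pkt)
{
    if (d->draining)
        return MOCK_EOF;
    if (pkt == NULL) {
        d->draining = 1;
        return MOCK_OK;
    }
    if (d->count > MOCK_DELAY)
        return MOCK_EAGAIN; /* caller must receive frames first */
    d->buffered[d->count++] = *pkt;
    return MOCK_OK;
}

/* Mirrors the avcodec_receive_frame() contract: MOCK_EAGAIN when more input
   is needed, MOCK_EOF once everything has been drained. */
int mock_receive_frame(struct mock_decoder *d, int *frame)
{
    if (d->count == 0)
        return d->draining ? MOCK_EOF : MOCK_EAGAIN;
    if (!d->draining && d->count <= MOCK_DELAY)
        return MOCK_EAGAIN;
    *frame = d->buffered[0];
    for (int i = 1; i < d->count; i++)
        d->buffered[i - 1] = d->buffered[i];
    d->count--;
    return MOCK_OK;
}

/* The send/receive loop. With flush != 0 a final NULL packet drains the
   decoder. Returns the number of frames received, or -1 on an error. */
int decode_all(const int *packets, int n, int flush)
{
    struct mock_decoder dec = {{0}, 0, 0};
    int frame, decoded = 0;
    int steps = n + (flush ? 1 : 0);

    for (int i = 0; i < steps; i++) {
        const int *pkt = (i < n) ? &packets[i] : NULL; /* i == n: flush packet */
        if (mock_send_packet(&dec, pkt) != MOCK_OK)
            return -1;
        int ret;
        while ((ret = mock_receive_frame(&dec, &frame)) == MOCK_OK) {
            printf("frame from packet %d\n", frame);
            decoded++;
        }
        if (ret != MOCK_EAGAIN && ret != MOCK_EOF)
            return -1;
    }
    return decoded;
}
```

With five packets, decode_all(pk, 5, 1) returns 5, while decode_all(pk, 5, 0) returns 3: without the final NULL packet the last two frames stay inside the mock decoder. Real decoders hold back different numbers of frames, but the same loop shape applies.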

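That completes the decoding flow; what follows is handling the decoded data. av_write_frame() writes encoded packets into an output file, so decoded frames must be encoded again before they can be written that way. Alternatively, the frames can be displayed with SDL or a similar library.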
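
When displaying with SDL, decoded frames are commonly converted to YUV420P with sws_scale() into a buffer sized with av_image_get_buffer_size() (declared in the libavutil/imgutils.h header included earlier), and then uploaded to a texture with SDL_UpdateYUVTexture(). For AV_PIX_FMT_YUV420P with alignment 1, that buffer should hold a full-resolution Y plane followed by U and V planes at half width and half height, rounded up for odd sizes. The sketch below reproduces only that arithmetic in plain C; yuv420p_buffer_size() and yuv420p_plane_offsets() are hypothetical helpers, not FFmpeg functions.

```c
#include <stddef.h>

/* Hypothetical helper: bytes for one YUV420P picture without row padding,
   the layout assumed to match av_image_get_buffer_size(AV_PIX_FMT_YUV420P, w, h, 1). */
size_t yuv420p_buffer_size(int width, int height)
{
    size_t luma = (size_t)width * (size_t)height;
    /* Chroma is subsampled 2x horizontally and vertically, rounding up for odd sizes. */
    size_t chroma_w = (size_t)(width + 1) / 2;
    size_t chroma_h = (size_t)(height + 1) / 2;
    return luma + 2 * chroma_w * chroma_h; /* Y + U + V */
}

/* Hypothetical helper: byte offsets of the U and V planes inside that buffer. */
void yuv420p_plane_offsets(int width, int height, size_t *u_offset, size_t *v_offset)
{
    size_t luma = (size_t)width * (size_t)height;
    size_t chroma = (size_t)((width + 1) / 2) * (size_t)((height + 1) / 2);
    *u_offset = luma;
    *v_offset = luma + chroma;
}
```

For a 1920x1080 frame this gives 3110400 bytes, with the U plane starting at byte 2073600 and the V plane at byte 2592000; the matching SDL_UpdateYUVTexture() pitches would be 1920 for Y and 960 for U and V.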

#include