[Deep Learning] Attention Mechanisms (Part 1)

This post walks through implementations of several attention mechanisms: SE, ECA, GE, A2-Net, GC, and CBAM.

Contents

1. SE (Squeeze-and-Excitation)

2. ECA (Efficient Channel Attention)

3. GE (Gather-Excite)

4. A2-Net (Double Attention Networks)

5. GCNet (Global Context)

6. CBAM (Convolutional Block Attention Module)

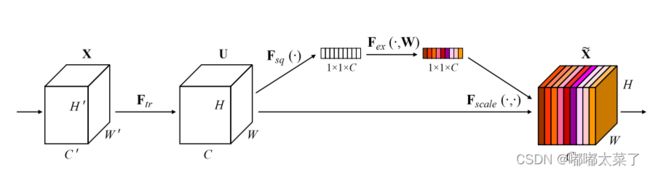

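1. SE (Squeeze-and-Excitation)

SE is a channel attention mechanism (paper: link).

The SE module works as follows:

1. The input feature map goes through adaptive average pooling to become an (N, C, 1, 1) tensor, which is then reshaped to (N, C).

2. Fully connected layers with ReLU and sigmoid activations turn it into per-channel weights.

3. The weights are multiplied with the input feature map.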
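The three steps above can be traced shape by shape with plain tensor operations. This is only a minimal sketch: `w1` and `w2` are hypothetical random stand-ins for the two learned fully connected weight matrices, and the sizes are arbitrary.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
n, c, h, w, reduction = 2, 32, 8, 8, 16
x = torch.randn(n, c, h, w)

# Hypothetical stand-ins for the two learned FC weight matrices (no bias).
w1 = torch.randn(c // reduction, c) * 0.1
w2 = torch.randn(c, c // reduction) * 0.1

# Step 1: squeeze. Global average pooling gives (N, C, 1, 1), flattened to (N, C).
squeezed = F.adaptive_avg_pool2d(x, 1).flatten(1)

# Step 2: excitation. FC -> ReLU -> FC -> sigmoid yields one weight per channel.
weights = torch.sigmoid(F.linear(F.relu(F.linear(squeezed, w1)), w2))

# Step 3: rescale every channel of the input by its weight (broadcast over H, W).
out = x * weights.view(n, c, 1, 1)

print(squeezed.shape, weights.shape, out.shape)
```

Because the weights come out of a sigmoid, each channel is scaled by a factor strictly between 0 and 1.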

See the figure below:

PyTorch implementation:

import torch
from torch import nn
from torch.nn import init


class SEAttention(nn.Module):

    def __init__(self, channel=512, reduction=16):
        super().__init__()
        # Squeeze: global average pooling down to (N, C, 1, 1)
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        # Excitation: bottleneck MLP that outputs one weight per channel
        self.fc = nn.Sequential(
            nn.Linear(channel, channel // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(channel // reduction, channel, bias=False),
            nn.Sigmoid()
        )

    def init_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                init.kaiming_normal_(m.weight, mode='fan_out')
                if m.bias is not None:
                    init.constant_(m.bias, 0)
            elif isinstance(m, nn.BatchNorm2d):
                init.constant_(m.weight, 1)
                init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                init.normal_(m.weight, std=0.001)
                if m.bias is not None:
                    init.constant_(m.bias, 0)

    def forward(self, x):
        b, c, _, _ = x.size()
        y = self.avg_pool(x).view(b, c)       # (N, C)
        y = self.fc(y).view(b, c, 1, 1)       # (N, C, 1, 1)
        return x * y.expand_as(x)

2. ECA (Efficient Channel Attention)

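ECA is a channel attention mechanism (paper: link).

The ECA module works as follows:

1. Adaptive average pooling reduces the (N, C, H, W) feature map to (N, C, 1, 1), which is then rearranged to (N, 1, C) (pooling, squeeze, transpose).

2. A 1D convolution slides along the channel dimension and produces an (N, 1, C) tensor, which is rearranged back to (N, C, 1, 1) (transpose, unsqueeze).

3. A sigmoid turns this tensor into (N, C, 1, 1) weights, which are multiplied with the original feature map.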
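The reshaping in these steps is easy to get wrong, so here is a minimal shape trace. The tensor sizes and the randomly initialized `conv` are arbitrary choices for illustration, not values taken from the paper.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
x = torch.randn(2, 16, 8, 8)                       # (N, C, H, W)
conv = nn.Conv1d(1, 1, kernel_size=3, padding=1, bias=False)

shapes = []
y = F.adaptive_avg_pool2d(x, 1)                    # (N, C, 1, 1)
shapes.append(tuple(y.shape))
y = y.squeeze(-1).transpose(-1, -2)                # (N, 1, C): channels become the 1D sequence
shapes.append(tuple(y.shape))
y = conv(y)                                        # (N, 1, C): each output mixes 3 neighboring channels
shapes.append(tuple(y.shape))
y = y.transpose(-1, -2).unsqueeze(-1)              # (N, C, 1, 1)
shapes.append(tuple(y.shape))
out = x * torch.sigmoid(y)                         # (N, C, H, W)

print(shapes, tuple(out.shape))
```

Unlike SE, there is no bottleneck here: the only learned parameters are the `k_size` weights of the 1D kernel, shared across all channels.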

See the figure below:

PyTorch code:

import torch
from torch import nn


class ECALayer(nn.Module):
    """Constructs a ECA module.

    Args:
        channel: Number of channels of the input feature map
        k_size: Adaptive selection of kernel size
    """

    def __init__(self, channel, k_size=3):
        super().__init__()
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.conv = nn.Conv1d(1, 1, kernel_size=k_size, padding=(k_size - 1) // 2, bias=False)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # feature descriptor on the global spatial information: (N, C, 1, 1)
        y = self.avg_pool(x)
        # 1D convolution across channels: (N, 1, C) -> (N, 1, C), then back to (N, C, 1, 1)
        y = self.conv(y.squeeze(-1).transpose(-1, -2)).transpose(-1, -2).unsqueeze(-1)
        # per-channel weights in (0, 1)
        y = self.sigmoid(y)
        return x * y.expand_as(x)
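The docstring describes `k_size` as adaptively selected, but the listing leaves it at a fixed default of 3. As far as I recall, the ECA paper derives the kernel size from the channel count C as the odd number closest to log2(C)/γ + b/γ, with γ = 2 and b = 1. The helper below is a sketch under that assumption; the name `eca_kernel_size` is made up here, and the rounding (truncate, then bump even values up by one) is my reading of the rule.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Hypothetical helper: pick an odd 1D kernel size from the channel count."""
    t = int(abs((math.log2(channels) + b) / gamma))
    # The kernel must be odd so that padding=(k - 1) // 2 keeps the length C unchanged.
    return t if t % 2 else t + 1


print([eca_kernel_size(c) for c in (64, 128, 256, 512)])  # [3, 5, 5, 5]
```

The result can be passed straight in as `k_size` when constructing the layer, so that wider layers get a wider cross-channel interaction range.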

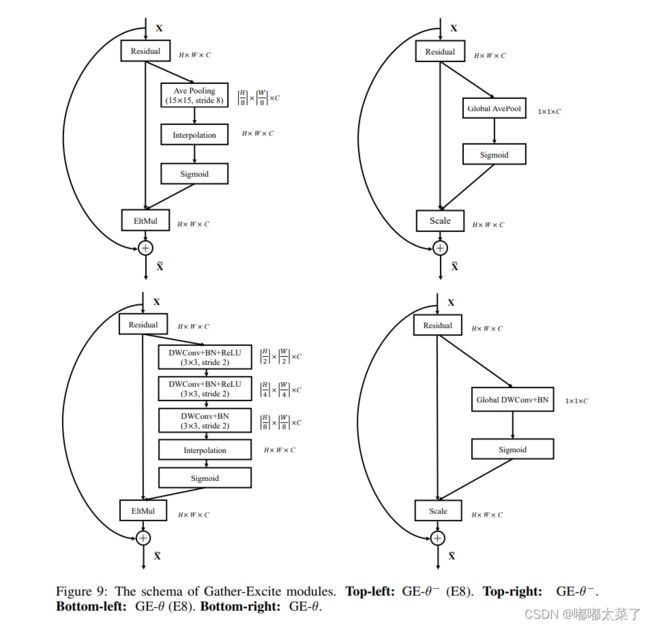

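3. GE (Gather-Excite)

GE is a spatial attention mechanism (paper: link).

The mechanism is fairly simple and comes in four variants. The overall flow is easiest to understand from the figure, so it is not described in detail here.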
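For intuition, the simplest case can be written in a few lines. The sketch below assumes a parameter-free variant: no learned gather operator and no MLP, only average pooling, upsampling and a sigmoid gate. The function name `gather_excite_no_params` is made up for this example; the kernel size and stride follow the same convention as the timm implementation quoted in this section.

```python
import torch
import torch.nn.functional as F


def gather_excite_no_params(x, extent=0):
    """Parameter-free gather-excite sketch (assumption: no learned weights at all)."""
    size = x.shape[-2:]
    if extent == 0:
        # Global extent: gather over the whole feature map, result is (N, C, 1, 1).
        gathered = x.mean(dim=(2, 3), keepdim=True)
    else:
        # Local extent: average over a (2 * extent - 1) window with stride `extent`.
        k = extent * 2 - 1
        gathered = F.avg_pool2d(
            x, kernel_size=k, stride=extent, padding=k // 2, count_include_pad=False)
        # Excite: resize the gathered map back to the input resolution (nearest neighbor).
        gathered = F.interpolate(gathered, size=size)
    return x * torch.sigmoid(gathered)


x = torch.randn(2, 4, 8, 8)
out_global = gather_excite_no_params(x, extent=0)
out_local = gather_excite_no_params(x, extent=2)
print(out_global.shape, out_local.shape)
```

With `extent=0` this reduces to an SE-style gate without the MLP; with a larger `extent` each location is gated by the average of its own neighborhood.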

The module is readily available through timm. The timm source is:

import math

from torch import nn as nn

import torch.nn.functional as F

from .create_act import create_act_layer, get_act_layer

from .create_conv2d import create_conv2d

from .helpers import make_divisible

from .mlp import ConvMlp

class GatherExcite(nn.Module):

""" Gather-Excite Attention Module

"""

def __init__(

self, channels, feat_size=None, extra_params=False, extent=0, use_mlp=True,

rd_ratio=1./16, rd_channels=None, rd_divisor=1, add_maxpool=False,

act_layer=nn.ReLU, norm_layer=nn.BatchNorm2d, gate_layer='sigmoid'):

super(GatherExcite, self).__init__()

self.add_maxpool = add_maxpool

act_layer = get_act_layer(act_layer)

self.extent = extent

if extra_params:

self.gather = nn.Sequential()

if extent == 0:

assert feat_size is not None, 'spatial feature size must be specified for global extent w/ params'

self.gather.add_module(

'conv1', create_conv2d(channels, channels, kernel_size=feat_size, stride=1, depthwise=True))

if norm_layer:

self.gather.add_module(f'norm1', nn.BatchNorm2d(channels))

else:

assert extent % 2 == 0

num_conv = int(math.log2(extent))

for i in range(num_conv):

self.gather.add_module(

f'conv{i + 1}',

create_conv2d(channels, channels, kernel_size=3, stride=2, depthwise=True))

if norm_layer:

self.gather.add_module(f'norm{i + 1}', nn.BatchNorm2d(channels))

if i != num_conv - 1:

self.gather.add_module(f'act{i + 1}', act_layer(inplace=True))

else:

self.gather = None

if self.extent == 0:

self.gk = 0

self.gs = 0

else:

assert extent % 2 == 0

self.gk = self.extent * 2 - 1

self.gs = self.extent

if not rd_channels:

rd_channels = make_divisible(channels * rd_ratio, rd_divisor, round_limit=0.)

self.mlp = ConvMlp(channels, rd_channels, act_layer=act_layer) if use_mlp else nn.Identity()

self.gate = create_act_layer(gate_layer)

def forward(self, x):

size = x.shape[-2:]

if self.gather is not None:

x_ge = self.gather(x)

else:

if self.extent == 0:

# global extent

x_ge = x.mean(dim=(2, 3), keepdims=True)

if self.add_maxpool:

# experimental codepath, may remove or change

x_ge = 0.5 * x_ge + 0.5 * x.amax((2, 3), keepdim=True)

else:

x_ge = F.avg_pool2d(

x, kernel_size=self.gk, stride=self.gs, padding=self.gk // 2, count_include_pad=False)

if self.add_maxpool:

# experimental codepath, may remove or change

x_ge = 0.5 * x_ge + 0.5 * F.max_pool2d(x, kernel_size=self.gk, stride=self.gs, padding=self.gk // 2)

x_ge = self.mlp(x_ge)

if x_ge.shape[-1] != 1 or x_ge.shape[-2] != 1:

x_ge = F.interpolate(x_ge, size=size)

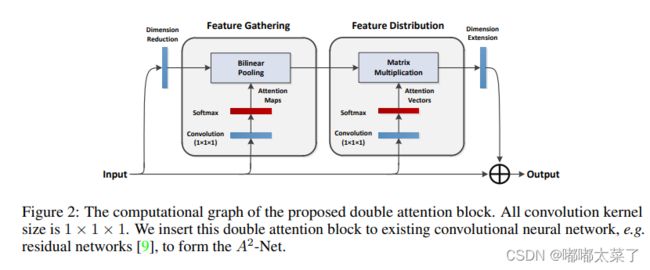

return x * self.gate(x_ge)四、A2-Net(Double Attention Networks)

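Double Attention Networks (A2-Nets) introduce a new relation function for the non-local (NL) block by applying two attention blocks in sequence (paper: link).

The computation is similar to a self-attention module and is easiest to follow alongside the code.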
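To make the parallel with self-attention explicit, the two stages can be written as two batched matrix products: a gather step that builds a small set of global descriptors, and a distribute step that hands them back to every position. This is a sketch, not the paper's reference code. The function name `double_attention` is made up, the inputs `a`, `b`, `v` stand in for the outputs of three 1x1 convolutions, and the softmax axes and the division by H*W are chosen to mirror the listing in this section.

```python
import torch
import torch.nn.functional as F


def double_attention(a, b, v):
    """a, b, v: (N, C, H, W) maps, hypothetical stand-ins for three 1x1 conv outputs."""
    n, c, h, w = a.shape
    a = a.flatten(2)                                   # (N, C, HW)
    attn_maps = F.softmax(b.flatten(2), dim=-1)        # (N, C, HW), softmax over positions
    attn_vecs = F.softmax(v.flatten(2), dim=-1)        # (N, C, HW), softmax over positions

    # Gather: descriptors[n, i, j] = mean over positions of a[n, i, :] * attn_maps[n, j, :]
    descriptors = torch.bmm(a, attn_maps.transpose(1, 2)) / (h * w)   # (N, C, C)
    # Distribute: every position receives a mix of descriptors weighted by attn_vecs
    out = torch.bmm(descriptors, attn_vecs)            # (N, C, HW)
    return out.view(n, c, h, w)


torch.manual_seed(0)
a, b, v = (torch.randn(2, 4, 3, 5) for _ in range(3))
out = double_attention(a, b, v)
print(out.shape)
```

Written this way, the intermediate tensor is only (N, C, C) instead of the (N, C, C, HW) tensor created by broadcasting, which matters for large feature maps.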

See the figure below:

The code is as follows:

import torch

import torch.nn as nn

import torch.nn.functional as F

class DoubleAtten(nn.Module):

"""

A2-Nets: Double Attention Networks. NIPS 2018

"""

def __init__(self,in_c):

"""

:param

in_c: 进行注意力refine的特征图的通道数目;

原文中的降维和升维没有使用

"""

super(DoubleAtten,self).__init__()

self.in_c = in_c

"""

以下对同一输入特征图进行卷积,产生三个尺度相同的特征图,即为文中提到A, B, V

"""

self.convA = nn.Conv2d(in_c,in_c,kernel_size=1)

self.convB = nn.Conv2d(in_c,in_c,kernel_size=1)

self.convV = nn.Conv2d(in_c,in_c,kernel_size=1)

def forward(self,input):

feature_maps = self.convA(input)

atten_map = self.convB(input)

b, _, h, w = feature_maps.shape

feature_maps = feature_maps.view(b, 1, self.in_c, h*w) # 对 A 进行reshape

atten_map = atten_map.view(b, self.in_c, 1, h*w) # 对 B 进行reshape 生成 attention_aps

global_descriptors = torch.mean((feature_maps * F.softmax(atten_map, dim=-1)),dim=-1) # 特征图与attention_maps 相乘生成全局特征描述子

v = self.convV(input)

atten_vectors = F.softmax(v.view(b, self.in_c, h*w), dim=-1) # 生成 attention_vectors

out = torch.bmm(atten_vectors.permute(0,2,1), global_descriptors).permute(0,2,1) # 注意力向量左乘全局特征描述子

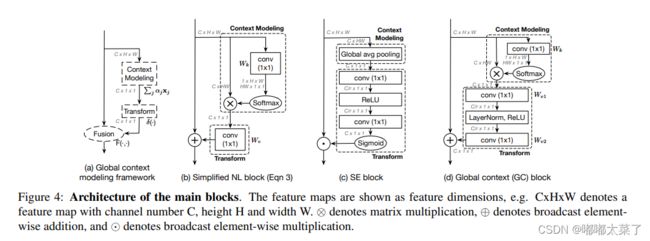

return out.view(b, _, h, w)五、GCNet(Global Context)

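The Global Context Network (GC-Net) combines the NL block and the SE block, using fairly involved permutation-based operations, in order to capture long-range dependencies (paper: link).

The GC block can be viewed as an improvement on SE, as illustrated in the figure.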
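One way to see the relationship to SE: SE squeezes the feature map with a plain global average, while the GC block's attention pooling replaces that average with a softmax-weighted sum whose weights come from a learned 1x1 convolution. The sketch below is an assumption-laden illustration (the function name `attention_pool` is made up). It zeroes the convolution so that the softmax becomes uniform, at which point the two poolings coincide.

```python
import torch
from torch import nn


def attention_pool(x, conv_mask):
    """Softmax-weighted global pooling: (N, C, H, W) -> (N, C, 1, 1)."""
    n, c, h, w = x.shape
    mask = conv_mask(x).view(n, 1, h * w)               # one score per position
    mask = torch.softmax(mask, dim=-1)                  # weights over positions sum to 1
    context = torch.bmm(x.view(n, c, h * w), mask.transpose(1, 2))  # (N, C, 1)
    return context.view(n, c, 1, 1)


torch.manual_seed(0)
x = torch.randn(2, 8, 4, 4)
conv_mask = nn.Conv2d(8, 1, kernel_size=1)

# With all-zero scores the softmax is uniform, so attention pooling equals average pooling.
nn.init.zeros_(conv_mask.weight)
nn.init.zeros_(conv_mask.bias)
with torch.no_grad():
    context = attention_pool(x, conv_mask)
same_as_avg = torch.allclose(context, x.mean(dim=(2, 3), keepdim=True), atol=1e-6)
print(context.shape, same_as_avg)
```

Once `conv_mask` is trained, positions with higher scores contribute more to the context vector, which is the non-local ingredient that SE lacks.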

The initialization in this implementation depends on mmcv. The code is as follows:

import torch
from mmcv.cnn import constant_init, kaiming_init
from torch import nn


def last_zero_init(m):
    if isinstance(m, nn.Sequential):
        constant_init(m[-1], val=0)
    else:
        constant_init(m, val=0)


class ContextBlock(nn.Module):

    def __init__(self,
                 inplanes,
                 ratio,
                 pooling_type='att',
                 fusion_types=('channel_add', )):
        super(ContextBlock, self).__init__()
        assert pooling_type in ['avg', 'att']
        assert isinstance(fusion_types, (list, tuple))
        valid_fusion_types = ['channel_add', 'channel_mul']
        assert all([f in valid_fusion_types for f in fusion_types])
        assert len(fusion_types) > 0, 'at least one fusion should be used'
        self.inplanes = inplanes
        self.ratio = ratio
        self.planes = int(inplanes * ratio)
        self.pooling_type = pooling_type
        self.fusion_types = fusion_types
        if pooling_type == 'att':
            self.conv_mask = nn.Conv2d(inplanes, 1, kernel_size=1)
            self.softmax = nn.Softmax(dim=2)
        else:
            self.avg_pool = nn.AdaptiveAvgPool2d(1)
        if 'channel_add' in fusion_types:
            self.channel_add_conv = nn.Sequential(
                nn.Conv2d(self.inplanes, self.planes, kernel_size=1),
                nn.LayerNorm([self.planes, 1, 1]),
                nn.ReLU(inplace=True),  # yapf: disable
                nn.Conv2d(self.planes, self.inplanes, kernel_size=1))
        else:
            self.channel_add_conv = None
        if 'channel_mul' in fusion_types:
            self.channel_mul_conv = nn.Sequential(
                nn.Conv2d(self.inplanes, self.planes, kernel_size=1),
                nn.LayerNorm([self.planes, 1, 1]),
                nn.ReLU(inplace=True),  # yapf: disable
                nn.Conv2d(self.planes, self.inplanes, kernel_size=1))
        else:
            self.channel_mul_conv = None
        self.reset_parameters()

    def reset_parameters(self):
        if self.pooling_type == 'att':
            kaiming_init(self.conv_mask, mode='fan_in')
            self.conv_mask.inited = True

        if self.channel_add_conv is not None:
            last_zero_init(self.channel_add_conv)
        if self.channel_mul_conv is not None:
            last_zero_init(self.channel_mul_conv)

    def spatial_pool(self, x):
        batch, channel, height, width = x.size()
        if self.pooling_type == 'att':
            input_x = x
            # [N, C, H * W]
            input_x = input_x.view(batch, channel, height * width)
            # [N, 1, C, H * W]
            input_x = input_x.unsqueeze(1)
            # [N, 1, H, W]
            context_mask = self.conv_mask(x)
            # [N, 1, H * W]
            context_mask = context_mask.view(batch, 1, height * width)
            # [N, 1, H * W]
            context_mask = self.softmax(context_mask)
            # [N, 1, H * W, 1]
            context_mask = context_mask.unsqueeze(-1)
            # [N, 1, C, 1]
            context = torch.matmul(input_x, context_mask)
            # [N, C, 1, 1]
            context = context.view(batch, channel, 1, 1)
        else:
            # [N, C, 1, 1]
            context = self.avg_pool(x)

        return context

    def forward(self, x):
        # [N, C, 1, 1]
        context = self.spatial_pool(x)

        out = x
        if self.channel_mul_conv is not None:
            # [N, C, 1, 1]
            channel_mul_term = torch.sigmoid(self.channel_mul_conv(context))
            out = out * channel_mul_term
        if self.channel_add_conv is not None:
            # [N, C, 1, 1]
            channel_add_term = self.channel_add_conv(context)
            out = out + channel_add_term

        return out

6. CBAM (Convolutional Block Attention Module)

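CBAM is a combined channel and spatial attention mechanism (paper: link).

It is a straightforward fusion of channel attention and spatial attention, applied one after the other.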
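The key point is that the two attention maps have complementary shapes: the channel weights are (N, C, 1, 1) and the spatial weights are (N, 1, H, W), so both broadcast cleanly against the feature map. The sketch below traces this with untrained layers; the sizes, the reduction to 2 hidden channels, and the 7x7 kernel are arbitrary choices for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)
x = torch.randn(2, 32, 8, 8)                              # (N, C, H, W)

# Channel attention: a shared bottleneck MLP over max-pooled and avg-pooled descriptors.
mlp = nn.Sequential(
    nn.Conv2d(32, 2, 1, bias=False), nn.ReLU(), nn.Conv2d(2, 32, 1, bias=False))
max_desc = x.amax(dim=(2, 3), keepdim=True)               # (N, C, 1, 1)
avg_desc = x.mean(dim=(2, 3), keepdim=True)               # (N, C, 1, 1)
channel_w = torch.sigmoid(mlp(max_desc) + mlp(avg_desc))  # (N, C, 1, 1)
out = x * channel_w                                       # broadcast over H, W

# Spatial attention: max and mean across channels, stacked into a 2-channel map.
conv = nn.Conv2d(2, 1, kernel_size=7, padding=3)
stats = torch.cat(
    [out.amax(dim=1, keepdim=True), out.mean(dim=1, keepdim=True)], dim=1)  # (N, 2, H, W)
spatial_w = torch.sigmoid(conv(stats))                    # (N, 1, H, W)
out = out * spatial_w                                     # broadcast over C

print(channel_w.shape, stats.shape, spatial_w.shape, out.shape)
```

Channel attention answers "which feature maps matter", spatial attention answers "which locations matter", and applying them in sequence needs no reshaping at all.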

See the figure below:

The code is as follows:

import torch
from torch import nn
from torch.nn import init


class ChannelAttention(nn.Module):

    def __init__(self, channel, reduction=16):
        super().__init__()
        self.maxpool = nn.AdaptiveMaxPool2d(1)
        self.avgpool = nn.AdaptiveAvgPool2d(1)
        # Shared bottleneck MLP, implemented with 1x1 convolutions
        self.se = nn.Sequential(
            nn.Conv2d(channel, channel // reduction, 1, bias=False),
            nn.ReLU(),
            nn.Conv2d(channel // reduction, channel, 1, bias=False)
        )
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        max_result = self.maxpool(x)
        avg_result = self.avgpool(x)
        max_out = self.se(max_result)
        avg_out = self.se(avg_result)
        output = self.sigmoid(max_out + avg_out)  # (N, C, 1, 1)
        return output


class SpatialAttention(nn.Module):

    def __init__(self, kernel_size=7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size=kernel_size, padding=kernel_size // 2)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # Max and mean over the channel dimension, each (N, 1, H, W)
        max_result, _ = torch.max(x, dim=1, keepdim=True)
        avg_result = torch.mean(x, dim=1, keepdim=True)
        result = torch.cat([max_result, avg_result], 1)
        output = self.conv(result)
        output = self.sigmoid(output)  # (N, 1, H, W)
        return output


class CBAMBlock(nn.Module):

    # Note: kernel_size defaults to 49 in this listing; the CBAM paper uses a 7x7 kernel.
    def __init__(self, channel=512, reduction=16, kernel_size=49):
        super().__init__()
        self.ca = ChannelAttention(channel=channel, reduction=reduction)
        self.sa = SpatialAttention(kernel_size=kernel_size)

    def init_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                init.kaiming_normal_(m.weight, mode='fan_out')
                if m.bias is not None:
                    init.constant_(m.bias, 0)
            elif isinstance(m, nn.BatchNorm2d):
                init.constant_(m.weight, 1)
                init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                init.normal_(m.weight, std=0.001)
                if m.bias is not None:
                    init.constant_(m.bias, 0)

    def forward(self, x):
        b, c, _, _ = x.size()
        residual = x
        out = x * self.ca(x)      # channel attention first
        out = out * self.sa(out)  # then spatial attention
        return out + residual