NNDL 实验六 卷积神经网络(2)基础算子

目录

- 5.2 卷积神经网络的基础算子

-

- 5.2.1 卷积算子

-

- 5.2.1.1 多通道卷积

- 5.2.1.2 多通道卷积层算子

- 5.2.1.3 卷积算子的参数量和计算量

- 5.2.2 汇聚层算子

- 选做题:使用pytorch实现Convolution Demo

- 总结

5.2 卷积神经网络的基础算子

5.2.1 卷积算子

卷积层是指用卷积操作来实现神经网络中一层。

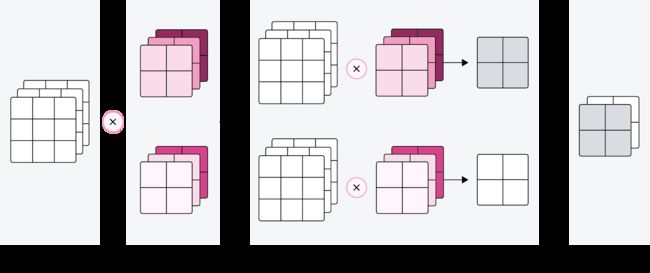

为了提取不同种类的特征,通常会使用多个卷积核一起进行特征提取。

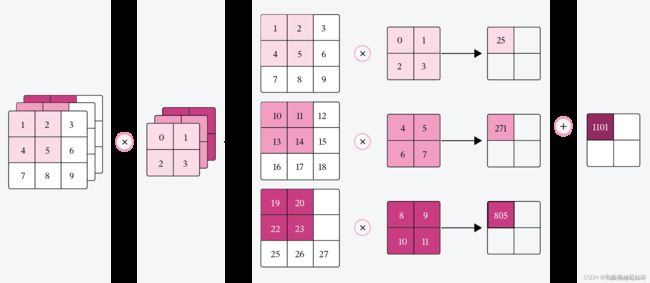

5.2.1.1 多通道卷积

5.2.1.2 多通道卷积层算子

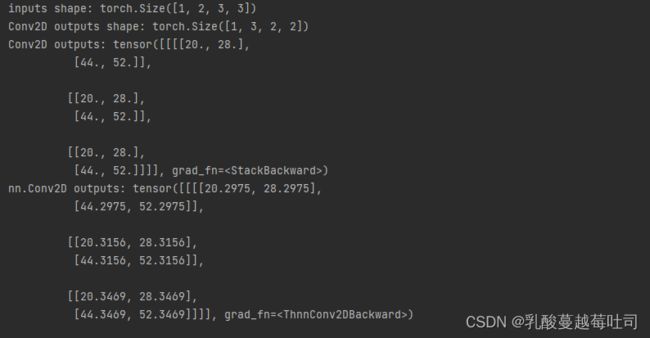

- 多通道卷积卷积层的代码实现

- Pytorch:torch.nn.Conv2d()代码实现

- 比较自定义算子和框架中的算子

import torch

import torch.nn as nn

class Conv2D(nn.Module):

def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, weight_attr=[], bias_attr=[]):

super(Conv2D, self).__init__()

# 创建卷积核

weight_attr = torch.randn([out_channels, in_channels, kernel_size, kernel_size])

weight_attr = torch.nn.init.constant(torch.tensor(weight_attr, dtype=torch.float32), val=1.0)

self.weight = torch.nn.Parameter(weight_attr)

# 创建偏置

bias_attr = torch.zeros([out_channels, 1])

bias_attr = torch.tensor(bias_attr, dtype=torch.float32)

self.bias = torch.nn.Parameter(bias_attr)

self.stride = stride

self.padding = padding

# 输入通道数

self.in_channels = in_channels

# 输出通道数

self.out_channels = out_channels

# 基础卷积运算

def single_forward(self, X, weight):

# 零填充

new_X = torch.zeros([X.shape[0], X.shape[1] + 2 * self.padding, X.shape[2] + 2 * self.padding])

new_X[:, self.padding:X.shape[1] + self.padding, self.padding:X.shape[2] + self.padding] = X

u, v = weight.shape

output_w = (new_X.shape[1] - u) // self.stride + 1

output_h = (new_X.shape[2] - v) // self.stride + 1

output = torch.zeros([X.shape[0], output_w, output_h])

for i in range(0, output.shape[1]):

for j in range(0, output.shape[2]):

output[:, i, j] = torch.sum(

new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v] * weight, [1, 2])

return output

def forward(self, inputs):

"""

输入:

- inputs:输入矩阵,shape=[B, D, M, N]

- weights:P组二维卷积核,shape=[P, D, U, V]

- bias:P个偏置,shape=[P, 1]

"""

feature_maps = []

# 进行多次多输入通道卷积运算

p = 0

for w, b in zip(self.weight, self.bias): # P个(w,b),每次计算一个特征图Zp

multi_outs = []

# 循环计算每个输入特征图对应的卷积结果

for i in range(self.in_channels):

single = self.single_forward(inputs[:, i, :, :], w[i])

multi_outs.append(single)

# print("Conv2D in_channels:",self.in_channels,"i:",i,"single:",single.shape)

# 将所有卷积结果相加

feature_map = torch.sum(torch.stack(multi_outs), 0) + b # Zp

feature_maps.append(feature_map)

# print("Conv2D out_channels:",self.out_channels, "p:",p,"feature_map:",feature_map.shape)

p += 1

# 将所有Zp进行堆叠

out = torch.stack(feature_maps, 1)

return out

inputs = torch.tensor([[[[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]],

[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]]])

conv2d = Conv2D(in_channels=2, out_channels=3, kernel_size=2)

print("inputs shape:", inputs.shape)

outputs = conv2d(inputs)

print("Conv2D outputs shape:", outputs.shape)

# 比较与torch API运算结果

weight_attr = torch.ones([3, 2, 2, 2])

bias_attr = torch.zeros([3, 1])

bias_attr = torch.tensor(bias_attr, dtype=torch.float32)

conv2d_torch = nn.Conv2d(in_channels=2, out_channels=3, kernel_size=2, bias=True)

conv2d_torch.weight = torch.nn.Parameter(weight_attr)

outputs_torch = conv2d_torch(inputs)

# 自定义算子运算结果

print('Conv2D outputs:', outputs)

# torch API运算结果

print('nn.Conv2D outputs:', outputs_torch)

5.2.1.3 卷积算子的参数量和计算量

5.2.2 汇聚层算子

汇聚层的作用是进行特征选择,降低特征数量,从而减少参数数量。

由于汇聚之后特征图会变得更小,如果后面连接的是全连接层,可以有效地减小神经元的个数,节省存储空间并提高计算效率。

常用的汇聚方法有两种,分别是:平均汇聚、最大汇聚。

- 平均汇聚:将输入特征图划分为2×2大小的区域,对每个区域内的神经元活性值取平均值作为这个区域的表示;

- 最大汇聚:使用输入特征图的每个子区域内所有神经元的最大活性值作为这个区域的表示。

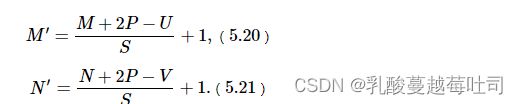

汇聚层输出的计算尺寸与卷积层一致,对于一个输入矩阵X∈RM×N和一个运算区域大小为U×V的汇聚层,步长为S,对输入矩阵进行零填充,那么最终输出矩阵大小则为

由于过大的采样区域会急剧减少神经元的数量,也会造成过多的信息丢失。目前,在卷积神经网络中比较典型的汇聚层是将每个输入特征图划分为2×2大小的不重叠区域,然后使用最大汇聚的方式进行下采样。

由于汇聚是使用某一位置的相邻输出的总体统计特征代替网络在该位置的输出,所以其好处是当输入数据做出少量平移时,经过汇聚运算后的大多数输出还能保持不变。比如:当识别一张图像是否是人脸时,我们需要知道人脸左边有一只眼睛,右边也有一只眼睛,而不需要知道眼睛的精确位置,这时候通过汇聚某一片区域的像素点来得到总体统计特征会显得很有用。这也就体现了汇聚层的平移不变特性。

-

代码实现一个简单的汇聚层。

-

torch.nn.MaxPool2d();torch.nn.avg_pool2d()代码实现

-

比较自定义算子和框架中的算子

import torch

import torch.nn as nn

class Pool2D(nn.Module):

def __init__(self, size=(2, 2), mode='max', stride=1):

super(Pool2D, self).__init__()

# 汇聚方式

self.mode = mode

self.h, self.w = size

self.stride = stride

def forward(self, x):

output_w = (x.shape[2] - self.w) // self.stride + 1

output_h = (x.shape[3] - self.h) // self.stride + 1

output = torch.zeros([x.shape[0], x.shape[1], output_w, output_h])

# 汇聚

for i in range(output.shape[2]):

for j in range(output.shape[3]):

# 最大汇聚

if self.mode == 'max':

value_m = max(torch.max(

x[:, :, self.stride * i:self.stride * i + self.w, self.stride * j:self.stride * j + self.h],

3).values[0][0])

output[:, :, i, j] = torch.tensor(value_m)

# 平均汇聚

elif self.mode == 'avg':

value_m = max(torch.mean(

x[:, :, self.stride * i:self.stride * i + self.w, self.stride * j:self.stride * j + self.h],

3)[0][0])

output[:, :, i, j] = torch.tensor(value_m)

return output

# 1.实现一个简单汇聚层

inputs = torch.tensor([[[[1., 2., 3., 4.], [5., 6., 7., 8.], [9., 10., 11., 12.], [13., 14., 15., 16.]]]])

pool2d = Pool2D(stride=2)

outputs = pool2d(inputs)

print("input: {}, \noutput: {}".format(inputs.shape, outputs.shape))

# 比较Maxpool2D与torch API运算结果

maxpool2d_torch = nn.MaxPool2d(kernel_size=(2, 2), stride=2)

outputs_torch = maxpool2d_torch(inputs)

# 自定义算子运算结果

print('Maxpool2D outputs:', outputs)

# torch API运算结果

print('nn.Maxpool2D outputs:', outputs_torch)

avgpool2d_torch = nn.AvgPool2d(kernel_size=(2, 2), stride=2)

outputs_torch = avgpool2d_torch(inputs)

pool2d = Pool2D(mode='avg', stride=2)

outputs = pool2d(inputs)

# 自定义算子运算结果

print('Avgpool2D outputs:', outputs)

# torch API运算结果

print('nn.Avgpool2D outputs:', outputs_torch)

- 由于汇聚层中没有参数,所以参数量为0;

- 最大汇聚中,没有乘加运算,所以计算量为0,

- 平均汇聚中,输出特征图上每个点都对应了一次求平均运算。

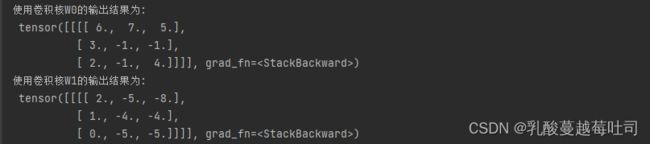

选做题:使用pytorch实现Convolution Demo

-

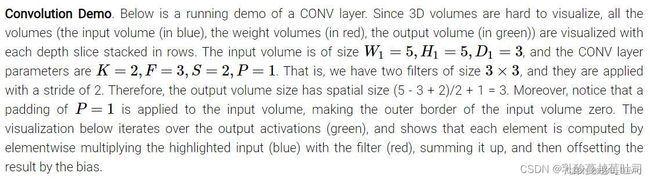

翻译以下内容

翻译:卷积演示。下面是一个正在运行的卷积层的示例,因为3D体积很难去可视化,所有的体积(输入体积(蓝色),权重体积(红色),输出体积(绿色))和每个深度层堆叠成行被可视化。输入体积是W1=5,H1=5,D1=3大小的,并且卷积层的参数K=2,F=3,S=2,P=1。就是说,我们有两个大小为3*3的卷积核(滤波器),并且他们的步长为2,因此,输出体积大小有空间大小为:(5-3+2)/2 +1 =3。此外,注意到padding(填充)的P=1被应用于输入体积,使得输入体积的外部边界为0。下面的可视化迭代输出激活(绿色),并且展示了每个元素的计算方法是将高亮的输入(蓝色)与过滤器(红色)逐个元素相乘,然后相加,用偏差抵消结果。

import torch

import torch.nn as nn

class Conv2D(nn.Module):

def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, weight_attr=[], bias_attr=[]):

super(Conv2D, self).__init__()

self.weight = torch.nn.Parameter(weight_attr)

self.bias = torch.nn.Parameter(bias_attr)

self.stride = stride

self.padding = padding

# 输入通道数

self.in_channels = in_channels

# 输出通道数

self.out_channels = out_channels

# 基础卷积运算

def single_forward(self, X, weight):

# 零填充

new_X = torch.zeros([X.shape[0], X.shape[1] + 2 * self.padding, X.shape[2] + 2 * self.padding])

new_X[:, self.padding:X.shape[1] + self.padding, self.padding:X.shape[2] + self.padding] = X

u, v = weight.shape

output_w = (new_X.shape[1] - u) // self.stride + 1

output_h = (new_X.shape[2] - v) // self.stride + 1

output = torch.zeros([X.shape[0], output_w, output_h])

for i in range(0, output.shape[1]):

for j in range(0, output.shape[2]):

output[:, i, j] = torch.sum(

new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v] * weight, [1, 2])

return output

def forward(self, inputs):

"""

输入:

- inputs:输入矩阵,shape=[B, D, M, N]

- weights:P组二维卷积核,shape=[P, D, U, V]

- bias:P个偏置,shape=[P, 1]

"""

feature_maps = []

# 进行多次多输入通道卷积运算

p = 0

for w, b in zip(self.weight, self.bias): # P个(w,b),每次计算一个特征图Zp

multi_outs = []

# 循环计算每个输入特征图对应的卷积结果

for i in range(self.in_channels):

single = self.single_forward(inputs[:, i, :, :], w[i])

multi_outs.append(single)

# print("Conv2D in_channels:",self.in_channels,"i:",i,"single:",single.shape)

# 将所有卷积结果相加

feature_map = torch.sum(torch.stack(multi_outs), 0) + b # Zp

feature_maps.append(feature_map)

# print("Conv2D out_channels:",self.out_channels, "p:",p,"feature_map:",feature_map.shape)

p += 1

# 将所有Zp进行堆叠

out = torch.stack(feature_maps, 1)

return out

# 传入矩阵参数

Input_Volume = torch.tensor([[[0, 1, 1, 0, 2], [2, 2, 2, 2, 1], [1, 0, 0, 2, 0], [0, 1, 1, 0, 0], [1, 2, 0, 0, 2]],

[[1, 0, 2, 2, 0], [0, 0, 0, 2, 0], [1, 2, 1, 2, 1], [1, 0, 0, 0, 0], [1, 2, 1, 1, 1]],

[[2, 1, 2, 0, 0], [1, 0, 0, 1, 0], [0, 2, 1, 0, 1], [0, 1, 2, 2, 2], [2, 1, 0, 0, 1]]])

Input_Volume = Input_Volume.reshape([1, 3, 5, 5])

# 创建卷积核

# 第一层卷积核

weight_attr1 = torch.tensor([[[-1, 1, 0], [0, 1, 0], [0, 1, 1]], [[-1, -1, 0], [0, 0, 0], [0, -1, 0]],

[[0, 0, -1], [0, 1, 0], [1, -1, -1]]], dtype=torch.float32)

weight_attr1 = weight_attr1.reshape([1, 3, 3, 3])

# 第二层卷积核

weight_attr2 = torch.tensor([[[1, 1, -1], [-1, -1, 1], [0, -1, 1]], [[0, 1, 0], [-1, 0, -1], [-1, 1, 0]],

[[-1, 0, 0], [-1, 0, 1], [-1, 0, 0]]], dtype=torch.float32)

weight_attr2 = weight_attr2.reshape([1, 3, 3, 3])

# 创建偏置1,2

bias_attr1 = torch.tensor(torch.ones([3, 1]))

bias_attr2 = torch.tensor(torch.zeros([3, 1]))

# 卷积核W0

conv2d_1 = Conv2D(in_channels=3, out_channels=3, kernel_size=3, stride=2, padding=1, weight_attr=weight_attr1,

bias_attr=bias_attr1)

output1 = conv2d_1(Input_Volume)

print("使用卷积核W0的输出结果为:\n", output1)

# 卷积核W1

conv2d_2 = Conv2D(in_channels=3, out_channels=2, kernel_size=3, stride=2, padding=1, weight_attr=weight_attr2,

bias_attr=bias_attr2)

output2 = conv2d_2(Input_Volume)

print("使用卷积核W1的输出结果为:\n", output2)

总结

这次实验主要是多通道卷积算子进行实现实验、卷积层和汇聚层一些基础算子的实现,做完实验后感觉算是理论结合实践了。到最后的选做题是整体的体会了一下利用不同卷积核提取不同特征的过程。

代码调试过程中结合理论课讲解的参数问题:conv2d的输入和卷积的权重都应该是四维的,其对应意义如下:

卷积核参数初始化:[输出通道数,输入的通道数,每层卷积核的高,每层卷积核宽]。

参考:

nn.Conv2d——二维卷积运算解读

【pytorch系列】卷积操作原理解析与nn.Conv2d用法详解

NNDL 实验5(上)