3ml乐谱制作工具

重点 (Top highlight)

Machine Learning (ML) is being adopted by businesses in almost every industry. Many businesses are looking towards ML Infrastructure platforms to propel their movement of leveraging AI in their business. Understanding the various platforms and offerings can be a challenge. The ML Infrastructure space is crowded, confusing, and complex. There are a number of platforms and tools spanning a variety of functions across the model building workflow.

几乎每个行业的企业都在采用机器学习(ML)。 许多企业正在寻找ML基础架构平台,以推动其在企业中利用AI的发展。 了解各种平台和产品可能是一个挑战。 ML基础结构空间拥挤,混乱且复杂 。 在整个模型构建工作流程中,有许多平台和工具涵盖了多种功能。

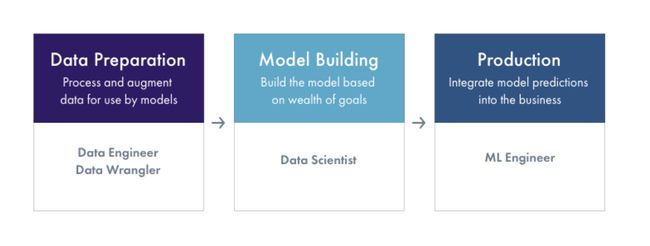

To understand the ecosystem, we broadly segment the machine learning workflow into three stages — data preparation, model building, and production. Understanding what the goals and challenges of each stage of the workflow can help make an informed decision on what ML Infrastructure platforms out there are best suited for your business’ needs.

为了了解生态系统,我们将机器学习工作流程大致分为三个阶段-数据准备,模型构建和生产。 了解工作流各个阶段的目标和挑战可以帮助您做出明智的决定,从而确定哪种ML基础架构平台最适合您的业务需求。

ML Infrastructure Platforms Diagram by Author ML基础结构平台图(按作者)Each of these stages of the Machine Learning workflow (Data Preparation, Model Building and Production) have a number of vertical functions. Some platforms cover functions across the ML workflow, while other platforms focus on single functions (ex: experiment tracking or hyperparameter tuning).

机器学习工作流的每个阶段(数据准备,模型构建和生产)都有许多垂直功能。 一些平台涵盖了ML工作流程中的所有功能,而其他平台则专注于单个功能(例如:实验跟踪或超参数调整)。

In our last posts, we dived in deeper into the Data Prep and Model Building parts of the ML workflow. In this post, we will dive deeper into Production.

在上一篇文章中,我们更深入地研究了ML工作流的数据准备和模型构建部分。 在本文中,我们将更深入地研究生产。

什么是生产? (What is Production?)

Launching a model into production can feel like crossing the finish line after a marathon. In most real-world environments, it can take a long time to get the data ready, model trained, and finally done with the research process. Then there’s the arduous process of putting the model into production which can involve complex deployment pipelines and serving infrastructures. The final stage of standing a model up in production involves checking the model is ready for production, packaging the model for deployment, deploying the model to a serving environment and monitoring the model & data in production.

将模型投入生产后,感觉就像在马拉松之后越过终点线。 在大多数现实环境中,准备好数据,训练模型并最终完成研究过程可能需要很长时间。 然后是将模型投入生产的艰巨过程,其中可能涉及复杂的部署管道和服务基础架构。 在生产中建立模型的最后阶段包括检查模型已准备好用于生产,打包模型以进行部署,将模型部署到服务环境以及监视生产中的模型和数据。

The production environment is by far, the most important, and surprisingly, least discussed part of the Model Lifecycle. This is where the model touches the business. It’s where the decisions the model makes actually improve outcomes or cause issues for customers. Training environments, where data scientists spend most of their time and thought, consist of just a sample of what the model will see in the real world.

到目前为止,生产环境是Model Lifecycle中最重要,最令人吃惊的,讨论最少的部分。 这就是模型与业务联系的地方。 模型在这里做出的决策实际上可以改善结果或为客户带来问题。 数据科学家花费大部分时间和思想的培训环境,仅包含模型在现实世界中看到的内容的示例。

研究到生产 (Research to Production)

One unique challenge in the operationalizing of Machine Learning is the movement from a research environment to a true production engineering environment. A Jupyter Notebook, the most common home of model development, is ostensibly a research environment. In a well controlled software development environment an engineer has version control, test coverage analysis, integration testing, tests that run at code check-ins, code reviews, and reproducibility. While there are many solutions trying to bring pieces of the software engineering workflow to Jupyter notebooks, these notebooks are first and foremost a research environment designed for rapid and flexible experimentation. This coupled with the fact that not all data scientists are software engineers by training, but have backgrounds that span many fields such as chemical engineering, physicists, and statisticians — you have what is one of the core problems in production ML:

机器学习的可操作性中的一个独特挑战是从研究环境到真正的生产工程环境的转变。 Jupyter笔记本,最常见的模型开发家,表面上是一个研究环境。 在一个受良好控制的软件开发环境中,工程师可以进行版本控制,测试覆盖率分析,集成测试,在代码签入时运行的测试,代码审查和可重复性。 尽管有许多解决方案试图将软件工程工作流程带入Jupyter笔记本电脑,但这些笔记本电脑首先是设计用于快速灵活实验的研究环境。 再加上并非所有数据科学家都是经过培训的软件工程师,而是拥有跨许多领域(例如化学工程,物理学家和统计学家)的背景知识,您就有生产ML的核心问题之一:

The core challenge in Production ML is uplifting a model from a research environment to a software engineering environment while still delivering the results of research.

的 核心挑战 生产ML中的是 振奋人心 来自一个模型 研究环境 到软件工程 环境 同时仍然提供 结果 研究。

In this blog post, we will highlight core areas that are needed to uplift research into production with consistency, reproducibility, and observability that we expect of software engineering.

在此博客文章中,我们将重点介绍以期望的软件工程一致性,可重复性和可观察性将研究提升到生产所需的核心领域。

模型验证 (Model Validation)

Note: Model validation is NOT to be confused with the validation data set.

注意: 请勿将模型验证与验证数据集混淆。

Quick Recap on Datasets: Models are built and evaluated using multiple datasets. The training data set is used to fit the parameters of the model. The validation data set is used to evaluate the model while tuning hyperparameters. The test set is used to evaluate the unbiased performance of the final model by presenting a dataset that wasn’t used to tune hyperparameters or used in any way in training.

数据集快速回顾:使用多个数据集构建和评估模型。 训练数据集用于拟合模型的参数。 验证数据集用于在调整超参数时评估模型。 该测试集用于通过呈现未用于调整超参数或在训练中以任何方式使用的数据集来评估最终模型的无偏性能。

什么是模型验证? (What is Model Validation?)

You’re a data scientist and you’ve built a well-performing model on your test set that addresses your business goals. Now, how do you validate that your model will work in a production environment?

您是一名数据科学家,并且已经在测试集中建立了一个性能良好的模型来解决您的业务目标。 现在, 您如何验证您的模型将在生产环境中工作 ?

Model validation is critical to delivering models that work in production. Models are not linear code. They are built from historical training data and deployed in complex systems that rely on real-time input data. The goal of model validation is to test model assumptions and demonstrate how well a model is likely to work under a large set of different environments. These model validation results should be saved and referenced to compare model performance when deployed in production environments.

模型验证对于交付可在生产环境中使用的模型至关重要。 模型不是线性代码。 它们是根据历史训练数据构建的,并部署在依赖实时输入数据的复杂系统中。 模型验证的目的是测试模型假设并演示模型在大量不同环境下的工作效果。 这些模型验证结果应保存并参考,以便在生产环境中部署时比较模型性能。

Models can be deployed to production environments in a variety of different ways, there are a number of places where translation from research to production can introduce errors. In some cases, migrating a model from research to production can literally involve translating a Python based Jupyter notebook to Java production code. While we will cover in depth on model storage, deployment and serving in the next section, it is important to note that some operationalization approaches insert additional risk that research results do not match production results. Platforms such as Algorithmia, SageMaker, Databricks and Anyscale are building platforms that are trying to allow research code to directly move to production without rewriting code.

可以通过多种不同的方式将模型部署到生产环境中,在很多地方,从研究到生产的转换都会引入错误。 在某些情况下,从研究迁移模式,生产可以从字面上翻译涉及一个基于Python Jupyter笔记本的Java产品代码。 尽管我们将在下一部分中深入介绍模型的存储,部署和服务,但需要注意的是,某些运营方法会带来额外的风险,即研究结果与生产结果不符。 诸如Algorithmia,SageMaker,Databricks和Anyscale之类的平台正在构建平台,这些平台试图允许研究代码直接移至生产环境而无需重写代码。

In the Software Development Lifecycle, unit testing, integration testing, benchmarking, build checks,etc help ensure that the software is considered with different inputs and validated before deploying into production. In the Model Development Lifecycle, model validation is a set of common & reproducible tests that are run prior to the model going into production.

在软件开发生命周期中,单元测试,集成测试,基准测试,构建检查等有助于确保在将软件部署到生产环境之前考虑使用不同的输入并对其进行验证。 在模型开发生命周期中,模型验证是在模型投入生产之前运行的一组常见且可重复的测试。

行业模型验证的现状 (Current State of Model Validation in Industry)

Model validation is varied across machine learning teams in the industry today. In less regulated use cases/industries or less mature data science organizations, the model validation process involves just the data scientist who built the model. The data scientist might submit a code review for their model built on a Jupyter notebook to the broader team. Another data scientist on the team might catch any modelling issues. Additionally model testing might consist of a very simple set of hold out tests that are part of the model development process.

当今行业中的机器学习团队,模型验证各不相同。 在管制较少的用例/行业或较不成熟的数据科学组织中,模型验证过程仅涉及构建模型的数据科学家。 数据科学家可能会将其在Jupyter笔记本上构建的模型的代码审查提交给更广泛的团队。 团队中的另一位数据科学家可能会遇到任何建模问题。 另外,模型测试可能包含一组非常简单的测试,这些测试是模型开发过程的一部分。

More mature machine learning teams have built out a wealth of tests that run both at code check-in and prior to model deployment. These tests might include feature checks, data quality checks, model performance by slice, model stress tests and backtesting. In the case of backtesting, the production ready model is fed prior historical production data, ideally testing the model on a large set of unseen data points.

越来越成熟的机器学习团队已经建立了很多测试,这些测试可以在代码检入时以及在模型部署之前运行。 这些测试可能包括功能检查,数据质量检查,按切片的模型性能,模型压力测试和回测。 在回测的情况下,生产就绪模型将被馈入先前的历史生产数据,理想情况下是在大量看不见的数据点上测试模型。

In regulated industries such as fintech and banking, the validation process can be very involved and can be even longer than the actual model building process. There are separate teams for model risk management focused on assessing the risk of the model and it’s outputs. Model Validation is a separate team whose job it is to break the model. It’s an internal auditing function that is designed to stress and find situations where the model breaks. A parallel to the software engineering world would be the QA team and code review process.

在诸如金融科技和银行业之类的受监管行业中,验证过程可能会非常复杂, 甚至可能比实际的模型构建过程更长 。 有独立的模型风险管理团队,专注于评估模型及其输出的风险。 模型验证是一个单独的团队,其职责是破坏模型。 这是一个内部审核功能,旨在强调和查找模型破坏的情况。 质量工程师团队和代码审查过程将与软件工程界平行。

模型验证检查 (Model Validation Checks)

Regardless of the industry, there are certain checks to do prior to deploying the model in production. These checks include (but are not limited to):

无论行业如何,在将模型部署到生产中之前都需要进行某些检查。 这些检查包括(但不限于):

- Model evaluation tests (Accuracy, RMSE, etc…) both overall and by slice 整体和按切片进行模型评估测试(准确性,RMSE等)

- Prediction distribution checks to compare model output vs previous versions 预测分布检查以比较模型输出与先前版本

- Feature distribution checks to compare highly important features to previous tests 功能分布检查以将非常重要的功能与以前的测试进行比较

- Feature importance analysis to compare changes in features models are using for decisions 特征重要性分析以比较用于决策的特征模型的变化

- Sensitivity analysis to random & extreme input noise 对随机和极端输入噪声的灵敏度分析

- Model Stress Testing 模型压力测试

- Bias & Discrimination 偏见与歧视

- Labeling Error and Feature Quality Checks 标签错误和功能质量检查

- Data Leakage Checks (includes Time Travel) 数据泄漏检查(包括时间旅行)

- Over-fitting & Under-fitting Checks 过度安装和不足安装检查

- Backtesting on historical data to compare and benchmark performance 对历史数据进行回测以比较和基准性能

- Feature pipeline tests that ensure no feature broke between research and production 功能流水线测试,确保研究和生产之间没有功能中断

ML Infrastructure tools that are focused on model validation provide an ability to perform these checks or analyze data from checks — in a repeatable, and reproducible fashion. They enable an organization to reduce the time to operationalize their models and deliver models with the same confidence they deliver software.

专注于模型验证的ML基础结构工具提供了以重复和可复制的方式执行这些检查或分析检查数据的功能。 它们使组织可以减少交付模型和交付模型的时间,而交付软件具有与交付软件相同的信心。

Example ML Infrastructure Platforms that enable Model Validation: Arize AI, SAS (finance), Other Startups

支持模型验证的示例ML基础架构平台:Arize AI,SAS(财务),其他启动

模型合规与审计 (Model Compliance and Audit)

In the most regulated industries, there can be an additional compliance and audit stage where models are reviewed by internal auditors or even external auditors. ML Infrastructure tools that are focused on compliance and audit teams often focus on maintaining a model inventory, model approval and documentation surrounding the model. They work with model governance to enforce policies on who has access to what models, what tier of validation a model has to go through, and aligning incentives across the organization.

在监管最严格的行业中,可能会有额外的合规性和审计阶段,内部审计员甚至外部审计员会审查模型。 专注于合规性和审核团队的ML基础架构工具通常专注于维护模型清单,模型批准和围绕模型的文档。 他们与模型治理一起执行有关谁有权访问哪些模型,模型必须经过的验证层级以及在整个组织内调整激励措施的政策。

Example ML Infrastructure: SAS Model Validation Solutions

ML基础结构示例:SAS模型验证解决方案

持续交付 (Continuous Delivery)

In organizations that are setup for continuous delivery and continuous integration, a subset of the validation checks above are run when:

在为连续交付和持续集成而设置的组织中,在以下情况下运行上述验证检查的子集:

- Code is checked in — Continuous Integration 检入代码—持续集成

- A new model is ready for production -Continuous Delivery 一个新的模型已经准备好生产-连续交付

The continuous integration tests can be run as code is checked in and are typically structured more as unit tests. A larger set of validation tests that are broader and may include backtesting are typically run when the model is ready for production. The continuous delivery validation of a model becomes even more important as teams are trying to continually retrain or auto-retrain based on model drift metrics.

连续集成测试可以在检入代码时运行,并且通常更像是单元测试。 当模型准备好生产时,通常会运行一组更广泛的验证测试,其中可能包括回测。 随着团队试图基于模型漂移指标进行连续再训练或自动再训练,模型的持续交付验证变得更加重要。

A number of tools such as ML Flow enable the model management workflow that integrates with CI/CD tests and records artifacts.These tools integrate into Github to enable pulling/storing the correct model and storing results initiated by GitHub actions.

诸如ML Flow之类的许多工具都启用了与CI / CD测试集成并记录工件的模型管理工作流,这些工具集成到Github中以启用/存储正确的模型并存储由GitHub动作启动的结果。

Example ML Infrastructure Platforms that Enable Continuous Delivery: Databricks, ML Flow, CML

支持连续交付的示例ML基础架构平台:数据块,ML流,CML

往下 (Up Next)

There are so many under discussed and extremely important topics on operationalizing AI that we will be diving into!Up next, we will be continuing deeper into Production ML and discuss model deployment, model serving, and model observability.

关于AI的可操作性,有许多讨论中且极其重要的主题,我们将深入研究!接下来,我们将继续深入生产ML,并讨论模型部署,模型服务和模型可观察性。

联系我们 (Contact Us)

If this blog post caught your attention and you’re eager to learn more, follow us on Twitter and Medium! If you’d like to hear more about what we’re doing at Arize AI, reach out to us at [email protected]. If you’re interested in joining a fun, rockstar engineering crew to help make models successful in production, reach out to us at [email protected]!

如果此博客文章引起您的注意,并且您渴望了解更多信息,请在Twitter和Medium上关注我们! 如果您想了解有关我们在Arize AI所做的更多信息,请通过[email protected]与我们联系。 如果您有兴趣加入有趣的Rockstar工程团队来帮助使模型成功生产,请通过[email protected]与我们联系!

翻译自: https://towardsdatascience.com/ml-infrastructure-tools-for-production-1b1871eecafb

3ml乐谱制作工具